An official website of the United States government

The .gov means it’s official. Federal government websites often end in .gov or .mil. Before sharing sensitive information, make sure you’re on a federal government site.

The site is secure. The https:// ensures that you are connecting to the official website and that any information you provide is encrypted and transmitted securely.

- Publications

- Account settings

Preview improvements coming to the PMC website in October 2024. Learn More or Try it out now .

- Advanced Search

- Journal List

- Pharm Methods

- v.1(1); Oct-Dec 2010

Bioanalytical method validation: An updated review

Gaurav tiwari.

Department of Pharmaceutics, Pranveer Singh Institute of Technology, Kalpi Road, Bhauti, Kanpur - 208 020, Uttar Pradesh, India

Ruchi Tiwari

The development of sound bioanalytical method(s) is of paramount importance during the process of drug discovery and development, culminating in a marketing approval. The objective of this paper is to review the sample preparation of drug in biological matrix and to provide practical approaches for determining selectivity, specificity, limit of detection, lower limit of quantitation, linearity, range, accuracy, precision, recovery, stability, ruggedness, and robustness of liquid chromatographic methods to support pharmacokinetic (PK), toxicokinetic, bioavailability, and bioequivalence studies. Bioanalysis, employed for the quantitative determination of drugs and their metabolites in biological fluids, plays a significant role in the evaluation and interpretation of bioequivalence, PK, and toxicokinetic studies. Selective and sensitive analytical methods for quantitative evaluation of drugs and their metabolites are critical for the successful conduct of pre-clinical and/or biopharmaceutics and clinical pharmacology studies.

INTRODUCTION

The reliability of analytical findings is a matter of great importance in forensic and clinical toxicology, as it is of course a prerequisite for correct interpretation of toxicological findings. Unreliable results might not only be contested in court, but could also lead to unjustified legal consequences for the defendant or to wrong treatment of the patient. The importance of validation, at least of routine analytical methods, can therefore hardly be overestimated. This is especially true in the context of quality management and accreditation, which have become matters of increasing importance in analytical toxicology in the recent years. This is also reflected in the increasing requirements of peer-reviewed scientific journals concerning method validation. Therefore, this topic should extensively be discussed on an international level to reach a consensus on the extent of validation experiments and on acceptance criteria for validation parameters of bioanalytical methods in forensic (and clinical) toxicology. In the last decade, similar discussions have been going on in the closely related field of pharmacokinetic (PK) studies for registration of pharmaceuticals. This is reflected by a number of publications on this topic in the last decade, of which the most important are discussed here.[ 1 ]

NEED OF BIONALYTICAL METHOD VALIDATION

It is essential to employ well-characterized and fully validated bioanalytical methods to yield reliable results that can be satisfactorily interpreted. It is recognized that bioanalytical methods and techniques are constantly undergoing changes and improvements, and in many instances, they are at the cutting edge of the technology. It is also important to emphasize that each bioanalytical technique has its own characteristics, which will vary from analyte to analyte. In these instances, specific validation criteria may need to be developed for each analyte. Moreover, the appropriateness of the technique may also be influenced by the ultimate objective of the study. When sample analysis for a given study is conducted at more than one site, it is necessary to validate the bioanalytical method(s) at each site and provide appropriate validation information for different sites to establish interlaboratory reliability.[ 2 ]

BIONALYTICAL METHOD DEVELOPMENT AND VALIDATION

The process by which a specific bioanalytical method is developed, validated, and used in routine sample analysis can be divided into

- reference standard preparation,

- bioanalytical method development and establishment of assay procedure and

- application of validated bioanalytical method to routine drug analysis and acceptance criteria for the analytical run and/or batch.

IMPORTANT PUBLICATIONS ON VALIDATION (FROM 1991 TO PRESENT)

A review on validation of bioanalytical methods was published by Karnes et al . in 1991 which was intended to provide guidance for bioanalytical chemists. One year later, Shah et al . published their report on the conference on “Analytical Methods Validation: Bioavailability, Bioequivalence and Pharmacokinetic Studies” held in Washington in 1990 (Conference Report). During this conference, consensus was reached on which parameters of bioanalytical methods should be evaluated, and some acceptance criteria were established. In the following years, this report was actually used as guidance by bioanalysts. Despite the fact, however, that some principle questions had been answered during this conference, no specific recommendations on practical issues like experimental designs or statistical evaluation had been made. In 1994, Hartmann et al . analyzed the Conference Report performing statistical experiments on the established acceptance criteria for accuracy and precision.

Requirements for Registration of Pharmaceuticals for Human Use (ICH) were approved by the regulatory agencies of the European Union, the United States of America and Japan. Despite the fact that these were focused on analytical methods for pharmaceutical products rather than bioanalysis, they still contain helpful guidance on some principal questions and definitions in the field of analytical method validation. The first document, approved in 1994, concentrated on the theoretical background and definitions, and the second one, approved in 1996, concentrated on methodology and practical issues.

TERMINOLOGY

It is accepted that during the course of a typical drug development program, a defined bioanalytical method will undergo many modifications. These evolutionary changes [e.g. addition of a metabolite, lowering of the lower limit of quantification (LLOQ)] require different levels of validation to demonstrate continuity of the validity of an assay's performance. Three different levels/types of method validations, full validation, partial validation, and cross-validation, are defined and characterized as follows.

Full validation

Full validation is necessary when developing and implementing a bioanalytical method for the first time for a new drug entity. If metabolites are added to an existing assay for quantification, then full validation of the revised assay is necessary for all analytes measured.[ 3 ]

Partial validation

Partial validations are modifications of validated bioanalytical methods that do not necessarily require full revalidations. Partial validation can range from as little as one assay accuracy and precision determination to a “nearly” full validation. Typical bioanalytical method changes that fall into this category include, but are not limited to, bioanalytical method transfers between laboratories or analysts, instrument and/or software platform changes, change in species within matrix (e.g., rat plasma to mouse plasma), changes in matrix within a species (e.g., human plasma to human urine), change in analytical methodology (e.g., change in detection systems), and change in sample processing procedures.

Cross-validation

Cross-validation is a comparison of two bioanalytical methods. Cross-validations are necessary when two or more bioanalytical methods are used to generate data within the same study. For example, an original validated bioanalytical method serves as the “reference” and the revised bioanalytical method is the “comparator.” The comparisons should be done both ways. Cross-validation with spiked matrix and subject samples should be conducted at each site or laboratory to establish interlaboratory reliability when sample analyses within a single study are conducted at more than one site, or more than one laboratory, and should be considered when data generated using different analytical techniques [e.g., LC-MS (Liquid chromatography mass spectroscopy) vs. enzyme-linked immunosorbent assay (ELISA)] in different studies are included in a regulatory submission.

VALIDATION PARAMETERS

Linearity assesses the ability of the method to obtain test results that are directly proportional to the concentration of the analyte in the sample. The linear range of the method must be determined regardless of the phase of drug development. Table 1 indicates US Food and Drug Administration (FDA) guidelines for bioanalytical method validation. ICH guidelines recommend evaluating a minimum of five concentrations to assess linearity. The five concentration levels should bracket the upper and lower concentration levels evaluated during the accuracy study.[ 4 ] ICH guidelines recommend the following concentration ranges be evaluated during method validation:

US FDA guidelines for bioanalytical method validation

- Assay (finished product or drug substance): 80–120% of the sample concentration. This range must bracket that of the accuracy study, however. If accuracy samples are to be prepared at 80, 100, and 120% of nominal, then the linearity range should be expanded to a minimum of 75–125%.

- Content uniformity method: 70–130% of the sample concentration, unless a wider, more appropriate, range is justified based on the nature of the dosage form (e.g., metered dose inhalers).

- Dissolution method: This requires ±20% of the specified range. In cases where dissolution profiles are required, the range for the linearity evaluation should start below the typical amount recovered at the initial pull point to 120% of total drug content.

- Impurity method: Reporting level to 120% of the specification.

- Impurity and assay method combined: One hundred percent level standard is used for quantification; reporting level of impurity to 120% of assay specification.

The linearity solutions are prepared by performing serial dilutions of a single stock solution; alternatively, each linearity solution may be separately weighed. The resulting active response for each linearity solution is plotted against the corresponding theoretical concentration. The linearity plot should be visually evaluated for any indications of a nonlinear relationship between concentration and response. A statistical analysis of the regression line should also be performed, evaluating the resulting correlation coefficient, Y intercept, slope of the regression line, and residual sum of squares. A plot of the residual values versus theoretical concentrations may also be beneficial for evaluating the relationship between concentration and response.

In cases where individual impurities are available, it is a good practice to establish both relative response factors and relative retention times for each impurity, compared to the active compound. Response factors allow the end user to utilize standard material of the active constituent for quantitation of individual impurities, correcting for response differences. This approach saves the end user the cost of maintaining supplies of all impurities and simplifies data processing. To determine the relative response factors, linearity curves for each impurity and the active compound should be performed from the established limit of quantitation to approximately 200% of the impurity specification. The relative response factor can be determined based upon the linearity curve generated for each impurity and the active:

There is a general agreement that at least the following validation parameters should be evaluated for quantitative procedures: selectivity, calibration model, stability, accuracy (bias, precision) and limit of quantification.[ 5 ] Additional parameters which might have to be evaluated include limit of detection (LOD), recovery, reproducibility and ruggedness (robustness).

Selectivity (Specificity)

For every phase of product development, the analytical method must demonstrate specificity. The method must have the ability to unambiguously assess the analyte of interest while in the presence of all expected components, which may consist of degradants, excipients/sample matrix, and sample blank peaks. The sample blank peaks may be attributed to things such as reagents or filters used during the sample preparation.

For identification tests, discrimination of the method should be demonstrated by obtaining positive results for samples containing the analyte and negative results for samples not containing the analyte. The method must be able to differentiate between the analyte of interest and compounds with a similar chemical structure that may be present. For a high performance liquid chromatography (HPLC) identification test, peak purity evaluation should be used to assess the homogeneity of the peak corresponding to the analyte of interest.

For assay/related substances methods, the active peak should be adequately resolved from all impurity/degradant peaks, placebo peaks, and sample blank peaks. Resolution from impurity peaks could be assessed by analyzing a spiked solution with all known available impurities present or by injecting individual impurities and comparing retention to that of the active. Placebo and sample matrix components should be analyzed without the active present in order to identify possible interferences.

If syringe filters are to be used to clarify sample solutions, an aliquot of filtered sample diluent should be analyzed for potential interferences. If the impurities/degradants are unknown or unavailable, forced degradation studies should be performed. Forced degradation studies of the active pharmaceutical ingredient (API) and finished product, using either peak purity analysis or a mass spectral evaluation, should be performed to assess resolution from potential degradant products.[ 6 ]

The forced degradation studies should consist of exposing the API and finished product to acid, base, peroxide, heat, and light conditions, until adequate degradation of the active has been achieved. An acceptable range of degradation may be 10–30% but may vary based on the active being degraded. Overdegradation of the active should be avoided to prevent the formation of secondary degradants. If placebo material is available, it should be stressed under the same conditions and for the same duration as the API or finished product. The degraded placebo samples should be evaluated to ensure that any generated degradants are resolved from the analyte peak(s) of interest.

Evaluation of the forced degraded solutions by peak purity analysis using a photodiode array detector or mass spectral evaluation must confirm that the active peak does not co-elute with any degradation products generated as a result of the forced degradation. Another, more conservative, approach for assay/related substances methods is to perform peak purity analysis or mass spectral evaluation on all generated degradation peaks and verify that co-elution does not occur for those degradant peaks as well as the active peak.

Whereas the selectivity experiments for the first approach can be performed during a prevalidation phase (no need for quantification), those for the second approach are usually performed together with the precision and accuracy experiments during the main validation phase. At this point it must be mentioned that the term specificity is used interchangeably with selectivity, although in a strict sense specificity refers to methods, which produce a response for a single analyte, whereas selectivity refers to methods that produce responses for a number of chemical entities, which may or may not be distinguished. Selective multianalyte methods (e.g., for different drugs of abuse in blood) should of course be able to differentiate all interesting analytes from each other and from the matrix.[ 7 ]

Calibration model

The choice of an appropriate calibration model is necessary for reliable quantification. Therefore, the relationship between the concentration of analyte in the sample and the corresponding detector response must be investigated. This can be done by analyzing spiked calibration samples and plotting the resulting responses versus the corresponding concentrations. The resulting standard curves can then be further evaluated by graphical or mathematical methods, the latter also allowing statistical evaluation of the response functions. Whereas there is a general agreement that calibration samples should be prepared in blank matrix and that their concentrations must cover the whole calibration range, recommendations on how many concentration levels should be studied with how many replicates per concentration level differ significantly. In the Conference Report II, “a sufficient number of standards to define adequately the relationship between concentration and response” was demanded. Furthermore, it was stated that at least five to eight concentration levels should be studied for linear relationships and it may be more for nonlinear relationships.

However, no information was given on how many replicates should be analyzed at each level. The guidelines established by the ICH and those of the Journal of Chromatography B also required at least five concentration levels, but again no specific requirements for the number of replicate set at each level were given. Causon recommended six replicates at each of the six concentration levels, whereas Wieling et al . used eight concentration levels in triplicate. This approach allows not only a reliable detection of outliers but also a better evaluation of the behavior of variance across the calibration range. The latter is important for choosing the right statistical model for the evaluation of the calibration curve. The often used ordinary least squares model for linear regression is only applicable for homoscedastic data sets (constant variance over the whole range), whereas in case of heteroskedasticity (significant difference between variances at lowest and highest concentration levels), the data should mathematically be transformed or a weighted least squares model should be applied. Usually, linear models are preferable, but, if necessary, the use of nonlinear models is not only acceptable but also recommended. However, more concentration levels are needed for the evaluation of nonlinear models than for linear models.[ 8 ]

After outliers have been purged from the data and a model has been evaluated visually and/or by, for example, residual plots, the model fit should also be tested by appropriate statistical methods. The fit of unweighted regression models (homoscedastic data) can be tested by the analysis of variance (ANOVA) lack-of-fit test. The widespread practice to evaluate a calibration model via its coefficients of correlation or determination is not acceptable from a statistical point of view.

However, one important point should be kept in mind when statistically testing the model fit: The higher the precision of a method, the higher the probability to detect a statistically significant deviation from the assumed calibration model. Therefore, the relevance of the deviation from the assumed model must also be taken into account. If the accuracy data (bias and precision) are within the required acceptance limits and an alternative calibration model is not applicable, slight deviations from the assumed model may be neglected. Once a calibration model has been established, the calibration curves for other validation experiments (precision, bias, stability, etc.) and for routine analysis can be prepared with fewer concentration levels and fewer or no replicates

Accuracy should be performed at a minimum of three concentration levels. For drug substance, accuracy can be inferred from generating acceptable results for precision, linearity, and specificity. For assay methods, the spiked placebo samples should be prepared in triplicate at 80, 100, and 120%. If placebo is not available and cannot be formulated in the laboratory, the weight of drug product may be varied in the sample preparation step of the analytical method to prepare samples at the three levels listed above. In this case, the accuracy study can be combined with method precision, where six sample preparations are prepared at the 100% level, while both the 80 and 120% levels are prepared in triplicate. For impurity/related substances methods, it is ideal if standard material is available for the individual impurities. These impurities are spiked directly into sample matrix at known concentrations, bracketing the specification level for each impurity. This approach can also be applied to accuracy studies for residual solvent methods where the specific residual solvents of interest are spiked into the product matrix.

If individual impurities are not available, placebo can be spiked with drug substance or reference standard of the active at impurity levels, and accuracy for the impurities can be inferred by obtaining acceptable accuracy results from the active spiked placebo samples. Accuracy should be performed as part of late Phase 2 and Phase 3 method validations. For early phase method qualifications, accuracy can be inferred from obtaining acceptable data for precision, linearity, and specificity.[ 9 ] Stability of the compound(s) of interest should be evaluated in sample and standard solutions at typical storage conditions, which may include room temperature and refrigerated conditions. The content of the stored solutions is evaluated at appropriate intervals against freshly prepared standard solutions. For assay methods, the change in active content must be controlled tightly to establish sample stability. If impurities are to be monitored in the method sample, solutions can be analyzed on multiple days and the change in impurity profiles can be monitored. Generally, absolute changes in the impurity profiles can be used to establish stability. If an impurity is not present in the initial sample (day 0) but appears at a level above the impurity specification during the course of the stability evaluation, then this indicates that the sample is not stable for that period of storage. In addition, impurities that are initially present and then disappear, or impurities that are initially present and grow greater than 0.1% absolute, are also indications of solution instability.

During phase 3 validation, solution stability, along with sample preparation and chromatographic robustness, should also be evaluated. For both sample preparation and chromatographic robustness evaluations, the use of experimental design could prove advantageous in identifying any sample preparation parameters or chromatographic parameters that may need to be tightly controlled in the method. For chromatographic robustness, all compounds of interest, including placebo-related and sample blank components, should be present when evaluating the effect of modifying chromatographic parameters. For an HPLC impurity method, this may include a sample preparation spiked with available known impurities at their specification level or, alternatively, a forced degraded sample solution can be utilized. The analytical method should be updated to include defined stability of solutions at evaluated storage conditions and any information regarding sample preparation and chromatographic parameters, which need to be tightly controlled. Sample preparation and chromatographic robustness may also be evaluated during method development. In this case, the evaluations do not require repeating during the actual method validation.[ 10 ]

Establishment of an appropriate qualification/validation protocol requires assessment of many factors, including phase of product development, purpose of the method, type of analytical method, and availability of supplies, among others. There are many approaches that can be taken to perform the testing required for various validation elements, and the experimental approach selected is dependent on the factors listed above. As with any analytical method, the defined system suitability criteria of the method should be monitored throughout both method qualification and method validation, ensuring that the criteria set for the suitability is appropriate and that the method is behaving as anticipated. The accuracy of a method is affected by systematic (bias) as well as random (precision) error components. This fact has been taken into account in the definition of accuracy as established by the International Organization for Standardization (ISO). However, it must be mentioned that accuracy is often used to describe only the systematic error component, that is, in the sense of bias. In the following, the term accuracy will be used in the sense of bias, which will be indicated in brackets.

According to ISO, bias is the difference between the expectation of test results and an accepted reference value. It may consist of more than one systematic error component. Bias can be measured as a percent deviation from the accepted reference value. The term trueness expresses the deviation of the mean value of a large series of measurements from the accepted reference value. It can be expressed in terms of bias. Due to the high workload of analyzing such large series, trueness is usually not determined during method validation, but rather from the results of a great number of quality control samples (QC samples) during routine application.[ 11 ]

Precision and repeatability

Repeatability reflects the closeness of agreement of a series of measurements under the same operating conditions over a short interval of time. For a chromatographic method, repeatability can be evaluated by performing a minimum of six replicate injections of a single sample solution prepared at the 100% test concentration.

Alternatively, repeatability can be determined by evaluating the precision from a minimum of nine determinations that encompass the specified range of the method. The nine determinations may be composed of triplicate determinations at each of three different concentration levels, one of which would represent the 100% test concentration.

Intermediate precision reflects within-laboratory variations such as different days, different analysts, and different equipments. Intermediate precision testing can consist of two different analysts, each preparing a total of six sample preparations, as per the analytical method. The analysts execute their testing on different days using separate instruments and analytical columns.[ 12 ]

The use of experimental design for this study could be advantageous because statistical evaluation of the resulting data could identify testing parameters (i.e., brand of HPLC system) that would need to be tightly controlled or specifically addressed in the analytical method. Results from each analyst should be evaluated to ensure a level of agreement between the two sets of data. Acceptance criteria for intermediate precision are dependent on the type of testing being performed. Typically, for assay methods, the relative standard deviation (RSD) between the two sets of data must be ≤2.0%, while the acceptance criteria for impurities is dependent on the level of impurity and the sensitivity of the method. Intermediate precision may be delayed until full ICH validation, which is typically performed during late Phase 2 or Phase 3 of drug development. However, precision testing should be conducted by one analyst for early phase method qualification.

Reproducibility reflects the precision between analytical testing sites. Each testing site can prepare a total of six sample preparations, as per the analytical method. Results are evaluated to ensure statistical equivalence among various testing sites. Acceptance criteria similar to those applied to intermediate precision also apply to reproducibility.

Repeatability expresses the precision under the same operating conditions over a short interval of time. Repeatability is also termed intra-assay precision. Repeatability is sometimes also termed within-run or within-day precision.

Intermediate precision

Intermediate precision expresses within-laboratories variations: different days, different analysts, different equipments, etc.[ 13 ] The ISO definition used the term “M-factor different intermediate precision”, where the M-factor expresses the number of factors (operator, equipment, or time) that differ between successive determinations. Intermediate precision is sometimes also called between-run, between-day, or inter-assay precision.

Reproducibility

Reproducibility expresses the precision between laboratories (collaborative studies, usually applied to standardization of methodology). Reproducibility only has to be studied, if a method is supposed to be used in different laboratories. Unfortunately, some authors also used the term reproducibility for within-laboratory studies at the level of intermediate precision. This should, however, be avoided in order to prevent confusion.[ 14 ] As already mentioned above, precision and bias can be estimated from the analysis of QC samples under specified conditions. As both precision and bias can vary substantially over the calibration range, it is necessary to evaluate these parameters at least at three concentration levels (low, medium, high). In the Conference Report II, it was further defined that the low QC sample must be within three times LLOQ. The Journal of Chromatography B requirement is to study precision and bias at two concentration levels (low and high), whereas in the experimental design proposed by Wieling et al ., four concentration levels (LLOQ, low, medium, high) were studied.[ 15 ]

Causon also suggested estimating precision at four concentration levels. Several authors have specified acceptance limits for precision and/or accuracy (bias). The Conference Reports required precision to be within 15% RSD except at the LLOQ where 20% RSD is accepted. Bias is required to be within ±15% of the accepted true value, except at the LLOQ where ±20% is accepted.[ 16 ] These requirements have been subject to criticism in the analysis of the Conference Report by Hartmann et al . They concluded from statistical considerations that it is not realistic to apply the same acceptance criteria at different levels of precision (repeatability, reproducibility) as RSD under reproducibility conditions is usually considerably greater than under repeatability conditions. Furthermore, if precision and bias estimates are close to the acceptance limits, the probability to reject an actually acceptable method (b-error) is quite high. Causon proposed the same acceptance limits of 15% RSD for precision and ±15% for accuracy (bias) for all concentration levels. The guidelines established by the Journal of Chromatography B required precision to be within 10% RSD for the high QC samples and within 20% RSD for the low QC sample. Acceptance criteria for accuracy (bias) were not specified there.

Again, the proposals on how many replicates at each concentration levels should be analyzed vary considerably.[ 17 ] The Conference Reports and Journal of Chromatography B guidelines required at least five replicates at each concentration level. However, one would assume that these requirements apply to repeatability studies; at least no specific recommendations are given for studies of intermediate precision or reproducibility. Some more practical approaches to this problem have been described by Wieling et al ., Causon, and Hartmann et al . In their experimental design, Wieling et al . analyzed three replicates at each of four concentration levels on each of 5 days.[ 18 ] Similar approaches were suggested by Causon (six replicates at each of four concentrations on each of four occasions) and Hartmann et al . (two replicates at each concentration level on each of 8 days). All three used one-way ANOVA to estimate within-run precision (repeatability) and between-run precision (intermediate precision).

In the design proposed by Hartmann et al ., the degrees of freedom for both estimations are most balanced, namely, eight for within-run precision and seven for between-run precision. In the information for authors of the Clinical Chemistry journal, an experimental design with two replicates per run, two runs per day over 20 days for each concentration level is recommended. This allows estimation of not only within-run and between-run standard deviations but also within-day, between-day, and total standard deviations, which are in fact all estimations of precision at different levels. However, it seems questionable if the additional information provided by this approach can justify the high workload and costs, compared to the other experimental designs. Daily variations of the calibration curve can influence bias estimation.[ 19 ] Therefore, bias estimation should be based on data calculated from several calibration curves. In the experimental design of Wieling et al ., the results for QC samples were calculated via daily calibration curves. Therefore, the overall means from these results at the different concentration levels reliably reflect the average bias of the method at the corresponding concentration level. Alternatively, as described in the same paper, the bias can be estimated using confidence limits around the calculated mean values at each concentration. If the calculated confidence interval includes the accepted true value, one can assume the method to be free of bias at a given level of statistical significance. Another way to test the significance of the calculated bias is to perform a t -test against the accepted true value. However, even methods exhibiting a statistically significant bias can still be acceptable, if the calculated bias lies within previously established acceptance limits.[ 20 ]

Lower limit of quantification

The LLOQ is the lowest amount of an analyte in a sample that can be quantitatively determined with suitable precision and accuracy (bias). There are different approaches to the determination of LLOQ.[ 21 ]

LLOQ based on precision and accuracy (bias) data: This is probably the most practical approach and defines the LLOQ as the lowest concentration of a sample that can still be quantified with acceptable precision and accuracy (bias). In the Conference Reports, the acceptance criteria for these two parameters at LLOQ are 20% RSD for precision and ±20% for bias. Only Causon suggested 15% RSD for precision and ±15% for bias. It should be pointed out, however, that these parameters must be determined using an LLOQ sample independent from the calibration curve. The advantage of this approach is the fact that the estimation of LLOQ is based on the same quantification procedure used for real samples.[ 22 ]

LLOQ based on signal to noise ratio (S/N): This approach can only be applied if there is baseline noise, for example, to chromatographic methods. Signal and noise can then be defined as the height of the analyte peak (signal) and the amplitude between the highest and lowest point of the baseline (noise) in a certain area around the analyte peak. For LLOQ, S/N is usually required to be equal to or greater than 10. The estimation of baseline noise can be quite difficult for bioanalytical methods, if matrix peaks elute close to the analyte peak.

Upper limit of quantification

The upper limit of quantification (ULOQ) is the maximum analyte concentration of a sample that can be quantified with acceptable precision and accuracy (bias). In general, the ULOQ is identical with the concentration of the highest calibration standard.[ 23 ]

Limit of detection

Quantification below LLOQ is by definition not acceptable. Therefore, below this value a method can only produce semi-quantitative or qualitative data. However, it can still be important to know the LOD of the method. According to ICH, it is the lowest concentration of an analyte in a sample which can be detected but not necessarily quantified as an exact value. According to Conference Report II, it is the lowest concentration of an analyte in a sample that the bioanalytical procedure can reliably differentiate from background noise.

The definition according to Conference Report II was as follows: The chemical stability of an analyte in a given matrix under specific conditions for given time intervals. Stability of the analyte during the whole analytical procedure is a prerequisite for reliable quantification. Therefore, full validation of a method must include stability experiments for the various stages of analysis, including storage prior to analysis.[ 24 ]

Long-term stability

The stability in the sample matrix should be established under storage conditions, that is, in the same vessels, at the same temperature and over a period at least as long as the one expected for authentic samples.

Freeze/thaw stability

As samples are often frozen and thawed, for example, for reanalyis, the stability of analyte during several freeze/thaw cycles should also be evaluated. The Conference Reports require a minimum of three cycles at two concentrations in triplicate, which has also been accepted by other authors.

In-process stability

The stability of analyte under the conditions of sample preparation (e.g., ambient temperature over time needed for sample preparation) is evaluated here. There is a general agreement that this type of stability should be evaluated to find out if preservatives have to be added to prevent degradation of analyte during sample preparation.[ 25 – 27 ]

Processed sample stability

Instability can occur not only in the sample matrix but also in prepared samples. It is therefore important to also test the stability of an analyte in the prepared samples under conditions of analysis (e.g., autosampler conditions for the expected maximum time of an analytical run). One should also test the stability in prepared samples under storage conditions, for example, refrigerator, in case prepared samples have to be stored prior to analysis.

As already mentioned above, recovery is not among the validation parameters regarded as essential by the Conference Reports. Most authors agree that the value for recovery is not important as long as the data for LLOQ, LOD, precision and accuracy (bias) are acceptable. It can be calculated by comparison of the analyte response after sample workup with the response of a solution containing the analyte at the theoretical maximum concentration. Therefore, absolute recoveries can usually not be determined if the sample workup includes a derivatization step, as the derivatives are usually not available as reference substances. Nevertheless, the guidelines of the Journal of Chromatography B require the determination of the recovery for analyte and internal standard at high and low concentrations.[ 28 – 31 ]

Ruggedness (Robustness)

Ruggedness is a measure for the susceptibility of a method to small changes that might occur during routine analysis like small changes of pH values, mobile phase composition, temperature, etc. Full validation must not necessarily include ruggedness testing; it can, however, be very helpful during the method development/prevalidation phase, as problems that may occur during validation are often detected in advance. Ruggedness should be tested if a method is supposed to be transferred to another laboratory.

SPECIFIC RECOMMENDATION FOR BIOANALYTICAL METHOD VALIDATION

- The matrix-based standard curve should consist of a minimum of six standard points, excluding blanks, using single or replicate samples. The standard curve should cover the entire range of expected concentrations. Standard curve fitting is determined by applying the simplest model that adequately describes the concentration–response relationship using appropriate weighting and statistical tests for goodness of fit.[ 32 ]

- LLOQ is the lowest concentration of the standard curve that can be measured with acceptable accuracy and precision. The LLOQ should be established using at least five samples independent of standards and determining the coefficient of variation (CV) and/or appropriate confidence interval. The LLOQ should serve as the lowest concentration on the standard curve and should not be confused with the LOD and/or the low QC sample. The highest standard will define the ULOQ of an analytical method.

- For validation of the bioanalytical method, accuracy and precision should be determined using a minimum of five determinations per concentration level (excluding blank samples). The mean value should be within 15% of the theoretical value, except at LLOQ, where it should not deviate by more than 20%. The precision around the mean value should not exceed 15% of the CV, except for LLOQ, where it should not exceed 20% of the CV. Other methods of assessing accuracy and precision that meet these limits may be equally acceptable.[ 33 ]

- The accuracy and precision with which known concentrations of analyte in biological matrix can be determined should be demonstrated. This can be accomplished by analysis of replicate sets of analyte samples of known concentration QC samples from an equivalent biological matrix. At a minimum, three concentrations representing the entire range of the standard curve should be studied: one within 3× the LLOQ (low QC sample), one near the center (middle QC), and one near the upper boundary of the standard curve (high QC).

- Reported method validation data and the determination of accuracy and precision should include all outliers; however, calculations of accuracy and precision excluding values that are statistically determined as outliers can also be reported.

- The stability of the analyte in biological matrix at the intended storage temperatures should be established. The influence of freeze–thaw cycles (a minimum of three cycles at two concentrations in triplicate) should be studied.

- The stability of the analyte in matrix at ambient temperature should be evaluated over a time period equal to the typical sample preparation, sample handling, and analytical run times.

- Reinjection reproducibility should be evaluated to determine if an analytical run could be reanalyzed in the case of instrument failure.[ 34 ]

- The specificity of the assay methodology should be established using a minimum of six independent sources of the same matrix. For hyphenated mass spectrometry based methods, however, testing six independent matrices for interference may not be important. In the case of LC-MS and LC-MS-MS based procedures, matrix effects should be investigated to ensure that precision, selectivity, and sensitivity will not be compromised. Method selectivity should be evaluated during method development and throughout method validation and can continue throughout application of the method to actual study samples.

- Acceptance/rejection criteria for spiked, matrix-based calibration standards and validation QC samples should be based on the nominal (theoretical) concentration of analytes. Specific criteria can be set up in advance and achieved for accuracy and precision over the range of the standards, if so desired.

DOCUMENTATION

The validity of an analytical method should be established and verified by laboratory studies and documentation of successful completion of such studies should be provided in the assay validation report. General and specific SOPs(standard operating procedure) and good record keeping are an essential part of a validated analytical method. The data generated for bioanalytical method establishment and the QCs should be documented and available for data audit and inspection. Documentation for submission to the agency should include[ 35 ]

- Summary information,

- Method development and establishment,

- Bioanalytical reports of the application of any methods to routine sample analysis and

- Other information applicable to method development and establishment and/or to routine sample analysis.

Summary information

- Summary table of validation reports, including analytical method validation, partial revalidation, and cross-validation reports. The table should be in chronological sequence and include assay method identification code, type of assay, and the reason for the new method or additional validation (e.g., to lower the limit of quantitation).

- Summary table with a list, by protocol, of assay methods used. The protocol number, protocol title, assay type, assay method identification code, and bioanalytical report code should be provided.

- A summary table allowing cross-referencing of multiple identification codes should be provided (e.g., when an assay has different codes for the assay method, validation reports, and bioanalytical reports, especially when the sponsor and a contract laboratory assign different codes).[ 36 ]

Documentation for method establishment

Documentation for method development and establishment should include:

- An operational description of the analytical method.

- Evidence of purity and identity of drug standards, metabolite standards, and internal standards used in validation experiments[ 37 ].

- A description of stability studies and supporting data.

- A description of experiments conducted to determine accuracy, precision, recovery, selectivity, limit of quantification, calibration curve (equations and weighting functions used, if any), and relevant data obtained from these studies.

- Documentation of intra- and inter-assay precision and accuracy.

- In NDA (new drug approval) submissions, information about cross-validation study data, if applicable.

- Legible annotated chromatograms or mass spectrograms, if applicable and

- Any deviations from SOPs, protocols, or (Good Laboratory Practice) GLPs (if applicable), and justifications for deviations.[ 38 ]

Application to routine drug analysis

Documentation of the application of validated bioanalytical methods to routine drug analysis should include the following.

- Evidence of purity and identity of drug standards, metabolite standards, and internal standards used during routine analyses.

- Summary tables containing information on sample processing and storage : Tables should include sample identification, collection dates, storage prior to shipment, information on shipment batch, and storage prior to analysis. Information should include dates, times, sample condition, and any deviation from protocols.

- Summary tables of analytical runs of clinical or preclinical samples : Information should include assay run identification, date and time of analysis, assay method, analysts, start and stop times, duration, significant equipment and material changes, and any potential issues or deviation from the established method.[ 39 ]

- Equations used for back-calculation of results.

- Tables of calibration curve data used in analyzing samples and calibration curve summary data.

- Summary information on intra- and inter-assay values of QC samples and data on intra- and inter-assay accuracy and precision from calibration curves and QC samples used for accepting the analytical run. QC graphs and trend analyses in addition to raw data and summary statistics are encouraged.

- Data tables from analytical runs of clinical or preclinical samples : Tables should include assay run identification, sample identification, raw data and back-calculated results, integration codes, and/or other reporting codes.

- Complete serial chromatograms from 5 to 20% of subjects, with standards and QC samples from those analytical runs : For pivotal bioequivalence studies for marketing, chromatograms from 20% of serially selected subjects should be included. In other studies, chromatograms from 5% of randomly selected subjects in each study should be included. Subjects whose chromatograms are to be submitted should be defined prior to the analysis of any clinical samples.

- Reasons for missing samples.

- Documentation for repeat analyses : Documentation should include the initial and repeat analysis results, the reported result, assay run identification, the reason for the repeat analysis, the requestor of the repeat analysis, and the manager authorizing reanalysis. Repeat analysis of a clinical or preclinical sample should be performed only under a predefined SOP.[ 40 ]

- Documentation for reintegrated data : Documentation should include the initial and repeat integration results, the method used for reintegration, the reported result, assay run identification, the reason for the reintegration, the requestor of the reintegration, and the manager authorizing reintegration. Reintegration of a clinical or preclinical sample should be performed only under a predefined SOP.

- Deviations from the analysis protocol or SOP, with reasons and justifications for the deviations.

OTHER INFORMATION

Other information applicable to both method development and establishment and/or to routine sample analysis could include: lists of abbreviations and any additional codes used, including sample condition codes, integration codes, and reporting codes, reference lists and legible copies of any references.[ 41 – 43 ]

SOPs or protocols cover the following areas:

- calibration standard acceptance or rejection criteria,

- calibration curve acceptance or rejection criteria,

- QC sample and assay run acceptance or rejection criteria,

- acceptance criteria for reported values when all unknown samples are assayed in duplicate,

- sample code designations, including clinical or preclinical sample codes and bioassay sample code,

- assignment of clinical or preclinical samples to assay batches,

- sample collection, processing, and storage and

- repeat analyses of samples, reintegration of samples.

APPLICATION OF VALIDATED METHOD TO ROUTINE DRUG ANALYSIS

Assays of all samples of an analyte in a biological matrix should be completed within the time period for which stability data are available. In general, biological samples can be analyzed with a single determination without duplicate or replicate analysis if the assay method has acceptable variability as defined by validation data.[ 44 ] This is true for procedures where precision and accuracy variabilities routinely fall within acceptable tolerance limits. For a difficult procedure with a labile analyte where high precision and accuracy specifications may be difficult to achieve, duplicate or even triplicate analyses can be performed for a better estimate of analyte.

The following recommendations should be noted in applying a bioanalytical method to routine drug analysis.

- A matrix-based standard curve should consist of a minimum of six standard points, excluding blanks (either single or replicate), covering the entire range.

- Response function : Typically, the same curve fitting, weighting, and goodness of fit determined during pre-study validation should be used for the standard curve within the study. Response function is determined by appropriate statistical tests based on the actual standard points during each run in the validation. Changes in the response function relationship between pre-study validation and routine run validation indicate potential problems.

- The QC samples should be used to accept or reject the run. These QC samples are matrix spiked with analyte.[ 45 ]

- System suitability : Based on the analyte and technique, a specific SOP (or sample) should be identified to ensure optimum operation of the system used.

- Any required sample dilutions should use like matrix (e.g., human to human) obviating the need to incorporate actual within-study dilution matrix QC samples.

- Repeat analysis : It is important to establish an SOP or guideline for repeat analysis and acceptance criteria. This SOP or guideline should explain the reasons for repeating sample analysis. Reasons for repeat analyses could include repeat analysis of clinical or preclinical samples for regulatory purposes, inconsistent replicate analysis, samples outside of the assay range, sample processing errors, equipment failure, poor chromatography, and inconsistent PK data. Reassays should be done in triplicate if the sample volume allows. The rationale for the repeat analysis and the reporting of the repeat analysis should be clearly documented.

- Sample data reintegration : An SOP or guideline for sample data reintegration should be established. This SOP or guideline should explain the reasons for reintegration and how the reintegration is to be performed. The rationale for the reintegration should be clearly described and documented. Original and reintegration data should be reported.

ACCEPTANCE CRITERIA FOR THE RUN

The following acceptance criteria should be considered for accepting the analytical run.

- Standards and QC samples can be prepared from the same spiking stock solution, provided the solution stability and accuracy have been verified. A single source of matrix may also be used, provided selectivity has been verified.

- Standard curve samples, blanks, QCs, and study samples can be arranged as considered appropriate within the run.

- Placement of standards and QC samples within a run should be designed to detect assay drift over the run.

- Matrix-based standard calibration samples : 75%, or a minimum of six standards, when back-calculated (including ULOQ), should fall within 15%, except for LLOQ, when it should be 20% of the nominal value. Values falling outside these limits can be discarded, provided they do not change the established model.

- Specific recommendation for method validation should be provided for both the intra-day and intra-run experiment.[ 46 ]

- QC samples : QC samples replicated (at least once) at a minimum of three concentrations [one within 3× of the LLOQ (low QC), one in the midrange (middle QC), and one approaching the high end of the range (high QC)] should be incorporated into each run. The results of the QC samples provide the basis of accepting or rejecting the run. At least 67% (four out of six) of the QC samples should be within 15% of their respective nominal (theoretical) values; 33% of the QC samples (not all replicates at the same concentration) can be outside the 15% of the nominal value. A confidence interval approach yielding comparable accuracy and precision is an appropriate alternative.

- The minimum number of samples (in multiples of three) should be at least 5% of the number of unknown samples or six total QCs, whichever is greater.

- Samples involving multiple analytes should not be rejected based on the data from one analyte failing the acceptance criteria.

- The data from rejected runs need not be documented, but the fact that a run was rejected and the reason for failure should be recorded.[ 47 ]

Bioanalysis and the production of PK, toxicokinetic and metabolic data play a fundamental role in pharmaceutical research and development; therefore, the data must be produced to acceptable scientific standards. For this reason and the need to satisfy regulatory authority requirements, all bioanalytical methods should be properly validated and documented. The lack of a clear experimental and statistical approach for the validation of bioanalytical methods has led scientists in charge of the development of these methods to propose a practical strategy to demonstrate and assess the reliability of chromatographic methods employed in bioanalysis. The aim of this article is to provide simple to use approaches with a correct scientific background to improve the quality of the bioanalytical method development and validation process. Despite the widespread availability of different bioanalytical procedures for low-molecular weight drug candidates, ligand binding assay remains of critical importance for certain bioanalytical applications in support of drug development such as for antibody, receptor, etc. This article gives an idea about which criteria bioanalysis based on immunoassay should follow to reach for proper acceptance. Applications of bioanalytical method in routine drug analysis are also taken into consideration in this article. These various essential development and validation characteristics for bioanalytical methodology have been discussed with a view to improving the standard and acceptance in this area of research.

Source of Support: Nil

Conflict of Interest: None declared.

M10: bioanalytical method validation and study sample analysis : guidance for industry

- Skip to main content

- Skip to FDA Search

- Skip to in this section menu

- Skip to footer links

The .gov means it’s official. Federal government websites often end in .gov or .mil. Before sharing sensitive information, make sure you're on a federal government site.

The site is secure. The https:// ensures that you are connecting to the official website and that any information you provide is encrypted and transmitted securely.

U.S. Food and Drug Administration

- Search

- Menu

- Regulatory Information

- Search for FDA Guidance Documents

- Bioanalytical Method Validation Guidance for Industry

GUIDANCE DOCUMENT

Bioanalytical Method Validation Guidance for Industry May 2018

The Food and Drug Administration (FDA or Agency) is announcing the availability of a final guidance for industry entitled “Bioanalytical Method Validation.” This final guidance incorporates public comments to the revised draft published in 2013 as well as the latest scientific feedback concerning bioanalytical method validation and provides the most up-to-date information needed by drug developers to ensure the bioanalytical quality of their data.

Submit Comments

You can submit online or written comments on any guidance at any time (see 21 CFR 10.115(g)(5))

If unable to submit comments online, please mail written comments to:

Dockets Management Food and Drug Administration 5630 Fishers Lane, Rm 1061 Rockville, MD 20852

All written comments should be identified with this document's docket number: FDA-2013-D-1020 .

- The Biopharmaceutical and Gene Therapy Terminology Guide

- CHROMtalks 2024

- Publications

- Conferences

Validation of Stability-Indicating HPLC Methods for Pharmaceuticals: Overview, Methodologies, and Case Studies

- Anissa W. Wong

In the pharmaceutical industry, method validation is essential. But what are the best practices? We review regulatory requirements, validation parameters, methodologies, acceptance criteria, trends, and software tools.

This installment is the third in a series of three articles on stability testing of small-molecule pharmaceuticals. This article provides a comprehensive and updated overview of the validation of stability-indicating methods for drug substances and drug products, and addresses regulatory requirements, validation parameters, methodologies, acceptance criteria, trends, and software tools. Examples of generic protocols, reporting templates, and data summaries are included as supplemental reference resources.

The validation of analytical procedures used in regulated stability testing of drug substances (DS) and drug products (DP) is required by law and regulatory guidelines. For instance:

"The accuracy, sensitivity, specificity, and reproducibility of test methods employed by the firm shall be established and documented. Such validation and documentation may be accomplished in accordance with 211.194(a)" (1).

"The objective of validation of an analytical procedure is to demonstrate that it is suitable for its intended purpose" (2).

Method validation is the process of ensuring that a test procedure is accurate, reproducible, and sensitive within the specified analysis range for the intended application. Although regulatory authorities require method validation for the analytical procedures used in the quality assessments of DS and DP, the actual implementation is open to interpretation and may differ widely among organizations and in different phases of drug development. The reader is referred to regulations (1), guidelines (2–5), books (6–9), journal references (10, 11), and other resources (12) for further descriptions or discussions of associated regulations, methodologies, and common practices. This article focuses on methodologies for small-molecule DS and DP (such as tablets and capsules). Analytical procedures for biologics, gene and cell therapies, and genotoxic impurities are not discussed (6).

The purpose of method validation is to confirm that a method can execute reliably and reproducibly as well as ensure accurate data are generated to monitor the quality of DS and DP. It is essential to understand the intended use of the method to design an appropriate validation plan. The requirements of the plan also must be suitable for the phase of development, because method validation is an ongoing process through the life cycle of the product.

The method validation process can be broken down into three main steps: method design, method validation, and method maintenance (continued verification). Thus, the method itself continues to evolve throughout the product development life cycle. A method is typically “fully” validated at a late phase prior to testing of the biobatches (validation batches). Based on the International Council for Harmonization (ICH) Q6 guideline (13), analytical procedures are also part of the specifications that are submitted to and approved by a regulatory agency. Therefore, changes in a method must be monitored closely (13). After product launch, changes may need to be managed through a formal change control program, depending upon the changes, because prior approval from the regulatory agency, based on ICH Q10, may be required (14).

This section describes data elements required for method validation (see Figure 1) extracted from ICH Q2 (R1) and United States Pharmacopeia (USP) general chapter <1225> (3). Table I lists definitions of the required method validation parameters, extracted from ICH Q2 R1. Discussions of each parameter follow in the next section.

Table II lists the data requirements of different types of analytical procedures. as listed in USP <1225> (3). As described in the previous article in this series (15), the analytical procedures used today are predominantly “composite” reversed-phase liquid chromatography (RPLC) gradient methods with UV detection for the simultaneous determinations of both potency (active pharmaceutical ingredient, or API) and impurities and degradation products. These high-performance liquid chromatography (HPLC) methods often do double duty as a secondary identification test to supplement the spectroscopic identification (such as infrared or UV) of the API in DS or DP samples. For these reasons, the validation data elements required include those for USP Assay Category I (assay), Category II (quantitative), and Category IV (identification), as shown in Table II.

Table XIV provides a summary of validation results for this stability-indicating composite assay and impurity method. This data set is included to illustrate a real-life validation summary to document the scientific soundness of the method (20, 26). However, the data collected exceeded the typical requirements expected for early development.

Challenges in the Analytical Characterization of VLPs Through HPLC-Based Methods

This article discusses the challenges and effective solutions for high performance liquid chromatography (HPLC)-based analytical characterization of virus-like particles (VLPs).

Eyes on the Prize: Overcoming Uncertainty to Realize the Power of 2D-LC Separations

In this month’s column, I highlight some of the primary considerations we face in method development and point to resources that can help users overcome uncertainty and develop highly effective 2D-LC methods.

Recent Developments in HPLC & UHPLC

In the world of liquid chromatography, innovative strides in column technology continue to take place. We are also reminded that there is always more to learn about “well-known” methodologies, and our craft is continuously influenced by important social concerns.

Applying Sustainability Concepts to Modern Liquid Chromatography

Governments are striving to implement policy changes towards a greater use of green technology, specifically around the generation of energy. Individuals and industrial organizations have also taken up the challenge, and now many companies are driving to significantly reduce their environmental footprint, or indeed become carbon negative within very short time frames.

On the Surprising Retention Order of Ketamine Analogs Using a Biphenyl Stationary Phase

An unexpected retention order for ketamine analogs was observed when using a biphenyl stationary phase for liquid chromatography-mass spectrometry (LC–MS).

The LCGC Blog: Celebrating Women in Separation Chemistry at Pittcon 2024

In this edition of The LCGC Blog, Emanuela Gionfriddo discusses the two world-class scientists and trailblazer women in separation chemistry and the awards they received at Pittcon 2024.

2 Commerce Drive Cranbury, NJ 08512

609-716-7777

- Open access

- Published: 05 June 2024

A miRNA-disease association prediction model based on tree-path global feature extraction and fully connected artificial neural network with multi-head self-attention mechanism

- Hou Biyu 1 ,

- Li Mengshan 1 ,

- Hou Yuxin 2 ,

- Zeng Ming 1 ,

- Wang Nan 3 &

- Guan Lixin 1

BMC Cancer volume 24 , Article number: 683 ( 2024 ) Cite this article

117 Accesses

1 Altmetric

Metrics details

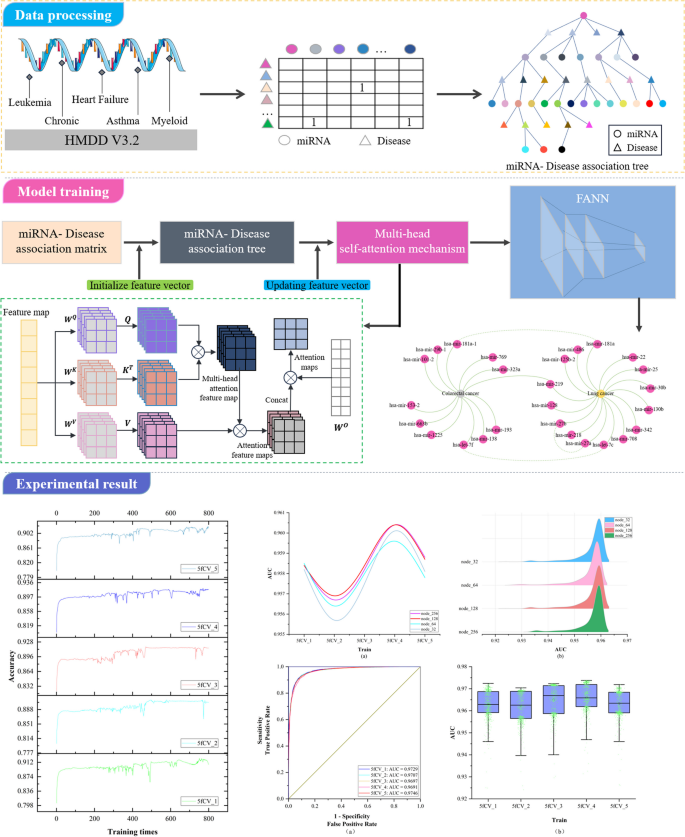

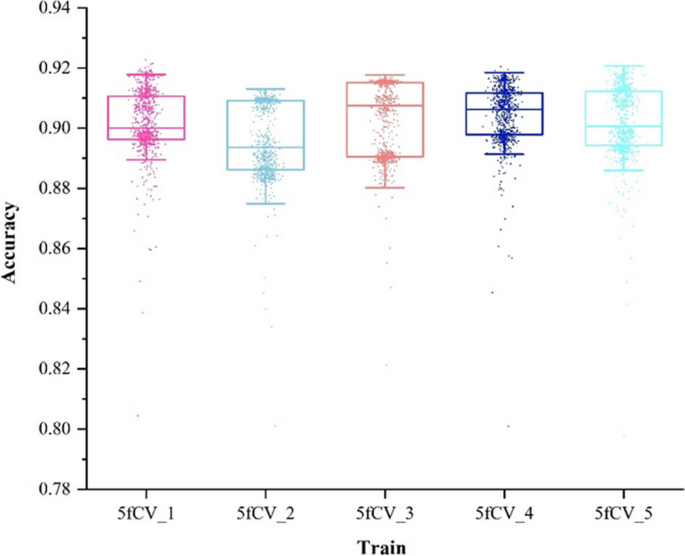

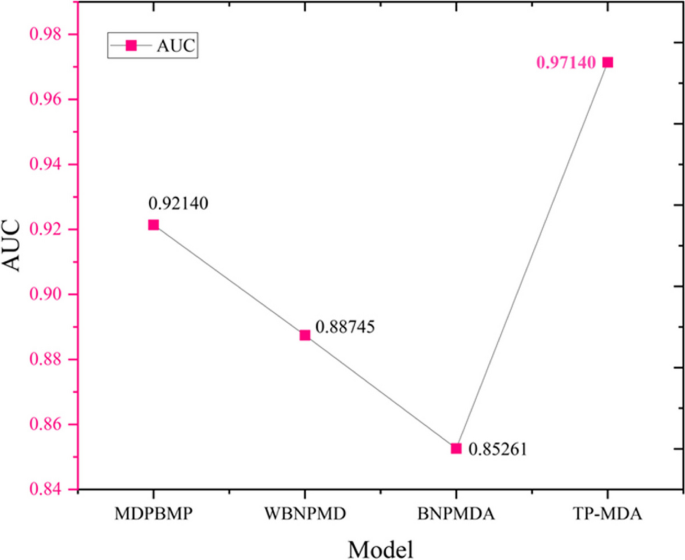

MicroRNAs (miRNAs) emerge in various organisms, ranging from viruses to humans, and play crucial regulatory roles within cells, participating in a variety of biological processes. In numerous prediction methods for miRNA-disease associations, the issue of over-dependence on both similarity measurement data and the association matrix still hasn’t been improved. In this paper, a miRNA-Disease association prediction model (called TP-MDA) based on tree path global feature extraction and fully connected artificial neural network (FANN) with multi-head self-attention mechanism is proposed. The TP-MDA model utilizes an association tree structure to represent the data relationships, multi-head self-attention mechanism for extracting feature vectors, and fully connected artificial neural network with 5-fold cross-validation for model training.

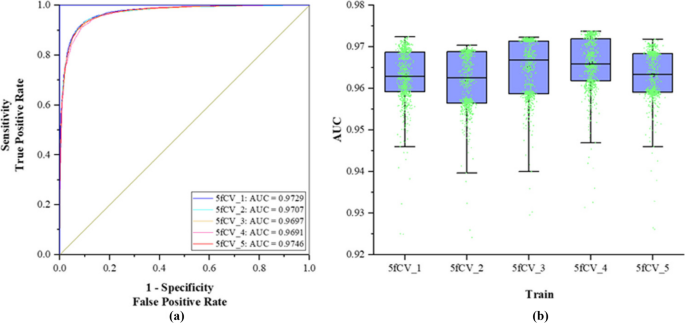

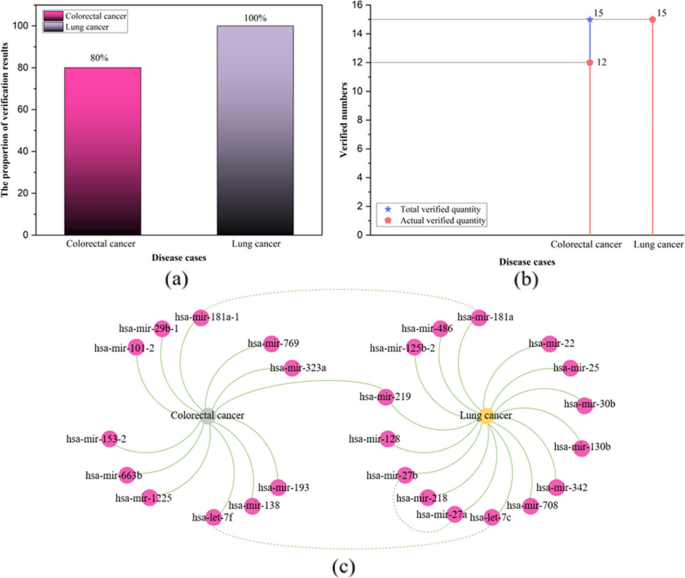

The experimental results indicate that the TP-MDA model outperforms the other comparative models, AUC is 0.9714. In the case studies of miRNAs associated with colorectal cancer and lung cancer, among the top 15 miRNAs predicted by the model, 12 in colorectal cancer and 15 in lung cancer were validated respectively, the accuracy is as high as 0.9227.

Conclusions

The model proposed in this paper can accurately predict the miRNA-disease association, and can serve as a valuable reference for data mining and association prediction in the fields of life sciences, biology, and disease genetics, among others.

Graphical Abstract

Peer Review reports

Introduction

MicroRNA (miRNA) is a class of short 20–24 nucleotide non-coding RNA molecules that play critical regulatory roles in cells [ 1 , 2 ]. They form a complex regulatory network and are involved in various biological processes such as cell proliferation, differentiation and apoptosis [ 3 ]. In addition, miRNA is closely related to the occurrence and development of cancer, cardiovascular diseases, nervous system diseases and other diseases [ 4 , 5 , 6 , 7 ]. For example, cancer stem cell-like cells (CSCs) are increasingly recognized as key cell tumor populations that drive not only tumorigenesis, but also cancer progression, treatment resistance, and metastatic recurrence. Existing evidence suggests that different metabolic pathways regulated by let-7 miRNA can impact CSC self-renewal, differentiation, and treatment resistance [ 8 ]. Therefore, in-depth research on the association between miRNAs and diseases is of great importance for understanding cellular regulatory mechanisms, discovering new therapeutic targets, and developing relevant biomedical applications [ 9 , 10 , 11 , 12 ].

With the continuous advancement of bioinformatics and the advent of the artificial intelligence era, researchers are increasingly using machine learning and deep learning algorithms to predict miRNA-disease associations [ 13 , 14 , 15 ]. It can provide validation guidance for biological experiments, thereby conserving resources and further advancing the field of miRNA and disease association prediction [ 16 , 17 , 18 ].It also has the potential to drive further advances in miRNA-disease association prediction. Based on different prediction strategies, existing methods can be categorized into four types: machine learning-based methods, information propagation-based methods, scoring function-based methods, and matrix transformation-based methods [ 19 , 20 ]. Machine learning-based prediction methods have recently become a focus and are gaining popularity among researchers [ 21 , 22 ]. Yu et al. [ 23 ] constructed a heterogeneous information network including miRNA, diseases, and genes. They defined seven symmetric meta-paths based on different semantic interpretations. After initializing the feature vectors for all nodes, they extracted and aggregated the vector information carried by all nodes on meta-path instances and updated the starting node’s feature vector. Then, they aggregated the vector information obtained from nodes on different meta-paths. Finally, they used miRNA and disease embedding feature vectors to compute their association scores. Xie et al. [ 24 ] constructed miRNA-disease bias scores using aggregated hierarchical clustering. A bipartite network recommendation algorithm was then used to assign transfer weights based on these bias ratings to predict potential miRNA-disease associations. Chen et al. [ 25 ] combined known miRNA and disease similarities to establish transfer weights and appropriately configured initial information. They then used a two-stage bipartite network algorithm to infer potential miRNA-disease associations.

In the study of miRNA-disease associations, there are two areas that need improvement: (1) The ability to capture indirect association features is inadequate. Among various computational methods, researchers use miRNA-disease heterogeneous networks to structure miRNA-disease association data and then extract feature vectors from the heterogeneous network. However, the associations within the heterogeneous network are limited to direct relationships between miRNAs and diseases, and their ability to capture indirect associations is often weak. This limitation may result in reduced model performance. (2) Over-reliance on similarity measurement data. Many computational methods rely on similarity information such as miRNA similarity and disease similarity for model training. The reliance on similarity data can, to a certain extent, influence the discriminative ability of the model and have an impact on its predictive accuracy.

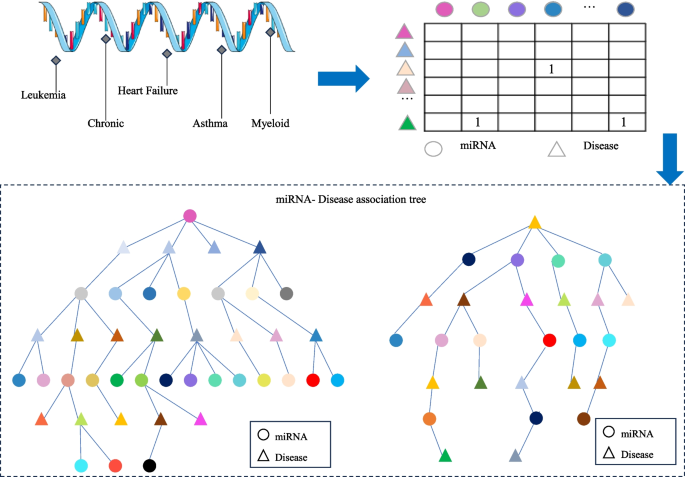

To address the first issue, this paper investigates a data organization approach based on a tree-like topological structure. It represents miRNAs or diseases as root nodes and then searches for all related diseases or miRNAs as the second layer of the tree. All miRNAs or disease nodes associated with each disease or miRNA in the second layer are then found in the dataset. This process is repeated until the entire dataset has been thoroughly searched. At this point, there is a unique tree with the miRNA or disease as the root node, called the miRNA-disease association tree. This tree contains all association relationships related to that miRNA or disease within the dataset. Next, the vector information carried by all nodes on each path instance is extracted on the paths of the tree. Vector information obtained from nodes on different tree-paths is aggregated to generate feature vectors for model training. The miRNA-disease association tree has the potential to improve the capture of indirect association features. In response to Problem 2, since the similarity of data is often subjective based on some human-set metric, these data may produce misleading results in some cases, which in turn affects the performance of the algorithm. In contrast to similarity measures, multi-head self-attention mechanisms better capture long-distance dependencies in input sequences by allowing the model to focus on information from different locations, which in turn improves the predictive performance of the model. In this paper, we explore the use of the multi-head self-attention mechanism to fully extract the long dependencies carried by association trees, avoiding the bias created by using similarity measures and overcoming the problem of over-reliance on similarity measure data. As a result, the paper introduces a miRNA-disease association prediction model. This model uses a multi-head self-attention mechanism for comprehensive feature extraction on the tree-paths. It then trains the dataset using the Fully Connected Artificial Neural Network (FANN) model in a 5-fold cross-validation experiment. This model is referred to as TP-MDA.

Materials and methods

Establishing the association matrix.

Based on the miRNA-disease association information, remove duplicate, missing, and invalid data in order to construct the miRNA-disease association matrix. Given m miRNAs, M={m 1 、…、m i 、…、m m },and n diseases, D = {d 1 , …, d j , …, d n },the miRNA-disease association matrix is defined as R, where R ∈ R m×n , as shown in Eq. ( 1 ):

Subsequently, the miRNA-disease association tree is constructed by continuously exploring the association matrix. The process of association tree construction is shown in Fig. 1 .

The construction of the association tree

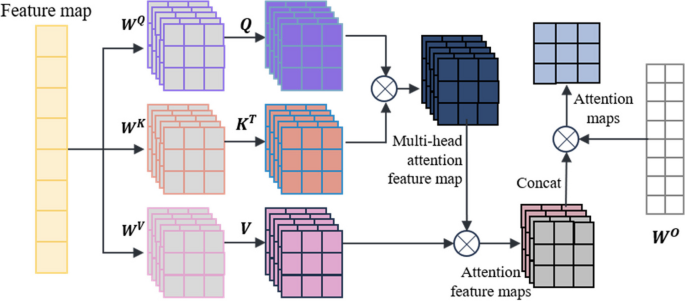

- Multi-head self-attention mechanism

The self-attention mechanism is a special type of attention mechanism used to handle relationships between different positions in sequence data. The multi-head self-attention mechanism is a common extension of the attention mechanism in deep learning that employs multiple attention heads at the same level, allowing for the fusion of different attention weights. In this paper, a multi-head self-attention mechanism is used to process the feature vectors extracted from the miRNA-disease association tree. The self-attention mechanism is as shown in Eqs. ( 2 ) and ( 3 ):

In the equations, X represents the vector information extracted from the miRNA-disease association tree, and Q, K, V represent the query matrix, key matrix, and value matrix, respectively. These three matrices are obtained by linear transformations of X using W Q , W K , and W V . Here, d k represents the dimension of the query, key, or value.

The multi-head self-attention mechanism transforms the linear matrices from a set ( \({\varvec{W}}^{\varvec{Q}},\) \({\varvec{W}}^{\varvec{K}},\) \({\varvec{W}}^{\varvec{V}}\) ) to multiple sets {( \({\varvec{W}}_{0}^{\varvec{Q}}\) , \({\varvec{W}}_{0}^{\varvec{K}}\) , \({\varvec{W}}_{0}^{\varvec{V}}\) ), …, ( \({\varvec{W}}_{\varvec{i}}^{\varvec{Q}}\) , \({\varvec{W}}_{\varvec{i}}^{\varvec{K}}\) , \({\varvec{W}}_{\varvec{i}}^{\varvec{V}}\) ) }. Different sets of linear matrices with random initialization ( \({\varvec{W}}^{\varvec{Q}}\) , \({\varvec{W}}^{\varvec{K}}\) , \({\varvec{W}}^{\varvec{V}}\) ) can map the input vectors to different subspaces, allowing the model to understand input information from different spatial dimensions. The multi-head attention mechanism is represented as shown in Eqs. ( 4 ) and ( 5 ):

In these equations, \({\varvec{W}}_{\varvec{i}}^{\varvec{Q}}\) , \({\varvec{W}}_{\varvec{i}}^{\varvec{K}}\) , \({\varvec{W}}_{\varvec{i}}^{\varvec{V}}\) represent the query matrix, key matrix, and value matrix for the i-th head, where h is the number of heads. \({\varvec{W}}^{\varvec{O}}\) is the linear transformation matrix used to map the output of the multi-head self-attention mechanism into the same dimensional space.

The key point of the self-attention mechanism is the ability to consider information about all other elements in the sequence while calculating the association between each element, rather than considering only a fixed number of adjacent elements as in traditional fixed window or convolution operations. Therefore, the self-attention mechanism can effectively manage long dependencies, allowing for improved capture of semantic information within the sequence, and there are numerous long dependencies to be addressed within the miRNA-disease association tree. In this paper, after the initial feature vector information is extracted from the tree nodes, the multi-head self-attention mechanism is used for information processing, resulting in the acquisition of the updated feature vector, which is used as input for model training. The operation principle is shown in Fig. 2 .

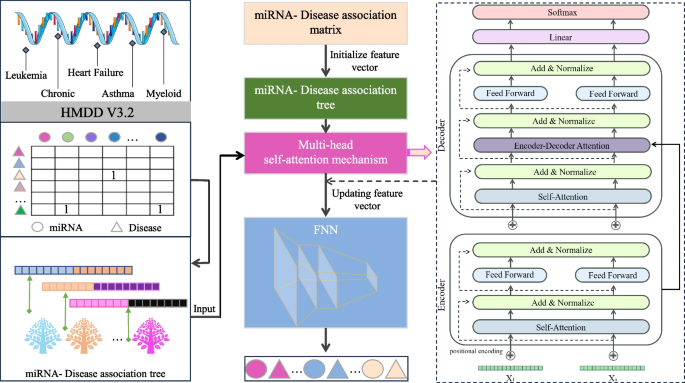

TP-MDA model

In the TP-MDA model, the miRNA-disease association matrix is transformed into a miRNA-disease association tree to explore long dependencies between nodes. A multi-head self-attention mechanism network is used to aggregate and extract information along the tree-paths. The outputs are concatenated to create feature vectors, which are subsequently used as input for training the FANN model. The schematic diagram of the TP-MDA model is illustrated in Fig. 3 .

Model diagram

In this paper, a Fully Connected Artificial Neural Network (FANN) is used to train the data. In addition to the input and output layers, three hidden layers have been configured. The ReLU (Rectified Linear Unit) function is used as the activation function, as depicted in Eq. ( 6 ):

For the output layer, a sigmoid function is set as the activation function, as shown in Eq. ( 7 ):

The loss function used is cross-entropy loss, and the TP-MDA model is trained using the Adam optimizer. The learning rate is set to 0.000001 and the number of iterations is set to 800. The prediction results of the model represent the predicted values for miRNA-disease associations.

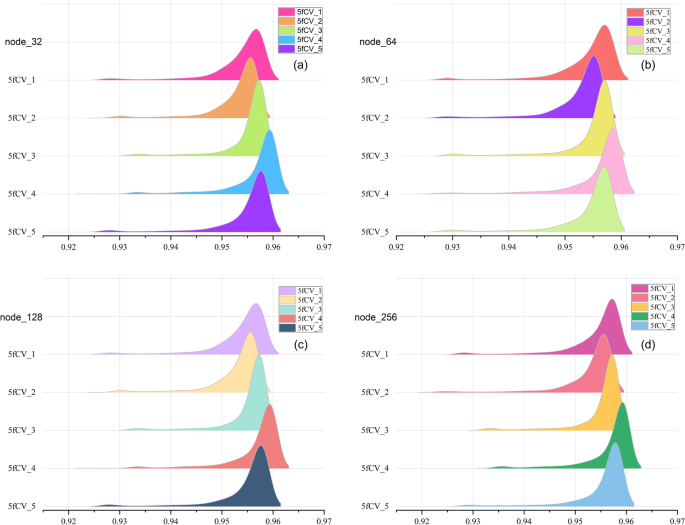

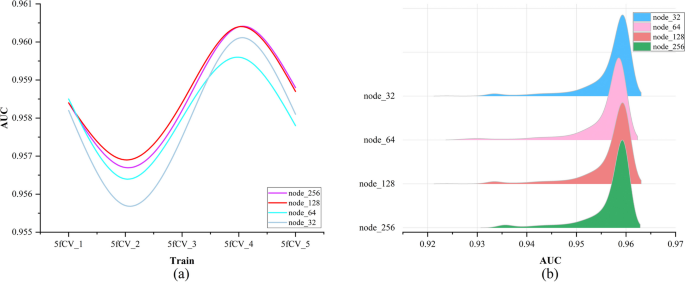

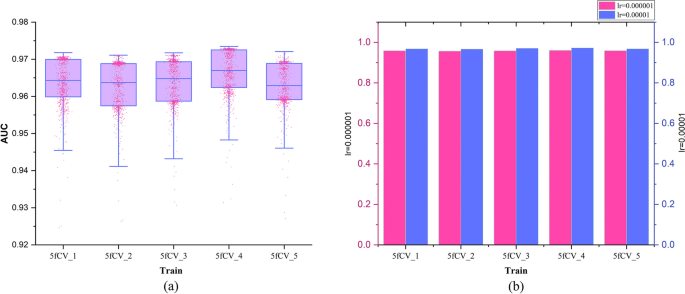

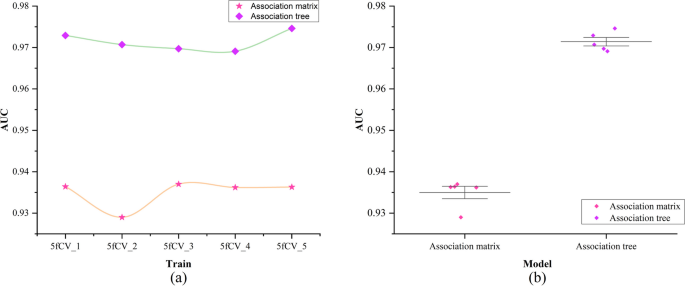

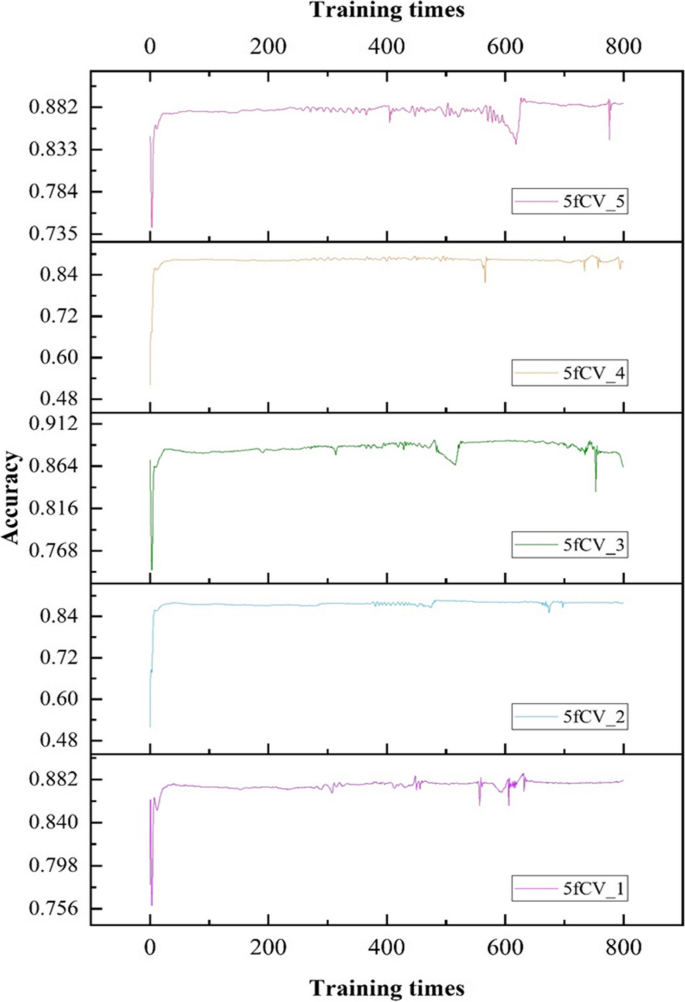

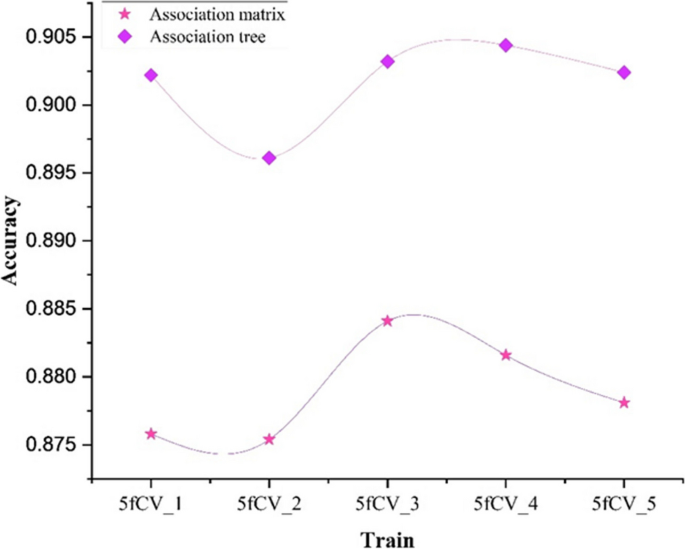

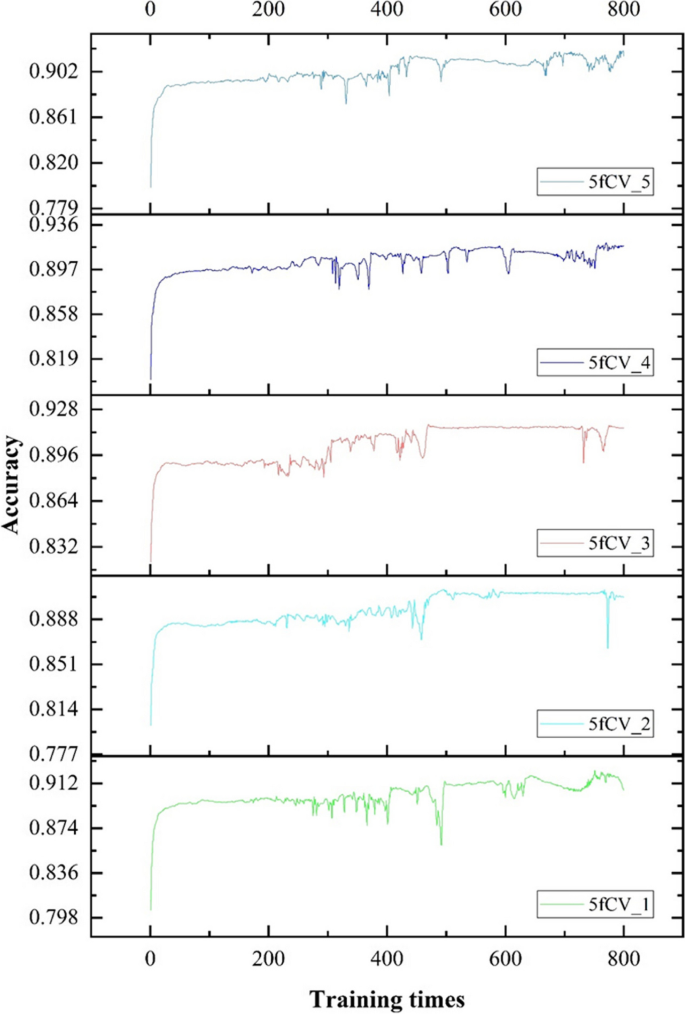

Data source and model evaluation