

Higher education construction costs for 2023.

Fresh data from Gordian breaks down the average cost per square foot for a two-story college classroom building across 10 U.S. cities.

Colleges and universities manage more than 6 billion square feet of campus space in 210,000 buildings nationwide, with a replacement value of $2 trillion and a backlog of urgent capital renewal needs exceeding $112 billion.

The state of campus facilities will define the financial future of higher ed institutions more than any other single factor. Data collected from a thorough assessment of campus conditions should be used to prioritize building portfolio needs and establish a strategic framework for linking today’s investment realities with future campus aspirations.

Readying campus facilities for future students can take the form of renovations, updates or wholesale replacement, with that decision driven by how well available resources align with institutional programmatic priorities.

As North America’s leading construction cost database, Gordian’s RSMeans Data has been synonymous with reliability since the 1940s, so you can trust it when budgeting for projects that will transform your campus for students, alumni, professors and staff.

With localized square-foot costs for more than 100 building models, Gordian’s RSMeans Data allows architects, engineers and other preconstruction professionals to quickly and accurately create conceptual estimates for future builds. This table shows the most recent costs per square foot for two-story college classrooms in select cities, with sustainable, “green” building considerations.

Visit rsmeans.com/bdandc for more information about Gordian’s RSMeans Data.

Cost per Square Foot for Two-Story College Classrooms with Green Building Considerations (USD)

| City | 2020 | 2021 | 2022 | 2023 |
|---|---|---|---|---|
| National Average | $220.32 | $221.38 | $229.47 | $243.54 |
| Athens, GA | $178.55 | $175.75 | $182.06 | $188.76 |
| Austin, TX | $176.56 | $179.01 | $183.94 | $196.80 |
| Tuscaloosa, AL | $186.09 | $186.01 | $194.43 | $207.45 |
| Tempe, AZ | $188.93 | $188.88 | $194.50 | $207.94 |
| Charlottesville, VA | $185.32 | $190.71 | $196.39 | $201.75 |
| Boulder, CO | $195.82 | $193.08 | $201.54 | $210.98 |
| Bloomington, IN | $199.80 | $199.26 | $206.05 | $216.72 |
| Ann Arbor, MI | $216.59 | $219.54 | $224.73 | $235.17 |
| Madison, WI | $216.75 | $224.27 | $227.99 | $242.15 |
| Berkeley, CA | $280.11 | $283.40 | $287.92 | $303.11 |

Please note: Square foot models are used for planning and budgeting and are not meant for detailed estimates.
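The table lends itself to a few quick planning calculations. The Python sketch below copies a handful of its rows and computes year-over-year change, a city-to-national ratio (a hypothetical helper here called `location_ratio`, not an RSMeans term), and a rough conceptual budget as cost per square foot times gross area. It assumes cost scales linearly with area, which RSMeans square-foot models do not strictly do, so treat the budget figure as a planning number only, in line with the note above.

```python
# Cost per square foot (USD), copied from a few rows of the RSMeans table above.
COST_PER_SF = {
    "National Average": {2020: 220.32, 2021: 221.38, 2022: 229.47, 2023: 243.54},
    "Athens, GA": {2020: 178.55, 2021: 175.75, 2022: 182.06, 2023: 188.76},
    "Madison, WI": {2020: 216.75, 2021: 224.27, 2022: 227.99, 2023: 242.15},
    "Berkeley, CA": {2020: 280.11, 2021: 283.40, 2022: 287.92, 2023: 303.11},
}


def pct_change(city: str, start: int, end: int) -> float:
    """Percentage change in cost per square foot between two years."""
    series = COST_PER_SF[city]
    return (series[end] - series[start]) / series[start] * 100


def location_ratio(city: str, year: int = 2023) -> float:
    """City cost divided by the national average for the same year (hypothetical helper)."""
    return COST_PER_SF[city][year] / COST_PER_SF["National Average"][year]


def conceptual_estimate(city: str, gross_sf: float, year: int = 2023) -> float:
    """Rough budget: cost per square foot times gross floor area (assumes linear scaling)."""
    return COST_PER_SF[city][year] * gross_sf


if __name__ == "__main__":
    print(f"National, 2022->2023: {pct_change('National Average', 2022, 2023):.2f}%")
    print(f"Berkeley vs. national, 2023: {location_ratio('Berkeley, CA'):.3f}")
    print(f"40,000 sf in Madison, 2023: ${conceptual_estimate('Madison, WI', 40_000):,.0f}")
```

With these rows, the national average rose about 6.1% from 2022 to 2023, Berkeley ran roughly 24% above the national figure in 2023, and a hypothetical 40,000-sf building at Madison's 2023 rate works out to about $9.7 million before any size adjustment.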

Related Stories

University Buildings | Jun 28, 2024

The American University in Cairo launches a 270,000-sf expansion of its campus in New Cairo, Egypt.

In New Cairo, Egypt, The American University in Cairo (AUC) has broken ground on a roughly 270,000-sf expansion of its campus. The project encompasses two new buildings intended to enhance the physical campus and support AUC’s mission to provide top-tier education and research.

University Buildings | Jun 18, 2024

UC Riverside’s new School of Medicine building supports team-based learning, showcases passive design strategies.

The University of California, Riverside, School of Medicine has opened the 94,576-sf, five-floor Education Building II (EDII). Created by the design-build team of CO Architects and Hensel Phelps, the medical school’s new home supports team-based student learning, offers social spaces, and provides departmental offices for faculty and staff.

Headquarters | Jun 5, 2024

Several new projects are upgrading historic Princeton, N.J.

Multifamily, cultural, and office additions are among the new construction.

Mass Timber | May 31, 2024

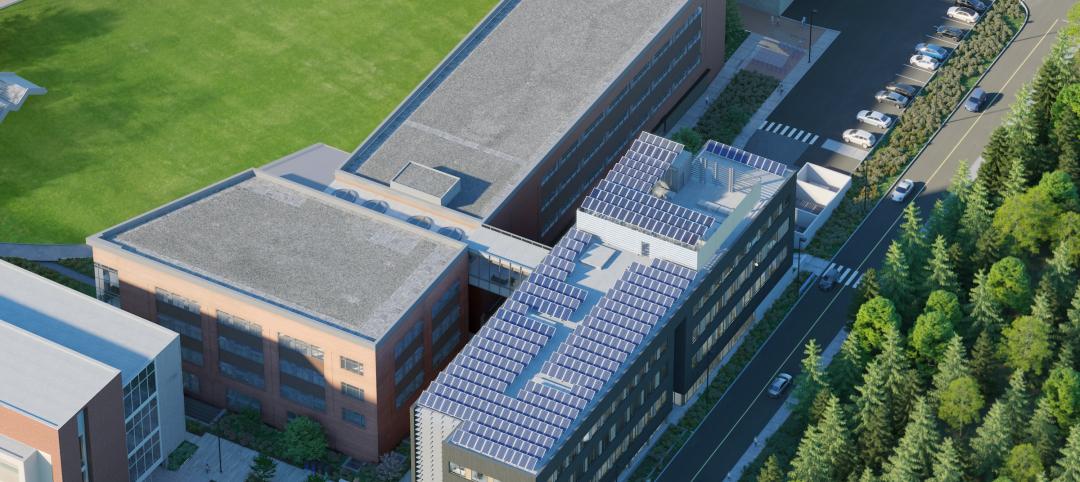

Mass timber a big part of Western Washington University’s net-zero ambitions.

Western Washington University, in Bellingham, Wash., 90 miles from Seattle, is in the process of expanding its ABET-accredited programs for electrical engineering, computer engineering and science, and energy science. As part of that process, the university is building Kaiser Borsari Hall, the 54,000-sf new home for those academic disciplines that will include teaching labs, research labs, classrooms, collaborative spaces, and administrative offices.

University Buildings | May 30, 2024

Washington University School of Medicine opens one of the world’s largest neuroscience research buildings.

In St. Louis’ Cortex Innovation District, Washington University School of Medicine recently opened its new Jeffrey T. Fort Neuroscience Research Building. Designed by CannonDesign and Perkins&Will, the 11-story, 609,000-sf facility is one of the largest neuroscience buildings in the world.

University Buildings | May 10, 2024

UNC Chapel Hill’s new medical education building offers seminar rooms and midsize classrooms, and notably, no lecture halls.

The University of North Carolina at Chapel Hill has unveiled a new medical education building, Roper Hall. Designed by The S/L/A/M Collaborative (SLAM) and Flad Architects, the UNC School of Medicine’s new building intends to train new generations of physicians through dynamic and active modes of learning.

Mass Timber | Apr 25, 2024

Bjarke Ingels Group designs a mass timber cube structure for the University of Kansas.

Bjarke Ingels Group (BIG) and executive architect BNIM have unveiled their design for a new mass timber cube structure called the Makers’ KUbe for the University of Kansas School of Architecture & Design. A six-story, 50,000-sf building for learning and collaboration, the light-filled KUbe will house studio and teaching space, 3D-printing and robotic labs, and a ground-level cafe, all organized around a central core.

Student Housing | Apr 17, 2024

Student housing partnership gives residents free mental health support.

Text-based mental health support app Counslr has partnered with Aptitude Development to provide free mental health support to residents of student housing locations.

Student Housing | Apr 12, 2024

Construction begins on Auburn University’s new first-year residence hall.

The new first-year residence hall along Auburn University's Haley Concourse.

University Buildings | Apr 10, 2024

Columbia University to begin construction on New York City’s first all-electric academic research building.

Columbia University will soon begin construction on New York City’s first all-electric academic research building. Designed by Kohn Pedersen Fox (KPF), the 80,700-sf building for the university’s Vagelos College of Physicians and Surgeons will provide eight floors of biomedical research and lab facilities as well as symposium and community engagement spaces.



Construction costs for educational buildings in the U.S. 2024, by city

Average construction costs of educational buildings in the United States in 1st quarter of 2024, by city (in U.S. dollars per square foot)


Additional Information


Region: United States

Survey period: 1st quarter of 2024

The figures provided are averages, calculated from the low and high costs found in the respective market.

Hard construction costs per square foot of gross floor area.
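These two notes describe a simple calculation: each published figure is the average of a low and a high hard-cost rate, expressed per square foot of gross floor area. A minimal Python sketch; the low and high rates and the building size below are made-up placeholders, since the underlying figures are not shown here:

```python
def midpoint_rate(low_per_sf: float, high_per_sf: float) -> float:
    """Average of the low and high hard-cost rates (USD per sf of gross floor area)."""
    if low_per_sf > high_per_sf:
        raise ValueError("low rate must not exceed high rate")
    return (low_per_sf + high_per_sf) / 2


def hard_cost(gross_floor_area_sf: float, low_per_sf: float, high_per_sf: float) -> float:
    """Hard construction cost for a building, using the midpoint rate."""
    return gross_floor_area_sf * midpoint_rate(low_per_sf, high_per_sf)


# Hypothetical market range and building size, for illustration only.
print(midpoint_rate(300.0, 400.0))      # 350.0
print(hard_cost(50_000, 300.0, 400.0))  # 17500000.0
```

Because the rate covers hard costs only, soft costs such as design fees, furniture and equipment would come on top of a figure like this.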


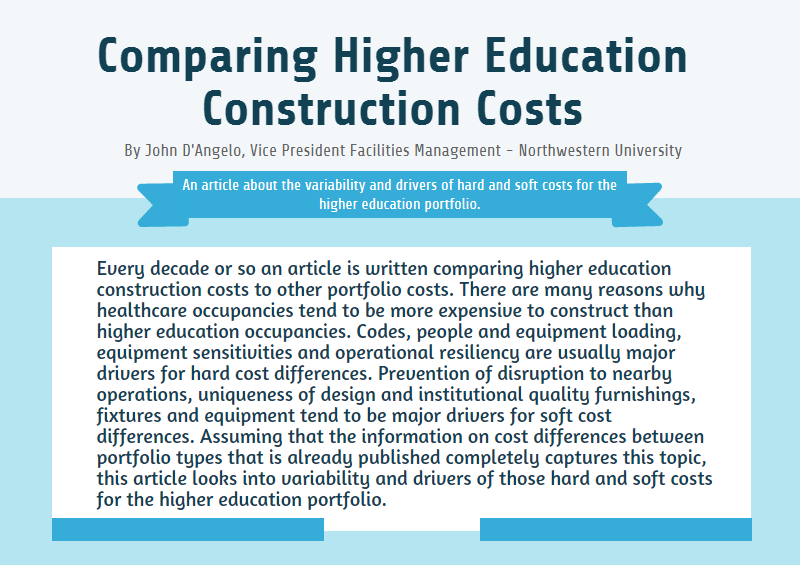

Cost Analysis for Education Projects: Resources and Reflections


You’ve got an education program, and you’re confident that it’s having an impact. But is it worth the cost? How can you know, and how can you compare it to other education programs? Cost-effectiveness analysis tells you how much you pay for a given increase in student learning or student school participation, but most evaluations don’t include it (for various reasons).
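The core ratio is simple arithmetic: divide the impact by the cost. A minimal Python sketch, assuming impact is measured in standard deviations (SD) of test scores and cost in US dollars per student; expressing the result as SD gained per $100 is one common convention, and the two programs below are hypothetical:

```python
from dataclasses import dataclass


@dataclass
class Program:
    name: str
    effect_sd: float         # impact on learning, in standard deviations
    cost_per_student: float  # total cost per student reached, in USD


def sd_per_100_dollars(p: Program) -> float:
    """Learning gain (SD) bought with $100 of spending per student."""
    return p.effect_sd / p.cost_per_student * 100


def cost_per_tenth_sd(p: Program) -> float:
    """Dollars per student needed to raise learning by 0.1 SD."""
    return p.cost_per_student / (p.effect_sd / 0.1)


# Hypothetical programs: A has the larger impact, B is much cheaper.
a = Program("A", effect_sd=0.10, cost_per_student=20.0)
b = Program("B", effect_sd=0.04, cost_per_student=5.0)
for p in (a, b):
    print(p.name, round(sd_per_100_dollars(p), 2), round(cost_per_tenth_sd(p), 2))
```

Program B buys more learning per dollar despite its smaller impact, which is exactly the situation the third reflection below cautions about.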

If you want to do cost analysis, here are a few resources (depending on what you want) and a few of my own reflections. (There are many more resources out there; if you have favorites, share them in the comments!)

If you want a quick introduction (10 pages or less):

- The Abdul Latif Jameel Poverty Action Lab (J-PAL) has a helpful brief, including suggestions on what to do if you can’t completely break down the costs into different components.

- A brief from the IRC and the World Bank provides a simple 5-step process for gathering cost data.

- USAID has a two-page brief that explains how you can use cost data, the kinds of questions it can help answer, and how to get started with cost analysis.

If you want templates to help you capture the costs:

- J-PAL has a detailed Excel template that will spit out an estimate of cost-effectiveness for you in the final tab.

- Hey, that seems too complicated! Is there a simpler template? More detailed cost data will get you better estimates, but J-PAL also provides a much simpler template to get you a general sense of the cost of the program.

- USAID has templates as well; they’re a little less automated.

If you want more detail on how to get cost analysis right:

- As a basic introduction, you can’t beat Patrick McEwan’s 20-page primer, “Cost-effectiveness analysis of education and health interventions in developing countries.”

- USAID has a cost analysis guide (100+ pages) for education projects that explains the methods, along with a guide to applying them in practice.

- Dhaliwal and others walk readers through many of the decisions you have to make in cost analysis and their implications.

Reflections

First, remember that just like estimates of the impact of a program, cost-effectiveness estimates also come with errors. Popova and I showed that taking those errors into account can dramatically re-order lists of which programs are most cost effective. As a result, I wouldn’t put much stock in small differences in cost-effectiveness.
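One simple way to see how estimation error can reorder a ranking is to simulate it. The Python sketch below treats each program's cost as known, draws its effect from a normal distribution centered on the point estimate with the given standard error, and counts how often one program comes out more cost-effective than the other. It is an illustrative sketch under those assumptions, not the method used in the analysis cited above, and all numbers are hypothetical.

```python
import random


def prob_first_more_cost_effective(first: dict, second: dict,
                                   draws: int = 10_000, seed: int = 0) -> float:
    """Share of simulated draws in which `first` delivers more effect per dollar.

    Each program is a dict with 'effect' (SD), 'se' (standard error of the
    effect) and 'cost' (USD per student). Costs are treated as fixed; only the
    effects are drawn, independently, from normal distributions.
    """
    rng = random.Random(seed)  # seeded so results are reproducible
    wins = 0
    for _ in range(draws):
        effect_1 = rng.gauss(first["effect"], first["se"])
        effect_2 = rng.gauss(second["effect"], second["se"])
        if effect_1 / first["cost"] > effect_2 / second["cost"]:
            wins += 1
    return wins / draws


# Hypothetical programs whose point estimates differ only slightly per dollar.
p = {"effect": 0.12, "se": 0.05, "cost": 20.0}
q = {"effect": 0.10, "se": 0.04, "cost": 18.0}
print(prob_first_more_cost_effective(p, q))
```

With point estimates this close, the first program wins only a little more than half the time, which is why small differences in cost-effectiveness deserve little weight.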

Second, if you’re using a cost-effectiveness analysis from one setting to decide whether to implement a program in another, remember that costs can vary dramatically from place to place. Working with data from a large NGO, Tulloch showed that “costs for the same intervention can vary as much as twenty times when scale or context is changed”! Imagine you’re implementing a program that involves driving to visit schools. Popova and I showed that the transportation cost per school was 27 times higher in rural Kenya than in urban India. So if you want to transfer a cost-effectiveness estimate from one place to another, make sure you update the costs based on local prices. (And don’t forget that the size of the program’s impact, the “effectiveness” in cost-effectiveness, can change a lot from context to context as well!)
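Updating costs for a new setting can be done ingredient by ingredient: scale each cost component by the ratio of local to original prices. A minimal Python sketch; the component names, the per-school costs, and every price ratio except the 27-fold transport gap mentioned above are hypothetical:

```python
def reprice(costs: dict[str, float], price_ratios: dict[str, float]) -> dict[str, float]:
    """Scale each cost component by its local-to-original price ratio.

    Components without a ratio are assumed to cost the same in the new setting.
    """
    return {item: cost * price_ratios.get(item, 1.0) for item, cost in costs.items()}


# Hypothetical per-school costs (USD) where the program was first evaluated.
original = {"staff": 100.0, "transport": 10.0, "materials": 50.0}
# Hypothetical local price ratios; transport borrows the 27x gap cited in the text.
ratios = {"staff": 0.5, "transport": 27.0}

local = reprice(original, ratios)
print(local)                                        # {'staff': 50.0, 'transport': 270.0, 'materials': 50.0}
print(sum(original.values()), sum(local.values()))  # 160.0 370.0
```

Here the total per-school cost more than doubles even though staff are cheaper, because transport dominates in the new setting. The effect size would also need revisiting before recomputing the ratio.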

Third, remember that cost-effectiveness won’t be (and shouldn’t be) the only factor in deciding which programs to focus investments on. A program with small impacts that is very cheap may be highly cost effective. It’s great to do that program, but maybe not if it distracts teams with limited operational capacity from programs that will deliver big impacts.

Even with these caveats, just as I’d never make a major purchase without looking at the price tag, I’d never recommend that an education system implement a new policy or program without trying to estimate the cost and thinking through the benefits that come with those costs.

-----------------------------------------

A few other miscellaneous resources for the curious

- The Systematic Cost Analysis Consortium distributes a tool called Dioptra, which actually plugs into programs’ accounting data to facilitate cost analysis and make it maximally comparable across programs. Learn more here!

- With others, I’ve done some analysis on How to Improve Education Outcomes Most Efficiently? using cost-effectiveness.

If you want someone else’s take on the best resources for cost-effectiveness analysis (not limited to education), Glandon and others provide their “ten best resources” for cost-effectiveness analysis in impact evaluations.

CGD blog posts reflect the views of the authors, drawing on prior research and experience in their areas of expertise. CGD is a nonpartisan, independent organization and does not take institutional positions.


© 2024 Center for Global Development


50-State Comparison: K-12 School Construction Funding

Elementary and secondary school construction — referred to here as capital school construction — is the nation's second-largest capital investment for state and local governments. It is surpassed only by road construction. This funding's main objective is to ensure students have access to modern, updated facilities that facilitate learning and development. While local governments are responsible for most school construction costs, state governments have played a significant role in financing the construction of school buildings.

State governments provide financial support for school capital construction through direct appropriations and financing support. Securing adequate funding for school infrastructure is crucial for schools to maintain and enhance their facilities. However, during periods of budgetary constraints, school construction funding may compete with other needs and lose priority. As a result, funding may be reduced or delayed, adversely affecting schools' capacity to provide safe and modern learning environments for students.

In this review, capital school construction is defined as major facility projects that involve the construction of new structures or major renovations. This may involve planning, design, site acquisition or the retrofit and replacement of buildings. These expenses are typically funded through the capital budget and often financed with bonds. Not discussed here is funding for maintenance and operations projects that involve regular, routine facility work, such as cleaning, groundskeeping, minor repairs, utilities and building security.

Financial Assistance

- 90% of states (45 states and the District of Columbia) offer financial assistance to school districts for school construction costs.

- 28 states provide both appropriations and financing options, while 10 states solely offer appropriations, and 7 states along with the District of Columbia provide financing options.

- At least 19 states have established dedicated revenue sources for school construction funding. This includes sales and use taxes, excise taxes, lottery revenue, and proceeds from the sale and use of state lands.

Appropriations

- 38 states provide aid to school districts for upfront planning or construction costs through appropriations. Five states have programs established in law that are not currently active.

- 28 states incorporate an equity component within their appropriation policy. This means they prioritize or provide more funding for projects for school districts with lower levels of property wealth.

- Payments take the form of direct grant aid to defray the costs incurred by projects or to reimburse locally issued debt, without requiring school districts to repay the state.

Financing

- 35 states and the District of Columbia employ various financing mechanisms, such as bond issuance, to fund school construction costs. One state has a program established in law that is not currently active.

- This support includes state-issued bonds, support for locally issued bonds and state-funded loan programs, allowing schools to obtain loans for their construction needs.

Oversight and Prioritization

- School districts seeking state appropriations or financing support typically require approval from the state or designated authority. The resource highlights the entity overseeing school construction funding requests in each state.

- In many cases, voter approval is necessary at the state and/or local level to issue debt for financing school construction projects. Our research shows that 35 states require some level of voter approval for such debt issuance.
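The counts in this section fit together arithmetically, which makes a useful consistency check when reading the 50-state data. A short Python sketch using only figures stated above (the District of Columbia is counted separately, as the text does):

```python
TOTAL_STATES = 50

# Figures from the Financial Assistance bullets.
both = 28                 # states offering appropriations and financing
appropriations_only = 10
financing_only = 7        # plus the District of Columbia

states_with_assistance = both + appropriations_only + financing_only
states_with_appropriations = both + appropriations_only
states_with_financing = both + financing_only

print(states_with_assistance, states_with_assistance / TOTAL_STATES)  # 45 0.9
print(states_with_appropriations)  # 38, matching the 38 states providing appropriations
print(states_with_financing)       # 35, matching the 35 states with financing mechanisms
```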

Explore the 50-state comparisons below to see how states provide funding through appropriations and financing support, while exercising authority through oversight and prioritization. View a specific state’s approach by going to the state profiles page.


Authors: Adrienne Fischer, Chris Duncombe, Eric Syverson

Resource Type: 50-State Comparison

Copyright 2024 / Education Commission of the States. All rights reserved.

Cost Estimating for K-12 School Projects: An Invaluable Tool for Budget Management

As school districts nationwide plan for long-term maintenance and construction costs, they share a number of common concerns. Districts ask, “How much is a typical monthly maintenance bill, and what does it include? How much does it cost to bring a school up to ‘21st century learning’ standards? How much would it cost to build new? What’s the monthly maintenance cost difference between a renovated building and a new facility? What is the up-front cost difference between renovation and new construction?” Understandably, clients want to be able to track costs at every stage of a project, and cost estimates (current and life cycle) are valuable planning and design tools.

Big Picture Budget

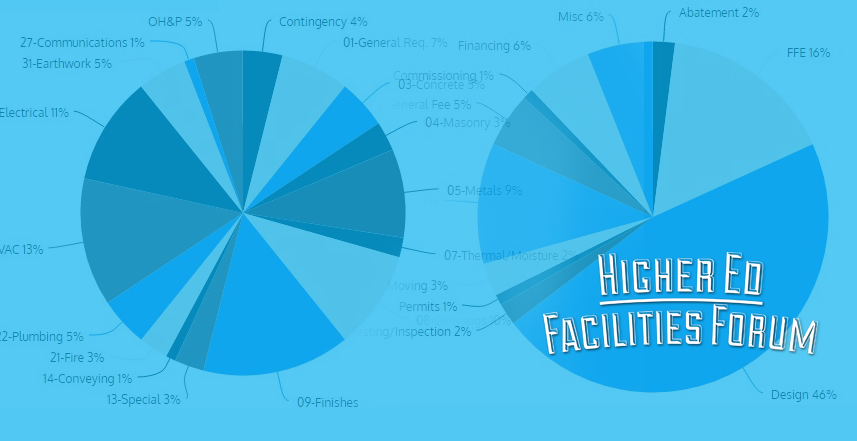

To assist our education clients in informed decision making, we use a detailed preliminary cost estimate which reflects the total project cost. The total project cost includes both “hard” and “soft” costs. “Hard” costs are any costs related to construction – the materials, labor, and work to complete the building. “Soft” costs are project costs in addition to the construction of the building which can include land; furniture, fixtures, and equipment (FF&E) costs; surveys; testing; design fees; escalation; owner contingencies; and other elements. Budgets for public work are typically all inclusive, so the total project cost has to stay within the set budget. Tracking total project costs throughout each design phase provides a much clearer picture than construction costs alone.
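A total project cost is hard costs plus soft costs, checked against the fixed budget. A minimal Python sketch; every line item and dollar amount is hypothetical, and the soft-cost list is only a subset of the categories named above:

```python
# Hypothetical line items (USD) for a school project.
hard_costs = {
    "building construction": 18_000_000,
    "sitework": 2_000_000,
}
soft_costs = {
    "land": 1_000_000,
    "FF&E": 900_000,
    "design fees": 1_600_000,
    "surveys and testing": 150_000,
    "owner contingency": 1_000_000,
}
budget = 25_000_000  # hypothetical all-inclusive public budget

total_hard = sum(hard_costs.values())
total_soft = sum(soft_costs.values())
total_project = total_hard + total_soft

print(f"Hard: ${total_hard:,}  Soft: ${total_soft:,}  Total: ${total_project:,}")
print("Within budget" if total_project <= budget else f"Over by ${total_project - budget:,}")
```

Keeping soft costs as explicit lines makes trade-offs visible: removing the land purchase here would free $1 million for building square footage or technology.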

A line-item spreadsheet for hard and soft costs is an important decision-making tool throughout the design process, helping clients to prioritize spending. For example, buying new land for $1 million means $1 million less spent on building square footage or technology, while reusing technology, equipment, and furniture from another facility may allow a school system to build more classrooms. The project timeline is a critical factor in cost estimating; typically, the longer the schedule, the more construction costs are likely to escalate over time. As of early 2023, contractors are generally estimating for 1-1.5% escalation per month for labor and materials, though unforeseen disasters such as hurricanes can significantly impact the cost of labor and materials and make costs challenging to predict over the long term.
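Monthly escalation in the quoted range compounds quickly over a long schedule. A minimal Python sketch, assuming the monthly rate compounds (some estimators apply simple rather than compound escalation) and using a hypothetical $10 million construction cost:

```python
def escalate(cost: float, monthly_rate: float, months: int) -> float:
    """Construction cost after `months` of compound monthly escalation."""
    return cost * (1 + monthly_rate) ** months


base = 10_000_000  # hypothetical construction cost in today's dollars
for rate in (0.01, 0.015):
    for months in (6, 12, 24):
        print(f"{rate:.1%}/month, {months:>2} months: ${escalate(base, rate, months):,.0f}")
```

Under this assumption, a 12-month delay adds roughly 12.7% at 1% per month and roughly 19.6% at 1.5% per month.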

Programming and Analysis

Whether the project is a renovation or new construction, cost control begins at the earliest project stages. Simultaneous programming and cost estimating helps districts visualize in real time how each program space impacts the budget, helping to keep design goals and budget aligned. The design team works closely with stakeholders to create a programming document based on the district’s goals, needs, vision, and typical space requirements for each program area. This document is the basis for a preliminary construction cost estimate with line-item hard and soft project costs.
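Linking the program to the estimate can be as simple as multiplying each space's net area by a net-to-gross factor and a cost per square foot, so adding or cutting a space immediately shows its budget impact. A minimal Python sketch; the space list, the 1.5 net-to-gross factor and the $300 per square foot rate are all hypothetical placeholders, not values from the text:

```python
# Hypothetical program: (space name, quantity, net square feet each).
program = [
    ("classroom", 10, 900),
    ("media center", 1, 3_000),
]
NET_TO_GROSS = 1.5   # hypothetical allowance for corridors, walls, mechanical space
COST_PER_SF = 300.0  # hypothetical construction cost, USD per gross sf


def construction_cost(spaces: list[tuple[str, int, int]]) -> float:
    """Hard construction cost implied by a program of spaces."""
    net_sf = sum(qty * area for _, qty, area in spaces)
    return net_sf * NET_TO_GROSS * COST_PER_SF


baseline = construction_cost(program)
with_extra_classroom = construction_cost(program + [("classroom", 1, 900)])
print(f"Baseline: ${baseline:,.0f}")
print(f"One more classroom adds: ${with_extra_classroom - baseline:,.0f}")
```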

At this stage, the team will also analyze life cycle costs for major systems such as HVAC and lighting. This life-cycle cost analysis (LCCA) helps clients understand both first costs and long-term costs, and is a powerful tool for making decisions about mechanical and electrical systems. Often, up-front investments in high-performance systems can offset long-term maintenance and operational costs, saving money over the life of the system.
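A common way to weigh first cost against long-term cost is to discount each year's operating and maintenance cost to present value and add it to the first cost. A minimal Python sketch comparing two hypothetical HVAC options over an assumed 20-year life at an assumed 3% real discount rate; none of these figures come from the text:

```python
def life_cycle_cost(first_cost: float, annual_cost: float,
                    years: int, discount_rate: float) -> float:
    """First cost plus the present value of a constant annual operating cost.

    Annual costs are assumed to occur at the end of each year and to stay
    constant in real terms; replacement and residual values are ignored.
    """
    pv_operating = sum(annual_cost / (1 + discount_rate) ** t
                       for t in range(1, years + 1))
    return first_cost + pv_operating


# Hypothetical options: the high-performance system costs more up front but less to run.
standard = life_cycle_cost(first_cost=500_000, annual_cost=60_000, years=20, discount_rate=0.03)
high_perf = life_cycle_cost(first_cost=650_000, annual_cost=40_000, years=20, discount_rate=0.03)
print(f"Standard system:         ${standard:,.0f}")
print(f"High-performance system: ${high_perf:,.0f}")
```

Under these assumptions the high-performance option costs $150,000 more up front but roughly $148,000 less over 20 years in present-value terms.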

Refining the Process

By the end of the design development phase, the district will have a clearer picture of building size, construction type, finish materials, quantities, mechanical systems, and site utilities which will be factored into a refined cost estimate. With this additional level of detail, a cost estimator or GC estimating group can provide a more accurate estimate of construction costs based on market and regional trends. This estimate may inform adjustments to the design to help align the program and budget.

Innovative design and construction strategies may help to complete the project on time, within budget, and at the highest level of quality. For example, an early grading or site package is often used to accelerate construction (and thus potentially reduce costs) while the rest of the building package is still in design development. Furthermore, after the project is complete, documentation of operational costs and building performance over time can provide essential metrics for estimating future projects (both for the district and for the designers).

Transparency Is Key

Ideally, every project would start with a clear and realistic cost estimate that can be monitored and updated from start to finish. Communication and transparency are always critical to a successful project, especially in ongoing project cost discussions. Districts and designers can set the team up for success by creating a plan for programming and cost estimating at the outset with the goal of aligning expectations, managing uncertainty, and avoiding “scope creep.”

Cost estimates must be as realistic as possible to be useful planning tools. If cost estimates are accurate and are updated at each stage of the design, they can be invaluable in helping stakeholders visualize the “domino effect” impacts and trade-offs of each program space and support wise decisions throughout the process.

About Ginny

Ginny Magrath, AIA, is a member of LS3P’s K-12 studio. Ginny earned a Bachelor of Arts in Architecture from Clemson University and a Master of Architecture from UNC Charlotte, and first joined LS3P as a student intern in 2012. Since joining the firm full-time in 2015, Ginny has developed significant experience in the education sector including public, private, and higher education projects. She was recognized as a firm Associate in 2017.

Use the search box to find the product you are looking for.

Don't miss tomorrow's construction industry news

Let Construction Dive's free newsletter keep you informed, straight from your inbox.

- Daily Dive M-F

- Tech Weekly Every Wednesday

- Infrastructure Weekly Every Tuesday

- Labor & Safety Weekly Every Thursday

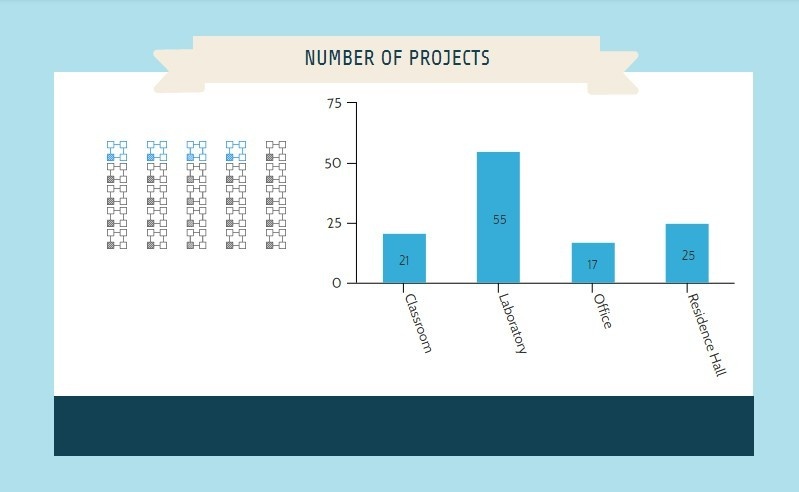

Making the grade: Why school construction costs are climbing and projects are stalling

A roll call of some important education contractors finds that labor and material prices and complex design, health and technology needs are putting pressure on the delivery of school builds.

When California-based C.W. Driver Cos. began work on the new 94,000-square-foot K-8 Cadence Park School campus in Irvine in 2016, the overall construction costs came in at $475 per square foot.

But in 2019, as the firm started mapping out the construction of Heritage Fields School No. 3, another K-8 campus for the Irvine Unified School District, costs had surged to $598 a square foot.

That’s a jump of 26% in just three years, and it echoes a trend experienced around the country.
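The 26% figure can be checked directly from the two per-square-foot costs. The sketch below also converts the three-year jump into an equivalent compound annual rate. That annualized figure is an added illustration, not one reported by C.W. Driver or the article. Both helper function names are hypothetical.

```python
def pct_change(old, new):
    """Total percentage change from old to new."""
    return (new - old) / old * 100


def annualized_rate(old, new, years):
    """Compound annual growth rate (in percent) implied by moving from old to new."""
    return ((new / old) ** (1 / years) - 1) * 100


# Per-square-foot costs reported for the two Irvine K-8 campuses (2016 vs. 2019)
print(f"Total increase:       {pct_change(475, 598):.1f}%")  # 25.9%
print(f"Per year, compounded: {annualized_rate(475, 598, 3):.1f}%")
```

The total change of 25.9% rounds to the article's 26%. Spread over three years, it works out to roughly 8% per year, compounded.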

Basic algebra: Costs are adding up

“Over the last few years, the cost increase per square foot has been abnormally high,” said Jonathan Keene, senior project manager at C.W. Driver, which specializes in K-12 and higher education construction. “We’ve seen abnormally high increases in labor costs as well as huge increases in material costs like structural steel.”

School construction costs aren't just rising in high-priced locales like California. From Maryland to Washington State, school and university construction projects are seeing cost increases that are forcing school boards and university trustees to reconsider their original plans or go back to the drawing board altogether.

In an extreme example at St. Paul (Minnesota) Public Schools, cost estimates on 18 projects grew by more than 60% between 2016 and 2019, according to the Twin Cities Pioneer Press newspaper.

“In some of the bigger districts, where they thought they could do 30 schools, they’re now saying we can only do 18,” said Mary Filardo, executive director of the Washington, D.C.-based 21st Century School Fund, a nonprofit that supports and advocates for improved school infrastructure nationally. “They’re definitely feeling it.”

On a national basis, school construction costs now range from a low of $230 per square foot for a high school in Nashville, to a high of $558 in New York, according to construction cost consultant Cumming. Dan Pomfrett, Cumming’s chief forecaster, said costs in the sector are up around 15% over the last three years. While that’s in line with other sectors of commercial construction, schools’ unique designs can lead to higher overall price tags.

“Add in a gym, science building or magnet school, and it goes up from there,” Pomfrett told Construction Dive. “There’s a lot of sticker shock.”

Higher construction costs are being amplified at the university level, too, especially as institutions compete for a shrinking number of enrolled students.

“We're really seeing an arms race in higher education right now,” said Ripley Bickerstaff, director of business development at Birmingham, Ala.-based Hoar Construction, which specializes in university projects. He points to two-story recreation centers with hanging, inclined running tracks and 360-degree motion-capture systems in health sciences departments.

“Whatever it's going to take to recruit students and get their enrollment numbers up, that’s what they want," he said.

A course load of causes

As in other sectors of commercial construction, labor and material costs are playing an increasingly large role in development costs. School boards’ appetite for technology has also contributed, as has the length of the current economic expansion. Schools’ longer lifespans, robust structural specifications and more specialized indoor air quality requirements all come into play as well.

For example, at Fairfax County Public Schools, the largest school district in Virginia, assistant superintendent Jeff Platenberg said that HVAC and mechanical systems at schools are more expensive because of changing perspectives of children’s unique physical needs.

“Air inflow and the conditioning of air have more stringent requirements, because you're dealing with children, and children breathe at a more rapid rate than adults,” said Platenberg. He also noted that stormwater management requirements, due to schools’ large tracts of playing fields, recreation courts and expansive roofs, also drive up costs.

Given the length of the current economic expansion, schools are now building during the upside of the cycle, whereas traditionally, educational institutions tended to build when the market was down and costs were more favorable, said Tony Schmitz, an architect at Kansas City-based Hoefer Wysocki Architecture, whose portfolio includes more than 1.9 million square feet of education facilities. “But we've been in an up economy for so long now, they are building in an up cycle, which leads to an uptick in construction costs," he said.

In addition, there’s the ballooning amount of technology and automation that’s going into today’s schools to make sure they will provide students with the tools they need well into the future.

“With the increased focus on technology, science and the overall student experience, the projects we build today look much different than the ones we did a decade ago,” said Tony Church, executive vice president of operations at St. Louis-based McCarthy Building Cos. “Some of our K-12 projects are more complex and costly than the higher-ed projects we’ve completed recently.”

Security is also driving up costs, with electronic access control becoming more common. Defensive design elements, such as wing walls for students to hide behind in case of a lockdown, also contribute, Filardo said.

Stay in school: Where's the labor?

Against that backdrop, schools are dealing with the same labor issues as other sectors of commercial construction.

“You literally have a workforce that will walk off the job in the middle of the day to go down the street where somebody’s paying 25 cents more an hour,” said Schmitz. “The labor force bounces around daily.”

It’s also increasingly hard to get subs to bid on jobs, Bickerstaff said.

“We used to have six mechanical guys looking at a job, and now you'll be lucky to get two or three,” he told Construction Dive. “They're booked. And for that reason, you've basically got two guys competing over this job, so you’re going to see a 10% increase right there, just because there's nobody else to do it.”

That’s a factor that is affecting all facets of construction. Construction managers are being realistic about what it costs to fill these positions, and as a result, customers are seeing higher bid prices, said Michael Regan, project management practice leader at Middletown, N.J.-based engineering firm T&M Associates.

“At any given time, there are three times as many jobs on the street as there were ten years ago,” he said. “We are in a bidder’s market."

Economics 101

To deal with the rising costs, contractors and their subs are turning to various strategies, including writing cost escalation clauses into contracts.

“Contractors need to do a lot more today to protect themselves from rising costs, including building in cost escalators or fuel surcharges to their contracts,” according to Ian Shapiro, a CPA and co-leader of the real estate and construction practice in the Miami office of tax and accounting consultancy BDO.
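Contract language for escalators varies, and the article does not describe any specific clause. The sketch below assumes a hypothetical clause that reimburses material price increases beyond a fixed threshold, measured by a commodity price index. Under that assumption, the adjustment could be computed as follows. The function name, the 5% threshold, and the dollar figures are all illustrative.

```python
def escalation_adjustment(base_material_cost, base_index, current_index, threshold=0.05):
    """Extra payment owed under a hypothetical index-based escalation clause.

    The contractor absorbs material price increases up to `threshold`
    (5% by default), measured by the change in a commodity price index;
    the owner pays for the portion above it. Price decreases are ignored.
    """
    change = current_index / base_index - 1
    if change <= threshold:
        return 0.0
    return base_material_cost * (change - threshold)


# Hypothetical: $2,000,000 of structural steel; the index rises from 100 to 118
extra = escalation_adjustment(2_000_000, base_index=100.0, current_index=118.0)
print(f"Escalation payment: ${extra:,.0f}")
```

The threshold splits the risk: small swings stay with the contractor, while large commodity moves shift to the owner rather than being priced into every bid up front.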

When costs do escalate beyond expectations, value engineering becomes a contractor’s best friend. At the Performing Arts Complex of Woodbridge High School in Irvine, California, C.W. Driver shaved $2 million off the numbers by using shot-blast concrete block only on the exteriors, instead of throughout the building, and by redrawing window and door openings to line up with the factory dimensions of whole blocks, reducing the cutting needed in the field.

But while material prices can be tied to commodity indices, labor costs are still an "X factor," the proverbial moving target that contractors need to draw a bead on early. Key to that, said Sean Edwards, chief operating officer of the education arm at Boston-based Suffolk Construction, which is currently working on projects at Northeastern and Boston University, is having a binding, detailed bid schedule up front and holding subs to it for the scheduled duration of the project.

“We have a plan and control process to bring trade partners on as early as the preconstruction phase,” he said. “That way, we can all work on the same design alongside the architects and owners to better predict constructability issues that can drive up costs — before they happen.”

Key to the process are detailed check-ins with the whole team every two weeks, he added.

Others home in on the labor issue by making sure they’re the contractor of choice for their subs. “Companies are getting creative to pay subcontractors a lot quicker, to help build loyalty with their subs,” Shapiro said. “Sometimes, for that prompt payment, they may even get a little cost reduction.”

At Fairfax County Public Schools, Platenberg strives to be a client of choice, too. “We pay on time to keep the blood flowing in the arteries,” Platenberg said. “That keeps people focused.”


Education and Workforce Development Cost-Benefit Analysis Guidance

June 30, 2023


Sarah M. Bishop & Peter Glick, MCC

Acronyms and abbreviations

- CA – Constraints Analysis

- CBA – Cost Benefit Analysis

- CEA – Cost-Effectiveness Analysis

- EA – Economic Analysis

- EIF – Entry into Force

- EMIS – Education Management Information System

- ERR – Economic Rate of Return

- GDP – Gross Domestic Product

- IMC – Investment Management Committee

- LSMS – Living Standards Measurement Study

- MCA – Millennium Challenge Account

- MCA – Multi-Criteria Analysis

- MCC – Millennium Challenge Corporation

- M&E – Monitoring and Evaluation

- NPV – Net Present Value

- O&M – Operations and Maintenance

- PIR – Policy and Institutional Reform

- PV – Present Value

- TVET – Technical and Vocational Education and Training

- RBF – Results Based Financing

- RCA – Root Cause Analysis

- SD – Standard Deviation (of test scores)

- SCDPs – Sector Consistent Design Patterns documents

- UA – Uncertainty Assessment

- USD – United States Dollars

- VA – Value Added

- WTP – Willingness to Pay

I. INTRODUCTION

A. Motivation

Between 2005 and late 2022, MCC invested over $628 million in education and workforce development interventions at almost every level of education (from primary through tertiary and adult continuing education), formal and non-formal, and in education that results in both academic and technical credentials. 1 As with all MCC investments, those in education and workforce development aim to support the agency’s mission to reduce poverty through economic growth. Theory and evidence point to the importance of human capital, especially the knowledge and skills attained through education, training, and work experience, as a key determinant of economic growth. 2 In particular, greater levels of human capital facilitate the adoption of new technologies and can increase efficiency and productivity, thus enhancing economic growth. 3 At the firm level, the supply of skills is one of the criteria that businesses consider when deciding whether to invest, expand, upgrade technology, and hire more workers.

From the perspective of individuals, one of the most robust associations in research on economic development is that between earnings and level of education, bearing out the prediction from human capital theory that more educated people are, on average, more productive and therefore have higher incomes. Reviewing this relationship across countries and across time, Psacharopoulos and Patrinos (2018) find a global average of private (individual) returns to an additional year of schooling to be about 9 percent – a result that has remained stable across decades. Furthermore, disaggregated data show that women continue to experience higher average returns to schooling, and that returns to all are also higher in low-income countries. These findings highlight that targeted education and training investments can have strong implications for income distribution, poverty reduction and inclusion, given that education can equip less advantaged citizens with the means to better access income generating and welfare improving opportunities.
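The sketch below roughly illustrates what a 9 percent return per additional year implies. It assumes that each extra year raises earnings by the same proportion and that the gains compound across years. This is a simplification made here for illustration only: as the paragraph notes, returns differ by sex and country income level. The function name `earnings_multiplier` is hypothetical.

```python
def earnings_multiplier(extra_years, return_per_year=0.09):
    """Earnings relative to a no-extra-schooling baseline, assuming each
    additional year raises earnings by the same proportion (a simplification)."""
    return (1 + return_per_year) ** extra_years


for years in (1, 3, 6):
    print(f"{years} extra year(s): earnings x {earnings_multiplier(years):.2f}")
```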

Despite a widespread understanding that education can provide significant benefits to individuals and to economies, notable market failures lead to underinvestment in human capital by private actors (both households and firms) and thus imply a role for public policy. A key market failure is the inability of poor families to borrow to finance their children’s schooling, despite the high potential benefits. In the absence of credit, opportunity costs loom large for poor families (and youth), since children’s work on farms or domestic work is often needed to sustain household consumption, while older children may need to be employed to support themselves or their families. Parents may also lack information about, or not fully value, these benefits. As a consequence, the benefits are not fully taken into account in their decisions regarding investment in their children’s education, implying underinvestment from a social perspective. On the part of firms, while they would clearly benefit from having a more educated and highly skilled workforce, they do not have an incentive to invest heavily in education (or training), as they generally will be unable to capture these benefits: those who receive the education are free to work for any firm, not just the one providing the education (i.e., there is an externality). For industry-specific skills, firms often do provide on-the-job training, though this tends to be less than socially optimal, for the same reason. Related to this externality is a collective action problem. For example, firms in a given industry could collectively provide relevant training and thus meet their skills needs, but enforcing this cooperation can be difficult.

These market failures imply an important role for the public sector to fund, and usually to directly provide, education services. Governments indeed devote substantial resources to education. In 2014, countries spent on average about 5% of their GDP on education (World Bank DataBank), 4 and many countries have experienced an increase in private sector provision at all levels of education, as well as a mix of public and private institutions. In many countries, private education provided by religious organizations, for example through Koranic and Catholic schools, is significant. Rising education expenditures have led to a dramatic increase in access to education over the past five decades in developing countries, leading to significant improvements in the quantity of schooling attained. Nevertheless, achieving universal secondary enrollment and completion remains an elusive goal, and the quality of education and learning has also lagged and is often extremely poor, which some recent research suggests explains the lack of association between education and growth at the country level. 5 In significant part this is because governments lack the resources to further expand access to education and improve its quality. Other factors, including poor management of the education sector, are also important. Donor agencies such as MCC can play an important role by infusing resources as well as providing technical assistance for reforms in education, assisting with changes targeting the system/sector, school, teacher, and student levels.

While much of the foregoing has emphasized constraints on increasing the supply of human capital or skills, this is not the whole picture: the skills provided to individuals need to be demanded in the labor market for there to be significant welfare-improving, income, and economic growth impacts. 6 In a broad sense, this consideration motivates MCC’s starting point in the compact process, which is usually stated as the identification of binding constraints to private investment and economic growth, private investment being the main source of the demand for labor and for labor with specific skills. Within the scope of the present SCDP, labor market demand considerations play a crucial role in problem diagnosis and program design in education and training, particularly for technical and vocational education and training (TVET), which is (or should be) tightly linked with the labor market and the needs of employers.

B. MCC Experience

Across countries, one can recognize similar patterns and causes of poor education and training outcomes, yet the details of the problems and their potential solutions remain country specific. Evidence-based policy and data analysis are foundational to MCC’s model, a process that begins as soon as a country is selected as an eligible partner. MCC and country counterparts begin collaborating on the Constraints Analysis (CA) to identify the most significant and binding constraints to that country’s economic growth. Between MCC’s first CA in late 2007 and 2022, 41 analyses were completed with MCC partner countries, of which 11 found inadequate education and skills to be one of the binding constraints. Building on the CA findings, the next step in the process is to conduct a root cause analysis (RCA) on prioritized binding constraints. The RCA is intended to drill down to the crux of why specific underlying problems exist. This helps identify where public policy (and MCC as an international development funder) can improve market outcomes and pave the way to greater economic growth and poverty reduction.

To address the country-specific challenges related to education and workforce development, MCC has dedicated its resources to a variety of investment categories including infrastructure and equipment, policy and institutional reforms, technical assistance, curriculum development and training of teachers and others employed within the education system. Interventions have targeted all levels of the education system, from the national government or education ministries to schools and training centers, to educators, to students and their households, and even to strengthening linkages between education and the labor market. Spanning 10 countries in Central America, Africa, Asia, and Eastern Europe, the interventions to date have also typically included efforts to improve education equity and inclusion of marginalized populations.

As project teams move from problem diagnosis to project identification, project logics are developed, and MCC economists carry out a cost-benefit analysis (CBA) of the projects. MCC’s CBA practice is a reflection of the Agency’s strong commitment to the use of rigorous evidence to inform investment decisions and is a requirement for all MCC compact investments. 7 The CBA methodology requires the analyst to quantify all anticipated costs and benefits (private and social) of a potential investment, 8 and then express those costs and benefits in monetary terms to estimate an economic rate of return (ERR) on the investment. This summary metric allows for comparison to other potential projects as well as to some minimum required rate of return. For MCC projects the typical ERR threshold that projects are expected to meet is 10%. The analysis is conducted at several points in time over the life of a compact investment. The most important is the CBA that informs the initial investment decision. During program implementation, a revised CBA may be needed to guide decision making when changes occur to the originally designed project. Additionally, MCC economists produce a ‘closeout’ CBA model within about a year after a compact’s closure, which will fully capture changes to project cost and scope. Finally, an independent entity is typically engaged to conduct an impact evaluation of the project, the findings from which are increasingly being utilized to conduct ‘evaluation-based’ CBAs. Note that for this final stage, the CBA uses actual information on realized project benefits from the evaluation, in contrast to predicted benefits in earlier versions of the CBA.
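The guidance itself does not supply CBA tools, so the following is only a minimal sketch of the ERR idea described above. The ERR is the discount rate at which the present value of net benefits (benefits minus costs) equals zero. Here it is found by bisection. The function names `npv` and `err` and the cash-flow stream are hypothetical, and the sketch assumes the net-benefit stream changes sign once (costs first, then benefits).

```python
def npv(rate, net_flows):
    """Present value of yearly net benefits (benefits minus costs); year 0 is undiscounted."""
    return sum(flow / (1 + rate) ** t for t, flow in enumerate(net_flows))


def err(net_flows, low=-0.9, high=1.0, tol=1e-10):
    """Economic rate of return: the discount rate at which NPV equals zero.

    Uses bisection, so it assumes NPV changes sign exactly once on
    [low, high], as it does for a stream of up-front costs followed by benefits.
    """
    f_low = npv(low, net_flows)
    if f_low * npv(high, net_flows) > 0:
        raise ValueError("NPV does not change sign on the search interval")
    while high - low > tol:
        mid = (low + high) / 2
        f_mid = npv(mid, net_flows)
        if f_low * f_mid <= 0:
            high = mid  # root lies in [low, mid]
        else:
            low, f_low = mid, f_mid  # root lies in [mid, high]
    return (low + high) / 2


# Hypothetical project: 1,000 in costs in year 0, then 150 in net benefits for 20 years
flows = [-1000] + [150] * 20
rate = err(flows)
print(f"ERR = {rate:.1%}; clears a 10% hurdle: {rate >= 0.10}")
```

Comparing `rate` with 0.10 mirrors the typical MCC threshold mentioned above. For streams whose sign changes more than once, the ERR may not be unique, and NPV at a chosen discount rate is a safer summary.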

The closeout CBA and evaluation-based CBA are often linked to the last step in a project’s lifecycle: assessing the success of its implementation and its ability to achieve the intended objective. Evaluation is integral to MCC’s commitment to accountability, learning, transparency, and evidence-based decision making. MCC published its first general education evaluation findings in 2011. The Insights from General Education Evaluations Brief reviews and synthesizes MCC’s findings from its independent evaluations in general education, covering investments in both primary and secondary education. The evaluation results are supplemented with lessons learned developed by MCC staff. MCC plans to conduct a deeper analysis of lessons learned for general education, which will be published in a forthcoming Principles into Practice paper. The 2020 Principles into Practice: Training Service Delivery for Jobs and Productivity reviewed MCC’s lessons in technical and vocational education and training.

Lastly, it should be noted that CBA is not the only approach to assessing education projects. For example, cost-effectiveness analysis (CEA) is often used to compare education-related investments based on the costs to achieve a specific educational outcome, such as higher test scores or grade attainment, that is quantified but not assigned a monetary value. CEA could be more broadly considered at MCC, and particularly for education when multiple intervention options are on the table to support improvements in a specific educational outcome. Further, MCC’s own Beneficiary Analysis, discussed briefly in this paper, assesses projects on how well they target specific populations of interest, including the poor and women.
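A cost-effectiveness comparison needs only each option's cost and its effect on one shared, non-monetized outcome. The sketch below ranks options by cost per standard deviation (SD) of test-score gain. The class `Option`, the intervention labels, and every number are hypothetical and chosen only to show the mechanics.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    cost_per_student: float  # USD per student (hypothetical)
    gain_sd: float           # test-score gain in standard deviations (hypothetical)

    def cost_per_sd(self) -> float:
        """Cost-effectiveness ratio: USD needed to raise one student's score by 1 SD."""
        return self.cost_per_student / self.gain_sd


options = [
    Option("teacher training", cost_per_student=40.0, gain_sd=0.10),
    Option("textbooks", cost_per_student=25.0, gain_sd=0.05),
    Option("remedial tutoring", cost_per_student=60.0, gain_sd=0.20),
]

# Lower ratio = more cost-effective for this single outcome
for opt in sorted(options, key=Option.cost_per_sd):
    print(f"{opt.name}: ${opt.cost_per_sd():,.0f} per SD gained")
```

Unlike the ERR, the ratio never puts a dollar value on the test-score gain. It can therefore rank options that target the same outcome, but it cannot say whether any of them is worth funding.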

C. Purpose and Organization of the Guidance

This Sector Consistent Design Pattern (SCDP) guidance in Education follows similar guidance documents for other key sectors, namely Water Supply and Sanitation (Osborne, 2019), Land (Bowen and Ngeleza, 2019), Transport (Carter, 2020), Power (Epley, Mulangu, and Bowen, 2021), 9 and Agriculture (Szott and Motamed, 2023). There is also a forthcoming SCDP in Health (Myers, Osborne, and Payaam, 2023). Each SCDP is built upon MCC’s general Guidelines for Economic and Beneficiary Analysis, 10 which provides the overall principles that should be applied to projects in all sectors. The SCDPs are intended to serve as “living” resources that will be updated as necessary, as evidence and methods evolve and MCC’s approach shifts to reflect the latest findings in the sector.

The main purpose of this document is to provide guidance for MCC’s economic analysis of interventions in education. It will support analysts and economists who will conduct or review this work. These individuals will include MCC analysts, consultants to MCC, peer reviewers and counterparts in our partner countries. To reach this objective, this guidance seeks to provide a way of thinking about costs, benefits, uncertainty, and other topics, noting the strengths and weaknesses of different methodological approaches. It does not provide specific tools or spreadsheets that could serve as a paint-by-numbers approach to doing CBA in education. In fact, a main takeaway from the paper should be that the specifications of each model will be highly dependent on the location, context, problems being addressed, and detailed objectives of the proposed interventions.

This documentation of MCC practices and experience can also serve several other purposes: improving consistency across MCC education CBAs carried out by different economists, facilitating peer reviews, and increasing the transparency and accountability of this work. By fostering dialogue on the strengths and limitations of CBA for different types of education interventions, this guide can help to advance the fields of education, monitoring and evaluation, and economic analysis.

This guidance is based on the broad field of education and training research but is grounded in MCC’s experience and the interventions that the Agency normally supports. Reflecting MCC’s investment portfolio, we focus on general education at the primary and secondary levels, with some reference to tertiary. Not covered here are pre-school/pre-kindergarten level interventions. While such early interventions have been shown to have significant long-term impacts, MCC has not yet invested in this space, so we do not consider such projects. 11 In addition to general education, the paper also considers technical and vocational education and training (TVET) programs, which are intended to provide skills for the world of work, thus encompassing (in a broad definition) any education and training which provides knowledge and skills to enhance productivity and success at work, whether provided through formal or non-formal approaches and in school or work-based settings. Clearly, TVET is part of education in a broad sense (and, conversely, all schooling ultimately prepares young people for the world of work, among other objectives), but given its particularly tight linkages to livelihoods, it is also distinct and is therefore treated separately in the paper.

Note should also be made of two topics tightly related to education and training that are touched upon frequently within this guidance: labor markets and health. With regard to the former, as mentioned above, the impacts of the education system play out most significantly in the labor market. Thus, labor market characteristics and conditions influence the returns to education and training investments, potentially reducing the expected impacts. MCC has occasionally made investments aimed to improve the operation of labor markets. Such interventions are not covered by this CBA guidance, but the discussion below will refer to labor market factors, especially when considering TVET programs. With regard to health, an individual’s health, not just education or skills, is encompassed within the concept of human capital. 12 This will be further discussed below, but as with labor market interventions, this guide does not address CBA of health interventions, as a separate forthcoming guidance paper will be dedicated to economic analysis of MCC’s health and nutrition programs.

Organization of the Guidance

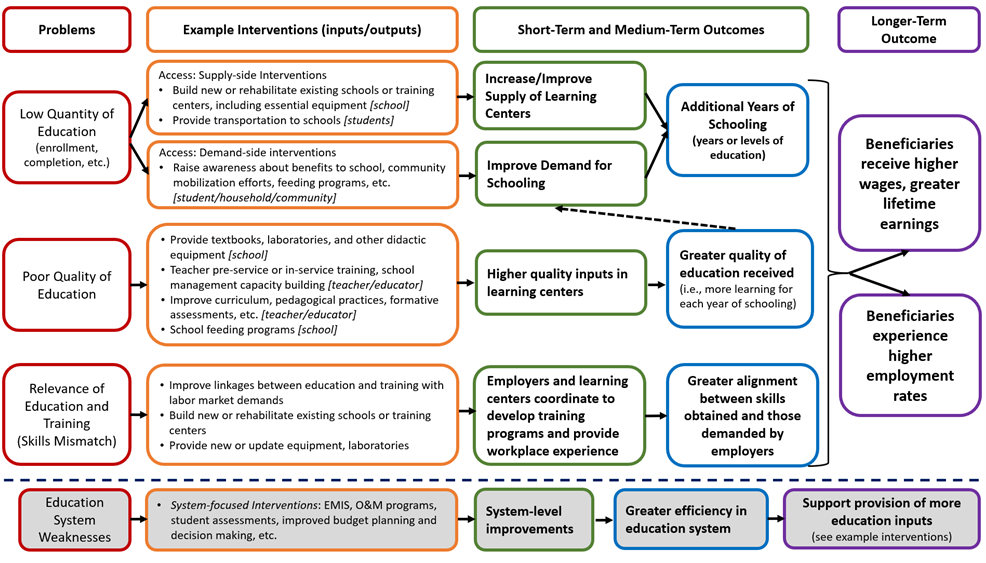

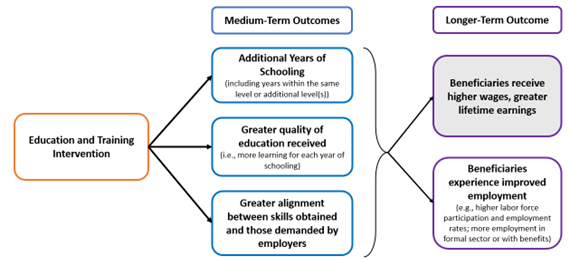

With this framing in mind, the remainder of this education and workforce development CBA guidance is organized into two main sections. Section II is focused on providing key background and programmatic information that is essential for designing a strong CBA model that captures the characteristics and objectives of the education investment. This section includes the following three components: the identification of problems; a typology of interventions or ‘projects’ to address those problems; and the use of these components to develop the project’s logic to get from inputs to outputs to outcomes and the longer-term impacts. Through Section II the reader should have a better understanding of the education sector and be more equipped to dive into the technical aspects of the economic analysis, which is the subject of Section III.

Section III begins by introducing the economic logic, which uses a well-defined counterfactual (i.e., a without-project scenario) to capture the program logic’s outputs and outcomes as the CBA model’s intended benefit streams, with a note on how these change over time. Section III.A provides considerations for how to develop the counterfactual, time horizon, and discount rate for education and training projects. Section III.B follows with a focus on the work to identify, quantify, and monetize benefit streams. Three interventions based on MCC experience are included to provide concrete examples of applying these concepts to education and training projects. The discussion shifts in Section III.C to outline key cost considerations and MCC practices in defining and measuring all social costs required to reach the intervention’s intended benefits. All the topics in Section III are brought together in Section III.D to determine the CBA results, with a description of useful sensitivity analysis that can inform the CBA metrics reported, as well as distributional analysis that can highlight results by important groups. Finally, Section III.E concludes by acknowledging the limitations of this guidance and provides recommendations on areas for further examination or coverage in a future version of the SCDP in education and training.
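The role of the counterfactual, time horizon, and discount rate can be seen in a small example. Benefits are measured as the difference between the with-project and without-project streams, net of costs, and discounted over a fixed horizon. The sketch below is a minimal version under those assumptions. The function `incremental_npv`, the earnings streams, and the 10% rate are hypothetical placeholders, not MCC parameters.

```python
def incremental_npv(with_project, without_project, costs, rate):
    """NPV of incremental net benefits relative to the counterfactual.

    Each list holds one value per year (year 0 first) over the same time
    horizon: benefits with the project, benefits in the without-project
    scenario, and project costs. Flows are discounted at a constant rate.
    """
    if not (len(with_project) == len(without_project) == len(costs)):
        raise ValueError("all streams must cover the same time horizon")
    return sum(
        (w - wo - c) / (1 + rate) ** t
        for t, (w, wo, c) in enumerate(zip(with_project, without_project, costs))
    )


# Hypothetical cohort earnings (thousands of USD); some growth happens without the project too
with_p = [0, 0, 120, 130, 140, 150]
without_p = [0, 0, 100, 105, 110, 115]
costs = [50, 20, 0, 0, 0, 0]
print(f"NPV at 10%: {incremental_npv(with_p, without_p, costs, 0.10):.1f}")
```

Counting the full with-project earnings rather than the difference would overstate benefits. The without-project stream is what guards against that, and the length of the lists fixes the time horizon.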

II. EDUCATION AND TRAINING SECTOR: SETTING THE STAGE FOR CBA

A. What’s the Problem?

The field of education is complex, and interventions can be motivated by the desire to address numerous problems. This guidance does not attempt to go into detail on every type of problem that might be encountered in the education sphere, but rather to describe the problem diagnosis approach at MCC and highlight aspects specific to education and training. In particular, the section outlines three core problems identified in the literature and through MCC’s experience in education and training: (1) inadequate quantity of education (encompassing low enrollment and completion rates), (2) poor quality of the education received, and (3) low labor market relevance of the skills obtained (also referred to somewhat more narrowly as a skills mismatch). 13 This section also provides insights into the potential root causes of these core problems (the next step in the process of designing interventions to address well-defined problems) and explores the linkages between education-related problems and other related topics, namely social and gender inclusion, labor markets, and health.

For any sector, correctly identifying the problem, and who is affected by it and how, is critical to the design of an investment and to its success in achieving the desired outcomes. Well-defined problems support the development of appropriate interventions to address them, the proper selection and measurement of the related benefit streams, and ultimately a more cost-effective project overall. Within the MCC context, after a country is selected as an eligible partner, MCC and the partner country team jointly begin the problem diagnosis through a Constraints Analysis (CA), which identifies the greatest constraints to that country’s economic growth. Within the MCC team, the economist leads this work, which builds on the foundations of the Growth Diagnostic work by Hausmann, Rodrik, and Velasco (2005). Since MCC conducted its first CA in late 2007, 41 such analyses have been completed with MCC partner countries, of which 11 found human capital, particularly education, to be one of the binding constraints to economic growth (Annex I).

Given that human capital, broadly defined, is a key input into production, it is not surprising that constraints analyses for developing countries may find that inadequate human capital is constraining a country’s economic growth and, with it, its opportunities to reduce poverty through growth. Human capital can be a constraint either through low productivity due to poor current health or to developmental deficiencies stemming from poor childhood nutrition (as covered in the forthcoming health SCDP), or through a deficient supply of skills for productive work, which is the more commonly analyzed pathway and our focus here. From the perspective of private firms, the availability or cost of human capital affects decisions about investment, choice of technology, and exporting, and thus strongly influences productivity and economic growth. An education- or skills-related human capital constraint occurs when the supply of skills falls short of employers’ actual or potential demand for the skills that would allow them to effectively manage, operate, and/or expand their businesses, either at all or at a competitive cost. This is distinct from labor market problems on the demand or policy side that do not directly involve a human capital shortfall. For example, excessive labor regulations can make it difficult or costly to hire individuals with the right skills, or to dismiss those without the necessary skills. Finally, private investment and the associated demand for labor may be constrained by factors that lie outside the labor market (and the education sector) altogether, such as a poor macroeconomic policy environment.

It is also important to highlight that human capital constraints have especially strong implications for income distribution and poverty reduction, given that education can equip citizens with the means to access income-generating and welfare-improving opportunities. Targeted investments to tackle such constraints among marginalized subsets of the population can thus support greater inclusion and a more equitable income distribution.

After the binding constraints to growth are identified, the next step requires MCC country teams to conduct a root cause analysis (RCA) on each constraint. The RCA asks why a constraint exists. For example, the root causes of a low quantity of education might be one or a combination of the following: a lack of physical access to schools; high private costs of education (combined with a lack of access to credit); a belief that schooling, or schooling beyond some level, offers few or no benefits (especially for girls); or a perception that schools or teachers, and hence the education provided, are of poor quality. Additional examples of root causes for education and training constraints are detailed below.

Annex II lists the 13 MCC country programs to date with education investments, noting whether a CA was conducted and, if so, whether it identified education as a binding constraint or as a root cause of a different binding constraint. This provides a more complete picture of the connection between the CA, the RCA, and where MCC has invested in education. In summary, of the 13 country programs with education investments, 7 predate the adoption of CAs as an MCC practice. Of the remaining 6, which were based on CAs, all but one identified education as a binding constraint. The exception was the CA for El Salvador’s second compact, which placed education somewhere between a binding constraint and a root cause: the analysis framed the main constraint as low productivity in exports, with human capital deficiencies related to education as one of the three main barriers behind this constraint. 14 In 5 additional countries, education was found to be one of several binding constraints, but other MCC decision factors meant these findings did not result in education-related investments.

The shift from speaking about constraints to speaking about problems occurs when MCC begins to assess which aspects of a constraint could be addressed by an MCC-supported intervention, i.e., taking into consideration the other MCC decision criteria. 15 The defined ‘core problem’ will be closely related to a constraint but is often narrower, and it becomes the focus of the RCA, which is directed at identifying the root causes of MCC-actionable problems. This process of further defining the problem results in a specific problem statement that can be used to inform project identification, the project logic, and the economic logic that underpins the CBA. The remainder of this subsection uses the term ‘core problem’, but as noted, core problems are often similar to the identified constraints.

During the last 15 years of conducting CAs, three main education-related constraints, referred to simply as problems here, have emerged. 16 Table 1 summarizes these problems, which are by no means mutually exclusive. The second column of the table indicates what kind of evidence would lead to identification of that problem, and the third column points to possible underlying causes of that problem that may emerge in the RCA. 17

Listed in no particular order, the first problem is an inadequate quantity of education. In this situation, employers find it difficult to hire enough workers with the requisite level of education to meet their needs. In some countries, for example, there may be a shortage of primary or lower secondary school graduates, resulting in low literacy and numeracy among those in the labor market. Note that ‘quantity’ could be indicated by graduation rates for different levels of schooling, by grade attainment, or simply by enrollment, overall or at different levels.

The second problem is low education quality. When quality is the problem, employers may observe adequate numbers of graduates at different levels of education (i.e., an adequate quantity of schooling), and in the right fields, but their skills are poorly developed. One might observe, for example, that many students graduate from secondary school without obtaining adequate skills. For the analyst, assessing whether skills meet a certain level may be easiest in general education for areas such as basic numeracy and literacy, where national, regional, or international exams may assess students’ competency against predefined standards for a given level of education. Note that the low-quality problem is distinct from, but potentially related to, the quantity of schooling. For instance, low education quality and the resulting poor learning outcomes may reduce the expected productivity and earnings benefits of additional years of schooling, leading parents or students to choose not to continue beyond a relatively low number of years of schooling.

The third problem is a skills mismatch or, more broadly, a lack of labor market ‘relevance’ of the schooling obtained. In this situation, there may be plenty of graduates, but not in the fields of study, or with the types of skills, needed by employers. The mismatch problem may be most relevant to TVET and higher education, where students tend to select a particular course of study and may choose fields that do not match the specific needs of employers. However, the notion of skills mismatch is a broad one. Most obviously, it encompasses a lack of individuals with specific skills for particular industries, such as food processing technicians or aerospace engineers. Yet it could also refer to a lack of more general technical skills that can be used in many sectors, such as IT, accounting, or engineering skills. Even more broadly, it could describe a situation where students, with an eye toward ‘safe’ public sector jobs, tend to select fields of study such as law or the humanities rather than STEM fields. STEM skills may be more valued by the private sector, but private sector jobs may be viewed as less secure or as offering fewer benefits, and thus be considered less desirable than public sector employment (a pattern observed in many developing economies). Clearly, the broader definitions of ‘mismatch’ may start to look more like a ‘general skills deficit’ than a highly specific ‘skills mismatch’. Whatever the terminology applied, it is important to be clear about the type of mismatch or relevance problem that exists in a given situation, as the root causes and the indicated interventions may differ. For example, the first case noted above could imply the need for industry-specific TVET, while the third, and likely also the second, could imply that changes are needed in general education coursework or in the incentives that encourage students to enter or complete studies in in-demand fields.

As just noted, TVET investments are typically thought of as a solution to the third problem, a skills mismatch. Defined more broadly, however, TVET also encompasses interventions to address aspects of both the first and second problems. This is particularly relevant for training programs that focus on general and remedial skill building, such as literacy training or soft skills training for individuals who are no longer in formal schooling, and who are currently unemployed or economically inactive, i.e., out of the labor force. This and other types of TVET interventions will be defined in the next section of the paper.

| Problem | Potential Evidence of the Problem | Potential areas to examine during Root Cause Analysis |
|---|---|---|
| There are not enough graduates to satisfy market demand. It could be that there are not enough graduates at all levels of education or at only certain levels. | | If there are not enough graduates, questions for the RCA would include: |
| There may be enough graduates at the different levels of education, and they may be graduating in the right fields, but their skills are poorly developed. | | During the RCA, the team will try to understand if this problem is due to: |
| If there are plenty of graduates (e.g., of secondary school), but they have not acquired skills that the labor market demands, then this could be an example of a “skills mismatch,” which may be most relevant to the TVET and higher education arenas. | and probably high levels of unemployment. However, high levels of unemployment may also signal other problems, such as labor market regulations or higher reservation wages than what is being offered on the market. | The RCA may question whether there are problems with the quality of instruction, quality or relevance of curricula, not enough programs provided in the market at the right level or in the right fields, market distortions, or other mismatched incentives/preferences (e.g., prejudice against technical vocational training). |

Examining Separate but Related Problems: Considering their implications for education-related interventions