500+ Computer Science Research Topics

Computer science is a constantly evolving field that has transformed the world we live in. As new technologies emerge, they open up new research opportunities. Whether you are interested in artificial intelligence, machine learning, cybersecurity, data analytics, or computer networks, there is plenty to explore. This post lists research topics from across computer science, from programming languages and algorithm design to the trends and innovations shaping the field's future. Whether you are a student or a professional, read on to find a topic that suits your interests.

Computer Science Research Topics

Computer science research topics include the following:

- Using machine learning to detect and prevent cyber attacks

- Developing algorithms for optimized resource allocation in cloud computing

- Investigating the use of blockchain technology for secure and decentralized data storage

- Developing intelligent chatbots for customer service

- Investigating the effectiveness of deep learning for natural language processing

- Developing algorithms for detecting and removing fake news from social media

- Investigating the impact of social media on mental health

- Developing algorithms for efficient image and video compression

- Investigating the use of big data analytics for predictive maintenance in manufacturing

- Developing algorithms for identifying and mitigating bias in machine learning models

- Investigating the ethical implications of autonomous vehicles

- Developing algorithms for detecting and preventing cyberbullying

- Investigating the use of machine learning for personalized medicine

- Developing algorithms for efficient and accurate speech recognition

- Investigating the impact of social media on political polarization

- Developing algorithms for sentiment analysis in social media data

- Investigating the use of virtual reality in education

- Developing algorithms for efficient data encryption and decryption

- Investigating the impact of technology on workplace productivity

- Developing algorithms for detecting and mitigating deepfakes

- Investigating the use of artificial intelligence in financial trading

- Developing algorithms for efficient database management

- Investigating the effectiveness of online learning platforms

- Developing algorithms for efficient and accurate facial recognition

- Investigating the use of machine learning for predicting weather patterns

- Developing algorithms for efficient and secure data transfer

- Investigating the impact of technology on social skills and communication

- Developing algorithms for efficient and accurate object recognition

- Investigating the use of machine learning for fraud detection in finance

- Developing algorithms for efficient and secure authentication systems

- Investigating the impact of technology on privacy and surveillance

- Developing algorithms for efficient and accurate handwriting recognition

- Investigating the use of machine learning for predicting stock prices

- Developing algorithms for efficient and secure biometric identification

- Investigating the impact of technology on mental health and well-being

- Developing algorithms for efficient and accurate language translation

- Investigating the use of machine learning for personalized advertising

- Developing algorithms for efficient and secure payment systems

- Investigating the impact of technology on the job market and automation

- Developing algorithms for efficient and accurate object tracking

- Investigating the use of machine learning for predicting disease outbreaks

- Developing algorithms for efficient and secure access control

- Investigating the impact of technology on human behavior and decision making

- Developing algorithms for efficient and accurate sound recognition

- Investigating the use of machine learning for predicting customer behavior

- Developing algorithms for efficient and secure data backup and recovery

- Investigating the impact of technology on education and learning outcomes

- Developing algorithms for efficient and accurate emotion recognition

- Investigating the use of machine learning for improving healthcare outcomes

- Developing algorithms for efficient and secure supply chain management

- Investigating the impact of technology on cultural and societal norms

- Developing algorithms for efficient and accurate gesture recognition

- Investigating the use of machine learning for predicting consumer demand

- Developing algorithms for efficient and secure cloud storage

- Investigating the impact of technology on environmental sustainability

- Developing algorithms for efficient and accurate voice recognition

- Investigating the use of machine learning for improving transportation systems

- Developing algorithms for efficient and secure mobile device management

- Investigating the impact of technology on social inequality and access to resources

- Machine learning for healthcare diagnosis and treatment

- Machine Learning for Cybersecurity

- Machine learning for personalized medicine

- Cybersecurity threats and defense strategies

- Big data analytics for business intelligence

- Blockchain technology and its applications

- Human-computer interaction in virtual reality environments

- Artificial intelligence for autonomous vehicles

- Natural language processing for chatbots

- Cloud computing and its impact on the IT industry

- Internet of Things (IoT) and smart homes

- Robotics and automation in manufacturing

- Augmented reality and its potential in education

- Data mining techniques for customer relationship management

- Computer vision for object recognition and tracking

- Quantum computing and its applications in cryptography

- Social media analytics and sentiment analysis

- Recommender systems for personalized content delivery

- Mobile computing and its impact on society

- Bioinformatics and genomic data analysis

- Deep learning for image and speech recognition

- Digital signal processing and audio processing algorithms

- Cloud storage and data security in the cloud

- Wearable technology and its impact on healthcare

- Computational linguistics for natural language understanding

- Cognitive computing for decision support systems

- Cyber-physical systems and their applications

- Edge computing and its impact on IoT

- Machine learning for fraud detection

- Cryptography and its role in secure communication

- Cybersecurity risks in the era of the Internet of Things

- Natural language generation for automated report writing

- 3D printing and its impact on manufacturing

- Virtual assistants and their applications in daily life

- Cloud-based gaming and its impact on the gaming industry

- Computer networks and their security issues

- Cyber forensics and its role in criminal investigations

- Machine learning for predictive maintenance in industrial settings

- Augmented reality for cultural heritage preservation

- Human-robot interaction and its applications

- Data visualization and its impact on decision-making

- Cybersecurity in financial systems and blockchain

- Computer graphics and animation techniques

- Biometrics and its role in secure authentication

- Cloud-based e-learning platforms and their impact on education

- Natural language processing for machine translation

- Machine learning for predictive maintenance in healthcare

- Cybersecurity and privacy issues in social media

- Computer vision for medical image analysis

- Natural language generation for content creation

- Cybersecurity challenges in cloud computing

- Human-robot collaboration in manufacturing

- Data mining for predicting customer churn

- Artificial intelligence for autonomous drones

- Cybersecurity risks in the healthcare industry

- Machine learning for speech synthesis

- Edge computing for low-latency applications

- Virtual reality for mental health therapy

- Quantum computing and its applications in finance

- Biomedical engineering and its applications

- Cybersecurity in autonomous systems

- Machine learning for predictive maintenance in transportation

- Computer vision for object detection in autonomous driving

- Augmented reality for industrial training and simulations

- Cloud-based cybersecurity solutions for small businesses

- Natural language processing for knowledge management

- Machine learning for personalized advertising

- Cybersecurity in supply chain management

- Cybersecurity risks in the energy sector

- Computer vision for facial recognition

- Natural language processing for social media analysis

- Machine learning for sentiment analysis in customer reviews

- Explainable Artificial Intelligence

- Quantum Computing

- Blockchain Technology

- Human-Computer Interaction

- Natural Language Processing

- Cloud Computing

- Robotics and Automation

- Augmented Reality and Virtual Reality

- Cyber-Physical Systems

- Computational Neuroscience

- Big Data Analytics

- Computer Vision

- Cryptography and Network Security

- Internet of Things

- Computer Graphics and Visualization

- Artificial Intelligence for Game Design

- Computational Biology

- Social Network Analysis

- Bioinformatics

- Distributed Systems and Middleware

- Information Retrieval and Data Mining

- Computer Networks

- Mobile Computing and Wireless Networks

- Software Engineering

- Database Systems

- Parallel and Distributed Computing

- Human-Robot Interaction

- Intelligent Transportation Systems

- High-Performance Computing

- Cyber-Physical Security

- Deep Learning

- Sensor Networks

- Multi-Agent Systems

- Human-Centered Computing

- Wearable Computing

- Knowledge Representation and Reasoning

- Adaptive Systems

- Brain-Computer Interface

- Health Informatics

- Cognitive Computing

- Cybersecurity and Privacy

- Internet Security

- Cybercrime and Digital Forensics

- Cloud Security

- Cryptocurrencies and Digital Payments

- Machine Learning for Natural Language Generation

- Cognitive Robotics

- Neural Networks

- Semantic Web

- Image Processing

- Cyber Threat Intelligence

- Secure Mobile Computing

- Cybersecurity Education and Training

- Privacy Preserving Techniques

- Cyber-Physical Systems Security

- Virtualization and Containerization

- Machine Learning for Computer Vision

- Network Function Virtualization

- Cybersecurity Risk Management

- Information Security Governance

- Intrusion Detection and Prevention

- Biometric Authentication

- Machine Learning for Predictive Maintenance

- Security in Cloud-based Environments

- Cybersecurity for Industrial Control Systems

- Smart Grid Security

- Software Defined Networking

- Quantum Cryptography

- Security in the Internet of Things

- Natural language processing for sentiment analysis

- Blockchain technology for secure data sharing

- Developing efficient algorithms for big data analysis

- Cybersecurity for internet of things (IoT) devices

- Human-robot interaction for industrial automation

- Image recognition for autonomous vehicles

- Social media analytics for marketing strategy

- Quantum computing for solving complex problems

- Biometric authentication for secure access control

- Augmented reality for education and training

- Intelligent transportation systems for traffic management

- Predictive modeling for financial markets

- Cloud computing for scalable data storage and processing

- Virtual reality for therapy and mental health treatment

- Data visualization for business intelligence

- Recommender systems for personalized product recommendations

- Speech recognition for voice-controlled devices

- Mobile computing for real-time location-based services

- Neural networks for predicting user behavior

- Genetic algorithms for optimization problems

- Distributed computing for parallel processing

- Internet of things (IoT) for smart cities

- Wireless sensor networks for environmental monitoring

- Cloud-based gaming for high-performance gaming

- Social network analysis for identifying influencers

- Autonomous systems for agriculture

- Robotics for disaster response

- Data mining for customer segmentation

- Computer graphics for visual effects in movies and video games

- Virtual assistants for personalized customer service

- Natural language understanding for chatbots

- 3D printing for manufacturing prototypes

- Artificial intelligence for stock trading

- Machine learning for weather forecasting

- Biomedical engineering for prosthetics and implants

- Cybersecurity for financial institutions

- Machine learning for energy consumption optimization

- Computer vision for object tracking

- Natural language processing for document summarization

- Wearable technology for health and fitness monitoring

- Internet of things (IoT) for home automation

- Reinforcement learning for robotics control

- Big data analytics for customer insights

- Machine learning for supply chain optimization

- Natural language processing for legal document analysis

- Artificial intelligence for drug discovery

- Computer vision for object recognition in robotics

- Data mining for customer churn prediction

- Autonomous systems for space exploration

- Robotics for agriculture automation

- Machine learning for predicting earthquakes

- Natural language processing for sentiment analysis in customer reviews

- Big data analytics for predicting natural disasters

- Internet of things (IoT) for remote patient monitoring

- Blockchain technology for digital identity management

- Machine learning for predicting wildfire spread

- Computer vision for gesture recognition

- Natural language processing for automated translation

- Big data analytics for fraud detection in banking

- Internet of things (IoT) for smart homes

- Robotics for warehouse automation

- Machine learning for predicting air pollution

- Natural language processing for medical record analysis

- Augmented reality for architectural design

- Big data analytics for predicting traffic congestion

- Machine learning for predicting customer lifetime value

- Developing algorithms for efficient and accurate text recognition

- Natural Language Processing for Virtual Assistants

- Natural Language Processing for Sentiment Analysis in Social Media

- Explainable Artificial Intelligence (XAI) for Trust and Transparency

- Deep Learning for Image and Video Retrieval

- Edge Computing for Internet of Things (IoT) Applications

- Data Science for Social Media Analytics

- Cybersecurity for Critical Infrastructure Protection

- Natural Language Processing for Text Classification

- Quantum Computing for Optimization Problems

- Machine Learning for Personalized Health Monitoring

- Computer Vision for Autonomous Driving

- Blockchain Technology for Supply Chain Management

- Machine Learning for Personalized Marketing

- Big Data Analytics for Financial Fraud Detection

- Cybersecurity for Cloud Security Assessment

- Artificial Intelligence for Natural Language Understanding

- Blockchain Technology for Decentralized Applications

- Virtual Reality for Cultural Heritage Preservation

- Natural Language Processing for Named Entity Recognition

- Machine Learning for Customer Churn Prediction

- Big Data Analytics for Social Network Analysis

- Cybersecurity for Intrusion Detection and Prevention

- Artificial Intelligence for Robotics and Automation

- Virtual Reality for Rehabilitation and Therapy

- Natural Language Processing for Text Summarization

- Machine Learning for Credit Risk Assessment

- Big Data Analytics for Fraud Detection in Healthcare

- Cybersecurity for Internet Privacy Protection

- Artificial Intelligence for Game Design and Development

- Blockchain Technology for Decentralized Social Networks

- Virtual Reality for Marketing and Advertising

- Natural Language Processing for Opinion Mining

- Machine Learning for Anomaly Detection

- Big Data Analytics for Predictive Maintenance in Transportation

- Cybersecurity for Network Security Management

- Artificial Intelligence for Personalized News and Content Delivery

- Blockchain Technology for Cryptocurrency Mining

- Virtual Reality for Architectural Design and Visualization

- Machine Learning for Automated Image Captioning

- Big Data Analytics for Stock Market Prediction

- Cybersecurity for Biometric Authentication Systems

- Artificial Intelligence for Human-Robot Interaction

- Blockchain Technology for Smart Grids

- Virtual Reality for Sports Training and Simulation

- Natural Language Processing for Question Answering Systems

- Machine Learning for Sentiment Analysis in Customer Feedback

- Big Data Analytics for Predictive Maintenance in Manufacturing

- Cybersecurity for Cloud-Based Systems

- Artificial Intelligence for Automated Journalism

- Blockchain Technology for Intellectual Property Management

- Virtual Reality for Therapy and Rehabilitation

- Natural Language Processing for Language Generation

- Machine Learning for Customer Lifetime Value Prediction

- Big Data Analytics for Predictive Maintenance in Energy Systems

- Cybersecurity for Secure Mobile Communication

- Artificial Intelligence for Emotion Recognition

- Blockchain Technology for Digital Asset Trading

- Virtual Reality for Automotive Design and Visualization

- Natural Language Processing for Semantic Web

- Machine Learning for Fraud Detection in Financial Transactions

- Big Data Analytics for Social Media Monitoring

- Cybersecurity for Cloud Storage and Sharing

- Artificial Intelligence for Personalized Education

- Blockchain Technology for Secure Online Voting Systems

- Virtual Reality for Cultural Tourism

- Natural Language Processing for Chatbot Communication

- Machine Learning for Medical Diagnosis and Treatment

- Cybersecurity for Cloud Computing Environments

- Virtual Reality for Training and Simulation

- Big Data Analytics for Sports Performance Analysis

- Artificial Intelligence for Traffic Management and Control

- Blockchain Technology for Smart Contracts

- Machine Learning for Image and Video Recognition

- Blockchain Technology for Digital Asset Management

- Virtual Reality for Entertainment and Gaming

- Natural Language Processing for Opinion Mining in Online Reviews

- Machine Learning for Customer Relationship Management

- Big Data Analytics for Environmental Monitoring and Management

- Cybersecurity for Network Traffic Analysis and Monitoring

- Artificial Intelligence for Natural Language Generation

- Blockchain Technology for Supply Chain Transparency and Traceability

- Virtual Reality for Design and Visualization

- Natural Language Processing for Speech Recognition

- Machine Learning for Recommendation Systems

- Big Data Analytics for Customer Segmentation and Targeting

- Cybersecurity for Biometric Authentication

- Artificial Intelligence for Human-Computer Interaction

- Blockchain Technology for Decentralized Finance (DeFi)

- Virtual Reality for Tourism and Cultural Heritage

- Machine Learning for Cybersecurity Threat Detection and Prevention

- Big Data Analytics for Healthcare Cost Reduction

- Cybersecurity for Data Privacy and Protection

- Blockchain Technology for Cryptocurrency and Blockchain Security

- Virtual Reality for Real Estate Visualization

- Natural Language Processing for Question Answering

- Big Data Analytics for Financial Markets Prediction

- Cybersecurity for Cloud-Based Machine Learning Systems

- Artificial Intelligence for Personalized Advertising

- Blockchain Technology for Digital Identity Verification

- Virtual Reality for Cultural and Language Learning

- Natural Language Processing for Semantic Analysis

- Machine Learning for Business Forecasting

- Big Data Analytics for Social Media Marketing

- Artificial Intelligence for Content Generation

- Blockchain Technology for Smart Cities

- Virtual Reality for Historical Reconstruction

- Natural Language Processing for Knowledge Graph Construction

- Big Data Analytics for Traffic Optimization

- Artificial Intelligence for Social Robotics

- Blockchain Technology for Healthcare Data Management

- Virtual Reality for Disaster Preparedness and Response

- Natural Language Processing for Multilingual Communication

- Machine Learning for Emotion Recognition

- Big Data Analytics for Human Resources Management

- Cybersecurity for Mobile App Security

- Artificial Intelligence for Financial Planning and Investment

- Blockchain Technology for Energy Management

- Virtual Reality for Cultural Preservation and Heritage

- Big Data Analytics for Healthcare Management

- Cybersecurity in the Internet of Things (IoT)

- Artificial Intelligence for Predictive Maintenance

- Computational Biology for Drug Discovery

- Virtual Reality for Mental Health Treatment

- Machine Learning for Sentiment Analysis in Social Media

- Human-Computer Interaction for User Experience Design

- Cloud Computing for Disaster Recovery

- Quantum Computing for Cryptography

- Intelligent Transportation Systems for Smart Cities

- Cybersecurity for Autonomous Vehicles

- Artificial Intelligence for Fraud Detection in Financial Systems

- Social Network Analysis for Marketing Campaigns

- Cloud Computing for Video Game Streaming

- Machine Learning for Speech Recognition

- Augmented Reality for Architecture and Design

- Natural Language Processing for Customer Service Chatbots

- Machine Learning for Climate Change Prediction

- Big Data Analytics for Social Sciences

- Artificial Intelligence for Energy Management

- Virtual Reality for Tourism and Travel

- Cybersecurity for Smart Grids

- Machine Learning for Image Recognition

- Augmented Reality for Sports Training

- Natural Language Processing for Content Creation

- Cloud Computing for High-Performance Computing

- Artificial Intelligence for Personalized Medicine

- Virtual Reality for Architecture and Design

- Augmented Reality for Product Visualization

- Natural Language Processing for Language Translation

- Cybersecurity for Cloud Computing

- Artificial Intelligence for Supply Chain Optimization

- Blockchain Technology for Digital Voting Systems

- Virtual Reality for Job Training

- Augmented Reality for Retail Shopping

- Natural Language Processing for Sentiment Analysis in Customer Feedback

- Cloud Computing for Mobile Application Development

- Artificial Intelligence for Cybersecurity Threat Detection

- Blockchain Technology for Intellectual Property Protection

- Virtual Reality for Music Education

- Machine Learning for Financial Forecasting

- Augmented Reality for Medical Education

- Natural Language Processing for News Summarization

- Cybersecurity for Healthcare Data Protection

- Artificial Intelligence for Autonomous Robots

- Virtual Reality for Fitness and Health

- Machine Learning for Natural Language Understanding

- Augmented Reality for Museum Exhibits

- Natural Language Processing for Chatbot Personality Development

- Cloud Computing for Website Performance Optimization

- Artificial Intelligence for E-commerce Recommendation Systems

- Blockchain Technology for Supply Chain Traceability

- Virtual Reality for Military Training

- Augmented Reality for Advertising

- Natural Language Processing for Chatbot Conversation Management

- Cybersecurity for Cloud-Based Services

- Artificial Intelligence for Agricultural Management

- Blockchain Technology for Food Safety Assurance

- Virtual Reality for Historical Reenactments

- Machine Learning for Cybersecurity Incident Response

- Secure Multiparty Computation

- Federated Learning

- Internet of Things Security

- Blockchain Scalability

- Quantum Computing Algorithms

- Explainable AI

- Data Privacy in the Age of Big Data

- Adversarial Machine Learning

- Deep Reinforcement Learning

- Online Learning and Streaming Algorithms

- Graph Neural Networks

- Automated Debugging and Fault Localization

- Mobile Application Development

- Software Engineering for Cloud Computing

- Cryptocurrency Security

- Edge Computing for Real-Time Applications

- Natural Language Generation

- Virtual and Augmented Reality

- Computational Biology and Bioinformatics

- Internet of Things Applications

- Robotics and Autonomous Systems

- Explainable Robotics

- 3D Printing and Additive Manufacturing

- Distributed Systems

- Parallel Computing

- Data Center Networking

- Data Mining and Knowledge Discovery

- Information Retrieval and Search Engines

- Network Security and Privacy

- Cloud Computing Security

- Data Analytics for Business Intelligence

- Neural Networks and Deep Learning

- Reinforcement Learning for Robotics

- Automated Planning and Scheduling

- Evolutionary Computation and Genetic Algorithms

- Formal Methods for Software Engineering

- Computational Complexity Theory

- Bio-inspired Computing

- Computer Vision for Object Recognition

- Automated Reasoning and Theorem Proving

- Natural Language Understanding

- Machine Learning for Healthcare

- Scalable Distributed Systems

- Sensor Networks and Internet of Things

- Smart Grids and Energy Systems

- Software Testing and Verification

- Web Application Security

- Wireless and Mobile Networks

- Computer Architecture and Hardware Design

- Digital Signal Processing

- Game Theory and Mechanism Design

- Evolutionary Robotics

- Quantum Machine Learning

- Computational Social Science

- Explainable Recommender Systems

- Artificial Intelligence and its applications

- Cloud computing and its benefits

- Cybersecurity threats and solutions

- Internet of Things and its impact on society

- Virtual and Augmented Reality and their uses

- Blockchain Technology and its potential in various industries

- Web Development and Design

- Digital Marketing and its effectiveness

- Big Data and Analytics

- Software Development Life Cycle

- Game Development and its growth

- Network Administration and Maintenance

- Machine Learning and its uses

- Data Warehousing and Mining

- Computer Architecture and Design

- Computer Graphics and Animation

- Quantum Computing and its potential

- Data Structures and Algorithms

- Computer Vision and Image Processing

- Robotics and its applications

- Operating Systems and their functions

- Information Theory and Coding

- Compiler Design and Optimization

- Computer Forensics and Cyber Crime Investigation

- Distributed Computing and its significance

- Artificial Neural Networks and Deep Learning

- Cloud Storage and Backup

- Programming Languages and their significance

- Computer Simulation and Modeling

- Computer Networks and their types

- Information Security and its types

- Computer-based Training and eLearning

- Medical Imaging and its uses

- Social Media Analysis and its applications

- Human Resource Information Systems

- Computer-Aided Design and Manufacturing

- Multimedia Systems and Applications

- Geographic Information Systems and their uses

- Computer-Assisted Language Learning

- Mobile Device Management and Security

- Data Compression and its types

- Knowledge Management Systems

- Text Mining and its uses

- Cyber Warfare and its consequences

- Wireless Networks and their advantages

- Computer Ethics and its importance

- Computational Linguistics and its applications

- Autonomous Systems and Robotics

- Information Visualization and its importance

- Geographic Information Retrieval and Mapping

- Business Intelligence and its benefits

- Digital Libraries and their significance

- Artificial Life and Evolutionary Computation

- Computer Music and its types

- Virtual Teams and Collaboration

- Computer Games and Learning

- Semantic Web and its applications

- Electronic Commerce and its advantages

- Multimedia Databases and their significance

- Computer Science Education and its importance

- Computer-Assisted Translation and Interpretation

- Ambient Intelligence and Smart Homes

- Autonomous Agents and Multi-Agent Systems

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

The Top 10 Most Interesting Computer Science Research Topics

Computer science touches nearly every area of our lives. As technology advances, the field keeps evolving and gives rise to new research topics. These topics attempt to answer open research questions and examine how computing affects the tech industry and the wider world.

Computer science research topics can be divided into several categories, such as artificial intelligence, big data and data science, human-computer interaction, security and privacy, and software engineering. If you are a student or researcher looking for computer science research paper topics, this article suggests example topics and questions.

What Makes a Strong Computer Science Research Topic?

A strong computer science research topic is clear, well-defined, and easy to understand. It should also reflect the research’s purpose, scope, or aim. In addition, a strong topic avoids abbreviations that are not widely known, although it can include industry terms that are generally accepted.

Tips for Choosing a Computer Science Research Topic

- Brainstorm. Brainstorming helps you develop a few different ideas and find the best topic for you. Some core questions to ask are: What are some open questions in computer science? What do you want to learn more about? What are some current trends in computer science?

- Choose a subfield. There are many subfields and career paths in computer science. Before choosing a research topic, identify which aspect of computer science the research will focus on. That could be theoretical computer science, contemporary computing culture, or distributed computing.

- Aim to answer a question. When you’re choosing a research topic in computer science, you should always have a question in mind that you’d like to answer. That helps you narrow your research aim to clear, specific goals.

- Do a comprehensive literature review. When starting a research project, it is essential to have a clear idea of the topic you plan to study. That involves doing a comprehensive literature review to better understand what has been learned about your topic in the past.

- Keep the topic simple and clear. The topic should reflect the scope and aim of the research it addresses. It should also be concise and free of ambiguous words. For this reason, some researchers recommend limiting the topic to five to 15 substantive words. It can take the form of a question or a declarative statement.

What’s the Difference Between a Research Topic and a Research Question?

A research topic is the subject matter that a researcher chooses to investigate. You may also refer to it as the title of a research paper. It summarizes the scope of the research and captures the researcher’s approach to the research question. Hence, it may be broad or more specific. For example, a broad topic may read, Data Protection and Blockchain, while a more specific variant can read, Potential Strategies to Privacy Issues on the Blockchain.

On the other hand, a research question is the fundamental starting point for any research project. It typically reflects various real-world problems and, sometimes, theoretical computer science challenges. As such, it must be clear, concise, and answerable.

How to Create Strong Computer Science Research Questions

To create strong computer science research questions, you must first understand the topic at hand. The research question should also generate new knowledge and contribute to the advancement of the field. It could address something that has not been answered before or has been only partially answered. It is also essential to consider whether answering the question is feasible.

Top 10 Computer Science Research Paper Topics

1. Battery Life and Energy Storage for 5G Equipment

5G is a newer generation of cellular network with much higher data rates and capacity than 4G. According to research published in the European Scientific Institute Journal, one of the main concerns with 5G is the high energy consumption of 5G-enabled devices. Research on this topic can highlight these challenges and propose solutions for more energy-efficient designs.

2. The Influence of Extraction Methods on Big Data Mining

Data mining has drawn the scientific community’s attention, especially with the explosive rise of big data. Many research results show that the extraction methods used have a significant effect on the outcome of the data mining process. A topic like this analyzes those extraction algorithms. It suggests strategies and efficient algorithms that may help researchers understand the challenge or find a solution.

3. Integration of 5G with Analytics and Artificial Intelligence

According to the International Finance Corporation, 5G and AI technologies are defining emerging markets and our world. Through different technologies, this research aims to find novel ways to integrate these powerful tools to produce excellent results. Subjects like this often spark great discoveries that pioneer new levels of research and innovation. A breakthrough can influence advanced educational technology, virtual reality, metaverse, and medical imaging.

4. Leveraging Asynchronous FPGAs for Crypto Acceleration

To support the growing cryptocurrency industry, there is a need for new ways to accelerate transaction processing. This project aims to use asynchronous Field-Programmable Gate Arrays (FPGAs) to accelerate cryptocurrency transaction processing. It explores how distributed computing technologies, combined with FPGAs, can make cryptocurrency mining and transactions faster.

5. Cyber Security Future Technologies

Cyber security is a trending topic among businesses and individuals, especially as many work teams go remote. Research in this area can span the full breadth of the cyber security and cloud security industries and forecast innovations, depending on the researcher’s focus. Another angle is to analyze existing or emerging solutions and present discoveries that can aid future research.

6. Exploring the Boundaries Between Art, Media, and Information Technology

Computing and media are vast, complex fields that intersect in many ways. Practitioners create images and animations using design technologies and methods such as algorithmic mechanism design, design thinking, design theory, digital fabrication systems, and electronic design automation. A paper on this topic aims to define how the two fields exist both independently and symbiotically.

7. Evolution of Future Wireless Networks Using Cognitive Radio Networks

This research project aims to study how cognitive radio technology can drive evolution in future wireless networks. It will analyze the performance of cognitive radio-based wireless networks in different scenarios and measure its impact on spectral efficiency and network capacity. The research project will involve the development of a simulation model for studying the performance of cognitive radios in different scenarios.
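A simulation model like the one described above would probably include spectrum sensing. This is the step where a cognitive (secondary) radio decides whether a licensed (primary) user is currently occupying a channel. One common textbook approach is energy detection. The Python below is a minimal Monte Carlo sketch of that idea, not a design taken from this project. It assumes real-valued samples, known unit noise power, and a BPSK-like primary signal. The SNR, threshold, sample count, and function names are arbitrary, hypothetical choices made only for illustration.

```python
import random

def received_samples(n: int, primary_active: bool, snr: float, rng: random.Random) -> list[float]:
    """Simulate n real-valued samples: unit-power Gaussian noise, plus a BPSK-like
    signal when the licensed (primary) user is transmitting."""
    amp = snr ** 0.5  # signal amplitude for a linear SNR, since noise power is 1
    samples = []
    for _ in range(n):
        signal = amp * rng.choice((-1.0, 1.0)) if primary_active else 0.0
        samples.append(signal + rng.gauss(0.0, 1.0))
    return samples

def channel_busy(samples: list[float], threshold: float) -> bool:
    """Energy detector: report the channel as busy if average received power exceeds the threshold."""
    energy = sum(x * x for x in samples) / len(samples)
    return energy > threshold

def simulate(trials: int = 2000, n: int = 100, snr: float = 0.5,
             threshold: float = 1.25, seed: int = 1) -> tuple[float, float]:
    """Monte Carlo estimate of (probability of detection, probability of false alarm)."""
    rng = random.Random(seed)
    hits = sum(channel_busy(received_samples(n, True, snr, rng), threshold) for _ in range(trials))
    false_alarms = sum(channel_busy(received_samples(n, False, snr, rng), threshold) for _ in range(trials))
    return hits / trials, false_alarms / trials

p_detect, p_false_alarm = simulate()
print(f"P(detect) = {p_detect:.3f}, P(false alarm) = {p_false_alarm:.3f}")
```

Raising `threshold` has two effects:

- It reduces false alarms, which are missed chances to reuse idle spectrum.
- It also lowers the detection probability, which means more risk of interfering with the licensed user.

Sweeping the threshold across different SNR values is one simple way to explore how performance varies from scenario to scenario.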

8. The Role of Quantum Computing and Machine Learning in Advancing Medical Predictive Systems

In a paper titled Exploring Quantum Computing Use Cases for Healthcare, experts at IBM highlighted precision medicine and diagnostics as areas that could benefit from quantum computing. Using biomedical imaging, machine learning, computational biology, and data-intensive computing systems, researchers can create more accurate disease progression prediction, disease severity classification, and 3D image reconstruction systems, which are vital for treating chronic diseases.

9. Implementing Privacy and Security in Wireless Networks

Wireless networks are prone to attacks, and that has been a big concern for both individual users and organizations. According to the Cybersecurity and Infrastructure Security Agency (CISA), cyber security specialists are working to find reliable methods of securing wireless networks. This research aims to develop a secure, privacy-preserving communication framework for wireless communication and social networks.

10. Exploring the Challenges and Potentials of Biometric Systems Using Computational Techniques

Much discussion surrounds biometric systems, their potential for misuse, and privacy concerns. When exploring how biometric systems can be used effectively, issues such as verification time and cost, hygiene, data bias, and cultural acceptance must be weighed. A paper on this topic may critically study these challenges using computational tools and propose possible solutions.

Other Examples of Computer Science Research Topics & Questions

Computer Research Topics

- The confluence of theoretical computer science, deep learning, computational algorithms, and performance computing

- Exploring human-computer interactions and the importance of usability in operating systems

- Predicting the limits of networking and distributed systems

- Controlling data mining on public systems through third-party applications

- The impact of green computing on the environment and computational science

Computer Research Questions

- Why are there so many programming languages?

- Is there a better way to enhance human-computer interactions in computer-aided learning?

- How safe is cloud computing, and what are some ways to enhance security?

- Can computers effectively assist in the sequencing of human genes?

- How valuable is the Scrum methodology in Agile software development?

Choosing the Right Computer Science Research Topic

Computer science research is a vast field, and it can be challenging to choose the right topic. There are a few things to keep in mind when making this decision. Choose a topic that you are interested in. This will make it easier to stay motivated and produce high-quality research for your computer science degree.

Select a topic that is relevant to your field of study. This will help you develop specialized knowledge in the area. Choose a topic that has potential for future research. This will help keep your research relevant and up to date. Coding bootcamps typically provide a framework that focuses students’ projects on a specific field, making their search for a creative solution easier.

Computer Science Research Topics FAQ

To start a computer science research project, you should look at what other content is out there. Complete a literature review to know the available findings surrounding your idea. Design your research and ensure that you have the necessary skills and resources to complete the project.

The first step to conducting computer science research is to conceptualize the idea and review existing knowledge about that subject. You will design your research and collect data through surveys or experiments. Analyze your data and build a prototype or graphical model. You will also write a report and present it to a recognized body for review and publication.

You can find computer science research jobs on the job boards of many universities. Many universities have job boards on their websites that list open positions in research and academia. Also, many Slack and GitHub channels for computer scientists provide regular updates on available projects.

There are several hot topics and questions in AI that you can build your research on. Below are some AI research questions you may consider for your research paper.

- Will it be possible to build artificial emotional intelligence?

- Will robots replace humans in all difficult, cumbersome jobs as civilization progresses?

- Can artificial intelligence systems self-improve with knowledge from the Internet?

About us: Career Karma is a platform designed to help job seekers find, research, and connect with job training programs to advance their careers. Learn about the CK publication.



EECS’ research covers a wide variety of topics in electrical engineering, computer science, and artificial intelligence and decision-making. Its research areas include:

- AI and Society

- AI for Healthcare and Life Sciences

- Artificial Intelligence and Machine Learning

- Biological and Medical Devices and Systems

- Communications Systems

- Computational Biology

- Computational Fabrication and Manufacturing

- Computer Architecture

- Educational Technology

- Electronic, Magnetic, Optical and Quantum Materials and Devices

- Graphics and Vision

- Human-Computer Interaction

- Information Science and Systems

- Integrated Circuits and Systems

- Nanoscale Materials, Devices, and Systems

- Natural Language and Speech Processing

- Optics + Photonics

- Optimization and Game Theory

- Programming Languages and Software Engineering

- Quantum Computing, Communication, and Sensing

- Security and Cryptography

- Signal Processing

- Systems and Networking

- Systems Theory, Control, and Autonomy

- Theory of Computation

The future of our society is interwoven with the future of data-driven thinking—most prominently, artificial intelligence is set to reshape every aspect of our lives. Research in this area studies the interface between AI-driven systems and human actors, exploring both the impact of data-driven decision-making on human behavior and experience, and how AI technologies can be used to improve access to opportunities. This research combines a variety of areas including AI, machine learning, economics, social psychology, and law.

Our goal is to develop AI technologies that will change the landscape of healthcare. This includes early diagnostics, drug discovery, care personalization and management. Building on MIT’s pioneering history in artificial intelligence and life sciences, we are working on algorithms suitable for modeling biological and clinical data across a range of modalities including imaging, text and genomics.

Our research covers a wide range of topics in this fast-evolving field, advancing how machines learn, predict, and control, while also making them secure, robust and trustworthy. Research covers both the theory and applications of ML. This broad area studies ML theory (algorithms, optimization, …), statistical learning (inference, graphical models, causal analysis, …), deep learning, reinforcement learning, symbolic reasoning, ML systems, as well as diverse hardware implementations of ML.

We develop the technology and systems that will transform the future of biology and healthcare. Specific areas include biomedical sensors and electronics, nano- and micro-technologies, imaging, and computational modeling of disease.

We develop the next generation of wired and wireless communications systems, from new physical principles (e.g., light, terahertz waves) to coding and information theory, and everything in between.

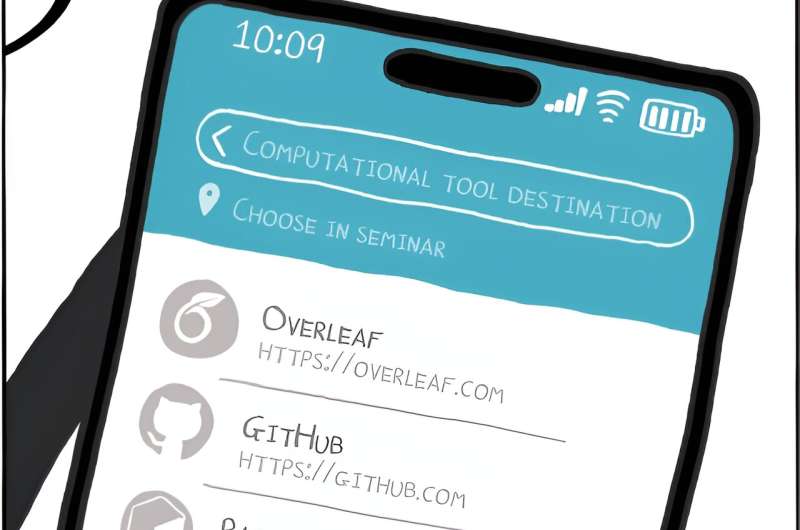

We bring some of the most powerful tools in computation to bear on design problems, including modeling, simulation, processing and fabrication.

We design the next generation of computer systems. Working at the intersection of hardware and software, our research studies how to best implement computation in the physical world. We design processors that are faster, more efficient, easier to program, and secure. Our research covers systems of all scales, from tiny Internet-of-Things devices with ultra-low-power consumption to high-performance servers and datacenters that power planet-scale online services. We design both general-purpose processors and accelerators that are specialized to particular application domains, like machine learning and storage. We also design Electronic Design Automation (EDA) tools to facilitate the development of such systems.

Educational technology combines both hardware and software to enact global change, making education accessible in unprecedented ways to new audiences. We develop the technology that makes better understanding possible.

Our research spans a wide range of materials that form the next generation of devices, and includes groundbreaking research on graphene & 2D materials, quantum computing, MEMS & NEMS, and new substrates for computation.

Our research focuses on solving challenges related to the transduction, transmission, and control of energy and energy systems. We develop new materials for energy storage, devices and power electronics for harvesting, generation and processing of energy, and control of large-scale energy systems.

The shared mission of Visual Computing is to connect images and computation, spanning topics such as image and video generation and analysis, photography, human perception, touch, applied geometry, and more.

The focus of our research in Human-Computer Interaction (HCI) is inventing new systems and technology that lie at the interface between people and computation, and understanding their design, implementation, and societal impact.

This broad research theme covers activities across all aspects of systems that process information, along with the underlying science and mathematics. It includes communications, networking and information theory; numerical and computational simulation and prototyping; signal processing and inference; medical imaging; and data science, statistics and inference.

Our field deals with the design and creation of sophisticated circuits and systems for applications ranging from computation to sensing.

Our research focuses on the creation of materials and devices at the nano scale to create novel systems across a wide variety of application areas.

Our research encompasses all aspects of speech and language processing—ranging from the design of fundamental machine learning methods to the design of advanced applications that can extract information from documents, translate between languages, and execute instructions in real-world environments.

Our work focuses on materials, devices, and systems for optical and photonic applications, with applications in communications and sensing, femtosecond optics, laser technologies, photonic bandgap fibers and devices, laser medicine and medical imaging, and millimeter-wave and terahertz devices.

Research in this area focuses on developing efficient and scalable algorithms for solving large scale optimization problems in engineering, data science and machine learning. Our work also studies optimal decision making in networked settings, including communication networks, energy systems and social networks. The multi-agent nature of many of these systems also has led to several research activities that rely on game-theoretic approaches.

We develop new approaches to programming, whether that takes the form of programming languages, tools, or methodologies to improve many aspects of applications and systems infrastructure.

Our work focuses on developing the next substrate of computing, communication and sensing. We work all the way from new materials to superconducting devices to quantum computers to theory.

Our research focuses on robotic hardware and algorithms, from sensing to control to perception to manipulation.

Our research is focused on making future computer systems more secure. We bring together a broad spectrum of cross-cutting techniques for security, from theoretical cryptography and programming-language ideas, to low-level hardware and operating-systems security, to overall system designs and empirical bug-finding. We apply these techniques to a wide range of application domains, such as blockchains, cloud systems, Internet privacy, machine learning, and IoT devices, reflecting the growing importance of security in many contexts.

Signal processing focuses on algorithms and hardware for analyzing, modifying and synthesizing signals and data, across a wide variety of application domains. As a technology it plays a key role in virtually every aspect of modern life including for example entertainment, communications, travel, health, defense and finance.

From distributed systems and databases to wireless, the research conducted by the systems and networking group aims to improve the performance, robustness, and ease of management of networks and computing systems.

Our theoretical research includes quantification of fundamental capabilities and limitations of feedback systems, inference and control over networks, and development of practical methods and algorithms for decision making under uncertainty.

Theory of Computation (TOC) studies the fundamental strengths and limits of computation, how these strengths and limits interact with computer science and mathematics, and how they manifest themselves in society, biology, and the physical world.


Computer science articles from across Nature Portfolio

Computer science is the study and development of the protocols required for automated processing and manipulation of data. This includes, for example, creating algorithms for efficiently searching large volumes of information or encrypting data so that it can be stored and transmitted securely.
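As a small illustration of "creating algorithms for efficiently searching large volumes of information", here is a sketch of classic binary search over sorted data in Python. Each probe halves the remaining range, so a million items need at most about 20 probes instead of up to a million for a linear scan. The data and target value are arbitrary. Python's standard library already offers this through the `bisect` module; the hand-written version just makes the halving and the probe count visible.

```python
def binary_search(sorted_items: list[int], target: int) -> tuple[int, int]:
    """Return (index of target or -1, number of probes) for an ascending list."""
    lo, hi = 0, len(sorted_items) - 1
    probes = 0
    while lo <= hi:
        mid = (lo + hi) // 2          # look at the middle of the remaining range
        probes += 1
        if sorted_items[mid] == target:
            return mid, probes
        if sorted_items[mid] < target:
            lo = mid + 1              # target can only be in the upper half
        else:
            hi = mid - 1              # target can only be in the lower half
    return -1, probes

data = list(range(0, 2_000_000, 2))   # one million sorted even numbers
index, probes = binary_search(data, 1_234_566)
print(index, probes)                  # index 617283, found in at most 20 probes
```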

Latest Research and Reviews

Fine tuning deep learning models for breast tumor classification

- Abeer Heikal

- Amir El-Ghamry

- M. Z. Rashad

Classification performance assessment for imbalanced multiclass data

- Jesús S. Aguilar-Ruiz

- Marcin Michalak

ROSPaCe: Intrusion Detection Dataset for a ROS2-Based Cyber-Physical System and IoT Networks

- Tommaso Puccetti

- Simone Nardi

- Andrea Ceccarelli

A maturity model for catalogues of semantic artefacts

- Oscar Corcho

- Fajar J. Ekaputra

- Emanuele Storti

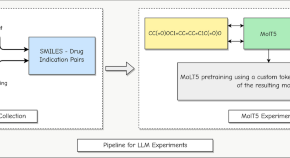

Emerging opportunities of using large language models for translation between drug molecules and indications

- David Oniani

- Jordan Hilsman

- Yanshan Wang

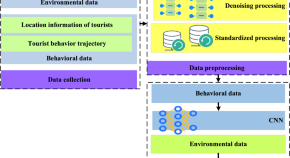

The analysis of ecological security and tourist satisfaction of ice-and-snow tourism under deep learning and the Internet of Things

- Baiju Zhang

News and Comment

Autonomous interference-avoiding machine-to-machine communications

An article in IEEE Journal on Selected Areas in Communications proposes algorithmic solutions to dynamically optimize MIMO waveforms to minimize or eliminate interference in autonomous machine-to-machine communications.

AI now beats humans at basic tasks — new benchmarks are needed, says major report

Stanford University’s 2024 AI Index charts the meteoric rise of artificial-intelligence tools.

- Nicola Jones

Medical artificial intelligence should do no harm

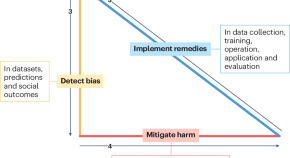

Bias and distrust in medicine have been perpetuated by the misuse of medical equations, algorithms and devices. Artificial intelligence (AI) can exacerbate these problems. However, AI also has potential to detect, mitigate and remedy the harmful effects of bias to build trust and improve healthcare for everyone.

- Melanie E. Moses

- Sonia M. Gipson Rankin

AI hears hidden X factor in zebra finch love songs

Machine learning detects song differences too subtle for humans to hear, and physicists harness the computing power of the strange skyrmion.

- Nick Petrić Howe

- Benjamin Thompson

Three reasons why AI doesn’t model human language

- Johan J. Bolhuis

- Stephen Crain

- Andrea Moro

Generative artificial intelligence in chemical engineering

Generative artificial intelligence will transform the way we design and operate chemical processes, argues Artur M. Schweidtmann.

- Artur M. Schweidtmann


7 Important Computer Science Trends 2024-2027


Here are the 7 fastest-growing computer science trends happening right now, and how these technologies are challenging the status quo in the office and on college campuses.

Whether you’re a fresh computer science graduate or a veteran IT executive, these are the top trends to explore.

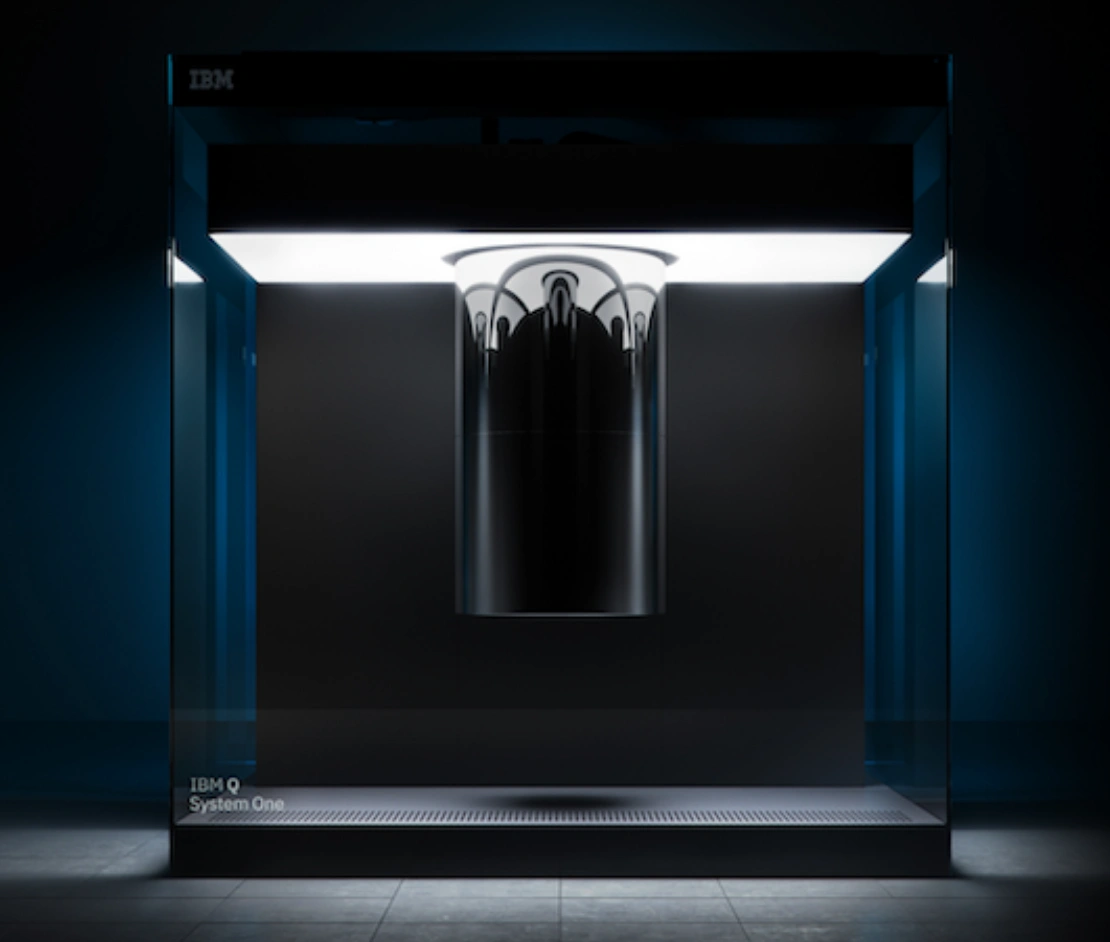

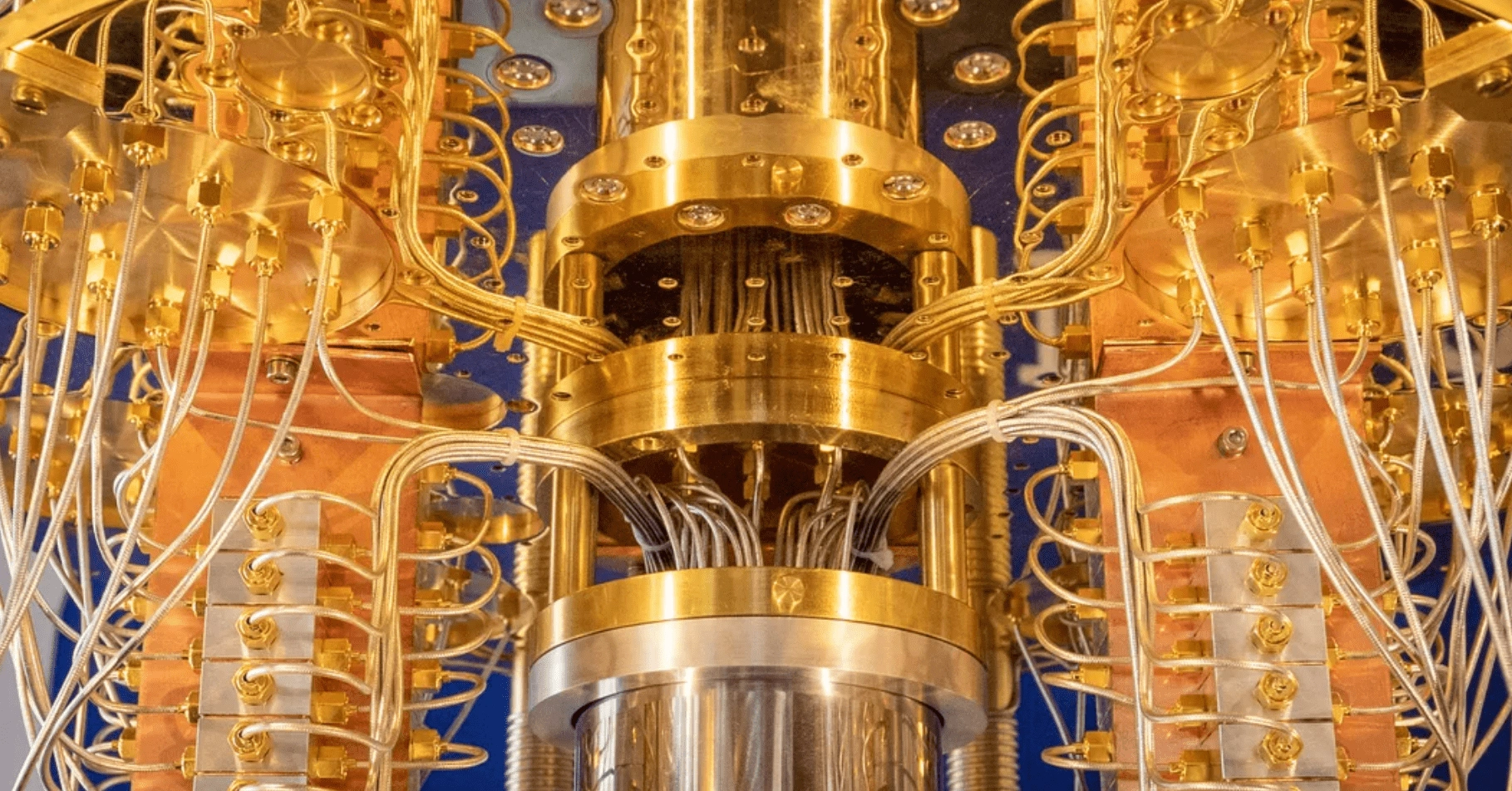

1. Quantum computing makes waves

Quantum computing is the use of quantum mechanics, such as entanglement and superposition, to perform computations.

It uses quantum bits (qubits) in much the same way that classical computers use bits.

Quantum computers have the potential to solve problems that would take the world's most powerful supercomputers millions of years.
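To make the qubit idea concrete, here is a minimal, pure-Python sketch that treats one qubit as a pair of complex amplitudes. It applies a Hadamard gate to put the qubit into an equal superposition of 0 and 1. This is a toy classical simulation, not how quantum hardware or quantum SDKs work, and the helper names are hypothetical. It also hints at why classical machines struggle: simulating n qubits means tracking 2^n amplitudes.

```python
import math

# A qubit's state: complex amplitudes (alpha, beta) for |0> and |1>,
# normalized so that |alpha|^2 + |beta|^2 = 1.
Qubit = tuple[complex, complex]

def hadamard(q: Qubit) -> Qubit:
    """Apply the Hadamard gate: |0> becomes an equal superposition of |0> and |1>."""
    a, b = q
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))

def probabilities(q: Qubit) -> tuple[float, float]:
    """Probabilities of measuring 0 or 1 (squared magnitudes of the amplitudes)."""
    a, b = q
    return (abs(a) ** 2, abs(b) ** 2)

def state_vector_size(n_qubits: int) -> int:
    """Number of complex amplitudes a classical simulation of n qubits must track."""
    return 2 ** n_qubits

zero: Qubit = (1 + 0j, 0j)
plus = hadamard(zero)
print(probabilities(plus))     # approximately (0.5, 0.5)
print(hadamard(plus))          # approximately (1, 0): the gate undoes itself
print(state_vector_size(50))   # 1125899906842624 amplitudes for just 50 qubits
```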

Companies including IBM, Microsoft and Google are all in competition to build reliable quantum computers.

In fact, in September 2019, Google AI and NASA published a joint paper that claimed to have achieved "quantum supremacy".

Quantum supremacy is the point at which a quantum computer outperforms a traditional one at a particular task.

Quantum computers have the potential to completely transform data science.

They also have the potential to accelerate the development of artificial intelligence, virtual reality, big data, deep learning, encryption, medicine and more.

The downside is that quantum computers are currently incredibly difficult to build and sensitive to interference.

Despite current limitations, it's fair to expect further advances from Google and others that will help make quantum computers practical to use. That would position quantum computing as one of the most important computer science trends in the coming years.

2. Zero Trust becomes the norm

Most information security frameworks used by organizations use traditional trust authentication methods (like passwords).

These frameworks focus on protecting network access.

And they assume that anyone who has access to the network should be able to access any data and resources they'd like.

There's a big downside to this approach: a bad actor who gets in through any entry point can then move around freely to access all data or delete it altogether.

Zero Trust information security models aim to prevent this potential vulnerability.

Zero Trust models replace the old assumption that every user within an organization’s network can be trusted.

Instead, nobody is trusted, whether they’re already inside or outside the network.

Verification is required from everyone trying to gain access to any resource on the network.
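The "verify every request" idea can be sketched in a few lines of Python. In this minimal sketch:

- Each request carries a signed, expiring credential that is checked on every call.
- Access also requires an explicit grant for that specific resource.
- The source IP is recorded but never used to grant trust, so being "inside" the network earns nothing.

The shared HMAC key, policy table, and field names are hypothetical. Production Zero Trust systems typically rely on an identity provider, device checks, and mechanisms such as mutual TLS or signed tokens rather than this toy scheme.

```python
import hashlib
import hmac
import time
from dataclasses import dataclass
from typing import Optional

SECRET_KEY = b"demo-only-secret"  # hypothetical; real systems use managed keys from an identity provider

# Hypothetical least-privilege policy: which users may reach which resource.
POLICY = {"payroll-db": {"alice"}, "wiki": {"alice", "bob"}}

@dataclass
class Request:
    user: str
    resource: str
    expires_at: int   # Unix time after which the credential is no longer valid
    signature: str    # hex HMAC-SHA256 over user, resource and expiry
    source_ip: str    # recorded, but deliberately NOT used to grant trust

def sign(user: str, resource: str, expires_at: int) -> str:
    msg = f"{user}|{resource}|{expires_at}".encode()
    return hmac.new(SECRET_KEY, msg, hashlib.sha256).hexdigest()

def authorize(req: Request, now: Optional[int] = None) -> bool:
    """Verify every request explicitly; network location earns no trust."""
    now = int(time.time()) if now is None else now
    if not hmac.compare_digest(sign(req.user, req.resource, req.expires_at), req.signature):
        return False                                    # forged or tampered credential
    if now > req.expires_at:
        return False                                    # expired credential
    return req.user in POLICY.get(req.resource, set())  # explicit per-resource grant

exp = 2_000_000_000
internal_bob = Request("bob", "payroll-db", exp, sign("bob", "payroll-db", exp), "10.0.0.5")
remote_alice = Request("alice", "payroll-db", exp, sign("alice", "payroll-db", exp), "203.0.113.9")
print(authorize(internal_bob, now=1_700_000_000))  # False: internal address, but no grant
print(authorize(remote_alice, now=1_700_000_000))  # True: valid credential and explicit grant
```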

Huge companies like Cisco are investing heavily to develop Zero Trust solutions.

This security architecture is quickly moving from just a computer science concept to industry best practice.

And it’s little wonder why: IBM reports that the average data breach costs a company $3.86 million in damages and that it takes an average of 280 days to identify and contain a breach.

We will see demand for this technology continue to skyrocket in 2024 and beyond as businesses adopt zero-trust security to mitigate this risk.

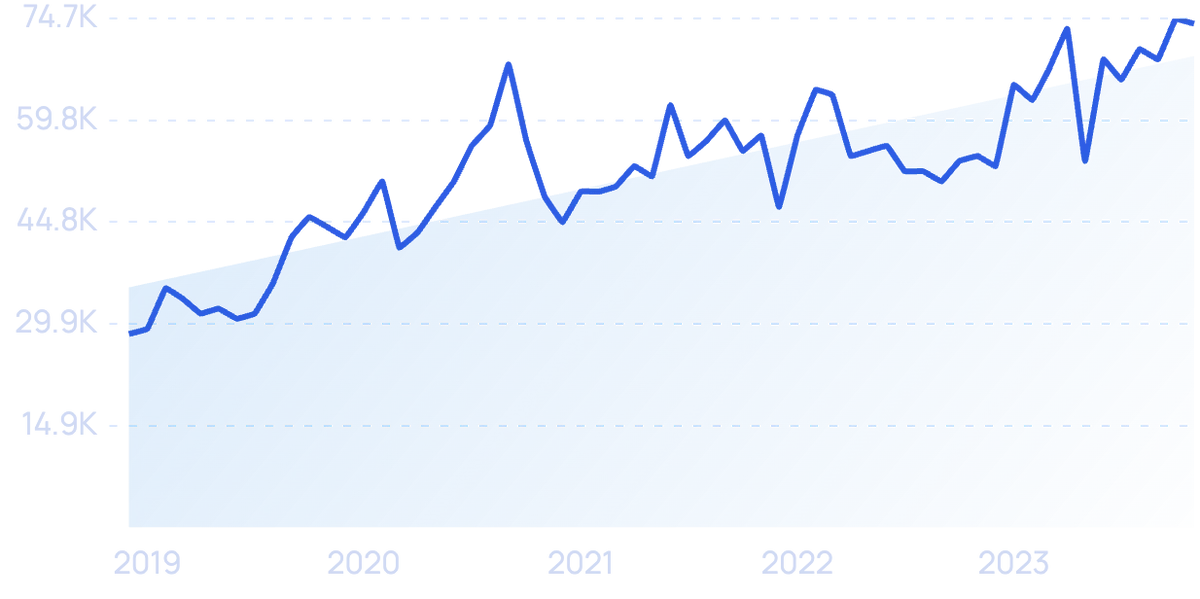

3. Cloud computing hits the edge

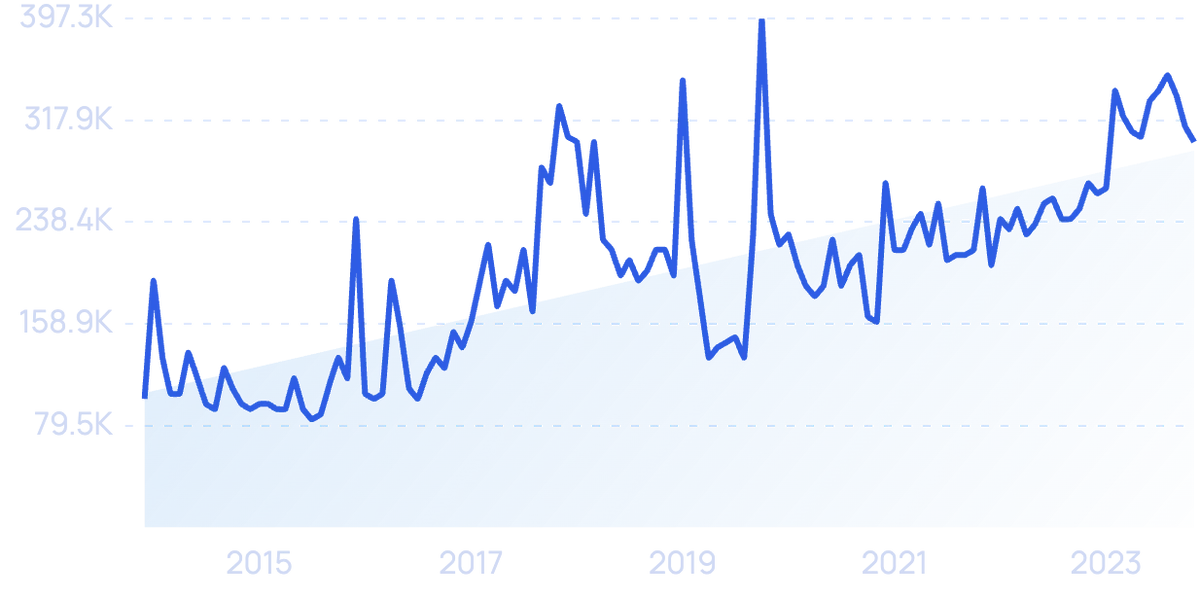

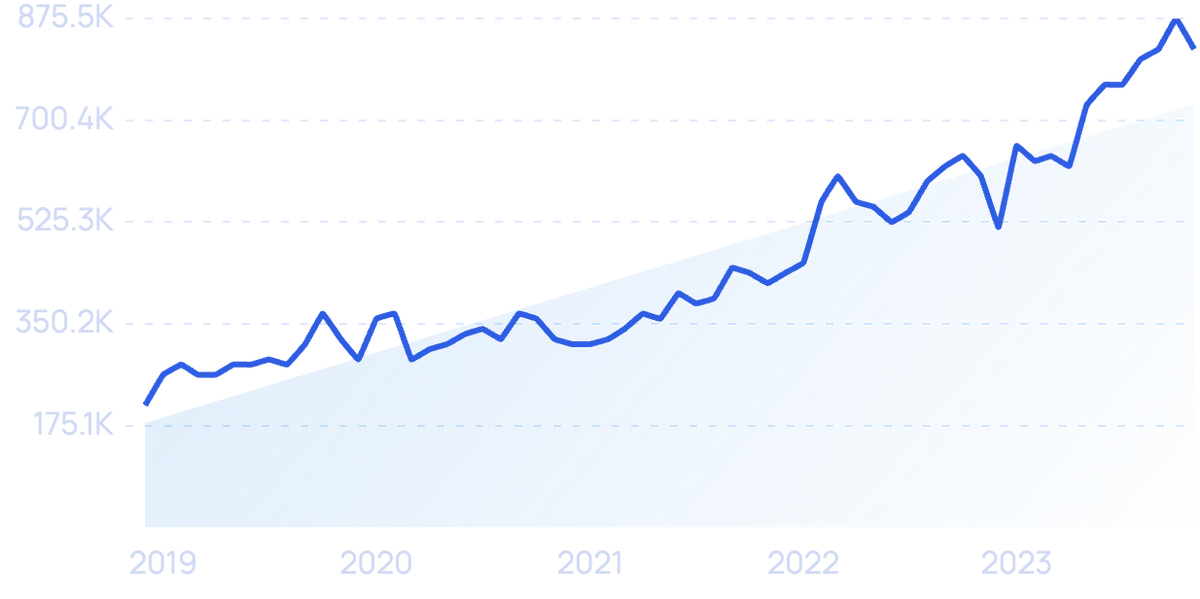

“Edge computing” searches have risen 161% over the past 5 years. This market may be worth $8.67 billion by 2025.

Gartner estimates that 80% of enterprises will shut down their traditional data centers by 2025.

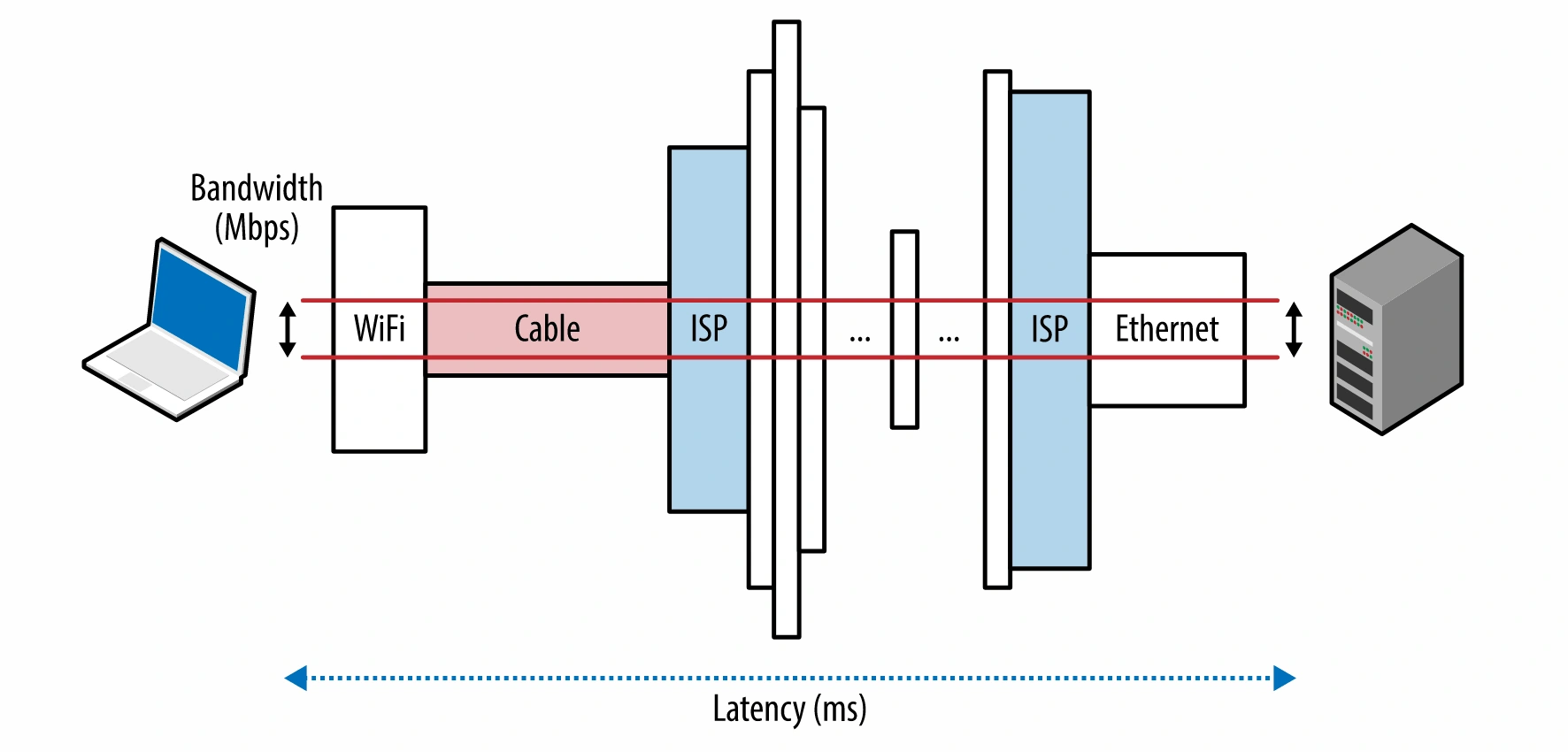

This is mainly because traditional cloud computing relies on servers in one central location.

If the end-user is in another country, they have to wait while data travels thousands of miles.

Latency issues like this can really hamper an application’s performance (especially for high-bandwidth media, like video), which is why many companies are moving over to edge computing service providers instead.

Modern edge computing brings computation, data storage, and data analytics as close as possible to the end-user location.

And when edge servers host web applications, the result is greatly improved response times.
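A quick back-of-the-envelope calculation shows why distance matters. Light in optical fiber travels at roughly two-thirds of its speed in a vacuum, about 200 km per millisecond. That figure alone puts a floor under every round trip. The Python sketch below counts only this propagation delay and ignores routing, queuing, and server processing. The 8,000 km and 50 km distances are hypothetical examples of a distant central region and a nearby edge location.

```python
# Approximate speed of light in optical fiber (about two-thirds of c), in km per millisecond.
FIBER_KM_PER_MS = 200.0

def round_trip_ms(distance_km: float, round_trips: int = 1) -> float:
    """Propagation delay only; ignores routing, queuing and server processing time."""
    return 2 * distance_km / FIBER_KM_PER_MS * round_trips

# Hypothetical distances: a far-away central data center vs. a nearby edge location.
central = round_trip_ms(8_000)   # 80.0 ms
edge = round_trip_ms(50)         # 0.5 ms
print(f"central: {central:.1f} ms, edge: {edge:.1f} ms")

# Loading a page often needs several sequential round trips (connection setup plus the request).
print(f"4 round trips: central {round_trip_ms(8_000, 4):.0f} ms vs edge {round_trip_ms(50, 4):.0f} ms")
```

Because the delay is multiplied by every sequential round trip, moving computation closer to users shrinks the wait far more than a single 80 ms figure suggests.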

As a result, some estimates suggest that the edge computing market will be worth $61.14 billion by 2028.

And Content Delivery Networks like Cloudflare that make edge computing easy and accessible will increasingly power the web.

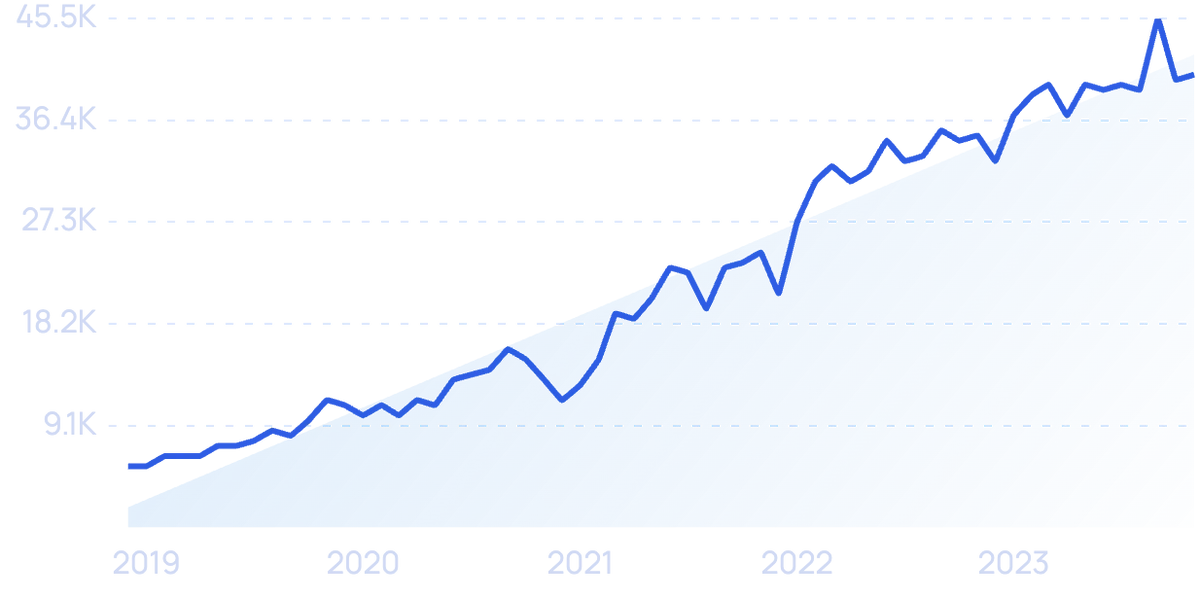

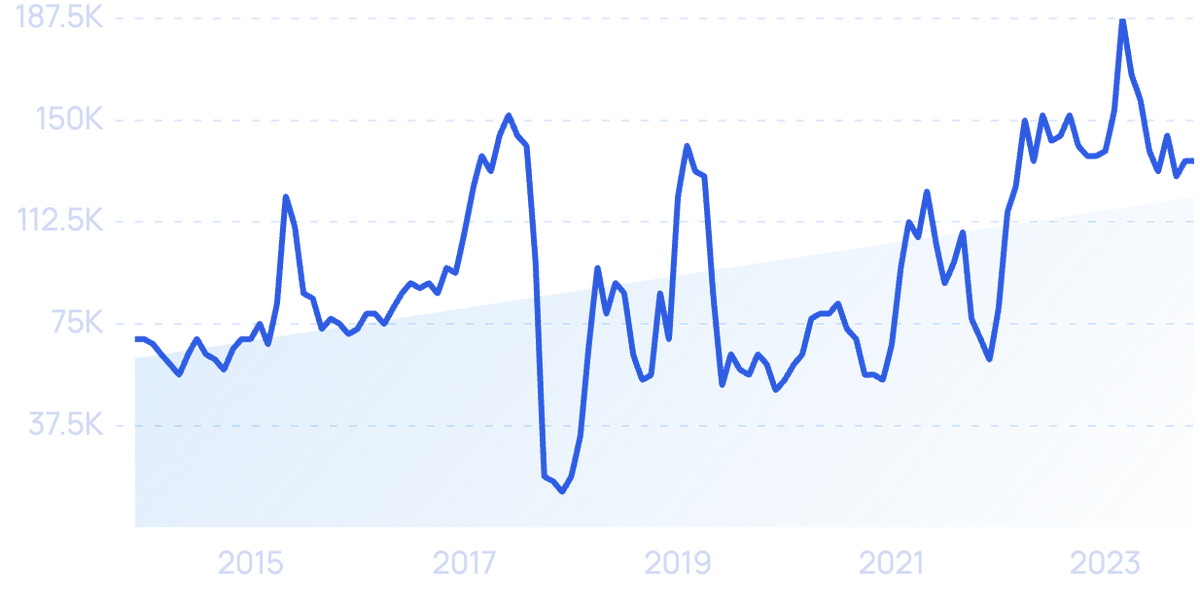

4. Kotlin overtakes Java

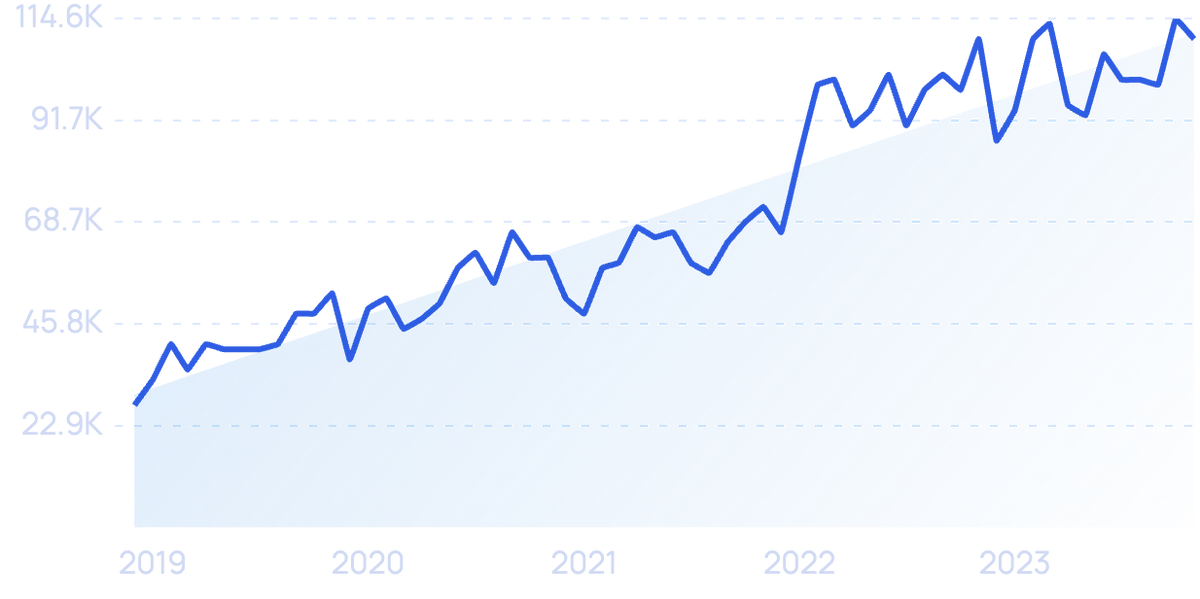

“Kotlin” searches are up 95% in 5 years. Interest in this programming language rocketed in 2022.

Kotlin is a general-purpose programming language that first appeared in 2011.

It’s designed to be a more concise and streamlined alternative to Java that remains fully interoperable with Java code.

It runs on the JVM (Java Virtual Machine) and is widely used for Android development.

Kotlin is billed as a modern programming language that makes developers happier.

There are over 7 million Java programmers in the world right now.

Since Kotlin offers big advantages over Java, we can expect more and more programmers to make the switch between 2023 and 2026.

In 2019, Google even announced that Kotlin is now its preferred language for Android app developers.

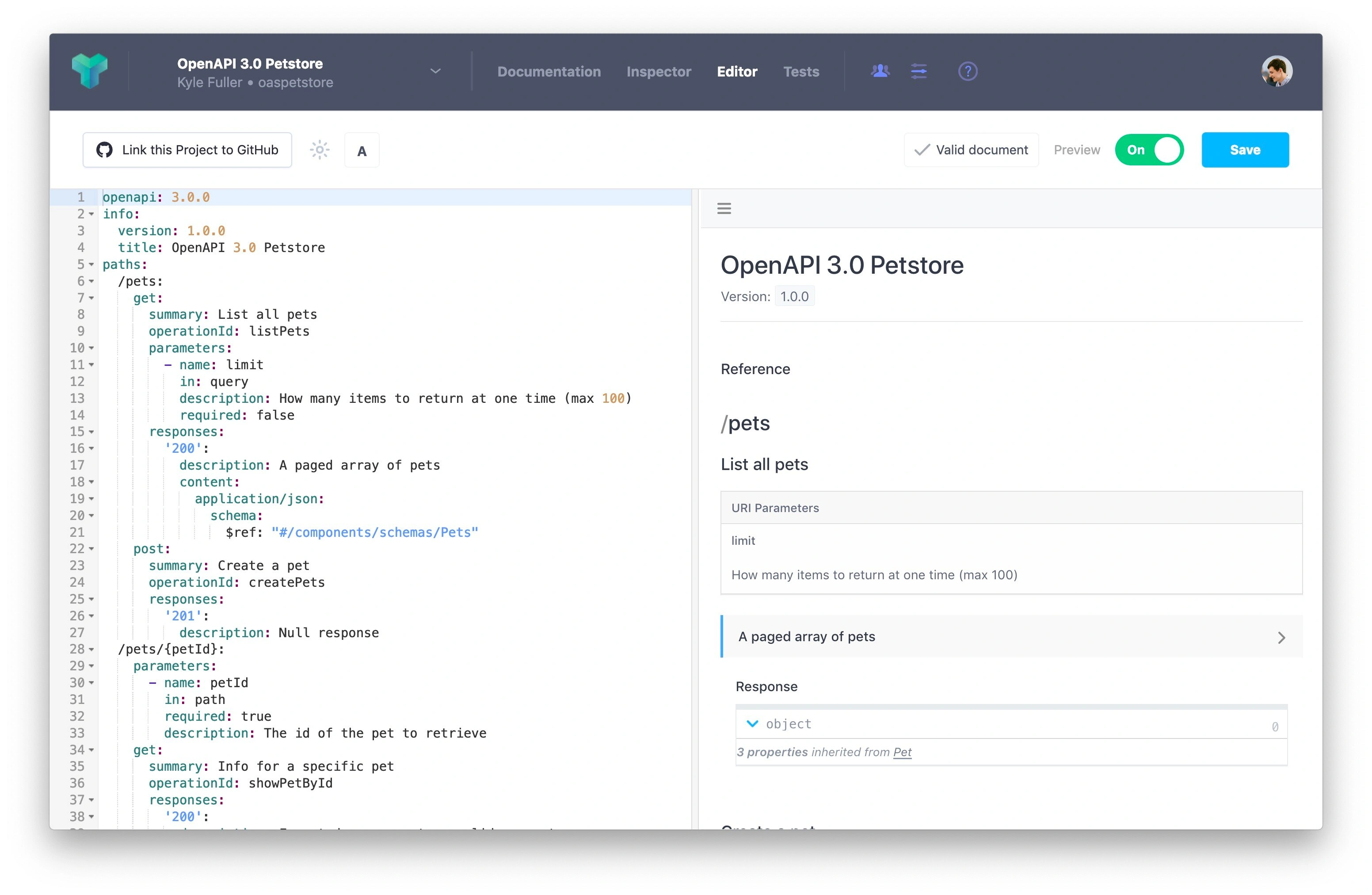

5. The web becomes more standardized

REST (Representational State Transfer) web services power the internet and the data behind it.

But the structure of each REST API data source varies wildly.

It depends entirely on how the individual programmer behind it decided to design it.

The OpenAPI Specification (OAS) changes this. It’s essentially a description format for REST APIs.

Data sources that implement OAS are easy to learn and readable to both humans and machines.

This is because an OpenAPI file describes the entire API, including available endpoints, operations and outputs.
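Because every endpoint, operation, parameter, and response is declared in one machine-readable structure, a program can discover what an API offers without reading its source. The Python sketch below shows this. It uses a hypothetical OpenAPI 3.0 description of a tiny "users" API, written as a Python dict with the same nesting a YAML or JSON OAS file would have. It then walks the `paths` object to list every operation. Tools such as Swagger build on this same property to generate code and documentation.

```python
# Hypothetical OpenAPI 3.0 description of a tiny "users" API. Real OAS files are
# usually YAML or JSON; a Python dict has the same nested structure.
spec = {
    "openapi": "3.0.3",
    "info": {"title": "Example Users API", "version": "1.0.0"},
    "paths": {
        "/users": {
            "get": {
                "summary": "List users",
                "responses": {"200": {"description": "A JSON array of users"}},
            },
            "post": {
                "summary": "Create a user",
                "responses": {"201": {"description": "The created user"}},
            },
        },
        "/users/{id}": {
            "parameters": [
                {"name": "id", "in": "path", "required": True, "schema": {"type": "integer"}}
            ],
            "get": {
                "summary": "Fetch one user",
                "responses": {
                    "200": {"description": "The requested user"},
                    "404": {"description": "No user with that id"},
                },
            },
        },
    },
}

# Keys on an OpenAPI path item that denote HTTP operations.
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}

def list_operations(doc: dict) -> list[str]:
    """Walk the 'paths' object and list every operation the API exposes."""
    operations = []
    for path, path_item in doc["paths"].items():
        for key, operation in path_item.items():
            if key in HTTP_METHODS:  # skip non-operation keys such as shared 'parameters'
                operations.append(f"{key.upper()} {path}: {operation.get('summary', '')}")
    return operations

for line in list_operations(spec):
    print(line)
```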

This standardization enables the automation of previously time-consuming tasks.

For example, tools like Swagger generate code, documentation and test cases given the OAS interface file.

This can save a huge amount of engineering time both upfront and in the long run.

Another technology that takes this concept to the next level is GraphQL, a query language for APIs and a runtime for fulfilling those queries with your existing data. It was developed at Facebook.

It provides a complete description of the data available in a particular source, and it gives clients the ability to ask for only the specific parts of the data they need and nothing more.

It too has become widely used and massively popular. Frameworks and specifications like these, which standardize all aspects of the internet, will continue to gain wide adoption.
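The core idea behind GraphQL's selection sets is that clients ask for exactly the fields they need. The Python below is a toy illustration of that idea, not a GraphQL implementation: there is no query parsing, schema, type checking, or resolver chain. The stored record and the dict-based "selection" format are hypothetical stand-ins for a real GraphQL query.

```python
from typing import Any

# Hypothetical stored record; a real GraphQL server resolves fields through a typed schema.
USER = {
    "id": 7,
    "name": "Ada",
    "email": "ada@example.com",
    "address": {"city": "London", "postcode": "N1", "country": "UK"},
    "orders": [{"id": 1, "total": 20.0}, {"id": 2, "total": 35.5}],
}

def select(data: Any, selection: dict) -> Any:
    """Return only the requested fields, recursing into nested objects and lists.

    `selection` maps a field name to None (a leaf field) or to a nested selection,
    loosely mimicking a GraphQL selection set such as { name address { city } }.
    """
    if isinstance(data, list):
        return [select(item, selection) for item in data]
    result = {}
    for field, sub in selection.items():
        value = data[field]
        result[field] = value if sub is None else select(value, sub)
    return result

# Roughly what the GraphQL query { name address { city } orders { total } } asks for.
query = {"name": None, "address": {"city": None}, "orders": {"total": None}}
print(select(USER, query))
# {'name': 'Ada', 'address': {'city': 'London'}, 'orders': [{'total': 20.0}, {'total': 35.5}]}
```

Unrequested fields such as `email` never leave the server. That is the "nothing more" property described above, and it saves bandwidth compared with a fixed REST response.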

6. More digital twins

Interest in “Digital twin” has steadily grown (300%) over the last 5 years.

A digital twin is a software representation of a real-world entity or process, from which you can generate and analyze simulation data.

This way you can improve efficiency and avoid problems before devices are even built and deployed.
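A deliberately simple, hypothetical example shows the idea. Below is a Python "twin" of an electric motor that predicts its temperature under a planned load schedule. With it, an overload can be spotted in software before any hardware is built or run. The first-order thermal model, its parameters, and the 80 °C limit are all assumptions for illustration. Production digital twins typically use far richer models calibrated with real sensor data.

```python
from dataclasses import dataclass

@dataclass
class MotorTwin:
    """Toy digital twin of a motor's temperature (hypothetical first-order thermal model)."""
    ambient_c: float = 25.0            # surrounding air temperature (assumed)
    rise_at_full_load_c: float = 60.0  # steady-state rise above ambient at 100% load (assumed)
    time_constant_s: float = 300.0     # how quickly the motor heats up or cools down (assumed)
    temp_c: float = 25.0               # current simulated temperature

    def step(self, load: float, dt_s: float) -> float:
        """Advance the simulation by dt_s seconds at a load between 0.0 and 1.0 (Euler step)."""
        target_c = self.ambient_c + self.rise_at_full_load_c * load
        self.temp_c += (target_c - self.temp_c) * dt_s / self.time_constant_s
        return self.temp_c

def peak_temperature(schedule: list[tuple[float, float]], dt_s: float = 1.0) -> float:
    """Run a planned (load, duration_s) schedule on a fresh twin and return the peak temperature."""
    twin = MotorTwin()
    peak = twin.temp_c
    for load, duration_s in schedule:
        for _ in range(int(duration_s / dt_s)):
            peak = max(peak, twin.step(load, dt_s))
    return peak

LIMIT_C = 80.0  # hypothetical rated temperature limit

plans = {
    "half load for 30 min": [(0.5, 1800)],
    "full load for 30 min": [(1.0, 1800)],
    "full load with cool-down breaks": [(1.0, 300), (0.2, 300)] * 3,
}
for name, plan in plans.items():
    peak = peak_temperature(plan)
    verdict = "OK" if peak <= LIMIT_C else "exceeds limit"
    print(f"{name}: peak {peak:.1f} C ({verdict})")
```

Here the twin flags the continuous full-load plan as unsafe and shows that adding cool-down breaks keeps the motor under its limit. That is the kind of "avoid problems before deployment" insight described above.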

GE is the big name in the field and has developed internal digital twin technology to improve its own jet-engine manufacturing process.

This technology was initially available only at the big enterprise level, through GE’s Predix industrial Internet of Things (IoT) platform, a major player in the digital twin technology market.

But now we’re seeing its usage permeate across other sectors like retail warehousing, auto manufacturing, and healthcare planning.

Yet case studies of these real-world use cases are thin on the ground, so the people who produce them will set themselves up as industry experts in their field.

7. Demand for cybersecurity expertise skyrockets

“Hack The Box” searches have increased by 285% over 5 years.

According to CNET, at least 7.9 billion records (including credit card numbers, home addresses and phone numbers) were exposed through data breaches in 2019 alone.

As a consequence, large numbers of companies seek cybersecurity expertise to protect themselves.

Hack The Box is an online platform that has a wealth of educational information and hundreds of cybersecurity-themed challenges.

It has 290,000 active users who test and improve their penetration-testing skills.

As a result, it has become a go-to place for companies recruiting new talent for their cybersecurity teams.

Hack The Box is a hacker haven both in terms of content and design.

Software that helps people find out whether their credentials were compromised in data breaches is also likely to trend.

One of the best-known tools currently is Have I Been Pwned.

It allows you to search across multiple data breaches to see if your email address has been compromised.
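The core lookup behind such a service can be sketched in a few lines of Python. This is a toy with made-up breach data, not Have I Been Pwned's actual implementation or API: the `BREACHES` table and the `find_breaches` function are hypothetical, and lowercasing the whole address is a simplifying assumption.

```python
# Hypothetical breach datasets: breach name -> set of exposed email addresses.
BREACHES: dict[str, set[str]] = {
    "ExampleShop 2019": {"alice@example.com", "bob@example.com"},
    "DemoForum 2021": {"carol@example.org", "alice@example.com"},
    "SampleApp 2023": {"dave@example.net"},
}

def normalize(email: str) -> str:
    """Trim whitespace and lowercase (a simplifying assumption for matching)."""
    return email.strip().lower()

def find_breaches(email: str, breaches: dict[str, set[str]] = BREACHES) -> list[str]:
    """Return the sorted names of every breach that contains the address."""
    target = normalize(email)
    return sorted(name for name, emails in breaches.items() if target in emails)

print(find_breaches("  Alice@Example.com "))  # ['DemoForum 2021', 'ExampleShop 2019']
```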

That's our list of the 7 most important computer science trends to keep an eye on over the next 3-4 years.

From machine learning to blockchain to AR, it's an exciting time to be in the computer science field.

CS has always been a rapidly changing industry.

But with the growth of completely new technologies (especially cloud computing and machine learning), it's fair to expect that the rate of change will increase in 2024 and beyond.

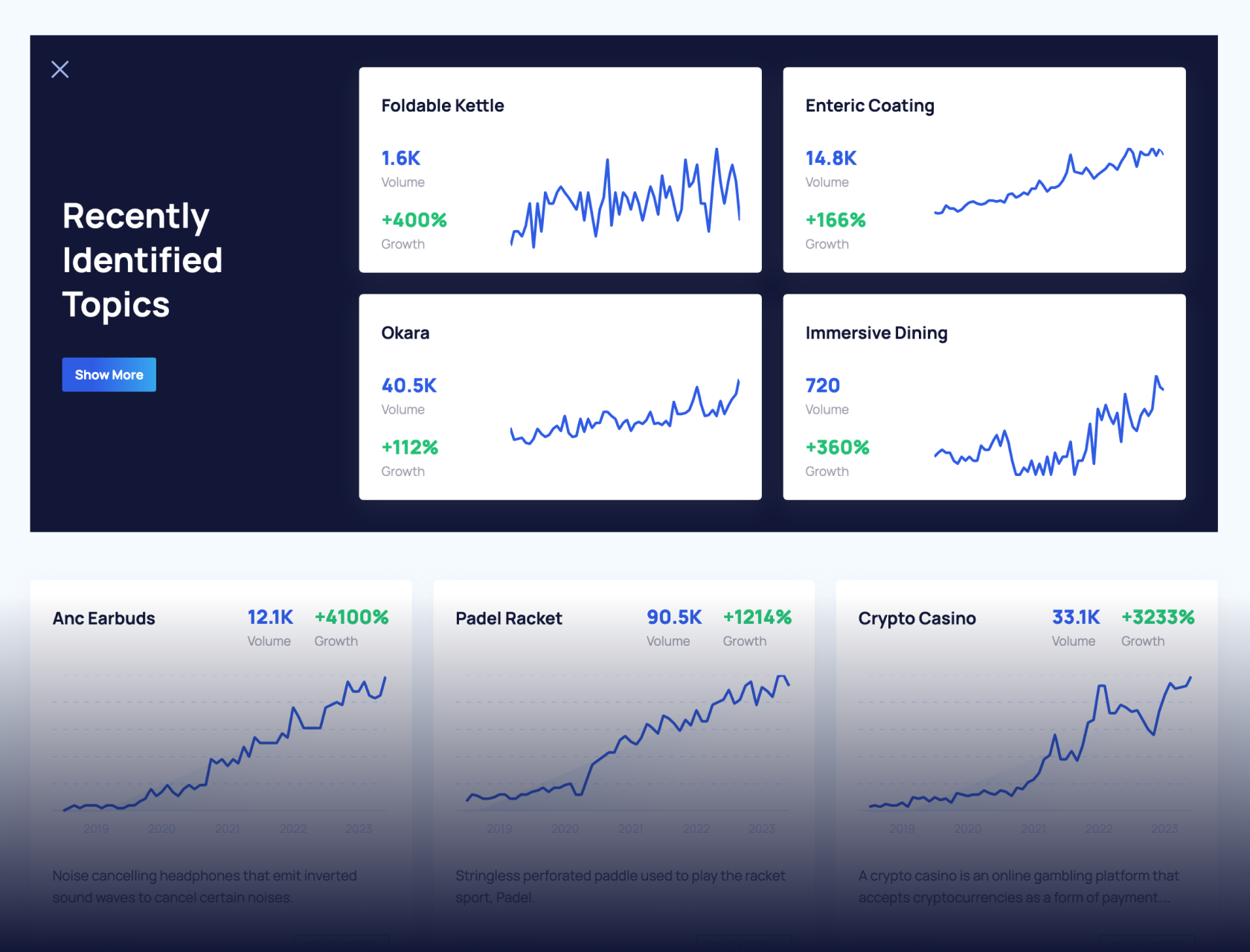

Find Thousands of Trending Topics With Our Platform

What Are the Latest Trends in Computer Science and Technology?

Holland Webb

Contributing Writer

Updated April 30, 2024

Mitch Jacobson

Contributing Editor

Reviewed by

Monali Mirel Chuatico

Contributing Reviewer

Computer science is among the most future-proof career fields: it's always changing and becomes more enmeshed in our lives every day. The latest computer technology can learn to adjust its actions to suit its environment, help carry out complex surgery, map an organism's genome, or drive a car.

A complex web of industry needs, national security interests, healthcare opportunities, supply chain fragility, and user demand drives these trends. Technology developers and engineers are building the tools that may solve the climate crisis, make exceptional healthcare accessible to rural residents, or optimize the global supply chain.

If you plan to work in computer science, you need to stay abreast of these trends or risk falling behind.

Top Computer Science Trends

Generative AI

Generative artificial intelligence (AI) is a type of artificial intelligence that can create new content, such as articles, images, and videos. Anyone who has used ChatGPT or Microsoft Copilot has toyed with generative AI. These AI models can summarize and classify information or answer questions because they have been trained to recognize patterns in data.
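Real generative AI models are far too large to reproduce here, but the basic loop of learning patterns from data and then sampling new content from them can be shown with a much older and simpler technique: a bigram Markov chain. The Python sketch below is that substitute, not how ChatGPT or Copilot work internally (they use large neural networks); the training sentence and the `train`/`generate` helpers are illustrative.

```python
import random
from collections import defaultdict

def train(text: str) -> dict[str, list[str]]:
    """Learn which words follow which: map each word to its observed successors."""
    words = text.split()
    model: dict[str, list[str]] = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        model[current].append(nxt)
    return dict(model)

def generate(model: dict[str, list[str]], start: str, length: int, seed: int = 0) -> str:
    """Produce up to `length` words by repeatedly sampling a learned successor."""
    rng = random.Random(seed)  # seeded so the output is reproducible
    word, output = start, [start]
    for _ in range(length - 1):
        followers = model.get(word)
        if not followers:  # dead end: nothing ever followed this word
            break
        word = rng.choice(followers)
        output.append(word)
    return " ".join(output)

corpus = "the cat sat on the mat and the dog sat on the rug"
model = train(corpus)
print(generate(model, "the", 8))
```

The chain only reproduces word-to-word patterns it has seen; nothing in it checks whether a generated sentence is true, a small-scale echo of why fluent AI output is not necessarily accurate.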

The consulting firm McKinsey & Company predicts that generative AI could add up to $4.4 trillion to the global economy annually. Despite its obvious business advantages, many AI tools create content that sounds convincing and authoritative but is riddled with inaccuracies. Using this content can expose companies to regulatory penalties or consumer pushback.

Still, machine learning engineers, data scientists, and chatbot developers strive to make AI better and more accessible.

Job Outlook: The U.S. Bureau of Labor Statistics (BLS) projects that computer and information research scientist jobs, a broad career category that includes AI researchers, will grow 23% from 2022 to 2032. These workers earned a median wage of $145,080 in 2023. According to Payscale, as of April 2024, machine learning engineers make an average annual salary of $118,350.

Potential Careers:

- Computer Researcher

- Machine Learning Engineer

- Senior Data Scientist

- Robotics Engineer

- Algorithm Engineer

Education Required: Entry-level artificial intelligence jobs may require a bachelor's degree, but engineers, researchers, and data scientists often need a master's degree or a doctorate.

Quantum Computing

Quantum computing relies on quantum-mechanical effects rather than a stream of binary impulses. Its basic units of information, called qubits, can exist in a superposition of states instead of being strictly 0 or 1. For certain problems, quantum computing could be far more powerful than traditional computing, but it is much less developed. Should large-scale quantum computers become widely accessible, they could break some widely used encryption methods, challenging current communication and cryptography practices.
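The idea that a qubit can be in more than one state at once can be made concrete with a tiny classical simulation in Python. This sketch models a single qubit as two complex amplitudes and applies the Hadamard gate. It runs on an ordinary computer and only illustrates the math; it says nothing about how physical quantum hardware is built.

```python
import math

# A qubit state: complex amplitudes (alpha, beta) for the basis states |0> and |1>.
State = tuple[complex, complex]

def hadamard(state: State) -> State:
    """Apply the Hadamard gate, which turns |0> into an equal superposition."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))

def probabilities(state: State) -> tuple[float, float]:
    """Measurement probabilities: |alpha|^2 for 0 and |beta|^2 for 1."""
    a, b = state
    return (abs(a) ** 2, abs(b) ** 2)

zero: State = (1 + 0j, 0j)                  # the definite state |0>
superposed = hadamard(zero)                 # (|0> + |1>) / sqrt(2)
print(probabilities(zero))                  # (1.0, 0.0)
print(probabilities(superposed))            # approximately (0.5, 0.5)
print(probabilities(hadamard(superposed)))  # approximately (1.0, 0.0) again
```

Simulating n qubits this way requires tracking 2^n amplitudes, which is one reason ordinary computers struggle to emulate large quantum systems.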

Only a few quantum computing devices exist, and these are highly specialized. Although quantum computing has generated a lot of buzz, some experts question whether it is truly viable and whether its benefits outweigh its costs.

Nevertheless, organizations like the Central Intelligence Agency and tech companies like IBM are hiring quantum computing specialists. Many of these jobs call for a master's degree in a technical field like physics, mathematics, or electrical engineering.

Job Outlook: ZipRecruiter reports quantum computing professionals earn an average annual salary of $131,240 as of March 2024. Since quantum computing is still a relatively niche field, reliable job growth data is unavailable.

Potential Careers:

- Quantum Machine Learning Scientist

- Quantum Software Developer

- Quantum Algorithm Researcher

- Quantum Control Researcher

- Qubit Researcher

Education Required: Quantum computing careers usually require a graduate degree.

Bioinformatics

Bioinformatics combines biology with computer science and generally focuses on data collection and analysis. Biologists use bioinformatics to spot patterns in their data. For example, a scientist can use bioinformatics tools and techniques to help sequence organisms' genomes.
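A basic example of this kind of pattern-spotting is counting k-mers (the substrings of length k in a DNA sequence) and computing GC content (the share of G and C bases). The Python sketch below uses a made-up sequence and hypothetical helper names; real pipelines usually rely on established tools and libraries such as Biopython.

```python
from collections import Counter

def count_kmers(sequence: str, k: int) -> Counter[str]:
    """Count every overlapping substring of length k."""
    seq = sequence.upper()
    return Counter(seq[i : i + k] for i in range(len(seq) - k + 1))

def gc_content(sequence: str) -> float:
    """Fraction of bases that are G or C."""
    seq = sequence.upper()
    return (seq.count("G") + seq.count("C")) / len(seq)

dna = "ATGCGATATGCG"
kmers = count_kmers(dna, 3)
print(kmers.most_common(3))  # [('ATG', 2), ('TGC', 2), ('GCG', 2)]
print(gc_content(dna))       # 0.5
```

Repeated k-mers like `ATG` and `GCG` are the kind of signal that, at genome scale, helps researchers assemble sequences and flag regions worth a closer look.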

As knowledge of biology expands, so does interest in bioinformatics. For example, research from BDO, a professional services firm, indicates spending on research and development in biotech grew nearly 22% from 2018 to 2019. Bioinformatics companies include Helix, Seven Bridges, and Thermo Fisher Scientific.

Bioinformatics specialists should have skills in cluster analysis, algorithm development, server cluster management, and protein sequencing analysis. Careers in this industry include biostatistician, bioinformatician, and bioinformatics scientist.

Job Outlook: The BLS projects that bioengineer and biomedical engineer jobs will grow 5% from 2022 to 2032. These workers earned a median wage of $100,730 in 2023.

Potential Careers:

- Bioinformatics Research Scientist

- Bioinformatics Engineer

- Biomedical Researcher

- Biostatistician

- Computational Biologist

Education Required: Most bioinformatics careers require a bachelor's degree or higher. Leadership, research, and teaching positions may require a master's degree or Ph.D.

Remote Healthcare

Remote healthcare lets medical providers use technology to monitor the health of patients who cannot travel to providers' offices. Supporters of this field say remote healthcare improves patient outcomes while reducing costs.
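At its simplest, remote monitoring means readings from a home device are checked against limits and anything unusual is flagged for the care team. The Python sketch below shows that loop; the `Reading` type, the metric names, and especially the ranges in `ALERT_RANGES` are hypothetical placeholders for illustration, not clinical guidance.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical alert ranges (low, high), inclusive. Placeholders, not clinical values.
ALERT_RANGES: dict[str, tuple[float, float]] = {
    "heart_rate_bpm": (50, 110),
    "spo2_percent": (92, 100),
    "systolic_mmhg": (90, 160),
}

@dataclass
class Reading:
    patient_id: str
    metric: str
    value: float

def check(reading: Reading) -> Optional[str]:
    """Return an alert message if the reading is out of range, else None."""
    low, high = ALERT_RANGES[reading.metric]
    if reading.value < low or reading.value > high:
        return f"ALERT {reading.patient_id}: {reading.metric}={reading.value} outside {low}-{high}"
    return None

readings = [
    Reading("p-001", "heart_rate_bpm", 72),
    Reading("p-001", "spo2_percent", 89),
    Reading("p-002", "systolic_mmhg", 172),
]
for r in readings:
    alert = check(r)
    if alert:
        print(alert)
```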

EMARKETER produced a 2021 report that projects 70.6 million people will use remote patient monitoring devices by 2025, up from 29.1 million in 2020. The rising prevalence of chronic conditions, an aging patient population, and the need for cost-effective medical services are all helping drive this trend.

Physicians, therapists, and advanced practice professionals can use remote patient monitoring, but affordability, patient behaviors, and lack of awareness threaten widespread adoption. Companies in this field include GYANT, Medopad, and Cardiomo.

Job Outlook: The BLS projects that the healthcare field will have 1.8 million annual openings from 2022 to 2032. Healthcare practitioners and technical workers earned a median wage of $80,820 in 2023.

Potential Careers:

- Physician Assistant

- Nurse Practitioner

- Registered Nurse

- Healthcare Business Analyst

- Health Tech Software Engineer

- Licensed Professional Counselor

Education Required: Doctors need an MD or DO, advanced practice professionals and counselors must hold a master's degree, and registered nurses need at least an associate degree. All medical providers must also hold licensure.

Cybersecurity

Cybersecurity is an umbrella term that refers to protecting digital assets from cyberthreats. Most attacks are coordinated efforts to access or change information, extort money, or disrupt business.
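One standard defense against attackers who try to change information is a message authentication code, which lets a receiver detect tampering. The Python sketch below uses the standard library's `hmac` module with SHA-256. The key and messages are made up, and the `sign`/`verify` helper names are illustrative; a real system also needs secure key storage and rotation.

```python
import hashlib
import hmac
import secrets

# Hypothetical shared secret; a real system would load it from secure storage.
KEY = secrets.token_bytes(32)

def sign(message: bytes, key: bytes = KEY) -> str:
    """Compute an HMAC-SHA256 tag for the message."""
    return hmac.new(key, message, hashlib.sha256).hexdigest()

def verify(message: bytes, tag: str, key: bytes = KEY) -> bool:
    """Check the tag; compare_digest avoids leaking timing information."""
    return hmac.compare_digest(sign(message, key), tag)

original = b"transfer $100 to account 1234"
tag = sign(original)
print(verify(original, tag))                           # True
print(verify(b"transfer $9000 to account 6666", tag))  # False: message was altered
```

Without the secret key, an attacker who alters the message cannot compute a matching tag, so the change is detected.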

In 2022, McKinsey & Company projected that the cost of cyberattacks would grow to $10.5 trillion annually by 2025, a 300% increase from 2015. Sophisticated artificial intelligence tools are driving an increase in deepfakes, hacking, and data breaches. Companies such as Cisco, IBM, and Palo Alto Networks are building novel cybersecurity technologies to combat these threats.

Jobs in the field include security engineer, cryptographer, and ethical hacker. These positions often pay lucrative salaries and require academic degrees and professional credentials, such as the CompTIA Security+ certification. Cybersecurity is not generally an entry-level field, so most new practitioners already have computer science experience.

Job Outlook: The BLS projects that information security analyst jobs will grow 32% from 2022 to 2032. These workers earned a median wage of $120,360 in 2023.

Potential Careers:

- Information Security Analyst

- Digital Forensic Examiner

- Penetration Tester

- Security Engineer

Education Required: Cybersecurity experts usually need a bachelor's degree and relevant professional certifications.

Autonomic, Autonomous, and Hybrid Systems

Autonomous may sound synonymous with autonomic, but the two words actually have different meanings. Autonomous machines operate with little or no human control — think of industrial robots or self-driving cars. Autonomic computing, in contrast, controls itself while also responding to its environment — think of the industrial Internet of Things and predictive AI.
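Autonomic computing is often described using IBM's MAPE-K reference model: a loop that monitors the system, analyzes what it observes, plans a response, and executes it, all informed by shared knowledge. The Python sketch below applies that loop to a hypothetical service that resizes its own pool of replicas as load changes. The class names, the per-replica capacity, and the scaling rule (act when load exceeds capacity or falls below half of it) are assumptions made for illustration.

```python
import math
from dataclasses import dataclass

@dataclass
class Knowledge:
    capacity_per_replica: float = 100.0  # requests/sec one replica handles (assumed)
    min_replicas: int = 1
    max_replicas: int = 10

@dataclass
class Service:
    replicas: int = 1

def monitor(samples: list[float]) -> float:
    """Monitor: summarize recent load readings (requests/sec)."""
    return sum(samples) / len(samples)

def analyze(load: float, service: Service, k: Knowledge) -> bool:
    """Analyze: is capacity too low, or more than double what the load needs?"""
    capacity = service.replicas * k.capacity_per_replica
    return load > capacity or load < capacity / 2

def plan(load: float, k: Knowledge) -> int:
    """Plan: the smallest replica count that covers the load, within limits."""
    needed = math.ceil(load / k.capacity_per_replica)
    return max(k.min_replicas, min(k.max_replicas, needed))

def execute(service: Service, replicas: int) -> None:
    """Execute: apply the plan to the managed system."""
    service.replicas = replicas

def control_step(samples: list[float], service: Service, k: Knowledge) -> int:
    load = monitor(samples)
    if analyze(load, service, k):
        execute(service, plan(load, k))
    return service.replicas

svc, kb = Service(), Knowledge()
for window in ([80, 90], [350, 420], [390, 410], [60, 40]):
    print(control_step(window, svc, kb))  # prints 1, 4, 4, 1 (one per line)
```

The service responds to its environment without an operator in the loop, which is the distinction the paragraph above draws between autonomic and merely autonomous behavior.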