Research Methods In Psychology

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

Research methods in psychology are systematic procedures used to observe, describe, predict, and explain behavior and mental processes. They include experiments, surveys, case studies, and naturalistic observations, ensuring data collection is objective and reliable to understand and explain psychological phenomena.

Hypotheses are testable statements that predict the results of an investigation; they can be supported or disproved by the evidence collected.

There are four types of hypotheses:

- Null Hypotheses (H0) – these predict that no difference will be found in the results between the conditions. Typically these are written ‘There will be no difference…’

- Alternative Hypotheses (Ha or H1) – these predict that there will be a significant difference in the results between the two conditions. This is also known as the experimental hypothesis.

- One-tailed (directional) hypotheses – these state the specific direction the researcher expects the results to move in, e.g. higher, lower, more, less. In a correlation study, the predicted direction of the correlation can be either positive or negative.

- Two-tailed (non-directional) hypotheses – these state that a difference will be found between the conditions of the independent variable but do not state the direction of the difference or relationship. Typically these are written ‘There will be a difference…’

All research has an alternative hypothesis (either one-tailed or two-tailed) and a corresponding null hypothesis.

Once the research is conducted and results are found, psychologists must accept one hypothesis and reject the other.

So, if a significant difference is found, the psychologist would accept the alternative hypothesis and reject the null. The opposite applies if no significant difference is found.

Sampling techniques

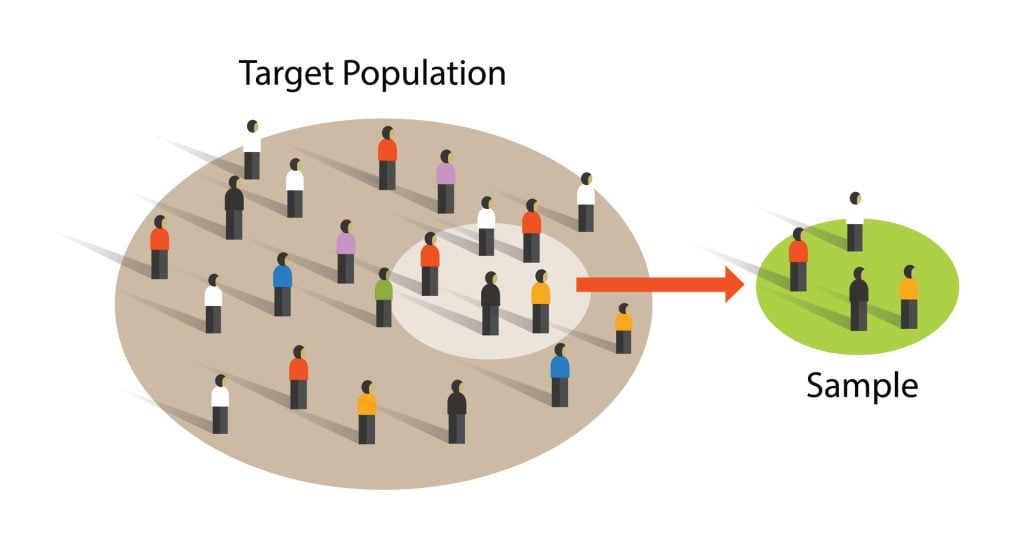

Sampling is the process of selecting a representative group from the population under study.

A sample is the participants you select from a target population (the group you are interested in) to make generalizations about.

Representative means the extent to which a sample mirrors a researcher’s target population and reflects its characteristics.

Generalisability means the extent to which a study’s findings can be applied to the larger population from which the sample was drawn.

- Volunteer sample: where participants select themselves by responding to newspaper adverts, noticeboards or online postings.

- Opportunity sampling: also known as convenience sampling, uses people who are available at the time the study is carried out and willing to take part. It is based on convenience.

- Random sampling: when every person in the target population has an equal chance of being selected. An example of random sampling would be picking names out of a hat.

- Systematic sampling: when a system is used to select participants, such as picking every Nth person from a list of all possible participants, where N = the number of people in the research population / the number of people needed for the sample.

- Stratified sampling: when you identify the subgroups (strata) within the population and select participants from each subgroup in proportion to its occurrence in the population.

- Snowball sampling: when researchers find a few participants, and then ask them to find further participants themselves, and so on.

- Quota sampling: when researchers are told to ensure the sample fits certain quotas; for example, they might be told to find 90 participants, with 30 of them being unemployed.
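Three of the techniques above (random, systematic and stratified sampling) can be sketched in a few lines of Python. This is only an illustration: the function names, the `seed` parameter and the idea of storing each person as a dictionary are assumptions made for the example, not part of any standard procedure.

```python
import random

def random_sample(population, k, seed=None):
    """Random sampling: every member has an equal chance of selection."""
    rng = random.Random(seed)
    return rng.sample(population, k)

def systematic_sample(population, k):
    """Systematic sampling: pick every Nth person, N = population size / sample size."""
    step = len(population) // k
    return population[::step][:k]

def stratified_sample(population, strata_key, k, seed=None):
    """Stratified sampling: sample each subgroup in proportion to its size.

    Rounding means the total can differ slightly from k when the
    proportions do not divide evenly.
    """
    rng = random.Random(seed)
    strata = {}
    for person in population:
        strata.setdefault(strata_key(person), []).append(person)
    sample = []
    for members in strata.values():
        quota = round(k * len(members) / len(population))
        sample.extend(rng.sample(members, quota))
    return sample

# Hypothetical target population: 60 employed and 40 unemployed people.
people = [{"id": i, "status": "employed" if i < 60 else "unemployed"}
          for i in range(100)]
print(len(random_sample(people, 10, seed=1)))
print([p["id"] for p in systematic_sample(people, 10)])
print(len(stratified_sample(people, lambda p: p["status"], 10, seed=1)))
```

With this made-up population, a stratified sample of 10 contains 6 employed and 4 unemployed people, mirroring the 60/40 split in the population.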

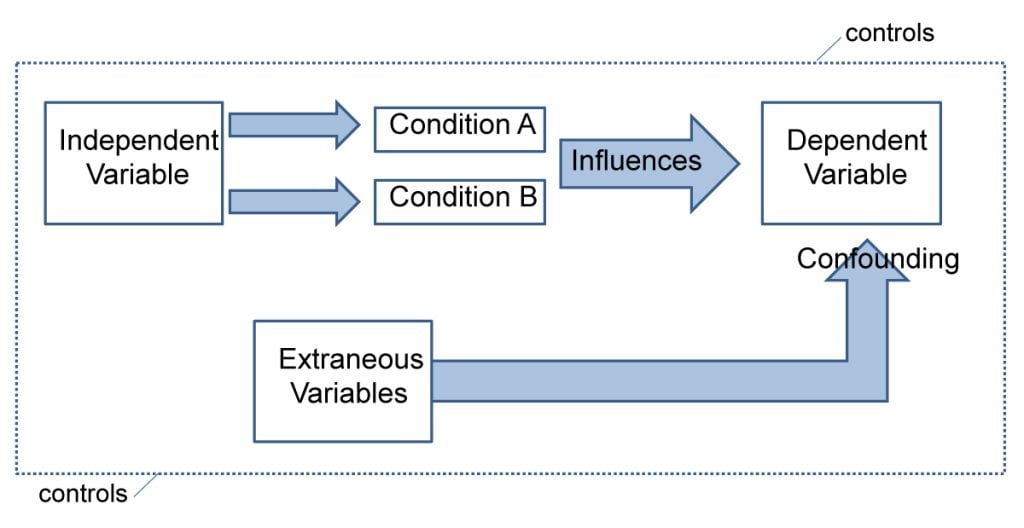

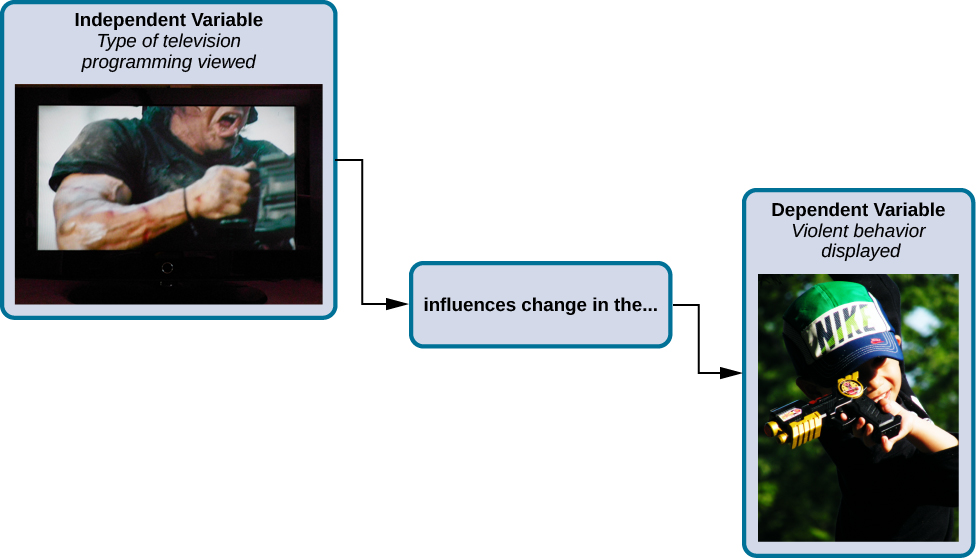

Experiments always have an independent and a dependent variable.

- The independent variable is the one the experimenter manipulates (the thing that changes between the conditions the participants are placed into). It is assumed to have a direct effect on the dependent variable.

- The dependent variable is the thing being measured, or the results of the experiment.

Operationalization of variables means making them measurable/quantifiable. We must use operationalization to ensure that variables are in a form that can be easily tested.

For instance, we can’t really measure ‘happiness’, but we can measure how many times a person smiles within a two-hour period.

By operationalizing variables, we make it easy for someone else to replicate our research. Remember, this is important because we can check if our findings are reliable.

Extraneous variables are all variables other than the independent variable that could affect the results of the experiment.

An extraneous variable can be a natural characteristic of the participant, such as intelligence level, gender, or age, or it can be a situational feature of the environment, such as lighting or noise.

Demand characteristics are a type of extraneous variable that occurs when participants work out the aims of the research study and begin to behave in a certain way as a result.

For example, in Milgram’s research, critics argued that participants worked out that the shocks were not real and administered them because they thought this was what was required of them.

Extraneous variables must be controlled so that they do not affect (confound) the results.

Randomly allocating participants to their conditions or using a matched pairs experimental design can help to reduce participant variables.

Situational variables are controlled by using standardized procedures, ensuring every participant in a given condition is treated in the same way.

Experimental Design

Experimental design refers to how participants are allocated to each condition of the independent variable, such as a control or experimental group.

- Independent design (between-groups design): each participant is selected for only one group. With the independent design, the most common way of deciding which participants go into which group is by means of randomization.

- Matched participants design: each participant is selected for only one group, but the participants in the two groups are matched for some relevant factor or factors (e.g. ability; sex; age).

- Repeated measures design (within-groups design): each participant appears in both groups, so that there are exactly the same participants in each group.

- The main problem with the repeated measures design is that there may well be order effects. Their experiences during the experiment may change the participants in various ways.

- They may perform better when they appear in the second group because they have gained useful information about the experiment or about the task. On the other hand, they may perform less well on the second occasion because of tiredness or boredom.

- Counterbalancing is the best way of preventing order effects from disrupting the findings of an experiment, and involves ensuring that each condition is equally likely to be used first and second by the participants.
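Counterbalancing for a two-condition repeated measures design can be sketched as follows. The function name, the condition labels "A" and "B", and the `seed` parameter are hypothetical; the sketch simply shuffles the participants and alternates the two possible orders so that each condition comes first for half of the group.

```python
import random

def counterbalance(participants, conditions=("A", "B"), seed=None):
    """Assign half the participants to order A-then-B and half to B-then-A."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)  # random allocation to an order
    orders = [tuple(conditions), tuple(reversed(conditions))]
    # Alternate the two orders down the shuffled list.
    return {person: orders[i % 2] for i, person in enumerate(shuffled)}

allocation = counterbalance([f"P{i}" for i in range(1, 9)], seed=42)
for person, order in sorted(allocation.items()):
    print(person, "->", " then ".join(order))
```

With an even number of participants, exactly half complete condition A first and half complete condition B first, so any practice or fatigue effects are spread equally across the two conditions.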

If we wish to compare two groups with respect to a given independent variable, it is essential to make sure that the two groups do not differ in any other important way.

Experimental Methods

All experimental methods involve an IV (independent variable) and a DV (dependent variable).

- Field experiments are conducted in the everyday (natural) environment of the participants. The experimenter still manipulates the IV, but in a real-life setting. It may be possible to control extraneous variables, though such control is more difficult than in a lab experiment.

- Natural experiments investigate a naturally occurring IV that is not deliberately manipulated by the researcher; it exists anyway. Participants are not randomly allocated, and the natural event may only occur rarely.

Case studies are in-depth investigations of a person, group, event, or community. They use information from a range of sources, such as the person concerned and their family and friends.

Many techniques may be used such as interviews, psychological tests, observations and experiments. Case studies are generally longitudinal: in other words, they follow the individual or group over an extended period of time.

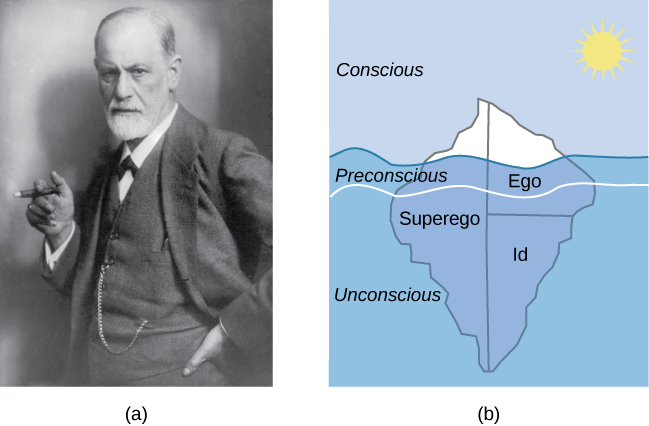

Case studies are widely used in psychology, and among the best-known are those carried out by Sigmund Freud. He conducted very detailed investigations into the private lives of his patients in an attempt to both understand and help them overcome their illnesses.

Case studies provide rich qualitative data and have high levels of ecological validity. However, it is difficult to generalize from individual cases as each one has unique characteristics.

Correlational Studies

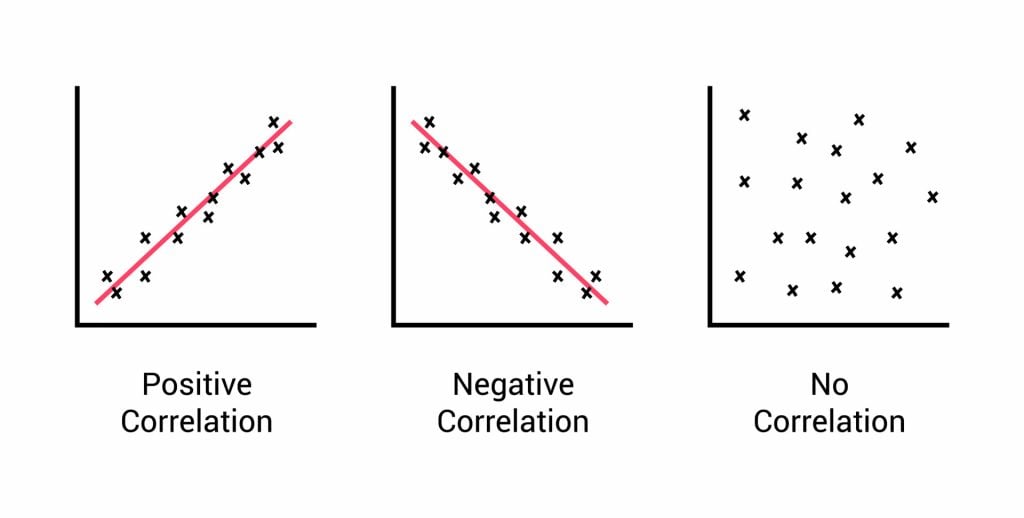

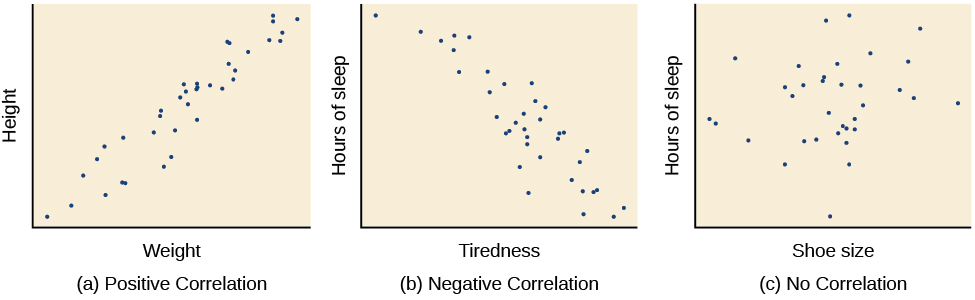

Correlation means association; it is a measure of the extent to which two variables are related. One of the variables can be regarded as the predictor variable with the other one as the outcome variable.

Correlational studies typically involve obtaining two different measures from a group of participants, and then assessing the degree of association between the measures.

The predictor variable can be seen as occurring before the outcome variable in some sense. It is called the predictor variable, because it forms the basis for predicting the value of the outcome variable.

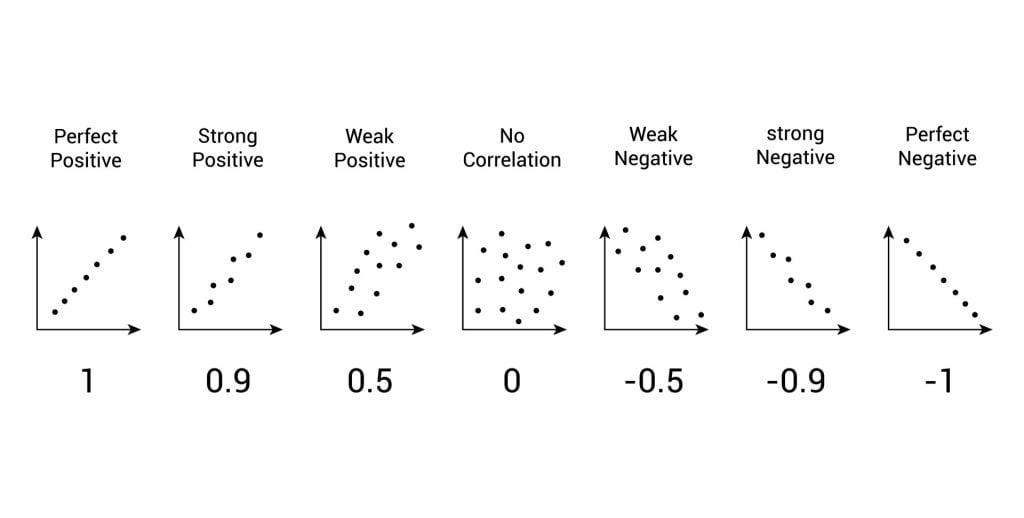

Relationships between variables can be displayed on a graph or as a numerical score called a correlation coefficient.

- If an increase in one variable tends to be associated with an increase in the other, then this is known as a positive correlation.

- If an increase in one variable tends to be associated with a decrease in the other, then this is known as a negative correlation.

- A zero correlation occurs when there is no relationship between variables.

After looking at the scattergraph, if we want to be sure that a significant relationship does exist between the two variables, a statistical test of correlation can be conducted, such as Spearman’s rho.

The test will give us a score, called a correlation coefficient. This is a value between -1 and +1, and the closer the score is to -1 or +1, the stronger the relationship between the variables. The value can be positive (e.g. 0.63) or negative (e.g. -0.63).
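As a rough illustration of how a coefficient such as Spearman’s rho is obtained, the sketch below ranks each set of scores and then correlates the ranks. The helper names (`ranks`, `pearson`, `spearman_rho`) and the data are invented for the example; it returns only the coefficient, and judging significance would still require comparing it with a critical value for the sample size.

```python
def ranks(values):
    """Rank scores from 1 upwards, giving tied scores their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over any run of tied scores.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result

def pearson(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = sum((a - mean_x) ** 2 for a in x) ** 0.5
    sd_y = sum((b - mean_y) ** 2 for b in y) ** 0.5
    return cov / (sd_x * sd_y)

def spearman_rho(x, y):
    """Spearman's rho: the Pearson correlation of the ranked scores."""
    return pearson(ranks(x), ranks(y))

# Hypothetical data: hours of revision and test score for six students.
hours = [2, 4, 5, 7, 8, 10]
score = [35, 50, 48, 62, 70, 81]
print(round(spearman_rho(hours, score), 2))
```

A coefficient close to +1 here would indicate a strong positive correlation between revision time and test score in this made-up data set; it would not show that revision caused the higher scores.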

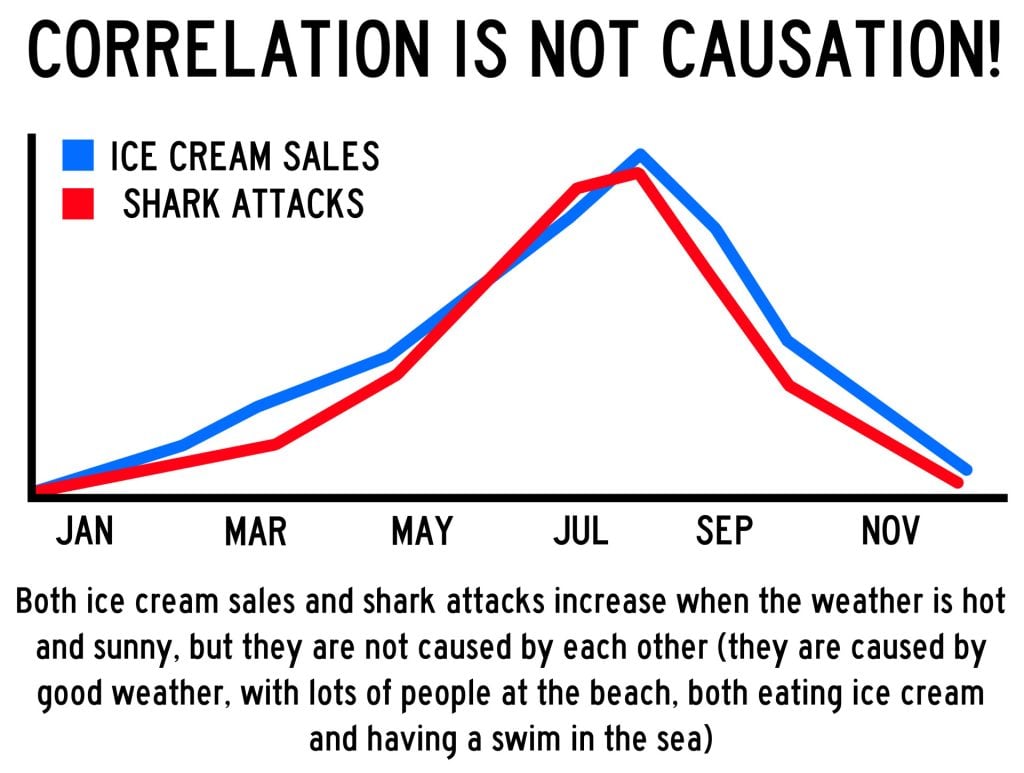

A correlation between variables, however, does not automatically mean that the change in one variable is the cause of the change in the values of the other variable. A correlation only shows if there is a relationship between variables.

Correlation does not prove causation, as a third variable may be involved.

Interview Methods

Interviews are commonly divided into two types: structured and unstructured.

In a structured interview, a fixed, predetermined set of questions is put to every participant in the same order and in the same way.

Responses are recorded on a questionnaire, and the researcher presets the order and wording of questions, and sometimes the range of alternative answers.

The interviewer stays within their role and maintains social distance from the interviewee.

In an unstructured interview, there are no set questions, and the participant can raise whatever topics they feel are relevant and discuss them in their own way. Follow-up questions are posed in response to the participant’s answers.

Unstructured interviews are most useful in qualitative research to analyze attitudes and values.

Though they rarely provide a valid basis for generalization, their main advantage is that they enable the researcher to probe social actors’ subjective point of view.

Questionnaire Method

Questionnaires can be thought of as a kind of written interview. They can be carried out face to face, by telephone, or post.

The choice of questions is important because of the need to avoid bias or ambiguity in the questions, ‘leading’ the respondent or causing offense.

- Open questions are designed to encourage a full, meaningful answer using the subject’s own knowledge and feelings. They provide insights into feelings, opinions, and understanding. Example: “How do you feel about that situation?”

- Closed questions can be answered with a simple “yes” or “no” or specific information, limiting the depth of response. They are useful for gathering specific facts or confirming details. Example: “Do you feel anxious in crowds?”

Questionnaires also have practical advantages: they are cheaper than face-to-face interviews and can be used to contact many respondents scattered over a wide area relatively quickly.

Observations

There are different types of observation methods:

- Covert observation is where the researcher doesn’t tell the participants they are being observed until after the study is complete. This method can raise ethical problems of deception and consent.

- Overt observation is where a researcher tells the participants they are being observed and what they are being observed for.

- Controlled : behavior is observed under controlled laboratory conditions (e.g., Bandura’s Bobo doll study).

- Natural : Here, spontaneous behavior is recorded in a natural setting.

- Participant : Here, the observer has direct contact with the group of people they are observing. The researcher becomes a member of the group they are researching.

- Non-participant (aka “fly on the wall”): The researcher does not have direct contact with the people being observed. The observation of participants’ behavior is from a distance.

Pilot Study

A pilot study is a small-scale preliminary study conducted in order to evaluate the feasibility of the key steps in a future, full-scale project.

A pilot study is an initial run-through of the procedures to be used in an investigation; it involves selecting a few people and trying out the study on them. It is possible to save time, and in some cases, money, by identifying any flaws in the procedures designed by the researcher.

A pilot study can help the researcher spot any ambiguities (i.e. unclear wording or instructions) or confusion in the information given to participants, or problems with the task devised.

Sometimes the task is too hard, and the researcher may get a floor effect, because none of the participants can score at all or can complete the task – all performances are low.

The opposite effect is a ceiling effect, when the task is so easy that all achieve virtually full marks or top performances and are “hitting the ceiling”.

Research Design

In cross-sectional research, a researcher compares multiple segments of the population at the same time.

Sometimes, we want to see how people change over time, as in studies of human development and lifespan. Longitudinal research is a research design in which data-gathering is administered repeatedly over an extended period of time.

In cohort studies, the participants must share a common factor or characteristic such as age, demographic, or occupation. A cohort study is a type of longitudinal study in which researchers monitor and observe a chosen population over an extended period.

Triangulation means using more than one research method to improve the study’s validity.

Reliability

Reliability is a measure of consistency: if a particular measurement is repeated and the same result is obtained, then it is described as being reliable.

- Test-retest reliability: assessing the same person on two different occasions, which shows the extent to which the test produces the same answers.

- Inter-observer reliability: the extent to which there is agreement between two or more observers.
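Inter-observer reliability can be given a number by comparing the behavioral categories two observers record for the same events. The sketch below uses invented data and hypothetical function names. It reports simple percentage agreement and, as an additional statistic not mentioned above, Cohen’s kappa, which adjusts that agreement for the amount expected by chance.

```python
from collections import Counter

def percent_agreement(obs1, obs2):
    """Proportion of events on which both observers chose the same category."""
    matches = sum(a == b for a, b in zip(obs1, obs2))
    return matches / len(obs1)

def cohens_kappa(obs1, obs2):
    """Agreement corrected for the agreement expected by chance alone."""
    n = len(obs1)
    p_observed = percent_agreement(obs1, obs2)
    counts1, counts2 = Counter(obs1), Counter(obs2)
    # Chance agreement: product of each observer's category proportions.
    p_chance = sum(counts1[cat] * counts2[cat] for cat in counts1) / (n * n)
    return (p_observed - p_chance) / (1 - p_chance)

# Hypothetical codings of eight playground incidents by two observers.
observer_1 = ["hit", "hit", "push", "hit", "push", "push", "hit", "hit"]
observer_2 = ["hit", "hit", "push", "push", "push", "push", "hit", "hit"]
print(percent_agreement(observer_1, observer_2))
print(cohens_kappa(observer_1, observer_2))
```

In this made-up example the observers agree on 7 of the 8 incidents. Test-retest reliability could be checked in a similar spirit by correlating scores from the two testing occasions.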

Meta-Analysis

A meta-analysis is a systematic review that involves identifying an aim and then searching for research studies that have addressed similar aims/hypotheses.

This is done by looking through various databases, and then decisions are made about what studies are to be included/excluded.

Strengths: Increases the validity of the conclusions, as they are based on a wider range of studies and participants.

Weaknesses: Research designs in studies can vary, so they are not truly comparable.

Peer Review

A researcher submits an article to a journal. The choice of the journal may be determined by the journal’s audience or prestige.

The journal selects two or more appropriate experts (psychologists working in a similar field) to peer review the article without payment. The peer reviewers assess: the methods and designs used, originality of the findings, the validity of the original research findings and its content, structure and language.

Feedback from the reviewers determines whether the article is accepted. The article may be accepted as it is, accepted with revisions, sent back to the author to revise and resubmit, or rejected without the possibility of resubmission.

The editor makes the final decision whether to accept or reject the research report based on the reviewers’ comments and recommendations.

Peer review is important because it prevents faulty data from entering the public domain, provides a way of checking the validity of findings and the quality of the methodology, and is used to assess the research rating of university departments.

Peer review may be an ideal, whereas in practice there are many problems. For example, it slows publication down and may prevent unusual, new work being published. Some reviewers might use it as an opportunity to prevent competing researchers from publishing work.

Some people doubt whether peer review can really prevent the publication of fraudulent research.

The advent of the internet means that more research and academic comment is being published without official peer review than before, though systems are evolving on the internet where everyone has a chance to offer their opinions and police the quality of research.

Types of Data

- Quantitative data is numerical data, e.g. reaction time or number of mistakes. It represents how much, how long, or how many there are of something. A tally of behavioral categories and closed questions in a questionnaire collect quantitative data.

- Qualitative data is virtually any type of information that can be observed and recorded that is not numerical in nature and can be in the form of written or verbal communication. Open questions in questionnaires and accounts from observational studies collect qualitative data.

- Primary data is first-hand data collected for the purpose of the investigation.

- Secondary data is information that has been collected by someone other than the person who is conducting the research e.g. taken from journals, books or articles.

Validity means how well a piece of research actually measures what it sets out to, or how well it reflects the reality it claims to represent.

Validity is whether the observed effect is genuine and represents what is actually out there in the world.

- Concurrent validity is the extent to which a psychological measure relates to an existing similar measure and obtains close results. For example, a new intelligence test compared to an established test.

- Face validity: does the test measure what it’s supposed to measure ‘on the face of it’? This is done by ‘eyeballing’ the measure or by passing it to an expert to check.

- Ecological validity is the extent to which findings from a research study can be generalized to other settings / real life.

- Temporal validity is the extent to which findings from a research study can be generalized to other historical times.

Features of Science

- Paradigm – A set of shared assumptions and agreed methods within a scientific discipline.

- Paradigm shift – The result of a scientific revolution: a significant change in the dominant unifying theory within a scientific discipline.

- Objectivity – When all sources of personal bias are minimised so as not to distort or influence the research process.

- Empirical method – Scientific approaches that are based on the gathering of evidence through direct observation and experience.

- Replicability – The extent to which scientific procedures and findings can be repeated by other researchers.

- Falsifiability – The principle that a theory cannot be considered scientific unless it admits the possibility of being proved untrue.

Statistical Testing

A significant result is one where there is a low probability that chance factors were responsible for any observed difference, correlation, or association in the variables tested.

If our test is significant, we can reject our null hypothesis and accept our alternative hypothesis.

If our test is not significant, we can accept our null hypothesis and reject our alternative hypothesis. A null hypothesis is a statement of no effect.

In psychology, the conventional significance level is p < 0.05 (as it strikes a balance between the risks of making a type I and a type II error), but the stricter p < 0.01 is used where an error could cause harm, such as when introducing a new drug.

A type I error is when the null hypothesis is rejected when it should have been accepted (happens when a lenient significance level is used, an error of optimism).

A type II error is when the null hypothesis is accepted when it should have been rejected (happens when a stringent significance level is used, an error of pessimism).
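The decision rule described in this section can be illustrated with a small worked example. The sketch below uses an exact permutation test, chosen here only because it needs nothing beyond the standard library; it is not one of the named tests usually taught for this topic. The function names and the scores are hypothetical. The test asks how often a difference in means at least as large as the observed one would arise if the scores were shuffled between the groups at random, and that proportion is the p value.

```python
from itertools import combinations
from statistics import mean

def exact_permutation_test(group_a, group_b):
    """Two-tailed p value for the difference in means between two groups."""
    pooled = list(group_a) + list(group_b)
    n_a, n_b = len(group_a), len(group_b)
    observed = abs(mean(group_a) - mean(group_b))
    total = sum(pooled)
    extreme = 0
    arrangements = 0
    # Try every possible way of splitting the pooled scores into two groups.
    for indices in combinations(range(len(pooled)), n_a):
        sum_a = sum(pooled[i] for i in indices)
        difference = abs(sum_a / n_a - (total - sum_a) / n_b)
        if difference >= observed - 1e-12:  # tolerance for float rounding
            extreme += 1
        arrangements += 1
    return extreme / arrangements

def decide(p_value, alpha=0.05):
    """Apply the significance level: p below alpha means a significant result."""
    if p_value < alpha:
        return "reject the null hypothesis"
    return "accept the null hypothesis"

# Hypothetical recall scores for a control and an experimental condition.
control = [1, 2, 3, 4, 5]
experimental = [6, 7, 8, 9, 10]
p = exact_permutation_test(control, experimental)
print(round(p, 4), "->", decide(p))
```

Passing a smaller `alpha` such as 0.01 makes the test more stringent, which lowers the risk of a type I error but raises the risk of a type II error.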

Ethical Issues

- Informed consent is when participants are able to make an informed judgment about whether to take part. However, it may cause them to guess the aims of the study and change their behavior.

- To deal with it, we can gain presumptive consent or ask them to formally indicate their agreement to participate but it may invalidate the purpose of the study and it is not guaranteed that the participants would understand.

- Deception should only be used when it is approved by an ethics committee, as it involves deliberately misleading or withholding information. Participants should be fully debriefed after the study but debriefing can’t turn the clock back.

- All participants should be informed at the beginning that they have the right to withdraw if they ever feel distressed or uncomfortable.

- The right to withdraw can bias the sample, as those who stay may be more obedient, and some may not withdraw because they have been given incentives or feel that they would be spoiling the study. Researchers can also offer the right to withdraw data after participation.

- Participants should all have protection from harm . The researcher should avoid risks greater than those experienced in everyday life and they should stop the study if any harm is suspected. However, the harm may not be apparent at the time of the study.

- Confidentiality concerns the communication of personal information. Researchers should not record any names but use numbers or false names, though full confidentiality may not be achievable, as it is sometimes possible to work out who the participants were.

2.2 Research Methods

Learning Objectives

By the end of this section, you should be able to:

- Recall the 6 Steps of the Scientific Method

- Differentiate between four kinds of research methods: surveys, field research, experiments, and secondary data analysis.

- Explain the appropriateness of specific research approaches for specific topics.

Sociologists examine the social world, see a problem or interesting pattern, and set out to study it. They use research methods to design a study. Planning the research design is a key step in any sociological study. Sociologists generally choose from widely used methods of social investigation: primary source data collection such as survey, participant observation, ethnography, case study, unobtrusive observations, and experiment, or secondary data analysis (the use of existing sources). Every research method comes with pluses and minuses, and the topic of study strongly influences which method or methods are put to use. When you are conducting research, think about the best way to gather or obtain knowledge about your topic, and think of yourself as an architect. An architect needs a blueprint to build a house; as a sociologist, your blueprint is your research design, including your data collection method.

When entering a particular social environment, a researcher must be careful. There are times to remain anonymous and times to be overt. There are times to conduct interviews and times to simply observe. Some participants need to be thoroughly informed; others should not know they are being observed. A researcher wouldn’t stroll into a crime-ridden neighborhood at midnight, calling out, “Any gang members around?”

Making sociologists’ presence invisible is not always realistic for other reasons. That option is not available to a researcher studying prison behaviors, early education, or the Ku Klux Klan. Researchers can’t just stroll into prisons, kindergarten classrooms, or Klan meetings and unobtrusively observe behaviors or attract attention. In situations like these, other methods are needed. Researchers choose methods that best suit their study topics, protect research participants or subjects, and that fit with their overall approaches to research.

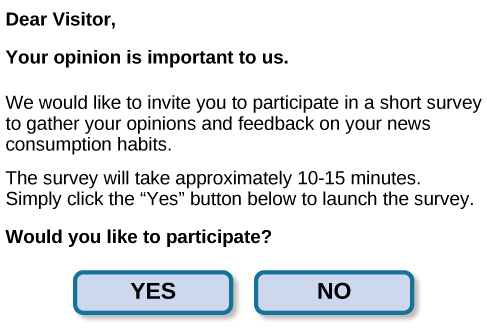

As a research method, a survey collects data from subjects who respond to a series of questions about behaviors and opinions, often in the form of a questionnaire or an interview. The survey is one of the most widely used scientific research methods. The standard survey format allows individuals a level of anonymity in which they can express personal ideas.

At some point, most people in the United States respond to some type of survey. The 2020 U.S. Census is an excellent example of a large-scale survey intended to gather sociological data. Since 1790, the United States has conducted a survey, originally consisting of six questions, to gather demographic data about the residents who live in the country. Currently, the Census is received by residents in the United States and five territories and consists of 12 questions.

Not all surveys are considered sociological research, however, and many surveys people commonly encounter focus on identifying marketing needs and strategies rather than testing a hypothesis or contributing to social science knowledge. Questions such as, “How many hot dogs do you eat in a month?” or “Were the staff helpful?” are not usually designed as scientific research. The Nielsen Ratings determine the popularity of television programming through scientific market research. However, polls conducted by television programs such as American Idol or So You Think You Can Dance cannot be generalized, because they are administered to an unrepresentative population, a specific show’s audience. You might receive polls through your cell phones or emails, from grocery stores, restaurants, and retail stores. They often provide you incentives for completing the survey.

Sociologists conduct surveys under controlled conditions for specific purposes. Surveys gather different types of information from people. While surveys are not great at capturing the ways people really behave in social situations, they are a great method for discovering how people feel, think, and act—or at least how they say they feel, think, and act. Surveys can track preferences for presidential candidates or reported individual behaviors (such as sleeping, driving, or texting habits) or information such as employment status, income, and education levels.

A survey targets a specific population, people who are the focus of a study, such as college athletes, international students, or teenagers living with type 1 (juvenile-onset) diabetes. Most researchers choose to survey a small sector of the population, or a sample, a manageable number of subjects who represent a larger population. The success of a study depends on how well a population is represented by the sample. In a random sample, every person in a population has the same chance of being chosen for the study. As a result, a Gallup Poll, if conducted as a nationwide random sampling, should be able to provide an accurate estimate of public opinion whether it contacts 2,000 or 10,000 people.
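The claim that 2,000 or 10,000 respondents both give an accurate estimate can be checked with the standard margin-of-error formula for a proportion. The sketch below rests on assumptions not stated in the text: a simple random sample, a 95 percent confidence level (z = 1.96), and the worst case of a 50/50 split in opinion. The function name is hypothetical.

```python
import math

def margin_of_error(n, p=0.5, z=1.96):
    """Approximate 95% margin of error for a proportion from a random sample of size n."""
    return z * math.sqrt(p * (1 - p) / n)

for n in (2000, 10000):
    print(f"n = {n}: +/- {margin_of_error(n) * 100:.1f} percentage points")
```

Under these assumptions, a sample of 2,000 is accurate to within roughly 2 percentage points and a sample of 10,000 to within roughly 1, so contacting five times as many people buys only a modest gain in precision.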

After selecting subjects, the researcher develops a specific plan to ask questions and record responses. It is important to inform subjects of the nature and purpose of the survey up front. If they agree to participate, researchers thank subjects and offer them a chance to see the results of the study if they are interested. The researcher presents the subjects with an instrument, which is a means of gathering the information.

A common instrument is a questionnaire. Subjects often answer a series of closed-ended questions. The researcher might ask yes-or-no or multiple-choice questions, allowing subjects to choose possible responses to each question. This kind of questionnaire collects quantitative data: data in numerical form that can be counted and statistically analyzed. Just count up the number of “yes” and “no” responses or correct answers, and chart them into percentages.
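Counting closed-ended responses and converting them to percentages takes only a few lines. The function name and the responses below are invented for illustration.

```python
from collections import Counter

def response_percentages(responses):
    """Tally each answer and express it as a percentage of all responses."""
    counts = Counter(responses)
    total = len(responses)
    return {answer: 100 * count / total for answer, count in counts.items()}

# Hypothetical answers to one yes-or-no question.
answers = ["yes", "no", "yes", "yes"]
print(response_percentages(answers))
```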

Questionnaires can also ask more complex questions with more complex answers, beyond “yes,” “no,” or checkbox options. These types of inquiries use open-ended questions that require short essay responses. Participants willing to take the time to write those answers might convey personal religious beliefs, political views, goals, or morals. The answers are subjective and vary from person to person. An example of an open-ended question is “How do you plan to use your college education?”

Some topics that investigate internal thought processes are impossible to observe directly and are difficult to discuss honestly in a public forum. People are more likely to share honest answers if they can respond to questions anonymously. This type of personal explanation is qualitative data, conveyed through words. Qualitative information is harder to organize and tabulate. The researcher will end up with a wide range of responses, some of which may be surprising. The benefit of written opinions, though, is the wealth of in-depth material that they provide.

An interview is a one-on-one conversation between the researcher and the subject, and it is a way of conducting surveys on a topic. However, participants are free to respond as they wish, without being limited by predetermined choices. In the back-and-forth conversation of an interview, a researcher can ask for clarification, spend more time on a subtopic, or ask additional questions. In an interview, a subject will ideally feel free to open up and answer questions that are often complex. There are no right or wrong answers. The subject might not even know how to answer the questions honestly.

Questions such as “How does society’s view of alcohol consumption influence your decision whether or not to take your first sip of alcohol?” or “Did you feel that the divorce of your parents would put a social stigma on your family?” involve so many factors that the answers are difficult to categorize. A researcher needs to avoid steering or prompting the subject to respond in a specific way; otherwise, the results will prove to be unreliable. The researcher will also benefit from gaining a subject’s trust, from empathizing or commiserating with a subject, and from listening without judgment.

Surveys often collect both quantitative and qualitative data. For example, a researcher interviewing people who are incarcerated might receive quantitative data, such as demographics (race, age, sex), that can be analyzed statistically. The researcher might discover, for instance, that 20 percent of incarcerated people are above the age of 50. The researcher might also collect qualitative data, such as why people take advantage of educational opportunities during their sentence and other explanatory information.

The survey can be carried out online, over the phone, by mail, or face-to-face. When researchers collect data outside a laboratory, library, or workplace setting, they are conducting field research, which is our next topic.

Field Research

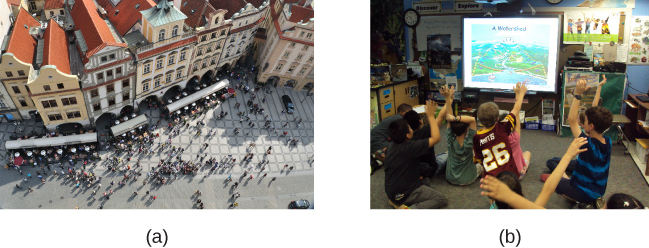

The work of sociology rarely happens in limited, confined spaces. Rather, sociologists go out into the world. They meet subjects where they live, work, and play. Field research refers to gathering primary data from a natural environment. To conduct field research, the sociologist must be willing to step into new environments and observe, participate, or experience those worlds. In field work, the sociologists, rather than the subjects, are the ones out of their element.

The researcher interacts with or observes people and gathers data along the way. The key point in field research is that it takes place in the subject’s natural environment, whether it’s a coffee shop or tribal village, a homeless shelter or the DMV, a hospital, airport, mall, or beach resort.

While field research often begins in a specific setting, the study’s purpose is to observe specific behaviors in that setting. Field work is optimal for observing how people think and behave. It seeks to understand why they behave that way. However, researchers may struggle to narrow down cause and effect when there are so many variables floating around in a natural environment. And while field research looks for correlation, its small sample size does not allow for establishing a causal relationship between two variables. Indeed, much of the data gathered in sociology do not identify a cause and effect but a correlation.

Sociology in the Real World

Beyoncé and Lady Gaga as Sociological Subjects

Sociologists have studied Lady Gaga and Beyoncé and their impact on music, movies, social media, fan participation, and social equality. In their studies, researchers have used several research methods including secondary analysis, participant observation, and surveys from concert participants.

In their study, Click, Lee & Holiday (2013) interviewed 45 Lady Gaga fans who utilized social media to communicate with the artist. These fans viewed Lady Gaga as a mirror of themselves and a source of inspiration. Like her, they embrace not being a part of mainstream culture. Many of Lady Gaga’s fans are members of the LGBTQ community. They see the song “Born This Way” as a rallying cry and answer her calls for “Paws Up” with a physical expression of solidarity: outstretched arms and fingers bent and curled to resemble monster claws.

Sascha Buchanan (2019) made use of participant observation to study the relationship between two fan groups, that of Beyoncé and that of Rihanna. She observed award shows sponsored by iHeartRadio, MTV EMA, and BET that pit one group against another as they competed for Best Fan Army, Biggest Fans, and FANdemonium. Buchanan argues that the media thus sustains a myth of rivalry between the two most commercially successful Black women vocal artists.

Participant Observation

In 2000, a comic writer named Rodney Rothman wanted an insider’s view of white-collar work. He slipped into the sterile, high-rise offices of a New York “dot com” agency. Every day for two weeks, he pretended to work there. His main purpose was simply to see whether anyone would notice him or challenge his presence. No one did. The receptionist greeted him. The employees smiled and said good morning. Rothman was accepted as part of the team. He even went so far as to claim a desk, inform the receptionist of his whereabouts, and attend a meeting. He published an article about his experience in The New Yorker called “My Fake Job” (2000). Later, he was discredited for allegedly fabricating some details of the story and The New Yorker issued an apology. However, Rothman’s entertaining article still offered fascinating descriptions of the inside workings of a “dot com” company and exemplified the lengths to which a writer, or a sociologist, will go to uncover material.

Rothman had conducted a form of study called participant observation, in which researchers join people and participate in a group’s routine activities for the purpose of observing them within that context. This method lets researchers experience a specific aspect of social life. A researcher might go to great lengths to get a firsthand look into a trend, institution, or behavior. A researcher might work as a waitress in a diner, experience homelessness for several weeks, or ride along with police officers as they patrol their regular beat. Often, these researchers try to blend in seamlessly with the population they study, and they may not disclose their true identity or purpose if they feel it would compromise the results of their research.

At the beginning of a field study, researchers might have a question: “What really goes on in the kitchen of the most popular diner on campus?” or “What is it like to be homeless?” Participant observation is a useful method if the researcher wants to explore a certain environment from the inside.

Field researchers simply want to observe and learn. In such a setting, the researcher will be alert and open minded to whatever happens, recording all observations accurately. Soon, as patterns emerge, questions will become more specific, observations will lead to hypotheses, and hypotheses will guide the researcher in analyzing data and generating results.

In a study of small towns in the United States conducted by sociological researchers Robert S. Lynd and Helen Merrell Lynd, the team altered their purpose as they gathered data. They initially planned to focus their study on the role of religion in U.S. towns. As they gathered observations, they realized that the effect of industrialization and urbanization was the more relevant topic of this social group. The Lynds did not change their methods, but they revised the purpose of their study.

This shaped the structure of Middletown: A Study in Modern American Culture, their published results (Lynd & Lynd, 1929).

The Lynds were upfront about their mission. The townspeople of Muncie, Indiana, knew why the researchers were in their midst. But some sociologists prefer not to alert people to their presence. The main advantage of covert participant observation is that it allows the researcher access to authentic, natural behaviors of a group’s members. The challenge, however, is gaining access to a setting without disrupting the pattern of others’ behavior. Becoming an inside member of a group, organization, or subculture takes time and effort. Researchers must pretend to be something they are not. The process could involve role playing, making contacts, networking, or applying for a job.

Once inside a group, some researchers spend months or even years pretending to be one of the people they are observing. However, as observers, they cannot get too involved. They must keep their purpose in mind and apply the sociological perspective. That way, they illuminate social patterns that are often unrecognized. Because information gathered during participant observation is mostly qualitative, rather than quantitative, the end results are often descriptive or interpretive. The researcher might present findings in an article or book and describe what he or she witnessed and experienced.

This type of research is what journalist Barbara Ehrenreich conducted for her book Nickel and Dimed. One day over lunch with her editor, Ehrenreich mentioned an idea. How can people exist on minimum-wage work? How do low-income workers get by? she wondered. Someone should do a study. To her surprise, her editor responded, Why don’t you do it?

That’s how Ehrenreich found herself joining the ranks of the working class. For several months, she left her comfortable home and lived and worked among people who lacked, for the most part, higher education and marketable job skills. Undercover, she applied for and worked minimum wage jobs as a waitress, a cleaning woman, a nursing home aide, and a retail chain employee. During her participant observation, she used only her income from those jobs to pay for food, clothing, transportation, and shelter.

She discovered the obvious, that it’s almost impossible to get by on minimum wage work. She also experienced and observed attitudes many middle and upper-class people never think about. She witnessed firsthand the treatment of working class employees. She saw the extreme measures people take to make ends meet and to survive. She described fellow employees who held two or three jobs, worked seven days a week, lived in cars, could not pay to treat chronic health conditions, got randomly fired, submitted to drug tests, and moved in and out of homeless shelters. She brought aspects of that life to light, describing difficult working conditions and the poor treatment that low-wage workers suffer.

The book she wrote upon her return to her real life as a well-paid writer has been widely read and used in many college classrooms.

Ethnography

Ethnography is the immersion of the researcher in the natural setting of an entire social community to observe and experience their everyday life and culture. The heart of an ethnographic study focuses on how subjects view their own social standing and how they understand themselves in relation to a social group.

An ethnographic study might observe, for example, a small U.S. fishing town, an Inuit community, a village in Thailand, a Buddhist monastery, a private boarding school, or an amusement park. These places all have borders. People live, work, study, or vacation within those borders. People are there for a certain reason and therefore behave in certain ways and respect certain cultural norms. An ethnographer would commit to spending a determined amount of time studying every aspect of the chosen place, taking in as much as possible.

A sociologist studying a tribe in the Amazon might watch the way villagers go about their daily lives and then write a paper about it. To observe a spiritual retreat center, an ethnographer might sign up for a retreat and attend as a guest for an extended stay, observe and record data, and collate the material into results.

Institutional Ethnography

Institutional ethnography is an extension of basic ethnographic research principles that focuses intentionally on everyday concrete social relationships. Developed by Canadian sociologist Dorothy E. Smith (1990), institutional ethnography is often considered a feminist-inspired approach to social analysis and primarily considers women’s experiences within male-dominated societies and power structures. Smith’s work is seen to challenge sociology’s exclusion of women, both academically and in the study of women’s lives (Fenstermaker, n.d.).

Historically, social science research tended to objectify women and ignore their experiences except as viewed from the male perspective. Modern feminists note that describing women, and other marginalized groups, as subordinates helps those in authority maintain their own dominant positions (Social Sciences and Humanities Research Council of Canada, n.d.). Smith’s three major works explored what she called “the conceptual practices of power” and are still considered seminal works in feminist theory and ethnography (Fenstermaker, n.d.).

Sociological Research

The Making of Middletown: A Study in Modern U.S. Culture

In 1924, a young married couple named Robert and Helen Lynd undertook an unprecedented ethnography: to apply sociological methods to the study of one U.S. city in order to discover what “ordinary” people in the United States did and believed. Choosing Muncie, Indiana (population about 30,000) as their subject, they moved to the small town and lived there for eighteen months.

Ethnographers had been examining other cultures for decades—groups considered minorities or outsiders—like gangs, immigrants, and the poor. But no one had studied the so-called average American.

Recording interviews and using surveys to gather data, the Lynds objectively described what they observed. Researching existing sources, they compared Muncie in 1890 to the Muncie they observed in 1924. Most Muncie adults, they found, had grown up on farms but now lived in homes inside the city. As a result, the Lynds focused their study on the impact of industrialization and urbanization.

They observed that Muncie was divided into business and working class groups. They defined business class as dealing with abstract concepts and symbols, while working class people used tools to create concrete objects. The two classes led different lives with different goals and hopes. However, the Lynds observed, mass production offered both classes the same amenities. Like wealthy families, the working class was now able to own radios, cars, washing machines, telephones, vacuum cleaners, and refrigerators. This was an emerging material reality of the 1920s.

As the Lynds worked, they divided their manuscript into six chapters: Getting a Living, Making a Home, Training the Young, Using Leisure, Engaging in Religious Practices, and Engaging in Community Activities.

When the study was completed, the Lynds encountered a big problem. The Rockefeller Foundation, which had commissioned the book, claimed it was useless and refused to publish it. The Lynds asked if they could seek a publisher themselves.

Middletown: A Study in Modern American Culture was not only published in 1929 but also became an instant bestseller, a status unheard of for a sociological study. The book sold out six printings in its first year of publication, and has never gone out of print (Caplow, Hicks, & Wattenberg, 2000).

Nothing like it had ever been done before. Middletown was reviewed on the front page of the New York Times. Readers in the 1920s and 1930s identified with the citizens of Muncie, Indiana, but they were equally fascinated by the sociological methods and the use of scientific data to define ordinary people in the United States. The book was proof that social data was important—and interesting—to the U.S. public.

Sometimes a researcher wants to study one specific person or event. A case study is an in-depth analysis of a single event, situation, or individual. To conduct a case study, a researcher examines existing sources like documents and archival records, conducts interviews, engages in direct observation and even participant observation, if possible.

Researchers might use this method to study a single case of a foster child, drug lord, cancer patient, criminal, or rape victim. However, a major criticism of the case study as a method is that while offering depth on a topic, it does not provide enough evidence to form a generalized conclusion. In other words, it is difficult to make universal claims based on just one person, since one person does not verify a pattern. This is why most sociologists do not use case studies as a primary research method.

However, case studies are useful when the single case is unique. In these instances, a single case study can contribute tremendous insight. For example, a feral child, also called “wild child,” is one who grows up isolated from human beings. Feral children grow up without social contact and language, which are elements crucial to a “civilized” child’s development. These children mimic the behaviors and movements of animals, and often invent their own language. There are only about one hundred cases of “feral children” in the world.

As you may imagine, a feral child is a subject of great interest to researchers. Feral children provide unique information about child development because they have grown up outside of the parameters of “normal” growth and nurturing. And since there are very few feral children, the case study is the most appropriate method for researchers to use in studying the subject.

At age three, a Ukrainian girl named Oxana Malaya suffered severe parental neglect. She lived in a shed with dogs, and she ate raw meat and scraps. Five years later, a neighbor called authorities and reported seeing a girl who ran on all fours, barking. Officials brought Oxana into society, where she was cared for and taught some human behaviors, but she never became fully socialized. She has been designated as unable to support herself and now lives in a mental institution (Grice 2011). Case studies like this offer a way for sociologists to collect data that may not be obtained by any other method.

Experiments

You have probably tested some of your own personal social theories. “If I study at night and review in the morning, I’ll improve my retention skills.” Or, “If I stop drinking soda, I’ll feel better.” Cause and effect. If this, then that. When you test the theory, your results either prove or disprove your hypothesis.

One way researchers test social theories is by conducting an experiment, meaning they investigate relationships to test a hypothesis, which is a scientific approach.

There are two main types of experiments: lab-based experiments and natural or field experiments. In a lab setting, the research can be controlled so that more data can be recorded in a limited amount of time. In a natural or field-based experiment, the time it takes to gather the data cannot be controlled but the information might be considered more accurate since it was collected without interference or intervention by the researcher.

As a research method, either type of sociological experiment is useful for testing if-then statements: if a particular thing happens (cause), then another particular thing will result (effect). To set up a lab-based experiment, sociologists create artificial situations that allow them to manipulate variables.

Classically, the sociologist selects a set of people with similar characteristics, such as age, class, race, or education. Those people are divided into two groups. One is the experimental group and the other is the control group. The experimental group is exposed to the independent variable(s) and the control group is not. To test the benefits of tutoring, for example, the sociologist might provide tutoring to the experimental group of students but not to the control group. Then both groups would be tested for differences in performance to see if tutoring had an effect on the experimental group of students. As you can imagine, in a case like this, the researcher would not want to jeopardize the accomplishments of either group of students, so the setting would be somewhat artificial. The test would not be for a grade reflected on a student’s permanent record, for example.
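The tutoring design can be sketched in Python as follows. This is a minimal illustration rather than a full analysis: the student IDs and test scores are invented, the helper names `assign_groups` and `mean_difference` are hypothetical, and splitting the groups by random shuffle is an assumption (the text only says the participants are divided into two groups).

```python
import random
from statistics import mean


def assign_groups(participants, seed=0):
    """Randomly split participants into experimental and control groups.

    Random assignment is an assumption made for this sketch.
    """
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def mean_difference(experimental_scores, control_scores):
    """Difference in mean test score (experimental minus control)."""
    return mean(experimental_scores) - mean(control_scores)


students = [f"student_{i}" for i in range(1, 11)]  # hypothetical IDs
experimental, control = assign_groups(students)

# Invented test scores, for illustration only.
tutored_scores = [78, 85, 82, 90, 75]
untutored_scores = [72, 80, 76, 84, 70]

difference = mean_difference(tutored_scores, untutored_scores)
print(f"Tutored group scored {difference:.1f} points higher on average")
```

A difference in means on its own does not show that tutoring caused the gain. A real study would also ask whether a gap of that size could arise by chance.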

And if a researcher told the students they would be observed as part of a study on measuring the effectiveness of tutoring, the students might not behave naturally. This is called the Hawthorne effect, which occurs when people change their behavior because they know they are being watched as part of a study. The Hawthorne effect is unavoidable in some research studies because sociologists have to make the purpose of the study known. Subjects must be aware that they are being observed, and a certain amount of artificiality may result (Sonnenfeld 1985).

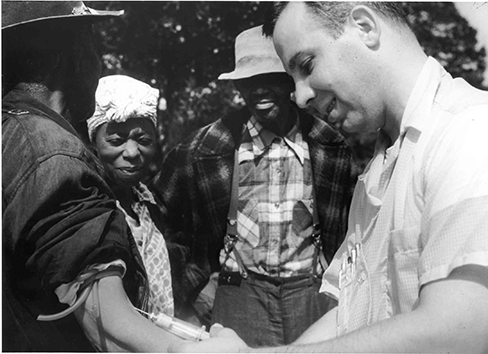

A real-life example will help illustrate the process. In 1971, Frances Heussenstamm, a sociology professor at California State University at Los Angeles, had a theory about police prejudice. To test her theory, she conducted research. She chose fifteen students from three ethnic backgrounds: Black, White, and Hispanic. She chose students who routinely drove to and from campus along Los Angeles freeway routes, and who had had perfect driving records for longer than a year.

Next, she placed a Black Panther bumper sticker on each car. That sticker, a representation of a social value, was the independent variable. In the 1970s, the Black Panthers were a revolutionary group actively fighting racism. Heussenstamm asked the students to follow their normal driving patterns. She wanted to see whether seeming support for the Black Panthers would change how these good drivers were treated by the police patrolling the highways. The dependent variable would be the number of traffic stops/citations.

The first arrest, for an incorrect lane change, was made two hours after the experiment began. One participant was pulled over three times in three days. He quit the study. After seventeen days, the fifteen drivers had collected a total of thirty-three traffic citations. The research was halted. The funding to pay traffic fines had run out, and so had the enthusiasm of the participants (Heussenstamm, 1971).
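The dependent variable in this study is a simple count, so the reported totals can be turned into rates with a little arithmetic. The sketch below uses only the figures given above. The per-day rate additionally assumes that all fifteen drivers took part for the full seventeen days, which the text contradicts for at least one participant, so treat it as a rough lower bound.

```python
drivers = 15     # from the text
citations = 33   # from the text
days = 17        # from the text

citations_per_driver = citations / drivers

# Assumption: every driver took part for all 17 days. One participant
# quit early, so this overstates exposure and understates the true rate.
citations_per_driver_day = citations / (drivers * days)

print(f"{citations_per_driver:.1f} citations per driver")
print(f"{citations_per_driver_day:.3f} citations per driver per day")
```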

Secondary Data Analysis

While sociologists often engage in original research studies, they also contribute knowledge to the discipline through secondary data analysis. Secondary data do not result from firsthand research collected from primary sources; they are the already completed work of other researchers or data collected by an agency or organization. Sociologists might study works written by historians, economists, teachers, or early sociologists. They might search through periodicals, newspapers, or magazines, or organizational data from any period in history.

Using available information not only saves time and money but can also add depth to a study. Sociologists often interpret findings in a new way, a way that was not part of an author’s original purpose or intention. To study how women were encouraged to act and behave in the 1960s, for example, a researcher might watch movies, television shows, and situation comedies from that period. Or to research changes in behavior and attitudes due to the emergence of television in the late 1950s and early 1960s, a sociologist would rely on new interpretations of secondary data. Decades from now, researchers will most likely conduct similar studies on the advent of mobile phones, the Internet, or social media.

Social scientists also learn by analyzing the research of a variety of agencies. Governmental departments and global groups, like the U.S. Bureau of Labor Statistics or the World Health Organization (WHO), publish studies with findings that are useful to sociologists. A public statistic like the foreclosure rate might be useful for studying the effects of a recession. A racial demographic profile might be compared with data on education funding to examine the resources accessible by different groups.

One of the advantages of secondary data like old movies or WHO statistics is that it is nonreactive research (or unobtrusive research), meaning that it does not involve direct contact with subjects and will not alter or influence people’s behaviors. Unlike studies requiring direct contact with people, using previously published data does not require entering a population and the investment and risks inherent in that research process.

Using available data does have its challenges. Public records are not always easy to access. A researcher will need to do some legwork to track them down and gain access to records. To guide the search through a vast library of materials and avoid wasting time reading unrelated sources, sociologists employ content analysis, applying a systematic approach to record and value information gleaned from secondary data as they relate to the study at hand.
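One simple way to make content analysis systematic is to fix a coding scheme in advance and count how often each category appears in the sources. The Python sketch below assumes a keyword-based scheme. The categories, keywords, and sample sentences are all invented for illustration, and real coding schemes are usually richer and applied by trained human coders.

```python
import re
from collections import Counter

# Hypothetical coding scheme: category -> keywords that signal it.
CODEBOOK = {
    "work": {"job", "factory", "wages"},
    "home": {"kitchen", "family", "housework"},
    "leisure": {"radio", "movies", "car"},
}


def code_document(text, codebook=CODEBOOK):
    """Count how often each category's keywords appear in one document."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter()
    for category, keywords in codebook.items():
        counts[category] = sum(1 for w in words if w in keywords)
    return counts


def code_corpus(documents, codebook=CODEBOOK):
    """Sum category counts over a collection of documents."""
    total = Counter()
    for doc in documents:
        total.update(code_document(doc, codebook))
    return total


# Invented snippets standing in for archival sources.
sources = [
    "The family gathered around the radio after the factory shift.",
    "Wages rose, and many bought a car on credit.",
]
print(code_corpus(sources))
```

Because the codebook is written down before the counting starts, another researcher can apply the same scheme to the same sources and check the results.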

Also, in some cases, there is no way to verify the accuracy of existing data. It is easy to count how many drunk drivers, for example, are pulled over by the police. But how many are not? While it’s possible to discover the percentage of teenage students who drop out of high school, it might be more challenging to determine the number who return to school or get their GED later.

Another problem arises when data are unavailable in the exact form needed or do not survey the topic from the precise angle the researcher seeks. For example, the average salaries paid to professors at a public school are public record. But these figures do not necessarily reveal how long it took each professor to reach the salary range, what their educational backgrounds are, or how long they’ve been teaching.

When conducting content analysis, it is important to consider the date of publication of an existing source and to take into account attitudes and common cultural ideals that may have influenced the research. For example, when Robert S. Lynd and Helen Merrell Lynd gathered research in the 1920s, attitudes and cultural norms were vastly different from what they are now. Beliefs about gender roles, race, education, and work have changed significantly since then. At the time, the study’s purpose was to reveal insights about small U.S. communities. Today, it is an illustration of 1920s attitudes and values.


This book may not be used in the training of large language models or otherwise be ingested into large language models or generative AI offerings without OpenStax's permission.

Want to cite, share, or modify this book? This book uses the Creative Commons Attribution License and you must attribute OpenStax.

Access for free at https://openstax.org/books/introduction-sociology-3e/pages/1-introduction

- Authors: Tonja R. Conerly, Kathleen Holmes, Asha Lal Tamang

- Publisher/website: OpenStax

- Book title: Introduction to Sociology 3e

- Publication date: Jun 3, 2021

- Location: Houston, Texas

- Book URL: https://openstax.org/books/introduction-sociology-3e/pages/1-introduction

- Section URL: https://openstax.org/books/introduction-sociology-3e/pages/2-2-research-methods

© Jan 18, 2024 OpenStax. Textbook content produced by OpenStax is licensed under a Creative Commons Attribution License. The OpenStax name, OpenStax logo, OpenStax book covers, OpenStax CNX name, and OpenStax CNX logo are not subject to the Creative Commons license and may not be reproduced without the prior and express written consent of Rice University.


Research Methods | Definitions, Types, Examples

Research methods are specific procedures for collecting and analyzing data. Developing your research methods is an integral part of your research design. When planning your methods, there are two key decisions you will make.

First, decide how you will collect data. Your methods depend on what type of data you need to answer your research question:

- Qualitative vs. quantitative: Will your data take the form of words or numbers?

- Primary vs. secondary: Will you collect original data yourself, or will you use data that has already been collected by someone else?

- Descriptive vs. experimental: Will you take measurements of something as it is, or will you perform an experiment?

Second, decide how you will analyze the data.

- For quantitative data, you can use statistical analysis methods to test relationships between variables.

- For qualitative data, you can use methods such as thematic analysis to interpret patterns and meanings in the data.


Data is the information that you collect for the purposes of answering your research question. The type of data you need depends on the aims of your research.

Qualitative vs. quantitative data

Your choice of qualitative or quantitative data collection depends on the type of knowledge you want to develop.

For questions about ideas, experiences and meanings, or to study something that can’t be described numerically, collect qualitative data.

If you want to develop a more mechanistic understanding of a topic, or your research involves hypothesis testing, collect quantitative data.


You can also take a mixed methods approach, where you use both qualitative and quantitative research methods.

Primary vs. secondary research

Primary research is any original data that you collect yourself for the purposes of answering your research question (e.g. through surveys, observations and experiments). Secondary research is data that has already been collected by other researchers (e.g. in a government census or previous scientific studies).

If you are exploring a novel research question, you’ll probably need to collect primary data. But if you want to synthesize existing knowledge, analyze historical trends, or identify patterns on a large scale, secondary data might be a better choice.


Descriptive vs. experimental data

In descriptive research, you collect data about your study subject without intervening. The validity of your research will depend on your sampling method.

In experimental research, you systematically intervene in a process and measure the outcome. The validity of your research will depend on your experimental design.

To conduct an experiment, you need to be able to vary your independent variable, precisely measure your dependent variable, and control for confounding variables. If it’s practically and ethically possible, this method is the best choice for answering questions about cause and effect.



| Research method | Primary or secondary? | Qualitative or quantitative? | When to use |
|---|---|---|---|
| Experiment | Primary | Quantitative | To test cause-and-effect relationships. |
| Survey | Primary | Quantitative | To understand general characteristics of a population. |
| Interview/focus group | Primary | Qualitative | To gain more in-depth understanding of a topic. |
| Observation | Primary | Either | To understand how something occurs in its natural setting. |
| Literature review | Secondary | Either | To situate your research in an existing body of work, or to evaluate trends within a research topic. |
| Case study | Either | Either | To gain an in-depth understanding of a specific group or context, or when you don’t have the resources for a large study. |

Your data analysis methods will depend on the type of data you collect and how you prepare it for analysis.

Data can often be analyzed both quantitatively and qualitatively. For example, survey responses could be analyzed qualitatively by studying the meanings of responses or quantitatively by studying the frequencies of responses.

Qualitative analysis methods

Qualitative analysis is used to understand words, ideas, and experiences. You can use it to interpret data that was collected:

- From open-ended surveys and interviews, literature reviews, case studies, ethnographies, and other sources that use text rather than numbers.

- Using non-probability sampling methods.

Qualitative analysis tends to be quite flexible and relies on the researcher’s judgement, so you have to reflect carefully on your choices and assumptions and be careful to avoid research bias.

Quantitative analysis methods

Quantitative analysis uses numbers and statistics to understand frequencies, averages and correlations (in descriptive studies) or cause-and-effect relationships (in experiments).
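The three descriptive measures named above (frequencies, averages, and correlations) can each be computed in a few lines of Python. This is a sketch with invented observations. `pearson_r` is a hand-written helper implementing the Pearson correlation coefficient, one common measure of correlation, and the variable names are assumptions.

```python
from collections import Counter
from math import sqrt
from statistics import mean


def pearson_r(xs, ys):
    """Pearson correlation coefficient for two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant data")
    return cov / sqrt(var_x * var_y)


# Invented observations, for illustration only.
study_hours = [1, 2, 3, 4, 5]
test_scores = [52, 58, 61, 70, 74]
majors = ["psych", "soc", "psych", "bio", "psych"]

print(Counter(majors).most_common(1))                  # frequency
print(mean(test_scores))                               # average
print(round(pearson_r(study_hours, test_scores), 3))   # correlation
```

A coefficient near 1 or -1 indicates a strong linear association in a descriptive study. It does not by itself establish cause and effect, which is what an experiment is for.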

You can use quantitative analysis to interpret data that was collected either:

- During an experiment.

- Using probability sampling methods.

Because the data is collected and analyzed in a statistically valid way, the results of quantitative analysis can be easily standardized and shared among researchers.

| Research method | Qualitative or quantitative? | When to use |
|---|---|---|
| Statistical analysis | Quantitative | To analyze data collected in a statistically valid manner (e.g. from experiments, surveys, and observations). |
| Meta-analysis | Quantitative | To statistically analyze the results of a large collection of studies. Can only be applied to studies that collected data in a statistically valid manner. |
| Thematic analysis | Qualitative | To analyze data collected from interviews, focus groups, or textual sources. To understand general themes in the data and how they are communicated. |
| Content analysis | Either | To analyze large volumes of textual or visual data collected from surveys, literature reviews, or other sources. Can be quantitative (i.e. frequencies of words) or qualitative (i.e. meanings of words). |


Quantitative research deals with numbers and statistics, while qualitative research deals with words and meanings.

Quantitative methods allow you to systematically measure variables and test hypotheses. Qualitative methods allow you to explore concepts and experiences in more detail.

In mixed methods research, you use both qualitative and quantitative data collection and analysis methods to answer your research question.

A sample is a subset of individuals from a larger population. Sampling means selecting the group that you will actually collect data from in your research. For example, if you are researching the opinions of students in your university, you could survey a sample of 100 students.

In statistics, sampling allows you to test a hypothesis about the characteristics of a population.
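The student survey example can be sketched as a simple random sample, one of several probability sampling methods. The population size of 5,000, the ID numbers, and the `draw_simple_random_sample` helper are assumptions made for this illustration.

```python
import random

# Hypothetical sampling frame: ID numbers for 5,000 enrolled students.
population = list(range(1, 5001))

def draw_simple_random_sample(frame, sample_size, seed=None):
    """Select sample_size members without replacement, each equally likely."""
    rng = random.Random(seed)  # a fixed seed makes the draw reproducible
    return rng.sample(frame, sample_size)

sample = draw_simple_random_sample(population, 100, seed=42)
print(len(sample), "students selected")
```

Because every student has the same chance of selection, results from the 100 sampled students can be generalized to the wider population with a quantifiable margin of error.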

The research methods you use depend on the type of data you need to answer your research question.

- If you want to measure something or test a hypothesis, use quantitative methods. If you want to explore ideas, thoughts and meanings, use qualitative methods.

- If you want to analyze a large amount of readily available data, use secondary data. If you want data specific to your purposes with control over how it is generated, collect primary data.

- If you want to establish cause-and-effect relationships between variables, use experimental methods. If you want to understand the characteristics of a research subject, use descriptive methods.
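Experimental methods usually rely on random assignment, so that differences between groups can be attributed to the manipulated variable rather than to who ended up in which group. The sketch below shows one simple way this might be done; the participant labels and the `randomly_assign` helper are hypothetical.

```python
import random

def randomly_assign(participants, seed=None):
    """Shuffle participants and split them into two equal-sized groups."""
    rng = random.Random(seed)
    shuffled = list(participants)  # copy so the caller's list is untouched
    rng.shuffle(shuffled)
    midpoint = len(shuffled) // 2
    return shuffled[:midpoint], shuffled[midpoint:]

# Hypothetical participant labels P01 to P20.
participants = [f"P{n:02d}" for n in range(1, 21)]
treatment, control = randomly_assign(participants, seed=7)

print("Treatment group:", treatment)
print("Control group:", control)
```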

Methodology refers to the overarching strategy and rationale of your research project. It involves studying the methods used in your field and the theories or principles behind them, in order to develop an approach that matches your objectives.

Methods are the specific tools and procedures you use to collect and analyze data (for example, experiments, surveys, and statistical tests).

In shorter scientific papers, where the aim is to report the findings of a specific study, you might simply describe what you did in a methods section.

In a longer or more complex research project, such as a thesis or dissertation, you will probably include a methodology section, where you explain your approach to answering the research questions and cite relevant sources to support your choice of methods.


Ch 2: Psychological Research Methods

Have you ever wondered whether the violence you see on television affects your behavior? Are you more likely to behave aggressively in real life after watching people behave violently in dramatic situations on the screen? Or, could seeing fictional violence actually get aggression out of your system, causing you to be more peaceful? How are children influenced by the media they are exposed to? A psychologist interested in the relationship between behavior and exposure to violent images might ask these very questions.

The topic of violence in the media today is contentious. Since ancient times, humans have been concerned about the effects of new technologies on our behaviors and thinking processes. The Greek philosopher Socrates, for example, worried that writing—a new technology at that time—would diminish people’s ability to remember because they could rely on written records rather than committing information to memory. In our world of quickly changing technologies, questions about the effects of media continue to emerge. Is it okay to talk on a cell phone while driving? Are headphones good to use in a car? What impact does text messaging have on reaction time while driving? These are types of questions that psychologist David Strayer asks in his lab.


How can we go about finding answers that are supported not by mere opinion, but by evidence that we can all agree on? The findings of psychological research can help us navigate issues like this.

Introduction to the Scientific Method

Learning Objectives

- Explain the steps of the scientific method

- Describe why the scientific method is important to psychology

- Summarize the processes of informed consent and debriefing

- Explain how research involving humans or animals is regulated

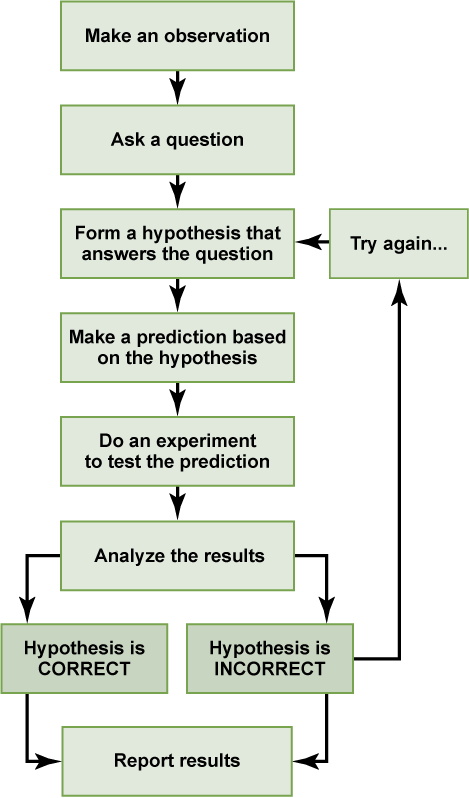

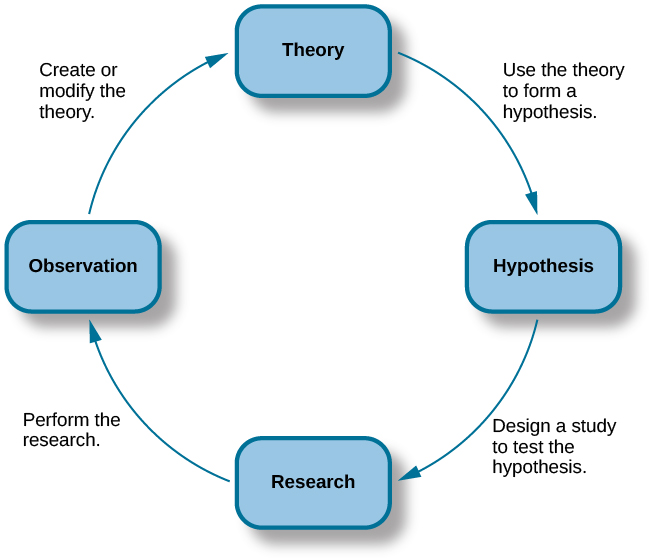

Scientists are engaged in explaining and understanding how the world around them works, and they are able to do so by coming up with theories that generate hypotheses that are testable and falsifiable. Theories that stand up to their tests are retained and refined, while those that do not are discarded or modified. In this way, research enables scientists to separate fact from simple opinion. Having good information generated from research aids in making wise decisions both in public policy and in our personal lives. In this section, you’ll see how psychologists use the scientific method to study and understand behavior.

The Scientific Process

The goal of all scientists is to better understand the world around them. Psychologists focus their attention on understanding behavior, as well as the cognitive (mental) and physiological (body) processes that underlie behavior. In contrast to other methods that people use to understand the behavior of others, such as intuition and personal experience, the hallmark of scientific research is that there is evidence to support a claim. Scientific knowledge is empirical: it is grounded in objective, tangible evidence that can be observed time and time again, regardless of who is observing.

While behavior is observable, the mind is not. If someone is crying, we can see the behavior. However, the reason for the behavior is more difficult to determine. Is the person crying due to being sad, in pain, or happy? Sometimes we can learn the reason for someone’s behavior by simply asking a question, like “Why are you crying?” However, there are situations in which an individual is either uncomfortable or unwilling to answer the question honestly, or is incapable of answering. For example, infants would not be able to explain why they are crying. In such circumstances, the psychologist must be creative in finding ways to better understand behavior. This module explores how scientific knowledge is generated, and how important that knowledge is in forming decisions in our personal lives and in the public domain.

Process of Scientific Research