Unit 04: How to Think Critically about Psychological Science

Unit 4: Assignment #1 (due before 11:59 pm Central on Monday September 6) :

- Watch Crash Course’s (2014) YouTube, “Psychological Research.” Because the narrator of the video speaks quite rapidly, you might need to watch the video at least twice (or use the speed-controller in the settings options on YouTube).

- Read Halonen’s (1996) article, “On Critical Thinking [in Psychology].” Note that in Unit 2, we worked on what Halonen refers to as “Practical” critical thinking skills. In this Unit, we will be working on what Halonen refers to as “Methodological” critical thinking skills.

- Read the first page of Dewey’s (2007) chapter, “Critical Thinking [in Psychology].”

- Read Stafford’s (2014) article, “What It Means To Be Critical [about Psychological Research].” The article is more about how to be critical of psychological science than why it’s important to be critical, but it will prepare you for other Assignments in this Unit.

- You may write either a Reasons/Arguments Essay OR an Examples Essay.

- Remember to begin by jotting down somewhere your three Reasons/Arguments or your three Examples.

- Next, you should write your three Reasons/Arguments paragraphs or your three Examples paragraphs.

- Then, you should write your Thesis Statement.

- Next, write your Introduction Paragraph, including a hook.

- The last step is to write your Conclusion Paragraph, in which you restate your Thesis Statement and end with something witty or profound.

- a Topic Sentence;

- three Supporting Sentences; and

- a Conclusion Sentence.

- Save your essay as a PDF and name the file YourLastname_CriticalThinkingEssay.pdf.

- Go to the Unit 4: Assignment #1 Discussion Board and make a new Discussion Board post to which you attach your essay, saved as a PDF. Remember to “Attach” your essay’s PDF (don’t embed your file or use the “File” tool; instead, use the “Attach” tool).

Unit 4: Assignment #2 (due before 11:59 pm Central on Tuesday September 7) :

- Read about the first (“Sensationalized Headlines”), second (“Misinterpreted Results”), third (“Conflicts of Interest”), and twelfth (“Non-Peer Reviewed Material”) indicators of bad science in Compound Interest’s (2015) infographic, “A Rough Guide to Spotting Bad Science.”

- To see examples of the first (“Sensationalized Headlines”) and twelfth (“Non-Peer Reviewed Material”) indicators of bad science, watch Above the Noise’s (2017) YouTube, “Top 4 Tips To Spot Bad Science Reporting.”

- Read Ossola’s (2017) article, “Can You Tell If a Health Story Is Total BS?” Ossola’s indicators of “Check the Label” and “Control the Spin” are like Compound Interest’s “Sensationalized Headlines” indicator; however, Ossola presents a novel indicator “Beware the Animal Study.”

- To see examples of these indicators of bad science, watch Last Week Tonight with John Oliver’s (2016) YouTube, “Scientific Studies.” Warning: John Oliver is a late-night comedian/TV host. Therefore, this video contains adult content, adult language, and extreme irreverence toward a wide swath of people. The video presents numerous examples of bad science indicators; however, if you’d prefer not to watch the video, then please don’t.

- “Sensationalized Headlines”

- “Misinterpreted Results”

- “Non-Peer Reviewed Material”

- “Beware the Animal Study”

- “Conflicts of Interest”

- Be sure to find three different news reports, each of which reports about a different research study, rather than three news reports all of which report about the same research study.

- Describe each of the three news reports, preferably each in its own paragraph.

- Identify which indicator of bad science characterizes each news report.

- Provide for each news report either its URL (using the technique you learned from the Course How To) or, if a video, its YouTube or Vimeo (using the technique you learned from the Course How To).

Unit 4: Assignment #3 (due before 11:59 pm Central on Wednesday September 8) :

- Watch TEDxDelft’s (2012) YouTube, “The Danger of Mixing Up Causality and Correlation.”

- Watch PsychU’s (2015) YouTube, “Correlation vs. Causation – PSY 101.”

- Because PsychU momentarily confuses the term “hypothesis” with the term “theory,” watch PBS’s (2015) YouTube, “Fact vs. Theory vs. Hypothesis vs. Law … Explained!”

- Make sure you know the meanings of, and differences among, the four terms: Fact, Theory, Hypothesis, and Law. Not only will you need to know these terms and their differences throughout the rest of this course, but everyone should know these terms and their differences.

- Watch AsapScience’s (2017) YouTube, “This ≠ That.”

- Watch Khan Academy’s (2011) YouTube, “Correlation and Causality.”

- Jot down at least six examples of correlation not proving causation from the videos you watched. One example is the correlation between the amount of ice cream purchased (during each month of the year) and the number of drowning deaths (during each month of the year) not proving that ice cream causes drowning.

- Make sure you understand that two variables (e.g., ice cream purchases per month and drowning deaths per month) might both be caused by another variable (e.g., season of the year). That other variable is often called a confounding variable.

- Make sure you understand that the correlation between two variables (e.g., pool drownings per year and Nicolas Cage films per year) might simply be due to coincidence.

- Make sure you understand that rather than one variable (e.g., skipping breakfast) causing another variable (e.g., obesity), the causation might be reversed.

- When you are teaching each person, provide examples of correlations that do not prove causation, using the examples you saw in the videos.

- To make sure that each of the three people learned why correlation should not be interpreted as causation, ask each person to tell you another example (an example that you did not tell them) of correlation not proving causation.

- Also teach each of the three persons the difference between hypothesis and theory.

- describe how you taught the three persons that correlation cannot be interpreted as causation;

- state each of the three persons’ initials (e.g., MG) and their approximate age; and

- report the examples each person told you of correlations that should not be confused with causation.
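The confounding-variable case from this Assignment (ice cream purchases and drowning deaths both following the season) can be illustrated with a small simulation. This is only a sketch under made-up assumptions: the monthly temperatures, the formulas for `ice_cream` and `drownings`, and the noise levels are all hypothetical numbers chosen for illustration, not real data. The two outcomes are generated so that neither influences the other, yet they still come out strongly correlated until temperature is accounted for.

```python
import random
import statistics


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def residuals(ys, xs):
    """What is left of ys after subtracting its best straight-line fit on xs."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return [y - (my + slope * (x - mx)) for x, y in zip(xs, ys)]


rng = random.Random(0)  # fixed seed so the sketch is repeatable

# Hypothetical average temperature (deg C) for January..December, repeated for 10 years.
monthly_temperature = [-2, 0, 6, 12, 18, 24, 27, 26, 21, 14, 6, 0]
temperature = monthly_temperature * 10

# Both outcomes depend on temperature plus independent noise;
# neither one depends on the other (all numbers are invented).
ice_cream = [100 + 10 * t + rng.gauss(0, 20) for t in temperature]
drownings = [10 + 0.5 * t + rng.gauss(0, 2) for t in temperature]

r_raw = pearson(ice_cream, drownings)
r_adjusted = pearson(residuals(ice_cream, temperature),
                     residuals(drownings, temperature))

print(f"raw correlation: {r_raw:.2f}")
print(f"correlation after removing temperature: {r_adjusted:.2f}")
```

The raw correlation is high even though the code never lets ice cream affect drownings. Once the part of each variable explained by temperature is subtracted out, most of the apparent relationship disappears, which is what a confounding variable looks like in data.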

Unit 4: Assignment #4 (due before 11:59 pm Central on Thursday September 9) :

- To cement your learning about critical thinking and psychological science, read Tesler’s (2016) article, “Can You Believe It? Seven Questions to Ask About Any Scientific Claim.”

- Go to the Unit 4: Assignment #2 and #4 Discussion Board and read all the posts made in Unit 4: Assignment #2 by the other members (or member) of your Chat Group.

- If you are in a Chat Group with two other members, and one of the two other Chat Group members hasn’t yet posted their Unit 4: Assignment #2 — and the due date for Unit 4: Assignment #2 has passed — you can choose two studies from the Chat Group member who has already posted their Unit 4: Assignment #2.

- If you are in a Chat Group with only one other member, and that other member hasn’t yet posted their Unit 4: Assignment #2 (and the due date for Unit 4: Assignment #2 has passed), you can choose two studies from students who are not in your Chat Group.

- Now, pretend that instead of your Chat Group member(s) finding and posting these two studies on our class Discussion Board as examples of bad science, two relatives or acquaintances of yours posted the two studies on Facebook, Twitter, or other social media as recommendations to their friends and followers (of good science).

- You are required to make two separate Discussion Board response posts (replies); each response post should be at least 200 words, and each post should be in response to only one of the two studies.

- Even if you are replying to the same student, you must make two separate Discussion Board posts.

- Each of your two response posts must incorporate what you learned from Tesler’s (2016) article.

- Address your two responses not to your Chat Group Member(s) but instead to fictitious people, such as “Dear Aunt Bessie” or “Hey, Freshman Roommate.”

Unit 4: Assignment #5 (due before 11:59 pm Central on Saturday September 11) :

- download (to your own computer) and save The Logic of Science’s (no date) Hierarchy of Scientific Evidence graphic (PDF format; Text-Only Word format), and

- read Brunning’s (2015) article, “A Rough Guide to Types of Scientific Evidence.”

- Then, read about some of the most famous psychology case reports in Jarrett’s (2015) article, “Psychology’s Greatest Case Studies – Digested.”

- Think about why it was important for the various scientists who reported these case studies to report these case studies.

- Then, read about an example of a randomized controlled study in Fradera’s (2017) article, “How Much Are Readers Misled by Headlines that Imply Correlational Findings Are Causal?”

- Think about why it was important for these scientists to conduct a randomized controlled study on this topic.

- Then, to see an example of a journal article reporting a meta-analysis, read the abstract of Hyde and Lynn’s (1988) article, “Gender Differences in Verbal Ability: A Meta-Analysis.”

- Think about why it was important for these scientists to conduct a meta-analysis on this topic.

- Begin by jotting down your three Reasons/Arguments or your three Examples.

- Then, write your three Reasons/Arguments Paragraphs or your three Examples Paragraphs.

- For each of your three Reasons/Arguments Paragraphs or each of your three Examples Paragraphs, write a Topic Sentence, three Supporting Sentences, and a Conclusion Sentence.

- Then, write your essay’s Thesis Statement.

- Then, write your essay’s Introduction Paragraph, including a hook.

- Then, write your essay’s Conclusion Paragraph, in which you restate your Thesis Statement and end with something witty or profound.

- Remember that all five of your paragraphs, your Introduction Paragraph, each of your three Reasons/Arguments Paragraphs or your three Examples Paragraphs, and your Conclusion Paragraph, must have a Topic Sentence, three Supporting Sentences, and a Conclusion Sentence.

- Save your essay as a PDF and name the file YourLastname_EvidenceEssay.pdf.

- Make a new Discussion Board post to which you attach your essay, saved as a PDF. This is described in the Course How To (under the topic, “How to Save and Attach a Group Text Chat Transcript”).

Unit 4: Assignment #6 (due before 11:59 pm Central on Monday September 13) :

- To learn what replication means in research, read CK-12’s (no date) article, “Replication.”

- To get a quick overview of replication courtesy of the NBC comedy TV show, “The Good Place,” read Harnett’s (2019) tweet.

- Be sure to notice in the excerpt from Dewey’s article the methodological reasons he gives for why a result might not replicate.

- Be sure to read the entire cartoon, frame by frame.

- Although it might seem repetitive, the point is to read closely through all 20 of the frames that report the scientists’ results.

- Then read the final page’s “Explanation.”

- Now that you understand what p-hacking means, read Hobbes’s (2019) tweet about the problems with studies that report a link between “a single food” (e.g., eggs) and “a single health outcome” (e.g., heart disease).

- To ensure that you understand the role of chance and statistical probability in producing a result that might not replicate, read Hanna’s (2012) article, “Why Is Replication So Important?”

- To understand the difference between the terms replication and reproduction, read the handout, “Replication (and Replicability) versus Reproduction (and Reproducibility).”

- To understand the role of false positives in research, watch Veritasium’s (2016) YouTube, “Is Most Published Research Wrong?”

- This YouTube is a more complex video than some of the others you’ve watched in this Unit.

- Try to understand as much as you can. You’ll have a chance to discuss what you don’t understand in this video with your Chat Group.

- If your last name comes first alphabetically in your Chat Group, watch NOVA’s (2017) video, “What Makes Science True?”

- If your last name comes last alphabetically in your Chat Group, read all the way through Naro’s (2016) comic strip, “Why Can’t Anyone Replicate the Scientific Studies from Those Eye-Grabbing Headlines?”

- If your last name comes neither first nor last in your Chat Group (or if you are in a Chat Group with only two members), read Carroll’s (2017) article, “Science Needs a Solution for the Temptation of Positive Results,” and Leyser et al.’s (2017) article, “The Science ‘Reproducibility Crisis’ – and What Can Be Done about It?”

- Begin by reviewing the articles and videos that everyone in your Chat Group read and watched.

- Next, take turns telling the other member(s) of your Chat Group about the article, video, or comic strip that individual Chat Group members read.

- identify one statistical (probability) reason;

- identify one researcher motivation; and

- identify one news media motivation that could lead to the public seeing headlines such as these two conflicting headlines.

- propose one solution to the statistical (probability) reason you identified;

- propose one solution to the researcher motivation you identified; and

- propose one solution to the news media motivation you identified.

- Nominate one member of your Chat Group (who participated in the Chat) to make a post on the Unit 4: Assignment #6 Discussion Board that summarizes your Group Chat in at least 200 words.

- Then, this member of the Chat Group needs to make a post on the Unit 4: Assignment #6 Discussion Board and attach the Chat transcript, saved as a PDF, to that Discussion Board post.

- Nominate a third member of your Chat Group (who also participated in the Chat) to make another post on the Unit 4: Assignment #6 Discussion Board that states the name of your Chat Group, the names of the Chat Group members who participated in the Chat, the date of your Chat, and the start and stop time of your Group Chat.

- If only two persons participated in the Chat, then one of those two persons needs to do two of the above three tasks.

- Before ending the Group Chat, arrange the date and time for the Group Chat you will need to hold during the next Unit (Unit 5: Assignment #6).

- All members of the Chat Group must record a typical Unit entry in their own Course Journal for Unit 4.
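The role of chance in this Assignment's readings (the 20 frames of results in the cartoon, and the false positives discussed in the videos) can be made concrete with a short calculation. The sketch below assumes the conventional significance threshold of 0.05 and assumes the tests are independent; neither assumption comes from the course materials, and the function name is invented for illustration. It computes how likely it is that at least one test comes out "significant" when there is no real effect anywhere.

```python
def chance_of_false_positive(num_tests, alpha=0.05):
    """Probability that at least one of num_tests independent tests
    looks 'significant' when no real effect exists.

    alpha is the per-test false-positive rate (0.05 is an assumed,
    conventional threshold, not a value taken from the readings).
    """
    # Chance that every single test correctly shows nothing: (1 - alpha) ** num_tests.
    # The complement is the chance that at least one test is a false positive.
    return 1 - (1 - alpha) ** num_tests


for num_tests in (1, 5, 20):
    probability = chance_of_false_positive(num_tests)
    print(f"{num_tests:>2} tests: {probability:.0%} chance of at least one false positive")
```

Under these assumptions, a researcher who runs 20 separate tests has roughly a 64% chance of finding at least one "significant" result by luck alone. Reporting only that one result is a form of p-hacking, and a result produced this way is unlikely to replicate.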

Congratulations, you have finished Unit 4! Onward to Unit 5!

Open-Access Active-Learning Research Methods Course by Morton Ann Gernsbacher, PhD is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License. The materials have been modified to add various ADA-compliant accessibility features, in some cases including alternative text-only versions. Permissions beyond the scope of this license may be available at http://www.gernsbacherlab.org.

A Crash Course in Critical Thinking

What you need to know, and read, about one of the essential skills needed today.

Posted April 8, 2024 | Reviewed by Michelle Quirk

- In research for "A More Beautiful Question," I did a deep dive into the current crisis in critical thinking.

- Many people may think of themselves as critical thinkers, but they actually are not.

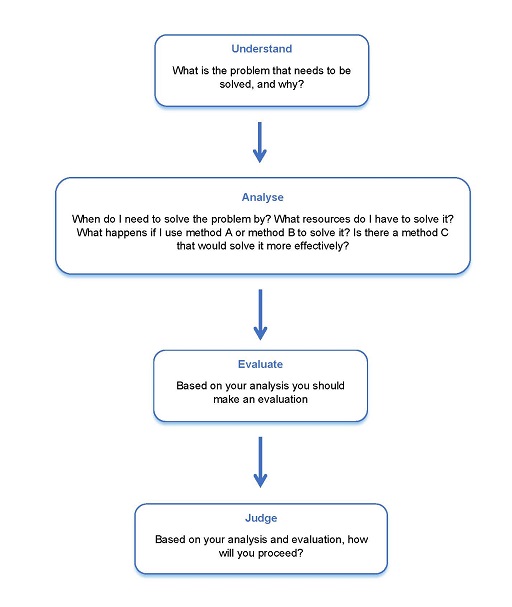

- Here is a series of questions you can ask yourself to try to ensure that you are thinking critically.

Conspiracy theories. Inability to distinguish facts from falsehoods. Widespread confusion about who and what to believe.

These are some of the hallmarks of the current crisis in critical thinking—which just might be the issue of our times. Because if people aren’t willing or able to think critically as they choose potential leaders, they’re apt to choose bad ones. And if they can’t judge whether the information they’re receiving is sound, they may follow faulty advice while ignoring recommendations that are science-based and solid (and perhaps life-saving).

Moreover, as a society, if we can’t think critically about the many serious challenges we face, it becomes more difficult to agree on what those challenges are—much less solve them.

On a personal level, critical thinking can enable you to make better everyday decisions. It can help you make sense of an increasingly complex and confusing world.

In the new expanded edition of my book A More Beautiful Question (AMBQ), I took a deep dive into critical thinking. Here are a few key things I learned.

First off, before you can get better at critical thinking, you should understand what it is. It’s not just about being a skeptic. When thinking critically, we are thoughtfully reasoning, evaluating, and making decisions based on evidence and logic. And, perhaps most important, while doing this, a critical thinker always strives to be open-minded and fair-minded. That’s not easy: It demands that you constantly question your assumptions and biases and that you always remain open to considering opposing views.

In today’s polarized environment, many people think of themselves as critical thinkers simply because they ask skeptical questions—often directed at, say, certain government policies or ideas espoused by those on the “other side” of the political divide. The problem is, they may not be asking these questions with an open mind or a willingness to fairly consider opposing views.

When people do this, they’re engaging in “weak-sense critical thinking,” a term popularized by the late Richard Paul, a co-founder of The Foundation for Critical Thinking. “Weak-sense critical thinking” means applying the tools and practices of critical thinking (questioning, investigating, evaluating) but with the sole purpose of confirming one’s own bias or serving an agenda.

In AMBQ, I lay out a series of questions you can ask yourself to try to ensure that you’re thinking critically. Here are some of the questions to consider:

- Why do I believe what I believe?

- Are my views based on evidence?

- Have I fairly and thoughtfully considered differing viewpoints?

- Am I truly open to changing my mind?

Of course, becoming a better critical thinker is not as simple as just asking yourself a few questions. Critical thinking is a habit of mind that must be developed and strengthened over time. In effect, you must train yourself to think in a manner that is more effortful, aware, grounded, and balanced.

For those interested in giving themselves a crash course in critical thinking (something I did myself, as I was working on my book), I thought it might be helpful to share a list of some of the books that have shaped my own thinking on this subject. As a self-interested author, I naturally would suggest that you start with the new 10th-anniversary edition of A More Beautiful Question, but beyond that, here are the top eight critical-thinking books I’d recommend.

The Demon-Haunted World: Science as a Candle in the Dark, by Carl Sagan

This book simply must top the list, because the late scientist and author Carl Sagan continues to be such a bright shining light in the critical thinking universe. Chapter 12 includes the details on Sagan’s famous “baloney detection kit,” a collection of lessons and tips on how to deal with bogus arguments and logical fallacies.

Clear Thinking: Turning Ordinary Moments Into Extraordinary Results, by Shane Parrish

The creator of the Farnam Street website and host of the “Knowledge Project” podcast explains how to contend with biases and unconscious reactions so you can make better everyday decisions. It contains insights from many of the brilliant thinkers Shane has studied.

Good Thinking: Why Flawed Logic Puts Us All at Risk and How Critical Thinking Can Save the World, by David Robert Grimes

A brilliant, comprehensive 2021 book on critical thinking that, to my mind, hasn’t received nearly enough attention. The scientist Grimes dissects bad thinking, shows why it persists, and offers the tools to defeat it.

Think Again: The Power of Knowing What You Don't Know, by Adam Grant

Intellectual humility—being willing to admit that you might be wrong—is what this book is primarily about. But Adam, the renowned Wharton psychology professor and bestselling author, takes the reader on a mind-opening journey with colorful stories and characters.

Think Like a Detective: A Kid's Guide to Critical Thinking, by David Pakman

The popular YouTuber and podcast host Pakman, normally known for talking politics, has written a terrific primer on critical thinking for children. The illustrated book presents critical thinking as a “superpower” that enables kids to unlock mysteries and dig for truth. (I also recommend Pakman’s second kids’ book called Think Like a Scientist.)

Rationality: What It Is, Why It Seems Scarce, Why It Matters, by Steven Pinker

The Harvard psychology professor Pinker tackles conspiracy theories head-on but also explores concepts involving risk/reward, probability and randomness, and correlation/causation. And if that strikes you as daunting, be assured that Pinker makes it lively and accessible.

How Minds Change: The Surprising Science of Belief, Opinion and Persuasion, by David McRaney

David is a science writer who hosts the popular podcast “You Are Not So Smart” (and his ideas are featured in A More Beautiful Question). His well-written book looks at ways you can actually get through to people who see the world very differently than you (hint: bludgeoning them with facts definitely won’t work).

A Healthy Democracy's Best Hope: Building the Critical Thinking Habit, by M. Neil Browne and Chelsea Kulhanek

Neil Browne, author of the seminal Asking the Right Questions: A Guide to Critical Thinking, has been a pioneer in presenting critical thinking as a question-based approach to making sense of the world around us. His newest book, co-authored with Chelsea Kulhanek, breaks down critical thinking into “11 explosive questions”—including the “priors question” (which challenges us to question assumptions), the “evidence question” (focusing on how to evaluate and weigh evidence), and the “humility question” (which reminds us that a critical thinker must be humble enough to consider the possibility of being wrong).

Warren Berger is a longtime journalist and author of A More Beautiful Question.

CBE Life Sci Educ. v.17(1); Spring 2018

Understanding the Complex Relationship between Critical Thinking and Science Reasoning among Undergraduate Thesis Writers

Jason E. Dowd

† Department of Biology, Duke University, Durham, NC 27708

Robert J. Thompson, Jr.

‡ Department of Psychology and Neuroscience, Duke University, Durham, NC 27708

Leslie A. Schiff

§ Department of Microbiology and Immunology, University of Minnesota, Minneapolis, MN 55455

Julie A. Reynolds

This study empirically examines the relationship between students’ critical-thinking skills and scientific reasoning as reflected in undergraduate thesis writing in biology. Writing offers a unique window into studying this relationship, and the findings raise potential implications for instruction.

Developing critical-thinking and scientific reasoning skills are core learning objectives of science education, but little empirical evidence exists regarding the interrelationships between these constructs. Writing effectively fosters students’ development of these constructs, and it offers a unique window into studying how they relate. In this study of undergraduate thesis writing in biology at two universities, we examine how scientific reasoning exhibited in writing (assessed using the Biology Thesis Assessment Protocol) relates to general and specific critical-thinking skills (assessed using the California Critical Thinking Skills Test), and we consider implications for instruction. We find that scientific reasoning in writing is strongly related to inference , while other aspects of science reasoning that emerge in writing (epistemological considerations, writing conventions, etc.) are not significantly related to critical-thinking skills. Science reasoning in writing is not merely a proxy for critical thinking. In linking features of students’ writing to their critical-thinking skills, this study 1) provides a bridge to prior work suggesting that engagement in science writing enhances critical thinking and 2) serves as a foundational step for subsequently determining whether instruction focused explicitly on developing critical-thinking skills (particularly inference ) can actually improve students’ scientific reasoning in their writing.

INTRODUCTION

Critical-thinking and scientific reasoning skills are core learning objectives of science education for all students, regardless of whether or not they intend to pursue a career in science or engineering. Consistent with the view of learning as construction of understanding and meaning ( National Research Council, 2000 ), the pedagogical practice of writing has been found to be effective not only in fostering the development of students’ conceptual and procedural knowledge ( Gerdeman et al. , 2007 ) and communication skills ( Clase et al. , 2010 ), but also scientific reasoning ( Reynolds et al. , 2012 ) and critical-thinking skills ( Quitadamo and Kurtz, 2007 ).

Critical thinking and scientific reasoning are similar but different constructs that include various types of higher-order cognitive processes, metacognitive strategies, and dispositions involved in making meaning of information. Critical thinking is generally understood as the broader construct ( Holyoak and Morrison, 2005 ), comprising an array of cognitive processes and dispositions that are drawn upon differentially in everyday life and across domains of inquiry such as the natural sciences, social sciences, and humanities. Scientific reasoning, then, may be interpreted as the subset of critical-thinking skills (cognitive and metacognitive processes and dispositions) that 1) are involved in making meaning of information in scientific domains and 2) support the epistemological commitment to scientific methodology and paradigm(s).

Although there has been an enduring focus in higher education on promoting critical thinking and reasoning as general or “transferable” skills, research evidence provides increasing support for the view that reasoning and critical thinking are also situational or domain specific ( Beyer et al. , 2013 ). Some researchers, such as Lawson (2010) , present frameworks in which science reasoning is characterized explicitly in terms of critical-thinking skills. There are, however, limited coherent frameworks and empirical evidence regarding either the general or domain-specific interrelationships of scientific reasoning, as it is most broadly defined, and critical-thinking skills.

The Vision and Change in Undergraduate Biology Education Initiative provides a framework for thinking about these constructs and their interrelationship in the context of the core competencies and disciplinary practice they describe ( American Association for the Advancement of Science, 2011 ). These learning objectives aim for undergraduates to “understand the process of science, the interdisciplinary nature of the new biology and how science is closely integrated within society; be competent in communication and collaboration; have quantitative competency and a basic ability to interpret data; and have some experience with modeling, simulation and computational and systems level approaches as well as with using large databases” ( Woodin et al. , 2010 , pp. 71–72). This framework makes clear that science reasoning and critical-thinking skills play key roles in major learning outcomes; for example, “understanding the process of science” requires students to engage in (and be metacognitive about) scientific reasoning, and having the “ability to interpret data” requires critical-thinking skills. To help students better achieve these core competencies, we must better understand the interrelationships of their composite parts. Thus, the next step is to determine which specific critical-thinking skills are drawn upon when students engage in science reasoning in general and with regard to the particular scientific domain being studied. Such a determination could be applied to improve science education for both majors and nonmajors through pedagogical approaches that foster critical-thinking skills that are most relevant to science reasoning.

Writing affords one of the most effective means for making thinking visible ( Reynolds et al. , 2012 ) and learning how to “think like” and “write like” disciplinary experts ( Meizlish et al. , 2013 ). As a result, student writing affords the opportunities to both foster and examine the interrelationship of scientific reasoning and critical-thinking skills within and across disciplinary contexts. The purpose of this study was to better understand the relationship between students’ critical-thinking skills and scientific reasoning skills as reflected in the genre of undergraduate thesis writing in biology departments at two research universities, the University of Minnesota and Duke University.

In the following subsections, we discuss in greater detail the constructs of scientific reasoning and critical thinking, as well as the assessment of scientific reasoning in students’ thesis writing. In subsequent sections, we discuss our study design, findings, and the implications for enhancing educational practices.

Critical Thinking

The advances in cognitive science in the 21st century have increased our understanding of the mental processes involved in thinking and reasoning, as well as memory, learning, and problem solving. Critical thinking is understood to include both a cognitive dimension and a disposition dimension (e.g., reflective thinking) and is defined as “purposeful, self-regulatory judgment which results in interpretation, analysis, evaluation, and inference, as well as explanation of the evidential, conceptual, methodological, criteriological, or contextual considerations upon which that judgment is based” ( Facione, 1990, p. 3 ). Although various other definitions of critical thinking have been proposed, researchers have generally coalesced on this consensus expert view ( Blattner and Frazier, 2002 ; Condon and Kelly-Riley, 2004 ; Bissell and Lemons, 2006 ; Quitadamo and Kurtz, 2007 ) and the corresponding measures of critical-thinking skills ( August, 2016 ; Stephenson and Sadler-McKnight, 2016 ).

Both the cognitive skills and dispositional components of critical thinking have been recognized as important to science education ( Quitadamo and Kurtz, 2007 ). Empirical research demonstrates that specific pedagogical practices in science courses are effective in fostering students’ critical-thinking skills. Quitadamo and Kurtz (2007) found that students who engaged in a laboratory writing component in the context of a general education biology course significantly improved their overall critical-thinking skills (and their analytical and inference skills, in particular), whereas students engaged in a traditional quiz-based laboratory did not improve their critical-thinking skills. In related work, Quitadamo et al. (2008) found that a community-based inquiry experience, involving inquiry, writing, research, and analysis, was associated with improved critical thinking in a biology course for nonmajors, compared with traditionally taught sections. In both studies, students who exhibited stronger presemester critical-thinking skills exhibited stronger gains, suggesting that “students who have not been explicitly taught how to think critically may not reach the same potential as peers who have been taught these skills” ( Quitadamo and Kurtz, 2007 , p. 151).

Recently, Stephenson and Sadler-McKnight (2016) found that first-year general chemistry students who engaged in a science writing heuristic laboratory, which is an inquiry-based, writing-to-learn approach to instruction ( Hand and Keys, 1999 ), had significantly greater gains in total critical-thinking scores than students who received traditional laboratory instruction. Each of the four components (inquiry, writing, collaboration, and reflection) has been linked to critical thinking ( Stephenson and Sadler-McKnight, 2016 ). Like the other studies, this work highlights the value of targeting critical-thinking skills and the effectiveness of an inquiry-based, writing-to-learn approach to enhance critical thinking. Across studies, authors advocate adopting critical thinking as the course framework ( Pukkila, 2004 ) and developing explicit examples of how critical thinking relates to the scientific method ( Miri et al. , 2007 ).

In these examples, the important connection between writing and critical thinking is highlighted by the fact that each intervention involves the incorporation of writing into science, technology, engineering, and mathematics education (either alone or in combination with other pedagogical practices). However, critical-thinking skills are not always the primary learning outcome; in some contexts, scientific reasoning is the primary outcome that is assessed.

Scientific Reasoning

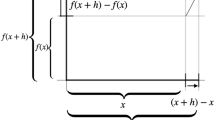

Scientific reasoning is a complex process that is broadly defined as “the skills involved in inquiry, experimentation, evidence evaluation, and inference that are done in the service of conceptual change or scientific understanding” ( Zimmerman, 2007 , p. 172). Scientific reasoning is understood to include both conceptual knowledge and the cognitive processes involved with generation of hypotheses (i.e., inductive processes involved in the generation of hypotheses and the deductive processes used in the testing of hypotheses), experimentation strategies, and evidence evaluation strategies. These dimensions are interrelated, in that “experimentation and inference strategies are selected based on prior conceptual knowledge of the domain” ( Zimmerman, 2000 , p. 139). Furthermore, conceptual and procedural knowledge and cognitive process dimensions can be general and domain specific (or discipline specific).

With regard to conceptual knowledge, attention has been focused on the acquisition of core methodological concepts fundamental to scientists’ causal reasoning and metacognitive distancing (or decontextualized thinking), which is the ability to reason independently of prior knowledge or beliefs ( Greenhoot et al. , 2004 ). The latter involves what Kuhn and Dean (2004) refer to as the coordination of theory and evidence, which requires that one question existing theories (i.e., prior knowledge and beliefs), seek contradictory evidence, eliminate alternative explanations, and revise one’s prior beliefs in the face of contradictory evidence. Kuhn and colleagues (2008) further elaborate that scientific thinking requires “a mature understanding of the epistemological foundations of science, recognizing scientific knowledge as constructed by humans rather than simply discovered in the world,” and “the ability to engage in skilled argumentation in the scientific domain, with an appreciation of argumentation as entailing the coordination of theory and evidence” ( Kuhn et al. , 2008 , p. 435). “This approach to scientific reasoning not only highlights the skills of generating and evaluating evidence-based inferences, but also encompasses epistemological appreciation of the functions of evidence and theory” ( Ding et al. , 2016 , p. 616). Evaluating evidence-based inferences involves epistemic cognition, which Moshman (2015) defines as the subset of metacognition that is concerned with justification, truth, and associated forms of reasoning. Epistemic cognition is both general and domain specific (or discipline specific; Moshman, 2015 ).

There is empirical support for the contributions of both prior knowledge and an understanding of the epistemological foundations of science to scientific reasoning. In a study of undergraduate science students, advanced scientific reasoning was most often accompanied by accurate prior knowledge as well as sophisticated epistemological commitments; additionally, for students who had comparable levels of prior knowledge, skillful reasoning was associated with a strong epistemological commitment to the consistency of theory with evidence ( Zeineddin and Abd-El-Khalick, 2010 ). These findings highlight the importance of the need for instructional activities that intentionally help learners develop sophisticated epistemological commitments focused on the nature of knowledge and the role of evidence in supporting knowledge claims ( Zeineddin and Abd-El-Khalick, 2010 ).

Scientific Reasoning in Students’ Thesis Writing

Pedagogical approaches that incorporate writing have also focused on enhancing scientific reasoning. Many rubrics have been developed to assess aspects of scientific reasoning in written artifacts. For example, Timmerman and colleagues (2011) , in the course of describing their own rubric for assessing scientific reasoning, highlight several examples of scientific reasoning assessment criteria ( Haaga, 1993 ; Tariq et al. , 1998 ; Topping et al. , 2000 ; Kelly and Takao, 2002 ; Halonen et al. , 2003 ; Willison and O’Regan, 2007 ).

At both the University of Minnesota and Duke University, we have focused on the genre of the undergraduate honors thesis as the rhetorical context in which to study and improve students’ scientific reasoning and writing. We view the process of writing an undergraduate honors thesis as a form of professional development in the sciences (i.e., a way of engaging students in the practices of a community of discourse). We have found that structured courses designed to scaffold the thesis-writing process and promote metacognition can improve writing and reasoning skills in biology, chemistry, and economics ( Reynolds and Thompson, 2011 ; Dowd et al. , 2015a , b ). In the context of this prior work, we have defined scientific reasoning in writing as the emergent, underlying construct measured across distinct aspects of students’ written discussion of independent research in their undergraduate theses.

The Biology Thesis Assessment Protocol (BioTAP) was developed at Duke University as a tool for systematically guiding students and faculty through a “draft–feedback–revision” writing process, modeled after professional scientific peer-review processes ( Reynolds et al. , 2009 ). BioTAP includes activities and worksheets that allow students to engage in critical peer review and provides detailed descriptions, presented as rubrics, of the questions (i.e., dimensions, shown in Table 1 ) upon which such review should focus. Nine rubric dimensions focus on communication to the broader scientific community, and four rubric dimensions focus on the accuracy and appropriateness of the research. These rubric dimensions provide criteria by which the thesis is assessed, and therefore allow BioTAP to be used as an assessment tool as well as a teaching resource ( Reynolds et al. , 2009 ). Full details are available at www.science-writing.org/biotap.html .

Theses assessment protocol dimensions

In previous work, we have used BioTAP to quantitatively assess students’ undergraduate honors theses and explore the relationship between thesis-writing courses (or specific interventions within the courses) and the strength of students’ science reasoning in writing across different science disciplines: biology ( Reynolds and Thompson, 2011 ); chemistry ( Dowd et al. , 2015b ); and economics ( Dowd et al. , 2015a ). We have focused exclusively on the nine dimensions related to reasoning and writing (questions 1–9), as the other four dimensions (questions 10–13) require topic-specific expertise and are intended to be used by the student’s thesis supervisor.

Beyond considering individual dimensions, we have investigated whether meaningful constructs underlie students’ thesis scores. We conducted exploratory factor analysis of students’ theses in biology, economics, and chemistry and found one dominant underlying factor in each discipline; we termed the factor “scientific reasoning in writing” ( Dowd et al. , 2015a , b , 2016 ). That is, each of the nine dimensions could be understood as reflecting, in different ways and to different degrees, the construct of scientific reasoning in writing. The findings indicated evidence of both general and discipline-specific components to scientific reasoning in writing that relate to epistemic beliefs and paradigms, in keeping with broader ideas about science reasoning discussed earlier. Specifically, scientific reasoning in writing is more strongly associated with formulating a compelling argument for the significance of the research in the context of current literature in biology, making meaning regarding the implications of the findings in chemistry, and providing an organizational framework for interpreting the thesis in economics. We suggested that instruction, whether occurring in writing studios or in writing courses to facilitate thesis preparation, should attend to both components.

Research Question and Study Design

The genre of thesis writing combines the pedagogies of writing and inquiry found to foster scientific reasoning ( Reynolds et al. , 2012 ) and critical thinking ( Quitadamo and Kurtz, 2007 ; Quitadamo et al. , 2008 ; Stephenson and Sadler-McKnight, 2016 ). However, there is no empirical evidence regarding the general or domain-specific interrelationships of scientific reasoning and critical-thinking skills, particularly in the rhetorical context of the undergraduate thesis. The BioTAP studies discussed earlier indicate that the rubric-based assessment produces evidence of scientific reasoning in the undergraduate thesis, but it was not designed to foster or measure critical thinking. The current study was undertaken to address the research question: How are students’ critical-thinking skills related to scientific reasoning as reflected in the genre of undergraduate thesis writing in biology? Determining these interrelationships could guide efforts to enhance students’ scientific reasoning and writing skills through focusing instruction on specific critical-thinking skills as well as disciplinary conventions.

To address this research question, we focused on undergraduate thesis writers in biology courses at two institutions, Duke University and the University of Minnesota, and examined the extent to which students’ scientific reasoning in writing, assessed in the undergraduate thesis using BioTAP, corresponds to students’ critical-thinking skills, assessed using the California Critical Thinking Skills Test (CCTST; August, 2016 ).

Study Sample

The study sample was composed of students enrolled in courses designed to scaffold the thesis-writing process in the Department of Biology at Duke University and the College of Biological Sciences at the University of Minnesota. Both courses complement students’ individual work with research advisors. The course is required for thesis writers at the University of Minnesota and optional for writers at Duke University. Not all students are required to complete a thesis, though it is required for students to graduate with honors; at the University of Minnesota, such students are enrolled in an honors program within the college. In total, 28 students were enrolled in the course at Duke University and 44 students were enrolled in the course at the University of Minnesota. Of those students, two students did not consent to participate in the study; additionally, five students did not validly complete the CCTST (i.e., attempted fewer than 60% of items or completed the test in less than 15 minutes). Thus, our overall rate of valid participation is 90%, with 27 students from Duke University and 38 students from the University of Minnesota. We found no statistically significant differences in thesis assessment between students with valid CCTST scores and invalid CCTST scores. Therefore, in most of this study, we focus on the 65 students who consented to participate and for whom we have complete and valid data. Additionally, in asking students for their consent to participate, we allowed them to choose whether to provide or decline access to academic and demographic background data. Of the 65 students who consented to participate, 52 students granted access to such data. Therefore, for additional analyses involving academic and background data, we focus on the 52 students who consented. We note that the 13 students who participated but declined to share additional data performed slightly lower on the CCTST than the 52 others (perhaps suggesting that they differ by other measures, but we cannot determine this with certainty). Among the 52 students, 60% identified as female and 10% identified as being from underrepresented ethnicities.

In both courses, students completed the CCTST online, either in class or on their own, late in the Spring 2016 semester. This is the same assessment that was used in prior studies of critical thinking ( Quitadamo and Kurtz, 2007 ; Quitadamo et al. , 2008 ; Stephenson and Sadler-McKnight, 2016 ). It is “an objective measure of the core reasoning skills needed for reflective decision making concerning what to believe or what to do” ( Insight Assessment, 2016a ). In the test, students are asked to read and consider information as they answer multiple-choice questions. The questions are intended to be appropriate for all users, so there is no expectation of prior disciplinary knowledge in biology (or any other subject). Although actual test items are protected, sample items are available on the Insight Assessment website ( Insight Assessment, 2016b ). We have included one sample item in the Supplemental Material.

The CCTST is based on a consensus definition of critical thinking, measures cognitive and metacognitive skills associated with critical thinking, and has been evaluated for validity and reliability at the college level ( August, 2016 ; Stephenson and Sadler-McKnight, 2016 ). In addition to providing an overall critical-thinking score, the CCTST assesses seven dimensions of critical thinking: analysis, interpretation, inference, evaluation, explanation, induction, and deduction. Scores on each dimension are calculated based on students’ performance on items related to that dimension. Analysis focuses on identifying assumptions, reasons, and claims and examining how they interact to form arguments. Interpretation, related to analysis, focuses on determining the precise meaning and significance of information. Inference focuses on drawing conclusions from reasons and evidence. Evaluation focuses on assessing the credibility of sources of information and claims they make. Explanation, related to evaluation, focuses on describing the evidence, assumptions, or rationale for beliefs and conclusions. Induction focuses on drawing inferences about what is probably true based on evidence. Deduction focuses on drawing conclusions about what must be true when the context completely determines the outcome. These are not independent dimensions; the fact that they are related supports their collective interpretation as critical thinking. Together, the CCTST dimensions provide a basis for evaluating students’ overall strength in using reasoning to form reflective judgments about what to believe or what to do ( August, 2016 ). Each of the seven dimensions and the overall CCTST score are measured on a scale of 0–100, where higher scores indicate superior performance. Scores correspond to superior (86–100), strong (79–85), moderate (70–78), weak (63–69), or not manifested (62 and below) skills.
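The score bands listed above can be expressed as a simple lookup. The following is a minimal sketch, not part of the CCTST itself; the function name `cctst_band` is hypothetical, and the handling of fractional scores (assigning them to the band whose lower bound they meet) is an assumption, since the text lists only whole-number ranges.

```python
def cctst_band(score: float) -> str:
    """Map a 0-100 CCTST score to the qualitative band named in the text.

    Assumption: a fractional score such as 85.5 falls in the band whose
    lower bound it meets ("strong" here); the source gives integer ranges.
    """
    if not 0 <= score <= 100:
        raise ValueError("CCTST scores are reported on a 0-100 scale")
    if score >= 86:
        return "superior"
    if score >= 79:
        return "strong"
    if score >= 70:
        return "moderate"
    if score >= 63:
        return "weak"
    return "not manifested"
```

The same lookup applies to each of the seven dimension scores and to the overall score, because all are reported on the same scale.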

Scientific Reasoning in Writing

At the end of the semester, students’ final, submitted undergraduate theses were assessed using BioTAP, which consists of nine rubric dimensions that focus on communication to the broader scientific community and four additional dimensions that focus on the exhibition of topic-specific expertise ( Reynolds et al. , 2009 ). These dimensions, framed as questions, are displayed in Table 1 .

Student theses were assessed on questions 1–9 of BioTAP using the same procedures described in previous studies ( Reynolds and Thompson, 2011 ; Dowd et al. , 2015a , b ). In this study, six raters were trained in the valid, reliable use of BioTAP rubrics. Each dimension was rated on a five-point scale: 1 indicates the dimension is missing, incomplete, or below acceptable standards; 3 indicates that the dimension is adequate but not exhibiting mastery; and 5 indicates that the dimension is excellent and exhibits mastery (intermediate ratings of 2 and 4 are appropriate when different parts of the thesis make a single category challenging). After training, two raters independently assessed each thesis and then discussed their independent ratings with one another to form a consensus rating. The consensus score is not an average score, but rather an agreed-upon, discussion-based score. On a five-point scale, raters independently assessed dimensions to be within 1 point of each other 82.4% of the time before discussion and formed consensus ratings 100% of the time after discussion.
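The agreement figure reported above (independent ratings within 1 point of each other) can be computed as sketched below. This is an illustration under stated assumptions rather than the procedure used in the study: the function name is hypothetical and the ratings are invented for the example.

```python
def within_one_point_rate(rater_a, rater_b):
    """Fraction of paired ratings that differ by at most 1 point."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty rating lists of equal length")
    close = sum(1 for a, b in zip(rater_a, rater_b) if abs(a - b) <= 1)
    return close / len(rater_a)


# Hypothetical independent ratings (five-point scale) for six rubric dimensions.
ratings_a = [5, 3, 4, 2, 1, 3]
ratings_b = [4, 3, 2, 2, 3, 3]
agreement = within_one_point_rate(ratings_a, ratings_b)  # 4 of 6 pairs agree
```

Note that this measures agreement before discussion; the consensus score described in the text is negotiated by the raters and is not derived from these numbers.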

In this study, we consider both categorical (mastery/nonmastery, where a score of 5 corresponds to mastery) and numerical treatments of individual BioTAP scores to better relate the manifestation of critical thinking in BioTAP assessment to all of the prior studies. For comprehensive/cumulative measures of BioTAP, we focus on the partial sum of questions 1–5, as these questions relate to higher-order scientific reasoning (whereas questions 6–9 relate to mid- and lower-order writing mechanics [ Reynolds et al. , 2009 ]), and the factor scores (i.e., numerical representations of the extent to which each student exhibits the underlying factor), which are calculated from the factor loadings published by Dowd et al. (2016) . We do not focus on questions 6–9 individually in statistical analyses, because we do not expect critical-thinking skills to relate to mid- and lower-order writing skills.
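The two treatments of BioTAP scores described above can be sketched as follows. The names and the example thesis scores are hypothetical. The factor score is omitted because it depends on the loadings published by Dowd et al. (2016), which are not reproduced here.

```python
from typing import Dict, Mapping

HIGHER_ORDER_QUESTIONS = range(1, 6)  # BioTAP questions 1-5
MASTERY_SCORE = 5                     # a rating of 5 corresponds to mastery


def partial_sum(scores: Mapping[int, int]) -> int:
    """Numerical treatment: sum of the ratings on questions 1-5."""
    return sum(scores[q] for q in HIGHER_ORDER_QUESTIONS)


def mastery_flags(scores: Mapping[int, int]) -> Dict[int, bool]:
    """Categorical treatment: mastery/nonmastery for each of questions 1-5."""
    return {q: scores[q] == MASTERY_SCORE for q in HIGHER_ORDER_QUESTIONS}


# Hypothetical consensus ratings for one thesis on questions 1-9.
example_thesis = {1: 5, 2: 4, 3: 3, 4: 5, 5: 4, 6: 3, 7: 4, 8: 5, 9: 4}
example_sum = partial_sum(example_thesis)      # 5 + 4 + 3 + 5 + 4
example_flags = mastery_flags(example_thesis)
```

Questions 6–9 are present in the example data but deliberately ignored by both functions, mirroring the focus on higher-order reasoning.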

The final, submitted thesis reflects the student’s writing, the student’s scientific reasoning, the quality of feedback provided to the student by peers and mentors, and the student’s ability to incorporate that feedback into his or her work. Therefore, our assessment is not the same as an assessment of unpolished, unrevised samples of students’ written work. While one might imagine that such an unpolished sample may be more strongly correlated with critical-thinking skills measured by the CCTST, we argue that the complete, submitted thesis, assessed using BioTAP, is ultimately a more appropriate reflection of how students exhibit science reasoning in the scientific community.

Statistical Analyses

We took several steps to analyze the collected data. First, to provide context for subsequent interpretations, we generated descriptive statistics for the CCTST scores of the participants based on the norms for undergraduate CCTST test takers. To determine the strength of relationships among CCTST dimensions (including overall score) and the BioTAP dimensions, partial-sum score (questions 1–5), and factor score, we calculated Pearson’s correlations for each pair of measures. To examine whether falling on one side of the nonmastery/mastery threshold (as opposed to a linear scale of performance) was related to critical thinking, we grouped BioTAP dimensions into categories (mastery/nonmastery) and conducted Student’s t tests to compare the mean scores of the two groups on each of the seven dimensions and overall score of the CCTST. Finally, for the strongest relationship that emerged, we included additional academic and background variables as covariates in multiple linear-regression analysis to explore questions about how much observed relationships between critical-thinking skills and science reasoning in writing might be explained by variation in these other factors.
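The two core statistics named above can be sketched with the standard library alone. This is a minimal illustration, not the analysis code used in the study: the function names are hypothetical, the t test shown is the classic equal-variance (pooled) form of Student's test, and p values are not computed because that would require a t-distribution routine from a statistics package.

```python
import math


def pearson_r(x, y):
    """Pearson's correlation coefficient between two equal-length samples."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two samples of equal length >= 2")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def student_t(group1, group2):
    """Pooled-variance Student's t statistic and its degrees of freedom."""
    n1, n2 = len(group1), len(group2)
    mean1, mean2 = sum(group1) / n1, sum(group2) / n2
    ss1 = sum((v - mean1) ** 2 for v in group1)
    ss2 = sum((v - mean2) ** 2 for v in group2)
    df = n1 + n2 - 2
    pooled_var = (ss1 + ss2) / df
    t = (mean1 - mean2) / math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return t, df
```

In the design described here, `pearson_r` would pair a CCTST score with a BioTAP measure across students, while `student_t` would compare the CCTST scores of the mastery and nonmastery groups on a given BioTAP dimension.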

Although BioTAP scores represent discrete, ordinal bins, the five-point scale is intended to capture an underlying continuous construct (from inadequate to exhibiting mastery). It has been argued that five categories is an appropriate cutoff for treating ordinal variables as pseudo-continuous ( Rhemtulla et al. , 2012 ), and therefore for using continuous-variable statistical methods (e.g., Pearson’s correlations), as long as the underlying assumption that ordinal scores are linearly distributed is valid. Although we have no way to statistically test this assumption, we interpret adequate scores to be approximately halfway between inadequate and mastery scores, resulting in a linear scale. In part because this assumption is subject to disagreement, we also consider and interpret a categorical (mastery/nonmastery) treatment of BioTAP variables.

We corrected for multiple comparisons using the Holm-Bonferroni method ( Holm, 1979 ). At the most general level, where we consider the single, comprehensive measures for BioTAP (partial-sum and factor score) and the CCTST (overall score), there is no need to correct for multiple comparisons, because the multiple, individual dimensions are collapsed into single dimensions. When we considered individual CCTST dimensions in relation to comprehensive measures for BioTAP, we accounted for seven comparisons; similarly, when we considered individual dimensions of BioTAP in relation to overall CCTST score, we accounted for five comparisons. When all seven CCTST and five BioTAP dimensions were examined individually and without prior knowledge, we accounted for 35 comparisons; such a rigorous threshold is likely to reject weak and moderate relationships, but it is appropriate if there are no specific pre-existing hypotheses. All p values are presented in tables for complete transparency, and we carefully consider the implications of our interpretation of these data in the Discussion section.
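The Holm-Bonferroni step-down procedure can be sketched as follows. The function names are hypothetical and the default significance level of 0.05 is an assumption, though it is consistent with the cutoffs quoted in the text. P values are sorted in ascending order; the k-th smallest is compared with alpha / (m − k + 1), and testing stops at the first p value that exceeds its threshold.

```python
def holm_thresholds(m, alpha=0.05):
    """Thresholds for the smallest, second smallest, ... of m p values."""
    return [alpha / (m - rank) for rank in range(m)]


def holm_bonferroni(p_values, alpha=0.05):
    """Return booleans, in input order: True where the null is rejected."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    rejected = [False] * m
    for rank, idx in enumerate(order):
        if p_values[idx] > alpha / (m - rank):
            break  # this and every larger p value are not significant
        rejected[idx] = True
    return rejected
```

With 35 comparisons, the first two thresholds are 0.05/35 ≈ 0.00143 and 0.05/34 ≈ 0.00147, which is why the cutoff differs slightly depending on where a p value ranks among the comparisons.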

CCTST scores for students in this sample ranged from the 39th to 99th percentile of the general population of undergraduate CCTST test takers (mean percentile = 84.3, median = 85th percentile; Table 2 ); these percentiles reflect overall scores that range from moderate to superior. Scores on individual dimensions and overall scores were sufficiently normal and far enough from the ceiling of the scale to justify subsequent statistical analyses.

Descriptive statistics of CCTST dimensions a

a Scores correspond to superior (86–100), strong (79–85), moderate (70–78), weak (63–69), or not manifested (62 and lower) skills.

The Pearson’s correlations between students’ cumulative scores on BioTAP (the factor score based on loadings published by Dowd et al. , 2016 , and the partial sum of scores on questions 1–5) and students’ overall scores on the CCTST are presented in Table 3 . We found that the partial-sum measure of BioTAP was significantly related to the overall measure of critical thinking ( r = 0.27, p = 0.03), while the BioTAP factor score was marginally related to overall CCTST ( r = 0.24, p = 0.05). When we looked at relationships between comprehensive BioTAP measures and scores for individual dimensions of the CCTST ( Table 3 ), we found significant positive correlations between both the BioTAP partial-sum and factor scores and CCTST inference ( r = 0.45, p < 0.001, and r = 0.41, p < 0.001, respectively). Although some other relationships have p values below 0.05 (e.g., the correlations between BioTAP partial-sum scores and CCTST induction and interpretation scores), they are not significant when we correct for multiple comparisons.

Correlations between dimensions of CCTST and dimensions of BioTAP a

a In each cell, the top number is the correlation, and the bottom, italicized number is the associated p value. Correlations that are statistically significant after correcting for multiple comparisons are shown in bold.

b This is the partial sum of BioTAP scores on questions 1–5.

c This is the factor score calculated from factor loadings published by Dowd et al. (2016) .

When we expanded comparisons to include all 35 potential correlations among individual BioTAP and CCTST dimensions—and, accordingly, corrected for 35 comparisons—we did not find any additional statistically significant relationships. The Pearson’s correlations between students’ scores on each dimension of BioTAP and students’ scores on each dimension of the CCTST range from −0.11 to 0.35 ( Table 3 ); although the relationship between discussion of implications (BioTAP question 5) and inference appears to be relatively large ( r = 0.35), it is not significant ( p = 0.005; the Holm-Bonferroni cutoff is 0.00143). We found no statistically significant relationships between BioTAP questions 6–9 and CCTST dimensions (unpublished data), regardless of whether we correct for multiple comparisons.

The results of Student’s t tests comparing scores on each dimension of the CCTST of students who exhibit mastery with those of students who do not exhibit mastery on each dimension of BioTAP are presented in Table 4 . Focusing first on the overall CCTST scores, we found that the difference between those who exhibit mastery and those who do not in discussing implications of results (BioTAP question 5) is statistically significant ( t = 2.73, p = 0.008, d = 0.71). When we expanded t tests to include all 35 comparisons (and, as above, corrected for 35 comparisons), we found a significant difference in inference scores between students who exhibit mastery on question 5 and students who do not ( t = 3.41, p = 0.0012, d = 0.88), as well as a marginally significant difference in these students’ induction scores ( t = 3.26, p = 0.0018, d = 0.84; the Holm-Bonferroni cutoff is p = 0.00147). Cohen’s d effect sizes, which reveal the strength of the differences for statistically significant relationships, range from 0.71 to 0.88.
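Cohen's d expresses the difference between two group means in units of standard deviation. The sketch below uses the pooled standard deviation, which is a common convention but an assumption here, since the text does not state which variant was used; the function name is hypothetical.

```python
import math


def cohens_d(group1, group2):
    """Cohen's d: mean difference divided by the pooled standard deviation."""
    n1, n2 = len(group1), len(group2)
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least two observations")
    mean1, mean2 = sum(group1) / n1, sum(group2) / n2
    ss1 = sum((v - mean1) ** 2 for v in group1)
    ss2 = sum((v - mean2) ** 2 for v in group2)
    pooled_sd = math.sqrt((ss1 + ss2) / (n1 + n2 - 2))
    return (mean1 - mean2) / pooled_sd
```

Under this convention, d and the pooled-variance t statistic are linked by t = d / sqrt(1/n1 + 1/n2), so for fixed group sizes a larger effect size implies a larger t.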

The t statistics and effect sizes of differences in dimensions of CCTST across dimensions of BioTAP a

a In each cell, the top number is the t statistic for each comparison, and the middle, italicized number is the associated p value. The bottom number is the effect size. Differences that are statistically significant after correcting for multiple comparisons are shown in bold.

Finally, we more closely examined the strongest relationship that we observed, which was between the CCTST dimension of inference and the BioTAP partial-sum composite score (shown in Table 3 ), using multiple regression analysis ( Table 5 ). Focusing on the 52 students for whom we have background information, we looked at the simple relationship between BioTAP and inference (model 1), a robust background model including multiple covariates that one might expect to explain some part of the variation in BioTAP (model 2), and a combined model including all variables (model 3). As model 3 shows, the covariates explain very little variation in BioTAP scores, and the relationship between inference and BioTAP persists even in the presence of all of the covariates.

Partial sum (questions 1–5) of BioTAP scores ( n = 52)

** p < 0.01.

*** p < 0.001.

The aim of this study was to examine the extent to which the various components of scientific reasoning (manifested in writing in the genre of undergraduate thesis and assessed using BioTAP) draw on general and specific critical-thinking skills (assessed using CCTST) and to consider the implications for educational practices. Although science reasoning involves critical-thinking skills, it also relates to conceptual knowledge and the epistemological foundations of science disciplines ( Kuhn et al. , 2008 ). Moreover, science reasoning in writing, captured in students’ undergraduate theses, reflects habits, conventions, and the incorporation of feedback that may alter evidence of individuals’ critical-thinking skills. Our findings, however, provide empirical evidence that cumulative measures of science reasoning in writing are nonetheless related to students’ overall critical-thinking skills ( Table 3 ). The particularly significant roles of inference skills ( Table 3 ) and the discussion of implications of results (BioTAP question 5; Table 4 ) provide a basis for more specific ideas about how these constructs relate to one another and what educational interventions may have the most success in fostering these skills.

Our results build on previous findings. The genre of thesis writing combines pedagogies of writing and inquiry found to foster scientific reasoning ( Reynolds et al. , 2012 ) and critical thinking ( Quitadamo and Kurtz, 2007 ; Quitadamo et al. , 2008 ; Stephenson and Sadler-McKnight, 2016 ). Quitadamo and Kurtz (2007) reported that students who engaged in a laboratory writing component in a general education biology course significantly improved their inference and analysis skills, and Quitadamo and colleagues (2008) found that participation in a community-based inquiry biology course (that included a writing component) was associated with significant gains in students’ inference and evaluation skills. The shared focus on inference is noteworthy, because these prior studies actually differ from the current study; the former considered critical-thinking skills as the primary learning outcome of writing-focused interventions, whereas the latter focused on emergent links between two learning outcomes (science reasoning in writing and critical thinking). In other words, inference skills are impacted by writing as well as manifested in writing.

Inference focuses on drawing conclusions from argument and evidence. According to the consensus definition of critical thinking, the specific skill of inference includes several processes: querying evidence, conjecturing alternatives, and drawing conclusions. All of these activities are central to the independent research at the core of writing an undergraduate thesis. Indeed, a critical part of what we call “science reasoning in writing” might be characterized as a measure of students’ ability to infer and make meaning of information and findings. Because the cumulative BioTAP measures distill underlying similarities and, to an extent, suppress unique aspects of individual dimensions, we argue that it is appropriate to relate inference to scientific reasoning in writing. Even when we control for other potentially relevant background characteristics, the relationship is strong ( Table 5 ).

In taking the complementary view and focusing on BioTAP, when we compared students who exhibit mastery with those who do not, we found that the specific dimension of “discussing the implications of results” (question 5) differentiates students’ performance on several critical-thinking skills. To achieve mastery on this dimension, students must make connections between their results and other published studies and discuss the future directions of the research; in short, they must demonstrate an understanding of the bigger picture. The specific relationship between question 5 and inference is the strongest observed among all individual comparisons. Altogether, perhaps more than any other BioTAP dimension, this aspect of students’ writing provides a clear view of the role of students’ critical-thinking skills (particularly inference and, marginally, induction) in science reasoning.

While inference and discussion of implications emerge as particularly strongly related dimensions in this work, we note that the strongest contribution to “science reasoning in writing in biology,” as determined through exploratory factor analysis, is “argument for the significance of research” (BioTAP question 2, not question 5; Dowd et al., 2016). Question 2 is not clearly related to critical-thinking skills. These findings are not contradictory, but rather suggest that the epistemological and disciplinary-specific aspects of science reasoning that emerge in writing through BioTAP are not completely aligned with aspects related to critical thinking. In other words, science reasoning in writing is not simply a proxy for those critical-thinking skills that play a role in science reasoning.

In a similar vein, the content-related, epistemological aspects of science reasoning, as well as the conventions associated with writing the undergraduate thesis (including feedback from peers and revision), may explain why some science reasoning dimensions and some critical-thinking skills show no significant relationship where one might intuitively be expected (e.g., BioTAP question 2, which relates to making an argument, and the critical-thinking skill of argument). It is possible that an individual’s critical-thinking skills explain some variation in a particular BioTAP dimension, but that other aspects of science reasoning and practice exert much stronger influence. Although these relationships do not emerge in our analyses, the lack of a significant correlation does not mean that there is definitively no correlation. Correcting for multiple comparisons suppresses type 1 error at the expense of exacerbating type 2 error, which, combined with the limited sample size, constrains statistical power and makes weak relationships more difficult to detect. Ultimately, though, the relationships that do emerge highlight places where individuals’ distinct critical-thinking skills emerge most coherently in thesis assessment, which is why we are particularly interested in unpacking those relationships.
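The trade-off between type 1 and type 2 error described above can be illustrated with a minimal sketch. It assumes a step-down correction in the style of Holm (1979), which appears in the reference list. The function name `holm_reject` and the p-values are hypothetical illustrations, not the study's data or analysis code.

```python
from typing import List


def holm_reject(p_values: List[float], alpha: float = 0.05) -> List[bool]:
    """Return, for each p-value, whether a Holm step-down procedure rejects it.

    Hypothetical helper for illustration only.
    """
    m = len(p_values)
    # Indices of the p-values, ordered from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for rank, idx in enumerate(order):
        # The k-th smallest p-value (rank = k - 1) is compared with alpha / (m - k + 1).
        threshold = alpha / (m - rank)
        if p_values[idx] <= threshold:
            reject[idx] = True
        else:
            # Once one test fails, all larger p-values are retained as well.
            break
    return reject


# Made-up p-values for five comparisons (not taken from the study).
p_values = [0.001, 0.012, 0.020, 0.030, 0.040]
uncorrected = [p <= 0.05 for p in p_values]
corrected = holm_reject(p_values)
print("significant without correction:", sum(uncorrected))
print("significant with Holm correction:", sum(corrected))
```

In this made-up example, all five comparisons fall below 0.05 before correction, but only the two smallest p-values survive it. Relationships of modest strength can therefore go undetected once the family-wise error rate is controlled, especially with a small sample.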

We recognize that, because only honors students submit theses at these institutions, this study sample is composed of a selective subset of the larger population of biology majors. Although this is an inherent limitation of focusing on thesis writing, links between our findings and results of other studies (with different populations) suggest that observed relationships may occur more broadly. The goal of improved science reasoning and critical thinking is shared among all biology majors, particularly those engaged in capstone research experiences. So while the implications of this work most directly apply to honors thesis writers, we provisionally suggest that all students could benefit from further study of these implications.

There are several important implications of this study for science education practices. Students’ inference skills relate to the understanding and effective application of scientific content. The fact that we find no statistically significant relationships between BioTAP questions 6–9 and CCTST dimensions suggests that such mid- to lower-order elements of BioTAP (Reynolds et al., 2009), which tend to be more structural in nature, do not focus on aspects of the finished thesis that draw strongly on critical thinking. In keeping with prior analyses (Reynolds and Thompson, 2011; Dowd et al., 2016), these findings further reinforce the notion that disciplinary instructors, who are most capable of teaching and assessing scientific reasoning and perhaps least interested in the more mechanical aspects of writing, may nonetheless be best suited to effectively model and assess students’ writing.

The goal of the thesis writing course at both Duke University and the University of Minnesota is not merely to improve thesis scores but to move students’ writing into the category of mastery across BioTAP dimensions. Recognizing that students with differing critical-thinking skills (particularly inference) are more or less likely to achieve mastery in the undergraduate thesis (particularly in discussing implications [question 5]) is important for developing and testing targeted pedagogical interventions to improve learning outcomes for all students.

The competencies characterized by the Vision and Change in Undergraduate Biology Education Initiative provide a general framework for recognizing that science reasoning and critical-thinking skills play key roles in major learning outcomes of science education. Our findings highlight places where science reasoning–related competencies (like “understanding the process of science”) connect to critical-thinking skills and places where critical thinking–related competencies might be manifested in scientific products (such as the ability to discuss implications in scientific writing). We encourage broader efforts to build empirical connections between competencies and pedagogical practices to further improve science education.

One specific implication of this work for science education is to focus on providing opportunities for students to develop their critical-thinking skills (particularly inference). Of course, as this correlational study is not designed to test causality, we do not claim that enhancing students’ inference skills will improve science reasoning in writing. However, as prior work shows that science writing activities influence students’ inference skills (Quitadamo and Kurtz, 2007; Quitadamo et al., 2008), there is reason to test such a hypothesis. Nevertheless, the focus must extend beyond inference as an isolated skill; rather, it is important to relate inference to the foundations of the scientific method (Miri et al., 2007) in terms of the epistemological appreciation of the functions and coordination of evidence (Kuhn and Dean, 2004; Zeineddin and Abd-El-Khalick, 2010; Ding et al., 2016) and disciplinary paradigms of truth and justification (Moshman, 2015).

Although this study is limited to the domain of biology at two institutions with a relatively small number of students, the findings represent a foundational step in the direction of achieving success with more integrated learning outcomes. We hope this work will spur greater interest in empirically grounding discussions of the constructs of scientific reasoning and critical-thinking skills.

This study contributes to the efforts to improve science education, for both majors and nonmajors, through an empirically driven analysis of the relationships between scientific reasoning reflected in the genre of thesis writing and critical-thinking skills. This work is rooted in the usefulness of BioTAP as a method 1) to facilitate communication and learning and 2) to assess disciplinary-specific and general dimensions of science reasoning. The findings support the important role of the critical-thinking skill of inference in scientific reasoning in writing, while also highlighting ways in which other aspects of science reasoning (epistemological considerations, writing conventions, etc.) are not significantly related to critical thinking. Future research into the impact of interventions focused on specific critical-thinking skills (i.e., inference) for improved science reasoning in writing will build on this work and its implications for science education.

Acknowledgments

We acknowledge the contributions of Kelaine Haas and Alexander Motten to the implementation and collection of data. We also thank Mine Çetinkaya-Rundel for her insights regarding our statistical analyses. This research was funded by National Science Foundation award DUE-1525602.

- American Association for the Advancement of Science. (2011). Vision and change in undergraduate biology education: A call to action. Washington, DC. Retrieved September 26, 2017, from https://visionandchange.org/files/2013/11/aaas-VISchange-web1113.pdf

- August D. (2016). California Critical Thinking Skills Test user manual and resource guide. San Jose: Insight Assessment/California Academic Press.

- Beyer C. H., Taylor E., Gillmore G. M. (2013). Inside the undergraduate teaching experience: The University of Washington’s growth in faculty teaching study. Albany, NY: SUNY Press.

- Bissell A. N., Lemons P. P. (2006). A new method for assessing critical thinking in the classroom. BioScience, (1), 66–72. https://doi.org/10.1641/0006-3568(2006)056[0066:ANMFAC]2.0.CO;2

- Blattner N. H., Frazier C. L. (2002). Developing a performance-based assessment of students’ critical thinking skills. Assessing Writing, (1), 47–64.

- Clase K. L., Gundlach E., Pelaez N. J. (2010). Calibrated peer review for computer-assisted learning of biological research competencies. Biochemistry and Molecular Biology Education, (5), 290–295.

- Condon W., Kelly-Riley D. (2004). Assessing and teaching what we value: The relationship between college-level writing and critical thinking abilities. Assessing Writing, (1), 56–75. https://doi.org/10.1016/j.asw.2004.01.003

- Ding L., Wei X., Liu X. (2016). Variations in university students’ scientific reasoning skills across majors, years, and types of institutions. Research in Science Education, (5), 613–632. https://doi.org/10.1007/s11165-015-9473-y

- Dowd J. E., Connolly M. P., Thompson R. J., Jr., Reynolds J. A. (2015a). Improved reasoning in undergraduate writing through structured workshops. Journal of Economic Education, (1), 14–27. https://doi.org/10.1080/00220485.2014.978924

- Dowd J. E., Roy C. P., Thompson R. J., Jr., Reynolds J. A. (2015b). “On course” for supporting expanded participation and improving scientific reasoning in undergraduate thesis writing. Journal of Chemical Education, (1), 39–45. https://doi.org/10.1021/ed500298r

- Dowd J. E., Thompson R. J., Jr., Reynolds J. A. (2016). Quantitative genre analysis of undergraduate theses: Uncovering different ways of writing and thinking in science disciplines. WAC Journal, 36–51.

- Facione P. A. (1990). Critical thinking: a statement of expert consensus for purposes of educational assessment and instruction. Research findings and recommendations. Newark, DE: American Philosophical Association. Retrieved September 26, 2017, from https://philpapers.org/archive/FACCTA.pdf

- Gerdeman R. D., Russell A. A., Worden K. J. (2007). Web-based student writing and reviewing in a large biology lecture course. Journal of College Science Teaching, (5), 46–52.

- Greenhoot A. F., Semb G., Colombo J., Schreiber T. (2004). Prior beliefs and methodological concepts in scientific reasoning. Applied Cognitive Psychology, (2), 203–221. https://doi.org/10.1002/acp.959

- Haaga D. A. F. (1993). Peer review of term papers in graduate psychology courses. Teaching of Psychology, (1), 28–32. https://doi.org/10.1207/s15328023top2001_5

- Halonen J. S., Bosack T., Clay S., McCarthy M., Dunn D. S., Hill G. W., Whitlock K. (2003). A rubric for learning, teaching, and assessing scientific inquiry in psychology. Teaching of Psychology, (3), 196–208. https://doi.org/10.1207/S15328023TOP3003_01

- Hand B., Keys C. W. (1999). Inquiry investigation. Science Teacher, (4), 27–29.

- Holm S. (1979). A simple sequentially rejective multiple test procedure. Scandinavian Journal of Statistics, (2), 65–70.

- Holyoak K. J., Morrison R. G. (2005). The Cambridge handbook of thinking and reasoning. New York: Cambridge University Press.

- Insight Assessment. (2016a). California Critical Thinking Skills Test (CCTST). Retrieved September 26, 2017, from www.insightassessment.com/Products/Products-Summary/Critical-Thinking-Skills-Tests/California-Critical-Thinking-Skills-Test-CCTST

- Insight Assessment. (2016b). Sample thinking skills questions. Retrieved September 26, 2017, from www.insightassessment.com/Resources/Teaching-Training-and-Learning-Tools/node_1487

- Kelly G. J., Takao A. (2002). Epistemic levels in argument: An analysis of university oceanography students’ use of evidence in writing. Science Education, (3), 314–342. https://doi.org/10.1002/sce.10024

- Kuhn D., Dean D., Jr. (2004). Connecting scientific reasoning and causal inference. Journal of Cognition and Development, (2), 261–288. https://doi.org/10.1207/s15327647jcd0502_5

- Kuhn D., Iordanou K., Pease M., Wirkala C. (2008). Beyond control of variables: What needs to develop to achieve skilled scientific thinking? Cognitive Development, (4), 435–451. https://doi.org/10.1016/j.cogdev.2008.09.006