- HCA Healthc J Med

- v.1(2); 2020

- PMC10324782

Introduction to Research Statistical Analysis: An Overview of the Basics

Christian Vandever

1 HCA Healthcare Graduate Medical Education

Description

This article covers many statistical ideas essential to research statistical analysis. Sample size is explained through the concepts of statistical significance level and power. Variable types and definitions are included to clarify necessities for how the analysis will be interpreted. Categorical and quantitative variable types are defined, as well as response and predictor variables. Statistical tests described include t-tests, ANOVA and chi-square tests. Multiple regression is also explored for both logistic and linear regression. Finally, the most common statistics produced by these methods are explored.

Introduction

Statistical analysis is necessary for any research project seeking to make quantitative conclusions. The following is a primer for research-based statistical analysis. It is intended to be a high-level overview of appropriate statistical testing, while not diving too deep into any specific methodology. Some of the information is more applicable to retrospective projects, where analysis is performed on data that has already been collected, but most of it will be suitable to any type of research. This primer will help the reader understand research results in coordination with a statistician, not to perform the actual analysis. Analysis is commonly performed using statistical programming software such as R, SAS or SPSS. These allow for analysis to be replicated while minimizing the risk for an error. Resources are listed later for those working on analysis without a statistician.

After coming up with a hypothesis for a study, including any variables to be used, one of the first steps is to think about the patient population to which the question applies. Results are only relevant to the population that the underlying data represent. Since it is impractical to include everyone with a certain condition, a subset of the population of interest should be taken. This subset should be large enough to have power, which means there is enough data to detect a true effect as statistically significant while accurately reflecting the study’s population.

The first statistics of interest are related to significance level and power, alpha and beta. Alpha (α) is the significance level and probability of a type I error, the rejection of the null hypothesis when it is true. The null hypothesis is generally that there is no difference between the groups compared. A type I error is also known as a false positive. An example would be an analysis that finds one medication statistically better than another, when in reality there is no difference in efficacy between the two. Beta (β) is the probability of a type II error, the failure to reject the null hypothesis when it is actually false. A type II error is also known as a false negative. This occurs when the analysis finds there is no difference in two medications when in reality one works better than the other. Power is defined as 1-β and should be calculated prior to running any sort of statistical testing. Ideally, alpha should be as small as possible while power should be as large as possible. Power generally increases with a larger sample size, but so does cost and the effect of any bias in the study design. Additionally, as the sample size gets bigger, the chance for a statistically significant result goes up even though these results can be small differences that do not matter practically. Power calculators include the magnitude of the effect in order to combat the potential for exaggeration and only give significant results that have an actual impact. The calculators take inputs like the mean, effect size and desired power, and output the required minimum sample size for analysis. Effect size is calculated using statistical information on the variables of interest. If that information is not available, most tests have commonly used values for small, medium or large effect sizes.
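As a rough illustration of what a power calculator does, the sketch below estimates the sample size per group for a two-sided comparison of two means. It is a minimal sketch under stated assumptions, not the method of any particular calculator: it uses the normal approximation n = 2((z_{1-α/2} + z_{1-β}) / d)², where d is a standardized effect size, and the values 0.2, 0.5 and 0.8 are Cohen's conventional small, medium and large effect sizes, which the article does not name. The function name `sample_size_per_group` is hypothetical.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate patients needed per group to compare two means (two-sided).

    effect_size is the standardized mean difference (Cohen's d).
    Normal approximation: n = 2 * ((z_{1-alpha/2} + z_{power}) / d) ** 2.
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # cutoff tied to the type I error rate
    z_power = NormalDist().inv_cdf(power)          # quantile tied to power = 1 - beta
    return ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)


# Assumed conventional effect sizes (not taken from the article)
for label, d in [("small", 0.2), ("medium", 0.5), ("large", 0.8)]:
    print(label, d, sample_size_per_group(d))
```

Smaller effects or higher desired power push the required sample size up, which matches the trade-off described above. A calculation based on the t-distribution gives slightly larger numbers than this approximation.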

When the desired patient population is decided, the next step is to define the variables previously chosen to be included. Variables come in different types that determine which statistical methods are appropriate and useful. One way variables can be split is into categorical and quantitative variables. ( Table 1 ) Categorical variables place patients into groups, such as gender, race and smoking status. Quantitative variables measure or count some quantity of interest. Common quantitative variables in research include age and weight. An important note is that there can often be a choice for whether to treat a variable as quantitative or categorical. For example, in a study looking at body mass index (BMI), BMI could be defined as a quantitative variable or as a categorical variable, with each patient’s BMI listed as a category (underweight, normal, overweight, and obese) rather than the discrete value. The decision whether a variable is quantitative or categorical will affect what conclusions can be made when interpreting results from statistical tests. Keep in mind that since quantitative variables are treated on a continuous scale it would be inappropriate to transform a variable like which medication was given into a quantitative variable with values 1, 2 and 3.
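The BMI example can be sketched in a few lines. This is only an illustration: the cutoffs 18.5, 25 and 30 are the commonly used adult BMI thresholds and are an assumption here, since the article does not state which cutoffs it has in mind, and the function name `bmi_category` is hypothetical.

```python
def bmi_category(bmi):
    """Map a quantitative BMI value onto one of four categories.

    Cutoffs (18.5, 25, 30) are assumed, not taken from the article.
    """
    if bmi < 18.5:
        return "underweight"
    if bmi < 25:
        return "normal"
    if bmi < 30:
        return "overweight"
    return "obese"


patient_bmis = [17.9, 22.4, 27.1, 33.0]  # made-up values for illustration
print([bmi_category(b) for b in patient_bmis])
```

The categorical version discards the distance between values: 25.1 and 29.9 land in the same group, which is why the choice changes what conclusions the later tests can support.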

Categorical vs. Quantitative Variables

Both of these types of variables can also be split into response and predictor variables. ( Table 2 ) Predictor variables are explanatory, or independent, variables that help explain changes in a response variable. Conversely, response variables are outcome, or dependent, variables whose changes can be partially explained by the predictor variables.

Response vs. Predictor Variables

Choosing the correct statistical test depends on the types of variables defined and the question being answered. The appropriate test is determined by the variables being compared. Some common statistical tests include t-tests, ANOVA and chi-square tests.

T-tests compare whether there are differences in a quantitative variable between two values of a categorical variable. For example, a t-test could be useful to compare the length of stay for knee replacement surgery patients between those that took apixaban and those that took rivaroxaban. A t-test could examine whether there is a statistically significant difference in the length of stay between the two groups. The t-test will output a p-value, a number between zero and one, which represents the probability of observing groups at least as different as those in the data if there were actually no difference between them. A value closer to zero indicates stronger statistical evidence of a difference, in this case for length of stay, than a value closer to one. Prior to collecting the data, set a significance level, the previously defined alpha. Alpha is typically set at 0.05, but is commonly reduced in order to limit the chance of a type I error, or false positive. Going back to the example above, if alpha is set at 0.05 and the analysis gives a p-value of 0.039, then a statistically significant difference in length of stay is observed between apixaban and rivaroxaban patients. If the analysis gives a p-value of 0.91, then there was no statistical evidence of a difference in length of stay between the two medications. Other statistical summaries or methods examine how big that difference might be. These other summaries are known as post-hoc analysis since they are performed after the original test to provide additional context to the results.
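To make the meaning of a p-value concrete, the sketch below uses a permutation test rather than a t-test. This is a deliberate substitution: a permutation test answers the same question (how often would groups this different arise if the medication labels did not matter?) and needs only the standard library. The length-of-stay values are invented for illustration, and the function name `permutation_p_value` is hypothetical.

```python
import random


def permutation_p_value(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided permutation p-value for a difference in group means."""
    rng = random.Random(seed)
    n_a = len(group_a)
    observed = abs(sum(group_a) / n_a - sum(group_b) / len(group_b))
    pooled = list(group_a) + list(group_b)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # reassign group labels at random
        mean_a = sum(pooled[:n_a]) / n_a
        mean_b = sum(pooled[n_a:]) / (len(pooled) - n_a)
        if abs(mean_a - mean_b) >= observed - 1e-12:
            at_least_as_extreme += 1
    # The +1 terms count the observed labeling itself, so p is never exactly 0
    return (at_least_as_extreme + 1) / (n_permutations + 1)


# Made-up lengths of stay in days, for illustration only
apixaban = [2.1, 2.4, 2.2, 2.8, 2.5, 2.3, 2.6, 2.7]
rivaroxaban = [3.9, 4.2, 4.0, 4.5, 4.1, 4.4, 4.3, 3.8]

alpha = 0.05
p = permutation_p_value(apixaban, rivaroxaban)
print("statistically significant" if p < alpha else "no statistical evidence of a difference")
```

In practice a t-test from a statistics package would normally be used, as the article describes; the comparison of the p-value against the pre-set alpha works the same way.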

Analysis of variance, or ANOVA, tests can observe mean differences in a quantitative variable between values of a categorical variable, typically with three or more values to distinguish from a t-test. ANOVA could add patients given dabigatran to the previous population and evaluate whether the length of stay was significantly different across the three medications. If the p-value is lower than the designated significance level then the hypothesis that length of stay was the same across the three medications is rejected. Summaries and post-hoc tests also could be performed to look at the differences between length of stay and which individual medications may have observed statistically significant differences in length of stay from the other medications. A chi-square test examines the association between two categorical variables. An example would be to consider whether the rate of having a post-operative bleed is the same across patients provided with apixaban, rivaroxaban and dabigatran. A chi-square test can compute a p-value determining whether the bleeding rates were significantly different or not. Post-hoc tests could then give the bleeding rate for each medication, as well as a breakdown as to which specific medications may have a significantly different bleeding rate from each other.
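The chi-square example (bleeding rate across three medications) can be sketched as follows. This is a minimal illustration limited to a 3x2 table, where the test has 2 degrees of freedom and the p-value has the closed form exp(-statistic / 2); other table sizes need a general chi-square distribution function from a statistics package. The counts are invented, and the function name `chi_square_test_3x2` is hypothetical.

```python
from math import exp


def chi_square_test_3x2(table):
    """Pearson chi-square test for a 3x2 table of counts.

    table: three rows (one per medication) of [bleed, no_bleed] counts.
    Returns (statistic, p_value). Valid only for df = (3-1)*(2-1) = 2.
    """
    if len(table) != 3 or any(len(row) != 2 for row in table):
        raise ValueError("expected a table with 3 rows and 2 columns")
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    if 0 in row_totals or 0 in col_totals:
        raise ValueError("every row and column needs at least one observation")
    total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Count expected if bleeding were unrelated to medication
            expected = row_totals[i] * col_totals[j] / total
            statistic += (observed - expected) ** 2 / expected
    p_value = exp(-statistic / 2)  # chi-square upper tail for 2 degrees of freedom
    return statistic, p_value


# Made-up counts: [post-operative bleed, no bleed] per medication
counts = [[5, 95], [10, 90], [30, 70]]  # apixaban, rivaroxaban, dabigatran
statistic, p_value = chi_square_test_3x2(counts)
print(round(statistic, 2), p_value < 0.05)
```

A significant overall result says only that the rates are not all equal; the post-hoc comparisons mentioned above are still needed to see which medications differ.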

A slightly more advanced way of examining a question can come through multiple regression. Regression allows more predictor variables to be analyzed and can act as a control when looking at associations between variables. Common control variables are age, sex and any comorbidities likely to affect the outcome variable that are not closely related to the other explanatory variables. Control variables can be especially important in reducing the effect of bias in a retrospective population. Since retrospective data was not built with the research question in mind, it is important to eliminate threats to the validity of the analysis. Testing that controls for confounding variables, such as regression, is often more valuable with retrospective data because it can ease these concerns. The two main types of regression are linear and logistic. Linear regression is used to predict differences in a quantitative, continuous response variable, such as length of stay. Logistic regression predicts differences in a dichotomous, categorical response variable, such as 90-day readmission. So whether the outcome variable is categorical or quantitative, regression can be appropriate. An example for each of these types could be found in two similar cases. For both examples define the predictor variables as age, gender and anticoagulant usage. In the first, use the predictor variables in a linear regression to evaluate their individual effects on length of stay, a quantitative variable. For the second, use the same predictor variables in a logistic regression to evaluate their individual effects on whether the patient had a 90-day readmission, a dichotomous categorical variable. Analysis can compute a p-value for each included predictor variable to determine whether they are significantly associated. 
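The idea of a control variable can be illustrated with a small ordinary least squares fit. The sketch below is a bare-bones solver for the normal equations written with the standard library only; it returns coefficients but not the p-values a statistical package would report. The data are invented and built without noise from length of stay = 1.0 + 0.05 × age + 0.5 × medication indicator, so the fit should recover those numbers. The function name `fit_linear_regression` is hypothetical.

```python
def fit_linear_regression(predictor_rows, response):
    """Ordinary least squares via the normal equations (X'X) b = X'y.

    Returns [intercept, coefficient_1, coefficient_2, ...].
    """
    X = [[1.0, *row] for row in predictor_rows]  # prepend an intercept column
    k = len(X[0])
    # Augmented matrix [X'X | X'y]
    A = [
        [sum(x[i] * x[j] for x in X) for j in range(k)]
        + [sum(x[i] * y for x, y in zip(X, response))]
        for i in range(k)
    ]
    # Gauss-Jordan elimination with partial pivoting
    for col in range(k):
        pivot_row = max(range(col, k), key=lambda r: abs(A[r][col]))
        if abs(A[pivot_row][col]) < 1e-12:
            raise ValueError("predictors are collinear; coefficients are not identifiable")
        A[col], A[pivot_row] = A[pivot_row], A[col]
        pivot = A[col][col]
        A[col] = [value / pivot for value in A[col]]
        for r in range(k):
            if r != col:
                factor = A[r][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][k] for i in range(k)]


# Made-up patients: (age, 1 if rivaroxaban else 0)
patients = [(50, 0), (60, 1), (70, 0), (80, 1), (65, 0), (55, 1)]
length_of_stay = [1.0 + 0.05 * age + 0.5 * drug for age, drug in patients]

intercept, per_year_of_age, medication_effect = fit_linear_regression(patients, length_of_stay)
print(round(intercept, 3), round(per_year_of_age, 3), round(medication_effect, 3))
```

The medication coefficient is the difference in length of stay between the two medications for patients of the same age, which is what "controlling for age" means. Logistic regression follows the same pattern with a dichotomous response but needs an iterative fit.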
The statistical tests in this article generate an associated test statistic, which determines the probability that the results could be obtained if there were no association between the compared variables. These results often come with coefficients, which give the degree of the association and the degree to which one variable changes with another. Most tests, including all listed in this article, also have confidence intervals, which give a range of plausible values for the estimated association at a specified level of confidence. Even if these tests do not give statistically significant results, the results are still important. Not reporting statistically insignificant findings creates a bias in research. Analyses can be repeated enough times that statistically significant results are eventually reached, even though there is no true effect. In some cases with very large sample sizes, p-values will almost always be significant. In this case the effect size is critical, as even the smallest, meaningless differences can be found to be statistically significant.
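The point about very large samples can be checked numerically. The sketch below holds a tiny standardized difference fixed and shows how the p-value of a simple two-group z-test shrinks as the sample grows. It assumes equal group sizes and a known common standard deviation, which is a simplification chosen for illustration; the function name `two_sided_p_value` and the effect size 0.02 are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_sided_p_value(standardized_difference, n_per_group):
    """p-value of a two-group z-test with equal group sizes and unit variance."""
    # Standard error of the difference in means is sqrt(2 / n)
    z = standardized_difference * sqrt(n_per_group / 2)
    return 2 * (1 - NormalDist().cdf(abs(z)))


tiny_effect = 0.02  # assumed: a difference of 2% of a standard deviation
for n in (100, 10_000, 1_000_000):
    print(n, two_sided_p_value(tiny_effect, n))
```

The effect is identical in every row; only the sample size changes. This is why the effect size, and not the p-value alone, should be reported and judged for practical relevance.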

These variables and tests are just some things to keep in mind before, during and after the analysis process in order to make sure that the statistical reports are supporting the questions being answered. The patient population, types of variables and statistical tests are all important things to consider in the process of statistical analysis. Any results are only as useful as the process used to obtain them. This primer can be used as a reference to help ensure appropriate statistical analysis.

Funding Statement

This research was supported (in whole or in part) by HCA Healthcare and/or an HCA Healthcare affiliated entity.

Conflicts of Interest

The author declares he has no conflicts of interest.

Christian Vandever is an employee of HCA Healthcare Graduate Medical Education, an organization affiliated with the journal’s publisher.

The views expressed in this publication represent those of the author(s) and do not necessarily represent the official views of HCA Healthcare or any of its affiliated entities.

Statistics articles from across Nature Portfolio

Statistics is the application of mathematical concepts to understanding and analysing large collections of data. A central tenet of statistics is to describe the variations in a data set or population using probability distributions. This analysis aids understanding of what underlies these variations and enables predictions of future changes.

Latest Research and Reviews

Medical history predicts phenome-wide disease onset and enables the rapid response to emerging health threats

Preventive interventions often require strategies to identify high-risk individuals. Here, the authors illustrate the potential utility of medical history in predicting the onset risk for thousands of diseases across clinical specialties including COVID-19.

- Jakob Steinfeldt

- Benjamin Wild

- Roland Eils

Unsupervised detection of large-scale weather patterns in the northern hemisphere via Markov State Modelling: from blockings to teleconnections

- Sebastian Springer

- Alessandro Laio

- Valerio Lucarini

Modified correlated measurement errors model for estimation of population mean utilizing auxiliary information

- Housila P. Singh

Employing machine learning for enhanced abdominal fat prediction in cavitation post-treatment

- Doaa A. Abdel Hady

- Omar M. Mabrouk

- Tarek Abd El-Hafeez

Unexpected HCHO transnational transport: influence on the temporal and spatial distribution of HCHO in Tibet from 2013 to 2021 based on satellite

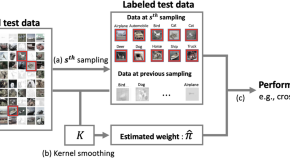

Towards optimal model evaluation: enhancing active testing with actively improved estimators

- JooChul Lee

- Likhitha Kolla

News and Comment

Efficient learning of many-body systems

The Hamiltonian describing a quantum many-body system can be learned using measurements in thermal equilibrium. Now, a learning algorithm applicable to many natural systems has been found that requires exponentially fewer measurements than existing methods.

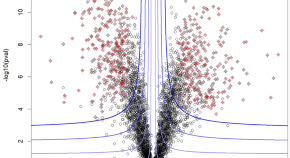

Fudging the volcano-plot without dredging the data

Selecting omic biomarkers using both their effect size and their differential status significance ( i.e. , selecting the “volcano-plot outer spray”) has long been equally biologically relevant and statistically troublesome. However, recent proposals are paving the way to resolving this dilemma.

- Thomas Burger

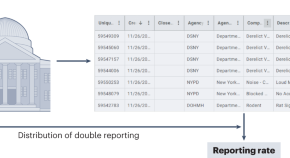

Disentangling truth from bias in naturally occurring data

A technique that leverages duplicate records in crowdsourcing data could help to mitigate the effects of biases in research and services that are dependent on government records.

- Daniel T. O’Brien

Sciama’s argument on life in a random universe and distinguishing apples from oranges

Dennis Sciama has argued that the existence of life depends on many quantities—the fundamental constants—so in a random universe life should be highly unlikely. However, without full knowledge of these constants, his argument implies a universe that could appear to be ‘intelligently designed’.

- Zhi-Wei Wang

- Samuel L. Braunstein

A method for generating constrained surrogate power laws

A paper in Physical Review X presents a method for numerically generating data sequences that are as likely to be observed under a power law as a given observed dataset.

- Zoe Budrikis

Connected climate tipping elements

Tipping elements are regions that are vulnerable to climate change and capable of sudden drastic changes. Now research establishes long-distance linkages between tipping elements, with the network analysis offering insights into their interactions on a global scale.

- Valerie N. Livina

Data Science: the impact of statistics

- Regular Paper

- Open access

- Published: 16 February 2018

- Volume 6, pages 189–194 (2018)

- Claus Weihs 1 &

- Katja Ickstadt 2

In this paper, we substantiate our premise that statistics is one of the most important disciplines to provide tools and methods to find structure in and to give deeper insight into data, and the most important discipline to analyze and quantify uncertainty. We give an overview of different proposed structures of Data Science and address the impact of statistics on such steps as data acquisition and enrichment, data exploration, data analysis and modeling, validation and representation and reporting. Also, we indicate fallacies when neglecting statistical reasoning.

1 Introduction and premise

Data Science as a scientific discipline is influenced by informatics, computer science, mathematics, operations research, and statistics as well as the applied sciences.

In 1996, for the first time, the term Data Science was included in the title of a statistical conference (International Federation of Classification Societies (IFCS) “Data Science, classification, and related methods”) [ 37 ]. Even though the term was coined by statisticians, in the public image of Data Science, the importance of computer science and business applications is often much more stressed, in particular in the era of Big Data.

Already in the 1970s, the ideas of John Tukey [ 43 ] changed the viewpoint of statistics from a purely mathematical setting, e.g., statistical testing, to deriving hypotheses from data (exploratory setting), i.e., trying to understand the data before hypothesizing.

Another root of Data Science is Knowledge Discovery in Databases (KDD) [ 36 ] with its sub-topic Data Mining . KDD already brings together many different approaches to knowledge discovery, including inductive learning, (Bayesian) statistics, query optimization, expert systems, information theory, and fuzzy sets. Thus, KDD is a big building block for fostering interaction between different fields for the overall goal of identifying knowledge in data.

Nowadays, these ideas are combined in the notion of Data Science, leading to different definitions. One of the most comprehensive definitions of Data Science was recently given by Cao as the formula [ 12 ]:

data science = (statistics + informatics + computing + communication + sociology + management) | (data + environment + thinking) .

In this formula, sociology stands for the social aspects and | (data + environment + thinking) means that all the mentioned sciences act on the basis of data, the environment and the so-called data-to-knowledge-to-wisdom thinking.

A recent, comprehensive overview of Data Science provided by Donoho in 2015 [ 16 ] focuses on the evolution of Data Science from statistics. Indeed, as early as 1997, there was an even more radical view suggesting that statistics be renamed Data Science [ 50 ]. And in 2015, a number of ASA leaders [ 17 ] released a statement about the role of statistics in Data Science, saying that “statistics and machine learning play a central role in data science.”

In our view, statistical methods are crucial in most fundamental steps of Data Science. Hence, the premise of our contribution is:

Statistics is one of the most important disciplines to provide tools and methods to find structure in and to give deeper insight into data, and the most important discipline to analyze and quantify uncertainty.

This paper aims at addressing the major impact of statistics on the most important steps in Data Science.

2 Steps in data science

One of the forerunners of Data Science from a structural perspective is the famous CRISP-DM (Cross Industry Standard Process for Data Mining), which is organized in six main steps: Business Understanding, Data Understanding, Data Preparation, Modeling, Evaluation, and Deployment [ 10 ], see Table 1, left column. Ideas like CRISP-DM are now fundamental for applied statistics.

In our view, the main steps in Data Science have been inspired by CRISP-DM and have evolved, leading to, e.g., our definition of Data Science as a sequence of the following steps: Data Acquisition and Enrichment, Data Storage and Access, Data Exploration, Data Analysis and Modeling, Optimization of Algorithms, Model Validation and Selection, Representation and Reporting of Results, and Business Deployment of Results. Note that topics in small capitals indicate steps where statistics is less involved, cp. Table 1, right column.

Usually, these steps are not just conducted once but are iterated in a cyclic loop. In addition, it is common to alternate between two or more steps. This holds especially for the steps Data Acquisition and Enrichment, Data Exploration, and Statistical Data Analysis, as well as for Statistical Data Analysis and Modeling and Model Validation and Selection.

Table 1 compares different definitions of steps in Data Science. The relationship of terms is indicated by horizontal blocks. The missing step Data Acquisition and Enrichment in CRISP-DM indicates that that scheme deals with observational data only. Moreover, in our proposal, the steps Data Storage and Access and Optimization of Algorithms are added to CRISP-DM, where statistics is less involved.

The list of steps for Data Science may even be enlarged, see, e.g., Cao in [ 12 ], Figure 6, cp. also Table 1 , middle column, for the following recent list: Domain-specific Data Applications and Problems, Data Storage and Management, Data Quality Enhancement, Data Modeling and Representation, Deep Analytics, Learning and Discovery, Simulation and Experiment Design, High-performance Processing and Analytics, Networking, Communication, Data-to-Decision and Actions.

In principle, Cao’s and our proposal cover the same main steps. However, in parts, Cao’s formulation is more detailed; e.g., our step Data Analysis and Modeling corresponds to Data Modeling and Representation, Deep Analytics, Learning and Discovery . Also, the vocabularies differ slightly, depending on whether the respective background is computer science or statistics. In that respect note that Experiment Design in Cao’s definition means the design of the simulation experiments.

In what follows, we will highlight the role of statistics by discussing all the steps where it is heavily involved, in Sects. 2.1–2.6. These coincide with all steps in our proposal in Table 1 except steps in small capitals. The corresponding entries Data Storage and Access and Optimization of Algorithms are mainly covered by informatics and computer science, whereas Business Deployment of Results is covered by Business Management.

2.1 Data acquisition and enrichment

Design of experiments (DOE) is essential for a systematic generation of data when the effect of noisy factors has to be identified. Controlled experiments are fundamental for robust process engineering to produce reliable products despite variation in the process variables. On the one hand, even controllable factors contain a certain amount of uncontrollable variation that affects the response. On the other hand, some factors, like environmental factors, cannot be controlled at all. Nevertheless, at least the effect of such noisy influencing factors should be controlled by, e.g., DOE.

DOE can be utilized, e.g.,

- to systematically generate new data (data acquisition) [ 33 ],

- to systematically reduce data bases [ 41 ], and

- to tune (i.e., optimize) parameters of algorithms [ 1 ], i.e., to improve the data analysis methods (see Sect. 2.3) themselves.

Simulations [ 7 ] may also be used to generate new data. A tool for the enrichment of data bases to fill data gaps is the imputation of missing data [ 31 ].
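As a concrete picture of filling data gaps, the sketch below applies mean imputation, the simplest possible scheme. It is an assumption chosen for brevity and not the method of the cited reference: every missing entry of a numeric column is replaced by the mean of the observed entries. The function name `impute_with_mean` and the convention that `None` marks a missing value are hypothetical.

```python
from statistics import mean


def impute_with_mean(values):
    """Replace missing entries (None) by the mean of the observed entries."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute a column with no observed values")
    fill_value = mean(observed)
    return [fill_value if v is None else v for v in values]


measurements = [4.0, None, 6.0, 5.0, None]  # made-up column with two gaps
print(impute_with_mean(measurements))
```

Mean imputation keeps the column mean unchanged but understates its variability, which is one reason more careful statistical imputation methods exist.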

Such statistical methods for data generation and enrichment need to be part of the backbone of Data Science. The exclusive use of observational data without any noise control distinctly diminishes the quality of data analysis results and may even lead to wrong result interpretation. The hope for “The End of Theory: The Data Deluge Makes the Scientific Method Obsolete” [ 4 ] appears to be wrong due to noise in the data.

Thus, experimental design is crucial for the reliability, validity, and replicability of our results.

2.2 Data exploration

Exploratory statistics is essential for data preprocessing to learn about the contents of a data base. Exploration and visualization of observed data was, in a way, initiated by John Tukey [ 43 ]. Since that time, the most laborious part of data analysis, namely data understanding and transformation, became an important part in statistical science.

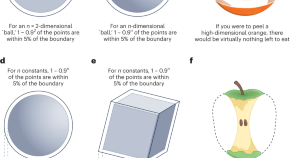

Data exploration or data mining is fundamental for the proper usage of analytical methods in Data Science. The most important contribution of statistics is the notion of distribution . It allows us to represent variability in the data as well as (a-priori) knowledge of parameters, the concept underlying Bayesian statistics. Distributions also enable us to choose adequate subsequent analytic models and methods.

2.3 Statistical data analysis

Finding structure in data and making predictions are the most important steps in Data Science. Here, in particular, statistical methods are essential since they are able to handle many different analytical tasks. Important examples of statistical data analysis methods are the following.

Hypothesis testing is one of the pillars of statistical analysis. Questions arising in data driven problems can often be translated to hypotheses. Also, hypotheses are the natural links between underlying theory and statistics. Since statistical hypotheses are related to statistical tests, questions and theory can be tested for the available data. Multiple usage of the same data in different tests often leads to the necessity to correct significance levels. In applied statistics, correct multiple testing is one of the most important problems, e.g., in pharmaceutical studies [ 15 ]. Ignoring such techniques would lead to many more significant results than justified.
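Correcting significance levels for multiple tests can be sketched with two standard procedures, Bonferroni and Holm. The paper does not name a specific correction, so both are offered here as common examples rather than as the authors' choice; the p-values are invented and the function names are hypothetical.

```python
def bonferroni(p_values, alpha=0.05):
    """Reject a hypothesis only if its p-value is at most alpha / m."""
    m = len(p_values)
    return [p <= alpha / m for p in p_values]


def holm(p_values, alpha=0.05):
    """Holm step-down procedure: compare sorted p-values to alpha / (m - rank)."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices, smallest p first
    reject = [False] * m
    for rank, index in enumerate(order):
        if p_values[index] <= alpha / (m - rank):
            reject[index] = True
        else:
            break  # once one test fails, all larger p-values are retained
    return reject


p_values = [0.01, 0.04, 0.02, 0.005]  # made-up results of four tests on the same data
print([p <= 0.05 for p in p_values])  # uncorrected decisions
print(bonferroni(p_values))
print(holm(p_values))
```

Both procedures control the chance of at least one false positive across all tests; Holm never rejects fewer hypotheses than Bonferroni.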

Classification methods are basic for finding and predicting subpopulations from data. In the so-called unsupervised case, such subpopulations are to be found from a data set without a-priori knowledge of any cases of such subpopulations. This is often called clustering.

In the so-called supervised case, classification rules should be found from a labeled data set for the prediction of unknown labels when only influential factors are available.

Nowadays, there is a plethora of methods for the unsupervised [ 22 ] as well as for the supervised case [ 2 ].

In the age of Big Data, a new look at the classical methods appears to be necessary, though, since most of the time the computational effort of complex analysis methods grows faster than linearly with the number of observations n or the number of features p. In the case of Big Data, i.e., if n or p is large, this leads to excessive computation times and to numerical problems. This results both in the comeback of simpler optimization algorithms with low time-complexity [ 9 ] and in the re-examination of the traditional methods in statistics and machine learning for Big Data [ 46 ].

Regression methods are the main tool to find global and local relationships between features when the target variable is measured. Depending on the distributional assumption for the underlying data, different approaches may be applied. Under the normality assumption, linear regression is the most common method, while generalized linear regression is usually employed for other distributions from the exponential family [ 18 ]. More advanced methods comprise functional regression for functional data [ 38 ], quantile regression [ 25 ], and regression based on loss functions other than squared error loss like, e.g., Lasso regression [ 11 , 21 ]. In the context of Big Data, the challenges are similar to those for classification methods given large numbers of observations n (e.g., in data streams) and / or large numbers of features p . For the reduction of n , data reduction techniques like compressed sensing, random projection methods [ 20 ] or sampling-based procedures [ 28 ] enable faster computations. For decreasing the number p to the most influential features, variable selection or shrinkage approaches like the Lasso [ 21 ] can be employed, keeping the interpretability of the features. (Sparse) principal component analysis [ 21 ] may also be used.

Time series analysis aims at understanding and predicting temporal structure [ 42 ]. Time series are very common in studies of observational data, and prediction is the most important challenge for such data. Typical application areas are the behavioral sciences and economics as well as the natural sciences and engineering. As an example, let us have a look at signal analysis, e.g., speech or music data analysis. Here, statistical methods comprise the analysis of models in the time and frequency domains. The main aim is the prediction of future values of the time series itself or of its properties. For example, the vibrato of an audio time series might be modeled in order to realistically predict the tone in the future [ 24 ] and the fundamental frequency of a musical tone might be predicted by rules learned from elapsed time periods [ 29 ].

In econometrics, multiple time series and their co-integration are often analyzed [ 27 ]. In technical applications, process control is a common aim of time series analysis [ 34 ].

2.4 Statistical modeling

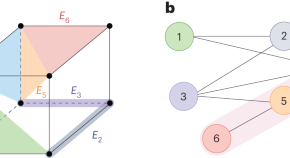

Complex interactions between factors can be modeled by graphs or networks . Here, an interaction between two factors is modeled by a connection in the graph or network [ 26 , 35 ]. The graphs can be undirected as, e.g., in Gaussian graphical models, or directed as, e.g., in Bayesian networks. The main goal in network analysis is deriving the network structure. Sometimes, it is necessary to separate (unmix) subpopulation specific network topologies [ 49 ].

Stochastic differential and difference equations can represent models from the natural and engineering sciences [ 3 , 39 ]. The finding of approximate statistical models solving such equations can lead to valuable insights for, e.g., the statistical control of such processes, e.g., in mechanical engineering [ 48 ]. Such methods can build a bridge between the applied sciences and Data Science.

Local models and globalization. Typically, statistical models are only valid in sub-regions of the domain of the involved variables. Then, local models can be used [ 8 ]. The analysis of structural breaks can be fundamental for identifying the regions for local modeling in time series [ 5 ]. Also, the analysis of concept drifts can be used to investigate model changes over time [ 30 ].

In time series, there are often hierarchies of more and more global structures. For example, in music, a basic local structure is given by the notes and more and more global ones by bars, motifs, phrases, parts, etc. In order to find global properties of a time series, properties of the local models can be combined into more global characteristics [ 47 ].

Mixture models can also be used for the generalization of local to global models [ 19 , 23 ]. Model combination is essential for the characterization of real relationships since standard mathematical models are often much too simple to be valid for heterogeneous data or bigger regions of interest.

2.5 Model validation and model selection

In cases where more than one model is proposed, e.g., for prediction, statistical tests for comparing models help to structure the models, e.g., with respect to their predictive power [ 45 ].

Predictive power is typically assessed by means of so-called resampling methods where the distribution of power characteristics is studied by artificially varying the subpopulation used to learn the model. Characteristics of such distributions can be used for model selection [ 7 ].
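As an illustration of resampling, the sketch below uses k-fold cross-validation, one common resampling scheme, to compare the predictive power of two candidate models. It is an assumption-laden example, not the procedure of [ 7 ]: the two models (a constant predictor and a simple least-squares line), the simulated data and all names are hypothetical.

```python
import random
import statistics


def fit_constant(xs, ys):
    """Model A: always predict the training mean of y."""
    mean_y = statistics.fmean(ys)
    return lambda x: mean_y


def fit_line(xs, ys):
    """Model B: simple least-squares line y = a + b * x."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return lambda x: my + slope * (x - mx)


def cross_validated_errors(fit, xs, ys, k=5, seed=0):
    """Return the held-out mean squared error of each of the k folds."""
    idx = list(range(len(xs)))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    errors = []
    for fold in folds:
        held_out = set(fold)
        train = [i for i in idx if i not in held_out]
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        errors.append(statistics.fmean((ys[i] - model(xs[i])) ** 2 for i in fold))
    return errors


# Simulated data (assumption): a linear trend plus Gaussian noise.
rng = random.Random(1)
xs = [rng.uniform(0.0, 10.0) for _ in range(100)]
ys = [1.0 + 2.0 * x + rng.gauss(0.0, 1.0) for x in xs]

errors_constant = cross_validated_errors(fit_constant, xs, ys)
errors_line = cross_validated_errors(fit_line, xs, ys)
mean_error_constant = statistics.fmean(errors_constant)
mean_error_line = statistics.fmean(errors_line)
print("constant model, fold MSEs:", [round(e, 2) for e in errors_constant])
print("linear model,   fold MSEs:", [round(e, 2) for e in errors_line])
```

The per-fold errors form an empirical distribution of the performance measure. Model selection can then rest on its characteristics, such as the mean and spread, rather than on a single number.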

Perturbation experiments offer another possibility to evaluate the performance of models. In this way, the stability of the different models against noise is assessed [ 32 , 44 ].

Meta-analysis as well as model averaging are methods to evaluate combined models [ 13 , 14 ].

Model selection has become increasingly important in recent years, since the number of classification and regression models proposed in the literature has grown at an ever-increasing pace.

2.6 Representation and reporting

Visualizing the structures found so that they can be interpreted, and storing models in an easy-to-update form, are very important tasks in statistical analyses for communicating the results and safeguarding data analysis deployment. Deployment is decisive for obtaining interpretable results in Data Science. It is the last step in CRISP-DM [ 10 ] and underlies the data-to-decision and action step in Cao [ 12 ].

Besides visualization and adequate model storage, the main task for statistics is the reporting of uncertainties and review [ 6 ].

3 Fallacies

The statistical methods described in Sect. 2 are fundamental for finding structure in data and for obtaining deeper insight into data, and thus, for a successful data analysis. Ignoring modern statistical thinking or using simplistic data analytics/statistical methods may lead to avoidable fallacies. This holds, in particular, for the analysis of big and/or complex data.

As mentioned at the end of Sect. 2.2, the notion of distribution is the key contribution of statistics. Not taking distributions into account in data exploration and in modeling restricts us to reporting values and parameter estimates without their corresponding variability. Only the notion of distributions enables us to predict with corresponding error bands.
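The difference between a bare estimate and an estimate with its variability can be sketched with a percentile bootstrap interval for a mean. This is only one of many ways to quantify uncertainty and is chosen here for brevity. The data, the number of resamples and the function name are illustrative assumptions.

```python
import random
import statistics


def bootstrap_mean_interval(data, n_resamples=2000, level=0.95, seed=0):
    """Percentile bootstrap interval for the mean of `data`."""
    rng = random.Random(seed)
    n = len(data)
    # Resample with replacement and record the mean of each resample.
    means = sorted(
        statistics.fmean(rng.choices(data, k=n)) for _ in range(n_resamples)
    )
    lower_idx = int((1 - level) / 2 * n_resamples)
    upper_idx = int((1 + level) / 2 * n_resamples) - 1
    return means[lower_idx], means[upper_idx]


# Simulated measurements (assumption).
rng = random.Random(3)
data = [rng.gauss(10.0, 2.0) for _ in range(50)]

point_estimate = statistics.fmean(data)
lower, upper = bootstrap_mean_interval(data)
print(f"mean = {point_estimate:.2f}, 95% interval = ({lower:.2f}, {upper:.2f})")
```

Reporting only `point_estimate` would hide how much it could change under a different sample of the same size. The interval makes that variability explicit.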

Moreover, distributions are the key to model-based data analytics. For example, unsupervised learning can be employed to find clusters in data. If additional structure like dependency on space or time is present, it is often important to infer parameters like cluster radii and their spatio-temporal evolution. Such model-based analysis heavily depends on the notion of distributions (see [ 40 ] for an application to protein clusters).

If more than one parameter is of interest, it is advisable to compare univariate hypothesis testing approaches to multiple procedures, e.g., in multiple regression, and choose the most adequate model by variable selection. Restricting oneself to univariate testing would ignore relationships between variables.

Deeper insight into data might require more complex models, such as mixture models for detecting heterogeneous groups in data. When the mixture is ignored, the result often represents a meaningless average, and learning the subgroups by unmixing the components might be needed. In a Bayesian framework, this is enabled by, e.g., latent allocation variables in a Dirichlet mixture model. For an application of decomposing a mixture of different networks in a heterogeneous cell population in molecular biology see [ 49 ].
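The "meaningless average" can be demonstrated on simulated data. The sketch below fits a two-component Gaussian mixture with the EM algorithm, used here as a simple frequentist stand-in for the Bayesian Dirichlet mixture mentioned above and not as an implementation of it. The data, the starting values and the function names are assumptions.

```python
import math
import random


def normal_pdf(x, mu, sigma):
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))


def em_two_gaussians(data, n_iter=100):
    """Fit a two-component 1-D Gaussian mixture by EM (illustrative sketch)."""
    mu = [min(data), max(data)]  # crude but well-separated starting values
    sigma = [1.0, 1.0]
    weight = [0.5, 0.5]
    for _ in range(n_iter):
        # E-step: posterior probability that each point belongs to each component.
        resp = []
        for x in data:
            dens = [weight[k] * normal_pdf(x, mu[k], sigma[k]) for k in range(2)]
            total = sum(dens)
            resp.append([d / total for d in dens])
        # M-step: update weights, means and standard deviations.
        for k in range(2):
            nk = sum(r[k] for r in resp)
            weight[k] = nk / len(data)
            mu[k] = sum(r[k] * x for r, x in zip(resp, data)) / nk
            var = sum(r[k] * (x - mu[k]) ** 2 for r, x in zip(resp, data)) / nk
            sigma[k] = max(math.sqrt(var), 1e-6)
    return weight, mu, sigma


# Simulated heterogeneous population (assumption): two subgroups.
rng = random.Random(2)
data = [rng.gauss(0.0, 1.0) for _ in range(300)] + [rng.gauss(6.0, 1.0) for _ in range(300)]

overall_mean = sum(data) / len(data)
weight, mu, sigma = em_two_gaussians(data)
print(f"overall mean ignoring the mixture: {overall_mean:.2f}")
print("component means after unmixing:", [round(m, 2) for m in mu])
```

The overall mean falls between the two subgroups, in a region where few observations lie, whereas the unmixed component means describe the two groups separately.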

A mixture model might represent mixtures of components of very unequal sizes, with small components (outliers) being of particular importance. In the context of Big Data, naïve sampling procedures are often employed for model estimation. However, these risk missing small mixture components. Hence, model validation or sampling according to a more suitable distribution, as well as resampling methods for assessing predictive power, are important.
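A back-of-the-envelope calculation shows how easily a naïve sample misses a small component. The sketch below assumes independent uniform draws (sampling with replacement) and, for contrast, shows stratified sampling as one example of a "more suitable" sampling distribution. Stratification presupposes that component labels are known, which is often not the case in practice. The component sizes and all names are hypothetical.

```python
import random


def prob_component_missed(fraction, sample_size):
    """Probability that `sample_size` independent uniform draws contain no
    observation from a component that makes up `fraction` of the data."""
    return (1.0 - fraction) ** sample_size


def stratified_sample(labels, per_stratum, seed=0):
    """Draw up to `per_stratum` indices from every label (assumes labels are known)."""
    rng = random.Random(seed)
    by_label = {}
    for index, label in enumerate(labels):
        by_label.setdefault(label, []).append(index)
    chosen = []
    for indices in by_label.values():
        chosen.extend(rng.sample(indices, min(per_stratum, len(indices))))
    return chosen


# Hypothetical population: 0.1% of the observations form a rare component.
labels = ["bulk"] * 99_900 + ["rare"] * 100

miss_probability = prob_component_missed(fraction=0.001, sample_size=500)
sample = stratified_sample(labels, per_stratum=50)
sampled_labels = {labels[i] for i in sample}
print(f"P(naive sample of 500 misses the rare component) = {miss_probability:.3f}")
print("labels present in the stratified sample:", sorted(sampled_labels))
```

Under these assumptions, a naïve sample of 500 observations misses the rare component in more than half of all cases, while the stratified sample contains it by construction.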

4 Conclusion

Following the above assessment of the capabilities and impacts of statistics our conclusion is:

The role of statistics in Data Science is underestimated compared to, e.g., that of computer science. This holds, in particular, for the areas of data acquisition and enrichment as well as for the advanced modeling needed for prediction.

Stimulated by this conclusion, statisticians are well-advised to play their role more assertively in this modern and well-accepted field of Data Science.

Only complementing and/or combining mathematical methods and computational algorithms with statistical reasoning, particularly for Big Data, will lead to scientific results based on suitable approaches. Ultimately, only a balanced interplay of all sciences involved will lead to successful solutions in Data Science.

References

1. Adenso-Diaz, B., Laguna, M.: Fine-tuning of algorithms using fractional experimental designs and local search. Oper. Res. 54(1), 99–114 (2006)

2. Aggarwal, C.C. (ed.): Data Classification: Algorithms and Applications. CRC Press, Boca Raton (2014)

3. Allen, E., Allen, L., Arciniega, A., Greenwood, P.: Construction of equivalent stochastic differential equation models. Stoch. Anal. Appl. 26, 274–297 (2008)

4. Anderson, C.: The End of Theory: The Data Deluge Makes the Scientific Method Obsolete. Wired Magazine. https://www.wired.com/2008/06/pb-theory/ (2008)

5. Aue, A., Horváth, L.: Structural breaks in time series. J. Time Ser. Anal. 34(1), 1–16 (2013)

6. Berger, R.E.: A scientific approach to writing for engineers and scientists. IEEE PCS Professional Engineering Communication Series. IEEE Press, Wiley (2014)

7. Bischl, B., Mersmann, O., Trautmann, H., Weihs, C.: Resampling methods for meta-model validation with recommendations for evolutionary computation. Evol. Comput. 20(2), 249–275 (2012)

8. Bischl, B., Schiffner, J., Weihs, C.: Benchmarking local classification methods. Comput. Stat. 28(6), 2599–2619 (2013)

9. Bottou, L., Curtis, F.E., Nocedal, J.: Optimization methods for large-scale machine learning. arXiv preprint arXiv:1606.04838 (2016)

10. Brown, M.S.: Data Mining for Dummies. Wiley, London (2014)

11. Bühlmann, P., Van De Geer, S.: Statistics for High-Dimensional Data: Methods, Theory and Applications. Springer, Berlin (2011)

12. Cao, L.: Data science: a comprehensive overview. ACM Comput. Surv. (2017). https://doi.org/10.1145/3076253

13. Claeskens, G., Hjort, N.L.: Model Selection and Model Averaging. Cambridge University Press, Cambridge (2008)

14. Cooper, H., Hedges, L.V., Valentine, J.C.: The Handbook of Research Synthesis and Meta-analysis. Russell Sage Foundation, New York City (2009)

15. Dmitrienko, A., Tamhane, A.C., Bretz, F.: Multiple Testing Problems in Pharmaceutical Statistics. Chapman and Hall/CRC, London (2009)

16. Donoho, D.: 50 Years of Data Science. http://courses.csail.mit.edu/18.337/2015/docs/50YearsDataScience.pdf (2015)

17. Dyk, D.V., Fuentes, M., Jordan, M.I., Newton, M., Ray, B.K., Lang, D.T., Wickham, H.: ASA Statement on the Role of Statistics in Data Science. http://magazine.amstat.org/blog/2015/10/01/asa-statement-on-the-role-of-statistics-in-data-science/ (2015)

18. Fahrmeir, L., Kneib, T., Lang, S., Marx, B.: Regression: Models, Methods and Applications. Springer, Berlin (2013)

19. Frühwirth-Schnatter, S.: Finite Mixture and Markov Switching Models. Springer, Berlin (2006)

20. Geppert, L., Ickstadt, K., Munteanu, A., Quedenfeld, J., Sohler, C.: Random projections for Bayesian regression. Stat. Comput. 27(1), 79–101 (2017). https://doi.org/10.1007/s11222-015-9608-z

21. Hastie, T., Tibshirani, R., Wainwright, M.: Statistical Learning with Sparsity: The Lasso and Generalizations. CRC Press, Boca Raton (2015)

22. Hennig, C., Meila, M., Murtagh, F., Rocci, R.: Handbook of Cluster Analysis. Chapman & Hall, London (2015)

23. Klein, H.U., Schäfer, M., Porse, B.T., Hasemann, M.S., Ickstadt, K., Dugas, M.: Integrative analysis of histone chip-seq and transcription data using Bayesian mixture models. Bioinformatics 30(8), 1154–1162 (2014)

24. Knoche, S., Ebeling, M.: The musical signal: physically and psychologically, chap 2. In: Weihs, C., Jannach, D., Vatolkin, I., Rudolph, G. (eds.) Music Data Analysis—Foundations and Applications, pp. 15–68. CRC Press, Boca Raton (2017)

25. Koenker, R.: Quantile Regression. Econometric Society Monographs, vol. 38 (2010)

26. Koller, D., Friedman, N.: Probabilistic Graphical Models: Principles and Techniques. MIT Press, Cambridge (2009)

27. Lütkepohl, H.: New Introduction to Multiple Time Series Analysis. Springer, Berlin (2010)

28. Ma, P., Mahoney, M.W., Yu, B.: A statistical perspective on algorithmic leveraging. In: Proceedings of the 31st International Conference on Machine Learning, ICML 2014, Beijing, China, 21–26 June 2014, pp. 91–99. http://jmlr.org/proceedings/papers/v32/ma14.html (2014)

29. Martin, R., Nagathil, A.: Digital filters and spectral analysis, chap 4. In: Weihs, C., Jannach, D., Vatolkin, I., Rudolph, G. (eds.) Music Data Analysis—Foundations and Applications, pp. 111–143. CRC Press, Boca Raton (2017)

30. Mejri, D., Limam, M., Weihs, C.: A new dynamic weighted majority control chart for data streams. Soft Comput. 22(2), 511–522. https://doi.org/10.1007/s00500-016-2351-3

31. Molenberghs, G., Fitzmaurice, G., Kenward, M.G., Tsiatis, A., Verbeke, G.: Handbook of Missing Data Methodology. CRC Press, Boca Raton (2014)

32. Molinelli, E.J., Korkut, A., Wang, W.Q., Miller, M.L., Gauthier, N.P., Jing, X., Kaushik, P., He, Q., Mills, G., Solit, D.B., Pratilas, C.A., Weigt, M., Braunstein, A., Pagnani, A., Zecchina, R., Sander, C.: Perturbation Biology: Inferring Signaling Networks in Cellular Systems. arXiv preprint arXiv:1308.5193 (2013)

33. Montgomery, D.C.: Design and Analysis of Experiments, 8th edn. Wiley, London (2013)

34. Oakland, J.: Statistical Process Control. Routledge, London (2007)

35. Pearl, J.: Probabilistic Reasoning in Intelligent Systems: Networks of Plausible Inference. Morgan Kaufmann, Los Altos (1988)

36. Piateski, G., Frawley, W.: Knowledge Discovery in Databases. MIT Press, Cambridge (1991)

37. Press, G.: A Very Short History of Data Science. https://www.forbes.com/sites/gilpress/2013/05/28/a-very-short-history-of-data-science/#5c515ed055cf (2013). [last visit: March 19, 2017]

38. Ramsay, J., Silverman, B.W.: Functional Data Analysis. Springer, Berlin (2005)

39. Särkkä, S.: Applied Stochastic Differential Equations. https://users.aalto.fi/~ssarkka/course_s2012/pdf/sde_course_booklet_2012.pdf (2012). [last visit: March 6, 2017]

40. Schäfer, M., Radon, Y., Klein, T., Herrmann, S., Schwender, H., Verveer, P.J., Ickstadt, K.: A Bayesian mixture model to quantify parameters of spatial clustering. Comput. Stat. Data Anal. 92, 163–176 (2015). https://doi.org/10.1016/j.csda.2015.07.004

41. Schiffner, J., Weihs, C.: D-optimal plans for variable selection in data bases. Technical Report, 14/09, SFB 475 (2009)

42. Shumway, R.H., Stoffer, D.S.: Time Series Analysis and Its Applications: With R Examples. Springer, Berlin (2010)

43. Tukey, J.W.: Exploratory Data Analysis. Pearson, London (1977)

44. Vatcheva, I., de Jong, H., Mars, N.: Selection of perturbation experiments for model discrimination. In: Horn, W. (ed.) Proceedings of the 14th European Conference on Artificial Intelligence, ECAI-2000, IOS Press, pp. 191–195 (2000)

45. Vatolkin, I., Weihs, C.: Evaluation, chap 13. In: Weihs, C., Jannach, D., Vatolkin, I., Rudolph, G. (eds.) Music Data Analysis—Foundations and Applications, pp. 329–363. CRC Press, Boca Raton (2017)

46. Weihs, C.: Big data classification — aspects on many features. In: Michaelis, S., Piatkowski, N., Stolpe, M. (eds.) Solving Large Scale Learning Tasks: Challenges and Algorithms, Springer Lecture Notes in Artificial Intelligence, vol. 9580, pp. 139–147 (2016)

47. Weihs, C., Ligges, U.: From local to global analysis of music time series. In: Morik, K., Siebes, A., Boulicault, J.F. (eds.) Detecting Local Patterns, Springer Lecture Notes in Artificial Intelligence, vol. 3539, pp. 233–245 (2005)

48. Weihs, C., Messaoud, A., Raabe, N.: Control charts based on models derived from differential equations. Qual. Reliab. Eng. Int. 26(8), 807–816 (2010)

49. Wieczorek, J., Malik-Sheriff, R.S., Fermin, Y., Grecco, H.E., Zamir, E., Ickstadt, K.: Uncovering distinct protein-network topologies in heterogeneous cell populations. BMC Syst. Biol. 9(1), 24 (2015)

50. Wu, J.: Statistics = data science? http://www2.isye.gatech.edu/~jeffwu/presentations/datascience.pdf (1997)

Acknowledgements

The authors would like to thank the editor, the guest editors and all reviewers for valuable comments on an earlier version of the manuscript. They also thank Leo Geppert for fruitful discussions.

Author information

Authors and affiliations.

Computational Statistics, TU Dortmund University, 44221, Dortmund, Germany

Claus Weihs

Mathematical Statistics and Biometric Applications, TU Dortmund University, 44221, Dortmund, Germany

Katja Ickstadt

Corresponding author

Correspondence to Claus Weihs.

Rights and permissions

Open Access. This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Weihs, C., Ickstadt, K. Data Science: the impact of statistics. Int J Data Sci Anal 6, 189–194 (2018). https://doi.org/10.1007/s41060-018-0102-5

Received: 20 March 2017

Accepted: 25 January 2018

Published: 16 February 2018

Issue Date: November 2018

DOI: https://doi.org/10.1007/s41060-018-0102-5

- Structures of data science

- Impact of statistics on data science

- Fallacies in data science

A generalization to the log-inverse Weibull distribution and its applications in cancer research

In this paper we consider a generalization of a log-transformed version of the inverse Weibull distribution. Several theoretical properties of the distribution are studied in detail including expressions for i...

Approximations of conditional probability density functions in Lebesgue spaces via mixture of experts models

Mixture of experts (MoE) models are widely applied for conditional probability density estimation problems. We demonstrate the richness of the class of MoE models by proving denseness results in Lebesgue space...

Structural properties of generalised Planck distributions

A family of generalised Planck (GP) laws is defined and its structural properties explored. Sometimes subject to parameter restrictions, a GP law is a randomly scaled gamma law; it arises as the equilibrium la...

New class of Lindley distributions: properties and applications

A new generalized class of Lindley distribution is introduced in this paper. This new class is called the T -Lindley{ Y } class of distributions, and it is generated by using the quantile functions of uniform, expon...

Tolerance intervals in statistical software and robustness under model misspecification

A tolerance interval is a statistical interval that covers at least 100 ρ % of the population of interest with a 100(1− α ) % confidence, where ρ and α are pre-specified values in (0, 1). In many scientific fields, su...

Combining assumptions and graphical network into gene expression data analysis

Analyzing gene expression data rigorously requires taking assumptions into consideration but also relies on using information about network relations that exist among genes. Combining these different elements ...

A comparison of zero-inflated and hurdle models for modeling zero-inflated count data

Counts data with excessive zeros are frequently encountered in practice. For example, the number of health services visits often includes many zeros representing the patients with no utilization during a follo...

A general stochastic model for bivariate episodes driven by a gamma sequence

We propose a new stochastic model describing the joint distribution of ( X , N ), where N is a counting variable while X is the sum of N independent gamma random variables. We present the main properties of this gene...

A flexible multivariate model for high-dimensional correlated count data

We propose a flexible multivariate stochastic model for over-dispersed count data. Our methodology is built upon mixed Poisson random vectors ( Y 1 ,…, Y d ), where the { Y i } are conditionally independent Poisson random...

Generalized fiducial inference on the mean of zero-inflated Poisson and Poisson hurdle models

Zero-inflated and hurdle models are widely applied to count data possessing excess zeros, where they can simultaneously model the process from how the zeros were generated and potentially help mitigate the eff...

Multivariate distributions of correlated binary variables generated by pair-copulas

Correlated binary data are prevalent in a wide range of scientific disciplines, including healthcare and medicine. The generalized estimating equations (GEEs) and the multivariate probit (MP) model are two of ...

On two extensions of the canonical Feller–Spitzer distribution

We introduce two extensions of the canonical Feller–Spitzer distribution from the class of Bessel densities, which comprise two distinct stochastically decreasing one-parameter families of positive absolutely ...

A new trivariate model for stochastic episodes

We study the joint distribution of stochastic events described by ( X , Y , N ), where N has a 1-inflated (or deflated) geometric distribution and X , Y are the sum and the maximum of N exponential random variables. Mod...

A flexible univariate moving average time-series model for dispersed count data

Al-Osh and Alzaid ( 1988 ) consider a Poisson moving average (PMA) model to describe the relation among integer-valued time series data; this model, however, is constrained by the underlying equi-dispersion assumpt...

Spatio-temporal analysis of flood data from South Carolina

To investigate the relationship between flood gage height and precipitation in South Carolina from 2012 to 2016, we built a conditional autoregressive (CAR) model using a Bayesian hierarchical framework. This ...

Affine-transformation invariant clustering models

We develop a cluster process which is invariant with respect to unknown affine transformations of the feature space without knowing the number of clusters in advance. Specifically, our proposed method can iden...

Distributions associated with simultaneous multiple hypothesis testing

We develop the distribution for the number of hypotheses found to be statistically significant using the rule from Simes (Biometrika 73: 751–754, 1986) for controlling the family-wise error rate (FWER). We fin...

New families of bivariate copulas via unit weibull distortion

This paper introduces a new family of bivariate copulas constructed using a unit Weibull distortion. Existing copulas play the role of the base or initial copulas that are transformed or distorted into a new f...

Generalized logistic distribution and its regression model

A new generalized asymmetric logistic distribution is defined. In some cases, existing three parameter distributions provide poor fit to heavy tailed data sets. The proposed new distribution consists of only t...

The spherical-Dirichlet distribution

Today, data mining and gene expressions are at the forefront of modern data analysis. Here we introduce a novel probability distribution that is applicable in these fields. This paper develops the proposed sph...

Item fit statistics for Rasch analysis: can we trust them?

To compare fit statistics for the Rasch model based on estimates of unconditional or conditional response probabilities.

Exact distributions of statistics for making inferences on mixed models under the default covariance structure

At this juncture when mixed models are heavily employed in applications ranging from clinical research to business analytics, the purpose of this article is to extend the exact distributional result of Wald (A...

A new discrete pareto type (IV) model: theory, properties and applications

Discrete analogue of a continuous distribution (especially in the univariate domain) is not new in the literature. The work of discretizing continuous distributions begun with the paper by Nakagawa and Osaki (197...

Density deconvolution for generalized skew-symmetric distributions

The density deconvolution problem is considered for random variables assumed to belong to the generalized skew-symmetric (GSS) family of distributions. The approach is semiparametric in that the symmetric comp...

The unifed distribution

We introduce a new distribution with support on (0,1) called unifed. It can be used as the response distribution for a GLM and it is suitable for data aggregation. We make a comparison to the beta regression. ...

On Burr III Marshal Olkin family: development, properties, characterizations and applications

In this paper, a flexible family of distributions with unimodel, bimodal, increasing, increasing and decreasing, inverted bathtub and modified bathtub hazard rate called Burr III-Marshal Olkin-G (BIIIMO-G) fam...

The linearly decreasing stress Weibull (LDSWeibull): a new Weibull-like distribution

Motivated by an engineering pullout test applied to a steel strip embedded in earth, we show how the resulting linearly decreasing force leads naturally to a new distribution, if the force under constant stress i...

Meta analysis of binary data with excessive zeros in two-arm trials

We present a novel Bayesian approach to random effects meta analysis of binary data with excessive zeros in two-arm trials. We discuss the development of likelihood accounting for excessive zeros, the prior, a...

On ( p 1 ,…, p k )-spherical distributions

The class of ( p 1 ,…, p k )-spherical probability laws and a method of simulating random vectors following such distributions are introduced using a new stochastic vector representation. A dynamic geometric disintegra...

A new class of survival distribution for degradation processes subject to shocks

Many systems experience gradual degradation while simultaneously being exposed to a stream of random shocks of varying magnitudes that eventually cause failure when a shock exceeds the residual strength of the...

A new extended normal regression model: simulations and applications

Various applications in natural science require models more accurate than well-known distributions. In this context, several generators of distributions have been recently proposed. We introduce a new four-par...

Multiclass analysis and prediction with network structured covariates

Technological advances associated with data acquisition are leading to the production of complex structured data sets. The recent development on classification with multiclass responses makes it possible to in...

High-dimensional star-shaped distributions

Stochastic representations of star-shaped distributed random vectors having heavy or light tail density generating function g are studied for increasing dimensions along with corresponding geometric measure repre...

A unified complex noncentral Wishart type distribution inspired by massive MIMO systems

The eigenvalue distributions from a complex noncentral Wishart matrix S = X H X has been the subject of interest in various real world applications, where X is assumed to be complex matrix variate normally distribute...

Particle swarm based algorithms for finding locally and Bayesian D -optimal designs

When a model-based approach is appropriate, an optimal design can guide how to collect data judiciously for making reliable inference at minimal cost. However, finding optimal designs for a statistical model w...

Admissible Bernoulli correlations

A multivariate symmetric Bernoulli distribution has marginals that are uniform over the pair {0,1}. Consider the problem of sampling from this distribution given a prescribed correlation between each pair of v...

On p -generalized elliptical random processes

We introduce rank- k -continuous axis-aligned p -generalized elliptically contoured distributions and study their properties such as stochastic representations, moments, and density-like representations. Applying th...

Parameters of stochastic models for electroencephalogram data as biomarkers for child’s neurodevelopment after cerebral malaria

The objective of this study was to test statistical features from the electroencephalogram (EEG) recordings as predictors of neurodevelopment and cognition of Ugandan children after coma due to cerebral malari...

A new generalization of generalized half-normal distribution: properties and regression models

In this paper, a new extension of the generalized half-normal distribution is introduced and studied. We assess the performance of the maximum likelihood estimators of the parameters of the new distribution vi...

Analytical properties of generalized Gaussian distributions

The family of Generalized Gaussian (GG) distributions has received considerable attention from the engineering community, due to the flexible parametric form of its probability density function, in modeling ma...

A new Weibull- X family of distributions: properties, characterizations and applications

We propose a new family of univariate distributions generated from the Weibull random variable, called a new Weibull-X family of distributions. Two special sub-models of the proposed family are presented and t...

The transmuted geometric-quadratic hazard rate distribution: development, properties, characterizations and applications

We propose a five parameter transmuted geometric quadratic hazard rate (TG-QHR) distribution derived from mixture of quadratic hazard rate (QHR), geometric and transmuted distributions via the application of t...

A nonparametric approach for quantile regression

Quantile regression estimates conditional quantiles and has wide applications in the real world. Estimating high conditional quantiles is an important problem. The regular quantile regression (QR) method often...

Mean and variance of ratios of proportions from categories of a multinomial distribution

Ratio distribution is a probability distribution representing the ratio of two random variables, each usually having a known distribution. Currently, there are results when the random variables in the ratio fo...

The power-Cauchy negative-binomial: properties and regression

We propose and study a new compounded model to extend the half-Cauchy and power-Cauchy distributions, which offers more flexibility in modeling lifetime data. The proposed model is analytically tractable and c...

Families of distributions arising from the quantile of generalized lambda distribution

In this paper, the class of T-R { generalized lambda } families of distributions based on the quantile of generalized lambda distribution has been proposed using the T-R { Y } framework. In the development of the T - R {

Risk ratios and Scanlan’s HRX

Risk ratios are distribution function tail ratios and are widely used in health disparities research. Let A and D denote advantaged and disadvantaged populations with cdfs F ...

Joint distribution of k -tuple statistics in zero-one sequences of Markov-dependent trials

We consider a sequence of n , n ≥3, zero (0) - one (1) Markov-dependent trials. We focus on k -tuples of 1s; i.e. runs of 1s of length at least equal to a fixed integer number k , 1≤ k ≤ n . The statistics denoting the n...

Quantile regression for overdispersed count data: a hierarchical method

Generalized Poisson regression is commonly applied to overdispersed count data, and focused on modelling the conditional mean of the response. However, conditional mean regression models may be sensitive to re...

Describing the Flexibility of the Generalized Gamma and Related Distributions

The generalized gamma (GG) distribution is a widely used, flexible tool for parametric survival analysis. Many alternatives and extensions to this family have been proposed. This paper characterizes the flexib...

- ISSN: 2195-5832 (electronic)

Statistical Research Reports and Studies

These research reports are intended to make results of Census Bureau research available to others and to encourage discussion on a variety of topics. We offer a number of research reports on topics in statistics and computing.

Beginning August 1, 2001

- The Research Report Series primarily includes papers which report results from the conduct of systematic, critical, intensive investigation directed toward the development of new or more comprehensive scientific knowledge of the subject studied. The series is subdivided into categories, including Computing (RRC) and Statistics (RRS).

- The Study Series primarily includes papers which report results from the conduct of systematic, critical, intensive investigation directed toward applying known scientific knowledge to a specific problem. The series is subdivided into categories, including Computing (SSC) and Statistics (SSS).

Prior to August 1, 2001

- The Statistical Research Report Series (RR) covers research in statistical methodology and estimation.

How to Report Statistics

Ensure appropriateness and rigor, avoid flexibility and above all never manipulate results

In many fields, a statistical analysis forms the heart of both the methods and results sections of a manuscript. Learn how to report statistical analyses, and what other context is important for publication success and future reproducibility.

A matter of principle

First and foremost, the statistical methods employed in research must always be:

- Appropriate for the study design

- Rigorously reported in sufficient detail for others to reproduce the analysis

- Free of manipulation, selective reporting, or other forms of “spin”

Just as importantly, statistical practices must never be manipulated or misused. Misrepresenting data, selectively reporting results, or searching for patterns that can be presented as statistically significant, in an attempt to reach a conclusion believed to be more worthy of attention or publication, is a serious ethical violation. Although it may seem harmless, using statistics to “spin” results can prevent publication, undermine a published study, or lead to investigation and retraction.

Supporting public trust in science through transparency and consistency

Along with clear methods and transparent study design, the appropriate use of statistical methods and analyses impacts editorial evaluation and readers’ understanding and trust in science.

In 2011, the paper “False-Positive Psychology: Undisclosed Flexibility in Data Collection and Analysis Allows Presenting Anything as Significant” showed that “flexibility in data collection, analysis, and reporting dramatically increases actual false-positive rates” and demonstrated “how unacceptably easy it is to accumulate (and report) statistically significant evidence for a false hypothesis.”

Arguably, such problems with flexible analysis lead to the “reproducibility crisis” that we read about today.
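To make the effect of analytical flexibility concrete, here is a minimal simulation sketch. It is an illustration under stated assumptions, not a reproduction of the 2011 paper: every simulated study has no true effect, outcomes are independent with known unit variance, and the "flexible" analyst is assumed to report whichever of four outcomes yields the smallest p-value. The function names and parameter values are hypothetical.

```python
import math
import random


def z_test_p_value(group_a, group_b, sigma=1.0):
    """Two-sided p-value for a difference in means, equal group sizes, known sigma."""
    n = len(group_a)
    diff = sum(group_a) / n - sum(group_b) / n
    z = diff / (sigma * math.sqrt(2.0 / n))
    return math.erfc(abs(z) / math.sqrt(2.0))


def false_positive_rate(n_outcomes, n_per_group=20, n_studies=2000,
                        alpha=0.05, seed=0):
    """Share of null studies declared 'significant' when the analyst may
    report whichever of `n_outcomes` outcomes gives the smallest p-value."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_studies):
        p_values = []
        for _ in range(n_outcomes):
            # Both groups come from the same distribution: no true effect.
            a = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
            b = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
            p_values.append(z_test_p_value(a, b))
        if min(p_values) < alpha:
            hits += 1
    return hits / n_studies


fixed = false_positive_rate(n_outcomes=1)      # one pre-specified outcome
flexible = false_positive_rate(n_outcomes=4)   # best of four outcomes
print(f"pre-specified: {fixed:.3f}, flexible: {flexible:.3f}")
```

Under these assumptions the pre-specified analysis should land near the nominal 5% false-positive rate, while picking the best of four independent outcomes is expected to give roughly 1 - 0.95^4, or about 19%. Real studies with correlated outcomes would differ in the exact figure.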

A constant principle of rigorous science

The appropriate, rigorous, and transparent use of statistics is a constant principle of rigorous, transparent, and Open Science. Aim to be thorough, even if a particular journal doesn’t require the same level of detail. Trust in science is everyone’s responsibility. Exceeding a minimum standard of information and reporting cannot create problems.

Sound statistical practices

While it is hard to provide statistical guidelines that are relevant for all disciplines, types of research, and all analytical techniques, adherence to rigorous and appropriate principles remains key. Here are some ways to ensure your statistics are sound.

Define your analytical methodology before you begin

Take the time to consider and develop a thorough study design that defines your line of inquiry, what you plan to do, what data you will collect, and how you will analyze it. (If you applied for research grants or ethical approval, you probably already have a plan in hand!) Refer back to your study design at key moments in the research process, and above all, stick to it.

To avoid flexibility and improve the odds of acceptance, preregister your study design with a journal

Many journals offer the option to submit a study design for peer review before research begins through a practice known as preregistration. If the editors approve your study design, you’ll receive a provisional acceptance for a future research article reporting the results. Preregistering is a great way to head off any intentional or unintentional flexibility in analysis. By declaring your analytical approach in advance you’ll increase the credibility and reproducibility of your results and help address publication bias, too. Getting peer review feedback on your study design and analysis plan before the study has begun (when you can still make changes!) makes your research even stronger and increases your chances of publication, even if the results are negative or null. Never underestimate how much you can help increase the public’s trust in science by planning your research in this way.

Imagine replicating or extending your own work, years in the future

Imagine that you are describing your approach to statistical analysis for your future self, in exactly the same way as we have described for writing your methods section. What would you need to know to replicate or extend your own work? You might be at a different institution, working with different colleagues, using different programs, applications, and resources, or even adopting new statistical techniques that have emerged. Keeping this in mind helps you judge the level of reporting specificity that you yourself would require to redo or extend your work. Consider:

- Which details would you need to be reminded of?

- What did you do to the raw data before analysis?

- Did the purpose of the analysis change before or during the experiments?

- Which participants did you decide to exclude?

- Which processes did you adjust during your work?

Even if a necessary adjustment you made was not ideal, transparency is the key to ensuring this is not regarded as an issue in the future. It is far better to transparently convey any non-optimal techniques or constraints than to conceal them, which could result in reproducibility or ethical issues downstream.

Existing standards, checklists, guidelines for specific disciplines

You can apply the Open Science practices outlined above no matter what your area of expertise—but in many cases, you may still need more detailed guidance specific to your own field. Many disciplines, fields, and projects have worked hard to develop guidelines and resources to help with statistics, and to identify and avoid bad statistical practices. Below, you’ll find some of the key materials.

TIP: Do you have a specific journal in mind?

Be sure to read the submission guidelines for the specific journal you are submitting to, in order to discover any journal- or field-specific policies, initiatives or tools to utilize.

Articles on statistical methods and reporting

Makin, T.R., Orban de Xivry, J. Science Forum: Ten common statistical mistakes to watch out for when writing or reviewing a manuscript. eLife 8, e48175 (2019). https://doi.org/10.7554/eLife.48175

Munafò, M., Nosek, B., Bishop, D. et al. A manifesto for reproducible science. Nat Hum Behav 1, 0021 (2017). https://doi.org/10.1038/s41562-016-0021

Writing tips

Your use of statistics should be rigorous, appropriate, and uncompromising in avoidance of analytical flexibility. While this is difficult, do not compromise on rigorous standards for credibility!

Do

- Remember that trust in science is everyone’s responsibility.

- Keep in mind future replicability.

- Consider preregistering your analysis plan so that it is (i) reviewed before results are collected, catching problems before they occur, and (ii) fixed in advance, avoiding any analytical flexibility.

- Follow principles, but also checklists and field- and journal-specific guidelines.

- Consider a commitment to rigorous and transparent science a personal responsibility, and not simply a matter of adhering to journal guidelines.

- Be specific about all decisions made during the experiments that someone reproducing your work would need to know.

- Consider a course in advanced and new statistics, if you feel you have not focused on it enough during your research training.

Don’t

- Misuse statistics to influence significance or other interpretations of results

- Conduct your statistical analyses if you are unsure of what you are doing—seek feedback (e.g. via preregistration) from a statistical specialist first.



Simplifying Debiased Inference via Automatic Differentiation and Probabilistic Programming

Published 5/14/2024

We introduce an algorithm that simplifies the construction of efficient estimators, making them accessible to a broader audience. 'Dimple' takes as input computer code representing a parameter of interest and outputs an efficient estimator. Unlike standard approaches, it does not require users to derive a functional derivative known as the efficient influence function. Dimple avoids this task by applying automatic differentiation to the statistical functional of interest. Doing so requires expressing this functional as a composition of primitives satisfying a novel differentiability condition. Dimple also uses this composition to determine the nuisances it must estimate. In software, primitives can be implemented independently of one another and reused across different estimation problems. We provide a proof-of-concept Python implementation and showcase through examples how it allows users to go from parameter specification to efficient estimation with just a few lines of code.
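The core idea, differentiating a statistical functional automatically instead of deriving its influence function by hand, can be sketched in a few lines. The following is a toy illustration only and is not the paper's Dimple implementation. It assumes a nonparametric model, uses the variance as the functional, and relies on a hand-rolled forward-mode dual-number class. The names `Dual`, `variance_functional`, and `influence_values` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dual:
    """Number val + der*eps with eps**2 == 0 (forward-mode autodiff)."""
    val: float
    der: float = 0.0

    @staticmethod
    def lift(x):
        return x if isinstance(x, Dual) else Dual(float(x))

    def __add__(self, other):
        o = Dual.lift(other)
        return Dual(self.val + o.val, self.der + o.der)

    def __sub__(self, other):
        o = Dual.lift(other)
        return Dual(self.val - o.val, self.der - o.der)

    def __rsub__(self, other):
        return Dual.lift(other) - self

    def __mul__(self, other):
        o = Dual.lift(other)
        return Dual(self.val * o.val, self.val * o.der + self.der * o.val)

    __radd__ = __add__
    __rmul__ = __mul__


def variance_functional(weights, xs):
    """Variance of the distribution putting mass weights[j] on xs[j]."""
    mean = sum(w * x for w, x in zip(weights, xs))
    second_moment = sum(w * x * x for w, x in zip(weights, xs))
    return second_moment - mean * mean


def influence_values(functional, xs):
    """Derivative of the functional along (1 - eps) * P_n + eps * delta_{x_i},
    evaluated at eps = 0, for every observation x_i."""
    n = len(xs)
    eps = Dual(0.0, 1.0)  # seed the derivative with respect to eps
    values = []
    for i in range(n):
        weights = [(1 - eps) * (1.0 / n) + (eps if j == i else 0.0)
                   for j in range(n)]
        values.append(functional(weights, xs).der)
    return values


xs = [1.0, 2.0, 4.0, 7.0]
phi = influence_values(variance_functional, xs)
print(phi)
```

For the variance, the values returned should match the textbook influence function (x_i - mean)^2 - variance, which gives a check on the sketch. The same `influence_values` call works for any functional written in terms of additions, subtractions, and multiplications of the weights.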

Estimating the Effects of Political Pressure on the Fed: A Narrative Approach with New Data

This paper combines new data and a narrative approach to identify shocks to political pressure on the Federal Reserve. From archival records, I build a data set of personal interactions between U.S. Presidents and Fed officials between 1933 and 2016. Since personal interactions do not necessarily reflect political pressure, I develop a narrative identification strategy based on President Nixon's pressure on Fed Chair Burns. I exploit this narrative through restrictions on a structural vector autoregression that includes the personal interaction data. I find that political pressure shocks (i) increase inflation strongly and persistently, (ii) lead to statistically weak negative effects on activity, (iii) contributed to inflationary episodes outside of the Nixon era, and (iv) transmit differently from standard expansionary monetary policy shocks, by having a stronger effect on inflation expectations. Quantitatively, increasing political pressure by half as much as Nixon, for six months, raises the price level more than 8%.

I thank Juan Antolin-Diaz, Jonas Arias, Boragan Aruoba, Miguel Bandeira, Francesco Bianchi, Allan Drazen, Leland Farmer, Yuriy Gorodnichenko, Amy Handlan, Fatima Hussein, Hanno Lustig, Fernando Martin, Emi Nakamura, Evgenia Passari, Jon Steinsson and Sarah Zubairy for detailed discussions. Seminar and conference participants at UC Berkeley, Stanford SITE, the NBER Monetary Economics Spring Meeting 2024, the Federal Reserve Board, the Boston Fed, Philadelphia Fed, Minneapolis Fed, Richmond Fed, St. Louis Fed, Texas A&M University, and the University of Maryland provided very useful suggestions. I am grateful to Seho Kim, Ko Miura and Daniel Schwindt for excellent research assistance. The views expressed herein are those of the author and do not necessarily reflect the views of the National Bureau of Economic Research.



- The BEA Wire | BEA's Official Blog

Experimental R&D Value Added Statistics for the U.S. and States Now Available

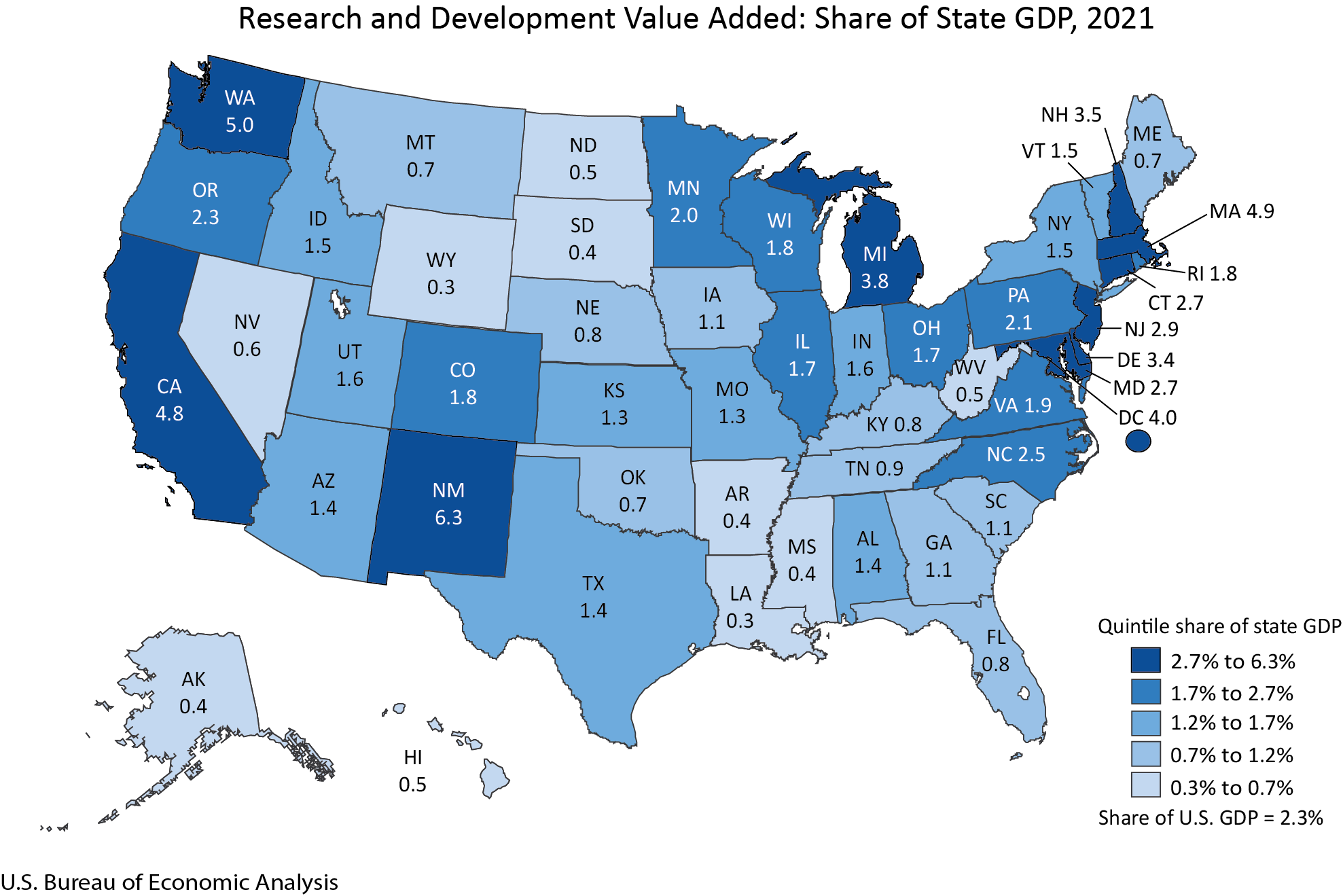

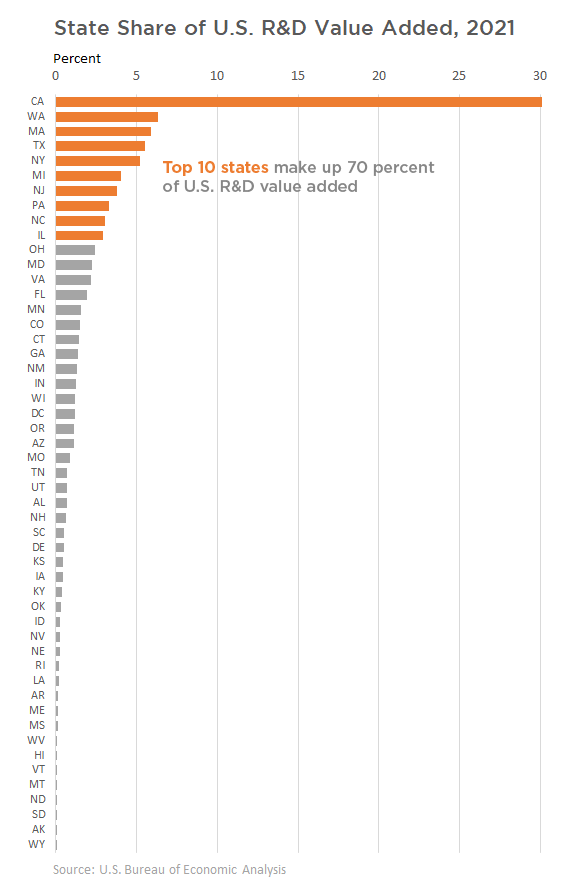

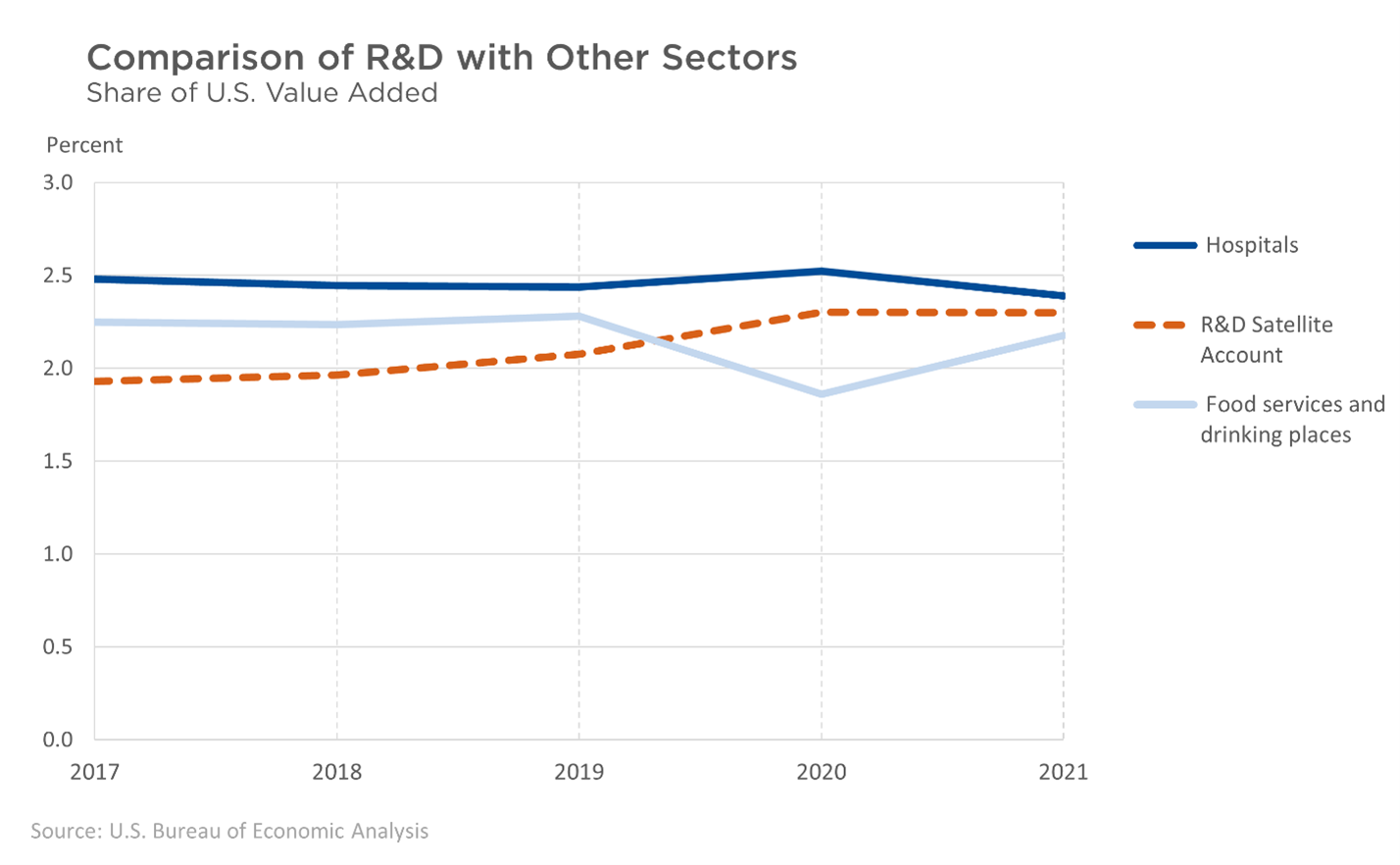

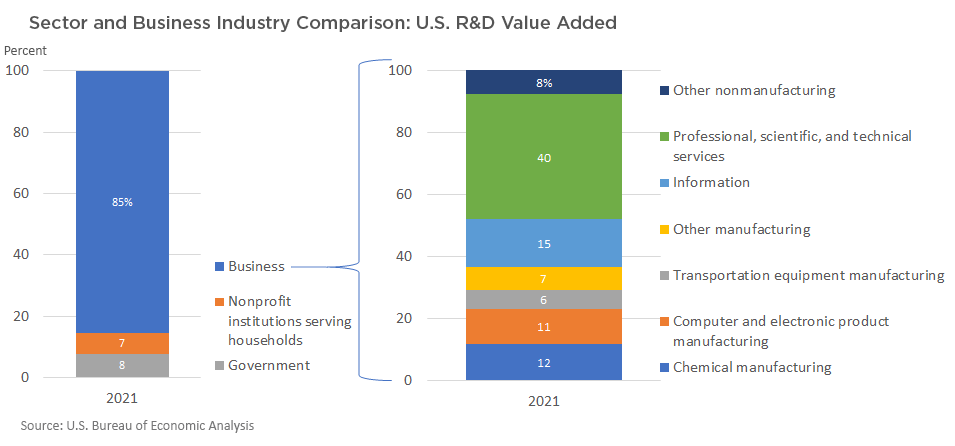

Research and development activity accounted for 2.3 percent of the U.S. economy in 2021, according to new experimental statistics released today by the Bureau of Economic Analysis. R&D as a share of each state’s gross domestic product, or GDP, ranged from 0.3 percent in Louisiana and Wyoming to 6.3 percent in New Mexico, home to federally funded Los Alamos National Laboratory and Sandia National Laboratories.

These statistics are part of a new Research and Development Satellite Account that BEA is developing in partnership with the National Center for Science and Engineering Statistics of the National Science Foundation. The statistics complement BEA’s national data on R&D investment and provide BEA’s first state-by-state numbers on R&D.

The new statistics, covering 2017 to 2021, provide information on the contribution of R&D to GDP (known as R&D value added), compensation, and employment for the nation, all 50 states, and the District of Columbia. In the state statistics, R&D is attributed to the state where the R&D is performed.

Some highlights from the newly released statistics: