Features/VT-d

Introduction

This page describes VT-d emulation (guest vIOMMU) in QEMU and everything related to it.

Please see the References section for detailed information related to the technology.

General Usage

The guest vIOMMU is a general device in QEMU. Currently, only the Q35 platform supports a guest vIOMMU. Here is the simplest example of booting a Q35 machine with an e1000 card and a guest vIOMMU:
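A minimal sketch of such a command line is given here. It only prints the command so it can be reviewed before running. The image path `guest.qcow2`, the 2G memory size, the `net0` id and the `viommu_cmd` function name are illustrative assumptions, not values taken from this page.

```shell
#!/bin/sh
# Sketch: boot a Q35 guest with an e1000 NIC and an emulated Intel vIOMMU.
# IMAGE_PATH, the memory size and the netdev id are illustrative assumptions.
IMAGE_PATH="${IMAGE_PATH:-guest.qcow2}"

viommu_cmd() {
    # kernel-irqchip=split is chosen because interrupt remapping does not
    # work with the full in-kernel irqchip.
    echo qemu-system-x86_64 \
        -machine q35,accel=kvm,kernel-irqchip=split \
        -m 2G \
        -device intel-iommu,intremap=on \
        -netdev user,id=net0 \
        -device e1000,netdev=net0 \
        "$IMAGE_PATH"
}

viommu_cmd   # prints the command line; run `viommu_cmd | sh` to actually boot
```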

Here, intremap=[on|off] controls whether the guest vIOMMU supports interrupt remapping. To fully enable vIOMMU functionality, we need to provide intremap=on. Currently, interrupt remapping does not support the full kernel irqchip; only "split" and "off" are supported.

Most fully emulated devices (like the e1000 mentioned above) should now work seamlessly with the Intel vIOMMU. However, some special devices need extra care. These devices are:

- Assigned devices (such as vfio-pci)

- Virtio devices (such as virtio-net-pci)

We'll cover them separately later.

With Assigned Devices

Device assignment has special dependencies when it is enabled together with a vIOMMU device. A short introduction follows.

Command Line Example

We can use the following command to boot a VM with both a VT-d unit and an assigned device:
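A sketch of what such a command line can look like, printed rather than executed. The host address defaults to 02:00.0; the memory size, the `HOST_BDF` variable and the `assigned_cmd` function name are assumptions made for illustration.

```shell
#!/bin/sh
# Sketch: Q35 guest with a vIOMMU and one assigned host PCI device.
# HOST_BDF and the memory size are illustrative assumptions.
HOST_BDF="${HOST_BDF:-02:00.0}"

assigned_cmd() {
    # intel-iommu is listed before every other -device option, and
    # caching-mode=on is set because a vfio-pci device is present.
    echo qemu-system-x86_64 \
        -machine q35,accel=kvm,kernel-irqchip=split \
        -m 2G \
        -device intel-iommu,intremap=on,caching-mode=on \
        -device "vfio-pci,host=$HOST_BDF"
}

assigned_cmd
```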

Here, caching-mode=on is required when assigned devices are used together with the intel-iommu device. The above example assigns the host PCI device 02:00.0 to the guest.

Meanwhile, the intel-iommu device must be specified as the first device in the parameter list (before all other devices).

Device Assignment In General

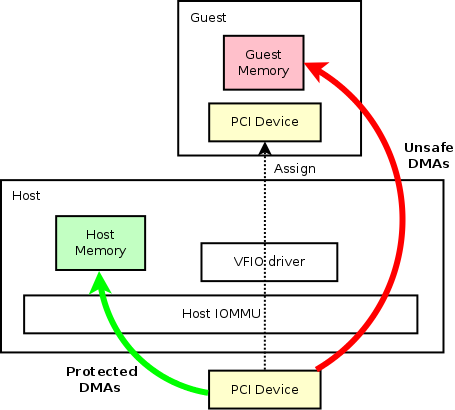

Below picture shows a basic device assignment use case in QEMU.

Let's consider a generic PCI device above, which is a real hardware attached to host system. The host can use generic kernel drivers to drive the device. In that case, all the reads/writes of that device will be protected by host IOMMU, which is safe. The protected DMAs are shown in green arrow.

The PCI device can also be assigned to a guest. By leveraging VFIO driver in the host kernel, the device can be exclusively managed by any userspace programs like QEMU. In the guest with assigned device, we should be able to see exactly the same device just like in the host (as shown in the imaginary line). Here, the hypervisor is capable of modifying the device information, like capability bits, etc.. But that's out of the scope of this page. By assigning the device to a guest, we can have merely the same performance in guest comparing to in the host.

On the other hand, when the device is assigned to the guest, guest memory address space is totally exposed to the hardware PCI device. So there would have no protection when the device do DMAs to the guest system, especially writes. Malicious writes can corrupt the guest in no time. Those unsafe DMAs are shown with a red arrow.

That's why we need a vIOMMU in the guest.

Use Case 1: Guest Device Assignment with vIOMMU

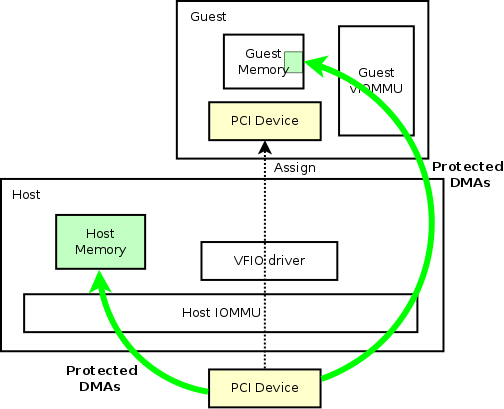

To protect the guest memory from malicious assigned devices, we can have vIOMMU in the guest, just like what host IOMMU does to the host. Then the picture will be like:

In the above figure, the only difference is that we introduced guest vIOMMU to do DMA protections. With that, guest DMAs are safe now.

Here, our use case targets at the guests that are using kernel drivers. One thing to mention is that, currently, this use case can have significant performance impact on the assigned device. The dynamic allocation of guest IOVA mapping will cause lots of work in the hypervisor in order to sync the shadow page table with the real hardware. However, in cases where the memory mapping is static, there should not have a significant impact on the performance (DPDK is one use case, which I'll mention specifically in the next chapter). With the general case of dynamic memory mapping, more work is needed to further reduce the negative impact that the protection has brought.

Use Case 2: Guest Device Assignment with vIOMMU - DPDK Scenario

DPDK (the Data Plane Development Kit) is widely used in high-performance scenarios. It moves kernel-space drivers into userspace for the sake of even better performance. Normally, a DPDK program runs directly on bare metal to achieve the best performance with specific hardware. It can also run inside a guest to drive either a device assigned from the host or an emulated device such as a virtio one.

For the guest DPDK use case mentioned, the host can still leverage DPDK to maximize packet delivery in the virtual switches. OVS-DPDK is a good example.

Nevertheless, DPDK introduces a problem: since we cannot really trust any userspace application, we cannot trust DPDK applications either, especially if they can have full access to system memory via the hardware and taint the kernel address space. Here, the vIOMMU protects the guest not only from misbehaving devices (for example, due to hardware errors) but also from buggy userspace drivers like DPDK (via the VFIO driver in the guest).

Actually, there are at least three ways that DPDK applications can manage a device in userspace (these methods are general and not limited to DPDK use cases):

- VFIO

- VFIO no-iommu mode

- UIO

UIO is becoming obsolete because it lacks features and is unsafe.

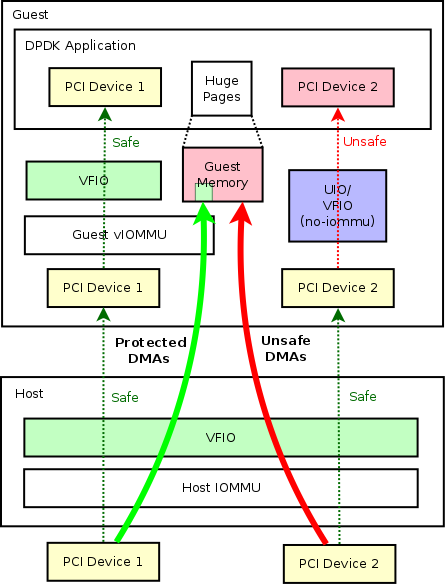

Let's consider a use case with guest DPDK application with two PCI devices. To clarify the difference of above methods, I used different ways to assign the device to the DPDK applications:

In above case, PCI Device 1 and PCI Device 2 are two devices that are assigned to guest DPDK applications. In the host, both of the devices are assigned to guest using kernel VFIO driver (here we cannot use either "VFIO no-iommu mode" or "UIO", the reason behind is out of the scope of this page though :). While in the guest, when we assign devices to DPDK applications, we can use one of the three methods mentioned above. However, only if we assign device with generic VFIO driver (which requires a vIOMMU) could we get a safely assigned device. Either assigning the device by "UIO" or "VFIO no-iommu mode" is unsafe.

In our case, PCI Device 1 is safe, while PCI Device 2 is unsafe.

Use Case 3: Nested Guest Device Assignment

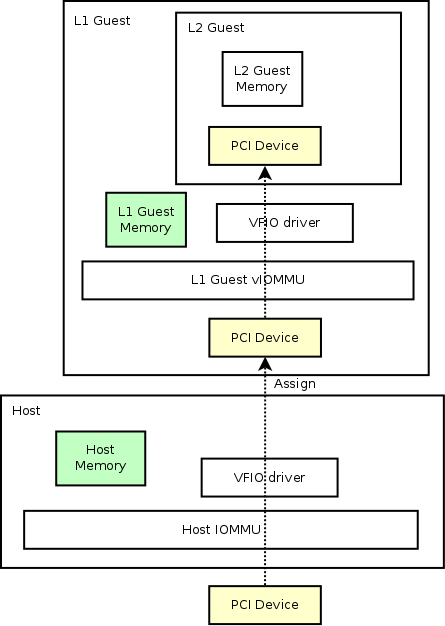

Another use case that device assignment with vIOMMU would help is that nested device assignment will work just like magic with it.

As we have mentioned in the first section, an IOMMU is required for device assignment to work. Here, to assign a L1 guest device to a L2 guest, we also need a vIOMMU inside L1 guest to build up the page mappings required for device assignment work.

Nested device assignment looks like:

With Virtio Devices

Virtio devices are special because by default they bypass DMA remapping (the kernel drivers do not use it). We need some special parameters to explicitly enable DMA remapping for them. Interrupt remapping, on the other hand, does not depend on the device type, so it is enabled or disabled just as for non-virtio devices.

The simplest command line to enable DMAR for a virtio-net-pci device would be:
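A sketch of such a command line, printed rather than executed. The ids `pcie.1` and `net0`, the chassis number, the user-mode netdev and the `virtio_dmar_cmd` function name are assumptions chosen for illustration; `disable-legacy=on,disable-modern=off` is one way to force modern virtio.

```shell
#!/bin/sh
# Sketch: virtio-net-pci behind a PCIe root port with DMA remapping enabled.
# The ids, chassis number and netdev backend are illustrative assumptions.
virtio_dmar_cmd() {
    echo qemu-system-x86_64 \
        -machine q35,accel=kvm,kernel-irqchip=split \
        -m 2G \
        -device intel-iommu,intremap=on,device-iotlb=on \
        -device ioh3420,id=pcie.1,chassis=1 \
        -netdev user,id=net0 \
        -device virtio-net-pci,bus=pcie.1,netdev=net0,disable-legacy=on,disable-modern=off,iommu_platform=on,ats=on
}

virtio_dmar_cmd
```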

Here we need a few extra things:

- "device-iotlb=on" for the emulated vIOMMU. This enables device IOTLB support in the vIOMMU, and it is used in pair with ats=on below.

- One more ioh3420 device. It makes sure the virtio-net-pci device sits under a PCIe root port.

- For the virtio-net-pci device itself:

  - put it under the created PCIe root port,

  - make sure modern virtio is used,

  - make sure iommu_platform=on is set for it,

  - set "ats=on", which is used in pair with "device-iotlb=on" above.

Similar things are required for other types of virtio PCI devices besides virtio-net-pci.

References

- Understanding Intel VT-d

- VT-d Specification

Daniel P. Berrangé

Setting up a nested KVM guest for developing & testing PCI device assignment with NUMA

Over the past few years the OpenStack Nova project has gained support for managing VM usage of NUMA, huge pages and PCI device assignment. One of the more challenging aspects of this is the availability of hardware to develop and test against. In an ideal world it would be possible to emulate everything we need using KVM, enabling developers / test infrastructure to exercise the code without needing access to bare metal hardware supporting these features.

KVM has long had support for emulating NUMA topology in guests, and the guest OS can use huge pages inside the guest. What was missing were the pieces around PCI device assignment, namely IOMMU support and the ability to associate NUMA nodes with PCI devices. Coincidentally, a QEMU community member was already working on providing emulation of the Intel IOMMU. I made a request to the Red Hat KVM team to fill in the other missing gap related to NUMA / PCI device association. Doing this required writing code to emulate a PCI/PCI-E Expander Bridge (PXB) device, which provides a lightweight host bridge that can be associated with a NUMA node. Individual PCI devices are then attached to this PXB instead of the main PCI host bridge, thus gaining affinity with a NUMA node.

With this, it is now possible to configure a KVM guest such that it can be used as a virtual host to test NUMA, huge page and PCI device assignment integration. The only real outstanding gap is support for emulating some kind of SRIOV network device, but even without this, it is still possible to test most of the Nova PCI device assignment logic – we’re merely restricted to using physical functions, no virtual functions. This blog post will describe how to configure such a virtual host.

First of all, this requires very new libvirt & QEMU to work; specifically, you’ll want libvirt >= 2.3.0 and QEMU 2.7.0. We could technically support earlier QEMU versions too, but that’s pending on a patch to libvirt to deal with some command line syntax differences in QEMU for older versions. No currently released Fedora has new enough packages available, so even on Fedora 25, you must enable the “Virtualization Preview” repository on the physical host to try this out – F25 has new enough QEMU, so you just need a libvirt update.

For the sake of illustration I’m using Fedora 25 as the OS inside the virtual guest, but any other Linux OS will do just fine. The initial task is to install a guest with 8 GB of RAM & 8 CPUs using virt-install.

The guest needs to use host CPU passthrough to ensure the guest gets to see VMX, as well as other modern instructions and have 3 virtual NUMA nodes. The first guest NUMA node will have 4 CPUs and 4 GB of RAM, while the second and third NUMA nodes will each have 2 CPUs and 2 GB of RAM. We are just going to let the guest float freely across host NUMA nodes since we don’t care about performance for dev/test, but in production you would certainly pin each guest NUMA node to a distinct host NUMA node.

QEMU emulates various different chipsets and historically for x86, the default has been to emulate the ancient PIIX4 (it is 20+ years old, dating from circa 1995). Unfortunately this is too ancient to be able to use the Intel IOMMU emulation with, so it is necessary to tell QEMU to emulate the marginally less ancient Q35 chipset (it is only 9 years old, dating from 2007).

The complete virt-install command line thus looks like

Once the installation is completed, shut down this guest, since it will be necessary to make a number of changes to the guest XML configuration to enable features that virt-install does not know about, using “virsh edit”. With the use of Q35, the guest XML should initially show three PCI controllers present: a “pcie-root”, a “dmi-to-pci-bridge” and a “pci-bridge”

PCI endpoint devices are not themselves associated with NUMA nodes, rather the bus they are connected to has affinity. The default pcie-root is not associated with any NUMA node, but extra PCI-E Expander Bridge controllers can be added and associated with a NUMA node. So while in edit mode, add the following to the XML config

It is not possible to plug PCI endpoint devices directly into the PXB, so the next step is to add PCI-E root ports into each PXB – we’ll need one port per device to be added, so 9 ports in total. This is where the requirement for libvirt >= 2.3.0 comes from – earlier versions mistakenly prevented you from adding more than one root port to the PXB

Notice that the value of the ‘bus’ attribute on the <address> element matches the value of the ‘index’ attribute on the <controller> element of the parent device in the topology. The PCI controller topology now looks like this

All the existing devices are attached to the “pci-bridge” (the controller with index == 2). The devices we intend to use for PCI device assignment inside the virtual host will be attached to the new “pcie-root-port” controllers. We will provide 3 e1000 per NUMA node, so that’s 9 devices in total to add

Note that we’re using the “user” networking, aka SLIRP. Normally one would never want to use SLIRP but we don’t care about actually sending traffic over these NICs, and so using SLIRP avoids polluting our real host with countless TAP devices.

The final configuration change is to simply add the Intel IOMMU device

It is a capability integrated into the chipset, so it does not need any <address> element of its own. At this point, save the config and start the guest once more. Use the “virsh domifaddrs” command to discover the IP address of the guest’s primary NIC and ssh into it.

We can now do some sanity check that everything visible in the guest matches what was enabled in the libvirt XML config in the host. For example, confirm the NUMA topology shows 3 nodes

Confirm that the PCI topology shows the three PCI-E Expander Bridge devices, each with three NICs attached

The IOMMU support will not be enabled yet as the kernel defaults to leaving it off. To enable it, we must update the kernel command line parameters with grub.
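One way to do this can be sketched as below, assuming a Fedora-style `/etc/default/grub` with a quoted `GRUB_CMDLINE_LINUX` line. The function name `enable_intel_iommu` and the `GRUB_DEFAULTS` variable are hypothetical helpers introduced for this example. After the edit, the grub configuration still has to be regenerated with `grub2-mkconfig`; its output path depends on the firmware setup, so it is left as a comment.

```shell
#!/bin/sh
# Sketch: append intel_iommu=on to the kernel command line defaults.
# GRUB_DEFAULTS is overridable so the function can be tried on a copy first.
GRUB_DEFAULTS="${GRUB_DEFAULTS:-/etc/default/grub}"

enable_intel_iommu() {
    # Nothing to do if the option is already present.
    if grep -q 'intel_iommu=on' "$GRUB_DEFAULTS"; then
        return 0
    fi
    # Append the option inside the quoted GRUB_CMDLINE_LINUX value.
    sed 's/^GRUB_CMDLINE_LINUX="\(.*\)"$/GRUB_CMDLINE_LINUX="\1 intel_iommu=on"/' \
        "$GRUB_DEFAULTS" > "$GRUB_DEFAULTS.new" &&
        mv "$GRUB_DEFAULTS.new" "$GRUB_DEFAULTS"
}

# As root inside the guest:
#   enable_intel_iommu
#   grub2-mkconfig -o <path to your grub.cfg>
```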

While the intel-iommu device in QEMU can do interrupt remapping, there is no way to enable that feature via libvirt at this time. So we need to set a hack for vfio
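A sketch of such a hack, assuming the `allow_unsafe_interrupts` parameter of the `vfio_iommu_type1` kernel module is what is wanted here. The file name `vfio.conf`, the `MODPROBE_DIR` variable and the function name are illustrative choices; this setting is only reasonable for dev/test environments.

```shell
#!/bin/sh
# Sketch: let VFIO assign devices without interrupt remapping (dev/test only).
# MODPROBE_DIR is overridable so the function can be tried without root.
MODPROBE_DIR="${MODPROBE_DIR:-/etc/modprobe.d}"

allow_unsafe_interrupts() {
    echo "options vfio_iommu_type1 allow_unsafe_interrupts=1" \
        > "$MODPROBE_DIR/vfio.conf"
}

# As root inside the guest:
#   allow_unsafe_interrupts
```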

This is also a good time to install libvirt and KVM inside the guest

Note we’re disabling the default libvirt network, since it’ll clash with the IP address range used by this guest. An alternative would be to edit the default.xml to change the IP subnet.

Now reboot the guest. When it comes back up, there should be a /dev/kvm device present in the guest.

If this is not the case, make sure the physical host has nested virtualization enabled for the “kvm-intel” or “kvm-amd” kernel modules.
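A quick check on the physical host can be sketched as follows. It reads the `nested` parameter that both modules expose under sysfs; the `SYSFS` variable and the `nested_enabled` function name are hypothetical helpers for this example.

```shell
#!/bin/sh
# Sketch: report whether nested virtualization is enabled on the host.
# SYSFS is overridable so the check can be exercised against a fake tree.
SYSFS="${SYSFS:-/sys}"

nested_enabled() {
    for mod in kvm_intel kvm_amd; do
        f="$SYSFS/module/$mod/parameters/nested"
        [ -r "$f" ] || continue
        # The parameter reads as Y or 1 when nesting is on.
        case "$(cat "$f")" in
            Y|y|1) return 0 ;;
        esac
    done
    return 1
}

# Usage:
#   nested_enabled && echo "nested virtualization is on"
```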

The IOMMU should have been detected and activated

The key message confirming everything is good is the last line there – if that’s missing, something went wrong – don’t be misled by the earlier “DMAR: IOMMU enabled” line, which merely says the kernel saw the “intel_iommu=on” command line option.

The IOMMU should also have registered the PCI devices into various groups

Libvirt meanwhile should have detected all the PCI controllers/devices

And if you look at a specific PCI device, it should report the NUMA node it is associated with and the IOMMU group it is part of

Finally, libvirt should also be reporting the NUMA topology

Everything should be ready and working at this point, so let’s try to install a nested guest, and assign it one of the e1000e PCI devices. For simplicity we’ll just do the exact same install for the nested guest as we used for the top level guest we’re currently running in. The only difference is that we’ll assign it a PCI device

If everything went well, you should now have a nested guest with an assigned PCI device attached to it.

This turned out to be a rather long blog posting, but this is not surprising as we’re experimenting with some cutting edge KVM features trying to emulate quite a complicated hardware setup, that deviates from normal KVM guest setup quite a way. Perhaps in the future virt-install will be able to simplify some of this, but at least for the short-medium term there’ll be a fair bit of work required. The positive thing though is that this has clearly demonstrated that KVM is now advanced enough that you can now reasonably expect to do development and testing of features like NUMA and PCI device assignment inside nested guests.

The next step is to convince someone to add QEMU emulation of an Intel SRIOV network device….volunteers please :-)

One Response to “Setting up a nested KVM guest for developing & testing PCI device assignment with NUMA”

very good article! I have a question, when I check the iommu group in L1 guest, the result as below, I just don’t know why and what I can do?

# dmesg | grep -i iommu |grep device
[ 2.160976] iommu: Adding device 0000:00:00.0 to group 0
[ 2.160985] iommu: Adding device 0000:00:01.0 to group 1
[ 2.161053] iommu: Adding device 0000:00:02.0 to group 2
[ 2.161071] iommu: Adding device 0000:00:02.1 to group 2
[ 2.161089] iommu: Adding device 0000:00:02.2 to group 2
[ 2.161107] iommu: Adding device 0000:00:02.3 to group 2
[ 2.161124] iommu: Adding device 0000:00:02.4 to group 2
[ 2.161142] iommu: Adding device 0000:00:02.5 to group 2
[ 2.161160] iommu: Adding device 0000:00:1d.0 to group 3
[ 2.161168] iommu: Adding device 0000:00:1d.1 to group 3
[ 2.161176] iommu: Adding device 0000:00:1d.2 to group 3
[ 2.161183] iommu: Adding device 0000:00:1d.7 to group 3
[ 2.161198] iommu: Adding device 0000:00:1f.0 to group 4
[ 2.161205] iommu: Adding device 0000:00:1f.2 to group 4
[ 2.161213] iommu: Adding device 0000:00:1f.3 to group 4
[ 2.161224] iommu: Adding device 0000:01:00.0 to group 2
[ 2.161228] iommu: Adding device 0000:02:01.0 to group 2
[ 2.161241] iommu: Adding device 0000:04:00.0 to group 2
[ 2.161252] iommu: Adding device 0000:05:00.0 to group 2
[ 2.161262] iommu: Adding device 0000:06:00.0 to group 2
[ 2.161272] iommu: Adding device 0000:07:00.0 to group 2

# cat hostdev.xml

# virsh attach-device rhel hostdev.xml
error: Failed to attach device from hostdev.xml
error: internal error: unable to execute QEMU command ‘device_add’: vfio error: 0000:07:00.0: group 2 is not viable


The Theseus OS Book

PCI passthrough of devices with QEMU

PCI passthrough can be used to allow a guest OS to directly access a physical device. The following instructions are a combination of this guide on host setup for VFIO passthrough devices and this kernel documentation on VFIO.

There are three main steps to prepare a device for PCI passthrough:

- Find device information

- Detach device from current driver

- Attach device to vfio driver

Once these steps are completed, the device slot information can be passed to QEMU using the vfio flag. For example, for device 59:00.0, we run:

Finding device information

First, run lspci -vnn to find the slot information, the kernel driver in use for the device, and the vendor ID and device code for the device you want to use. Below is sample output for a Mellanox ethernet card we'd like to access using PCI passthrough:

To detach the device from the kernel driver, run the following command, filling in the slot_info and driver_name with values you retrieved in the previous step.

In the above example, this would look like:
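A sketch of the detach step using the kernel's sysfs `unbind` interface. The slot is written with its full domain prefix; the driver name `mlx5_core` in the usage comment is an assumption for a Mellanox card, and `SYSFS` and `detach_driver` are hypothetical names introduced for this example.

```shell
#!/bin/sh
# Sketch: unbind a PCI device from its current kernel driver via sysfs.
# SYSFS is overridable so the function can be exercised against a fake tree.
SYSFS="${SYSFS:-/sys}"

detach_driver() {
    # $1 = full slot (domain:bus:device.function), $2 = current driver name
    echo "$1" > "$SYSFS/bus/pci/drivers/$2/unbind"
}

# As root, with an assumed driver name:
#   detach_driver 0000:59:00.0 mlx5_core
```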

If you run lspci -v now, you'll see that a kernel driver is no longer attached to this device.

First, load the VFIO driver by doing:

To attach the new driver, run the following command, filling in the vendor_id and device_code with values you retrieved in the first step.

e.g. echo 15b3 1019 > /sys/bus/pci/drivers/vfio-pci/new_id

Now, QEMU can be launched with direct access to the device.

Return device to the Host OS

To reset the device, you can either reboot the system or return the device to the host OS using the following commands (replacing $slot_info with the value previously retrieved):
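One way to do this is sketched here, assuming the generic sysfs `remove` and `rescan` files are used: the device is dropped from the PCI tree and then rediscovered so that the host driver can bind to it again. `SYSFS` and `return_to_host` are hypothetical names for this example.

```shell
#!/bin/sh
# Sketch: hand a passed-through device back to the host OS.
# SYSFS is overridable so the function can be exercised against a fake tree.
SYSFS="${SYSFS:-/sys}"

return_to_host() {
    # $1 = full slot, e.g. 0000:59:00.0
    echo 1 > "$SYSFS/bus/pci/devices/$1/remove"   # drop the device from the PCI tree
    echo 1 > "$SYSFS/bus/pci/rescan"              # rediscover it so a host driver can bind
}

# As root:
#   return_to_host 0000:59:00.0
```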

Note: access for unprivileged users

To give access to an unprivileged user to this VFIO device, find the IOMMU group the device belongs to:

for example:

for which we obtain the output below, in which 74 is the group number:

../../../../kernel/iommu_groups/74

Finally, give access to the current user via this command:
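The lookup and the permission change can be sketched together as follows, assuming the group is exposed as a character device named after its number under `/dev/vfio`. `SYSFS`, `VFIO_DEV_DIR` and both function names are hypothetical helpers for this example.

```shell
#!/bin/sh
# Sketch: find a device's IOMMU group and give a user access to it.
# SYSFS and VFIO_DEV_DIR are overridable for trying this against fake trees.
SYSFS="${SYSFS:-/sys}"
VFIO_DEV_DIR="${VFIO_DEV_DIR:-/dev/vfio}"

iommu_group_of() {
    # iommu_group is a symlink; its last path component is the group number.
    basename "$(readlink "$SYSFS/bus/pci/devices/$1/iommu_group")"
}

grant_vfio_access() {
    # $1 = full slot, $2 = user name
    chown "$2" "$VFIO_DEV_DIR/$(iommu_group_of "$1")"
}

# As root:
#   grant_vfio_access 0000:59:00.0 "$SUDO_USER"
```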


Features/KVM PCI Device Assignment


Assign PCI devices from your KVM host machine to guest virtual machines. A common example is assigning a network card to a guest.

- Name: Mark McLoughlin

Current status

- Targeted release: Fedora 11

- Last updated: 2009-03-11

- Percentage of completion: 100%

- Support in kvm.ko and qemu-kvm

- pci-stub.ko to reserve host devices

- libvirt host device enumeration and assignment [in libvirt 0.6.1]

- libvirt host device assignment [in libvirt 0.6.1]

- libvirt patches for detach/reattach [in libvirt 0.6.1]

- Backport remove_id

- Before assignment, unbind its driver, bind to pci-stub.ko and reset the device. [in libvirt 0.6.1]

- Ability to use assigned NICs for guest installation in virtinst [in 0.400.2 release]

- Ability to assign NICs in virt-manager [Expected before beta release] [in 0.7.1 release]

Detailed Description

KVM guests usually have access to either virtio devices or emulated devices. If the guest has access to suitable drivers, then virtio is preferred because it allows high performance to be achieved.

On host machines which have Intel VT-d or AMD IOMMU hardware support, another option is possible. PCI devices may be assigned directly to the guest, allowing the device to be used with minimal performance overhead.

However, device assignment is not always the best option even when it is available. Problems include:

- All of the guest's memory must be kept permanently in memory. This is because the guest may program the device with any address in its address space and the hypervisor has no way of handling a DMA page fault.

- It isn't possible to migrate the guest to another host. Even if the exact same hardware exists on the remote host, it is impossible for the hypervisor to migrate the device state between hosts.

- Graphics cards cannot currently be assigned because they require access to the video BIOS.

In summary, PCI device assignment is possible given the appropriate hardware, but it is only suitable in certain situations where the flexibility of memory over-commit and migration is not required.

Benefit to Fedora

Fedora users will be able to assign PCI network cards, hard disk controllers, phone line termination cards etc. to their virtual machines.

Device assignment is an important feature for any virtualization platform. As such, the feature will improve Fedora's virtualization standings in any competitive analysis.

The core device assignment support in the kernel includes:

- VT-d and AMD IOMMU support

- Device assignment in kvm.ko

Support is also required in qemu-kvm , the userspace component of KVM.

libvirt, python-virtinst and virt-manager also require support to be added in order to allow users to easily assign devices. Not only is the ability to assign devices needed, but also the ability to list what devices are available to assign.

Further complications include:

- Preventing a guest assigned device from being used in the host

- Detecting which host devices are assignable

- Ensuring that devices are properly reset.

How To Test

Perhaps the most straightforward test is to use virt-manager to create a Fedora 11 KVM guest with a PCI network card.

- Run virt-manager, click on New and go through the usual process of creating a guest

- In the network configuration screen, choose the "assign physical device" option and select an available network card from the list

- Start the guest install process, check that it completes successfully

- Check the newly installed guest reboots and has access to the assigned network card

Also see: Procedures from Virtualization Test Day .

- You need to have a machine with Intel VT-d or AMD IOMMU support.

- If you have only one network card on the host machine, the host will not be able to access the network during the test. So, for example, you would need to use local install media to start the installation.

User Experience

The user experience is similar to that described above. Users will be able to easily assign PCI devices to their KVM guests.

Dependencies

The changes described above are for the kernel, kvm, libvirt, python-virtinst and virt-manager.

Ensuring that VT-d support is solid enough that intel_iommu=on is the default.

Contingency Plan

If the tools support is not complete in time, no contingency is needed. The functionality just wouldn't be available.

However, if the functionality was so broken that we didn't want to expose it to users, we might have to disable the support in the tools - e.g. remove the device assignment UI from virt-manager.

Searching the KVM mailing list archives may prove helpful.

Some documentation for the libvirt support is available on the libvirt site .

Wikipedia references some information for Intel VT-d , including the full specification .

The AMD IOMMU spec is also available.

A post to [email protected] describing some of the requirements around device unbinding, reset and filtering.

bug #487103 tracks the needed backport of remove_id.

bug #479996 tracks some of the major issues which were fixed with VT-d support.

Release Notes

Fedora 11 expands its virtualization capabilities to include KVM PCI device assignment support. KVM users can now give virtual machines exclusive access to physical PCI devices using Fedora's virtualization tools, including the Virtual Machine Manager application.

Intel VT-d or AMD IOMMU hardware platform support is required in order for this feature to be available.

Comments and Discussion

- See Talk:Features/KVM PCI Device Assignment


- This page was last edited on 17 May 2009, at 16:52.

- Content is available under Attribution-Share Alike 4.0 International unless otherwise noted.


Windows Server 2025 drivers #1037

kroese commented Jan 28, 2024

Two days ago, Microsoft released a preview for Windows Server 2025. I tried to install it, but it does not accept the Windows Server 2022 drivers for , nor the Windows 11 one. Maybe it just needs a modified file, as I don't assume anything changed in the driver API compared to Win11/Win22? Anyway, it would be nice to be able to install the 2025 version, so my feature request is to include a release of the drivers.

kroese commented Jan 28, 2024 • edited

I just tried it again using the "Azure Edition" instead of the normal one, and that one accepts the Win22 drivers for some reason. EDIT: Never mind, it only accepts it now during the first screen, but a few screens further it cannot locate any disks, and when manually loading the w2k22 driver again it prompts "Cannot load driver".

kostyanf14 commented Jan 29, 2024

Hi. What build number of Windows Server do you have?

kroese commented Jan 29, 2024

Build 26040

It's a bit of a strange installation. The first step asks for drivers for a device that displays your hardware. I assumed it was asking for the SCSI drivers (as every other Windows setup asks for this as the first step), so in hindsight it makes sense that it doesn't accept the SCSI driver here. But I have no idea what a "device to display your hardware" is. I tried , , and lots of others, but none of them were correct. Server 2025 is based on Win11 LTSC, so I also don't understand why it cannot load the Win11 SCSI driver at the disk screen later on.

vrozenfe commented Jan 29, 2024

I tried all three versions, the "normal", the Azure and the Annual edition. All have the same problem that they don't accept any VirtIO driver.

Tried to install vNext 26040.1000.240122-1157 Standard Server on ; it seems to work fine with the viostor, netkvm and viogpudo drivers built for ws2022. My qemu command line:

#!/bin/sh
FLAGS=',hv_stimer,hv_synic,hv_vpindex,hv_relaxed,hv_spinlocks=0xfff,hv_vapic,hv_time,hv_frequencies,hv_runtime,hv_tlbflush,hv_reenlightenment,hv_stimer_direct,hv_ipi,+kvm_pv_unhalt'
VGA='-device virtio-vga,edid=on,xres=1280,yres=800'

Interesting... We have almost an identical QEMU command line, and the same version of the VirtIO drivers, so I am a bit puzzled... Did you get the screen 'Select a device to display hardware' in the first step of the installation? Did you use the win22 'viostor.inf' or the 'vioscsi.inf'?

I tried both viostor and vioscsi (with the corresponding device), and both work fine.

Do you run Windows with OVMF (secure boot or not) or SeaBIOS?

kroese commented Jan 29, 2024 • edited

I discovered that this command causes the error with loading the drivers: And this command causes NO error in loading the drivers: I really would like to know why. Because both commands are almost identical. The only obvious difference is the vs the . So it seems by using it causes Win2025 to fail to load any driver. Maybe it is just the driver that is incompatible, as the one seems to work fine.

fkonradmain commented Mar 15, 2024

So, I got almost all of the drivers to work, by hard code activating directly via PowerShell. I just wanted to share it with you, because it seems to be a certificate issue as well.

My environment:

About the installation: I wrote a script, that

# Define the paths to the MSI files
$virtioWinMSI = "D:\virtio-win-gt-x64.msi"
$guestAgentMSI = "D:\guest-agent\qemu-ga-x86_64.msi"

# Install Virtio-Win MSI
Start-Process msiexec.exe -ArgumentList "/i `"$virtioWinMSI`" /qn" -Wait

# Add the Root CA for the drivers to the TrustedPublisher and the Root CA store
Import-Certificate -FilePath "D:\cert\Virtio_Win_Red_Hat_CA.cer" -CertStoreLocation "Cert:\LocalMachine\TrustedPublisher"
Import-Certificate -FilePath "D:\cert\Virtio_Win_Red_Hat_CA.cer" -CertStoreLocation "Cert:\LocalMachine\Root"

# Install Virtio drivers
pnputil -i -a "D:\amd64\2k22\vioscsi.inf"
pnputil -i -a "D:\amd64\2k22\viostor.inf"
pnputil -i -a "D:\Balloon\2k22\amd64\balloon.inf"
pnputil -i -a "D:\NetKVM\2k22\amd64\netkvm.inf"
pnputil -i -a "D:\fwcfg\2k22\amd64\fwcfg.inf"
pnputil -i -a "D:\pvpanic\2k22\amd64\pvpanic.inf"
pnputil -i -a "D:\pvpanic\2k22\amd64\pvpanic-pci.inf"
pnputil -i -a "D:\qemufwcfg\2k22\amd64\qemufwcfg.inf"
pnputil -i -a "D:\qemupciserial\2k22\amd64\qemupciserial.inf"
pnputil -i -a "D:\smbus\2k22\amd64\smbus.inf"
pnputil -i -a "D:\sriov\2k22\amd64\vioprot.inf"
pnputil -i -a "D:\viofs\2k22\amd64\viofs.inf"
pnputil -i -a "D:\viogpudo\2k22\amd64\viogpudo.inf"
pnputil -i -a "D:\vioinput\2k22\amd64\vioinput.inf"
pnputil -i -a "D:\viorng\2k22\amd64\viorng.inf"
pnputil -i -a "D:\vioscsi\2k22\amd64\vioscsi.inf"
pnputil -i -a "D:\vioserial\2k22\amd64\vioser.inf"
pnputil -i -a "D:\viostor\2k22\amd64\viostor.inf"

# Install QEMU Guest Agent MSI
Start-Process msiexec.exe -ArgumentList "/i `"$guestAgentMSI`" /qn" -Wait

At the end, only one device is not recognized (no idea which it actually is, though):

vrozenfe commented Mar 15, 2024

| |

kroese commented May 25, 2024

| Solution provided in 1100 |

Welcome to QEMU’s documentation!

- Supported build platforms

- Deprecated features

- Removed features

- Introduction

- Device Emulation

- Keys in the graphical frontends

- Keys in the character backend multiplexer

- QEMU Monitor

- Disk Images

- QEMU virtio-net standby (net_failover)

- Direct Linux Boot

- Generic Loader

- Guest Loader

- QEMU Barrier Client

- VNC security

- TLS setup for network services

- Providing secret data to QEMU

- Client authorization

- Record/replay

- Managed start up options

- Managing device boot order with bootindex properties

- Virtual CPU hotplug

- Persistent reservation managers

- QEMU System Emulator Targets

- Multi-process QEMU

- Confidential Guest Support

- QEMU VM templating

- QEMU User space emulator

- QEMU disk image utility

- QEMU Storage Daemon

- QEMU Disk Network Block Device Server

- QEMU persistent reservation helper

- QEMU SystemTap trace tool

- QEMU 9p virtfs proxy filesystem helper

- Barrier client protocol

- Dirty Bitmaps and Incremental Backup

- D-Bus VMState

- D-Bus display

- Live Block Device Operations

- Persistent reservation helper protocol

- QEMU Machine Protocol Specification

- QEMU Guest Agent

- QEMU Guest Agent Protocol Reference

- QEMU QMP Reference Manual

- QEMU Storage Daemon QMP Reference Manual

- Vhost-user Protocol

- Vhost-user-gpu Protocol

- Vhost-vdpa Protocol

- Virtio balloon memory statistics

- VNC LED state Pseudo-encoding

- PCI IDs for QEMU

- QEMU PCI serial devices

- QEMU PCI test device

- POWER9 XIVE interrupt controller

- XIVE for sPAPR (pseries machines)

- NUMA mechanics for sPAPR (pseries machines)

- How the pseries Linux guest calculates NUMA distances

- pseries NUMA mechanics

- Legacy (5.1 and older) pseries NUMA mechanics

- QEMU and ACPI BIOS Generic Event Device interface

- QEMU TPM Device

- APEI tables generating and CPER record

- QEMU<->ACPI BIOS CPU hotplug interface

- QEMU<->ACPI BIOS memory hotplug interface

- QEMU<->ACPI BIOS PCI hotplug interface

- QEMU<->ACPI BIOS NVDIMM interface

- ACPI ERST DEVICE

- QEMU/Guest Firmware Interface for AMD SEV and SEV-ES

- QEMU Firmware Configuration (fw_cfg) Device

- IBM’s Flexible Service Interface (FSI)

- VMWare PVSCSI Device Interface

- Device Specification for Inter-VM shared memory device

- PVPANIC DEVICE

- QEMU Standard VGA

- Virtual System Controller

- VMCoreInfo device

- Virtual Machine Generation ID Device

- QEMU Community Processes

- QEMU Build and Test System

- Internal QEMU APIs

- Internal Subsystem Information

- TCG Emulation

How to emulate a pcie device which has a cpu?

Some PCIe devices now have a CPU on board, e.g. a DPU.

I want to use QEMU to emulate such a device.

Can QEMU support this requirement?

- qemu is open source, so just roll some of your own code and voilà, it will support this device – X3R0 Feb 16, 2022 at 9:45

2 Answers

QEMU's emulation framework doesn't support having devices which have fully programmable CPUs which can execute arbitrary guest-provided code in the same way as the main system emulated CPUs. (The main blocker is that all the CPUs in the system have to be the same architecture, eg all x86 or all Arm.)

For devices that have a CPU on them as part of their implementation but where that CPU is generally running fixed firmware that exposes a more limited interface to guest code, a QEMU device model can provide direct emulation of that limited interface, which is typically more efficient anyway.

In theory you could write a device that did a purely interpreted emulation of an onboard CPU, using QEMU facilities like timers and bottom-half callbacks to interpret a small chunk of instructions every so often. I don't know of any examples of anybody having written a device like that, though. It would be quite a lot of work and the speed of the resulting emulation would not be very fast.
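To make the "interpret a small chunk of instructions every so often" idea concrete, here is a minimal standalone sketch in Python. It does not use any QEMU API: the `ToyDeviceCpu` class, its three-opcode instruction set, and the `run_until_halt` driver are all hypothetical names invented for illustration, and the plain loop in `run_until_halt` merely stands in for whatever timer or bottom-half callback a real device model would use.

```python
from dataclasses import dataclass


@dataclass
class ToyDeviceCpu:
    """Hypothetical on-device CPU with a tiny, made-up instruction set."""
    program: list          # list of (opcode, argument) tuples
    pc: int = 0            # program counter
    acc: int = 0           # single accumulator register
    halted: bool = False

    def run_slice(self, budget):
        """Interpret at most `budget` instructions, then return to the caller."""
        executed = 0
        while executed < budget and not self.halted:
            op, arg = self.program[self.pc]
            self.pc += 1
            if op == "add":
                self.acc += arg
            elif op == "jnz":          # jump to `arg` if the accumulator is non-zero
                if self.acc != 0:
                    self.pc = arg
            elif op == "halt":
                self.halted = True
            else:
                raise ValueError(f"unknown opcode: {op}")
            executed += 1
        return executed


def run_until_halt(cpu, budget_per_tick):
    """Stand-in for a periodic callback: one bounded slice per 'tick'."""
    ticks = 0
    while not cpu.halted:
        cpu.run_slice(budget_per_tick)
        ticks += 1
    return ticks


# A small countdown loop: load 5, decrement until zero, then halt.
COUNTDOWN = [("add", 5), ("add", -1), ("jnz", 1), ("halt", 0)]
```

The point of the bounded `budget` is that the rest of the emulator gets control back between slices, which is also why the answer expects such an emulation to be slow.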

This can be done by running two instances of QEMU, one built for the host system architecture and the other for the secondary architecture, and connecting them through some interface.

This has been done by Xilinx by starting two separate QEMU processes with some inter-process communication between them, and by Neuroblade by building QEMU for the nios2 architecture as a shared library and then loading it from a QEMU process that models the host architecture (in this case the interface can simply be modelled by direct function calls).
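The two-instance approach boils down to a host side and a device side exchanging register accesses over some channel. The sketch below illustrates only that shape, under loud assumptions: the message layout (`MSG`), the opcodes, and the function names are invented here and are not the protocol used by Xilinx or Neuroblade, and a thread with a `socketpair` stands in for the second QEMU process.

```python
import socket
import struct
import threading

# Hypothetical wire format: opcode (1 byte), register address, value.
MSG = struct.Struct("<BII")
OP_READ, OP_WRITE, OP_QUIT = 0, 1, 2


def recv_exact(sock, n):
    """Read exactly n bytes from a stream socket."""
    buf = b""
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("peer closed the connection")
        buf += chunk
    return buf


def device_side(sock):
    """Stand-in for the second emulator instance: serves register accesses."""
    regs = {}
    while True:
        op, addr, value = MSG.unpack(recv_exact(sock, MSG.size))
        if op == OP_QUIT:
            break
        if op == OP_WRITE:
            regs[addr] = value
        # Reply with the current register contents for both reads and writes.
        sock.sendall(MSG.pack(op, addr, regs.get(addr, 0)))
    sock.close()


def host_transaction(sock, op, addr, value=0):
    """Send one request from the host side and wait for the reply."""
    sock.sendall(MSG.pack(op, addr, value))
    _, _, result = MSG.unpack(recv_exact(sock, MSG.size))
    return result


def demo():
    """Write a register on the device side, read it back, then shut down."""
    host_sock, dev_sock = socket.socketpair()
    worker = threading.Thread(target=device_side, args=(dev_sock,))
    worker.start()
    host_transaction(host_sock, OP_WRITE, 0x10, 0xABCD)
    value = host_transaction(host_sock, OP_READ, 0x10)
    host_sock.sendall(MSG.pack(OP_QUIT, 0, 0))
    worker.join()
    host_sock.close()
    return value
```

In the shared-library variant described above, `host_transaction` would collapse into a direct function call and the socket would disappear entirely.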

- How can I use QEMU to simulate mixed platforms?

- https://lists.gnu.org/archive/html/qemu-devel/2021-12/msg01969.html

You can refer to Red Hat's KVM guide: Assigning a PCI device. I followed the instructions and successfully assigned a PCI device to a guest before, but I am not sure if this works in a nested KVM environment. You can try to assign the same PCI device with an identical PCI ID to the guest and the nested one. For your problems, in my memory, KVM supports nested ...

KVM: PCI device assignment Chris Wright Red Hat August 10, 2010. Red Hat, Inc. 2 Agenda • Anatomy of a PCI device • Current mechanism • Shortcomings • Future. ... KVM Device Assignment: qemu • Add device to guest pci bus • Manages config space access - PCI sysfs files • Calls KVM ioctl interface. Red Hat, Inc. 14

Device Assignment with Nested Guest and DPDK Peter Xu <[email protected]> Red Hat Virtualization Team. 2 ... Assigned PCI Device QEMU Guest Memory Core API (1) Guest (2) Memory (3) (1) IO request (2) Allocate DMA buffer, setup device page table (IOVA->GPA) (3) Send MAP notification

To add a multi-function PCI device to a KVM guest virtual machine: Run the virsh edit guestname command to edit the XML configuration file for the guest virtual machine. In the <address> element, add a multifunction='on' attribute. This enables the use of other functions for the particular multifunction PCI device. Copy.

It's a USB controller/port/whatever. I found some code on the internet which suggests using -device pci-assign,host=0d:00.3 but that doesn't work any more: qemu-system-x86_64: -device pci-assign,host=0d:00.3: 'pci-assign' is not a valid device model name so I'm lost here. The script I use has "modprobe vfio-pci" and then uses vfio-pci where ...
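As the error message in that excerpt indicates, `pci-assign` is no longer a valid device model name, and the page above uses `vfio-pci` with a `host=` address instead. A small sketch of building that argument from a script could look like the following; the helper name `vfio_pci_args` and its loose address check are assumptions for illustration, not part of QEMU, and the host device is assumed to be already bound to the `vfio-pci` driver.

```python
import re

# Loose check for a PCI address such as "0d:00.3" or "0000:0d:00.3".
# It only validates the overall shape, not whether the device exists.
_BDF = re.compile(r"^(?:[0-9a-fA-F]{4}:)?[0-9a-fA-F]{2}:[0-9a-fA-F]{2}\.[0-7]$")


def vfio_pci_args(host_bdf):
    """Return the QEMU arguments that assign one host PCI device via vfio-pci."""
    if not _BDF.match(host_bdf):
        raise ValueError(f"not a PCI address: {host_bdf!r}")
    return ["-device", f"vfio-pci,host={host_bdf}"]


# Example: the address from the excerpt above.
args = vfio_pci_args("0d:00.3")
```

The returned list can be appended to the rest of a QEMU command line before passing it to something like `subprocess.run`.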

A device front end is how a device is presented to the guest. The type of device presented should match the hardware that the guest operating system is expecting to see. All devices can be specified with the --device command line option. Running QEMU with the command line options --device help will list all devices it is aware of. Using the ...

With this, it is now possible to configure a KVM guest such that it can be used as a virtual host to test NUMA, huge page and PCI device assignment integration. The only real outstanding gap is support for emulating some kind of SRIOV network device, but even without this, it is still possible to test most of the Nova PCI device assignment ...

PCI passthrough of devices with QEMU. PCI passthrough can be used to allow a guest OS to directly access a physical device. The following instructions are a combination of this guide on host setup for VFIO passthrough devices and this kernel documentation on VFIO. There are three main steps to prepare a device for PCI passthrough:

Support in kvm.ko and qemu-kvm; pci-stub.ko to reserve host devices; libvirt host device enumeration and assignment [in libvirt 0.6.1] libvirt host device assignment [in libvirt 0.6.1] libvirt patches for dettach/reattach [in libvirt 0.6.1] Backport remove_id; Before assignment, unbind its driver, bind to pci-stub.ko and reset the device. [in ...

QEMU, Device Assignment & vIOMMU. QEMU had device assignment since 2012. QEMU had vIOMMU emulation (VT-d) since 2014. Emulated devices are supported by vIOMMU. Using QEMU's memory API when DMA. DMA happens with QEMU's awareness. Either full-emulated, or para-virtualized (vhost is special!) Assigned devices are not supported by vIOMMU.

PCI device assignment is only available on hardware platforms supporting either Intel VT-d or AMD IOMMU. These Intel VT-d or AMD IOMMU specifications must be enabled in BIOS for PCI device assignment to function. Procedure 9.1. Preparing an Intel system for PCI device assignment. Enable the Intel VT-d specifications.

Why PCI Express? New features: enhancements as a successor Used as express is widely accepted in the market. Some device drivers require express They check if the device is really express Existing PCI device assignment isn't enough Hardware certification requires express Current PCI support is also limited

4 PCI device slots are configured with 5 emulated devices (two devices are in slot 1) by default. However, users can explicitly remove 2 of the emulated devices that are configured by default if the guest operating system does not require them for operation (the video adapter device in slot 2; and the memory balloon driver device in the lowest available slot, usually slot 3).

While the zpci device will be autogenerated if not specified, it is recommended to specify it explicitly so that you can pass s390-specific PCI configuration. For example, in order to pass a PCI device 0000:00:00.0 through to the guest, you would specify: qemu-system-s390x ... -device zpci,uid=1,fid=0,target=hostdev0,id=zpci1 \.

Double-click a VM Guest entry in the Virtual Machine Manager to open its console, then switch to the Details view with View Details. Click Add Hardware and choose the PCI Host Device or USB Host Device from the left list. A list of available PCI devices appears in the right part of the window.

QEMU PCI test device. pci-testdev is a device used for testing low level IO. The device implements up to three BARs: BAR0, BAR1 and BAR2. Each of BAR 0+1 can be memory or IO. Guests must detect BAR types and act accordingly. BAR 0+1 size is up to 4K bytes each. BAR 0+1 starts with the following header:

Procedure 16.1. Preparing an Intel system for PCI device assignment. Enable the Intel VT-d specifications. The Intel VT-d specifications provide hardware support for directly assigning a physical device to a virtual machine. These specifications are required to use PCI device assignment with Red Hat Enterprise Linux.

PCI device assignment breaks free of KVM Alex Williamson <[email protected]> 2 PCI 101 - Config space ... Signal host PCI errors to guest Notify QEMU of host suspend/resume

The only obvious difference is the device virtio-blk-pci vs the device scsi-hd. So it seems by using virtio-blk it causes Win2025 to fail to load any driver. Maybe it is just the viostor driver that is incompatible, as the vioscsi one seems to work fine.

A PCI network device (specified in the domain XML by the <source> element) can be directly connected to the guest using direct device assignment (sometimes referred to as passthrough). Due to limitations in standard single-port PCI ethernet card driver design, only Single Root I/O Virtualization (SR-IOV) virtual function (VF) devices can be assigned in this manner; to assign a standard single ...