
Overview of the Problem-Solving Mental Process

Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."


Rachel Goldman, PhD FTOS, is a licensed psychologist, clinical assistant professor, speaker, wellness expert specializing in eating behaviors, stress management, and health behavior change.


- Identify the Problem

- Define the Problem

- Form a Strategy

- Organize Information

- Allocate Resources

- Monitor Progress

- Evaluate the Results

Frequently Asked Questions

Problem-solving is a mental process that involves discovering, analyzing, and solving problems. The ultimate goal of problem-solving is to overcome obstacles and find a solution that best resolves the issue.

The best strategy for solving a problem depends largely on the unique situation. In some cases, people are better off learning everything they can about the issue and then using factual knowledge to come up with a solution. In other instances, creativity and insight are the best options.

It is not necessary to follow problem-solving steps sequentially. It is common to skip steps or even go back through steps multiple times until the desired solution is reached.

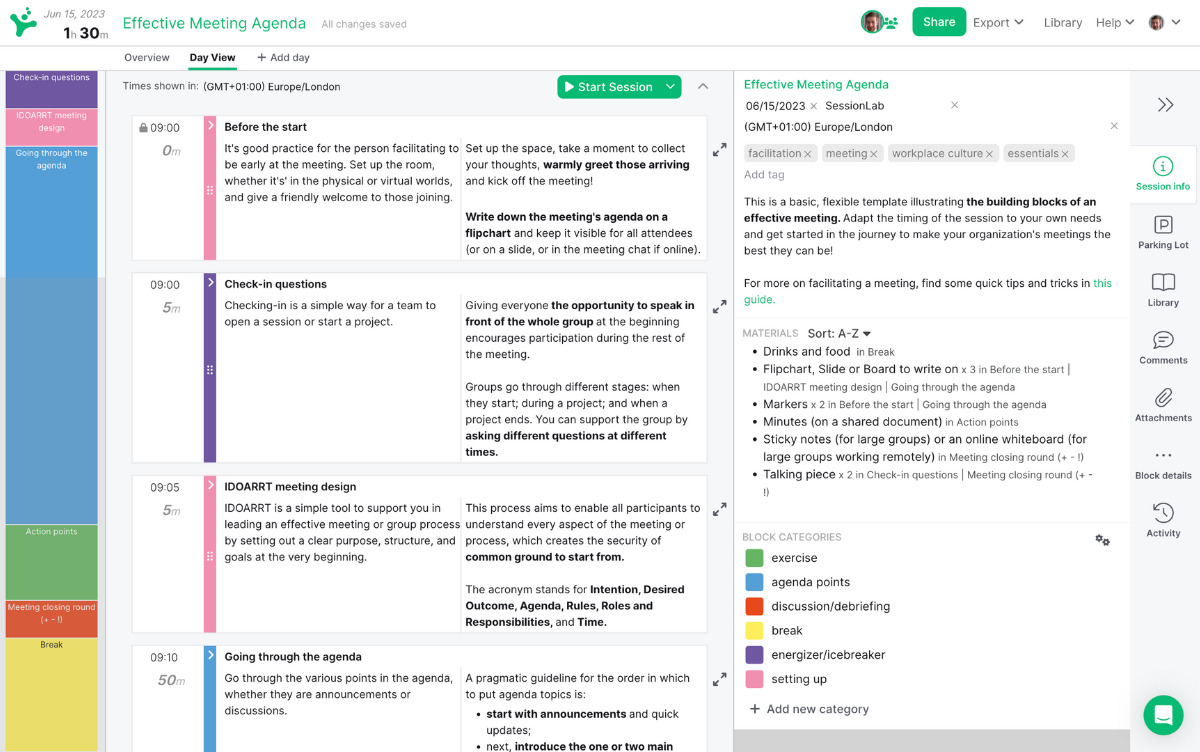

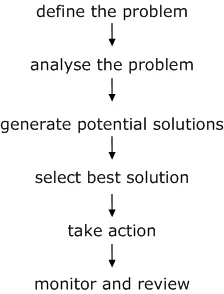

In order to correctly solve a problem, it is often important to follow a series of steps. Researchers sometimes refer to this as the problem-solving cycle. While this cycle is portrayed sequentially, people rarely follow a rigid series of steps to find a solution.

The following steps include developing strategies and organizing knowledge.

1. Identifying the Problem

While it may seem like an obvious step, identifying the problem is not always as simple as it sounds. In some cases, people might mistakenly identify the wrong source of a problem, which will make attempts to solve it inefficient or even useless.

Some strategies that you might use to figure out the source of a problem include:

- Asking questions about the problem

- Breaking the problem down into smaller pieces

- Looking at the problem from different perspectives

- Conducting research to figure out what relationships exist between different variables

2. Defining the Problem

After the problem has been identified, it is important to fully define the problem so that it can be solved. You can define a problem by operationally defining each aspect of the problem and setting goals for which aspects of the problem you will address.

At this point, you should focus on figuring out which aspects of the problem are facts and which are opinions. State the problem clearly and identify the scope of the solution.

3. Forming a Strategy

After the problem has been identified and defined, it is time to start brainstorming potential solutions. This step usually involves generating as many ideas as possible without judging their quality. Once several possibilities have been generated, they can be evaluated and narrowed down.

The next step is to develop a strategy to solve the problem. The approach used will vary depending upon the situation and the individual's unique preferences. Common problem-solving strategies include heuristics and algorithms.

- Heuristics are mental shortcuts that are often based on solutions that have worked in the past. They can work well if the problem is similar to something you have encountered before and are often the best choice if you need a fast solution.

- Algorithms are step-by-step strategies that are guaranteed to produce a correct result. While this approach is great for accuracy, it can also consume time and resources.

Heuristics are often best used when time is of the essence, while algorithms are a better choice when a decision needs to be as accurate as possible.
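The trade-off can be illustrated with a small, hypothetical coin-change example in Python. The function names and coin values below are assumptions chosen for illustration, and the code is only a sketch of the general idea: a "largest coin first" shortcut is quick but can miss the best answer, while an exhaustive step-by-step search is slower but is guaranteed to find the fewest coins.

```python
from itertools import combinations_with_replacement


def greedy_change(coins, amount):
    """Heuristic: always grab the largest coin that still fits."""
    picked = []
    for coin in sorted(coins, reverse=True):
        while amount >= coin:
            picked.append(coin)
            amount -= coin
    # The shortcut can fail to hit the amount exactly.
    return picked if amount == 0 else None


def exhaustive_change(coins, amount):
    """Algorithm: try every combination, fewest coins first.

    Assumes positive coin values and a positive amount.
    """
    ordered = sorted(coins, reverse=True)
    for count in range(1, amount // min(coins) + 1):
        for combo in combinations_with_replacement(ordered, count):
            if sum(combo) == amount:
                return list(combo)
    return None


coins = [1, 3, 4]  # hypothetical coin values
print(greedy_change(coins, 6))      # fast shortcut: three coins
print(exhaustive_change(coins, 6))  # exhaustive search: two coins
```

With these values the shortcut settles for `[4, 1, 1]`, while the exhaustive search finds `[3, 3]`. The exhaustive version checks many more combinations to get there, which mirrors the time and resource cost described above.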

4. Organizing Information

Before coming up with a solution, you need to first organize the available information. What do you know about the problem? What do you not know? The more information that is available, the better prepared you will be to come up with an accurate solution.

When approaching a problem, it is important to make sure that you have all the data you need. Making a decision without adequate information can lead to biased or inaccurate results.

5. Allocating Resources

Of course, we don't always have unlimited money, time, and other resources to solve a problem. Before you begin to solve a problem, you need to determine how high a priority it is.

If it is an important problem, it is probably worth allocating more resources to solving it. If, however, it is a fairly unimportant problem, then you do not want to spend too much of your available resources on coming up with a solution.

At this stage, it is important to consider all of the factors that might affect the problem at hand. This includes looking at the available resources, deadlines that need to be met, and any possible risks involved in each solution. After careful evaluation, a decision can be made about which solution to pursue.

6. Monitoring Progress

After selecting a problem-solving strategy, it is time to put the plan into action and see if it works. This step might involve trying out different solutions to see which one is the most effective.

It is also important to monitor the situation after implementing a solution to ensure that the problem has been solved and that no new problems have arisen as a result of the proposed solution.

Effective problem-solvers tend to monitor their progress as they work towards a solution. If they are not making good progress toward reaching their goal, they will reevaluate their approach or look for new strategies.

7. Evaluating the Results

After a solution has been reached, it is important to evaluate the results to determine if it is the best possible solution to the problem. This evaluation might be immediate, such as checking the results of a math problem to ensure the answer is correct, or it can be delayed, such as evaluating the success of a therapy program after several months of treatment.

Once a problem has been solved, it is important to take some time to reflect on the process that was used and evaluate the results. This will help you to improve your problem-solving skills and become more efficient at solving future problems.

A Word From Verywell

It is important to remember that there are many different problem-solving processes with different steps, and this is just one example. Problem-solving in real-world situations requires a great deal of resourcefulness, flexibility, resilience, and continuous interaction with the environment.


You can become a better problem solver by:

- Practicing brainstorming and coming up with multiple potential solutions to problems

- Being open-minded and considering all possible options before making a decision

- Breaking down problems into smaller, more manageable pieces

- Asking for help when needed

- Researching different problem-solving techniques and trying out new ones

- Learning from mistakes and using them as opportunities to grow

It's important to communicate openly and honestly with your partner about what's going on. Try to see things from their perspective as well as your own. Work together to find a resolution that works for both of you. Be willing to compromise and accept that there may not be a perfect solution.

Take breaks if things are getting too heated, and come back to the problem when you feel calm and collected. Don't try to fix every problem on your own—consider asking a therapist or counselor for help and insight.

If you've tried everything and there doesn't seem to be a way to fix the problem, you may have to learn to accept it. This can be difficult, but try to focus on the positive aspects of your life and remember that every situation is temporary. Don't dwell on what's going wrong—instead, think about what's going right. Find support by talking to friends or family. Seek professional help if you're having trouble coping.


By Kendra Cherry, MSEd

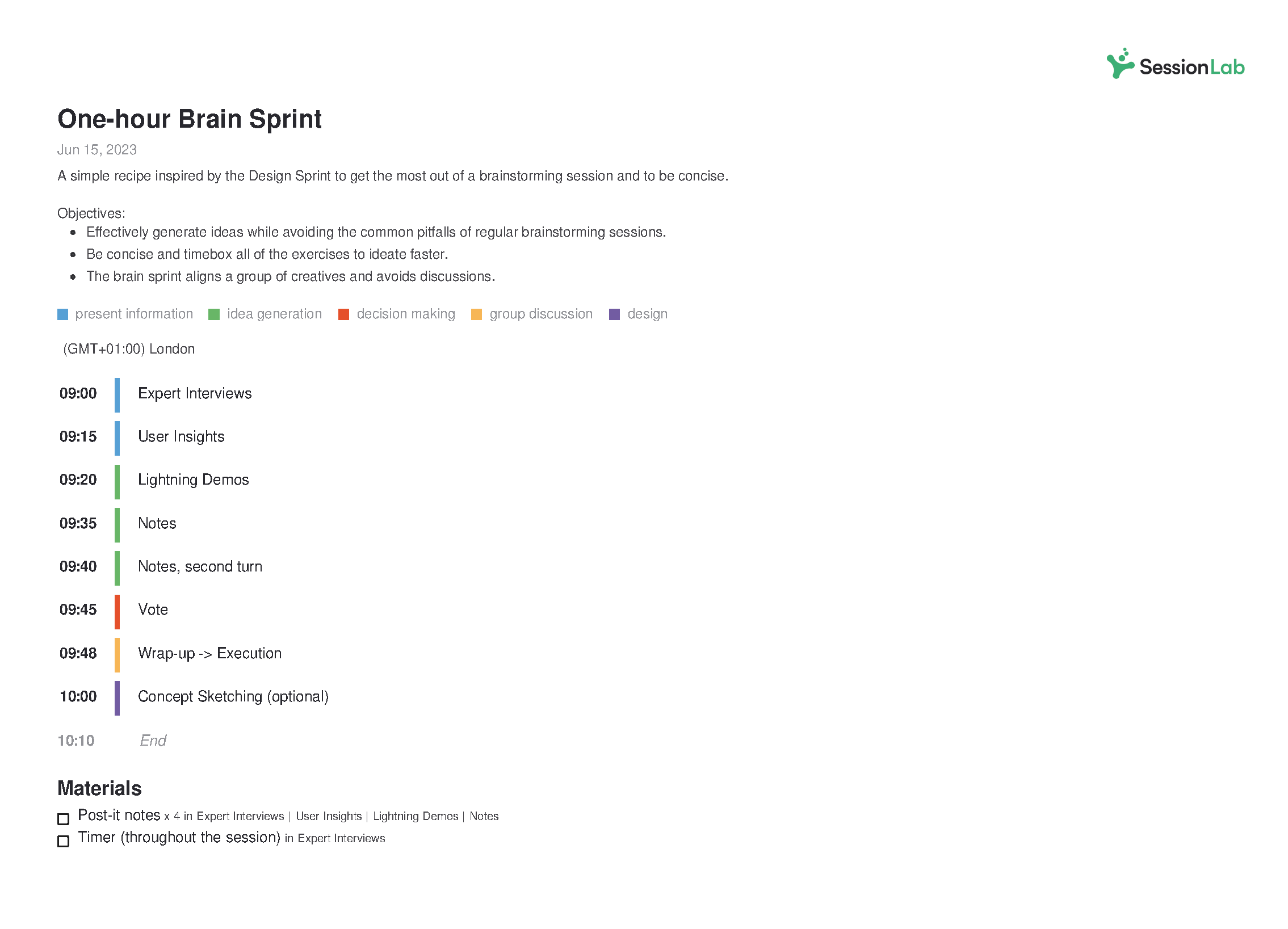

What is Problem Solving? (Steps, Techniques, Examples)

By Status.net Editorial Team on May 7, 2023 — 5 minutes to read

What Is Problem Solving?

Definition and Importance

Problem solving is the process of finding solutions to obstacles or challenges you encounter in your life or work. It is a crucial skill that allows you to tackle complex situations, adapt to changes, and overcome difficulties with ease. Mastering this ability will contribute to both your personal and professional growth, leading to more successful outcomes and better decision-making.

Problem-Solving Steps

The problem-solving process typically includes the following steps:

- Identify the issue: Recognize the problem that needs to be solved.

- Analyze the situation: Examine the issue in depth, gather all relevant information, and consider any limitations or constraints that may be present.

- Generate potential solutions: Brainstorm a list of possible solutions to the issue, without immediately judging or evaluating them.

- Evaluate options: Weigh the pros and cons of each potential solution, considering factors such as feasibility, effectiveness, and potential risks.

- Select the best solution: Choose the option that best addresses the problem and aligns with your objectives.

- Implement the solution: Put the selected solution into action and monitor the results to ensure it resolves the issue.

- Review and learn: Reflect on the problem-solving process, identify any improvements or adjustments that can be made, and apply these learnings to future situations.

Defining the Problem

To start tackling a problem, first, identify and understand it. Analyzing the issue thoroughly helps to clarify its scope and nature. Ask questions to gather information and consider the problem from various angles. Some strategies to define the problem include:

- Brainstorming with others

- Asking the 5 Ws and 1 H (Who, What, When, Where, Why, and How)

- Analyzing cause and effect

- Creating a problem statement

Generating Solutions

Once the problem is clearly understood, brainstorm possible solutions. Think creatively, keep an open mind, and draw on lessons from past experience. Consider:

- Creating a list of potential ideas to solve the problem

- Grouping and categorizing similar solutions

- Prioritizing potential solutions based on feasibility, cost, and resources required

- Involving others to share diverse opinions and inputs

Evaluating and Selecting Solutions

Evaluate each potential solution, weighing its pros and cons. To facilitate decision-making, use techniques such as:

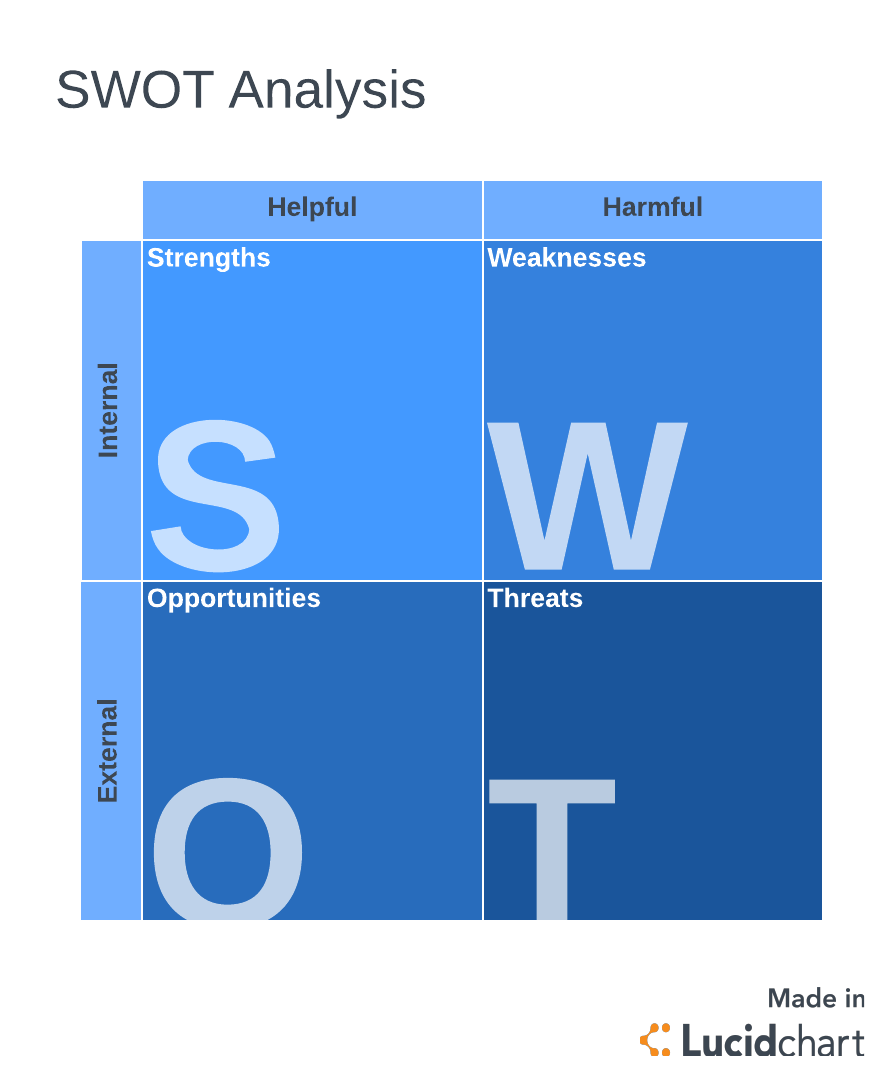

- SWOT analysis (Strengths, Weaknesses, Opportunities, Threats)

- Decision-making matrices

- Pros and cons lists

- Risk assessments

After evaluating, choose the most suitable solution based on effectiveness, cost, and time constraints.

Implementing and Monitoring the Solution

Implement the chosen solution and monitor its progress. Key actions include:

- Communicating the solution to relevant parties

- Setting timelines and milestones

- Assigning tasks and responsibilities

- Monitoring the solution and making adjustments as necessary

- Evaluating the effectiveness of the solution after implementation

Utilize feedback from stakeholders and consider potential improvements. Remember that problem-solving is an ongoing process that can always be refined and enhanced.

Problem-Solving Techniques

During each step, you may find it helpful to utilize various problem-solving techniques, such as:

- Brainstorming: A free-flowing, open-minded session where ideas are generated and listed without judgment, to encourage creativity and innovative thinking.

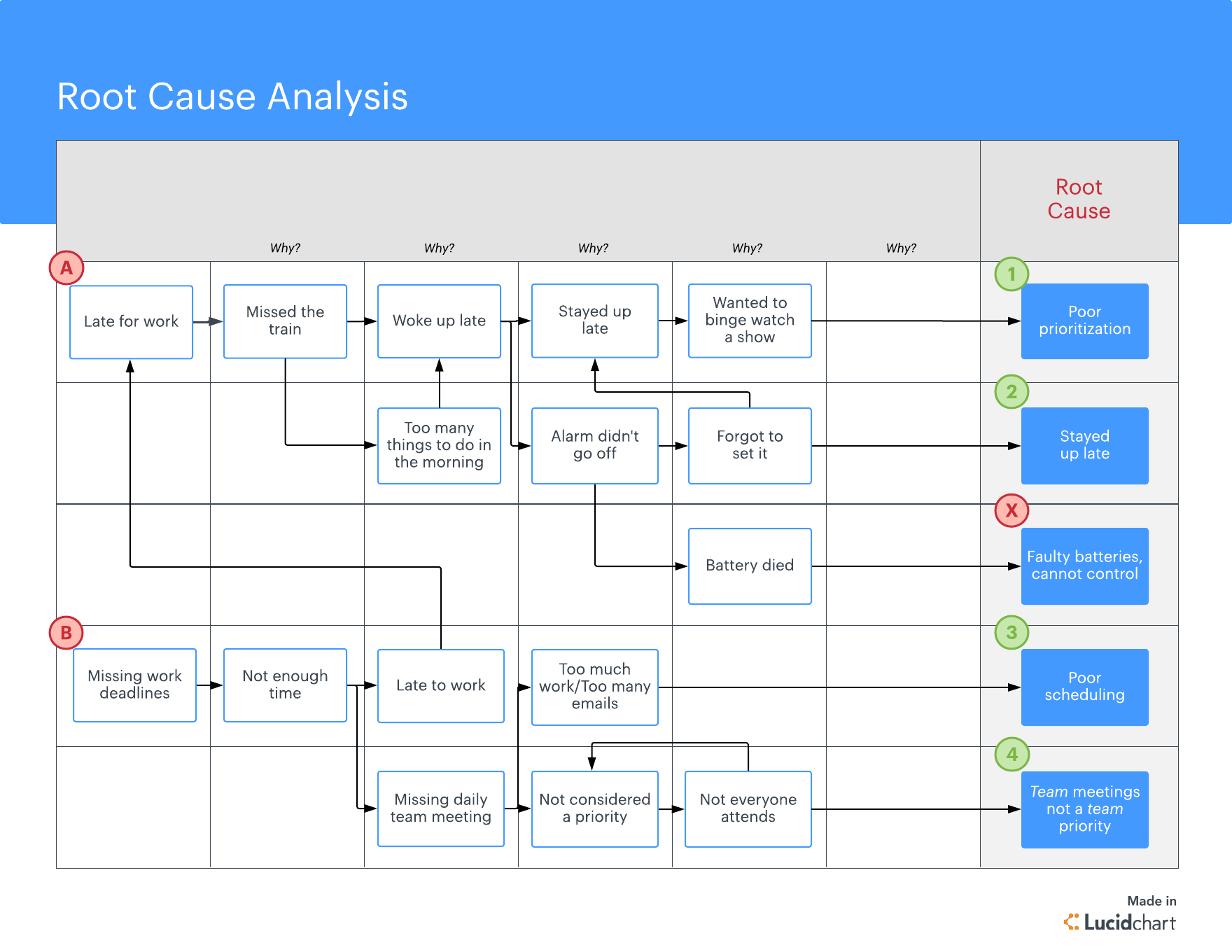

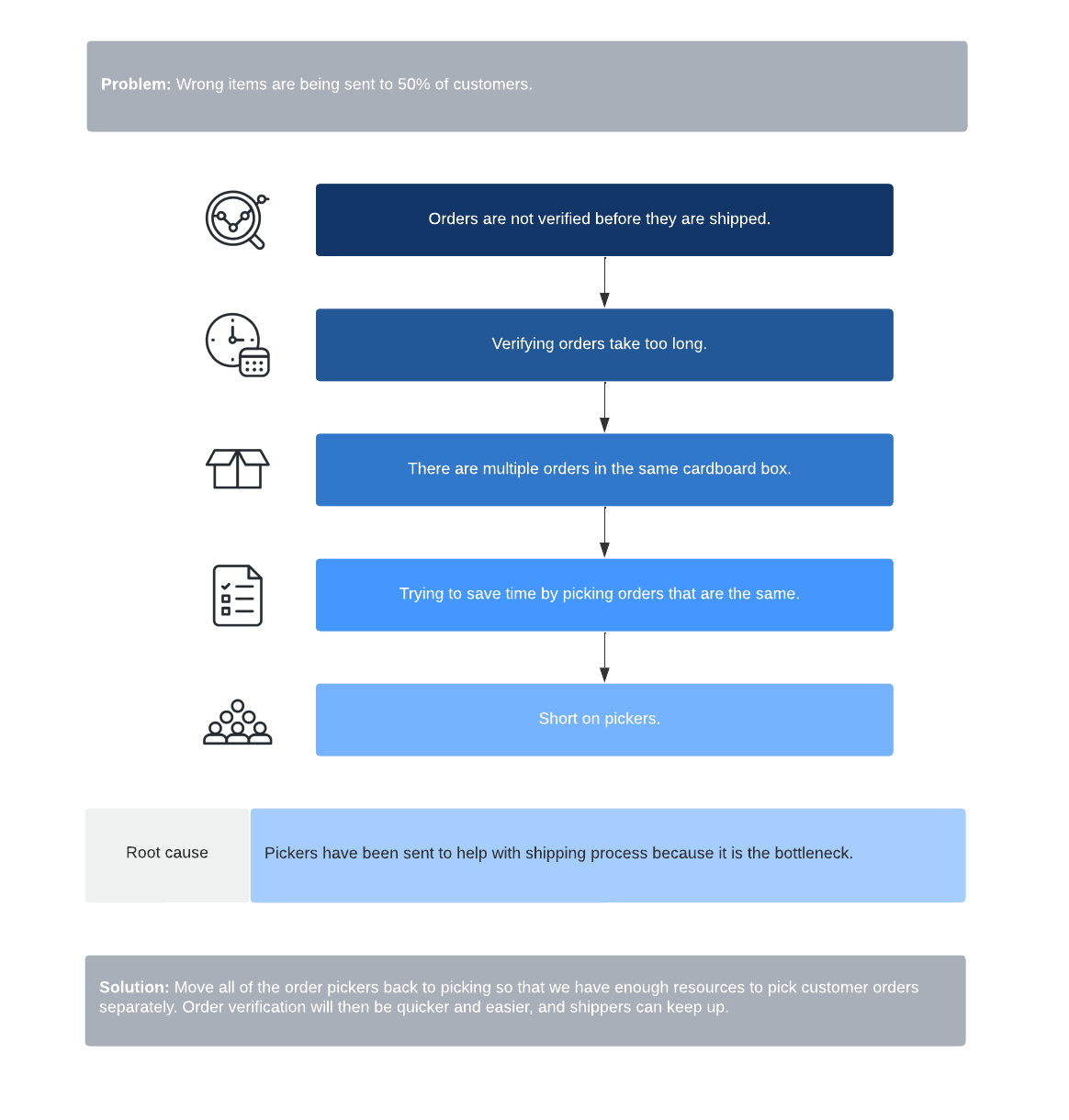

- Root cause analysis: A method that explores the underlying causes of a problem to find the most effective solution rather than addressing superficial symptoms.

- SWOT analysis: A tool used to evaluate the strengths, weaknesses, opportunities, and threats related to a problem or decision, providing a comprehensive view of the situation.

- Mind mapping: A visual technique that uses diagrams to organize and connect ideas, helping to identify patterns, relationships, and possible solutions.

Brainstorming

When facing a problem, start by conducting a brainstorming session. Gather your team and encourage an open discussion where everyone contributes ideas, no matter how outlandish they may seem. This helps you:

- Generate a diverse range of solutions

- Encourage all team members to participate

- Foster creative thinking

When brainstorming, remember to:

- Reserve judgment until the session is over

- Encourage wild ideas

- Combine and improve upon ideas

Root Cause Analysis

For effective problem-solving, identifying the root cause of the issue at hand is crucial. Try these methods:

- 5 Whys: Ask “why” five times to get to the underlying cause.

- Fishbone Diagram: Create a diagram representing the problem and break it down into categories of potential causes.

- Pareto Analysis: Determine the few most significant causes underlying the majority of problems.
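As a rough illustration of Pareto analysis, the sketch below ranks causes by how often they occur and keeps the top ones until they cover a chosen share of all problems. The 80% threshold is a common convention rather than something stated here, and the function name and complaint counts are hypothetical.

```python
def pareto_vital_few(cause_counts, threshold=0.8):
    """Return the most frequent causes that together reach `threshold`.

    cause_counts maps each cause to how many problems it produced.
    The 0.8 default is an assumed, conventional cut-off.
    """
    total = sum(cause_counts.values())
    if total == 0:
        return []
    # Most frequent causes first.
    ranked = sorted(cause_counts.items(), key=lambda item: item[1], reverse=True)
    vital, running = [], 0
    for cause, count in ranked:
        if running / total >= threshold:
            break
        vital.append(cause)
        running += count
    return vital


# Hypothetical complaint tallies, for illustration only.
complaints = {
    "late delivery": 45,
    "damaged item": 30,
    "wrong item": 15,
    "billing error": 7,
    "other": 3,
}
print(pareto_vital_few(complaints))
```

For these made-up numbers, three of the five causes account for 90 of the 100 complaints, so those three are the ones worth addressing first.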

SWOT Analysis

SWOT analysis helps you examine the Strengths, Weaknesses, Opportunities, and Threats related to your problem. To perform a SWOT analysis:

- List your problem’s strengths, such as relevant resources or strong partnerships.

- Identify its weaknesses, such as knowledge gaps or limited resources.

- Explore opportunities, like trends or new technologies, that could help solve the problem.

- Recognize potential threats, like competition or regulatory barriers.

SWOT analysis aids in understanding the internal and external factors affecting the problem, which can help guide your solution.

Mind Mapping

A mind map is a visual representation of your problem and potential solutions. It enables you to organize information in a structured and intuitive manner. To create a mind map:

- Write the problem in the center of a blank page.

- Draw branches from the central problem to related sub-problems or contributing factors.

- Add more branches to represent potential solutions or further ideas.

Mind mapping allows you to visually see connections between ideas and promotes creativity in problem-solving.

Examples of Problem Solving in Various Contexts

In the business world, you might encounter problems related to finances, operations, or communication. Applying problem-solving skills in these situations could look like:

- Identifying areas of improvement in your company’s financial performance and implementing cost-saving measures

- Resolving internal conflicts among team members by listening and understanding different perspectives, then proposing and negotiating solutions

- Streamlining a process for better productivity by removing redundancies, automating tasks, or re-allocating resources

In educational contexts, problem-solving can be seen in various aspects, such as:

- Addressing a gap in students’ understanding by employing diverse teaching methods to cater to different learning styles

- Developing a strategy for successful time management to balance academic responsibilities and extracurricular activities

- Seeking resources and support to provide equal opportunities for learners with special needs or disabilities

Everyday life is full of challenges that require problem-solving skills. Some examples include:

- Overcoming a personal obstacle, such as improving your fitness level, by establishing achievable goals, measuring progress, and adjusting your approach accordingly

- Navigating a new environment or city by researching your surroundings, asking for directions, or using technology like GPS to guide you

- Dealing with a sudden change, like a change in your work schedule, by assessing the situation, identifying potential impacts, and adapting your plans to accommodate the change


General Problem-solving Process

Introduction

The following is a general problem-solving process that characterizes the steps that can be followed by any discipline when approaching and rationally solving a problem. When used in conjunction with reasoning and decision-making skills, the process works well for one or more participants. Its main purpose is to guide participants through a procedure for solving many types of problems that have a varying level of complexity.

More importantly, the process is both descriptive and prescriptive. This means it can be used to look at past, present, and potential future problems and their solutions in a clear, systematic way that is consistent and able to be generalized. At each step along the way to a solution, various types of research must be conducted to successfully accomplish the steps of the process and thus arrive at an effective, viable solution. A description of research follows the problem solving process. In both the problem solving and research processes, good decision-making, critical thinking, and self-assessment are vital to a high-quality result. At each step in the process, the problem-solver may need to go back to earlier steps and reexamine decisions made. It is this revisiting of earlier choices that makes the process iterative and allows for improvement of the final outcomes.

Steps in the General Problem-solving Process

- Become aware of the problem

- Define the problem

- Choose the particular problem to be solved

- Identify potential solutions

- Evaluate the valid potential solutions to select the best one

- Develop an action plan to implement the best solution

Become Aware of the Problem

The first step of any problem-solving process is becoming aware. This awareness can be generated from inside or outside the individual. Many times the awareness is part of a stated task or assignment given to the individual by someone else. In other cases, a person can observe a specific problem or a clear gap in knowledge that they feel must be addressed. In the end, as long as a problem is perceived by oneself or others, awareness of this problem is achieved. However, the level of awareness and the research associated with this level is vital to the initiation of the problem solving process.

Define the Problem

After the problem is recognized, research is conducted. Initially, research must be done to help define the problem as well as identify the assumptions being made and determine the parameters of the situation.

In the end, the main purpose of this step is to evaluate the constraints on the problem and the problem solver to better understand the goals to be reached. Once these goals are identified, the objectives that must be attained in order to reach the goals can be specified and utilized to help narrow the scope of the problem. Once the goals and objectives are clearly understood, the problem to be solved can be selected. An easy way to think of goals and objectives is that goals are what you hope to achieve, while objectives are how you will go about accomplishing the goal.

Just as research might have been the impetus for engaging in the problem solving process (it made the problem-solver aware), research is vital to the specification of parameters and assumptions. The heart of this step is the series of decisions the problem-solver makes to narrow the scope of the problem. Parameters are those factual boundaries and constraints set by the problem statement or discovered through research. Assumptions, by contrast, are those constraints that the problem-solver sets without having incontrovertible factual backing for those decisions. A clear understanding of the assumptions being made when engaging in the process is important. If an unsatisfactory outcome is reached, it may be necessary to adjust these assumptions. Even if the final solution is arrived at, knowing one’s assumptions assists the problem-solver in explaining and defending their conclusions.

Choose Which Problem to Be Solved

Once a goal and set of objectives have been specified and the parameters and assumptions have been identified, it is necessary to choose a particular problem to solve. Any large problem can be broken into smaller problems that are in turn broken into even smaller problems to be addressed. Each problem is an achievable goal that consists of objectives. Each of these objectives is a sub-problem that must be solved first in order to solve the larger overarching problem.

There are many different reasons to choose a particular problem to solve. It is important to conduct a risk assessment on the problems involved and examine why the problem is being solved. For example, the problem might be the most important, most immediate, most far-reaching, or most politically important at the moment. Whatever the choice, the individual or group must have clear reasons for choosing the problem to be solved.

Once the aspects of the problem are known, the problem must be phrased as a question that each solution can answer affirmatively. An example of a problem statement might be "How might I increase the use of problem solving techniques by college graduates of four year universities in America today?" This specific type of question has four separate parts: question statement, active verb, object, and parameters and assumptions.

The first part is the question statement, which transforms the problem into a question to be answered. It takes the form "How might I" or "In what ways might I." If the process is being undertaken by a group, it should be phrased with "we" instead of "I." At times, an individual or a group may examine an issue concerning a third party. For example, students may work on problems facing their institution or that must be handled by the government. In this case, the question might become "How might our school" or "In what way might the United States government." In all of these cases, the aim is to create a question that must be answered as well as to specify the group designated to answer it. Each solution must then apply to that group and be able to be accomplished by them as well.

Next is the active verb or the action used to solve the problem. Some of the most useful of these active verbs are the ones that describe change without specifying an absolute end or any one action. For example: Accelerate, alleviate, broaden, increase, minimize, reduce, and stabilize. It is important to realize that the stronger the verb, the more difficult it might be to accomplish workable solutions. For example, it is easier to reduce crime than to eliminate it. Keep this in mind when choosing verbs because verb choice is vital to good solution finding. If necessary, two or more verbs can be used and should be separated by the following conjunctions: And, Or, or And/Or. To assist in the verb choice process, some active verbs are listed below:

Active Verbs

Figure 2 is a list of action verbs that can be used when formulating a problem statement.

The third part of the problem statement is the object of the sentence that relates to the problem being solved. The object states what is being acted upon by the verb to help solve the problem. Each solution must directly or indirectly affect this object. In our earlier statement, "How might I increase the use of problem solving techniques by college graduates of four year universities in America today?" the object is "use of problem solving."

Finally, the parameters and assumptions that are bounding the solution are listed. These help to focus the solutions that are generated. Though parameters are not necessary, they are often useful to help limit and focus the scope of the process. Be careful not to leave too broad a problem. Broad problems lead to a large number of solutions that can be difficult to choose between and that, once implemented, produce weak or ineffectual results. At the same time, an overly narrow problem statement can lead to a small number of solutions that provide few usable results. In our example, "college graduates of four year universities in America today?" are the parameters. They are introduced by the preposition ‘by’ and mark who should have their use of problem solving increased.

Once the problem statement is phrased properly, solutions can be generated. However, it is important to note that this statement might have to be modified as more research becomes available or as the remainder of the process is worked through. As the process is iterated, small modifications to the problem statement can be made and refinements in the scope and specificity accomplished through changes in the verb, object and parameters.
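The four-part structure described above can be sketched as a small Python data class. The class and field names are hypothetical and chosen only for illustration; the example values come from the sample problem statement used earlier.

```python
from dataclasses import dataclass


@dataclass
class ProblemStatement:
    """Hypothetical container for the four parts of a problem statement."""
    question_stem: str  # e.g. "How might I" or "In what ways might we"
    verb: str           # active verb describing the change
    obj: str            # what the verb acts upon
    parameters: str     # parameters and assumptions bounding the solutions

    def render(self):
        # Join the four parts into a single question.
        return f"{self.question_stem} {self.verb} {self.obj} {self.parameters}?"


statement = ProblemStatement(
    question_stem="How might I",
    verb="increase",
    obj="the use of problem solving techniques",
    parameters="by college graduates of four year universities in America today",
)
print(statement.render())
```

Keeping the parts separate makes it easy to adjust one of them, such as swapping "increase" for a weaker or stronger verb, as the statement is refined over later iterations.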

Identify Potential Solutions

Once the problem statement has been chosen, it is necessary to generate potential solutions. This is the most creative portion of the process. Even so, conducting research into existing solutions to the problem or similar problems is helpful to generate workable solutions. The main criterion for judging solutions in this step is simply whether or not they answer the problem statement with a ‘yes.’ At this point, it may also be possible to eliminate some solutions because they do not agree with commonly held moral and ethical guidelines. Even though not stated specifically, these guidelines are understood and assumed to be upheld when reviewing solutions. For example, a solution to global pollution might be to kill every human. This is obviously not a good solution even though it would give a ‘yes’ answer to the question of "How might we minimize global air pollution caused by humans?"

When working in groups, it is important to work together to generate solutions. Also, it should be realized that the solution process takes time depending upon the problem complexity. At this point, do not judge solutions for more than their ability to answer the stated problem questions with a "yes" because they will be evaluated more closely in the next step. Many times it is possible to use discarded solutions to develop new ideas for solutions. However, it is important to be able to distinguish between similar solutions. Saying the same thing in ten different ways may not be ten different solutions. Try to group similar solutions together. If all the solutions fall into one group, then perhaps the best solution is to implement that group with different variations for different cases of the problems. Just as there are many unique problems, the solutions to these problems are all unique and need to be adapted to the particular situations being discussed. This will be addressed in the last section of the problem solving process.

Evaluate the Valid Potential Solutions to Select a Best Solution

Once a list of potential solutions has been generated, the evaluation process can begin. First, a list of criteria for judging all solutions equally must be chosen. It is vital to eliminate personal bias towards particular solutions as well as to utilize a consistent set of criteria to evaluate all solutions fairly. Example criteria include: most cost effective, most socially acceptable, most easily implemented, most directly solves the problem, most far-reaching effects, most lasting effects, least government intervention required, least limiting to development, or quickest to implement. It is important to have research and logical reasons for the criteria chosen as well as factual support for the rankings given to a particular solution for each criterion.

Once the criteria are chosen, they should be given a weighting. In most cases, all the criteria have the same weight. However, it is possible to give other weightings to criteria so that a particular factor is seen as more important. Many times, the cost, time to complete, or political nature of a project is more important than other factors, and so that criterion may be given a higher weighting than the others.

Once the criteria are chosen and weighted, all qualified solutions must then be ranked. Two types of procedures for ranking exist. If the number of solutions is large, usually greater than ten, an independent ranking must be conducted to narrow the number of choices. Each solution is listed along one side of a grid and then given a score for each criterion from 1-5 where 5 is the highest (other ranges can be used). The scores for the various criteria are then totaled and a score for each solution is reported. These scores are compared to create a subset of solutions that have the highest score.
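The independent ranking described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the function names, solution labels, criteria, scores, and weights are all hypothetical, and only three solutions are shown for brevity even though this procedure is meant for longer lists.

```python
def score_solutions(scores, weights=None):
    """Total each solution's 1-5 scores, applying optional criterion weights.

    scores:  {solution: {criterion: score}}
    weights: {criterion: weight}; any criterion not listed has weight 1.
    """
    weights = weights or {}
    totals = {}
    for solution, by_criterion in scores.items():
        totals[solution] = sum(
            score * weights.get(criterion, 1)
            for criterion, score in by_criterion.items()
        )
    return totals


def shortlist(totals, keep):
    """Return the `keep` highest-scoring solutions, best first."""
    return sorted(totals, key=totals.get, reverse=True)[:keep]


# Hypothetical grid of scores (5 is the highest).
scores = {
    "Solution A": {"cost": 4, "speed": 2, "acceptance": 5},
    "Solution B": {"cost": 3, "speed": 5, "acceptance": 3},
    "Solution C": {"cost": 1, "speed": 3, "acceptance": 2},
}
totals = score_solutions(scores, weights={"cost": 2})  # cost counts double
print(totals)
print(shortlist(totals, keep=2))
```

With equal weights, Solutions A and B tie at 11 points. Doubling the weight on cost separates them (15 versus 14), which shows how the choice of weighting can change which solutions make the shortlist.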

If the number of solutions is initially small or the independent ranking has been conducted, the remaining solutions are placed into a grid with the criteria for a comparative analysis. Though all the solutions may be seen as good, the comparative analysis identifies the best solution. The total number of solutions listed gives the range of numbers for each criterion. For example, if there are six (6) solutions, then the rankings will go from 1-6 with 6 being the highest. Each solution is ranked for each criterion in comparison to the other solutions for that criterion. Within a criterion, no two solutions can occupy the same rank position. If two solutions are equal, the two adjacent ranks they would have occupied are added together and divided by 2, and the result is entered for both solutions. The charts below give an example for the question "How might we control development in order to preserve the integrity and character of the town of Bedminster?"

Sample Table of Potential Solutions

Figure 3 is a list of the potential solutions to be evaluated.

Sample Table of Evaluation Criteria

Figure 4 is a list of the criteria to be used to evaluate the potential solutions.

Sample Table of a Comparative Analysis

Figure 5 is a comparative analysis of the solutions from the table in Figure 3 based upon the criteria listed in Figure 4 for the problem stated earlier. The values used for scoring range from 6 (most satisfies the criterion) to 1 (least satisfies the criterion).

Once all the solutions are ranked for all criteria and the weighting is applied appropriately, the scores for each solution are totaled. The highest score is then the best solution. If two solutions are close in score, then there may be two solutions that are equally good but differ in their strong points.
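The comparative analysis, including the rule for tied solutions, can be sketched in Python as follows. This is an illustrative sketch under stated assumptions: the function names, solution labels, and ratings are hypothetical, and ties among more than two solutions are handled by averaging the first and last rank positions in the tied group, which is a natural extension of the "add and divide by 2" rule rather than something the text specifies.

```python
def average_ranks(ratings):
    """Convert {solution: rating} to ranks 1..N, where N is the best.

    Tied solutions share the average of the rank positions they span.
    """
    ordered = sorted(ratings, key=ratings.get)  # worst rating first
    ranks = {}
    i = 0
    while i < len(ordered):
        j = i
        # Extend j to cover every solution tied with ordered[i].
        while j + 1 < len(ordered) and ratings[ordered[j + 1]] == ratings[ordered[i]]:
            j += 1
        shared = ((i + 1) + (j + 1)) / 2
        for k in range(i, j + 1):
            ranks[ordered[k]] = shared
        i = j + 1
    return ranks


def comparative_totals(table, weights=None):
    """Sum weighted ranks per solution; table is {criterion: {solution: rating}}."""
    weights = weights or {}
    totals = {}
    for criterion, ratings in table.items():
        for solution, rank in average_ranks(ratings).items():
            totals[solution] = totals.get(solution, 0) + rank * weights.get(criterion, 1)
    return totals


# Hypothetical ratings; higher means the solution better satisfies the criterion.
table = {
    "cost effectiveness": {"A": 10, "B": 7, "C": 7, "D": 3},
    "ease of implementation": {"A": 2, "B": 9, "C": 5, "D": 6},
}
totals = comparative_totals(table)
print(totals)
print(max(totals, key=totals.get))
```

In the first criterion, B and C are tied for the second and third positions, so each receives (2 + 3) / 2 = 2.5. Summed over both criteria, B has the highest total and would be selected as the best solution.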

It is important to remember that the criteria that are used to judge the solutions are reflective of the choices being made. Each criterion is a ruler or a gauge by which to measure an outcome. Different rulers will yield different results, so be sure to choose the proper rulers as well as use them properly. In order to choose the correct ruler and interpret it in the correct way, it is necessary to understand many different disciplines and the tools they use. In the end, however, each individual must have good decision-making skills to choose and use criteria.

Develop an Action Plan to Implement the Solution

After selecting the best solution, it is necessary to give some thought to the way in which it might be implemented. Giving insight into funding, potential problems with implementing the solution, and the time frame of the solution is necessary for any workable solution to a problem. Not all solutions can be implemented. Unforeseen problems may arise as solutions are tested and put to work. Many times, unexpected resistance to solutions can be encountered. Other times, unacceptable results can require that another solution be used.

In some circumstances the problem may have been originally selected incorrectly, have been misunderstood, or have changed as a result of research or altered circumstances. In the end, mistakes happen and the action plan helps the problem solver be prepared for such eventualities. In any event, the action plan can be used to make others aware of potential problems that might be faced while putting the selected solution into effect. Even when solving a current problem, this process will automatically assist the problem solver in thinking of potential problems and thus assist in avoiding unwanted outcomes. Whatever the outcome, it is vital to understand that the choices made during this entire process rely upon research.

Problem Solving Skills for the Digital Age

Lucid Content


Let’s face it: Things don’t always go according to plan. Systems fail, wires get crossed, projects fall apart.

Problems are an inevitable part of life and work. They’re also an opportunity to think critically and find solutions. But knowing how to get to the root of unexpected situations or challenges can mean the difference between moving forward and spinning your wheels.

Here, we’ll break down the key elements of problem solving, some effective problem solving approaches, and a few effective tools to help you arrive at solutions more quickly.

So, what is problem solving?

Broadly defined, problem solving is the process of finding solutions to difficult or complex issues. But you already knew that. Understanding problem solving frameworks, however, requires a deeper dive.

Think about a recent problem you faced. Maybe it was an interpersonal issue. Or it could have been a major creative challenge you needed to solve for a client at work. How did you feel as you approached the issue? Stressed? Confused? Optimistic? Most importantly, which problem solving techniques did you use to tackle the situation head-on? How did you organize thoughts to arrive at the best possible solution?

Solve your problem-solving problem

Here’s the good news: Good problem solving skills can be learned. By its nature, problem solving doesn’t adhere to a clear set of do’s and don’ts—it requires flexibility, communication, and adaptation. However, most problems you face, at work or in life, can be tackled using four basic steps.

First, you must define the problem. This step sounds obvious, but often, you can notice that something is amiss in a project or process without really knowing where the core problem lies. The most challenging part of the problem solving process is uncovering where the problem originated.

Second, you work to generate alternatives to address the problem directly. This should be a collaborative process to ensure you’re considering every angle of the issue.

Third, you evaluate and test potential solutions to your problem. This step helps you fully understand the complexity of the issue and arrive at the best possible solution.

Finally, fourth, you select and implement the solution that best addresses the problem.

Following this basic four-step process will help you approach every problem you encounter with the same rigorous critical and strategic thinking process, recognize commonalities in new problems, and avoid repeating past mistakes.

In addition to these basic problem solving skills, there are several best practices that you should incorporate. These problem solving approaches can help you think more critically and creatively about any problem:

You may not feel like you have the right expertise to resolve a specific problem. Don’t let that stop you from tackling it. The best problem solvers become students of the problem at hand. Even if you don’t have particular expertise on a topic, your unique experience and perspective can lend itself to creative solutions.

Challenge the status quo

Standard problem solving methodologies and problem solving frameworks are a good starting point. But don’t be afraid to challenge assumptions and push boundaries. Good problem solvers find ways to turn existing best practices into innovative problem solving approaches.

Think broadly about and visualize the issue

Sometimes it’s hard to see a problem, even if it’s right in front of you. Clear answers could be buried in rows of spreadsheet data or lost in miscommunication. Use visualization as a problem solving tool to break down problems to their core elements. Visuals can help you see bottlenecks in the context of the whole process and more clearly organize your thoughts as you define the problem.

Hypothesize, test, and try again

It might be cliche, but there’s truth in the old adage that genius is 1% inspiration and 99% perspiration. The best problem solvers ask why, test, fail, and ask why again. Whether it takes one or 1,000 iterations to solve a problem, the important part, and the part that everyone remembers, is the solution.

Consider other viewpoints

Today’s problems are more complex, more difficult to solve, and they often involve multiple disciplines. They require group expertise and knowledge. Being open to others’ expertise increases your ability to be a great problem solver. Great solutions come from integrating your ideas with those of others to find a better solution. Excellent problem solvers build networks and know how to collaborate with other people and teams. They are skilled in bringing people together and sharing knowledge and information.

4 effective problem solving tools

As you work through the problem solving steps, try these tools to better define the issue and find the appropriate solution.

Root cause analysis

Similar to pulling weeds from your garden, if you don’t get to the root of the problem, it’s bound to come back. A root cause analysis helps you figure out the root cause behind any disruption or problem, so you can take steps to prevent the problem from recurring. The root cause analysis process involves defining the problem, collecting data, and identifying causal factors to pinpoint root causes and arrive at a solution.

5 Whys

Less structured than other more traditional problem solving methods, the 5 Whys is simply what it sounds like: asking why over and over to get to the root of an obstacle or setback. This technique encourages an open dialogue that can trigger new ideas about a problem, whether done individually or with a group. Each why piggybacks off the answer to the previous why. Flowcharts and fishbone diagrams can both help you track your answers to the 5 Whys.

Brainstorming

A meeting of the minds, a brain dump, a mind meld, a jam session. Whatever you call it, collaborative brainstorming can help surface previously unseen issues, root causes, and alternative solutions. Create and share a mind map with your team members to fuel your brainstorming session.

Gap analysis

Sometimes you don’t know where the problem is until you determine where it isn’t. Gap analysis helps you analyze inadequacies that are preventing you from reaching an optimized state or end goal. For example, a content gap analysis can help a content marketer determine where holes exist in messaging or the customer experience. Gap analysis is especially helpful when it comes to problem solving because it requires you to find workable solutions. A SWOT analysis chart that looks at a problem through the lens of strengths, weaknesses, opportunities, and threats can be a helpful problem solving framework as you start your analysis.

A better way to problem solve

Beyond these practical tips and tools, there are myriad methodical and creative approaches to move a project forward or resolve a conflict. The right approach will depend on the scope of the issue and your desired outcome.

Depending on the problem, Lucidchart offers several templates and diagrams that could help you identify the cause of the issue and map out a plan to resolve it.



Section 3. Defining and Analyzing the Problem


The nature of problems

Clarifying the problem, deciding to solve the problem, analyzing the problem.

We've all had our share of problems - more than enough, if you come right down to it. So it's easy to think that this section, on defining and analyzing the problem, is unnecessary. "I know what the problem is," you think. "I just don't know what to do about it."

Not so fast! A poorly defined problem - or a problem whose nuances you don't completely understand - is much more difficult to solve than a problem you have clearly defined and analyzed. The way a problem is worded and understood has a huge impact on the number, quality, and type of proposed solutions.

In this section, we'll begin with the basics, focusing primarily on four things. First, we'll consider the nature of problems in general. Second, we'll look more specifically at clarifying and defining the problem you are working on. Third, we'll talk about whether or not you really want to solve the problem, or whether you are better off leaving it alone. Finally, we'll talk about how to do an in-depth analysis of the problem.

So, what is a problem? It can be a lot of things. We know in our gut when there is a problem, whether or not we can easily put it into words. Maybe you feel uncomfortable in a given place, but you're not sure why. A problem might be just the feeling that something is wrong and should be corrected. You might feel some sense of distress, or of injustice.

Stated most simply, a problem is the difference between what is, and what might or should be. "No child should go to bed hungry, but one-quarter of all children do in this country," is a clear, potent problem statement. Another example might be, "Communication in our office is not very clear." In this instance, the explanation of "what might or should be" is simply alluded to.

As these problems illustrate, some problems are more serious than others; the problem of child hunger is a much more severe problem than the fact that the new youth center has no exercise equipment, although both are problems that can and should be addressed. Generally, problems that affect groups of people - children, teenage mothers, the mentally ill, the poor - can at least be addressed and in many cases lessened using the process outlined in this Chapter.

Although your organization may have chosen to tackle a seemingly insurmountable problem, the process you will use to solve it is not complex. It does, however, take time, both to formulate and to fully analyze the problem. Most people underestimate the work they need to do here and the time they'll need to spend. But this is the legwork, the foundation on which you'll lay effective solutions. This isn't the time to take shortcuts.

Three basic concepts make up the core of this chapter: clarifying, deciding, and analyzing. Let's look at each in turn.

If you are having a problem-solving meeting, then you already understand that something isn't quite right - or maybe it's bigger than that; you understand that something is very, very wrong. This is your beginning, and of course, it makes most sense to...

- Start with what you know. When group members walk through the door at the beginning of the meeting, what do they think about the situation? There are a variety of different ways to garner this information. People can be asked in advance to write down what they know about the problem. Or the facilitator can lead a brainstorming session to try to bring out the greatest number of ideas. Remember that a good facilitator will draw out everyone's opinions, not only those of the more vocal participants.

- Decide what information is missing. Information is the key to effective decision making. If you are fighting child hunger, do you know which children are hungry? When are they hungry - all the time, or especially at the end of the month, when the money has run out? If that's the case, your problem statement might be, "Children in our community are often hungry at the end of the month because their parents' paychecks are used up too early."

Compare this problem statement on child hunger to the one given in "The nature of problems" above. How might solutions for the two problems be different?

- Facts (15% of the children in our community don't get enough to eat.)

- Inference (A significant percentage of children in our community are probably malnourished/significantly underweight.)

- Speculation (Many of the hungry children probably live in the poorer neighborhoods in town.)

- Opinion (I think the reason children go hungry is because their parents spend all of their money on cigarettes.)

When you are gathering information, you will probably hear all four types of information, and all can be important. Speculation and opinion can be especially important in gauging public opinion. If public opinion on your issue is based on faulty assumptions, part of your solution strategy will probably include some sort of informational campaign.

For example, perhaps your coalition is campaigning against the death penalty, and you find that most people incorrectly believe that the death penalty deters violent crime. As part of your campaign, therefore, you will probably want to make it clear to the public that it simply isn't true.

Where and how do you find this information? It depends on what you want to know. You can draw on surveys, interviews, the library, and the internet.

- Define the problem in terms of needs, and not solutions. If you define the problem in terms of possible solutions, you're closing the door to other, possibly more effective solutions. "Violent crime in our neighborhood is unacceptably high," offers space for many more possible solutions than, "We need more police patrols," or, "More citizens should have guns to protect themselves."

- Define the problem as one everyone shares; avoid assigning blame for the problem. This is particularly important if different people (or groups) with a history of bad relations need to work together to solve the problem. Teachers may be frustrated with high truancy rates, but blaming students alone for problems at school is sure to alienate students from helping to solve the problem.

You can define the problem in several ways. The facilitator can write a problem statement on the board, and everyone can give feedback on it until the statement has developed into something everyone is pleased with. Alternatively, you can accept someone else's definition of the problem, or use it as a starting point and modify it to fit your needs.

After you have defined the problem, ask if everyone understands the terminology being used. Define the key terms of your problem statement, even if you think everyone understands them.

The Hispanic Health Coalition has come up with the problem statement "Teen pregnancy is a problem in our community." That seems pretty clear, doesn't it? But let's examine the word "community" for a moment. You may have one person who defines community as "the city you live in," a second who defines it as "this neighborhood," and a third who considers "our community" to mean Hispanics.

At this point, you have already spent a fair amount of time on the problem at hand, and naturally, you want to see it taken care of. Before you go any further, however, it's important to look critically at the problem and decide if you really want to focus your efforts on it. You might decide that right now isn't the best time to try to fix it. Maybe your coalition has been weakened by bad press, and the chance of success right now is slim. Or perhaps solving the problem right now would force you to neglect another important agency goal. Or perhaps this problem would be more appropriately handled by another existing agency or organization.

You and your group need to make a conscious choice that you really do want to attack the problem. Many different factors should be a part of your decision. These include:

Importance. In judging the importance of the issue, keep in mind the feasibility. Even if you have decided that the problem really is important, and worth solving, will you be able to solve it, or at least significantly improve the situation? The bottom line: Decide if the good you can do will be worth the effort it takes. Are you the best people to solve the problem? Is someone else better suited to the task?

For example, perhaps your organization is interested in youth issues, and you have recently come to understand that teens aren't participating in community events mostly because they don't know about them. A monthly newsletter, given out at the high schools, could take care of this fairly easily. Unfortunately, you don't have much publishing equipment. You do have an old computer and a desktop printer, and you could type something up, but it's really not your forte. A better solution might be to work to find writing, design and/or printing professionals who would donate their time and/or equipment to create a newsletter that is more exciting, and that students would be more likely to want to read.

Negative impacts. If you do succeed in bringing about the solution you are working on, what are the possible consequences? If you succeed in having safety measures implemented at a local factory, how much will it cost? Where will the factory get that money? Will they cut salaries, or lay off some of their workers?

Even if there are some unwanted results, you may well decide that the benefits outweigh the negatives. As when you're taking medication, you'll put up with the side effects to cure the disease. But be sure you go into the process with your eyes open to the real costs of solving the problem at hand.

Choosing among problems

You might have many obstacles you'd like to see removed. In fact, it's probably a pretty rare community group that doesn't have a laundry list of problems they would like to resolve, given enough time and resources. So how do you decide which to start with?

A simple suggestion might be to list all of the problems you are facing, and whether or not they meet the criteria listed above (importance, feasibility, et cetera). It's hard to assign numerical values for something like this, because for each situation, one of the criteria may strongly outweigh the others. However, just having all of the information in front of the group can make the actual decision making a much easier task.
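One way to put all of that information in front of the group is a plain side-by-side table. The sketch below deliberately uses short notes and no numerical scores, in keeping with the caution above. The problem names and notes are hypothetical, loosely based on examples in this section, and `criteria_table` is a name invented for this illustration.

```python
CRITERIA = ("importance", "feasibility", "negative impacts")


def criteria_table(problems):
    """Lay out each problem's notes per criterion as an aligned text table."""
    header = ["problem", *CRITERIA]
    rows = [header]
    for name, notes in problems.items():
        # A "?" marks a criterion the group has not discussed yet.
        rows.append([name] + [notes.get(criterion, "?") for criterion in CRITERIA])
    widths = [max(len(row[i]) for row in rows) for i in range(len(header))]
    lines = []
    for row in rows:
        cells = [cell.ljust(width) for cell, width in zip(row, widths)]
        lines.append("  ".join(cells).rstrip())
    return "\n".join(lines)


# Hypothetical notes a group might record during a meeting.
problems = {
    "teens unaware of events": {
        "importance": "medium",
        "feasibility": "high with donated help",
        "negative impacts": "few",
    },
    "factory safety": {
        "importance": "high",
        "feasibility": "depends on funding",
    },
}

table = criteria_table(problems)
print(table)
```

The missing entry for the second problem shows up as a question mark, which can prompt the group to fill the gap before choosing where to start.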

Now that the group has defined the problem and agreed that they want to work towards a solution, it's time to thoroughly analyze the problem. You started to do this when you gathered information to define the problem, but now, it's time to pay more attention to details and make sure everyone fully understands the problem.

Answer all of the question words.

The facilitator can take group members through a process of understanding every aspect of the problem by answering the "question words" - what, why, who, when, and how much. This process might include the following types of questions:

What is the problem? You already have your problem statement, so this part is more or less done. But it's important to review your work at this point.

Why does the problem exist? There should be agreement among meeting participants as to why the problem exists to begin with. If there isn't, consider trying one of the following techniques.

- The "but why" technique. This simple exercise can be done easily with a large group, or even on your own. Write the problem statement, and ask participants, "Why does this problem exist?" Write down the answer given, and ask, "But why does (the answer) occur?"

"Children often fall asleep in class." But why? "Because they have no energy." But why? "Because they don't eat breakfast." But why?

Continue down the line until participants can comfortably agree on the root cause of the problem. Agreement is essential here; if people don't even agree about the source of the problem, an effective solution may well be out of reach.
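For groups that like to keep a written record, the "but why" exercise can be pictured as following a chain of answers. The sketch below is a minimal illustration, not a tool from this section: the function name `but_why` and the `max_depth` guard are assumptions, and the answers are the ones from the classroom example above.

```python
def but_why(problem, answers, max_depth=10):
    """Follow recorded answers from a problem statement toward a deeper cause.

    `answers` maps each statement to the group's answer to "But why?".
    `max_depth` caps the number of answers followed, so circular answers
    cannot loop forever.
    """
    chain = [problem]
    while chain[-1] in answers and len(chain) <= max_depth:
        chain.append(answers[chain[-1]])
    return chain


# Answers recorded so far, taken from the example above.
answers = {
    "Children often fall asleep in class": "They have no energy",
    "They have no energy": "They don't eat breakfast",
}

chain = but_why("Children often fall asleep in class", answers)
print(" -> but why? -> ".join(chain))
```

The last entry in the chain is only the deepest answer recorded so far. Whether it is the root cause is still a judgment the participants have to agree on.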

- Force field analysis. To begin this exercise, start with the definition you penned above.

- Draw a line down the center of the paper. Or, if you are working with a large group of people who cannot easily see what you are writing, use two pieces.

- On the top of one sheet/side, write "Restraining Forces."

- On the other sheet/side, write, "Driving Forces."

- Under "Restraining Forces," list all of the reasons you can think of that keep the situation the same; why the status quo is the way it is. As with all brainstorming sessions, this should be a "free for all;" no idea is too "far out" to be suggested and written down.

- In the same manner, under "Driving Forces," list all of the forces that are pushing the situation to change.

- When all of the ideas have been written down, group members can edit them as they see fit and compile a list of the important factors that are causing the situation.

Clearly, these two exercises are meant for different times. The "but why" technique is most effective when the facilitator (or the group as a whole) decides that the problem hasn't been looked at deeply enough and that the group's understanding is somewhat superficial. The force field analysis, on the other hand, can be used when people are worried that important elements of the problem haven't been noticed -- that you're not looking at the whole picture.

Who is causing the problem, and who is affected by it? A simple brainstorming session is an excellent way to determine this.

When did the problem first occur, or when did it become significant? Is this a new problem or an old one? Knowing this can give you added understanding of why the problem is occurring now. Also, the longer a problem has existed, the more entrenched it has become, and the more difficult it will be to solve. People often get used to things the way they are and resist change, even when it's a change for the better.

How much, or to what extent, is this problem occurring? How many people are affected by the problem? How significant is it? Here, you should revisit the questions on importance you looked at when you were defining the problem. This serves as a brief refresher and gives you a complete analysis from which you can work.

If time permits, you might want to summarize your analysis on a single sheet of paper for participants before moving on to generating solutions, the next step in the process. That way, members will have something to refer back to during later stages in the work.

Also, after you have finished this analysis, the facilitator should ask for agreement from the group. Have people's perceptions of the problem changed significantly? At this point, check back and make sure that everyone still wants to work together to solve the problem.

The first step in any effective problem-solving process may be the most important. Take your time to develop a critical definition, and let this definition, and the analysis that follows, guide you through the process. You're now ready to go on to generating and choosing solutions, which are the next steps in the problem-solving process, and the focus of the following section.


- Guide: Problem Solving

Daniel Croft

Daniel Croft is an experienced continuous improvement manager with a Lean Six Sigma Black Belt and a Bachelor's degree in Business Management. With more than ten years of experience applying his skills across various industries, Daniel specializes in optimizing processes and improving efficiency. His approach combines practical experience with a deep understanding of business fundamentals to drive meaningful change.

- Last Updated: January 7, 2024

- Learn Lean Sigma

Problem-solving stands as a fundamental skill, crucial in navigating the complexities of both everyday life and professional environments. Far from merely providing quick fixes, it entails a comprehensive process involving the identification, analysis, and resolution of issues.

This multifaceted approach requires an understanding of the problem’s nature, the exploration of its various components, and the development of effective solutions. At its core, problem-solving serves as a bridge from the current situation to a desired outcome, requiring not only the recognition of an existing gap but also the precise definition and thorough analysis of the problem to find viable solutions.


What Is Problem Solving?

At its core, problem-solving is about bridging the gap between the current situation and the desired outcome. It starts with recognizing that a discrepancy exists, which requires intervention to correct or improve. The ability to identify a problem is the first step, but it’s equally crucial to define it accurately. A well-defined problem is half-solved, as the saying goes.

Analyzing the problem is the next critical step. This analysis involves breaking down the problem into smaller parts to understand its intricacies. It requires looking at the problem from various angles and considering all relevant factors – be they environmental, social, technical, or economic. This comprehensive analysis aids in developing a deeper understanding of the problem’s root causes, rather than just its symptoms.

Finally, effective problem-solving involves the implementation of the chosen solution and its subsequent evaluation. This stage tests the practicality of the solution and its effectiveness in the real world. It’s a critical phase where theoretical solutions meet practical application.

The Nature of Problems

The nature of the problem significantly influences the approach to solving it. Problems vary greatly in their complexity and structure, and understanding this is crucial for effective problem-solving.

Simple vs. Complex Problems : Simple problems are straightforward, often with clear solutions. They usually have a limited number of variables and predictable outcomes. On the other hand, complex problems are multi-faceted. They involve multiple variables, stakeholders, and potential outcomes, often requiring a more sophisticated analysis and a multi-pronged approach to solving.

Structured vs. Unstructured Problems : Structured problems are well-defined. They follow a specific pattern or set of rules, making their outcomes more predictable. These problems often have established methodologies for solving. For example, mathematical problems usually fall into this category. Unstructured problems, in contrast, are more ambiguous. They lack a clear pattern or set of rules, making their outcomes uncertain. These problems require a more exploratory approach, often involving trial and error, to identify potential solutions.

Understanding the type of problem at hand is essential, as it dictates the approach. For instance, a simple problem might require a straightforward solution, while a complex problem might need a more comprehensive, step-by-step approach. Similarly, structured problems might benefit from established methodologies, whereas unstructured problems might need more innovative and creative problem-solving techniques.

The Problem-Solving Process

The process of problem-solving is a methodical approach that involves several distinct stages. Each stage plays a crucial role in navigating from the initial recognition of a problem to its final resolution. Let’s explore each of these stages in detail.

Step 1: Identifying the Problem

Step 2: Defining the Problem

Once the problem is identified, the next step is to define it clearly and precisely. This is a critical phase because a well-defined problem often suggests its solution. Defining the problem involves breaking it down into smaller, more manageable parts. It also includes understanding the scope and impact of the problem. A clear definition helps in focusing efforts and resources efficiently and serves as a guide to stay on track during the problem-solving process.

Step 3: Analyzing the Problem

Step 4: Generating Solutions

Step 5: Evaluating and Selecting Solutions

After generating a list of possible solutions, the next step is to evaluate each one critically. This evaluation includes considering the feasibility, costs, benefits, and potential impact of each solution. Techniques like cost-benefit analysis, risk assessment, and scenario planning can be useful here. The aim is to select the solution that best addresses the problem in the most efficient and effective way, considering the available resources and constraints.

Step 6: Implementing the Solution

Step 7: Reviewing and Reflecting

The final stage in the problem-solving process is to review the implemented solution and reflect on its effectiveness and the process as a whole. This involves assessing whether the solution met its intended goals and what could have been done differently. Reflection is a critical part of learning and improvement. It helps in understanding what worked well and what didn’t, providing valuable insights for future problem-solving efforts.

Tools and Techniques for Effective Problem Solving

Problem-solving is a multifaceted endeavor that requires a variety of tools and techniques to navigate effectively. Different stages of the problem-solving process can benefit from specific strategies, enhancing the efficiency and effectiveness of the solutions developed. Here’s a detailed look at some key tools and techniques:

Brainstorming

SWOT Analysis (Strengths, Weaknesses, Opportunities, Threats)

Root Cause Analysis

This is a method used to identify the underlying causes of a problem, rather than just addressing its symptoms. One popular technique within root cause analysis is the “5 Whys” method. This involves asking “why” multiple times (traditionally five) until the fundamental cause of the problem is uncovered. This technique encourages deeper thinking and can reveal connections that aren’t immediately obvious. By addressing the root cause, solutions are more likely to be effective and long-lasting.
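The chain of questions can be sketched in a few lines of Python. This is a minimal illustration that assumes the answer to each "why" is already known and stored in a dictionary. The `five_whys` function and the delivery example are hypothetical. In practice, each answer comes from investigation rather than a lookup.

```python
def five_whys(problem, causes, max_depth=5):
    """Follow a chain of 'why' answers from a problem toward a root cause.

    `causes` maps a statement to the answer to "Why?" for that statement.
    The chain stops after `max_depth` questions or when no answer is known.
    """
    chain = [problem]
    current = problem
    for _ in range(max_depth):
        if current not in causes:
            break
        current = causes[current]
        chain.append(current)
    return chain


# Hypothetical example data.
causes = {
    "Deliveries are late": "Orders leave the warehouse late",
    "Orders leave the warehouse late": "Picking takes too long",
    "Picking takes too long": "Items are hard to find",
    "Items are hard to find": "Shelf labels are out of date",
    "Shelf labels are out of date": "No one owns label updates",
}

chain = five_whys("Deliveries are late", causes)
for step in chain:
    print(step)
```

The last entry in `chain` is the candidate root cause, here the missing ownership of label updates.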

Mind Mapping

Each of these tools and techniques can be adapted to different types of problems and situations. Effective problem solvers often use a combination of these methods, depending on the nature of the problem and the context in which it exists. By leveraging these tools, one can enhance their ability to dissect complex problems, generate creative solutions, and implement effective strategies to address challenges.

Developing Problem-Solving Skills

Developing problem-solving skills is a dynamic process that hinges on both practice and introspection. Engaging with a diverse array of problems enhances one’s ability to adapt and apply different strategies. This exposure is crucial as it allows individuals to encounter various scenarios, ranging from straightforward to complex, each requiring a unique approach. Collaborating with others in teams is especially beneficial. It broadens one’s perspective, offering insights into different ways of thinking and approaching problems. Such collaboration fosters a deeper understanding of how diverse viewpoints can contribute to more robust solutions.

Reflection is equally important in the development of problem-solving skills. Reflecting on both successes and failures provides valuable lessons. Successes reinforce effective strategies and boost confidence, while failures are rich learning opportunities that highlight areas for improvement. This reflective practice enables one to understand what worked, what didn’t, and why.

Critical thinking is a foundational skill in problem-solving. It involves analyzing information, evaluating different perspectives, and making reasoned judgments. Creativity is another vital component. It pushes the boundaries of conventional thinking and leads to innovative solutions. Effective communication also plays a crucial role, as it ensures that ideas are clearly understood and collaboratively refined.

In conclusion, problem-solving is an indispensable skill set that blends analytical thinking, creativity, and practical implementation. It’s a journey from understanding the problem to applying a solution and learning from the outcome.

Whether dealing with simple or complex issues, or structured or unstructured challenges, the essence of problem-solving lies in a methodical approach and the effective use of various tools and techniques. It’s a skill that is honed over time, through experience, reflection, and the continuous development of critical thinking, creativity, and communication abilities. In mastering problem-solving, one not only addresses immediate issues but also builds a foundation for future challenges, leading to more innovative and effective outcomes.

Q: What are the key steps in the problem-solving process?

A: The problem-solving process involves several key steps: identifying the problem, defining it clearly, analyzing it to understand its root causes, generating a range of potential solutions, evaluating and selecting the most viable solution, implementing the chosen solution, and finally, reviewing and reflecting on the effectiveness of the solution and the process used to arrive at it.

Q: How can brainstorming be effectively used in problem-solving?

A: Brainstorming is effective in the solution generation phase of problem-solving. It involves gathering a group and encouraging the free flow of ideas without immediate criticism. The goal is to produce a large quantity of ideas, fostering creative thinking. This technique helps in uncovering unique and innovative solutions that might not surface in a more structured setting.

Q: What is SWOT Analysis and how does it aid in problem-solving?

A: SWOT Analysis is a strategic planning tool used to evaluate the Strengths, Weaknesses, Opportunities, and Threats involved in a situation. In problem-solving, it aids by providing a clear understanding of the internal and external factors that could impact the problem and potential solutions. This analysis helps in formulating strategies that leverage strengths and opportunities while mitigating weaknesses and threats.

Q: Why is it important to understand the nature of a problem before solving it?

A: Understanding the nature of a problem is crucial as it dictates the approach for solving it. Problems can be simple or complex, structured or unstructured, and each type requires a different strategy. A clear understanding of the problem’s nature helps in applying the appropriate methods and tools for effective resolution.

Q: How does reflection contribute to developing problem-solving skills?

A: Reflection is a critical component in developing problem-solving skills. It involves looking back at the problem-solving process and the implemented solution to assess what worked well and what didn’t. Reflecting on both successes and failures provides valuable insights and lessons, helping to refine and improve problem-solving strategies for future challenges. This reflective practice enhances one’s ability to approach problems more effectively over time.

Daniel Croft is a seasoned continuous improvement manager with a Black Belt in Lean Six Sigma. With over 10 years of real-world application experience across diverse sectors, Daniel has a passion for optimizing processes and fostering a culture of efficiency. He's not just a practitioner but also an avid learner, constantly seeking to expand his knowledge. Outside of his professional life, Daniel has a keen interest in investing, statistics, and knowledge-sharing, which led him to create the website learnleansigma.com, a platform dedicated to Lean Six Sigma and process improvement insights.

A descriptive phase model of problem-solving processes

- Original Paper

- Open access

- Published: 09 March 2021

- Volume 53, pages 737–752 (2021)

- Benjamin Rott (ORCID: orcid.org/0000-0002-8113-1584)

- Birte Specht

- Christine Knipping

Complementary to existing normative models, in this paper we suggest a descriptive phase model of problem solving. Real, not ideal, problem-solving processes contain errors, detours, and cycles, and they do not follow a predetermined sequence, as is presumed in normative models. To represent and emphasize the non-linearity of empirical processes, a descriptive model seemed essential. The juxtaposition of models from the literature and our empirical analyses enabled us to generate such a descriptive model of problem-solving processes. For the generation of our model, we reflected on the following questions: (1) Which elements of existing models for problem-solving processes can be used for a descriptive model? (2) Can the model be used to describe and discriminate different types of processes? Our descriptive model allows one not only to capture the idiosyncratic sequencing of real problem-solving processes, but simultaneously to compare different processes, by means of accumulation. In particular, our model allows discrimination between problem-solving and routine processes. Also, successful and unsuccessful problem-solving processes as well as processes in paper-and-pencil versus dynamic-geometry environments can be characterised and compared with our model.

1 Introduction

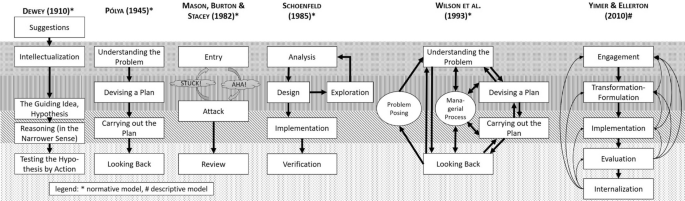

Problem solving (PS), in the sense of working on non-routine tasks for which the solver knows no previously learned scheme or algorithm designed to solve them (cf. Schoenfeld, 1985, 1992b), is an important aspect of doing mathematics (Halmos, 1980) as well as learning and teaching mathematics (Liljedahl et al. 2016). As one of several reasons, PS is used as a means to help students learn how to think mathematically (Schoenfeld, 1992b). Hence, PS is part of mathematics curricula in almost all countries (e.g., KMK, 2004; NCTM, 2000, 2014). Accordingly, PS has been a focus of interest of researchers for several decades, Pólya (1945) being one of the most prominent scholars interested in this activity.

Problem-solving processes (PS processes) can be characterised by their inner or their outer structure (Philipp, 2013, pp. 39–40). The inner structure refers to (meta)cognitive processes such as heuristics, checks, or beliefs, whereas the outer structure refers to observable actions that can be characterised in phases like ‘understanding the problem’ or ‘devising a plan’, as well as the chronological sequence of such phases in a PS process. Our focus in this paper is on the outer structure, as it is directly accessible to teachers and researchers via observation.

In the research literature, there are various characterisations of PS processes. However, almost all of the existing models are normative, which means they represent idealised processes. They characterise PS processes according to distinct phases, in a predetermined sequence, which is why they are sometimes called ‘prescriptive’ instead of normative. These phases and their sequencing have been formulated as a norm for PS processes. Normative models are generally used as a pedagogical tool to guide students’ PS processes and to help them to become better problem solvers. The normative models in current research have mostly been derived from theoretical considerations. Nevertheless, real PS processes look different; they contain errors, detours, and cycles, and they do not follow a predetermined sequence. Actual processes like these are not considered in normative models. Accordingly, there are almost no models that guide teachers and researchers in observing, understanding, and analysing PS processes in their ‘non-smooth’ occurrences (cf. Fernandez et al. 1994; Rott, 2014). Our aim in this paper, therefore, is to address this research gap by suggesting a descriptive model.

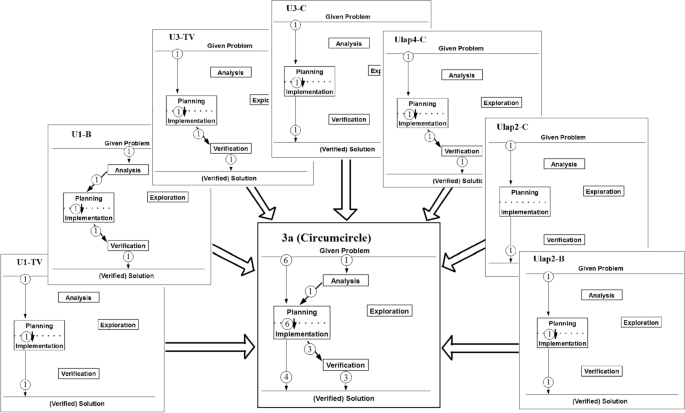

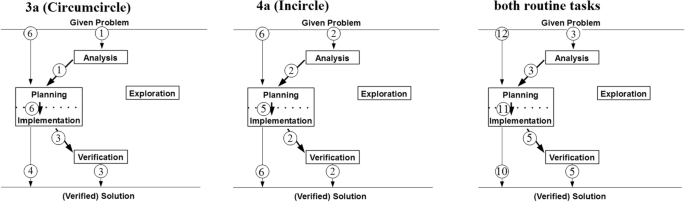

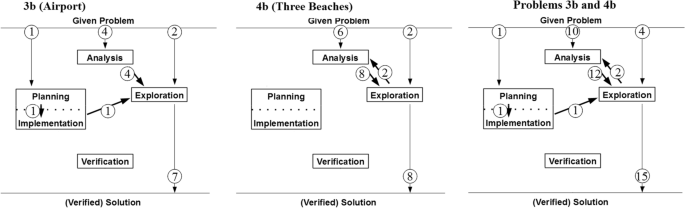

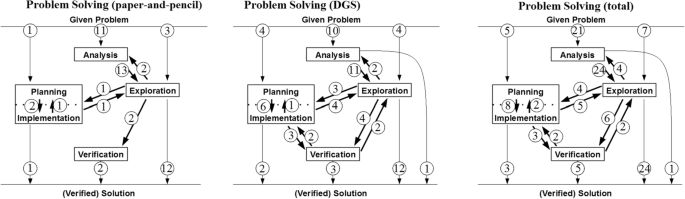

A descriptive model not only enables the representation of real PS processes but also reveals additional potential for analyses. Our model allows one to systematically compare several PS processes simultaneously by means of accumulation, which is an approach that to our knowledge has not been proposed before in the mathematics education community. In Sect. 6, we show how this approach can be used to reveal ‘bumps and bruises’ of real students’ PS processes to illustrate the practical value of our descriptive model (Sect. 5.3, Fig. 5). We show how our model allows one to discriminate problem-solving processes from routine processes when students work on tasks. We illustrate how differences between successful and unsuccessful processes can be identified using our model. We also show how students’ PS processes in a paper-and-pencil environment and in a digital (dynamic geometry) environment can be characterised and compared by means of our model.