A Guide To The Methods, Benefits & Problems of The Interpretation of Data

Table of Contents

1) What Is Data Interpretation?

2) How To Interpret Data?

3) Why Data Interpretation Is Important

4) Data Interpretation Skills

5) Data Analysis & Interpretation Problems

6) Data Interpretation Techniques & Methods

7) The Use of Dashboards For Data Interpretation

8) Business Data Interpretation Examples

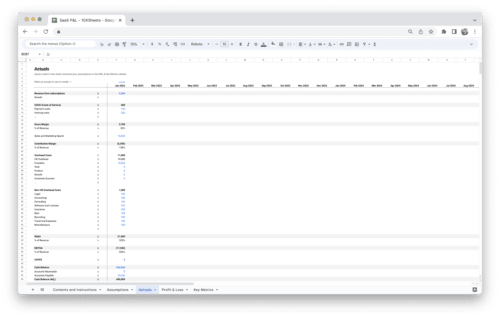

Data analysis and interpretation have now taken center stage with the advent of the digital age… and the sheer amount of data can be frightening. In fact, a Digital Universe study found that the total data supply in 2012 was 2.8 trillion gigabytes! Based on that amount of data alone, it is clear the calling card of any successful enterprise in today’s global world will be the ability to analyze complex data, produce actionable insights, and adapt to new market needs… all at the speed of thought.

Business dashboards are the digital age tools for big data. Capable of displaying key performance indicators (KPIs) for both quantitative and qualitative data analyses, they are ideal for making the fast-paced and data-driven market decisions that push today’s industry leaders to sustainable success. Through the art of streamlined visual communication, data dashboards permit businesses to engage in real-time and informed decision-making and are key instruments in data interpretation. First of all, let’s find a definition to understand what lies behind this practice.

What Is Data Interpretation?

Data interpretation refers to the process of using diverse analytical methods to review data and arrive at relevant conclusions. The interpretation of data helps researchers to categorize, manipulate, and summarize the information in order to answer critical questions.

The importance of data interpretation is evident, and this is why it needs to be done properly. Data is very likely to arrive from multiple sources and tends to enter the analysis process in haphazard order. Data interpretation also tends to be highly subjective: the nature and goal of interpretation will vary from business to business, likely depending on the type of data being analyzed. While several types of processes are implemented based on the nature of individual data, the two broadest and most common categories are quantitative and qualitative analysis.

Yet, before any serious data interpretation inquiry can begin, the measurement scale for the data must be decided, as this choice will have a long-term impact on data interpretation ROI. Visual presentations of data findings are irrelevant unless a sound decision is made here. The varying scales include:

- Nominal Scale: non-numeric categories that cannot be ranked or compared quantitatively. Categories are mutually exclusive and exhaustive.

- Ordinal Scale: categories that are mutually exclusive and exhaustive but have a logical order. Quality ratings and agreement ratings are examples of ordinal scales (e.g., fair, good, very good; or disagree, agree, strongly agree).

- Interval: a measurement scale where data is grouped into ordered categories with equal distances between them. The zero point is arbitrary (e.g., temperature in degrees Celsius), so differences are meaningful but ratios are not.

- Ratio: contains the features of all three, plus a true zero point, so ratios are meaningful (e.g., revenue, or time spent on a page).
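In practice, the chosen scale decides which summary statistics are meaningful. The Python sketch below encodes a common textbook mapping based on Stevens' levels of measurement; the mapping and the name `permissible_summaries` are illustrative assumptions, not part of any particular tool.

```python
from enum import IntEnum


class Scale(IntEnum):
    """Levels of measurement, ordered from least to most informative."""
    NOMINAL = 1
    ORDINAL = 2
    INTERVAL = 3
    RATIO = 4


# Lowest scale at which each summary becomes meaningful (illustrative mapping).
# A summary that is valid at one level stays valid at every higher level.
MIN_SCALE = {
    "frequency counts": Scale.NOMINAL,
    "mode": Scale.NOMINAL,
    "median": Scale.ORDINAL,
    "percentiles": Scale.ORDINAL,
    "mean": Scale.INTERVAL,
    "standard deviation": Scale.INTERVAL,
    "ratios such as 'twice as much'": Scale.RATIO,
    "coefficient of variation": Scale.RATIO,
}


def permissible_summaries(scale: Scale) -> list[str]:
    """Return the summaries that are meaningful for data measured on `scale`."""
    return [name for name, minimum in MIN_SCALE.items() if scale >= minimum]


print(permissible_summaries(Scale.ORDINAL))
# ['frequency counts', 'mode', 'median', 'percentiles']
```

Averaging agreement ratings, for example, silently treats ordinal data as interval data, which is one way an early choice of scale shapes later interpretation.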

For a more in-depth review of scales of measurement, read our article on data analysis questions. Once measurement scales have been selected, it is time to select which of the two broad interpretation processes will best suit your data needs. Let's take a closer look at those specific methods and possible data interpretation problems.

How To Interpret Data? Top Methods & Techniques

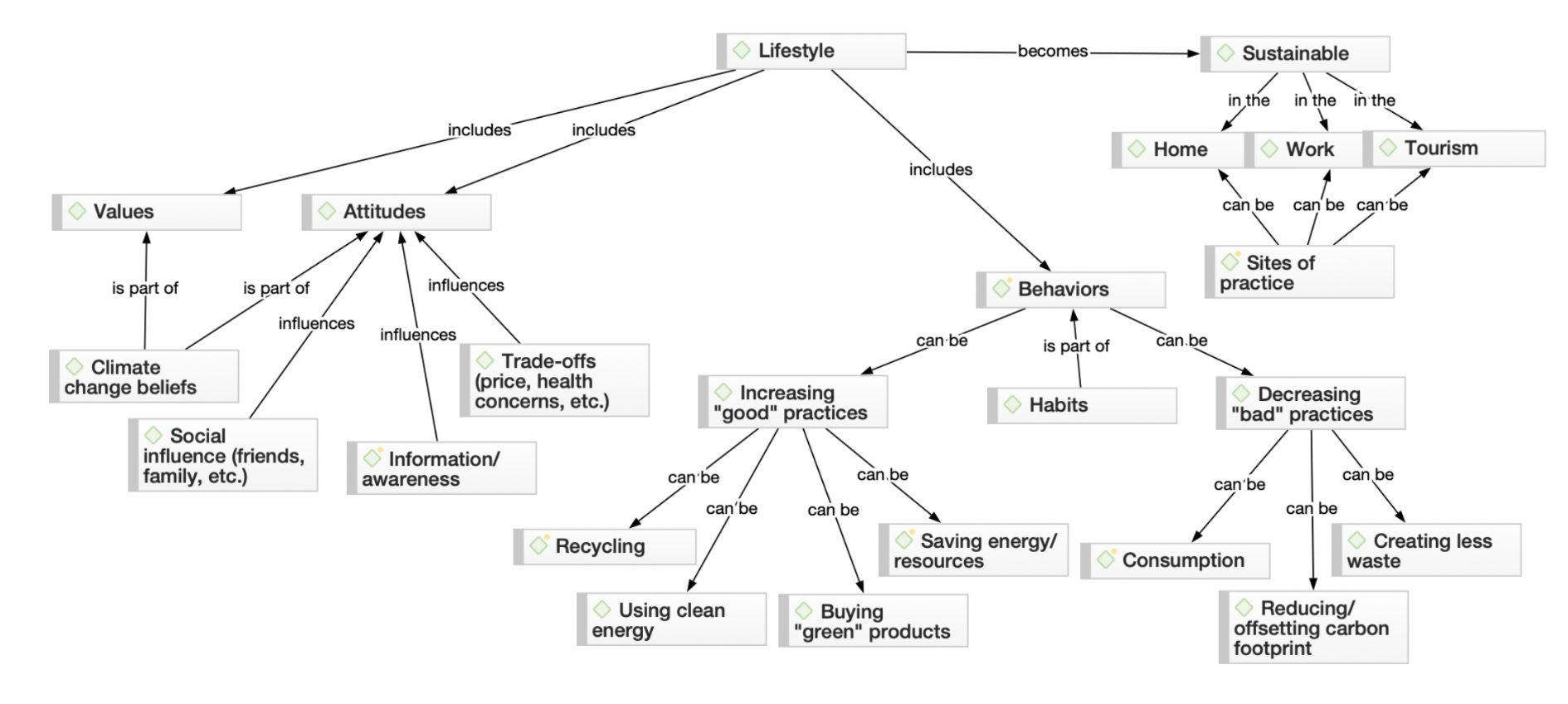

When interpreting data, an analyst must try to discern the differences between correlation, causation, and coincidence, and guard against many kinds of bias, but they also have to consider all the factors involved that may have led to a result. There are various data interpretation types and methods one can use to achieve this.

The interpretation of data is designed to help people make sense of data that has been collected, analyzed, and presented. Having a baseline method for interpreting data will provide your analyst teams with a structure and consistent foundation. Indeed, if several departments have different approaches to interpreting the same data while sharing the same goals, mismatched objectives can result. Disparate methods will lead to duplicated efforts, inconsistent solutions, wasted energy, and, inevitably, lost time and money. In this part, we will look at the two main methods of interpretation of data: qualitative and quantitative analysis.

Qualitative Data Interpretation

Qualitative data analysis can be summed up in one word – categorical. With this type of analysis, data is not described through numerical values or patterns but through the use of descriptive context (i.e., text). Typically, narrative data is gathered by employing a wide variety of person-to-person techniques. These techniques include:

- Observations: detailing behavioral patterns that occur within an observation group. These patterns could be the amount of time spent in an activity, the type of activity, and the method of communication employed.

- Focus groups: grouping people and asking them relevant questions to generate a collaborative discussion about a research topic.

- Secondary Research: much like how patterns of behavior can be observed, various types of documentation resources can be coded and divided based on the type of material they contain.

- Interviews: one of the best collection methods for narrative data. Inquiry responses can be grouped by theme, topic, or category. The interview approach allows for highly focused data segmentation.

A key difference between qualitative and quantitative analysis is clearly noticeable in the interpretation stage. Qualitative data is widely open to interpretation and must be "coded" to facilitate grouping and labeling it into identifiable themes. As person-to-person data collection techniques can often result in disputes about proper analysis, qualitative data analysis is often summarized through three basic principles: notice things, collect things, and think about things.
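A first pass at coding can be automated with a keyword codebook and then reviewed by a human coder. The Python sketch below assumes a hypothetical codebook for restaurant reviews; real coding schemes are developed iteratively, and simple keyword matching will miss negation ("not fresh") and synonyms, which is why the human review step matters.

```python
import re
from collections import Counter

# Hypothetical codebook: each theme is defined by a few indicator keywords.
CODEBOOK = {
    "fresh food": {"fresh", "crisp"},
    "cold food": {"cold", "lukewarm"},
    "small portions": {"small", "tiny", "portion", "portions"},
    "friendly staff": {"friendly", "welcoming", "polite"},
}


def code_response(text: str) -> set[str]:
    """Return every theme whose indicator keywords appear in the response."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    return {theme for theme, keywords in CODEBOOK.items() if words & keywords}


def tally_themes(responses: list[str]) -> Counter:
    """Count how many responses were coded with each theme."""
    counts = Counter()
    for response in responses:
        counts.update(code_response(response))
    return counts


reviews = [
    "Friendly staff and fresh salads.",
    "The soup arrived cold and the portions were tiny.",
    "Very polite waiter, but a small portion for the price.",
]
print(sorted(tally_themes(reviews).items()))
# [('cold food', 1), ('fresh food', 1), ('friendly staff', 2), ('small portions', 2)]
```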

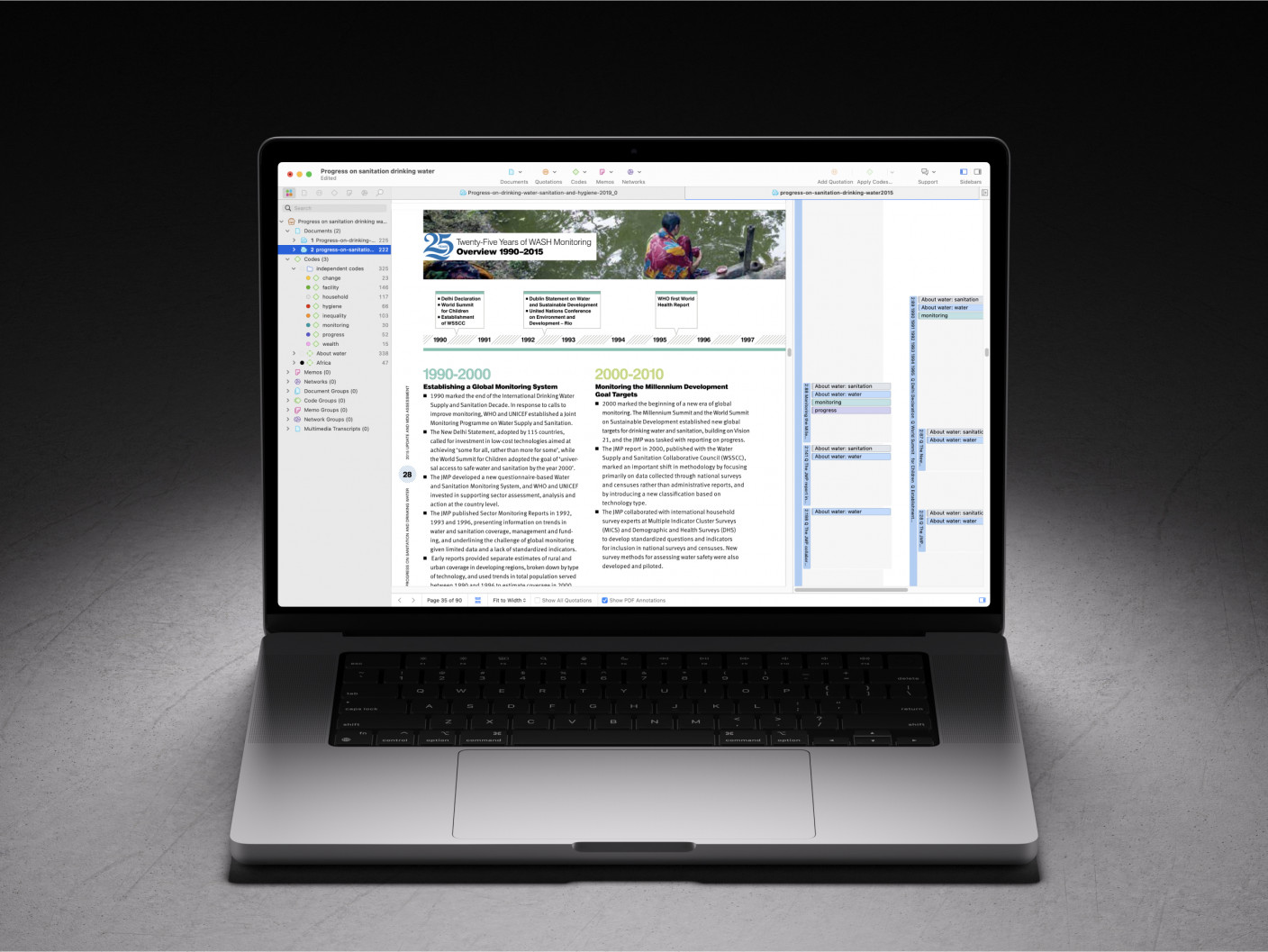

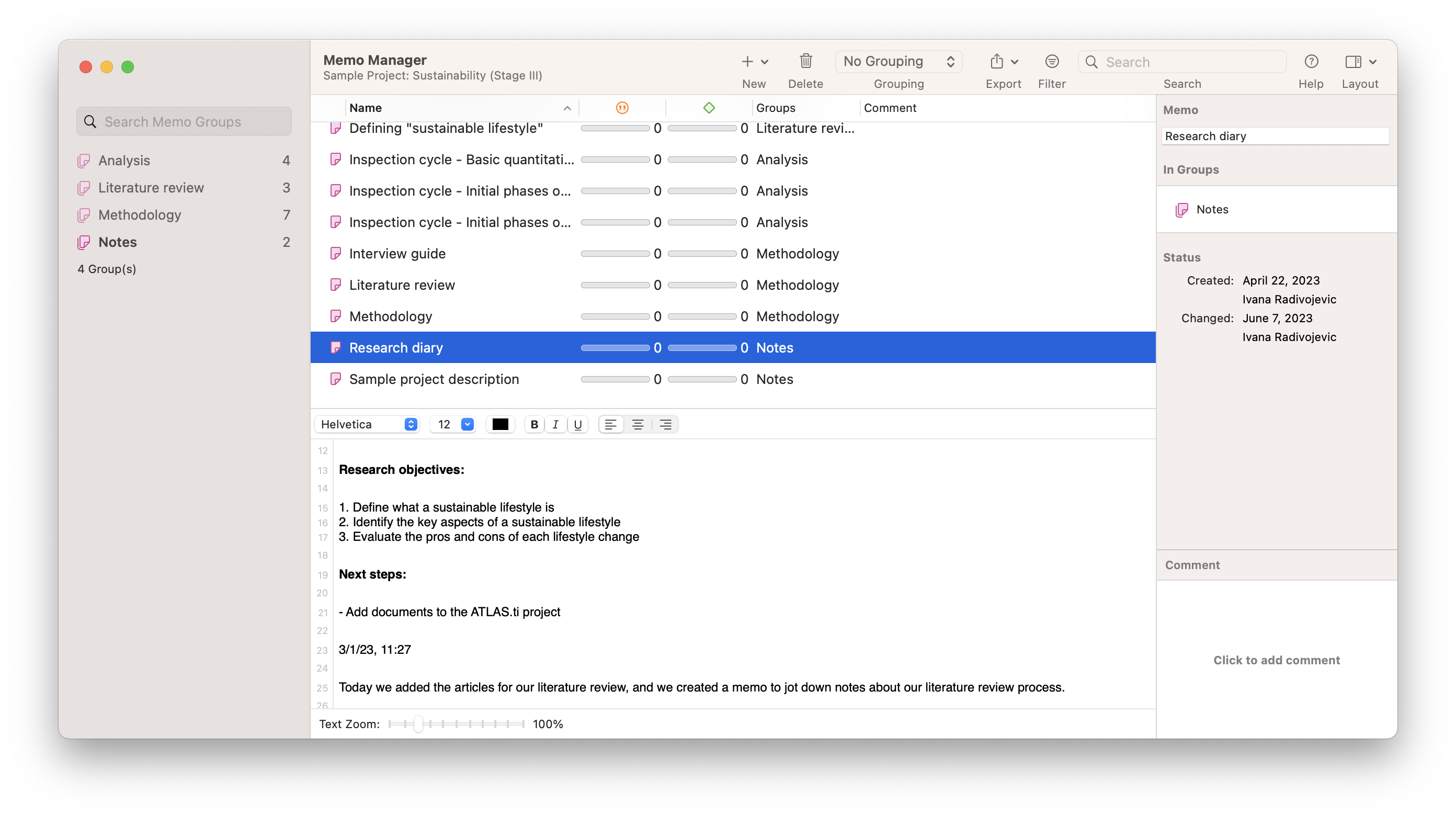

After qualitative data has been collected through transcripts, questionnaires, audio and video recordings, or the researcher’s notes, it is time to interpret it. For that purpose, there are some common methods used by researchers and analysts.

- Content analysis: As its name suggests, this is a research method used to identify the frequency of recurring words, subjects, and concepts in text, image, video, or audio content. It transforms qualitative information into quantitative data to help discover trends and conclusions that will later support important research or business decisions. This method is often used by marketers to understand brand sentiment from the mouths of customers themselves, extracting valuable information to improve their products and services. Content analytics tools are recommended for this method, as performing it manually is very time-consuming and can lead to human error or subjectivity issues. Having a clear goal in mind before diving in is another good way to avoid getting lost in the fog.

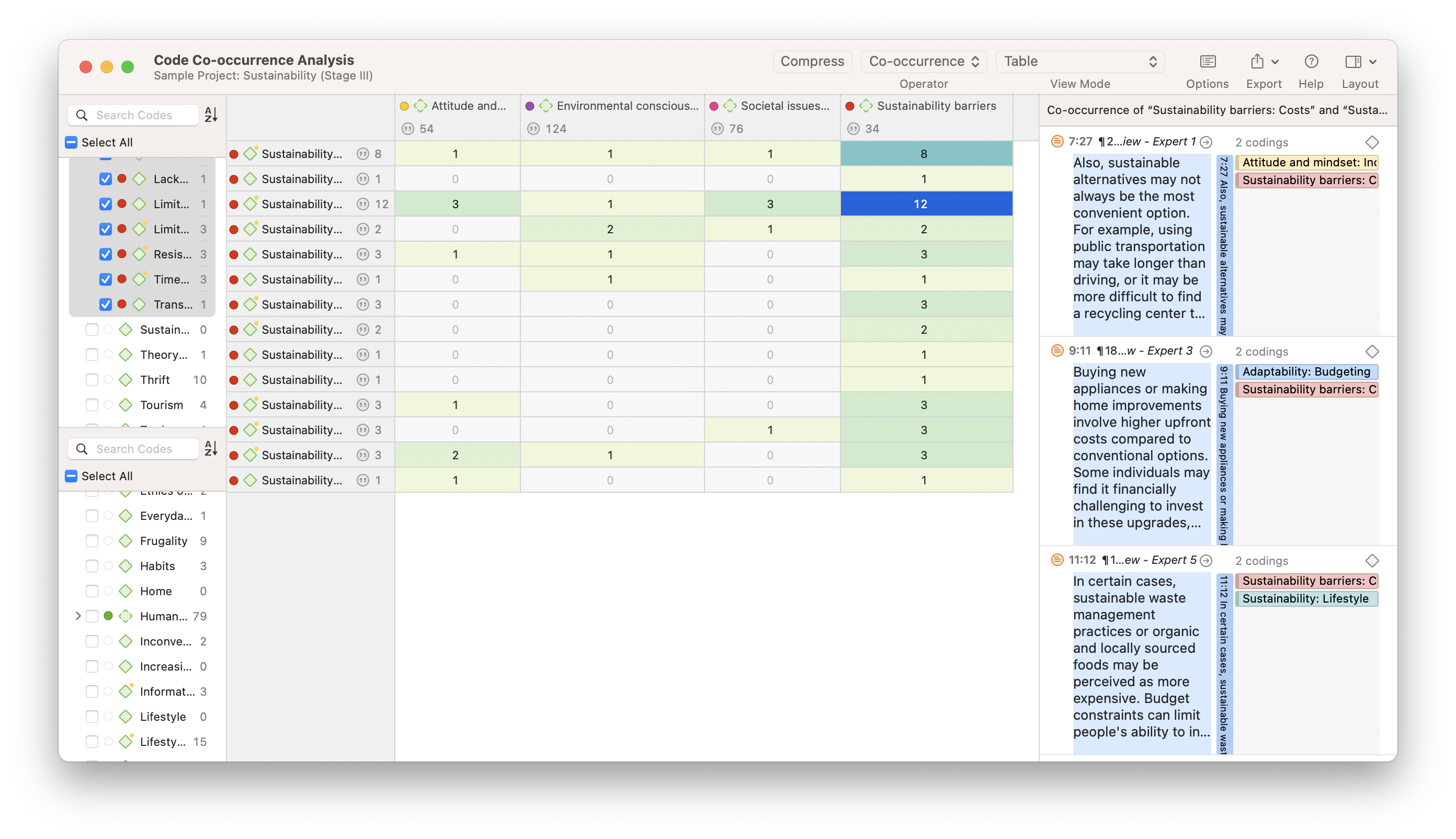

- Thematic analysis: This method focuses on analyzing qualitative data, such as interview transcripts, survey questions, and others, to identify common patterns and separate the data into different groups according to found similarities or themes. For example, imagine you want to analyze what customers think about your restaurant. For this purpose, you do a thematic analysis on 1000 reviews and find common themes such as “fresh food”, “cold food”, “small portions”, “friendly staff”, etc. With those recurring themes in hand, you can extract conclusions about what could be improved or enhanced based on your customer’s experiences. Since this technique is more exploratory, be open to changing your research questions or goals as you go.

- Narrative analysis: A bit more specific and complicated than the two previous methods, it is used to analyze stories and discover their meaning. These stories can be extracted from testimonials, case studies, and interviews, as these formats give people more space to tell their experiences. Given that collecting this kind of data is harder and more time-consuming, sample sizes for narrative analysis are usually smaller, which makes it harder to reproduce its findings. However, it is still a valuable technique for understanding customers' preferences and mindsets.

- Discourse analysis: This method is used to draw the meaning of any type of visual, written, or symbolic language in relation to a social, political, cultural, or historical context. It is used to understand how context can affect how language is carried out and understood. For example, if you are doing research on power dynamics, analyzing a conversation between a janitor and a CEO and drawing conclusions about their responses based on the context and your research questions is a great use case for this technique. That said, like all methods in this section, discourse analysis is time-consuming, as the data needs to be analyzed until no new insights emerge.

- Grounded theory analysis: The grounded theory approach aims to create or discover a new theory by carefully testing and evaluating the available data. Unlike the other qualitative approaches on this list, grounded theory extracts conclusions and hypotheses from the data instead of going into the analysis with a defined hypothesis. This method is popular among researchers, analysts, and marketers because its results are grounded in the data rather than in prior assumptions. It is often used when researching a completely new topic, or one about which little is known, as it lets researchers start from the ground up.
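The core step of content analysis, turning text into counts, can be illustrated in a few lines. This Python sketch assumes the audio or video has already been transcribed to text and uses a tiny, hypothetical stop-word list; dedicated content analytics tools typically add stemming, synonym handling, and sentiment scoring on top of this.

```python
import re
from collections import Counter

# Tiny illustrative stop-word list; real projects use a much larger one.
STOPWORDS = {"the", "a", "and", "was", "is", "it", "to", "of", "but", "very", "for"}


def term_frequencies(documents, top_n=5):
    """Count how often each non-stop-word term appears across all documents."""
    counts = Counter()
    for doc in documents:
        tokens = re.findall(r"[a-z']+", doc.lower())
        counts.update(token for token in tokens if token not in STOPWORDS)
    return counts.most_common(top_n)


def mention_rate(documents, term):
    """Share of documents that mention `term` at least once."""
    hits = sum(term in re.findall(r"[a-z']+", doc.lower()) for doc in documents)
    return hits / len(documents)


transcripts = [
    "The delivery was late and the packaging was damaged.",
    "Fast delivery, great price.",
    "Delivery late again; price is fair.",
]
print(term_frequencies(transcripts, top_n=3))
print(mention_rate(transcripts, "late"))  # "late" appears in 2 of the 3 transcripts
```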

Quantitative Data Interpretation

If quantitative data interpretation could be summed up in one word (and it really can't), that word would be "numerical." There are few certainties when it comes to data analysis, but you can be sure that if the research you are engaging in has no numbers involved, it is not quantitative research, as this analysis refers to a set of processes by which numerical data is analyzed. More often than not, it involves descriptive statistics such as the mean, median, and standard deviation. Let's quickly review the most common statistical terms:

- Mean: A mean represents a numerical average for a set of responses. When dealing with a data set (or multiple data sets), a mean will represent the central value of a specific set of numbers. It is the sum of the values divided by the number of values within the data set. Other terms that can be used to describe the concept are arithmetic mean, average, and mathematical expectation.

- Standard deviation: This is another statistical term commonly used in quantitative analysis. Standard deviation reveals how widely the responses are spread around the mean, describing the degree of consistency within the responses; together with the mean, it provides insight into data sets.

- Frequency distribution: This measures how often each response appears within a data set. In a survey, for example, a frequency distribution can determine the number of times a specific ordinal scale response appears (e.g., agree, strongly agree, disagree). Frequency distribution is particularly useful for determining the degree of consensus among data points.
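These measures are available in Python's standard `statistics` module. The sketch below applies them to hypothetical 1-5 satisfaction ratings and builds a frequency distribution for hypothetical agreement responses; all data is invented for illustration.

```python
import statistics
from collections import Counter

ratings = [4, 5, 3, 4, 5, 4, 2, 4]  # hypothetical 1-5 satisfaction scores
answers = ["agree", "strongly agree", "agree", "disagree", "agree"]  # hypothetical

mean = statistics.mean(ratings)      # sum of values / number of values
median = statistics.median(ratings)  # middle value of the sorted data
spread = statistics.stdev(ratings)   # sample standard deviation around the mean


def frequency_distribution(responses):
    """Map each response to (count, share of all responses)."""
    counts = Counter(responses)
    total = len(responses)
    return {response: (n, n / total) for response, n in counts.items()}


print(f"mean={mean}, median={median}, stdev={spread:.2f}")
# mean=3.875, median=4.0, stdev=0.99
print(frequency_distribution(answers))
# {'agree': (3, 0.6), 'strongly agree': (1, 0.2), 'disagree': (1, 0.2)}
```

Here, 60% "agree" describes the level of consensus, while the standard deviation of about one point says how consistent the ratings are around their mean.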

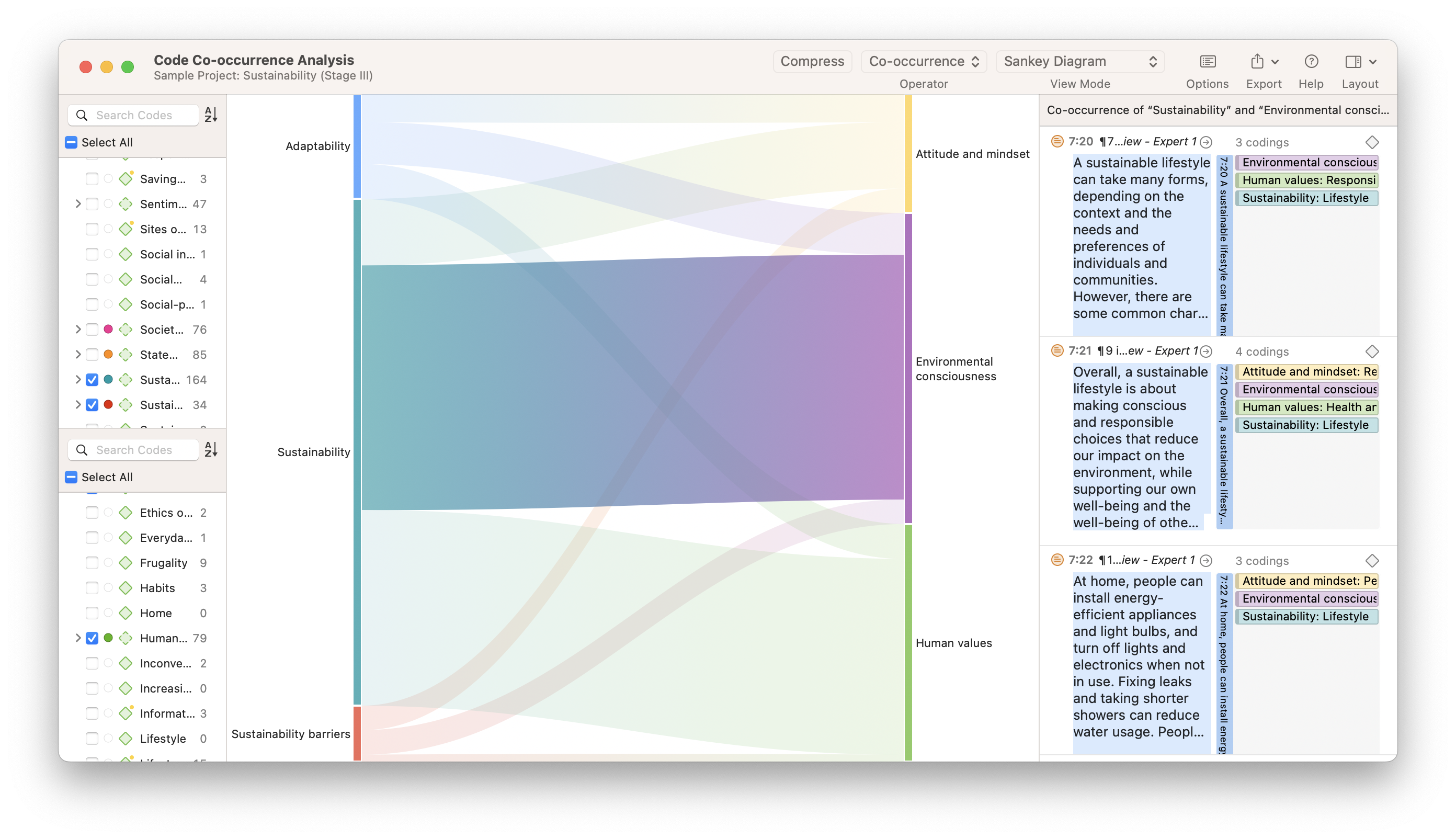

Quantitative data is often interpreted by testing for correlations between two or more variables of significance and presenting the results visually. Different processes can be used together or separately, and comparisons can be made to ultimately arrive at a conclusion. Other signature interpretation processes of quantitative data include:

- Regression analysis: Essentially, it uses historical data to understand the relationship between a dependent variable and one or more independent variables. Knowing which variables are related and how they developed in the past allows you to anticipate possible outcomes and make better decisions going forward. For example, if you want to predict your sales for next month, you can use regression to understand what factors will affect them, such as products on sale and the launch of a new campaign, among many others.

- Cohort analysis: This method identifies groups of users who share common characteristics during a particular time period. In a business scenario, cohort analysis is commonly used to understand customer behaviors. For example, a cohort could be all users who have signed up for a free trial on a given day. An analysis would be carried out to see how these users behave, what actions they carry out, and how their behavior differs from other user groups.

- Predictive analysis: As its name suggests, the predictive method aims to predict future developments by analyzing historical and current data. Powered by technologies such as artificial intelligence and machine learning, predictive analytics practices enable businesses to identify patterns or potential issues and plan informed strategies in advance.

- Prescriptive analysis: Also powered by predictions, the prescriptive method uses techniques such as graph analysis, complex event processing, and neural networks, among others, to try to unravel the effect that future decisions will have in order to adjust them before they are actually made. This helps businesses to develop responsive, practical business strategies.

- Conjoint analysis: Typically applied to survey analysis, the conjoint approach is used to analyze how individuals value different attributes of a product or service. This helps researchers and businesses to define pricing, product features, packaging, and many other attributes. A common use is menu-based conjoint analysis, in which individuals are given a "menu" of options from which they can build their ideal concept or product. Through this, analysts can understand which attributes respondents would pick above others and draw conclusions.

- Cluster analysis: Last but not least, cluster analysis is a method used to group objects into categories. Since there is no target variable when using cluster analysis, it is a useful method for finding hidden trends and patterns in the data. In a business context, clustering is used for audience segmentation to create targeted experiences. In market research, it is often used to segment respondents by characteristics such as age group, location, and income.
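As a concrete illustration of regression, here is a minimal Python sketch of simple linear regression (one independent variable) fitted by ordinary least squares, together with the Pearson correlation coefficient. The monthly ad-spend and sales figures are hypothetical; real forecasts usually involve several variables and a statistics library. A high correlation here shows only that the two series move together, not that one causes the other.

```python
import math


def fit_line(xs, ys):
    """Fit y = intercept + slope * x by ordinary least squares.

    Returns (slope, intercept, r), where r is the Pearson correlation.
    """
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # r is undefined when y never varies.
    r = sxy / math.sqrt(sxx * syy) if syy > 0 else float("nan")
    return slope, intercept, r


ad_spend = [10, 12, 15, 18, 20]    # hypothetical, in $1,000s per month
sales = [100, 110, 128, 145, 152]  # hypothetical units sold per month
slope, intercept, r = fit_line(ad_spend, sales)
print(f"sales ~ {intercept:.1f} + {slope:.2f} * spend (r = {r:.3f})")
print(f"forecast at spend=22: {intercept + slope * 22:.0f}")
```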

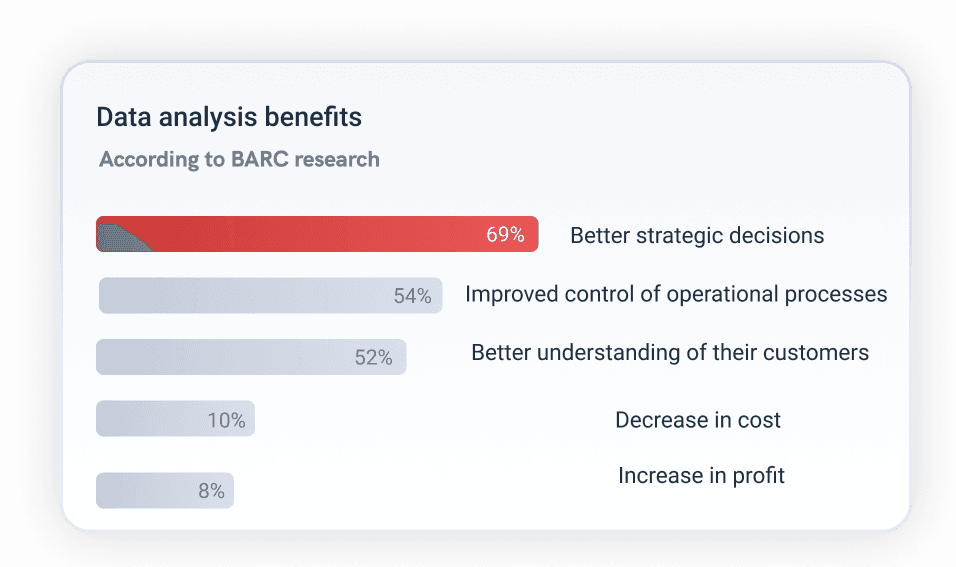

Now that we have seen how to interpret data, let's move on and ask ourselves some questions: What are some of the benefits of data interpretation? Why do all industries engage in data research and analysis? These are basic questions, but they often don’t receive adequate attention.


Why Data Interpretation Is Important

The purpose of collection and interpretation is to acquire useful and usable information and to make the most informed decisions possible. From businesses to newlyweds researching their first home, data collection and interpretation provide limitless benefits for a wide range of institutions and individuals.

Data analysis and interpretation, regardless of the method and qualitative/quantitative status, may include the following characteristics:

- Data identification and explanation

- Comparing and contrasting data

- Identification of data outliers

- Future predictions

Data analysis and interpretation, in the end, help improve processes and identify problems. It is difficult to grow and make dependable improvements without, at the very least, minimal data collection and interpretation. What is the keyword? Dependable. Vague ideas regarding performance enhancement exist within all institutions and industries. Yet, without proper research and analysis, an idea is likely to remain in a stagnant state forever (i.e., minimal growth). So… what are a few of the business benefits of digital age data analysis and interpretation? Let’s take a look!

1) Informed decision-making: A decision is only as good as the knowledge that formed it. Informed data decision-making can potentially set industry leaders apart from the rest of the market pack. Studies have shown that companies in the top third of their industry in their use of data-driven decision-making are, on average, 5% more productive and 6% more profitable than their competitors. Most decisive actions will arise only after a problem has been identified or a goal defined. Data analysis should include identification, thesis development, and data collection, followed by data communication.

If institutions simply follow that order, one that we should all be familiar with from grade-school science fairs, they will be able to solve issues as they emerge in real time. Informed decision-making tends to be cyclical: there is no real end, and new questions and conditions eventually arise within the process that need further study. Monitoring data results will inevitably return the process to the start with new data and insights.

2) Anticipating needs with trend identification: data insights provide knowledge, and knowledge is power. The insights obtained from market and consumer data analyses have the ability to set trends for peers within similar market segments. A perfect example of how data analytics can impact trend prediction is the music identification application Shazam. The application allows users to upload an audio clip of a song they like but can't seem to identify. Users make 15 million song identifications a day. With this data, Shazam has been instrumental in predicting future popular artists.

When industry trends are identified, they can then serve a greater industry purpose. For example, the insights from Shazam's monitoring benefit not only Shazam, in understanding how to meet consumer needs, but also give music executives and record labels insight into the pop-culture scene of the day. Data gathering and interpretation processes can allow for industry-wide climate prediction and result in greater revenue streams across the market. For this reason, all institutions should follow the basic data cycle of collection, interpretation, decision-making, and monitoring.

3) Cost efficiency: Proper implementation of analytics processes can provide businesses with profound cost advantages within their industries. A recent data study performed by Deloitte vividly demonstrates this in finding that data analysis ROI is driven by efficient cost reductions. Often, this benefit is overlooked because making money is typically viewed as “sexier” than saving money. Yet, sound data analyses have the ability to alert management to cost-reduction opportunities without any significant exertion of effort on the part of human capital.

A great example of the potential for cost efficiency through data analysis is Intel. Prior to 2012, Intel would conduct over 19,000 manufacturing function tests on their chips before they could be deemed acceptable for release. To cut costs and reduce test time, Intel implemented predictive data analyses. By using historical and current data, Intel now avoids testing each chip 19,000 times by focusing on specific and individual chip tests. After its implementation in 2012, Intel saved over $3 million in manufacturing costs. Cost reduction may not be as “sexy” as data profit, but as Intel proves, it is a benefit of data analysis that should not be neglected.

4) Clear foresight: companies that collect and analyze their data gain better knowledge about themselves, their processes, and their performance. They can identify performance challenges when they arise and take action to overcome them. Data interpretation through visual representations lets them process their findings faster and make better-informed decisions on the company's future.

Key Data Interpretation Skills You Should Have

Just like any other process, data interpretation and analysis require researchers or analysts to have some key skills to be able to perform successfully. It is not enough just to apply some methods and tools to the data; the person who is managing it needs to be objective and have a data-driven mind, among other skills.

It is a common misconception to think that the required skills are mostly number-related. While data interpretation is heavily analytically driven, it also requires communication and narrative skills, as the results of the analysis need to be presented in a way that is easy to understand for all types of audiences.

Luckily, with the rise of self-service tools and AI-driven technologies, data interpretation is no longer reserved for analysts. However, it remains a big challenge for businesses that invest heavily in data and supporting tools, as the required interpretation skills are often lacking. There is little point in investing large sums in extracting information if you cannot interpret what that information is telling you. For that reason, below we list the top 5 data interpretation skills your employees or researchers should have to extract the maximum potential from the data.

- Data Literacy: The first and most important skill to have is data literacy. This means having the ability to understand, work with, and communicate with data. It involves knowing the types of data sources and methods, and the ethical implications of using them. In research, this skill is often a given. However, in a business context, there might be many employees who are not comfortable with data. The issue is that interpreting data cannot be the sole responsibility of the data team, as that is not sustainable in the long run. Experts advise business leaders to carefully assess the literacy level across their workforce and implement training programs to ensure everyone can interpret their data.

- Data Tools: The data interpretation and analysis process involves using various tools to collect, clean, store, and analyze the data. The complexity of the tools varies depending on the type of data and the analysis goals, ranging from simple spreadsheets like Excel to databases queried with SQL and programming languages such as R or Python. It also involves visual analytics tools that bring the data to life through graphs and charts. Mastering these tools is a fundamental skill, as they make the process faster and more efficient. As mentioned before, most modern solutions are now self-service, enabling less technical users to use them without problems.

- Critical Thinking: Another very important skill is critical thinking. Data hides a range of conclusions, trends, and patterns that must be discovered. It is not just about comparing numbers; it is about putting together a story based on multiple factors that will lead to a conclusion. Therefore, the ability to look beyond what is right in front of you is invaluable for data interpretation.

- Data Ethics: In the information age, being aware of the legal and ethical responsibilities that come with the use of data is of utmost importance. In short, data ethics involves respecting the privacy and confidentiality of data subjects, as well as ensuring accuracy and transparency in how data is used. It requires the analyst or researcher to be completely objective in their interpretation to avoid any biases or discrimination. Regulations such as the EU's GDPR already govern the use of personal data, and professional codes such as the ACM Code of Ethics set standards for responsible practice. Awareness of these regulations and responsibilities is a fundamental skill that anyone working in data interpretation should have.

- Domain Knowledge: Another skill that is considered important when interpreting data is to have domain knowledge. As mentioned before, data hides valuable insights that need to be uncovered. To do so, the analyst needs to know about the industry or domain from which the information is coming and use that knowledge to explore it and put it into a broader context. This is especially valuable in a business context, where most departments are now analyzing data independently with the help of a live dashboard instead of relying on the IT department, which can often overlook some aspects due to a lack of expertise in the topic.

Common Data Analysis And Interpretation Problems

The oft-repeated mantra of those who fear data advancements in the digital age is “big data equals big trouble.” While that statement is not accurate, it is safe to say that certain data interpretation problems or “pitfalls” exist and can occur when analyzing data, especially at the speed of thought. Let’s identify some of the most common data misinterpretation risks and shed some light on how they can be avoided:

1) Correlation mistaken for causation: our first misinterpretation of data refers to the tendency of data analysts to confuse correlation with causation. It is the assumption that because two actions occurred together, one caused the other. This is inaccurate, as actions can occur together without a cause-and-effect relationship.

- Digital age example: assuming that increased revenue results from increased social media followers. There might be a definite correlation between the two, especially with today's multi-channel purchasing experiences, but that does not mean an increase in followers is the direct cause of increased revenue. Both could share a common cause (such as a successful product launch), or the relationship could be indirect.

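- Remedy: attempt to eliminate or control for the variable you believe is causing the phenomenon and check whether the effect persists. Where possible, run a controlled experiment (e.g., an A/B test) rather than relying on observational data alone.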

2) Confirmation bias: our second problem is a form of interpretation bias. It occurs when you have a theory or hypothesis in mind and set out to discover only the data patterns that support it while rejecting those that do not.

- Digital age example: your boss asks you to analyze the success of a recent multi-platform social media marketing campaign. While analyzing the potential data variables from the campaign (one that you ran and believe performed well), you see that the share rate for Facebook posts was great, while the share rate for Twitter posts was not. Using only Facebook posts to prove your hypothesis that the campaign was successful would be a perfect manifestation of confirmation bias.

- Remedy: as this pitfall is often based on subjective desires, one remedy would be to analyze data with a team of objective individuals. If this is not possible, another solution is to resist the urge to make a conclusion before data exploration has been completed. Remember to always try to disprove a hypothesis, not prove it.

3) Irrelevant data: the third data misinterpretation pitfall is especially important in the digital age. As large data sets are no longer centrally stored and continue to be analyzed at the speed of thought, it is easy for analysts to focus on data that is irrelevant to the problem they are trying to solve.

- Digital age example: in attempting to gauge the success of an email lead generation campaign, you notice that the number of homepage views directly resulting from the campaign increased, but the number of monthly newsletter subscribers did not. Based on the number of homepage views, you decide the campaign was a success when really it generated zero leads.

- Remedy: proactively and clearly frame any data analysis variables and KPIs prior to engaging in a data review. If the metric you use to measure the success of a lead generation campaign is newsletter subscribers, there is no need to review the number of homepage visits. Be sure to focus on the data variable that answers your question or solves your problem and not on irrelevant data.

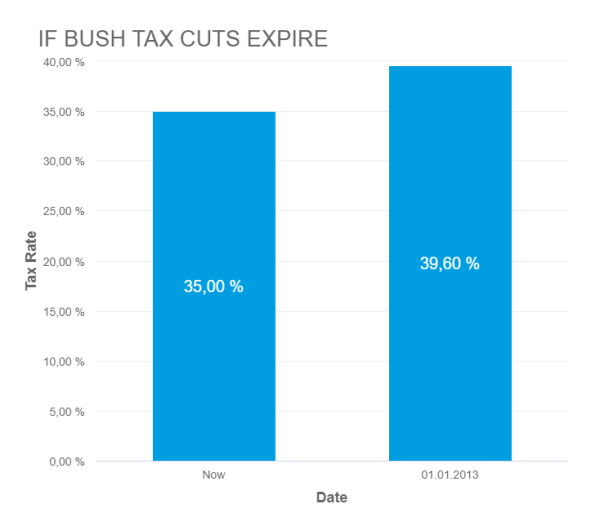

4) Truncating an axis: When creating a graph to start interpreting the results of your analysis, it is important to keep the axes truthful and avoid generating misleading visualizations. Starting an axis at a value that distorts the actual differences in the data can lead to false conclusions.

- Digital age example: In the image below, we can see a graph from Fox News in which the y-axis starts at 34%, making the difference between 35% and 39.6% look much larger than it actually is. Measured from the 34% baseline, the 39.6% bar is drawn 5.6 times as tall as the 35% bar, even though 39.6 is only about 1.13 times 35. This could lead to a misinterpretation of the tax rate changes.

*Source: www.venngage.com*

- Remedy: Be careful with how your data is visualized. Keep axes honest and realistic (for bar charts, start the value axis at zero) to avoid misinterpretation of your data. See below how the Fox News chart looks when using the correct axis values. This chart was created with datapine's modern online data visualization tool.

5) (Small) sample size: Another common problem is using a small sample size. Logically, the bigger the sample size, the more accurate and reliable the results. However, the sample size needed also depends on the size of the effect being studied. For example, the sample size in a survey about the quality of education will not be the same as for one about people doing outdoor sports in a specific area.

- Digital age example: Imagine you ask 20 people a question, and 19 answer "yes," resulting in 95% of the total. Now imagine you ask the same question to 1000 people, and 950 of them answer "yes," which is again 95%. While these percentages look the same, they do not mean the same thing, as a 20-person sample is not large enough to establish a trustworthy conclusion.

- Remedy: To determine the sample size needed for truthful and meaningful results, researchers first define a margin of error: the maximum amount by which they are willing to let the sample result deviate from the true population value. Paired with this, they define a confidence level, typically between 90 and 99%. With these two values in hand, researchers can calculate the required sample size for their studies.

6) Reliability, subjectivity, and generalizability : When performing qualitative analysis, researchers must consider practical and theoretical limitations when interpreting the data. In some cases, this type of research can be considered unreliable because of uncontrolled factors that might or might not affect the results. This is paired with the fact that the researcher has a primary role in the interpretation process, meaning he or she decides what is relevant and what is not, and as we know, interpretations can be very subjective.

Generalizability is also an issue that researchers face when dealing with qualitative analysis. As mentioned in the point about having a small sample size, it is difficult to draw conclusions that are 100% representative because the results might be biased or unrepresentative of a wider population.

While these factors are mostly present in qualitative research, they can also affect the quantitative analysis. For example, when choosing which KPIs to portray and how to portray them, analysts can also be biased and represent them in a way that benefits their analysis.

- Digital age example: Biased questions in a survey are a great example of reliability and subjectivity issues. Imagine you are sending a survey to your clients to see how satisfied they are with your customer service with this question: "How amazing was your experience with our customer service team?". Here, the question clearly influences the response of the individual by putting the word "amazing" in it.

- Remedy: A solution to avoid these issues is to keep your research honest and neutral. Keep the wording of the questions as objective as possible. For example: "On a scale of 1-10, how satisfied were you with our customer service team?". This does not lead the respondent toward any specific answer, making the results of your survey more reliable.
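Returning to the small-sample-size pitfall, the margin-of-error and confidence-level calculation described in its remedy can be sketched for a survey proportion. This Python example uses the standard normal-approximation formulas, assumes simple random sampling from a large population, and uses p = 0.5 as the most conservative guess when the true proportion is unknown; the function names are illustrative.

```python
import math
from statistics import NormalDist


def z_score(confidence):
    """Two-sided critical value, e.g. about 1.96 for 95% confidence."""
    return NormalDist().inv_cdf(1 - (1 - confidence) / 2)


def margin_of_error(p_hat, n, confidence=0.95):
    """Normal-approximation margin of error for an observed proportion."""
    return z_score(confidence) * math.sqrt(p_hat * (1 - p_hat) / n)


def required_sample_size(confidence, margin, p=0.5):
    """Smallest n whose margin of error is at most `margin` (large population)."""
    z = z_score(confidence)
    return math.ceil(z ** 2 * p * (1 - p) / margin ** 2)


# Two hypothetical surveys that both report 95% "yes".
for n in (20, 1000):
    moe = margin_of_error(0.95, n)
    print(f"n={n}: 95% yes, +/- {moe * 100:.1f} points")

print(required_sample_size(0.95, 0.05))  # 385
```

The same 95% carries a margin of roughly 9.6 points with 20 respondents but about 1.4 points with 1,000. With only one "no" among 20 answers, even the normal approximation itself becomes unreliable, which is another reason small samples are hard to interpret.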

Data Interpretation Best Practices & Tips

Data analysis and interpretation are critical to developing sound conclusions and making better-informed decisions. As we have seen in this article, there is an art and a science to the interpretation of data. To help you with this, we will list a few relevant techniques, methods, and tricks you can implement for a successful data interpretation process.

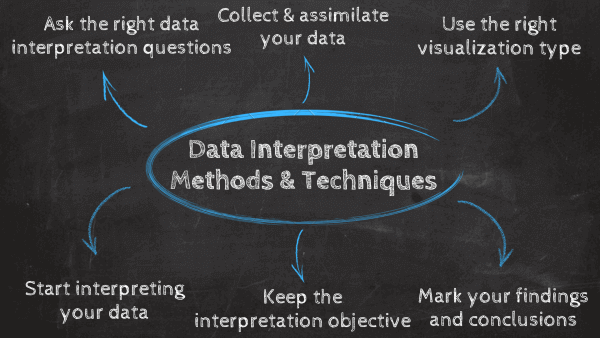

As mentioned at the beginning of this post, the first step to interpreting data successfully is to identify the type of analysis you will perform and apply the methods accordingly. Clearly differentiate between qualitative analysis (observing, documenting, and interviewing; noticing, collecting, and thinking about things) and quantitative analysis (conducting research with a lot of numerical data to be analyzed through various statistical methods).

1) Ask the right data interpretation questions

The first data interpretation technique is to define a clear baseline for your work. This can be done by answering some critical questions that will serve as a useful guideline to start. Some of them include: what are the goals and objectives of my analysis? What type of data interpretation method will I use? Who will use this data in the future? And most importantly, what general question am I trying to answer?

Once all this information has been defined, you will be ready for the next step: collecting your data.

2) Collect and assimilate your data

Now that a clear baseline has been established, it is time to collect the information you will use. Remember that your data collection methods will vary depending on the type of analysis you use, qualitative or quantitative. Relying on professional online data analysis tools to facilitate the process is a great practice, as manually collecting and assessing raw data is not only very time-consuming and expensive but also prone to errors and subjectivity.

Once your data is collected, carefully assess whether its quality is appropriate for the study. Is the sample size big enough? Were the procedures used to collect the data implemented correctly? Is the date range of the data correct? If the data comes from an external source, is that source trusted and objective?
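Some of these checks can be automated. Below is a minimal sketch of a hypothetical `check_dataset` helper that covers only the mechanical questions (sample size, missing dates, and date range), assuming each record is a dict holding a `datetime.date`. Whether collection procedures were followed or whether an external source is trustworthy still needs human judgment.

```python
from datetime import date


def check_dataset(records, min_sample, start, end, date_key="date"):
    """Return human-readable data quality problems (an empty list if none).

    records: list of dicts; each should hold a datetime.date under date_key
    min_sample: the smallest sample size you decided is acceptable
    start, end: the date range the study should cover (inclusive)
    """
    problems = []
    if len(records) < min_sample:
        problems.append(f"sample too small: {len(records)} < {min_sample}")
    # Records with no date cannot be placed in the study period at all
    missing = [r for r in records if r.get(date_key) is None]
    if missing:
        problems.append(f"{len(missing)} record(s) missing {date_key!r}")
    dated = [r[date_key] for r in records if r.get(date_key) is not None]
    out_of_range = [d for d in dated if not (start <= d <= end)]
    if out_of_range:
        problems.append(f"{len(out_of_range)} record(s) outside {start}..{end}")
    return problems


# Hypothetical records, for illustration only
rows = [{"date": date(2023, 1, 5)}, {"date": date(2022, 12, 30)}, {"date": None}]
for problem in check_dataset(rows, 100, date(2023, 1, 1), date(2023, 3, 31)):
    print(problem)
```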

With all the needed information in hand, you are ready to start the interpretation process, but first, you need to visualize your data.

3) Use the right data visualization type

Data visualizations such as business graphs, charts, and tables are fundamental to successfully interpreting data, because interactive charts and graphs make the information more understandable and accessible. As you might be aware, there are different types of visualizations you can use, but not all of them suit every analysis purpose. Using the wrong graph can lead to misinterpretation of your data, so it’s very important to pick the right visual carefully. Let’s look at some use cases of common data visualizations.

- Bar chart: One of the most used chart types, the bar chart uses rectangular bars to show the relationship between 2 or more variables. There are different types of bar charts for different interpretations, including the horizontal bar chart, column bar chart, and stacked bar chart.

- Line chart: Most commonly used to show trends, accelerations or decelerations, and volatility, the line chart shows how data changes over a period of time, for example, sales over a year. To keep this chart ready for interpretation, avoid using so many variables that they overcrowd the graph, and keep your axis scale close to the highest data point so the information stays easy to read.

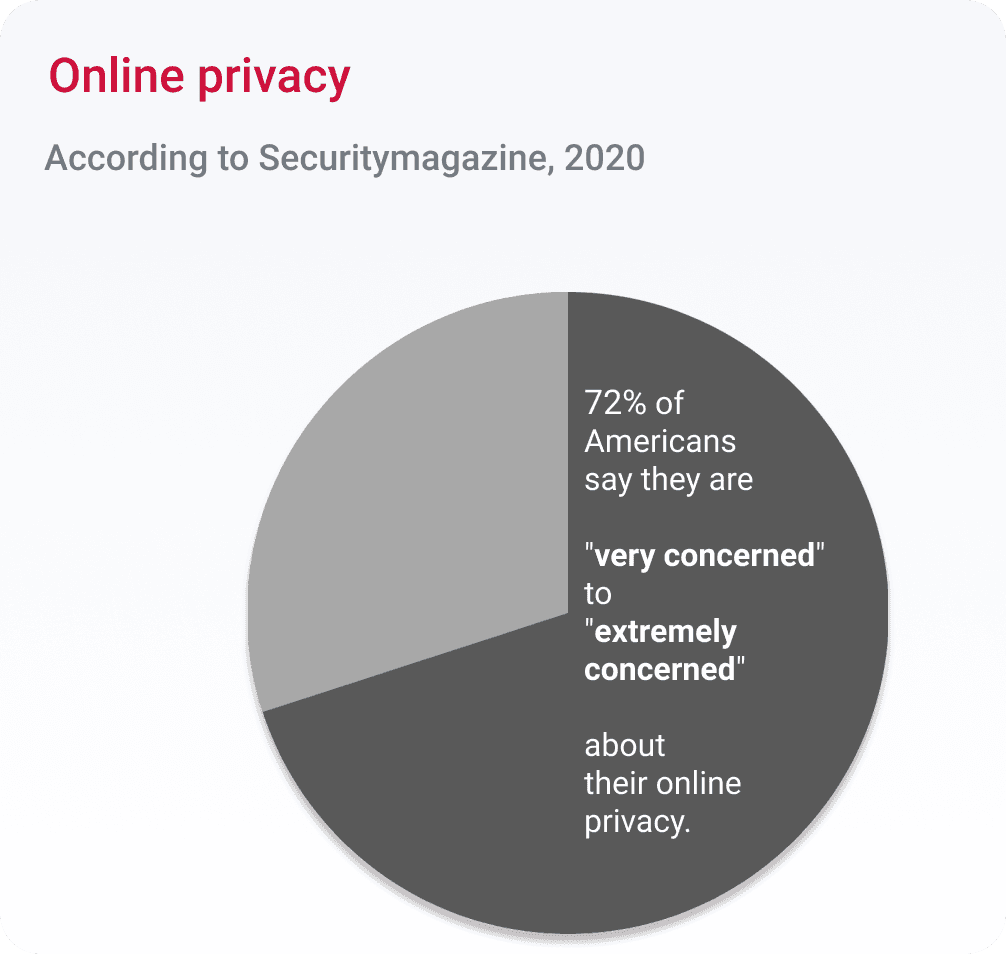

- Pie chart: Although it doesn’t do a lot in terms of analysis due to its simple nature, the pie chart is widely used to show the proportional composition of a variable. Visually speaking, a percentage is much harder to read in a bar chart than in a pie chart. However, this also depends on the number of variables you are comparing: if your pie chart needs to be divided into 10 portions, it is better to use a bar chart instead.

- Tables: While they are not a specific type of chart, tables are widely used when interpreting data. Tables are especially useful when you want to portray data in its raw format. They give you the freedom to easily look up or compare individual values while also displaying grand totals.
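The rules of thumb in the list above can be condensed into a small decision helper. This is only a sketch: `suggest_chart`, its goal names, and the five-slice pie-chart limit are hypothetical choices (the text only says that 10 portions is too many), not an established standard.

```python
def suggest_chart(goal, n_categories=0, max_pie_slices=5):
    """Suggest a visualization using the rules of thumb described above.

    goal: one of "trend", "composition", "comparison", "lookup"
    n_categories: how many slices or bars the chart would need
    max_pie_slices: assumed readability limit for a pie chart
    """
    if goal == "trend":
        return "line chart"  # change over time, e.g. sales over a year
    if goal == "composition":
        # Pie charts stay readable only with a handful of slices
        return "pie chart" if n_categories <= max_pie_slices else "bar chart"
    if goal == "comparison":
        return "bar chart"
    if goal == "lookup":
        return "table"  # raw values, individual lookups, grand totals
    raise ValueError(f"unknown goal: {goal!r}")


print(suggest_chart("composition", n_categories=10))  # bar chart
```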

With data visualizations becoming more and more critical to businesses’ analytical success, many tools have emerged to help users visualize their data in a cohesive and interactive way. One of the most popular is the BI dashboard. These visual tools provide a centralized view of various graphs and charts that paint a bigger picture of a topic. We will discuss the power of dashboards for efficient data interpretation in the next portion of this post. If you want to learn more about different types of graphs and charts, take a look at our complete guide on the topic.

4) Start interpreting

After the tedious preparation work, you can start extracting conclusions from your data. As mentioned throughout the post, the way you interpret the data will depend on the methods you initially decided to use. If you had initial research questions or hypotheses, look for ways to test their validity. If you are going into the data with no defined hypothesis, start looking for relationships and patterns that will allow you to extract valuable conclusions from the information.
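One simple way to test a hypothesis such as "customers who used a discount code spend more per order" is a permutation test, sketched below. This is a swapped-in technique chosen for illustration, not one the text prescribes: it shuffles the group labels many times and reports how often a difference in means at least as large as the observed one appears by chance. The data and the fixed seed are hypothetical.

```python
import random
from statistics import mean


def permutation_test(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference in means.

    Returns an approximate p-value: the share of random relabelings whose
    mean difference is at least as extreme as the observed one.
    """
    rng = random.Random(seed)  # fixed seed makes the result reproducible
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed:
            extreme += 1
    # Add-one correction keeps the estimate away from an impossible p = 0
    return (extreme + 1) / (n_permutations + 1)


# Hypothetical order values, for illustration only
with_discount = [52, 61, 58, 70, 66, 59]
without_discount = [48, 50, 55, 47, 53, 51]
print(permutation_test(with_discount, without_discount))
```

A small p-value suggests the difference is unlikely to be pure chance under random labeling; it does not, on its own, show that the discount caused the higher spending.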

During the interpretation process, stay curious and creative, dig into the data, and determine whether there are other critical questions that should be asked. If new questions arise, assess whether you have the information needed to answer them. Being able to tell when you need to dedicate more time and resources to the research is a very important step. Whether you are studying customer behavior or a new cancer treatment, the findings from your analysis may dictate important decisions in the future, so taking the time to really assess the information is key. Data interpretation software is very useful for this purpose.

5) Keep your interpretation objective

As mentioned above, objectivity is one of the most important data interpretation skills, but also one of the hardest. As the person closest to the investigation, you can easily become subjective when looking for answers in the data. A good way to stay objective is to show the information related to the study to other people, for example, research partners or even the people who will use your findings once they are done. This can help you avoid confirmation bias and reliability issues in your interpretation.

Remember, a visualization tool such as a modern dashboard makes the interpretation process easier and more efficient, as the data can be navigated and manipulated in an organized way. Using a dashboard to present your findings to a specific audience will also make the information easier to understand and the presentation more engaging, thanks to the visual nature of these tools.

6) Mark your findings and draw conclusions

Findings are the observations you extract from your data. They are the facts that will help you draw deeper conclusions about your research. For example, findings can be trends and patterns you found during your interpretation process. To put your findings into perspective, you can compare them with other resources that use similar methods and use those as benchmarks.

Reflect on your own thinking and reasoning and be aware of the many pitfalls data analysis and interpretation carry—correlation versus causation, subjective bias, false information, inaccurate data, etc. Once you are comfortable with interpreting the data, you will be ready to develop conclusions, see if your initial questions were answered, and suggest recommendations based on them.

Interpretation of Data: The Use of Dashboards Bridging The Gap

As we have seen, quantitative and qualitative methods are distinct types of data interpretation and analysis. Both offer a varying degree of return on investment (ROI) regarding data investigation, testing, and decision-making. But how do you mix the two and prevent a data disconnect? The answer is professional data dashboards.

In recent years, dashboards have become invaluable tools to visualize and interpret data. They offer a centralized, interactive view of data and provide the ideal environment for exploring it and extracting valuable conclusions. They bridge the gap between quantitative and qualitative information by unifying all the data in one place with the help of stunning visuals.

Not only that, but these powerful tools offer a large list of benefits, and we will discuss some of them below.

1) Connecting and blending data. With today’s pace of innovation, it is no longer feasible (nor desirable) to keep bulk data in one central location. As businesses continue to globalize and borders continue to dissolve, it will become increasingly important for them to be able to run diverse data analyses without the limitations of location. Data dashboards decentralize data without compromising on speed, while blending both quantitative and qualitative data. Whether you want to measure customer trends or organizational performance, you can now do both without having to choose one.

2) Mobile Data. Related to the notion of “connected and blended data” is that of mobile data. In today’s digital world, employees are spending less time at their desks while increasing their output. This is possible because mobile analytics tools are no longer standalone: today, mobile analysis applications integrate seamlessly with everyday business tools. In turn, both quantitative and qualitative data are available on demand where they’re needed, when they’re needed, and how they’re needed via interactive online dashboards.

3) Visualization. Data dashboards bridge the gap between qualitative and quantitative data interpretation methods through the science of visualization. Dashboard solutions come “out of the box” and are well-equipped to create easy-to-understand data presentations. Modern online data visualization tools provide a variety of color and filter patterns, encourage user interaction, and are engineered to help make future trends more predictable. All of these visual characteristics make for an easy transition between data methods; you only need to find the right types of data visualization to tell your data story in the best way possible.

4) Collaboration. Whether in a business environment or a research project, collaboration is key in data interpretation and analysis. Dashboards are online tools that can be easily shared through a password-protected URL or automated email. Through them, users can collaborate and communicate around the data efficiently, eliminating the need for endless files with lost updates. Tools such as datapine offer real-time updates, meaning your dashboards will update on their own as soon as new information is available.

Examples Of Data Interpretation In Business

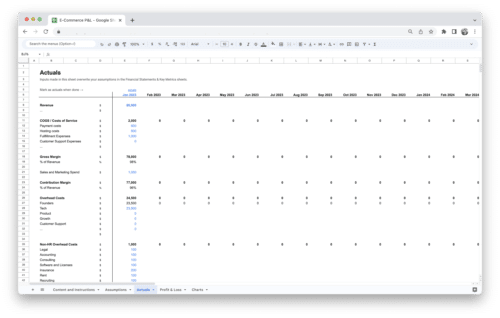

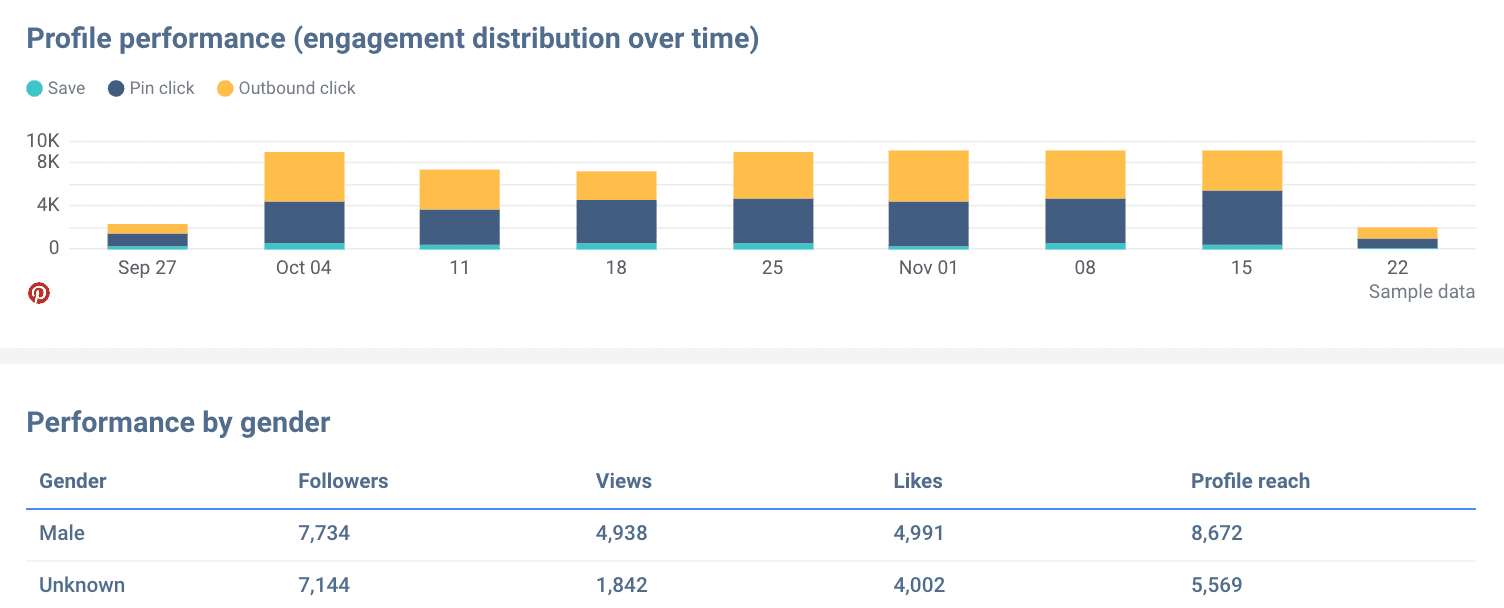

To give you an idea of how a dashboard can bridge quantitative and qualitative analysis and, thanks to visualization, help you understand how to interpret research data, below we discuss three valuable examples that put their value into perspective.

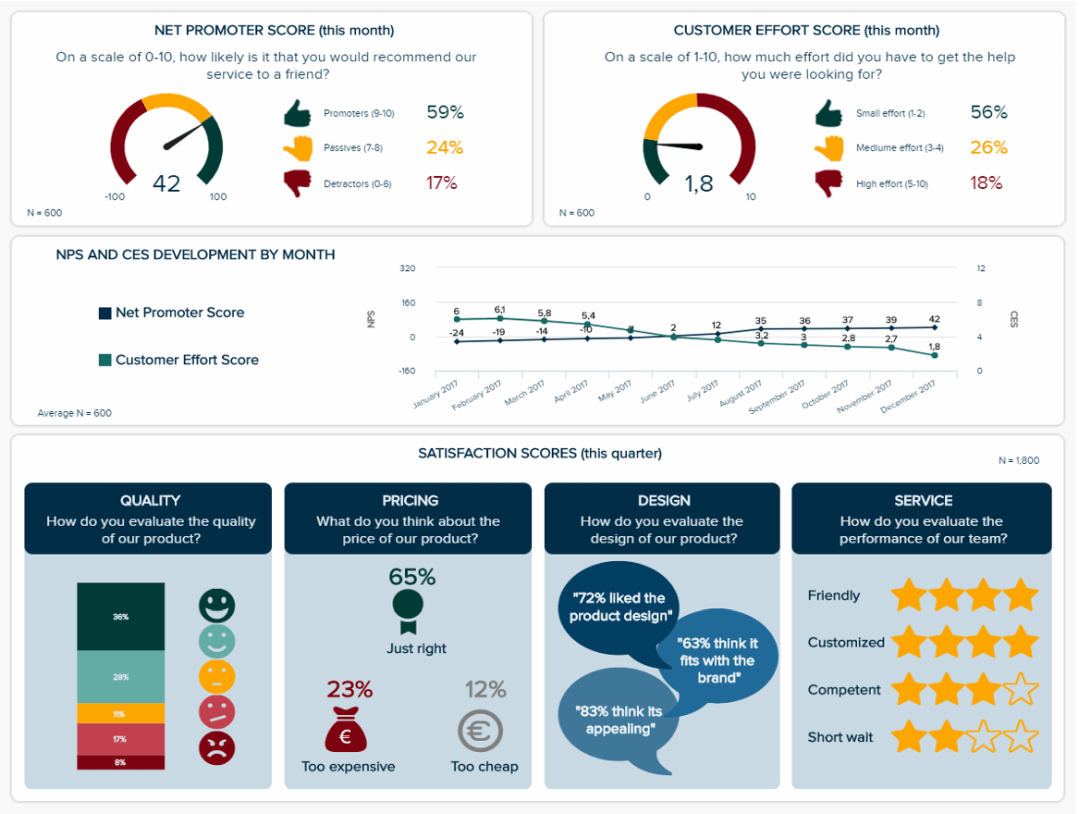

1. Customer Satisfaction Dashboard

This market research dashboard brings together both qualitative and quantitative data that are knowledgeably analyzed and visualized in a meaningful way that everyone can understand, thus empowering any viewer to interpret it. Let’s explore it below.


The value of this template lies in its highly visual nature. As mentioned earlier, visuals make the interpretation process easier and more efficient. Representing critical pieces of data with colorful, interactive icons and graphs makes it possible to uncover insights at a glance. For example, the green, yellow, and red colors on the charts for the NPS and the customer effort score quickly show that most respondents are satisfied with this brand. The line chart below supports this conclusion, as both metrics developed positively over the past 6 months.

The bottom part of the template provides visually stunning representations of different satisfaction scores for quality, pricing, design, and service. By looking at these, we can conclude that, overall, customers are satisfied with this company in most areas.
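For readers who want to see what sits behind an NPS chart, here is a minimal sketch of the commonly used Net Promoter Score definition: respondents rate "How likely are you to recommend us?" from 0 to 10, promoters answer 9-10, detractors 0-6, and NPS is the percentage of promoters minus the percentage of detractors. The ratings are hypothetical, and the dashboard above may compute or segment its score differently.

```python
def net_promoter_score(ratings):
    """Net Promoter Score from 0-10 'How likely are you to recommend us?' ratings.

    Promoters answer 9-10, detractors 0-6; passives (7-8) count only in the
    total. The result ranges from -100 (all detractors) to +100 (all promoters).
    """
    if not ratings:
        raise ValueError("need at least one rating")
    if any(not 0 <= r <= 10 for r in ratings):
        raise ValueError("ratings must be on a 0-10 scale")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100 * (promoters - detractors) / len(ratings)


# Hypothetical survey answers, for illustration only
answers = [10, 9, 8, 7, 6, 3, 10, 9, 9, 5]
print(net_promoter_score(answers))  # 20.0
```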

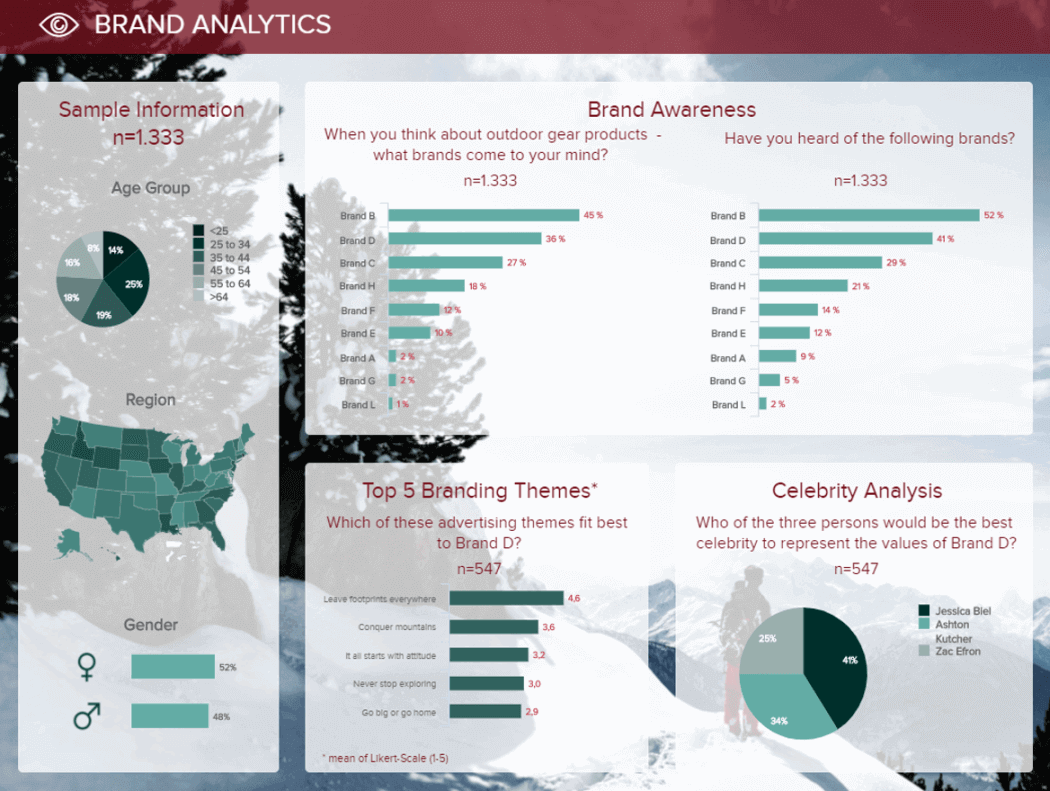

2. Brand Analysis Dashboard

Next in our list of data interpretation examples is a template that shows the answers to a survey on awareness of Brand D. The sample size is listed at the top to put the data into perspective, and the answers are represented using interactive charts and graphs.

When interpreting information, context is key to understanding it correctly. For that reason, the dashboard starts by offering insights into the demographics of the surveyed audience. In general, we can see that ages and genders are diverse. Therefore, we can conclude that these brands are not targeting customers from a specific demographic, an important aspect for putting the survey answers into perspective.

Looking at the awareness portion, we can see that brand B is the most popular, with brand D coming second on both questions. This means brand D is not doing badly, but there is still room for improvement compared to brand B. To see where brand D could improve, the researcher could go to the bottom part of the dashboard and consult the answers on branding themes and celebrity analysis. These are important, as they give clear insight into the people and messages the audience associates with brand D. This is an opportunity to leverage these topics in different ways and achieve growth and success.

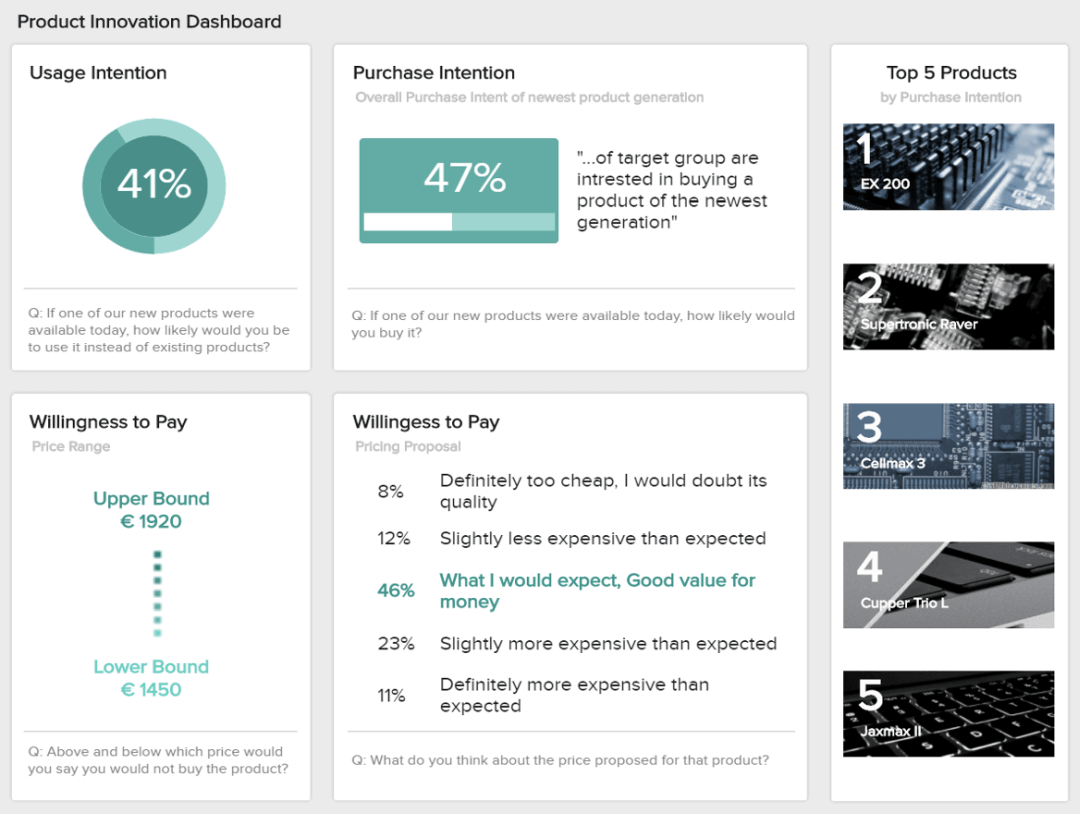

3. Product Innovation Dashboard

Our third and last dashboard example shows the answers to a survey on product innovation for a technology company. Just like the previous templates, the interactive and visual nature of the dashboard makes it the perfect tool to interpret data efficiently and effectively.

Starting from right to left, we first get a list of the top 5 products by purchase intention. This information lets us understand if the product being evaluated resembles what the audience already intends to purchase. It is a great starting point to see how customers would respond to the new product. This information can be complemented with other key metrics displayed in the dashboard. For example, the usage and purchase intention track how the market would receive the product and if they would purchase it, respectively. Interpreting these values as positive or negative will depend on the company and its expectations regarding the survey.

Complementing these metrics is the willingness to pay, arguably one of the most important metrics for defining pricing strategies. Here, we can see that most respondents think the suggested price is good value for money. Therefore, we can interpret that the product would likely sell at that price.

To see more data analysis and interpretation examples for different industries and functions, visit our library of business dashboards.

To Conclude…

As we reach the end of this insightful post about data interpretation and analysis, we hope you have a clear understanding of the topic. We've covered the definition and given some examples and methods to perform a successful interpretation process.

The importance of data interpretation is undeniable. Dashboards not only bridge the information gap between traditional data interpretation methods and technology, but they can help remedy and prevent the major pitfalls of the process. As a digital age solution, they combine the best of the past and the present to allow for informed decision-making with maximum data interpretation ROI.

To start visualizing your insights in a meaningful and actionable way, test our online reporting software for free with our 14-day trial!


Data Interpretation: Definition and Steps with Examples

A good data interpretation process is key to making your data usable. It will help you make sure you’re drawing the correct conclusions and acting on your information.

Data is everywhere in the modern world, and organizations fall into two groups: those drowning in data or not using it appropriately, and those benefiting from it.

In this blog, you will learn the definition of data interpretation and its primary steps and examples.

What is Data Interpretation

Data interpretation is the process of reviewing data and arriving at relevant conclusions using various analytical research methods. Data analysis assists researchers in categorizing, manipulating, and summarizing data to answer critical questions.


In business terms, data interpretation is the execution of various processes that analyze and revise data to gain insights and recognize emerging patterns and behaviors. These conclusions help you, as a manager, make informed decisions based on numbers, with all of the facts at your disposal.

Importance of Data Interpretation

Raw data is useless unless it’s interpreted. Data interpretation is important to businesses and people. The collected data helps make informed decisions.

Make better decisions

Any decision is based on the information available at the time. People used to think that many diseases were caused by bad blood, one of the four humors, so the solution was to get rid of the bad blood. We now know that things like viruses, bacteria, and immune responses can cause illness, and we can act accordingly.

In the same way, when you know how to collect and understand data well, you can make better decisions. You can confidently choose a path for your organization or even your life instead of working with assumptions.

The most important thing is to follow a transparent process to reduce mistakes and decision fatigue.

Find trends and take action

Another practical use of data interpretation is to get ahead of trends before they reach their peak. Some people have made a living by researching industries, spotting trends, and then making big bets on them.


With the proper data interpretations and a little bit of work, you can catch the start of trends and use them to help your business or yourself grow.

Better resource allocation

The last benefit of data interpretation we will discuss is the ability to use people, tools, money, etc., more efficiently. For example, if strong data interpretation shows that a market is underserved, you can go after it with more energy and a better chance of winning.

In the same way, you may find out that a market you thought was a good fit is actually bad. This could be because the market is too big for your products to serve, there is too much competition, or something else.

Either way, you can reallocate the resources you need faster and more effectively to get better results.

What are the steps in interpreting data?

Here are some steps to interpreting data correctly.

Gather the data

The very first step in data interpretation is gathering all relevant data and then visualizing it in a bar chart, graph, or pie chart. The aim of this step is to analyze the data accurately and without bias. Now is the time to recall how you conducted your research.

These two questions will help you understand the data better:

- Were there any flaws or changes that occurred during the data collection process?

- Have you saved any observational notes or indicators?

You can proceed to the next stage when you have all of your data.

Develop your discoveries

This is a summary of your findings. Here, you thoroughly examine the data to identify trends, patterns, or behavior. If you are researching a group of people using a sample population, this is the section where you examine behavioral patterns. You can compare these deductions to previous data sets, similar data sets, or general hypotheses in your industry. This step’s goal is to compare these deductions before drawing any conclusions.
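Comparing your deductions with a previous data set can be as simple as computing the percentage change for each metric. The sketch below uses a hypothetical `compare_to_baseline` helper and made-up metric names; it returns `None` where no meaningful comparison exists rather than guessing.

```python
def compare_to_baseline(current, baseline):
    """Percentage change per metric relative to a baseline data set.

    current, baseline: dicts mapping metric name -> numeric value.
    Metrics missing from the baseline, or with a zero baseline, map to None.
    """
    changes = {}
    for metric, value in current.items():
        base = baseline.get(metric)
        if base is None or base == 0:
            changes[metric] = None  # nothing meaningful to compare against
        else:
            changes[metric] = round(100 * (value - base) / base, 1)
    return changes


# Hypothetical metrics for this period and the previous one
this_period = {"signups": 120, "churn_rate": 0.04, "nps": 35}
last_period = {"signups": 100, "churn_rate": 0.05}
print(compare_to_baseline(this_period, last_period))
```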

Draw conclusions

After you’ve developed your findings from your data sets, you can draw conclusions based on the trends you discovered. Your findings should address the questions that prompted your research. If they do not, ask why; doing so may lead to additional research or questions.


Give recommendations

This stage brings the data interpretation process to a close. Every research conclusion must include a recommendation. Because recommendations summarize your findings and conclusions, they should be brief. There are only two options for recommendations: you can either recommend a course of action or suggest additional research.

Data interpretation examples

Here are two examples of data interpretations to help you understand it better:

Let’s say your users fall into four age groups. A company can then see which age group likes its content or product and, based on bar charts or pie charts, develop a marketing strategy to reach uninvolved groups or an outreach strategy to grow its core user base.
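The numbers behind such a bar or pie chart are just the share of users in each group. Here is a minimal sketch, assuming you have each user's age; the four bucket boundaries, the helper names, and the ages are hypothetical.

```python
from collections import Counter


def age_group(age):
    """Bucket an age into one of four hypothetical groups."""
    if age < 25:
        return "<25"
    if age < 35:
        return "25-34"
    if age < 50:
        return "35-49"
    return "50+"


def group_shares(ages):
    """Percentage of users in each age group, largest group first."""
    if not ages:
        raise ValueError("need at least one age")
    counts = Counter(age_group(a) for a in ages)
    return {group: round(100 * n / len(ages), 1) for group, n in counts.most_common()}


# Hypothetical user ages, for illustration only
shares = group_shares([19, 23, 31, 45, 52, 28, 22, 61])
print(shares)
print("least engaged group:", min(shares, key=shares.get))
```

The largest share points to the core user base, while the smallest hints at a group an outreach strategy might target.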

Another example of data analysis is businesses’ use of a recruitment CRM. They use it to find candidates, track their progress, and manage their entire hiring process, in order to determine how they can better automate their workflow.

Overall, data interpretation is an essential factor in data-driven decision-making. It should be performed on a regular basis as part of an iterative interpretation process. Investors, developers, and sales and acquisition professionals can all benefit from routine data interpretation. It is what you do with those insights that determines the success of your business.

Contact QuestionPro experts if you need assistance conducting research or creating a data analysis. We can walk you through the process and help you make the most of your data.


What is Data Interpretation? Tools, Techniques, Examples

By Hady ElHady

July 14, 2023

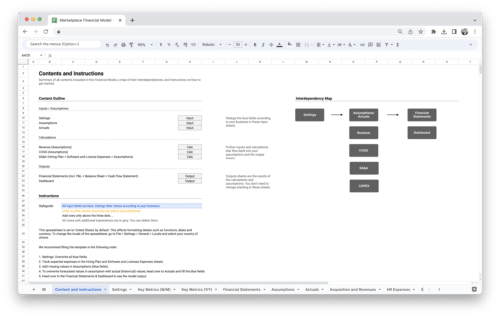


In today’s data-driven world, the ability to interpret and extract valuable insights from data is crucial for making informed decisions. Data interpretation involves analyzing and making sense of data to uncover patterns, relationships, and trends that can guide strategic actions.

Whether you’re a business professional, researcher, or data enthusiast, this guide will equip you with the knowledge and techniques to master the art of data interpretation.

What is Data Interpretation?

Data interpretation is the process of analyzing and making sense of data to extract valuable insights and draw meaningful conclusions. It involves examining patterns, relationships, and trends within the data to uncover actionable information. Data interpretation goes beyond merely collecting and organizing data; it is about extracting knowledge and deriving meaningful implications from the data at hand.

Why is Data Interpretation Important?

In today’s data-driven world, data interpretation holds immense importance across various industries and domains. Here are some key reasons why data interpretation is crucial:

- Informed Decision-Making: Data interpretation enables informed decision-making by providing evidence-based insights. It helps individuals and organizations make choices supported by data-driven evidence, rather than relying on intuition or assumptions.

- Identifying Opportunities and Risks: Effective data interpretation helps identify opportunities for growth and innovation. By analyzing patterns and trends within the data, organizations can uncover new market segments, consumer preferences, and emerging trends. Simultaneously, data interpretation also helps identify potential risks and challenges that need to be addressed proactively.

- Optimizing Performance: By analyzing data and extracting insights, organizations can identify areas for improvement and optimize their performance. Data interpretation allows for identifying bottlenecks, inefficiencies, and areas of optimization across various processes, such as supply chain management, production, and customer service.

- Enhancing Customer Experience: Data interpretation plays a vital role in understanding customer behavior and preferences. By analyzing customer data, organizations can personalize their offerings, improve customer experience, and tailor marketing strategies to target specific customer segments effectively.

- Predictive Analytics and Forecasting: Data interpretation enables predictive analytics and forecasting, allowing organizations to anticipate future trends and make strategic plans accordingly. By analyzing historical data patterns, organizations can make predictions and forecast future outcomes, facilitating proactive decision-making and risk mitigation.

- Evidence-Based Research and Policy Making: In fields such as healthcare, social sciences, and public policy, data interpretation plays a crucial role in conducting evidence-based research and policy-making. By analyzing relevant data, researchers and policymakers can identify trends, assess the effectiveness of interventions, and make informed decisions that impact society positively.

- Competitive Advantage: Organizations that excel in data interpretation gain a competitive edge. By leveraging data insights, organizations can make informed strategic decisions, innovate faster, and respond promptly to market changes. This enables them to stay ahead of their competitors in today’s fast-paced business environment.

In summary, data interpretation is essential for leveraging the power of data and transforming it into actionable insights. It enables organizations and individuals to make informed decisions, identify opportunities and risks, optimize performance, enhance customer experience, predict future trends, and gain a competitive advantage in their respective domains.

The Role of Data Interpretation in Decision-Making Processes

Data interpretation plays a crucial role in decision-making processes across organizations and industries. It empowers decision-makers with valuable insights and helps guide their actions. Here are some key roles that data interpretation fulfills in decision-making:

- Informing Strategic Planning: Data interpretation provides decision-makers with a comprehensive understanding of the current state of affairs and the factors influencing their organization or industry. By analyzing relevant data, decision-makers can assess market trends, customer preferences, and competitive landscapes. These insights inform the strategic planning process, guiding the formulation of goals, objectives, and action plans.

- Identifying Problem Areas and Opportunities: Effective data interpretation helps identify problem areas and opportunities for improvement. By analyzing data patterns and trends, decision-makers can identify bottlenecks, inefficiencies, or underutilized resources. This enables them to address challenges and capitalize on opportunities, enhancing overall performance and competitiveness.

- Risk Assessment and Mitigation: Data interpretation allows decision-makers to assess and mitigate risks. By analyzing historical data, market trends, and external factors, decision-makers can identify potential risks and vulnerabilities. This understanding helps in developing risk management strategies and contingency plans to mitigate the impact of risks and uncertainties.

- Facilitating Evidence-Based Decision-Making: Data interpretation enables evidence-based decision-making by providing objective insights and factual evidence. Instead of relying solely on intuition or subjective opinions, decision-makers can base their choices on concrete data-driven evidence. This leads to more accurate and reliable decision-making, reducing the likelihood of biases or errors.

- Measuring and Evaluating Performance: Data interpretation helps decision-makers measure and evaluate the performance of various aspects of their organization. By analyzing key performance indicators (KPIs) and relevant metrics, decision-makers can track progress towards goals, assess the effectiveness of strategies and initiatives, and identify areas for improvement. This data-driven evaluation enables evidence-based adjustments and ensures that resources are allocated optimally.

- Enabling Predictive Analytics and Forecasting: Data interpretation plays a critical role in predictive analytics and forecasting. Decision-makers can analyze historical data patterns to make predictions and forecast future trends. This capability empowers organizations to anticipate market changes, customer behavior, and emerging opportunities. By making informed decisions based on predictive insights, decision-makers can stay ahead of the curve and proactively respond to future developments.

- Supporting Continuous Improvement: Data interpretation facilitates a culture of continuous improvement within organizations. By regularly analyzing data, decision-makers can monitor performance, identify areas for enhancement, and implement data-driven improvements. This iterative process of analyzing data, making adjustments, and measuring outcomes enables organizations to continuously refine their strategies and operations.

In summary, data interpretation is integral to effective decision-making. It informs strategic planning, identifies problem areas and opportunities, assesses and mitigates risks, facilitates evidence-based decision-making, measures performance, enables predictive analytics, and supports continuous improvement. By harnessing the power of data interpretation, decision-makers can make well-informed, data-driven decisions that lead to improved outcomes and success in their endeavors.
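To make the idea of forecasting from historical patterns concrete, here is a minimal sketch of the simplest possible approach: fitting a straight-line trend by ordinary least squares and extrapolating it. This is a swapped-in illustration, not a recommended forecasting method; real forecasts usually also account for seasonality and report uncertainty. The sales figures are hypothetical.

```python
def linear_trend_forecast(values, periods_ahead=1):
    """Fit y = a + b*t by ordinary least squares (t = 0, 1, 2, ...)
    and extrapolate the line periods_ahead steps past the last point."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    t_mean = (n - 1) / 2  # mean of 0, 1, ..., n-1
    y_mean = sum(values) / n
    sxy = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(values))
    sxx = sum((t - t_mean) ** 2 for t in range(n))
    slope = sxy / sxx
    intercept = y_mean - slope * t_mean
    return [intercept + slope * (n - 1 + k) for k in range(1, periods_ahead + 1)]


# Hypothetical monthly sales, for illustration only
monthly_sales = [120, 132, 128, 141, 150, 158]
print([round(v, 1) for v in linear_trend_forecast(monthly_sales, periods_ahead=3)])
```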

Understanding Data

Before delving into data interpretation, it’s essential to understand the fundamentals of data. Data can be categorized into qualitative and quantitative types, each requiring different analysis methods. Qualitative data represents non-numerical information, such as opinions or descriptions, while quantitative data consists of measurable quantities.

Types of Data

- Qualitative data: Includes observations, interview transcripts, open-ended survey responses, and other descriptive or subjective information.

- Quantitative data: Comprises numerical data collected through measurements, counts, or ratings.

Data Collection Methods

To perform effective data interpretation, you need to be aware of the various methods used to collect data. These methods can include surveys, experiments, observations, interviews, and more. Proper data collection techniques ensure the accuracy and reliability of the data.

Data Sources and Reliability

When working with data, it’s important to consider the source and reliability of the data. Reliable sources include official statistics, reputable research studies, and well-designed surveys. Assessing the credibility of the data source helps you determine its accuracy and validity.

Data Preprocessing and Cleaning

Before diving into data interpretation, it’s crucial to preprocess and clean the data to remove any inconsistencies or errors. This step involves identifying missing values, outliers, and data inconsistencies, as well as handling them appropriately. Data preprocessing ensures that the data is in a suitable format for analysis.
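As a minimal sketch of this step, the Python example below drops missing entries (represented here as `None`) and flags outliers with the common 1.5 x IQR rule (Tukey's fences). The function name `clean`, the `None` convention, and the sample values are illustrative assumptions. In practice, missing values might be imputed rather than dropped, and flagged outliers should be investigated before they are discarded.

```python
import statistics

def clean(values):
    """Drop missing entries (None), then split the remaining values into
    kept values and outliers using Tukey's fences (1.5 x IQR)."""
    present = [v for v in values if v is not None]
    q1, _, q3 = statistics.quantiles(present, n=4)  # default "exclusive" method
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    kept = [v for v in present if low <= v <= high]
    outliers = [v for v in present if v < low or v > high]
    return kept, outliers

# Hypothetical daily order counts with two missing days and one suspicious spike.
raw = [12, 15, None, 14, 13, 16, 98, 15, None, 14]
kept, outliers = clean(raw)
print(kept)      # [12, 15, 14, 13, 16, 15, 14]
print(outliers)  # [98]
```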

Exploratory Data Analysis: Unveiling Insights from Data

Exploratory Data Analysis (EDA) is a vital step in data interpretation, helping you understand the data’s characteristics and uncover initial insights. By employing various graphical and statistical techniques, you can gain a deeper understanding of the data patterns and relationships.

Univariate Analysis

Univariate analysis focuses on examining individual variables in isolation, revealing their distribution and basic characteristics. Here are some common techniques used in univariate analysis:

- Histograms: Graphical representations of the frequency distribution of a variable. Histograms display data in bins or intervals, providing a visual depiction of the data’s distribution.

- Box plots: Box plots summarize the distribution of a variable by displaying its quartiles, median, and any potential outliers. They offer a concise overview of the data’s central tendency and spread.

- Frequency distributions: Tabular representations that show the number of occurrences or frequencies of different values or ranges of a variable.
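A frequency distribution, which also gives the bar heights of a histogram, can be computed by assigning each value to a fixed-width bin and counting. The sketch below assumes hypothetical customer ages and a bin width of 10; the helper name `binned_frequencies` is illustrative, and a plotting library such as Matplotlib would normally draw the chart itself.

```python
from collections import Counter

def binned_frequencies(values, bin_width):
    """Count how many values fall into each bin [start, start + bin_width)."""
    counts = Counter((v // bin_width) * bin_width for v in values)
    return dict(sorted(counts.items()))

ages = [23, 27, 31, 35, 35, 38, 41, 44, 47, 52, 58, 61]  # hypothetical data
width = 10
freq = binned_frequencies(ages, width)
for start, count in freq.items():
    # Crude text histogram: one '#' per observation in the bin.
    print(f"{start}-{start + width - 1}: {'#' * count}")
```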

Bivariate Analysis

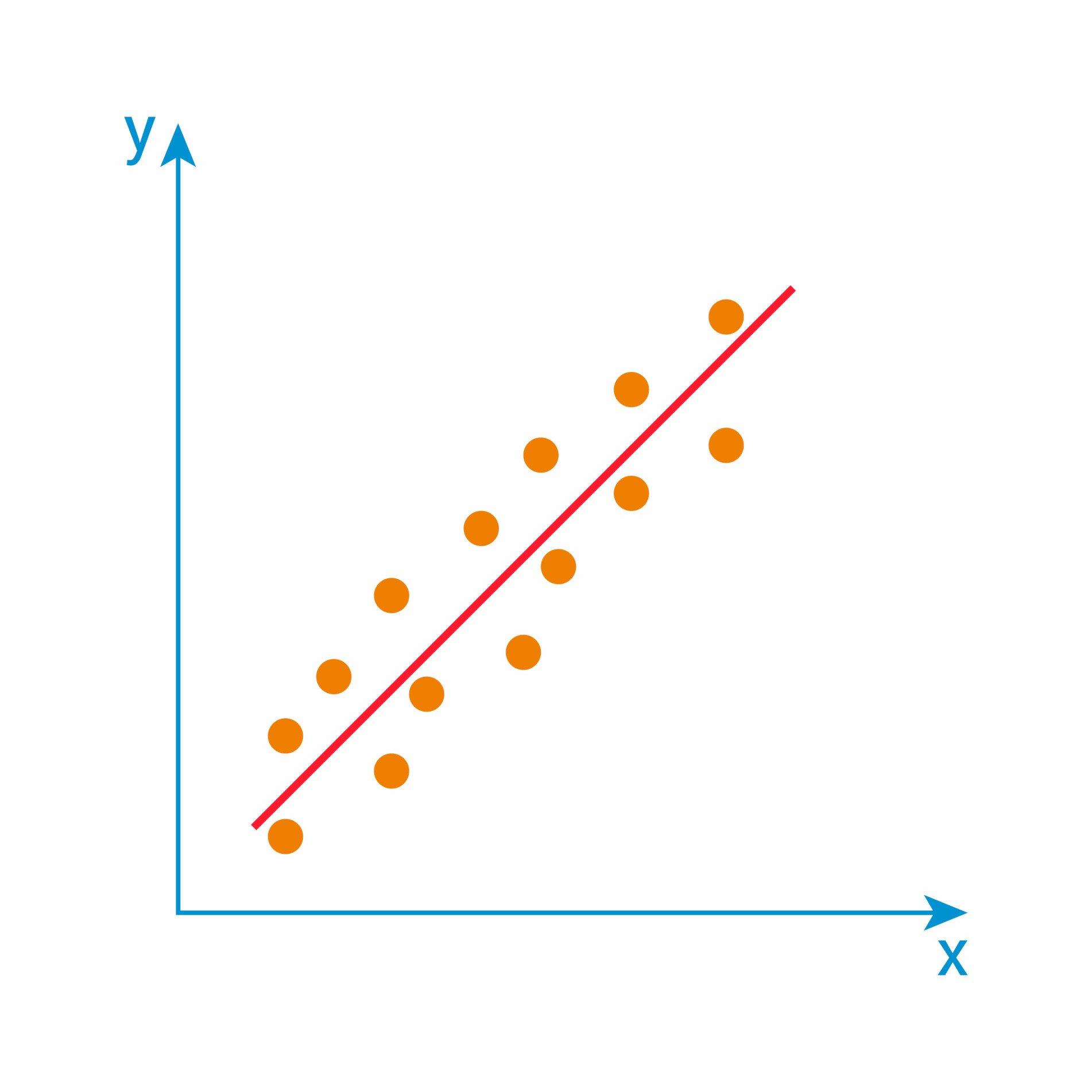

Bivariate analysis explores the relationship between two variables, examining how they interact and influence each other. By visualizing and analyzing the connections between variables, you can identify correlations and patterns. Some common techniques for bivariate analysis include:

- Scatter plots: Graphical representations that display the relationship between two continuous variables. Scatter plots help identify potential linear or nonlinear associations between the variables.

- Correlation analysis: Statistical measure of the strength and direction of the relationship between two variables. Correlation coefficients, such as Pearson’s correlation coefficient, range from -1 to 1, with higher absolute values indicating stronger correlations.

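- Heatmaps: Visual representations that use color intensity to encode the values in a matrix, such as the counts in a cross-tabulation of two categorical variables or the pairwise correlation coefficients among several numeric variables. Heatmaps help identify patterns and associations between variables.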
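Pearson's correlation coefficient can be computed directly from its definition: the covariance of the two variables divided by the product of their standard deviations. The sketch below uses hypothetical advertising-spend and sales figures; the function name `pearson` is illustrative (Python 3.10 and later also ship `statistics.correlation`).

```python
import math

def pearson(x, y):
    """Pearson's r: covariance of x and y divided by the product of their
    standard deviations (the 1/n or 1/(n-1) factors cancel out)."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

ad_spend = [1, 2, 3, 4, 5]   # hypothetical
sales = [2, 4, 5, 4, 5]      # hypothetical
r = pearson(ad_spend, sales)
print(round(r, 3))  # 0.775: a fairly strong positive linear association
```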

Multivariate Analysis

Multivariate analysis involves the examination of three or more variables simultaneously. This analysis technique provides a deeper understanding of complex relationships and interactions among multiple variables. Some common methods used in multivariate analysis include:

- Dimensionality reduction techniques: Approaches like Principal Component Analysis (PCA) or t-Distributed Stochastic Neighbor Embedding (t-SNE) reduce high-dimensional data into lower dimensions, simplifying analysis and visualization.

- Cluster analysis: Grouping data points based on similarities or dissimilarities. Cluster analysis helps identify patterns or subgroups within the data.
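Cluster analysis covers many algorithms; k-means is one of the most widely used. The minimal sketch below implements Lloyd's algorithm for k-means on hypothetical two-dimensional points (for example, visits per month and average basket size) with hand-picked starting centroids. The function names and data are illustrative assumptions. Real analyses usually rely on a library implementation (such as scikit-learn's `KMeans`), scale the features first, and try several starting points, because k-means can converge to different solutions depending on initialization.

```python
import math

def assign(points, centroids):
    """Index of the nearest centroid (Euclidean distance) for each point."""
    return [min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            for p in points]

def kmeans(points, centroids, max_iter=100):
    """Lloyd's algorithm: alternate between assigning points to the nearest
    centroid and moving each centroid to the mean of its points."""
    for _ in range(max_iter):
        labels = assign(points, centroids)
        updated = []
        for k, c in enumerate(centroids):
            members = [p for p, lab in zip(points, labels) if lab == k]
            if members:
                updated.append(tuple(sum(dim) / len(members) for dim in zip(*members)))
            else:
                updated.append(c)  # leave an empty cluster's centroid in place
        if updated == centroids:   # converged: assignments no longer change
            break
        centroids = updated
    return centroids, assign(points, centroids)

points = [(1, 1), (1, 2), (2, 1), (8, 8), (9, 8), (8, 9)]  # hypothetical
centroids, labels = kmeans(points, centroids=[(1, 1), (8, 8)])
print(labels)  # [0, 0, 0, 1, 1, 1]
```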

Descriptive Statistics: Understanding Data’s Central Tendency and Variability

Descriptive statistics provides a summary of the main features of a dataset, focusing on measures of central tendency and variability. These statistics offer a comprehensive overview of the data’s characteristics and aid in understanding its distribution and spread.

Measures of Central Tendency

Measures of central tendency describe the central or average value around which the data tends to cluster. Here are some commonly used measures of central tendency:

- Mean: The arithmetic average of a dataset, calculated by summing all values and dividing by the total number of observations.

- Median: The middle value in a dataset when arranged in ascending or descending order. The median is less sensitive to extreme values than the mean.

- Mode: The most frequently occurring value in a dataset.
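Python's standard `statistics` module implements all three measures. In the hypothetical order values below, one unusually large order pulls the mean well above the median, which illustrates why the median is often preferred for skewed data.

```python
import statistics

order_values = [20, 22, 22, 25, 30, 31, 250]  # hypothetical, with one extreme order
avg = statistics.mean(order_values)           # 400 / 7
mid = statistics.median(order_values)         # middle of the 7 sorted values
most_common = statistics.mode(order_values)   # 22 appears twice
print(round(avg, 2), mid, most_common)  # 57.14 25 22
```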

Measures of Dispersion

Measures of dispersion quantify the spread or variability of the data points. Understanding variability is essential for assessing the data’s reliability and drawing meaningful conclusions. Common measures of dispersion include:

- Range: The difference between the maximum and minimum values in a dataset, providing a simple measure of spread.

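- Variance: The average squared deviation from the mean, measuring the dispersion of data points around the mean. When a sample is used to estimate the variance of a population, the sum of squared deviations is usually divided by n - 1 rather than n.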

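- Standard Deviation: The square root of the variance, expressed in the same units as the data. It describes the typical distance between data points and the mean, although it is not exactly the average distance (that is a different measure, the mean absolute deviation).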
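The sketch below computes each measure on a small hypothetical dataset with the standard `statistics` module. It shows both the population variance (dividing by n) and the sample variance (dividing by n - 1), and confirms that the standard deviation is the square root of the variance.

```python
import math
import statistics

data = [2, 4, 4, 4, 5, 5, 7, 9]  # hypothetical measurements, mean = 5

data_range = max(data) - min(data)       # 9 - 2 = 7
pop_var = statistics.pvariance(data)     # sum of squared deviations / n = 32 / 8
sample_var = statistics.variance(data)   # sum of squared deviations / (n - 1) = 32 / 7
pop_sd = statistics.pstdev(data)         # square root of the population variance

print(data_range, float(pop_var), round(sample_var, 3), pop_sd)  # 7 4.0 4.571 2.0
assert math.isclose(pop_sd, math.sqrt(pop_var))
```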

Percentiles and Quartiles

Percentiles and quartiles divide the dataset into equal parts, allowing you to understand the distribution of values within specific ranges. They provide insights into the relative position of individual data points in comparison to the entire dataset.

- Percentiles: Divisions of data into 100 equal parts, indicating the percentage of values that fall below a given value. The median corresponds to the 50th percentile.

- Quartiles: Three cut points that divide the ordered data into four equal parts: the first quartile (Q1), the median (Q2), and the third quartile (Q3). The interquartile range (IQR = Q3 - Q1) measures the spread of the middle 50% of the data.
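Quartiles and percentiles can be computed with `statistics.quantiles`. Statistical packages use several slightly different interpolation conventions, so results can differ a little between tools; the sketch below assumes the `"inclusive"` method and hypothetical exam scores.

```python
import statistics

scores = [55, 61, 64, 68, 70, 72, 75, 79, 83, 90, 96]  # hypothetical, already sorted

q1, q2, q3 = statistics.quantiles(scores, n=4, method="inclusive")
iqr = q3 - q1
deciles = statistics.quantiles(scores, n=10, method="inclusive")
p90 = deciles[-1]  # the last decile cut point is the 90th percentile

print(q1, q2, q3, iqr, p90)  # 66.0 72.0 81.0 15.0 90.0
```

Note that Q2 equals the median (72), matching the definition of the median as the 50th percentile.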

Skewness and Kurtosis

Skewness and kurtosis describe the shape of a distribution. They provide insights into its symmetry, tail heaviness, and peakedness.

- Skewness: Measures the asymmetry of the data distribution. Positive skewness indicates a longer tail on the right side, while negative skewness suggests a longer tail on the left side.

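- Kurtosis: Measures the tail heaviness of the distribution relative to a normal distribution, which is often discussed in terms of peakedness or flatness. Kurtosis is commonly reported as excess kurtosis (kurtosis minus 3, so a normal distribution scores 0): positive excess kurtosis indicates heavier tails and usually a sharper peak, while negative excess kurtosis suggests lighter tails and a flatter peak.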
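Both measures can be computed from the central moments of the data. The sketch below uses the simple moment-based formulas, skewness = m3 / m2^1.5 and excess kurtosis = m4 / m2^2 - 3, where mk is the average of the k-th power of deviations from the mean. Libraries such as SciPy (`scipy.stats.skew`, `scipy.stats.kurtosis`) also offer bias-corrected variants via `bias=False`, so their numbers may differ slightly for small samples. The function name and data are illustrative.

```python
def shape_stats(values):
    """Return (skewness, excess kurtosis) using moment-based formulas."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    m4 = sum((v - mean) ** 4 for v in values) / n
    skewness = m3 / m2 ** 1.5
    excess_kurtosis = m4 / m2 ** 2 - 3
    return skewness, excess_kurtosis

symmetric = [1, 2, 3, 4, 5]
right_tailed = [1, 1, 1, 2, 2, 3, 10]  # hypothetical, one long right tail

print(shape_stats(symmetric))            # skewness 0.0, excess kurtosis about -1.3 (flat)
print(shape_stats(right_tailed)[0] > 0)  # True: positive skew
```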

Inferential Statistics: Drawing Inferences and Making Hypotheses

Inferential statistics involves making inferences and drawing conclusions about a population based on a sample of data. It allows you to generalize findings beyond the observed data and make predictions or test hypotheses. This section covers key techniques and concepts in inferential statistics.

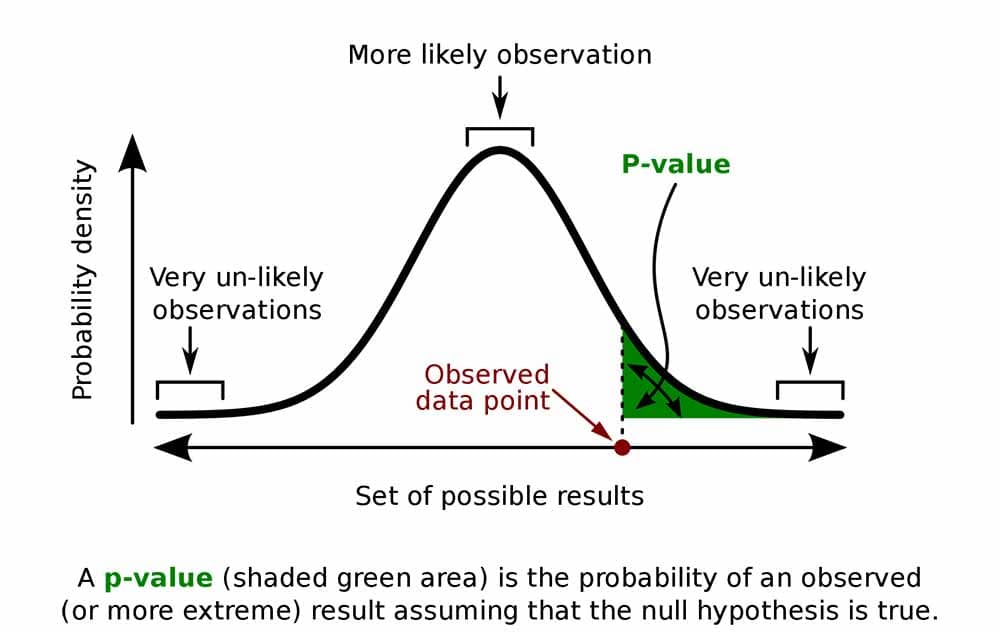

Hypothesis Testing

Hypothesis testing involves making statistical inferences about population parameters based on sample data. Rather than proving a claim true or false, it assesses whether the sample provides enough evidence to reject a default assumption (the null hypothesis). The hypothesis testing process typically involves the following steps:

- Formulate hypotheses: Define the null hypothesis (H0) and alternative hypothesis (Ha) based on the research question or claim.

- Select a significance level: Choose alpha, the maximum acceptable probability of rejecting the null hypothesis when it is actually true (a Type I error); 0.05 is a common choice.

- Collect and analyze data: Gather and analyze the sample data using appropriate statistical tests.

- Calculate the test statistic: Compute the test statistic based on the selected test and the sample data.

- Determine the critical region: Identify the critical region based on the significance level and the test statistic’s distribution.

- Make a decision: Compare the test statistic with the critical region and either reject or fail to reject the null hypothesis.

- Draw conclusions: Interpret the results and make conclusions based on the decision made in the previous step.
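The steps can be followed end to end with a one-sample z-test, chosen here because it needs only Python's standard library. It assumes the population standard deviation is known (an assumption flagged in the code); when it must be estimated from the sample, a t-test such as `scipy.stats.ttest_1samp` is the usual choice. The scenario (a claimed 30-minute average delivery time with sigma = 4), the data, and the function name are hypothetical.

```python
import math
from statistics import NormalDist, mean

def one_sample_z_test(sample, mu0, sigma, alpha=0.05):
    """Two-sided test of H0: mu == mu0 against Ha: mu != mu0.
    ASSUMPTION: the population standard deviation `sigma` is known."""
    n = len(sample)
    z = (mean(sample) - mu0) / (sigma / math.sqrt(n))   # test statistic
    critical = NormalDist().inv_cdf(1 - alpha / 2)      # critical region: |z| > critical
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))        # equivalent p-value view
    return z, critical, p_value, abs(z) > critical

# Step 1: H0 says the mean delivery time is 30 minutes; Ha says it differs.
# Step 2: alpha = 0.05.  Steps 3-4: collect the (hypothetical) sample and compute z.
times = [28, 35, 31, 33, 36, 30, 34, 29, 37, 32, 33, 31, 35, 30, 34, 32]
z, critical, p_value, reject = one_sample_z_test(times, mu0=30, sigma=4)

# Steps 5-7: compare with the critical region, decide, and conclude.
print(f"z = {z:.2f}, critical = {critical:.2f}, p = {p_value:.4f}, reject H0: {reject}")
# z = 2.50, critical = 1.96, p = 0.0124, reject H0: True
```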

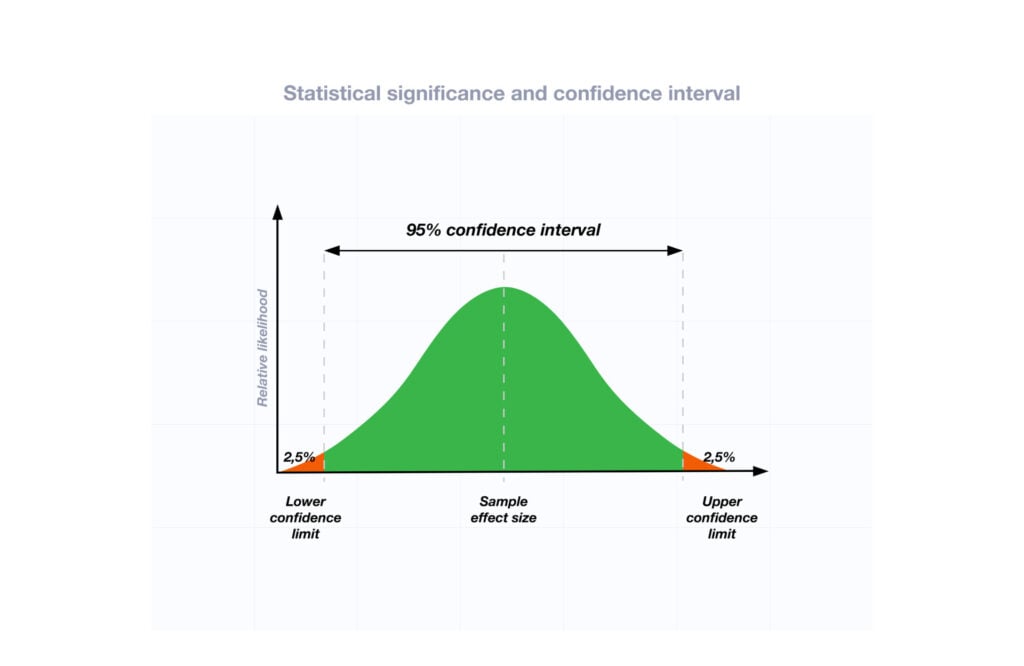

Confidence Intervals

Confidence intervals provide a range of plausible values for a population parameter. They quantify the uncertainty associated with estimating population parameters from sample data. The construction of a confidence interval involves:

- Select a confidence level: Choose the desired level of confidence, typically expressed as a percentage (e.g., 95% confidence level).

- Compute the sample statistic: Calculate the sample statistic (e.g., sample mean) from the sample data.

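- Determine the margin of error: Multiply a critical value for the chosen confidence level (about 1.96 for 95% under a normal approximation) by the standard error of the sample statistic. The margin of error represents the maximum likely distance between the sample statistic and the population parameter.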

- Construct the confidence interval: Establish the upper and lower bounds of the confidence interval using the sample statistic and the margin of error.

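- Interpret the confidence interval: Interpret the interval in the context of the problem. A 95% confidence level means that, if the sampling were repeated many times, about 95% of the intervals constructed this way would contain the true parameter; it does not mean there is a 95% probability that this particular interval contains it.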
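The construction steps translate into a few lines of Python. This sketch builds a confidence interval for a mean using the normal approximation (critical value from `statistics.NormalDist`); for small samples like this one, a critical value from the t-distribution would give a somewhat wider and more appropriate interval. The function name and the delivery-time data are hypothetical.

```python
import math
from statistics import NormalDist, mean, stdev

def mean_confidence_interval(sample, confidence=0.95):
    """Normal-approximation confidence interval for the population mean."""
    n = len(sample)
    xbar = mean(sample)                               # step 2: sample statistic
    z = NormalDist().inv_cdf(0.5 + confidence / 2)    # step 1 -> critical value, ~1.96 for 95%
    margin = z * stdev(sample) / math.sqrt(n)         # step 3: margin of error
    return xbar - margin, xbar + margin               # step 4: lower and upper bounds

times = [28, 35, 31, 33, 36, 30, 34, 29, 37, 32, 33, 31, 35, 30, 34, 32]
low, high = mean_confidence_interval(times, confidence=0.95)
print(f"95% CI for the mean: ({low:.2f}, {high:.2f})")
```

Raising the confidence level widens the interval: more confidence costs precision.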

Parametric and Non-parametric Tests

In inferential statistics, different tests are used based on the nature of the data and the assumptions made about the population distribution. Parametric tests assume specific population distributions, such as the normal distribution, while non-parametric tests make fewer assumptions. Some commonly used parametric and non-parametric tests include:

- t-tests (parametric): Compare means between two groups or assess differences in paired observations.

- Analysis of Variance (ANOVA, parametric): Compare means among multiple groups.

- Chi-square test (non-parametric): Assess the association between categorical variables.

- Mann-Whitney U test (non-parametric): Compare two independent groups without assuming normality; it is often described as a comparison of medians, which holds when the two distributions have a similar shape.

- Kruskal-Wallis test (non-parametric): Extend the Mann-Whitney approach to three or more independent groups.

- Spearman’s rank correlation (non-parametric): Measure the strength and direction of monotonic relationships between variables.
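Spearman's rank correlation is Pearson's correlation applied to the ranks of the data, with tied values receiving the average of their ranks. The sketch below implements that definition directly; the function names and data are illustrative, and SciPy provides `scipy.stats.spearmanr`, which also reports a p-value.

```python
import math

def average_ranks(values):
    """Rank values from 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1                      # extend the run of tied values
        avg = (i + j) / 2 + 1           # sorted positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def spearman(x, y):
    """Spearman's rho: Pearson's correlation computed on the ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sxx = sum((a - mx) ** 2 for a in rx)
    syy = sum((b - my) ** 2 for b in ry)
    return sxy / math.sqrt(sxx * syy)

# A monotonic but nonlinear (hypothetical) relationship: rho is exactly 1.
x = [1, 2, 3, 4, 5]
y = [1, 4, 9, 16, 100]
print(spearman(x, y))                   # 1.0
print(average_ranks([10, 20, 20, 30]))  # [1.0, 2.5, 2.5, 4.0]
```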

Correlation and Regression Analysis

Correlation and regression analysis explore the relationship between variables, helping you understand how one variable tends to change as another changes. These analyses are particularly useful in predicting and modeling outcomes based on explanatory variables, although an association on its own does not show that one variable causes the other.

- Correlation analysis: Determines the strength and direction of the linear relationship between two continuous variables using correlation coefficients, such as Pearson’s correlation coefficient.

- Regression analysis: Models the relationship between a dependent variable and one or more independent variables, allowing you to estimate how much the dependent variable changes with each independent variable. It provides insights into the direction, magnitude, and statistical significance of these relationships; interpreting the estimates as causal effects requires an appropriate study design.
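For a single explanatory variable, ordinary least squares has a closed-form solution: slope = covariance(x, y) / variance(x) and intercept = mean(y) - slope * mean(x). The sketch below fits that line to hypothetical ad-spend and sales figures and reports R-squared, the share of the variation in y explained by the line. The function name is illustrative (Python 3.10 and later also offer `statistics.linear_regression`).

```python
def fit_line(x, y):
    """Ordinary least squares fit of y = intercept + slope * x, plus R-squared."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sxy / sxx
    intercept = my - slope * mx
    predictions = [intercept + slope * a for a in x]
    ss_res = sum((b - p) ** 2 for b, p in zip(y, predictions))  # unexplained variation
    ss_tot = sum((b - my) ** 2 for b in y)                      # total variation
    return slope, intercept, 1 - ss_res / ss_tot

ad_spend = [1, 2, 3, 4, 5]   # hypothetical, e.g. thousands of dollars
sales = [2, 4, 5, 4, 5]      # hypothetical, e.g. thousands of units
slope, intercept, r_squared = fit_line(ad_spend, sales)
print(f"sales = {intercept:.2f} + {slope:.2f} * ad_spend, R^2 = {r_squared:.2f}")
# sales = 2.20 + 0.60 * ad_spend, R^2 = 0.60
```

With one predictor, R-squared equals the square of Pearson's r for the same data. The slope says that each extra unit of ad spend is associated with 0.6 more units of sales in this made-up data; reading it as a causal effect would need an appropriate study design.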

Data Interpretation Techniques: Unlocking Insights for Informed Decisions

Data interpretation techniques enable you to extract actionable insights from your data, empowering you to make informed decisions. We’ll explore key techniques that facilitate pattern recognition, trend analysis, comparative analysis, predictive modeling, and causal inference.

Pattern Recognition and Trend Analysis

Identifying patterns and trends in data helps uncover valuable insights that can guide decision-making. Several techniques aid in recognizing patterns and analyzing trends:

- Time series analysis: Analyzes data points collected over time to identify recurring patterns and trends.

- Moving averages: Smooths out fluctuations in data, highlighting underlying trends and patterns.

- Seasonal decomposition: Separates a time series into its seasonal, trend, and residual components.

- Cluster analysis: Groups similar data points together, identifying patterns or segments within the data.

- Association rule mining: Discovers items or attribute values that frequently occur together (for example, products often bought in the same transaction), uncovering useful patterns and dependencies.
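Of the techniques listed, moving averages are the simplest to show in code. This sketch computes a trailing simple moving average over hypothetical monthly sales; the window size of 3 and the function name are assumptions. Larger windows smooth more aggressively at the cost of reacting more slowly to genuine changes.

```python
def moving_average(series, window):
    """Trailing simple moving average. The output is shorter than the input by
    window - 1, because the first full window ends at index window - 1."""
    if not 1 <= window <= len(series):
        raise ValueError("window must be between 1 and len(series)")
    return [sum(series[i - window + 1:i + 1]) / window
            for i in range(window - 1, len(series))]

monthly_sales = [10, 12, 9, 14, 13, 16, 15]  # hypothetical
smoothed = moving_average(monthly_sales, window=3)
print([round(v, 2) for v in smoothed])  # [10.33, 11.67, 12.0, 14.33, 14.67]
```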

Comparative Analysis

Comparative analysis involves comparing different subsets of data or variables to identify similarities, differences, or relationships. This analysis helps uncover insights into the factors that contribute to variations in the data.

- Cross-tabulation: Compares two or more categorical variables to understand the relationships and dependencies between them.

- ANOVA (Analysis of Variance): Assesses differences in means among multiple groups to identify significant variations.

- Comparative visualizations: Graphical representations, such as bar charts or box plots, help compare data across categories or groups.
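The ANOVA F statistic compares the variation between group means with the variation within groups: F = (SS_between / (k - 1)) / (SS_within / (N - k)), where k is the number of groups and N is the total number of observations. The sketch below computes it for three hypothetical store layouts; turning F into a p-value requires the F distribution, which SciPy provides through `scipy.stats.f_oneway`. The function name and data are illustrative.

```python
def one_way_anova_f(*groups):
    """One-way ANOVA F statistic with its between- and within-group degrees of freedom."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    # Variation of the group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variation of individual observations around their own group mean.
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, means) for v in g)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

layout_a = [4, 5, 6]    # hypothetical daily sales under three store layouts
layout_b = [6, 7, 8]
layout_c = [9, 10, 11]
f_stat, df1, df2 = one_way_anova_f(layout_a, layout_b, layout_c)
print(f"F({df1}, {df2}) = {f_stat:.1f}")  # F(2, 6) = 19.0
```

A large F means the group means differ by much more than the scatter within each group would suggest.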

Predictive Modeling and Forecasting

Predictive modeling uses historical data to build mathematical models that can predict future outcomes. It often relies on statistical or machine learning algorithms to uncover patterns and relationships in data, and the accuracy of its predictions should be checked on data the model has not seen.

- Regression models: Build mathematical equations to predict the value of a dependent variable based on independent variables.

- Time series forecasting: Utilizes historical time series data to predict future values, considering factors like trend, seasonality, and cyclical patterns.

- Machine learning algorithms: Employ advanced algorithms, such as decision trees, random forests, or neural networks, to capture complex, nonlinear patterns in the data and generate predictions from them.
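As a simple illustration of time series forecasting, the sketch below applies simple exponential smoothing, in which each forecast is a weighted average of the latest observation and the previous forecast. It measures accuracy with the mean absolute error (MAE) of the one-step-ahead forecasts, each made before the value it predicts was observed. This is a baseline that does not model trend or seasonality (extensions such as Holt-Winters do); the smoothing factor alpha = 0.5, the function name, and the demand figures are assumptions.

```python
def ses_forecasts(series, alpha):
    """Simple exponential smoothing.
    Returns the one-step-ahead forecasts for series[1:] and the forecast for
    the next, not-yet-observed period."""
    level = series[0]                      # initialize the level with the first value
    forecasts = []
    for y in series[1:]:
        forecasts.append(level)            # forecast made before y is observed
        level = alpha * y + (1 - alpha) * level
    return forecasts, level

demand = [100, 104, 101, 107, 110, 108]   # hypothetical weekly demand
forecasts, next_period = ses_forecasts(demand, alpha=0.5)
mae = sum(abs(a - f) for a, f in zip(demand[1:], forecasts)) / len(forecasts)
print(forecasts, next_period, round(mae, 3))
# [100, 102.0, 101.5, 104.25, 107.125] 107.5625 3.425
```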

Causal Inference and Experimentation

Causal inference aims to establish cause-and-effect relationships between variables, helping determine the impact of certain factors on an outcome. Experimental design and controlled studies are essential for establishing causal relationships.
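In a randomized experiment (for example, an A/B test), random assignment is what allows a difference in outcomes to be attributed to the treatment. A permutation test then asks how often a difference at least as large as the observed one would arise if the group labels were shuffled at random. The sketch below is one way to run that test; the function name, the number of permutations, the fixed seed, and the engagement scores are all hypothetical.

```python
import random

def permutation_test(treatment, control, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference in group means.
    Returns the observed difference (treatment - control) and a p-value."""
    rng = random.Random(seed)               # fixed seed for reproducibility
    observed = sum(treatment) / len(treatment) - sum(control) / len(control)
    pooled = list(treatment) + list(control)
    n_t = len(treatment)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)                 # reassign group labels at random
        diff = sum(pooled[:n_t]) / n_t - sum(pooled[n_t:]) / (len(pooled) - n_t)
        if abs(diff) >= abs(observed):
            extreme += 1
    # The +1 terms count the observed labeling itself and keep p above zero.
    p_value = (extreme + 1) / (n_permutations + 1)
    return observed, p_value

treatment = [12, 14, 15, 13, 16, 15]  # hypothetical scores, randomly assigned group
control = [10, 11, 12, 10, 11, 13]
observed, p_value = permutation_test(treatment, control)
print(f"difference in means = {observed:.2f}, p = {p_value:.3f}")
```

The test itself only measures how surprising the difference is under random labeling; the causal interpretation comes from the randomized design, not from the calculation.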