A review of ultrasonic sensing and machine learning methods to monitor industrial processes

Affiliations.

- 1 Food, Water, Waste Research Group, Faculty of Engineering, University of Nottingham, University Park, Nottingham NG7 2RD, UK.

- 2 School of Computer Science, Jubilee Campus, University of Nottingham, Nottingham NG8 1BB, UK.

- 3 Food, Water, Waste Research Group, Faculty of Engineering, University of Nottingham, University Park, Nottingham NG7 2RD, UK.

- PMID: 35653984

- DOI: 10.1016/j.ultras.2022.106776

Supervised machine learning techniques are increasingly being combined with ultrasonic sensor measurements owing to their strong performance. Compared with conventional calibration procedures, these techniques offer more complex fitting, improved generalisation, reduced development time, the ability for continuous retraining, and the correlation of sensor data to important process information. However, their implementation requires expertise to extract and select appropriate features from the sensor measurements as model inputs, select the type of machine learning algorithm to use, and find a suitable set of model hyperparameters. The aim of this article is to facilitate implementation of machine learning techniques in combination with ultrasonic measurements for in-line and on-line monitoring of industrial processes and other similar applications. The article first reviews the use of ultrasonic sensors for monitoring processes, before reviewing the combination of ultrasonic measurements and machine learning. We include literature from other sectors such as structural health monitoring. This review covers feature extraction, feature selection, algorithm choice, hyperparameter selection, data augmentation, domain adaptation, semi-supervised learning and machine learning interpretability. Finally, recommendations for applying machine learning to the reviewed processes are made.

Keywords: Deep learning; Domain adaptation; Industrial digital technologies; Machine learning; Transfer learning; Ultrasonic measurements.

Copyright © 2022 The Author(s). Published by Elsevier B.V. All rights reserved.


A comprehensive review on accuracy in ultrasonic flow measurement using reconfigurable systems and deep learning approaches

- I. INTRODUCTION
  - A. The motivation of this survey and comparison to other surveys
  - B. Contribution
  - C. Organization of this article
- II. CONVENTIONAL FLOWMETERS AND EVOLUTION OF ULTRASONIC FLOWMETERS
  - A. Magnetic flowmeters
  - B. Mechanical flowmeters
  - C. Vortex flowmeters
  - D. Ultrasonic flowmeters
- III. FPGA AND ADVANCED PROCESSORS FOR ULTRASONIC FLOWMETERS
- IV. DEEP LEARNING FOR ACCURACY ESTIMATION IN ULTRASONIC FLOWMETERS
- V. KEY FINDINGS AND SUMMARY OF THIS ARTICLE
- VI. CONCLUSIONS


Senthil Kumar J, Kamaraj A, Kalyana Sundaram C, Shobana G, Kirubakaran G; A comprehensive review on accuracy in ultrasonic flow measurement using reconfigurable systems and deep learning approaches. AIP Advances 1 October 2020; 10 (10): 105221. https://doi.org/10.1063/5.0022154


Fuel flow rate is a major control variable in the engines of airborne vehicles, and accurate flow rate measurement has become a mandatory requirement for the testing and reliable operation of those engines. Flow rate measurement is also essential in the food, automotive, and chemical industries. Ultrasonic transducers are an appropriate choice for flow rate measurement because of their properties when in contact with gas and water media and their suitability for larger-diameter pipelines. Estimating the echo signal of the ultrasonic flowmeter is a challenging task, and real-time processing is mandatory for improving accuracy in flow rate measurements. This article reviews the improvement in the accuracy of flow rate measurements of liquids and gases incorporating the modern technological trends with the support of field programmable gate arrays, digital signal processors, other advanced processors, and deep learning approaches. The review also elaborates on the reduction of uncertainty in single-path and multi-path ultrasonic flowmeters. Finally, future research prospects are put forward for developing low-cost, reliable, and accurate ultrasonic flowmeters for extensive categories of industrial applications.

Generally, a flowmeter measures the amount of liquid, gas, or vapor moving through a pipe or conduit. The basic requirements for any flow measurement technique are the ability to be calibrated and to integrate flow fluctuations in pipes, high accuracy, a high turn-down ratio, low cost, low sensitivity to dirt particles, low pressure loss, no moving parts, and resistance to corrosion and erosion. There are many types of flowmeters available for use in industrial automation. Most of them fall under the categories of mechanical, pressure, thermal, optical, electromagnetic, and ultrasonic flowmeters. Depending on the physical parameter to be measured, a flowmeter may be used for liquid or gas, and its working methodology can be analyzed by applying basic principles of physics and engineering.

Studies have concluded that the use of digital meters instead of analog positive feedback systems maintains appropriate flow tube oscillations. 1 Flowmeters based on a field programmable gate array (FPGA) provide highly stable driving signals to probe the sensors and improve the accuracy of flow estimations. 2 An ultrasonic flowmeter offering high-speed, highly reliable, and high-precision measurements was developed by Qi et al. 3 FPGAs can also be configured as the time measurement circuits of transit-time flowmeters. 4 They are also used to collect the signal and process the acquired data at high speed. 5 The waveform of the excitation signals has to be stored in the read-only memory module of the FPGA chip.

The use of a digital signal processor (DSP) in the design and implementation of flowmeters increases the accuracy of the propagation time, which is obtained as the difference between the measured ultrasonic signals. 6 DSPs are also programmed and configured to reduce measurement errors and so obtain more precise and reliable flow measurements. 7 The echo signal is sent to the DSP chip and processed to obtain the gas flow rate. 5 A complete ultrasonic gas flowmeter needs ultrasonic gas transducers, transmitting/receiving signal channel switch circuits, driving signal generation circuits, sensor components, and amplification circuits as vital components.

Deep learning architectures are inspired by the biological aspects of the senses similar to human brains. Computers and powerful graphical processing units (GPUs) can emulate such deep learning architectures. Human brains normally learn easier concepts first and hierarchically organize them to learn more sophisticated and abstract ideas. Being inspired and driven by this learning technique, investigators have devoted many efforts including multiple levels of abstraction and processed them to solve complex computational problems. Few researchers have utilized the power of deep learning architectures for flow rate measurements. 8 With the volume of data available and being trained, those deep learning architectures are configured to classify and estimate the flow rates of liquids and gases with high accuracy. 9

In this review, the intention is to provide an outline of the progressive technologies in ultrasonic-based flow measurement techniques. Another significant aim is to summarize the major emerging technologies, such as the use of FPGAs as reconfigurable systems and other advanced processors, that support accuracy enhancement in flow measurements. 10 Additional noteworthy discussions focus on the use of deep learning and deep neural networks for accurate flow rate estimation in ultrasonic flowmeters.

On the other hand, a large number of applications were put forward with the advancements in the technologies using modern flowmeters. It is significant to consider the accuracy in the flow rate of underlying modern technologies with analyses of the flow data observed from the systems. Table I summarizes the surveys related to the usage of various flowmeters and their applications. The summary reveals the significant contributions from those articles as well as their relevance to the proposed theme of the survey in this article.

TABLE I. Summary of surveys related to flowmeters and their applications.

The major contributions of this review article are listed as follows:

- The evolution of a flowmeter based on the different landscapes of flow measurements is studied.

- The key areas of flowmeter applications across different domains are identified from the state-of-the-art literature.

- The impact of an ultrasonic flowmeter on maximizing the accuracy and performance in flow rate measurements is highlighted.

- The usage of FPGAs and other processors and their significant contributions toward flow measurements are surveyed comprehensively from the recent literature.

- Accomplishments of flow rate measurements with the support of standard deep learning frameworks and deep neural networks are discussed from the literature.

- Future research directions are highlighted in the stream of flow rate measurement with modern strategies and artificial intelligence.

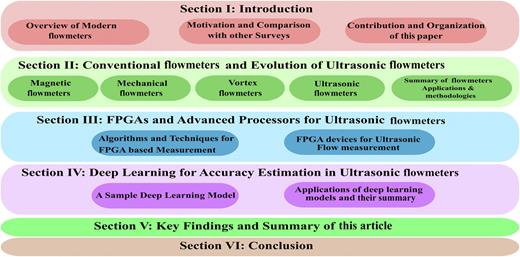

This review article is organized as follows: Sec. II discusses the evolution of conventional flowmeters and ultrasonic flowmeters in the market. It also summarizes the growth of ultrasonic flowmeters and the impact of ultrasonic technology on flow rate measurement applications for liquids and gases. Section III summarizes the use of FPGAs and advanced processors for driving, supporting, and accurately computing flow rate measurements. Section IV discusses learning approaches based on deep neural networks and their impact on measurement accuracy in flowmeters. Section V elaborates on the key findings and summary of this article toward accurate flow rate measurements, along with the future scope of research left as open problems. Finally, Sec. VI concludes with the key impact on society. Figure 1 shows the overall organization of this article.

FIG. 1. Overview of organization of this article.

In magnetic flowmeters, the measured liquid must be water-based or conductive, which makes the magnetic flowmeter an excellent choice for wastewater flow measurement. Magnetic meters are volumetric meters with no moving parts, so they are simple in construction and well suited to situations where personnel should not be exposed to the measured liquid while working on the meter. Their working principle is based on Faraday’s law, and the main requirement is that the liquid under test be conductive. The measured voltage depends on the average velocity of the liquid, the strength of the magnetic field, and the length of the conductor, i.e., the distance between the electrodes. However, they work only with conductive liquids.
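The voltage dependence described above is commonly written as E = k·B·D·v, where B is the magnetic field strength, D the electrode spacing, and v the mean velocity. The following is a minimal sketch of that relation, not taken from the reviewed article; the function names, the calibration factor `k`, and the numerical values are illustrative assumptions.

```python
import math


def magmeter_velocity(voltage_v, field_t, electrode_gap_m, k=1.0):
    """Mean liquid velocity (m/s) from the induced voltage, using E = k * B * D * v.

    k is a hypothetical dimensionless meter calibration factor (assumed 1.0 here).
    """
    if field_t <= 0 or electrode_gap_m <= 0 or k <= 0:
        raise ValueError("field, electrode gap, and k must be positive")
    return voltage_v / (k * field_t * electrode_gap_m)


def pipe_flow_rate(velocity_m_s, pipe_diameter_m):
    """Volumetric flow (m^3/s) for a full circular pipe: Q = v * pi * D^2 / 4."""
    return velocity_m_s * math.pi * pipe_diameter_m ** 2 / 4.0


# Illustrative numbers only: 1 mV across electrodes 0.1 m apart in a 10 mT field.
v = magmeter_velocity(voltage_v=1e-3, field_t=10e-3, electrode_gap_m=0.1)
q = pipe_flow_rate(v, pipe_diameter_m=0.1)
print(f"velocity = {v:.3f} m/s, flow = {q * 1000:.3f} L/s")
```

Because the voltage is induced in the liquid itself, the relation only holds when the liquid is conductive, which is the restriction noted above.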

Mechanical flowmeters have moving internal parts, which may not suit specific applications such as effluent waters carrying larger particles, since these may damage or obstruct the internal parts of the meter. A mechanical flowmeter has a rotational device inside, such as a paddle wheel or propeller. The liquid flowing through the pipe rotates the internal paddle at a speed proportional to the flow rate: the faster the paddle rotates, the greater the flow through the pipe, and a correspondingly graduated signal is sent to the controller. The rotational speed is related to a flow rate in units such as gallons per minute, taking into account the pipe size and the temperature of the water flowing through the meter.

In differential pressure flowmeters, the flow path of the liquid or gas is first restricted by a primary element, and the difference between the pressures before and after the primary element is measured. In the Coriolis method, the fluid passes through a vibrating tube, and the amplitude of vibration is proportional to the mass flow of the liquid. Open channel and variable area flowmeters calculate the flow rate from velocity, level, diameter, area, or depth information. All these categories of mechanical flowmeters use mechanical parts as measuring elements; however, the moving mechanical parts reduce their accuracy and lifetime.
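For the differential pressure case, the standard textbook relation for an incompressible fluid through an orifice-type primary element is Q = C_d · A · sqrt(2Δp/ρ) / sqrt(1 − β⁴), with β the bore-to-pipe diameter ratio. The sketch below applies it under stated assumptions: the function name is hypothetical, and the default discharge coefficient of 0.61 is only a typical value for a sharp-edged orifice, not a figure from the reviewed article.

```python
import math


def dp_flow_rate(delta_p_pa, density_kg_m3, bore_d_m, pipe_d_m, discharge_coeff=0.61):
    """Volumetric flow (m^3/s) through an orifice-type primary element.

    Q = Cd * A_bore * sqrt(2 * dp / rho) / sqrt(1 - beta^4), incompressible flow.
    discharge_coeff is an assumed typical value; real meters are calibrated.
    """
    if delta_p_pa < 0 or density_kg_m3 <= 0:
        raise ValueError("pressure drop must be >= 0 and density > 0")
    if not 0 < bore_d_m < pipe_d_m:
        raise ValueError("bore diameter must be smaller than pipe diameter")
    beta = bore_d_m / pipe_d_m
    bore_area = math.pi * bore_d_m ** 2 / 4.0
    approach_factor = 1.0 / math.sqrt(1.0 - beta ** 4)
    return discharge_coeff * approach_factor * bore_area * math.sqrt(
        2.0 * delta_p_pa / density_kg_m3
    )


# Illustrative numbers only: water, 5 kPa drop, 50 mm bore in a 100 mm pipe.
q = dp_flow_rate(delta_p_pa=5000.0, density_kg_m3=1000.0, bore_d_m=0.05, pipe_d_m=0.1)
print(f"flow = {q * 1000:.3f} L/s")
```

The square-root dependence means that a fourfold increase in pressure drop only doubles the indicated flow, which is one reason differential pressure meters have a limited turn-down ratio.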

Vortex flowmeters measure vortices using a sensor tab that bends and flexes from side to side as each vortex passes. This bending and flexing produces an output frequency proportional to the volumetric flow. In vortex flowmeters, the amount of bending is proportional to the flow rate; however, the accuracy is reduced by the moving parts, which also lead to a loss of signal during the measurement process. Particle image velocimetry (PIV) based flow measurement is used in experiments on Taylor–Couette flow patterns in Ref. 32. It helps resolve flow instabilities by considering the nonlinear dynamics of the fluids.
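The proportionality between output frequency and flow can be illustrated with the Strouhal relation f = St · v / d, where d is the width of the bluff body that sheds the vortices. This is a minimal sketch, assuming a constant Strouhal number of about 0.2 (a common approximation for bluff bodies over a wide Reynolds number range); the function name and values are hypothetical and not from the reviewed article.

```python
def vortex_velocity(shedding_freq_hz, bluff_width_m, strouhal=0.2):
    """Flow velocity (m/s) from vortex shedding frequency: v = f * d / St.

    strouhal=0.2 is an assumed typical value; real meters use a calibrated factor.
    """
    if bluff_width_m <= 0 or strouhal <= 0:
        raise ValueError("bluff body width and Strouhal number must be positive")
    return shedding_freq_hz * bluff_width_m / strouhal


# Illustrative numbers only: 40 Hz shedding from a 10 mm wide bluff body.
print(f"velocity = {vortex_velocity(40.0, 0.01):.2f} m/s")
```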

In an ultrasonic flowmeter of the transit-time type, one ultrasonic signal is transmitted downstream, in the direction of the flow, while another is transmitted upstream. The difference between the two transit times (the delta or differential time) is used to calculate the velocity of the liquid in the medium. The estimated velocity is then used to calculate the volumetric flow through the pipe.
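The calculation described above can be sketched for a single acoustic path of length L inclined at an angle θ to the pipe axis. With t_down = L / (c + v cos θ) and t_up = L / (c − v cos θ), the velocity follows as v = L / (2 cos θ) · (t_up − t_down) / (t_up · t_down), which does not depend on the speed of sound c. The function names, geometry, and numbers below are illustrative assumptions rather than values from the reviewed article.

```python
import math


def transit_times(flow_velocity, sound_speed, path_len_m, angle_deg):
    """Ideal (upstream, downstream) transit times for one acoustic path."""
    v_along_path = flow_velocity * math.cos(math.radians(angle_deg))
    t_down = path_len_m / (sound_speed + v_along_path)
    t_up = path_len_m / (sound_speed - v_along_path)
    return t_up, t_down


def transit_time_velocity(t_up_s, t_down_s, path_len_m, angle_deg):
    """Path-averaged flow velocity from the differential time.

    v = L / (2 cos(theta)) * (t_up - t_down) / (t_up * t_down)
    """
    cos_theta = math.cos(math.radians(angle_deg))
    return path_len_m / (2.0 * cos_theta) * (t_up_s - t_down_s) / (t_up_s * t_down_s)


def volumetric_flow(velocity_m_s, pipe_diameter_m):
    """Volumetric flow (m^3/s) for a full circular pipe, ignoring profile correction."""
    return velocity_m_s * math.pi * pipe_diameter_m ** 2 / 4.0


# Illustrative numbers only: water (c ~ 1480 m/s), 0.1 m path at 45 degrees.
t_up, t_down = transit_times(2.0, 1480.0, path_len_m=0.1, angle_deg=45.0)
v = transit_time_velocity(t_up, t_down, path_len_m=0.1, angle_deg=45.0)
print(f"delta t = {(t_up - t_down) * 1e9:.1f} ns, v = {v:.3f} m/s")
print(f"Q = {volumetric_flow(v, 0.07) * 1000:.3f} L/s")
```

The differential time in this example is on the order of a hundred nanoseconds, which illustrates why precise time measurement circuits matter for accuracy. A practical meter would also apply a flow profile correction when converting the path-averaged velocity to volumetric flow; that step is omitted here.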

Ultrasonic flowmeters have numerous advantages over other categories of flowmeters. They do not interfere with the flow of the liquid, and they are an appropriate option for both gas and liquid measurements. In addition, the measurement can be improved by incorporating high-speed processors.

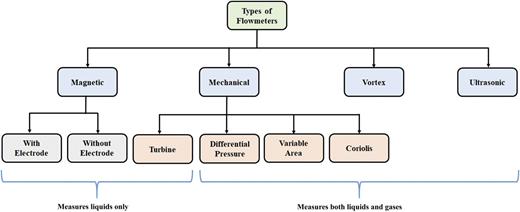

Hence, the ultrasonic flowmeter is a reliable meter for liquid and gas measurements. The types of flowmeters, grouped by measurement technology, are summarized in Fig. 2. The characteristics and performance of the various categories of flowmeters are listed in Table II. Based on Table II, magnetic and ultrasonic flowmeters have the better performance characteristics. However, the magnetic meter requires a conductive liquid, which makes it unsuitable for gas flow measurement.

FIG. 2. Categories of flowmeters based on the technology used for measurement.

TABLE II. Characteristics and performance of flowmeters.

The volumetric flow rate of liquids and gases is measured to estimate the velocity of the fluid or gas in the medium. For recording the rate of flow, various linear, non-linear, mass, and volumetric rates can be computed with appropriate flow measurement devices. The flow rate F of any gas or liquid in any medium can be expressed as

where $A_f$ represents the flow area, $C_f$ is the flow coefficient, $d$ is the density of the fuel, and $p_c$ represents the control chamber pressure. Based on this expression, flow rates are measured for the various applications and methodologies listed in Table III.

TABLE III. Summary of applications and methodologies used for flow measurement.

Dynamic flowmeter calibration from small to medium flow rates in aero-engine tests is performed using computational fluid dynamics (CFD) and a piezoelectric stack driven double nozzle–flapper valve. 33 An echo energy integral based signal processing method is proposed, and calibration experiments using an FPGA and a DSP verify the maximum and minimum measurable flow rates. 34 An ultrasonic flowmeter was developed for cryogenic conditions; using a steady-state Coriolis flowmeter, unsteady fluctuations of an imposed disturbance are measured accurately. 35 Flow measurements at high temperatures up to 320 °C are performed using Z-mode and V-mode configurations in a metallic wedge design on a pipe containing mineral oil. 36

An ultrasonic gas flowmeter is developed with a DSP and an FPGA to analyze different gas flow rates by generating echo signal envelopes in calibration experiments. 37 A 2D flexural ultrasonic array transducer is developed, and the flow measurement is validated using the spatial averaging method on the array. 38 The flow rates of the individual phases in gas–liquid flows in a Venturi tube are measured with a twin-plane capacitive sensor so that the liquid flow rate is determined independently of the flow pattern. 79

Fluid flow measurement with air bubbles was done as a single-phase flow measurement using an ultrasonic flowmeter at different temperatures through PVC and steel pipes. 39 Identification of two-phase gas/liquid flow regimes using a Doppler ultrasonic sensor and machine learning approaches was done for industrial practice. 40,44 Horizontal oil–gas–water three-phase flow non-intrusive measurement was done using ultrasound Doppler frequency shift in an oil–gas–water three-phase flow test loop system to obtain acceptable accuracy. 41

The errors of a Coriolis two-phase flow metering solution for nitrogen/synthetic oil mixtures are determined by comparison with reference single-phase measurements, using a low-dimensional polynomial of the observed temperature suited to high-viscosity oil/gas mixtures. 42 The evaluation method for the ultrasonic flowmeter for the rectification effect of gasotron and its error indication in different installation conditions of computational fluid dynamics are optimized. 43

The ultrasonic sensor has a wide variety of applications. It is not limited to electronics but is used across all engineering fields. Table IV shows general applications such as wind speed measurement and direction identification, 53 irrigation with reduced labor and water consumption, 54 target detection for military, naval, and defense purposes, 10 and high-accuracy distance measurement for vehicle movement and accident avoidance systems. 80

TABLE IV. Summary of FPGA devices used for ultrasonic flow measurement applications.

Most commonly, the ultrasonic principle is used to measure the flow rate of liquids in pipes, in either single-phase or two-phase flow. 55 In the liquid flow measurements reviewed, clamp-on sensors are preferred. 56 The methods used for measuring the flow rate fall under either the Doppler 55 or the time difference 49 category. In both methods, either a single sensor or an array of sensors is used. The performance factors commonly taken into account are stability, accuracy, and speed of measurement. Some features are added to the flow measurement for extra value, such as jitter and offset nullifying, 57 web-based flow monitoring, and leakage detection. Additionally, RAM, 60 an automatic gain amplifier (AGA), finite impulse response (FIR) filters, bus interfaces, an arbitrary function generator (AFG), and URT are incorporated for better processing.
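For the Doppler category, the velocity of scatterers in the flow (bubbles or particles) is usually estimated from the frequency shift between the transmitted and received signals as v = c · Δf / (2 · f₀ · cos θ). The sketch below illustrates this standard relation; the function name, beam angle, and numerical values are illustrative assumptions and are not taken from the cited works.

```python
import math


def doppler_velocity(freq_shift_hz, transmit_freq_hz, sound_speed, angle_deg):
    """Scatterer velocity (m/s) from the Doppler shift.

    v = c * delta_f / (2 * f0 * cos(theta)), theta = angle between beam and flow.
    """
    cos_theta = math.cos(math.radians(angle_deg))
    if abs(cos_theta) < 1e-9:
        raise ValueError("a beam perpendicular to the flow produces no Doppler shift")
    return sound_speed * freq_shift_hz / (2.0 * transmit_freq_hz * cos_theta)


# Illustrative numbers only: 1 MHz transducer in water, beam at 60 degrees to the flow.
v = doppler_velocity(freq_shift_hz=1000.0, transmit_freq_hz=1e6,
                     sound_speed=1480.0, angle_deg=60.0)
print(f"velocity = {v:.3f} m/s")
```

Unlike the time difference method, this estimate depends directly on the assumed speed of sound, so temperature and composition changes in the liquid affect it.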

The acquired data have to be processed to reach the design objective. From the literature review, it is observed that all of the designs incorporate configurable devices such as FPGAs, 49 microcontrollers, 54 and PLDs. 58 This shows a common interest in dynamic configuration of the processing. Among FPGA devices, Xilinx 47,48 and Altera 45,52 products are used for their faster processing and large resources. The design work is coded in VHDL, Verilog, and C. At more advanced levels, soft processor cores such as MicroBlaze Embedded Development Kit (EDK) 49 and NIOS 50 are used to implement more sophisticated algorithms such as the Kalman filter, 49 FFT, 80 split-spectrum processing, 10 and the spline algorithm. 45
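To illustrate the kind of algorithm mentioned above, the following is a minimal scalar Kalman filter that smooths a sequence of noisy flow velocity readings. It is a Python sketch of the general technique under a simple random-walk model, not the FPGA or soft-core implementation from the cited work; the function name and the variance values are assumptions chosen for illustration.

```python
def kalman_smooth(measurements, process_var=1e-4, meas_var=0.04, p0=1.0):
    """Scalar Kalman filter with a random-walk state model.

    process_var: assumed variance of the true flow change between samples.
    meas_var:    assumed variance of the sensor noise.
    Returns one filtered estimate per measurement.
    """
    if not measurements:
        return []
    x = measurements[0]  # initial state estimate
    p = p0               # initial estimate variance
    estimates = []
    for z in measurements:
        p = p + process_var        # predict: uncertainty grows
        gain = p / (p + meas_var)  # Kalman gain
        x = x + gain * (z - x)     # update with the new measurement
        p = (1.0 - gain) * p       # updated uncertainty
        estimates.append(x)
    return estimates


# Illustrative readings only: a roughly constant 2 m/s flow with noise.
readings = [2.1, 1.8, 2.2, 1.9, 2.05, 1.95]
print([round(e, 3) for e in kalman_smooth(readings)])
```

The per-sample work is a handful of multiplications and additions, which is one reason this filter maps well onto soft processor cores and fixed-point hardware.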

More interestingly, the National Instruments (NI PXIe-7965R) FPGA is used to process 1.2 GB/s data from 225 arrays of sensors to measure the velocity of the liquid, 46 in which the magnetohydrodynamics (MHD) method is adopted. In addition, a bio-inspired visual sensor (housefly) is developed as Micro-Air-Vehicles (MAVs) for unmanned automatic vehicles in defense. 47

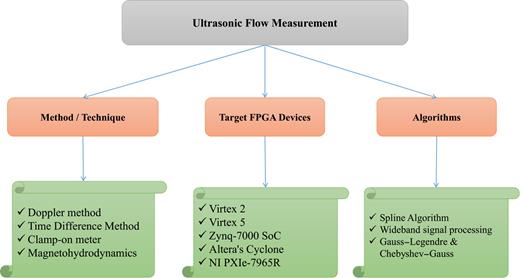

In summary, an ultrasonic sensor is used as a multi-purpose device in engineering solutions. The solutions are implemented in programmable devices with added features. Some special algorithms are incorporated to improve the performance factors such as processing speed, accuracy, and flow rate detection. Hence, the above literature analysis proves that the ultrasonic flowmeter is suitable for commercial applications, which is adopted with programmable devices that would improve the performance in real-time. Figure 3 lists various algorithms and techniques used along with FPGA devices for ultrasonic flow measurement applications. Table IV shows the summary of FPGA devices from the literature used for ultrasonic flow measurement applications.

FIG. 3. Algorithms and techniques with FPGA devices for ultrasonic flow measurement.

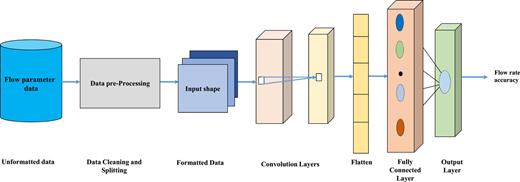

Deep learning models such as the convolutional neural network (CNN), long short-term memory (LSTM), and autoencoders are used to extract features from the collected data and correlate them with the flow of liquids and gases in the medium. Table V summarizes the deep learning techniques used for ultrasonic flow measurement applications along with the supporting technologies and outcomes from the literature. Figure 4 shows a sample deep learning model for flow rate accuracy estimation, with the flow rate parameters on the input side of the deep neural network. The initial raw data are pre-processed and shaped to match the input layer configuration of the deep neural network model. The formatted data then flow through the network, and the accuracy of the flow parameters is estimated in the final output layer of the model.
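The data path described above (raw data, pre-processing, shaping to the input layer, forward pass to an output) can be sketched in plain Python. This is only an illustration of the sequence of steps: the function names are hypothetical, the network is a single hidden layer rather than a CNN or LSTM, and the weights are placeholders rather than trained values.

```python
import statistics


def zscore(signal):
    """Pre-processing: scale a raw signal to zero mean and unit variance."""
    mean = statistics.fmean(signal)
    std = statistics.pstdev(signal)
    if std == 0:
        return [0.0 for _ in signal]
    return [(s - mean) / std for s in signal]


def make_windows(signal, window_len, step):
    """Shape the signal into fixed-length windows matching the input layer size."""
    last_start = len(signal) - window_len
    return [signal[i:i + window_len] for i in range(0, last_start + 1, step)]


def dense(inputs, weights, biases, relu=True):
    """One fully connected layer; weights holds one row per output unit."""
    outputs = []
    for row, bias in zip(weights, biases):
        activation = sum(w * x for w, x in zip(row, inputs)) + bias
        outputs.append(max(0.0, activation) if relu else activation)
    return outputs


def predict_flow(window, hidden_w, hidden_b, out_w, out_b):
    """Forward pass: input window -> hidden layer (ReLU) -> scalar output."""
    hidden = dense(window, hidden_w, hidden_b, relu=True)
    return dense(hidden, [out_w], [out_b], relu=False)[0]


# Illustrative raw samples and placeholder (untrained) weights.
raw = [0.2, 0.4, 0.1, 0.5, 0.3, 0.6, 0.2, 0.4]
windows = make_windows(zscore(raw), window_len=4, step=2)
hidden_w = [[0.5, -0.2, 0.1, 0.3], [-0.4, 0.6, 0.2, -0.1]]
for w in windows:
    print(round(predict_flow(w, hidden_w, [0.0, 0.0], [1.0, 1.0], 0.0), 4))
```

In practice the forward pass would be handled by a deep learning framework and the weights learned from labeled flow data; the sketch only makes the shaping step between raw samples and the input layer concrete.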

TABLE V. Summary of deep learning techniques used for ultrasonic flow measurement applications.

FIG. 4. A sample deep learning model for flowmeter accuracy measurement.

A deep learning approach and impedance measurements for a multichannel complex system are used for multiphase flow characterization. 8 Label-free analysis and evaluation are performed using the deep learning-enabled holographic-reconstruction and phase-recovery frameworks. 59 For the network, saturation models and electromagnetic data are used, to test the sensitivity of the deep learning model to multiple electromagnetic components, generalization challenges, noises, and the ability to reconstruct layers of a 3D CNN. 9 Here, a U-Net deep learning network is altered to suit the application, and it is trained and validated.

Deep learning based image analysis methods are used to classify accelerated and stable trickle flow in a trickle bed while monitoring the flow transition. 60 Droplet measurements are made by background extraction and threshold-based value selection using video processing software. 74 Multiphase flow measurements using soft computing techniques are reviewed, covering individual phase fractions and phase flow rates. 12 A seven-layer CNN is used for parameter measurement of gas–liquid two-phase flows in small pipes. 12 Bubble generative adversarial networks and image processing algorithms are used to extract flow parameters from the time-averaged distribution of bubbly flow, improving the quality of estimation. 61 A convolutional neural network and machine learning are used to develop flow adversarial networks that learn flow rate discriminative and domain-invariant features, providing better flow rate prediction. 62,75

An ensemble Kalman filter is used for updates and for testing the proposed deep LSTM approach, which shows that the predictions provide better divergence and performance. 63 A deep learning framework is developed with hidden fluid mechanics providing a simplified methodology for 2D and 3D flow measurements to extract accurate flow information. 64 Bubbly flow prediction is performed on the high and low fidelity data for exploring the simulation errors using a deep feedforward neural network to estimate the error in coarse mesh fluid dynamics for better industrial design flow prediction. 65 A deep belief network is developed for improved fault diagnostics of air-conditioning systems based on refrigerant flow using a variable flow refrigerant system. 66

A non-intrusive thermal flowmeter is developed to observe the thermal distribution of the pipe and estimate flow rates using machine learning algorithms such as K-nearest neighbor (KNN), decision tree (DT), random forest, AdaBoost, and gradient boosting. 76 The development of bubble detecting algorithms is initiated by using bubbly flow images from a labeled bubble dataset, which provides a strong benchmark of training data and algorithms for developing more advanced bubble detectors. 67 Multichannel nonlinear fluid flow patterns are analyzed using multivariate time series in a 50 mm diameter pipe. 77 An artificial neural network (ANN) combined with teaching–learning-based optimization estimates the wellhead choke liquid flow rate from six variables with improved prediction accuracy. 68
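As an illustration of the simplest algorithm in that list, the sketch below implements K-nearest neighbor regression: the flow rate for a new feature vector is predicted as the mean flow rate of the k closest training examples. The feature vectors (two temperature readings along a pipe) and flow values are invented for illustration and do not come from the cited study.

```python
import math


def knn_predict(train_features, train_targets, query, k=3):
    """Predict a flow rate as the mean target of the k nearest training points."""
    if not 0 < k <= len(train_features):
        raise ValueError("k must be between 1 and the number of training points")
    ranked = sorted(
        zip(train_features, train_targets),
        key=lambda pair: math.dist(pair[0], query),  # Euclidean distance
    )
    nearest = ranked[:k]
    return sum(target for _, target in nearest) / k


# Hypothetical training data: [upstream temp, downstream temp] in deg C -> flow in L/min.
features = [[40.0, 35.0], [40.0, 37.0], [40.0, 38.0], [40.0, 39.0], [40.0, 39.5]]
flows = [1.0, 2.0, 4.0, 8.0, 12.0]
print(knn_predict(features, flows, query=[40.0, 38.2], k=3))
```

In a real application the features would be scaled before computing distances, and k would be chosen by cross-validation.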

Recurrent R-U-Net and convolutional LSTM are trained to predict the accuracy in flow rates using channelized geological models to predict dynamic subsurface flows. 69 The liquid flow process is optimized using the flower pollination algorithm along with an ANN for testing of data subsets, and cross-validations are done to estimate the accuracy and optimize the liquid flow process. 70 Oil and gas well tests are done using data processing procedures through data acquired from good sensors and the combination of the recurrent neural network (RNN) with the CNN and LST network experiment on the data collected. 71 Coriolis mass flowmeter vibration signal and mass flow rate samples are fed to the LSTM, RNN, and ANN for evaluating the performance of a Coriolis mass flowmeter. 72 Gas valve leakage rate prediction is performed using a deep belief network prediction model with the available plug valve, and ball valve signals are collected using acoustic emission technology. The prediction model is proven to be superior in terms of accuracy in the prediction of the gas leakage rate. 73 2D CNNs are used to learn spatial features and to capture temporal correlations from high-speed images of combustion instability. 78

In this paper, first, the evolution of conventional flowmeters is summarized with their advantages and applications to identify the ultrasonic flowmeter as a better choice for flow rate measurement. Next, the impact of the ultrasonic flowmeter on maximizing the accuracy and performance in flow rate measurements is briefed. Following that, the usage of FPGAs/DSPs and their significant contributions toward flow measurements are surveyed from the recent literature. In addition, the support of deep learning frameworks and deep neural networks for the flow rate measurements is dealt with along with their future research prospects for developing economic, reliable, and accurate flow measurable ultrasonic meters for widespread groups of manufacturing and commercial applications.

Various industrial and commercial applications need flow measurement of liquid or gas. Based on their requirements, the mechanical, magnetic, pressure, optical, thermal, vortex, and ultrasonic meters are equipped for measurement. Since accuracy in flow measurement is the most significant factor in the present-day applications, mechanical, magnetic, and ultrasonic meters are performing better. In terms of repeatability, ultrasonic meters are performing much better as observed and summarized from the literature. The performance of the ultrasonic meter is improved by incorporating reconfigurable devices such as FPGAs, DSPs, and advanced processors for performance measurement. The computation of accuracy in flow measurement can be much improved by adopting deep neural networks in flow rate measurement. In the future, miniature bore ultrasonic sensors can be used for measuring the flow of liquid or gas using radar technology with high accuracy, stability, and repeatability for various applications.


- Online ISSN 2158-3226


Experimental Study of Ultrasonic Wave Propagation in a Long Waveguide Sensor for Fluid-Level Sensing

- ACOUSTIC METHODS

- Published: 29 May 2024

- Volume 60 , pages 132–143, ( 2024 )


- Abhishek Kumar ORCID: orcid.org/0000-0002-4752-9548 1 &

- Suresh Periyannan ORCID: orcid.org/0000-0001-7316-168X 1

This work reports an ultrasonic long waveguide sensor for measuring fluid level using the longitudinal L(0,1), torsional T(0,1), and flexural F(1,1) wave modes. These wave modes were transmitted and received simultaneously using a stainless-steel wire. A long waveguide (>12 m) covers a broader region of interest and suits hostile process-industry applications such as fluid level and temperature measurement. We used diesel, water, and glycerin to measure fluid levels based on the sensor’s reflection factors obtained from time-domain and frequency-domain signals. We examined the impact of wave mode attenuation on long waveguide sensor design while changing the waveguide length. Initially, we obtained the L(0,1) and T(0,1) mode reflections from a 12.6 m waveguide with a shear transducer fixed to one end at a 45° orientation. Subsequently, to identify the travel distances of all wave modes (especially the F mode), we investigated the guided wave propagation characteristics (attenuation, ultrasonic velocity, and frequency of all wave modes) by cutting the waveguide systematically at 1 m intervals, starting from its original length of 12.6 m, and analyzing the A-scan signals at each length of the single waveguide. With appropriately chosen ultrasonic parameters, this simple and cost-effective long waveguide technique can monitor large fluid depths and temperature in power plants and in the oil and petrochemical industries.
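The velocity and attenuation characteristics mentioned above are typically derived from pulse-echo A-scan data. A minimal sketch follows, assuming an end reflection measured in pulse-echo mode (so the wave travels twice the waveguide length) and identical transducer coupling across measurements; the function names and numerical values are illustrative and are not results from this work.

```python
import math


def pulse_echo_velocity(waveguide_len_m, round_trip_time_s):
    """Wave mode velocity (m/s) from the end-reflection arrival time: v = 2L / t."""
    if round_trip_time_s <= 0:
        raise ValueError("round-trip time must be positive")
    return 2.0 * waveguide_len_m / round_trip_time_s


def attenuation_db_per_m(amp_short, amp_long, len_short_m, len_long_m):
    """Attenuation (dB/m) from end-echo amplitudes at two waveguide lengths.

    The extra round-trip path between the two measurements is 2 * (L_long - L_short).
    """
    extra_path_m = 2.0 * (len_long_m - len_short_m)
    if extra_path_m <= 0 or amp_short <= 0 or amp_long <= 0:
        raise ValueError("lengths must increase and amplitudes must be positive")
    return 20.0 * math.log10(amp_short / amp_long) / extra_path_m


# Illustrative numbers only: 12.6 m waveguide, 5 ms round trip, echo halves over 1 m.
print(f"velocity = {pulse_echo_velocity(12.6, 5e-3):.0f} m/s")
print(f"attenuation = {attenuation_db_per_m(1.0, 0.5, 11.6, 12.6):.2f} dB/m")
```

Repeating the two calculations for each cut length and each wave mode gives the per-mode velocity and attenuation trends that determine how far a mode can usefully travel.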


This work was supported by ongoing institutional funding. No additional grants to carry out or direct this particular research were obtained.

Author information

Authors and affiliations.

Department of Mechanical Engineering, National Institute of Technology, 506004, Warangal, Telangana, India

Abhishek Kumar & Suresh Periyannan

Corresponding author

Correspondence to Abhishek Kumar .

Ethics declarations

The authors declare that they have no conflicts of interest.

Additional information

Publisher’s note

Pleiades Publishing remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Kumar, A., Periyannan, S. Experimental Study of Ultrasonic Wave Propagation in a Long Waveguide Sensor for Fluid-Level Sensing. Russ J Nondestruct Test 60 , 132–143 (2024). https://doi.org/10.1134/S1061830923600880

Received : 29 September 2023

Revised : 26 December 2023

Accepted : 28 December 2023

Published : 29 May 2024

Issue Date : February 2024

DOI : https://doi.org/10.1134/S1061830923600880

- ultrasonic sensor

- long waveguide

- level measurement

- attenuation

- frequency domain

Sensor-Based Assistive Devices for Visually-Impaired People: Current Status, Challenges, and Future Directions

The World Health Organization (WHO) reported that there are 285 million visually-impaired people worldwide. Among these individuals, there are 39 million who are totally blind. There have been several systems designed to support visually-impaired people and to improve the quality of their lives. Unfortunately, most of these systems are limited in their capabilities. In this paper, we present a comparative survey of the wearable and portable assistive devices for visually-impaired people in order to show the progress in assistive technology for this group of people. Thus, the contribution of this literature survey is to discuss in detail the most significant devices that are presented in the literature to assist this population and highlight the improvements, advantages, disadvantages, and accuracy. Our aim is to address and present most of the issues of these systems to pave the way for other researchers to design devices that ensure safety and independent mobility to visually-impaired people.

1. Introduction

The World Health Organization (WHO) reported that there are 285 million visually-impaired people worldwide, of whom 39 million are blind [ 1 ]. In the USA, more than 1.3 million people are completely blind and approximately 8.7 million are visually-impaired [ 2 ]. Of these, 100,000 are students, according to the American Foundation for the Blind [ 2 ] and National Federation for the Blind [ 3 ]. Over the past years, blindness caused by disease has decreased owing to the success of public health actions. However, the number of blind people over 60 years old is increasing by 2 million per decade, and all of these numbers are estimated to double by 2020 [ 4 ].

The need for assistive devices for navigation and orientation has increased. The simplest and most affordable navigation tools available are trained dogs and the white cane [ 5 ]. Although these tools are very popular, they cannot provide blind people with all the information and features for safe mobility that are available to people with sight [ 6 , 7 ].

1.1. Assistive Technology

All the systems, services, devices and appliances that disabled people use to help in their daily lives, make their activities easier, and provide safe mobility are included under one umbrella term: assistive technology [ 8 ].

In the 1960s, assistive technology was introduced to solve daily problems related to information transmission (such as personal care) [ 9 ] and to provide navigation and orientation aids for mobility assistance [ 10 , 11 , 12 ].

In Figure 1 , visual assistive technology is divided into three categories: vision enhancement, vision substitution, and vision replacement [ 12 , 13 ]. This assistive technology became available to blind people through electronic devices that detect and localize objects, using sensors to give users a sense of the external environment. The sensors also aid the user with the mobility task by determining the dimensions, range and height of objects [ 6 , 14 ].

Classification of electronic devices for visually-impaired people.

The vision replacement category is more complex than the other two categories; it deals with both medical and technological issues. Vision replacement involves displaying information directly to the visual cortex of the brain or through an ocular nerve [ 12 ]. Vision enhancement and vision substitution, by contrast, are similar in concept. In vision enhancement, the camera input is processed and the results are displayed visually. In vision substitution, the result is a non-visual display, which can be vibration, audio or both, relying on the senses of hearing and touch, which the blind user can easily control and feel.

The main focus of this literature survey is the vision substitution category, including its three subcategories: Electronic Travel Aids (ETAs), Electronic Orientation Aids (EOAs), and Position Locator Devices (PLDs). Our in-depth study of the devices that provide the aforementioned services allows us to propose a fair taxonomy that can classify any proposed technique among the others. The classification of electronic devices for visually-impaired people is shown in Figure 1 . Each of the three categories tries to enhance blind people’s mobility, with slight differences.

1.1.1. Electronic Travel Aids (ETAs)

These are devices that gather information about the surrounding environment through sensor cameras, sonar, or laser scanners and transfer it to the user [ 15 , 16 ]. The rules of ETAs according to the National Research Council [ 6 ] are:

- (1) Determining obstacles around the user’s body from the ground to the head;

- (2) Giving the user information about the walking surface, such as gaps or textures;

- (3) Finding items surrounding the obstacles;

- (4) Providing information about the distance between the user and the obstacle with essential direction instructions;

- (5) Proposing notable sight locations in addition to identification instructions;

- (6) Providing information that enables self-orientation and a mental map of the surroundings.

1.1.2. Electronic Orientation Aids (EOAs)

These are devices that provide pedestrians with directions in unfamiliar places [ 17 , 18 ]. The guidelines of EOAs are given in [ 18 ]:

- (1) Defining the route to select the best path;

- (2) Tracing the path to approximately calculate the location of the user;

- (3) Providing mobility instructions and path signs to guide the user and develop her/his mental picture of the environment.

1.1.3. Position Locator Devices (PLDs)

These are devices that determine the precise position of their holder, such as devices that use GPS technology.

Our focus in this paper is on the most significant and latest systems that provide critical services for visually-impaired people including obstacle detection, obstacle avoidance and orientation services containing GPS features.

In Section 2 , a brief description is provided for the most significant electronic devices. Analysis of the main features for each studied device is presented in Section 3 . Section 4 concludes this review with discussion about the systems’ evaluation. The final section includes future directions.

2. The Most Significant Electronic Devices for Visually-impaired People

Most electronic aids that provide services for visually-impaired people depend on data collected from the surrounding environment (via laser scanners, camera sensors, or sonar) and transmitted to the user in tactile format, audio format, or both. Opinions differ on which feedback type is better, and this is still an open topic.

However, regardless of the services that any particular system provides, some basic features are required for it to offer fair performance. These features can be the key to measuring the efficiency and reliability of any electronic device that provides navigation and orientation services for visually-impaired people. Therefore, we present in this section a list of the most important and latest systems, with a brief summary of each: what the system is, its prototype, briefly how it works, the well-known techniques used in it, and its advantages and disadvantages. Those devices are classified in Figure 1 based on the features described in Table 1 . The comparative results based on these features are presented in the following section, with an answer to the question of which device is the most efficient and desirable.

The most important features that correspond to the user’s needs.

● Smart Cane

Wahab et al. studied the development of the Smart Cane product for detecting objects and producing accurate navigation instructions [ 19 ]. The Smart Cane was originally presented by students at Central Michigan University. The design of the Smart Cane is shown in Figure 2 . It is a portable device equipped with a sensor system consisting of ultrasonic sensors, a microcontroller, a vibrator, a buzzer, and a water detector to guide visually-impaired people. It uses servo motors, ultrasonic sensors, and a fuzzy controller to detect obstacles in front of the user and then provides instructions through voice messages or hand vibration.

The Smart Cane prototype [ 19 ].

The servo motors are used to give precise position feedback, and the ultrasonic sensors are used to detect obstacles. The fuzzy controller can therefore make accurate decisions to navigate the user, based on the information received from the servo motors and ultrasonic sensors.

The output of the Smart Cane depends on gathering the above information to produce audio messages through the speaker to the user. In addition, hearing impaired people have special vibrator gloves that are provided with the Smart Cane. There is a specific vibration for each finger, and each one has a specific meaning.

The Smart Cane has achieved its goals of detecting objects and obstacles and producing the needed feedback. As shown in Figure 2 , the Smart Cane is easily carried and easily bent. However, the water sensor does not detect water unless it is 0.5 cm or deeper, and the water detector’s buzzer does not stop until the sensor is dried or wiped. The authors of the paper made some recommendations for the tested system: installing a power supply meter to monitor the power status, and adding a buzzer timer that limits the buzzing period to solve the buzzer issue.

● Eye Substitution

Bharambe et al. developed an embedded device to act as an eye substitution for the vision impaired people (VIP) that helps in directions and navigation as shown in Figure 3 [ 20 ]. Mainly, the embedded device is a TI MSP 430G2553 micro-controller (Texas Instruments Incorporated, Dallas, TX, USA). The authors implemented the proposed algorithms using an Android application. The role of this application is to use GPS, improved GSM, and GPRS to get the location of the person and generate better directions. The embedded device consists of two HC-SR04 ultrasonic sensors (Yuyao Zhaohua Electric Appliance Factory, Yuyao, China), and three vibrator motors.

The prototype of the eye substitution device [ 20 ].

The ultrasonic sensors send a sequence of ultrasonic pulses. If an obstacle is detected, the sound is reflected back to the receiver, as shown in Figure 4 . The micro-controller processes the readings of the ultrasonic sensors and activates the motors by sending a pulse-width-modulated signal. It also provides low power consumption [ 21 ].

Reflection of sequence of ultrasonic pulses between the sender and receiver.
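The pulse-echo principle described above can be sketched in a few lines. This is a minimal illustration, not the authors' firmware: the speed of sound, the 4 m maximum range, the linear distance-to-duty mapping, and both function names are assumptions introduced here.

```python
# Assumed speed of sound in dry air at about 20 degrees C.
SPEED_OF_SOUND_M_PER_S = 343.0


def echo_to_distance_m(echo_time_s: float) -> float:
    """Convert a round-trip echo time to a one-way distance in metres."""
    if echo_time_s < 0:
        raise ValueError("echo time must be non-negative")
    # The pulse travels to the obstacle and back, so halve the path length.
    return SPEED_OF_SOUND_M_PER_S * echo_time_s / 2.0


def distance_to_duty_cycle(distance_m: float, max_range_m: float = 4.0) -> float:
    """Map a distance to a vibration-motor PWM duty cycle in [0, 1].

    Hypothetical linear mapping: the nearer the obstacle, the stronger
    the vibration; beyond max_range_m the motor stays off.
    """
    if distance_m >= max_range_m:
        return 0.0
    return 1.0 - max(distance_m, 0.0) / max_range_m


if __name__ == "__main__":
    d = echo_to_distance_m(0.01)  # a 10 ms round trip
    print(round(d, 3), round(distance_to_duty_cycle(d), 3))
```

A real device would also need to handle missing echoes and sensor noise, which this sketch leaves out.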

The design of the device is light and very convenient. Furthermore, the system uses two sensors to overcome the issue of narrow cone angle as shown in Figure 5 . So, instead of covering two ranges, the ultrasonic devices cover three ranges. This does not only help in detecting obstacles, but also in locating them. However, the design could be better if the authors did not use the wood foundation that will be carried by the user most of the time. In addition, the system is not reliable and is limited to Android devices.

Ranges that are covered by the ultrasonic sensors [ 20 ].

● Fusion of Artificial Vision and GPS (FAV&GPS)

An assistive device for blind people was introduced in [ 22 ] to improve mapping of the user’s location and positioning of surrounding objects using two functions, based on a map-matching approach and on artificial vision [ 23 ]. The first function helps in locating the required object and allows the user to give instructions by moving her/his head toward the target. The second helps in the automatic detection of visual targets. As shown in Figure 6 , the device is worn on the user’s head and consists of two Bumblebee stereo cameras installed on a helmet for video input, a GPS receiver, headphones, a microphone, and an Xsens Mti tracking device for motion sensing. The system processes the video stream, handled as 320 × 240 pixel images, using the SpikNet recognition algorithm [ 24 ] to locate the visual features.

An assistive device for blind people based on a map matching approach and artificial vision [ 22 ].

For fast localization and detection of such visual targets, this system integrates the Global Position System (GPS), a modified Geographical Information System (GIS) [ 25 ] and vision-based positioning. This design can improve the performance of navigation systems in areas where the signal is degraded. Therefore, this system can be combined with any navigation system to overcome navigation issues in such areas.

Because commercial GIS lack some information about pedestrian mobility, this system maps the GPS signal onto the adapted GIS to estimate the user’s current position, as shown in Figure 7 . The target’s 3D position is calculated using the lens matrices and stereoscopic variance. After detecting the user and target positions, the vision agent sends the ID of the target and its 3D coordinates.

The result of mapping both commercial Geographical Information System (GIS) and Global Position System (GPS)’s signals is P1. P2 is the result of mapping the signals of GPS with adapting GIS [ 22 ].

The rotation matrix for each angle is multiplied with the target coordinates in the head reference frame ( x , y , z ) to obtain the target’s coordinates in the map reference frame ( x − , y − , z − ), as given in Equation (1). After that, the design uses a Geographic Information System (GIS) that contains the geolocated positions of all targets to get the longitude and latitude of landmarks. Based on this information, the authors could compute the user’s coordinates in World Geodetic System coordinates (WGS84). The results are delivered in audio format through the speaker that the device is equipped with.
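The head-to-map transformation can be illustrated with elementary rotation matrices. Since Equation (1) is not reproduced here, the sketch below is only one plausible reading: the yaw-pitch-roll (Z-Y-X) composition order, the absence of a translation term, and all function names are assumptions, not the authors' formulation.

```python
import math


def rot_x(a):
    """Rotation about the x axis (roll) by angle a in radians."""
    c, s = math.cos(a), math.sin(a)
    return [[1, 0, 0], [0, c, -s], [0, s, c]]


def rot_y(a):
    """Rotation about the y axis (pitch) by angle a in radians."""
    c, s = math.cos(a), math.sin(a)
    return [[c, 0, s], [0, 1, 0], [-s, 0, c]]


def rot_z(a):
    """Rotation about the z axis (yaw) by angle a in radians."""
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0], [s, c, 0], [0, 0, 1]]


def matmul(a, b):
    """Multiply two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def apply(m, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return tuple(sum(m[i][k] * v[k] for k in range(3)) for i in range(3))


def head_to_map(target_head, yaw, pitch, roll):
    """Rotate target coordinates from the head frame into the map frame.

    Assumed composition order: R = Rz(yaw) * Ry(pitch) * Rx(roll).
    """
    r = matmul(rot_z(yaw), matmul(rot_y(pitch), rot_x(roll)))
    return apply(r, target_head)


if __name__ == "__main__":
    # A target 1 m straight ahead while the head is turned 90 degrees in yaw.
    print(head_to_map((1.0, 0.0, 0.0), math.pi / 2, 0.0, 0.0))
```

In such a scheme the head angles would come from the motion-tracking device, and the rotated coordinates would then be offset by the user's estimated position before being matched against the GIS.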

The use of the modified GIS shows positive results and better estimation of the user’s position compared to the commercial GIS, as shown in Figure 7 . However, the system has not been tested with navigation systems to ensure its performance when integrated with one. So, whether it will enhance navigation systems or not is unknown.

● Banknote Recognition (BanknoteRec)

An assistive device for blind people was implemented in [ 26 ] to help them classify the type of banknotes and coins. The system was built based on three models: input (OV6620 Omni vision CMOS camera), process (SX28 microcontroller), and output (speaker).

RGB color model is used to specify the type of the banknote by calculating the average red, green, and blue color. The function of the microcontroller (IV-CAM) with the camera mounted on a chip is used to extract the desired data from the camera’s streaming video. Then, the mean color and the variance data will be gathered for next step when MCS-51 microcontroller starts to process this gathered information. Based on the processing results, IC voice recorder (Aplus ap8917) records the voice of each kind of banknote and coin.

This system compares some samplings of each kind of a banknote using RGB model. The best matching banknote will be the result of the system. However, the coin is identified based on the size by computing the number of pixels. To find the type of the coin, the average of pixel number of each coin needs to be calculated. The best matching resultant coin will be the result of the device through the speaker.
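The color-matching step can be sketched as a nearest-reference lookup on the mean RGB value. This is a simplified illustration under stated assumptions: it uses the mean color only (the described system also gathers variance data), the squared Euclidean distance is a choice made here, and the reference colors and names below are invented for the example rather than taken from real currency.

```python
def mean_rgb(pixels):
    """Return the average (R, G, B) of a non-empty list of RGB tuples."""
    n = len(pixels)
    if n == 0:
        raise ValueError("at least one pixel is required")
    return tuple(sum(p[i] for p in pixels) / n for i in range(3))


def classify_banknote(pixels, references):
    """Return the name of the reference whose mean color is closest.

    references maps a banknote name to its stored mean (R, G, B).
    Closeness is the squared Euclidean distance in RGB space.
    """
    mean = mean_rgb(pixels)

    def sq_dist(name):
        return sum((m - r) ** 2 for m, r in zip(mean, references[name]))

    return min(references, key=sq_dist)


if __name__ == "__main__":
    # Hypothetical reference colors, for illustration only.
    refs = {"note_red": (200, 50, 50), "note_green": (50, 200, 50)}
    sample = [(190, 60, 40), (210, 40, 60)]
    print(classify_banknote(sample, refs))
```

The same nearest-match idea would apply to coins, with the pixel count of the coin region taking the place of the mean color.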

The accuracy of the results is 80%, limited by two factors: the color difference between new and old currency, and lighting that differs from natural light. Moreover, the device was only tested on Thai currency. Therefore, we cannot guarantee the system’s performance on other types of currency. The approach also presupposes that each kind of banknote has a unique color and that coins differ in size, so it may fail on currencies where this does not hold.

Recently, similar work was presented in [ 27 ]. This portable device detects Euro banknotes with reasonable accuracy and recognizes them with good accuracy by integrating well-known computer vision techniques. However, the system has a very limited scope: coins were not considered for detection and recognition, and fake banknotes are not detected.

● TED

A design of a tiny dipole antenna was developed in [ 28 ] to be connected within Tongue-placed electro-tactile device (TED) to assist blind people in transmitting information and navigating. This antenna is designed to establish a wireless communication between the TED device and matrix of electrodes. The design of the antenna in front and the back is shown in Figure 8 a–d. Bazooka Balun is used to reduce the effect of the cable on a small antenna [ 29 ].

( a ) The design of the antenna at the front and ( b ) at the back; ( c ) fabricated antenna at the front and ( d ) at the back [ 30 ].

The idea of the TED system, later designed in [ 30 ], is a development of the Paul Bach-Y-Rita system into a tiny wireless system. The visual information from all video inputs is displayed on a tactile display unit.

The design of this system, shown in Figure 9 , is based on three main parts: sunglasses with an object-detection camera, the tongue electro-tactile device (TED), and a host computer. The device contains an antenna to support wireless communication in the system, a matrix of electrodes to help the blind person sense through the tongue, a central processing block (CPU), a wireless transmission block, an electrode controlling block, and a battery. A matrix of 33 electrodes, distributed into 8 pulses, is placed on the blind person’s tongue as shown in Figure 10 , and the remaining components are fabricated into a circuit. Each pulse corresponds to a specific direction.

Tongue-placed electro-tactile system with sunglasses carries object detection camera [ 28 ] ( a ) sunglasses with detective camera of objects; ( b ) tongue electro tactile device.

( a ) Matrix of electrode; ( b ) Different eight directions for the matrix of electrodes [ 30 ].

The image signals sent from the camera to the electrode matrix are received by the host computer first and converted into interpretable information. This converted information is then received by the wireless transmission block of the TED device, as shown in Figure 11 . Next, the central processing block processes the image signal into an encoded signal, which the electrode controlling block then processes into a control signal. Finally, the control signal is sent to the electrodes.

The overall design of the system [ 30 ].

Although this device meets its goal and shows effective performance, the results show that the antenna is not completely omni-directional, which indicates that the system is not optimized and requires further tests. In addition, the device was tested on a number of blind people. The results show that users did not respond to some of the pulses, for example pulse number 7, which indicates that the system is not delivering the pulse to that particular point.

● CASBlip

A wearable aid system for blind people (CASBlip) was proposed in [ 31 ]. The aims of this design are to provide object detection, orientation, and navigation aid for both partially and completely blind people. This system has two important modules: a sensor module and an acoustic module. The sensor module contains a pair of glasses that includes 1 × 64 3D CMOS image sensors and laser light beams for object detection, as shown in Figure 12 . In addition, a function implemented on a Field Programmable Gate Array (FPGA) controls the reflection of the laser light beams from surrounding objects to the camera lenses, calculates the distance, acquires the data, and controls the application software. Another FPGA function, implemented within the acoustic module, processes the environmental information to locate the object and converts this information into sounds that are received through stereophonic headphones.

Design of the sensor module [ 31 ].

The developed acoustic system in [ 31 ] allows the user to choose the route and path after detecting the presence of the object and user. However, the small range of this device can cause a serious incident. The system was tested on two different groups of blind people. However, the results of outdoor experiments were not as good as the indoor experiments. This was because of the external noise. One of the recommendations to further develop this system is to use stereovision or add more sensors for improving the image acquisition.

● RFIWS

A Radio Frequency Identification Walking Stick (RFIWS) was designed in [ 32 ] to help blind people navigate on the sidewalk. This system helps in detecting and calculating the approximate distance between the sidewalk border and the blind person. Radio Frequency Identification (RFID) is used to transmit and receive information through radio waves [ 33 ]. The RFID tag, reader, and middleware are the main components of RFID technology.

A number of RFID tags are placed in the middle of the sidewalk at an equal and specific distance from each other, and an RFID reader is attached to the stick to detect and process the received signals. Sounds and vibrations are produced to notify the user of the distance between himself/herself and the border of the sidewalk. Louder sounds are generated as the user gets closer to the border. Figure 13 shows the distance of frequency detection (Y) and the width of the sidewalk (X). Each tag needs to be tested separately because of the different detection ranges.

Distance of the frequency detection on sidewalk [ 32 ].

RFID technology has a perfect reading function between the tags and readers, which makes the device reliable at the level of detection. However, each tag needs a specific range, which requires a lot of individual testing and limits the scope of the system. The system can also easily be stopped from working if the tags are wrapped or covered, which prevents them from receiving the radio waves.

● A Low Cost Outdoor Assistive Navigation System (LowCost Nav)

A navigator with a 3D sound system was developed in [ 34 ] to help blind people navigate. The device is packed on the user’s waist with a Raspberry Pi, a GPS receiver and three main buttons to run the system, as shown in Figure 14 .

The prototype of the proposed device [ 34 ].

The user can select a comfortable voice from a recorded list to receive the navigation steps in audible form. The device thus provides voice prompts and speech recognition. The system calculates the distance between the user and the object using a gyroscope and a magnetic compass, and the Raspberry Pi controls the navigation process. Both the Mo Nav and Geo-Coder-US modules were used for pedestrian route generation. The system works as follows: the user can state the desired address through the microphone, or use one of the three buttons if the address is already stored in the system. The user presses the up button to choose a stored address, e.g., home, or presses the down button to record a new address. The middle button is pressed to continue once the device has confirmed that the selected address is correct.

The system is composed of the following main modules: the loader, which is the controller of the system; the initializer, which verifies the existence of the required data and libraries; the user interface, which receives the desired address from the user; the address query, which translates the entered address into geographic coordinates; the route query, which obtains the user’s current location from GPS; and the route traversal, which gives the user audible instructions to reach the destination.

This device performs well in residential areas, as shown in Figure 15a. It is also inexpensive, which makes it affordable for low-income people. In addition, the device is light and easy to carry. However, the device performs poorly in civilian areas with tall buildings, owing to the low accuracy of the GPS receiver used, as shown in Figure 15b.

( a ) The results of the device’s orientation in a residential area; ( b ) The results of the device’s orientation in a civilian area [ 34 ].

● ELC

The electronic long cane (ELC), presented by A.R. Garcia et al. as a mobility aid for blind people [ 35 ], is based on haptic technology. The ELC is a development of the traditional cane that aims to provide accurate detection of the objects around the user. A small grip on the cane, shown in Figure 16, contains an embedded electronic circuit that includes an ultrasonic sensor for detection, a micro-motor actuator as the feedback interface, and a 9 V battery as the power supply. This grip is able to detect obstacles above the waistline of the blind person. Tactile feedback in the form of vibration is produced as a warning of a close obstacle, and the frequency of the feedback increases as the blind person gets closer to the obstacle. Figure 17 shows how the ELC can help a blind person detect an obstacle above the waistline; such obstacles are one of the causes of serious injury for people who are visually impaired or completely blind.

The prototype of grip [ 35 ].

The proposed device for enhanced spatial sensitivity [ 35 ].

The ELC was tested on eight blind volunteers. Physical obstacles, information obstacles and cultural obstacles were the main categories used for obstacle classification. The results were compiled from a quiz taken by the blind people who used the device, and they showed that the device is effective at detecting physical obstacles above the waistline of the blind person. However, the device helps a blind person only in detecting obstacles, not in orientation. The blind person therefore still needs to identify the path himself/herself and rely on the traditional cane for navigation, as shown in Figure 17.

● Cognitive Guidance System (CG System)

Landa et al. proposed a system for guiding blind people through structured environments [ 36 ]. This design uses a Kinect sensor and stereoscopic vision to calculate the distance between the user and the obstacle, together with Mamdani-type fuzzy decision rules and the vanishing point to guide the user along the path.

The proposed system consists of two video cameras (Sony 1/3” progressive scan CCD) and one laptop. The analyzed detection range extends beyond 4 m, which was obtained using stereoscopy and the Kinect to compress the cloud of 3D points in the range from 40 cm to 4 m in order to calculate the vanishing point. The vanishing point is used in this system as a virtual compass to direct the blind person through the structured environment. Fuzzy decision rules are then applied to avoid obstacles.

As a first step, the system scans for planes in the range between 1.5 m and 4 m. For better performance, the system processes 25 frames per second. The Canny filter is then used for edge detection. Once the edges are defined, the result is used to calculate the vanishing point. Next, the device obtains the 3D Euclidean orientation from the Kinect sensor and projects it onto the 2D image, which gives the direction to the goal point.
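As an illustration of the vanishing-point step, the following minimal sketch estimates a vanishing point as the least-squares intersection of several straight lines. It assumes that line segments have already been extracted from the edge map (the extraction method is not specified in the text), and the function names `line_from_segment` and `vanishing_point` are hypothetical. It is not the implementation used in [ 36 ].

```python
from math import hypot


def line_from_segment(x1, y1, x2, y2):
    """Return (a, b, c) such that a*x + b*y = c, with (a, b) a unit normal."""
    dx, dy = x2 - x1, y2 - y1
    length = hypot(dx, dy)
    if length == 0:
        raise ValueError("degenerate segment")
    a, b = -dy / length, dx / length
    return a, b, a * x1 + b * y1


def vanishing_point(segments):
    """Least-squares intersection of the lines through the given segments.

    Minimizes the sum of squared perpendicular distances to all lines by
    solving the 2x2 normal equations with Cramer's rule.
    """
    saa = sab = sbb = sac = sbc = 0.0
    for seg in segments:
        a, b, c = line_from_segment(*seg)
        saa += a * a
        sab += a * b
        sbb += b * b
        sac += a * c
        sbc += b * c
    det = saa * sbb - sab * sab
    if abs(det) < 1e-12:
        raise ValueError("lines are (nearly) parallel; no finite vanishing point")
    x = (sac * sbb - sab * sbc) / det
    y = (saa * sbc - sab * sac) / det
    return x, y


# Hypothetical corridor edges (pixel coordinates) that converge at (320, 240).
segments = [
    (0, 480, 160, 360),
    (640, 480, 480, 360),
    (320, 480, 320, 400),
]
vp = vanishing_point(segments)
```

The parallel-line check reflects the limitation noted for this system: when few converging lines are visible, as is common outdoors, no reliable vanishing point can be computed.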

This work implemented 49 fuzzy rules, which cover only 80 configurations. Moreover, the vanishing point can be computed only from existing lines, which are rare outdoors. This indicates that the system is not suitable for outdoor use. The perception capabilities of the system also need to be extended so that it can detect spatial landmarks.

● Ultrasonic Cane as a Navigation Aid (UltraCane)

As a development of the C-5 laser cane [ 37 ], Krishna Kumar et al. deployed an ultrasonic-based cane to aid blind people [ 38 ]. The aim of this work is to replace the laser with ultrasonic sensors in order to avoid the risks associated with the laser. This cane is able to detect both ground and aerial obstacles.

The prototype of this device, shown in Figure 18a, is based on a lightweight cane, three ultrasonic transceivers, an X-bee-S1 transceiver module, two Arduino UNO microcontrollers, three LED panels, and a piezo buzzer. The purpose of the three ultrasonic sensors is to detect ground and aerial obstacles in the range of 5 cm to 150 cm. Figure 18b shows the process of detecting an object within a specific distance. Once an ultrasonic wave is detected, a control signal is generated and triggers the echo pin of the microcontroller. The microcontroller records how long each pin stays high (the pulse width) and converts this duration to a distance. The control signal is transferred wirelessly by the X-bee to the receiving unit, which is worn on the shoulders. The buzzer sounds to alert the user according to how close the obstacle is (high alert, normal alert, low alert and no alert).

( a ) The prototype of the device; ( b ) Detection process of the obstacle from 5 cm to 150 cm [ 38 ].
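The conversion from echo pulse width to distance described for this cane can be sketched as follows. The speed of sound (about 343 m/s in air at room temperature) and the two alert thresholds are assumptions made for illustration; the text reports only the 5 cm to 150 cm detection range and the four alert levels, not the distances at which each level is triggered.

```python
# Assumed speed of sound in air at about 20 degrees C, in cm per microsecond.
SPEED_OF_SOUND_CM_PER_US = 0.0343
# Detection range reported in the text.
MIN_RANGE_CM, MAX_RANGE_CM = 5.0, 150.0


def echo_to_distance_cm(pulse_width_us):
    """Convert the echo pulse width (microseconds) to a one-way distance.

    The pulse covers the round trip to the obstacle and back, hence the
    division by two.
    """
    return pulse_width_us * SPEED_OF_SOUND_CM_PER_US / 2.0


def alert_level(distance_cm, high_cm=50.0, normal_cm=100.0):
    """Map a distance to an alert level (thresholds are hypothetical)."""
    if distance_cm < MIN_RANGE_CM or distance_cm > MAX_RANGE_CM:
        return "no alert"  # outside the reported detection range
    if distance_cm <= high_cm:
        return "high alert"
    if distance_cm <= normal_cm:
        return "normal alert"
    return "low alert"


near = echo_to_distance_cm(2000)   # roughly 34 cm
far = echo_to_distance_cm(10000)   # roughly 171 cm, beyond the range
levels = (alert_level(near), alert_level(far))
```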

The authors claimed that this device can serve as a navigational aid for blind people. However, the results showed that it is only an object detector with a small range. In addition, the detection of dynamic objects was not covered by this technique, which may lead to an accident. To improve this work, tele-instructions should be given to the user as a navigation aid, and GPS should be integrated in order to locate the user’s position.

● Obstacle Avoidance Using Auto-adaptive Thresholding (Obs Avoid using Thresholding)

An obstacle avoidance system for blind people using a Kinect depth camera was presented by Muhamad and Widyawan [ 39 ]. The prototype of the proposed system is shown in Figure 19a. Auto-adaptive thresholding is used to detect the obstacle and calculate its distance from the user. The main components of the system are a notebook with a USB hub, an earphone, and a Microsoft Kinect depth camera.

( a ) The prototype of the proposed system; ( b ) calculating threshold value and the distance of the closest object [ 39 ].

The Kinect transfers the raw data (depth information for each pixel) to the system. To increase efficiency, depth values closer than 800 mm or farther than 4000 mm are reset to zero. The depth image is then divided into three areas (left, middle, and right), and the auto-adaptive threshold generates the optimal threshold value for each area. Only 1 pixel from each 2 × 2 pixel block is used. This group of data is then classified and transformed into a depth histogram. A contrast function calculates a local maximum for each depth, as shown in Figure 19b, and the Otsu method is applied to find the peak threshold value [ 40 ]. An average function then determines the closest object in each area. Beeps are generated through the earphone when the obstacle is within 1500 mm. When it reaches 1000 mm, a voice recommendation is given to the blind person so that he/she takes the left, middle, or right path. The low accuracy of the Kinect at close range could reduce the performance of the system. Also, the results show that the auto-adaptive threshold cannot differentiate between objects as the distance between the user and the obstacle increases.
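The thresholding pipeline above can be illustrated with a simplified sketch. It assumes that depths below 800 mm or above 4000 mm are treated as invalid, keeps one pixel from every 2 × 2 block, and applies a plain Otsu threshold to a depth histogram for each of the three areas. The contrast-function step is omitted, and the 50 mm bin width and the function names are hypothetical, so this is not the method of [ 39 ] itself.

```python
def otsu_threshold(values, bin_width=50, lo=800, hi=4000):
    """Otsu's threshold (mm) over the valid depth values in [lo, hi].

    Returns None when there are no valid values.
    """
    n_bins = (hi - lo) // bin_width + 1
    hist = [0] * n_bins
    for v in values:
        if lo <= v <= hi:
            hist[int((v - lo) // bin_width)] += 1
    total = sum(hist)
    if total == 0:
        return None
    sum_all = sum(i * h for i, h in enumerate(hist))
    best_bin, best_var = 0, -1.0
    w0 = 0
    sum0 = 0
    for t in range(n_bins - 1):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        m0 = sum0 / w0
        m1 = (sum_all - sum0) / w1
        var_between = w0 * w1 * (m0 - m1) ** 2  # between-class variance (unnormalized)
        if var_between > best_var:
            best_var, best_bin = var_between, t
    # Upper edge of the last bin assigned to the "near" class.
    return lo + (best_bin + 1) * bin_width


def closest_per_area(depth, lo=800, hi=4000):
    """Mean depth of the near class in the left, middle and right areas.

    `depth` is a list of rows of depth values in mm.
    """
    sub = [row[::2] for row in depth[::2]]  # 1 pixel from each 2x2 block
    width = len(sub[0])
    bounds = [0, width // 3, 2 * width // 3, width]
    result = {}
    for name, a, b in zip(("left", "middle", "right"), bounds, bounds[1:]):
        vals = [v for row in sub for v in row[a:b] if lo <= v <= hi]
        t = otsu_threshold(vals, lo=lo, hi=hi)
        near = [v for v in vals if t is not None and v < t]
        result[name] = sum(near) / len(near) if near else None
    return result


# Hypothetical 4 x 12 depth image: objects on the left and right, nothing valid in the middle.
row = [1000, 1000, 3000, 3000, 0, 0, 0, 0, 1500, 1500, 3500, 3500]
areas = closest_per_area([row[:] for _ in range(4)])
```

The per-area result could then be compared with the 1500 mm and 1000 mm distances mentioned in the text to decide between a beep and a voice recommendation.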

● Obstacle Avoidance Using Haptics and a Laser Rangefinder (Obs Avoid using Haptics&Laser)

The use of a laser as a virtual white cane to help blind people was introduced by Daniel et al. [ 41 ]. The environment is scanned by a laser rangefinder and the feedback is sent to the user via a haptic interface. The user is able to sense obstacles several meters away without physical contact. The length of the virtual cane can be chosen by the user, but it is still limited. The main components of the proposed system, which is built on an electric wheelchair, are an MSI laptop with an Intel Core i7-740QM, a SICK laser rangefinder, an NVIDIA GTX460M graphics card, and a Novint Falcon haptic display. The software was developed on the open-source platform H3DAPI [ 42 ].

The wheelchair is controlled with a joystick using the right hand, and the environment is sensed with the Falcon (haptic interface) using the other hand, as shown in Figure 20. When the user starts the system, the rangefinder begins scanning the environment in front of the chair. It then calculates the distance between the user and the object using the laser beams. The distance information is transmitted to the laptop, which creates a 3-dimensional graph using the NVIDIA card and then transmits it to the haptic device.

The proposed system mounted on the special electronic wheelchair [ 41 ].

The results showed that the precise locations and angles of obstacles were difficult to determine, because users misunderstood the scale factor between the real world and the model world when interpreting the translation of the haptic grip.

● A Computer Vision System that Ensures the Autonomous Navigation (ComVis Sys)

A real-time obstacle detection system was presented in [ 43 ] to alert blind people and aid their mobility indoors and outdoors. The application runs on a smartphone attached to the blind person’s chest. The paper focuses on a technique for detecting and classifying static and dynamic objects, which was introduced in [ 44 ].

Using the detection technique in [ 44 ], the team was able to detect both static and dynamic objects in a video stream. The interest points, which are the pixels located at the center of each cell of an image grid, are selected first. The multiscale Lucas-Kanade algorithm then tracks these selected points. After that, the RANSAC algorithm is applied to these points recursively to detect the background motion. A number of clusters are then created to merge the outlines. The distance between the object and the video camera defines the state of the object as either normal or urgent.
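The idea of separating background motion from moving objects with RANSAC can be sketched as follows. This is a simplified illustration, not the method of [ 44 ]: it assumes the tracked points are already available as displacement vectors, models the background motion as a single translation (a deliberately simple stand-in for whatever motion model the authors used), and runs one RANSAC pass rather than applying it recursively. All names and parameter values are hypothetical.

```python
import random


def ransac_background_motion(flows, tol=1.0, iters=100, seed=0):
    """Estimate the dominant translation among point displacements.

    flows: list of (dx, dy) displacements, one per tracked point.
    Returns (model, inlier_indices, outlier_indices); the outliers are
    candidate points on independently moving objects.
    """
    if not flows:
        raise ValueError("no tracked points")
    rng = random.Random(seed)
    best_inliers = []
    for _ in range(iters):
        # Minimal sample: a single displacement defines a translation model.
        mx, my = rng.choice(flows)
        inliers = [
            i for i, (dx, dy) in enumerate(flows)
            if (dx - mx) ** 2 + (dy - my) ** 2 <= tol ** 2
        ]
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
    # Refit the model as the mean displacement of the consensus set.
    n = len(best_inliers)
    model = (
        sum(flows[i][0] for i in best_inliers) / n,
        sum(flows[i][1] for i in best_inliers) / n,
    )
    inlier_set = set(best_inliers)
    outliers = [i for i in range(len(flows)) if i not in inlier_set]
    return model, best_inliers, outliers


# Hypothetical displacements: eight background points and three object points.
flows = [
    (2.0, 0.0), (2.1, 0.1), (1.9, -0.1), (2.0, 0.1),
    (2.2, 0.0), (1.8, 0.0), (2.0, -0.1), (2.1, 0.0),
    (10.0, 5.0), (10.2, 5.1), (9.9, 4.8),
]
model, inliers, outliers = ransac_background_motion(flows)
```

A recursive variant would call the same function again on the outliers to find further coherent motions.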

The adapted HOG (Histogram of Oriented Gradients) descriptor was used as the recognition algorithm, integrated with the BoVW (Bag of Visual Words) framework. However, the images are resized according to the object type, as decided by the team. The descriptor is then computed on the interest points extracted from each group of images, and clusters containing the extracted features of all images are formed. After that, BoVW is applied to create a codebook for all K clusters: $W = \{w_1, w_2, \ldots, w_K\}$, where each $w_k$ is a visual word belonging to the system’s vocabulary. The workflow is illustrated in Figure 21.

The process of detection and recognition algorithm [ 43 ].

Each image is then divided into blocks by HOG, included in the training dataset, and mapped to the related visual word. Finally, an SVM classifier is used for training: each labeled sample is passed to the classifier so that it can be assigned to a specific category.
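The mapping from descriptors to visual words can be sketched as below. The sketch assumes a codebook is already available (for example, as cluster centers) and uses tiny two-dimensional vectors in place of real HOG descriptors; the function names are hypothetical and the SVM training step is not shown.

```python
def nearest_word(descriptor, codebook):
    """Index of the visual word closest to the descriptor (squared Euclidean distance)."""
    return min(
        range(len(codebook)),
        key=lambda k: sum((d - w) ** 2 for d, w in zip(descriptor, codebook[k])),
    )


def bovw_histogram(descriptors, codebook):
    """Normalized histogram of visual-word occurrences for one image."""
    counts = [0] * len(codebook)
    for d in descriptors:
        counts[nearest_word(d, codebook)] += 1
    total = sum(counts)
    return [c / total for c in counts] if total else [0.0] * len(codebook)


# Hypothetical codebook with K = 3 words and four descriptors from one image.
codebook = [(0.0, 0.0), (1.0, 1.0), (5.0, 5.0)]
descriptors = [(0.1, 0.0), (0.9, 1.2), (4.8, 5.1), (5.2, 4.9)]
hist = bovw_histogram(descriptors, codebook)
```

Each image is thus represented by a fixed-length histogram over the K visual words, which is the kind of feature vector a classifier such as an SVM can be trained on.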

Implementing the system on a smartphone makes it a great mobility aid for blind people, since today’s smartphones are light and easy to carry. Also, using the HOG descriptor to extract the features of each set of images makes the recognition process efficient, as the system not only detects the object but also recognizes its type using the clusters.

However, the fixed image size, which depends on the category, can make it challenging to detect the same object at a different size. Objects in dark places and highly dynamic objects cannot be detected, and smartphone videos are noisy. In addition, the test dataset of 4500 images with a dictionary of 4000 words is considered small. The system was tested on, and only works on, a Samsung S4.

● Silicon Eyes (Sili Eyes)

By adopting a GSM and GPS coordinator, Prudhvi et al. introduced an assistive navigator for blind people in [ 45 ]. It helps users determine their current location and then navigates them using haptic feedback. In addition, the user can obtain the time, the date and even the color of the objects in front of him/her in audio format. The proposed device is built into a silicon glove so that it is wearable, as shown in Figure 22.

The proposed system attached on silicon glove [ 45 ].

The prototype of the proposed device is based on a 32-bit Cortex-M3 microcontroller that controls the entire system, a 24-bit color sensor to recognize the colors of objects, a light/temperature sensor, and SONAR to measure the distance between the object and the user.

The system supports a touch keyboard using the Braille technique for entering information. After the user chooses the desired destination, he/she is directed along the road using a MEMS accelerometer and magnetometer. The instructions are sent through a headset connected to the device via an MP3 decoder. Using SONAR, the user is notified of the distance to the closest obstacle. In case of emergency, the current location of the disabled user is sent via SMS, using both GSM and GPS, to someone whose phone number has been provided by the user.