- Bipolar Disorder

- Therapy Center

- When To See a Therapist

- Types of Therapy

- Best Online Therapy

- Best Couples Therapy

- Best Family Therapy

- Managing Stress

- Sleep and Dreaming

- Understanding Emotions

- Self-Improvement

- Healthy Relationships

- Student Resources

- Personality Types

- Guided Meditations

- Verywell Mind Insights

- 2024 Verywell Mind 25

- Mental Health in the Classroom

- Editorial Process

- Meet Our Review Board

- Crisis Support

How to Write a Great Hypothesis

Hypothesis Definition, Format, Examples, and Tips

Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."

:max_bytes(150000):strip_icc():format(webp)/IMG_9791-89504ab694d54b66bbd72cb84ffb860e.jpg)

Amy Morin, LCSW, is a psychotherapist and international bestselling author. Her books, including "13 Things Mentally Strong People Don't Do," have been translated into more than 40 languages. Her TEDx talk, "The Secret of Becoming Mentally Strong," is one of the most viewed talks of all time.

:max_bytes(150000):strip_icc():format(webp)/VW-MIND-Amy-2b338105f1ee493f94d7e333e410fa76.jpg)

Verywell / Alex Dos Diaz

- The Scientific Method

Hypothesis Format

Falsifiability of a hypothesis.

- Operationalization

Hypothesis Types

Hypotheses examples.

- Collecting Data

A hypothesis is a tentative statement about the relationship between two or more variables. It is a specific, testable prediction about what you expect to happen in a study. It is a preliminary answer to your question that helps guide the research process.

Consider a study designed to examine the relationship between sleep deprivation and test performance. The hypothesis might be: "This study is designed to assess the hypothesis that sleep-deprived people will perform worse on a test than individuals who are not sleep-deprived."

At a Glance

A hypothesis is crucial to scientific research because it offers a clear direction for what the researchers are looking to find. This allows them to design experiments to test their predictions and add to our scientific knowledge about the world. This article explores how a hypothesis is used in psychology research, how to write a good hypothesis, and the different types of hypotheses you might use.

The Hypothesis in the Scientific Method

In the scientific method , whether it involves research in psychology, biology, or some other area, a hypothesis represents what the researchers think will happen in an experiment. The scientific method involves the following steps:

- Forming a question

- Performing background research

- Creating a hypothesis

- Designing an experiment

- Collecting data

- Analyzing the results

- Drawing conclusions

- Communicating the results

The hypothesis is a prediction, but it involves more than a guess. Most of the time, the hypothesis begins with a question which is then explored through background research. At this point, researchers then begin to develop a testable hypothesis.

Unless you are creating an exploratory study, your hypothesis should always explain what you expect to happen.

In a study exploring the effects of a particular drug, the hypothesis might be that researchers expect the drug to have some type of effect on the symptoms of a specific illness. In psychology, the hypothesis might focus on how a certain aspect of the environment might influence a particular behavior.

Remember, a hypothesis does not have to be correct. While the hypothesis predicts what the researchers expect to see, the goal of the research is to determine whether this guess is right or wrong. When conducting an experiment, researchers might explore numerous factors to determine which ones might contribute to the ultimate outcome.

In many cases, researchers may find that the results of an experiment do not support the original hypothesis. When writing up these results, the researchers might suggest other options that should be explored in future studies.

In many cases, researchers might draw a hypothesis from a specific theory or build on previous research. For example, prior research has shown that stress can impact the immune system. So a researcher might hypothesize: "People with high-stress levels will be more likely to contract a common cold after being exposed to the virus than people who have low-stress levels."

In other instances, researchers might look at commonly held beliefs or folk wisdom. "Birds of a feather flock together" is one example of folk adage that a psychologist might try to investigate. The researcher might pose a specific hypothesis that "People tend to select romantic partners who are similar to them in interests and educational level."

Elements of a Good Hypothesis

So how do you write a good hypothesis? When trying to come up with a hypothesis for your research or experiments, ask yourself the following questions:

- Is your hypothesis based on your research on a topic?

- Can your hypothesis be tested?

- Does your hypothesis include independent and dependent variables?

Before you come up with a specific hypothesis, spend some time doing background research. Once you have completed a literature review, start thinking about potential questions you still have. Pay attention to the discussion section in the journal articles you read . Many authors will suggest questions that still need to be explored.

How to Formulate a Good Hypothesis

To form a hypothesis, you should take these steps:

- Collect as many observations about a topic or problem as you can.

- Evaluate these observations and look for possible causes of the problem.

- Create a list of possible explanations that you might want to explore.

- After you have developed some possible hypotheses, think of ways that you could confirm or disprove each hypothesis through experimentation. This is known as falsifiability.

In the scientific method , falsifiability is an important part of any valid hypothesis. In order to test a claim scientifically, it must be possible that the claim could be proven false.

Students sometimes confuse the idea of falsifiability with the idea that it means that something is false, which is not the case. What falsifiability means is that if something was false, then it is possible to demonstrate that it is false.

One of the hallmarks of pseudoscience is that it makes claims that cannot be refuted or proven false.

The Importance of Operational Definitions

A variable is a factor or element that can be changed and manipulated in ways that are observable and measurable. However, the researcher must also define how the variable will be manipulated and measured in the study.

Operational definitions are specific definitions for all relevant factors in a study. This process helps make vague or ambiguous concepts detailed and measurable.

For example, a researcher might operationally define the variable " test anxiety " as the results of a self-report measure of anxiety experienced during an exam. A "study habits" variable might be defined by the amount of studying that actually occurs as measured by time.

These precise descriptions are important because many things can be measured in various ways. Clearly defining these variables and how they are measured helps ensure that other researchers can replicate your results.

Replicability

One of the basic principles of any type of scientific research is that the results must be replicable.

Replication means repeating an experiment in the same way to produce the same results. By clearly detailing the specifics of how the variables were measured and manipulated, other researchers can better understand the results and repeat the study if needed.

Some variables are more difficult than others to define. For example, how would you operationally define a variable such as aggression ? For obvious ethical reasons, researchers cannot create a situation in which a person behaves aggressively toward others.

To measure this variable, the researcher must devise a measurement that assesses aggressive behavior without harming others. The researcher might utilize a simulated task to measure aggressiveness in this situation.

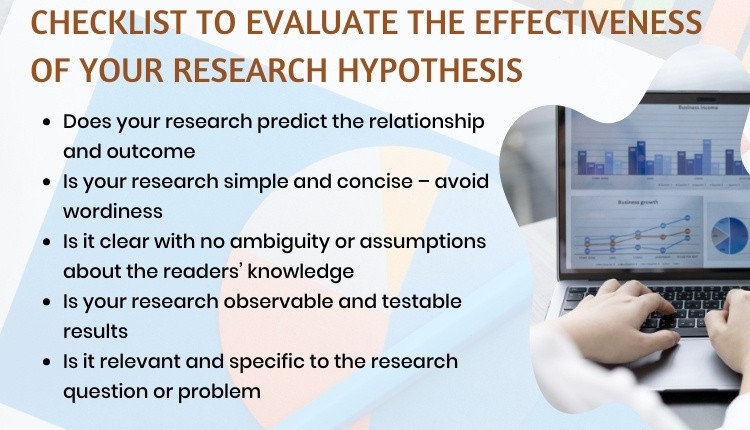

Hypothesis Checklist

- Does your hypothesis focus on something that you can actually test?

- Does your hypothesis include both an independent and dependent variable?

- Can you manipulate the variables?

- Can your hypothesis be tested without violating ethical standards?

The hypothesis you use will depend on what you are investigating and hoping to find. Some of the main types of hypotheses that you might use include:

- Simple hypothesis : This type of hypothesis suggests there is a relationship between one independent variable and one dependent variable.

- Complex hypothesis : This type suggests a relationship between three or more variables, such as two independent and dependent variables.

- Null hypothesis : This hypothesis suggests no relationship exists between two or more variables.

- Alternative hypothesis : This hypothesis states the opposite of the null hypothesis.

- Statistical hypothesis : This hypothesis uses statistical analysis to evaluate a representative population sample and then generalizes the findings to the larger group.

- Logical hypothesis : This hypothesis assumes a relationship between variables without collecting data or evidence.

A hypothesis often follows a basic format of "If {this happens} then {this will happen}." One way to structure your hypothesis is to describe what will happen to the dependent variable if you change the independent variable .

The basic format might be: "If {these changes are made to a certain independent variable}, then we will observe {a change in a specific dependent variable}."

A few examples of simple hypotheses:

- "Students who eat breakfast will perform better on a math exam than students who do not eat breakfast."

- "Students who experience test anxiety before an English exam will get lower scores than students who do not experience test anxiety."

- "Motorists who talk on the phone while driving will be more likely to make errors on a driving course than those who do not talk on the phone."

- "Children who receive a new reading intervention will have higher reading scores than students who do not receive the intervention."

Examples of a complex hypothesis include:

- "People with high-sugar diets and sedentary activity levels are more likely to develop depression."

- "Younger people who are regularly exposed to green, outdoor areas have better subjective well-being than older adults who have limited exposure to green spaces."

Examples of a null hypothesis include:

- "There is no difference in anxiety levels between people who take St. John's wort supplements and those who do not."

- "There is no difference in scores on a memory recall task between children and adults."

- "There is no difference in aggression levels between children who play first-person shooter games and those who do not."

Examples of an alternative hypothesis:

- "People who take St. John's wort supplements will have less anxiety than those who do not."

- "Adults will perform better on a memory task than children."

- "Children who play first-person shooter games will show higher levels of aggression than children who do not."

Collecting Data on Your Hypothesis

Once a researcher has formed a testable hypothesis, the next step is to select a research design and start collecting data. The research method depends largely on exactly what they are studying. There are two basic types of research methods: descriptive research and experimental research.

Descriptive Research Methods

Descriptive research such as case studies , naturalistic observations , and surveys are often used when conducting an experiment is difficult or impossible. These methods are best used to describe different aspects of a behavior or psychological phenomenon.

Once a researcher has collected data using descriptive methods, a correlational study can examine how the variables are related. This research method might be used to investigate a hypothesis that is difficult to test experimentally.

Experimental Research Methods

Experimental methods are used to demonstrate causal relationships between variables. In an experiment, the researcher systematically manipulates a variable of interest (known as the independent variable) and measures the effect on another variable (known as the dependent variable).

Unlike correlational studies, which can only be used to determine if there is a relationship between two variables, experimental methods can be used to determine the actual nature of the relationship—whether changes in one variable actually cause another to change.

The hypothesis is a critical part of any scientific exploration. It represents what researchers expect to find in a study or experiment. In situations where the hypothesis is unsupported by the research, the research still has value. Such research helps us better understand how different aspects of the natural world relate to one another. It also helps us develop new hypotheses that can then be tested in the future.

Thompson WH, Skau S. On the scope of scientific hypotheses . R Soc Open Sci . 2023;10(8):230607. doi:10.1098/rsos.230607

Taran S, Adhikari NKJ, Fan E. Falsifiability in medicine: what clinicians can learn from Karl Popper [published correction appears in Intensive Care Med. 2021 Jun 17;:]. Intensive Care Med . 2021;47(9):1054-1056. doi:10.1007/s00134-021-06432-z

Eyler AA. Research Methods for Public Health . 1st ed. Springer Publishing Company; 2020. doi:10.1891/9780826182067.0004

Nosek BA, Errington TM. What is replication ? PLoS Biol . 2020;18(3):e3000691. doi:10.1371/journal.pbio.3000691

Aggarwal R, Ranganathan P. Study designs: Part 2 - Descriptive studies . Perspect Clin Res . 2019;10(1):34-36. doi:10.4103/picr.PICR_154_18

Nevid J. Psychology: Concepts and Applications. Wadworth, 2013.

By Kendra Cherry, MSEd Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."

What Is A Research (Scientific) Hypothesis? A plain-language explainer + examples

By: Derek Jansen (MBA) | Reviewed By: Dr Eunice Rautenbach | June 2020

If you’re new to the world of research, or it’s your first time writing a dissertation or thesis, you’re probably noticing that the words “research hypothesis” and “scientific hypothesis” are used quite a bit, and you’re wondering what they mean in a research context .

“Hypothesis” is one of those words that people use loosely, thinking they understand what it means. However, it has a very specific meaning within academic research. So, it’s important to understand the exact meaning before you start hypothesizing.

Research Hypothesis 101

- What is a hypothesis ?

- What is a research hypothesis (scientific hypothesis)?

- Requirements for a research hypothesis

- Definition of a research hypothesis

- The null hypothesis

What is a hypothesis?

Let’s start with the general definition of a hypothesis (not a research hypothesis or scientific hypothesis), according to the Cambridge Dictionary:

Hypothesis: an idea or explanation for something that is based on known facts but has not yet been proved.

In other words, it’s a statement that provides an explanation for why or how something works, based on facts (or some reasonable assumptions), but that has not yet been specifically tested . For example, a hypothesis might look something like this:

Hypothesis: sleep impacts academic performance.

This statement predicts that academic performance will be influenced by the amount and/or quality of sleep a student engages in – sounds reasonable, right? It’s based on reasonable assumptions , underpinned by what we currently know about sleep and health (from the existing literature). So, loosely speaking, we could call it a hypothesis, at least by the dictionary definition.

But that’s not good enough…

Unfortunately, that’s not quite sophisticated enough to describe a research hypothesis (also sometimes called a scientific hypothesis), and it wouldn’t be acceptable in a dissertation, thesis or research paper . In the world of academic research, a statement needs a few more criteria to constitute a true research hypothesis .

What is a research hypothesis?

A research hypothesis (also called a scientific hypothesis) is a statement about the expected outcome of a study (for example, a dissertation or thesis). To constitute a quality hypothesis, the statement needs to have three attributes – specificity , clarity and testability .

Let’s take a look at these more closely.

Need a helping hand?

Hypothesis Essential #1: Specificity & Clarity

A good research hypothesis needs to be extremely clear and articulate about both what’ s being assessed (who or what variables are involved ) and the expected outcome (for example, a difference between groups, a relationship between variables, etc.).

Let’s stick with our sleepy students example and look at how this statement could be more specific and clear.

Hypothesis: Students who sleep at least 8 hours per night will, on average, achieve higher grades in standardised tests than students who sleep less than 8 hours a night.

As you can see, the statement is very specific as it identifies the variables involved (sleep hours and test grades), the parties involved (two groups of students), as well as the predicted relationship type (a positive relationship). There’s no ambiguity or uncertainty about who or what is involved in the statement, and the expected outcome is clear.

Contrast that to the original hypothesis we looked at – “Sleep impacts academic performance” – and you can see the difference. “Sleep” and “academic performance” are both comparatively vague , and there’s no indication of what the expected relationship direction is (more sleep or less sleep). As you can see, specificity and clarity are key.

Hypothesis Essential #2: Testability (Provability)

A statement must be testable to qualify as a research hypothesis. In other words, there needs to be a way to prove (or disprove) the statement. If it’s not testable, it’s not a hypothesis – simple as that.

For example, consider the hypothesis we mentioned earlier:

Hypothesis: Students who sleep at least 8 hours per night will, on average, achieve higher grades in standardised tests than students who sleep less than 8 hours a night.

We could test this statement by undertaking a quantitative study involving two groups of students, one that gets 8 or more hours of sleep per night for a fixed period, and one that gets less. We could then compare the standardised test results for both groups to see if there’s a statistically significant difference.

Again, if you compare this to the original hypothesis we looked at – “Sleep impacts academic performance” – you can see that it would be quite difficult to test that statement, primarily because it isn’t specific enough. How much sleep? By who? What type of academic performance?

So, remember the mantra – if you can’t test it, it’s not a hypothesis 🙂

Defining A Research Hypothesis

You’re still with us? Great! Let’s recap and pin down a clear definition of a hypothesis.

A research hypothesis (or scientific hypothesis) is a statement about an expected relationship between variables, or explanation of an occurrence, that is clear, specific and testable.

So, when you write up hypotheses for your dissertation or thesis, make sure that they meet all these criteria. If you do, you’ll not only have rock-solid hypotheses but you’ll also ensure a clear focus for your entire research project.

What about the null hypothesis?

You may have also heard the terms null hypothesis , alternative hypothesis, or H-zero thrown around. At a simple level, the null hypothesis is the counter-proposal to the original hypothesis.

For example, if the hypothesis predicts that there is a relationship between two variables (for example, sleep and academic performance), the null hypothesis would predict that there is no relationship between those variables.

At a more technical level, the null hypothesis proposes that no statistical significance exists in a set of given observations and that any differences are due to chance alone.

And there you have it – hypotheses in a nutshell.

If you have any questions, be sure to leave a comment below and we’ll do our best to help you. If you need hands-on help developing and testing your hypotheses, consider our private coaching service , where we hold your hand through the research journey.

Psst... there’s more!

This post was based on one of our popular Research Bootcamps . If you're working on a research project, you'll definitely want to check this out ...

You Might Also Like:

16 Comments

Very useful information. I benefit more from getting more information in this regard.

Very great insight,educative and informative. Please give meet deep critics on many research data of public international Law like human rights, environment, natural resources, law of the sea etc

In a book I read a distinction is made between null, research, and alternative hypothesis. As far as I understand, alternative and research hypotheses are the same. Can you please elaborate? Best Afshin

This is a self explanatory, easy going site. I will recommend this to my friends and colleagues.

Very good definition. How can I cite your definition in my thesis? Thank you. Is nul hypothesis compulsory in a research?

It’s a counter-proposal to be proven as a rejection

Please what is the difference between alternate hypothesis and research hypothesis?

It is a very good explanation. However, it limits hypotheses to statistically tasteable ideas. What about for qualitative researches or other researches that involve quantitative data that don’t need statistical tests?

In qualitative research, one typically uses propositions, not hypotheses.

could you please elaborate it more

I’ve benefited greatly from these notes, thank you.

This is very helpful

well articulated ideas are presented here, thank you for being reliable sources of information

Excellent. Thanks for being clear and sound about the research methodology and hypothesis (quantitative research)

I have only a simple question regarding the null hypothesis. – Is the null hypothesis (Ho) known as the reversible hypothesis of the alternative hypothesis (H1? – How to test it in academic research?

this is very important note help me much more

Trackbacks/Pingbacks

- What Is Research Methodology? Simple Definition (With Examples) - Grad Coach - […] Contrasted to this, a quantitative methodology is typically used when the research aims and objectives are confirmatory in nature. For example,…

Submit a Comment Cancel reply

Your email address will not be published. Required fields are marked *

Save my name, email, and website in this browser for the next time I comment.

- Print Friendly

Have a language expert improve your writing

Run a free plagiarism check in 10 minutes, automatically generate references for free.

- Knowledge Base

- Methodology

- How to Write a Strong Hypothesis | Guide & Examples

How to Write a Strong Hypothesis | Guide & Examples

Published on 6 May 2022 by Shona McCombes .

A hypothesis is a statement that can be tested by scientific research. If you want to test a relationship between two or more variables, you need to write hypotheses before you start your experiment or data collection.

Table of contents

What is a hypothesis, developing a hypothesis (with example), hypothesis examples, frequently asked questions about writing hypotheses.

A hypothesis states your predictions about what your research will find. It is a tentative answer to your research question that has not yet been tested. For some research projects, you might have to write several hypotheses that address different aspects of your research question.

A hypothesis is not just a guess – it should be based on existing theories and knowledge. It also has to be testable, which means you can support or refute it through scientific research methods (such as experiments, observations, and statistical analysis of data).

Variables in hypotheses

Hypotheses propose a relationship between two or more variables . An independent variable is something the researcher changes or controls. A dependent variable is something the researcher observes and measures.

In this example, the independent variable is exposure to the sun – the assumed cause . The dependent variable is the level of happiness – the assumed effect .

Prevent plagiarism, run a free check.

Step 1: ask a question.

Writing a hypothesis begins with a research question that you want to answer. The question should be focused, specific, and researchable within the constraints of your project.

Step 2: Do some preliminary research

Your initial answer to the question should be based on what is already known about the topic. Look for theories and previous studies to help you form educated assumptions about what your research will find.

At this stage, you might construct a conceptual framework to identify which variables you will study and what you think the relationships are between them. Sometimes, you’ll have to operationalise more complex constructs.

Step 3: Formulate your hypothesis

Now you should have some idea of what you expect to find. Write your initial answer to the question in a clear, concise sentence.

Step 4: Refine your hypothesis

You need to make sure your hypothesis is specific and testable. There are various ways of phrasing a hypothesis, but all the terms you use should have clear definitions, and the hypothesis should contain:

- The relevant variables

- The specific group being studied

- The predicted outcome of the experiment or analysis

Step 5: Phrase your hypothesis in three ways

To identify the variables, you can write a simple prediction in if … then form. The first part of the sentence states the independent variable and the second part states the dependent variable.

In academic research, hypotheses are more commonly phrased in terms of correlations or effects, where you directly state the predicted relationship between variables.

If you are comparing two groups, the hypothesis can state what difference you expect to find between them.

Step 6. Write a null hypothesis

If your research involves statistical hypothesis testing , you will also have to write a null hypothesis. The null hypothesis is the default position that there is no association between the variables. The null hypothesis is written as H 0 , while the alternative hypothesis is H 1 or H a .

Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is used by scientists to test specific predictions, called hypotheses , by calculating how likely it is that a pattern or relationship between variables could have arisen by chance.

A hypothesis is not just a guess. It should be based on existing theories and knowledge. It also has to be testable, which means you can support or refute it through scientific research methods (such as experiments, observations, and statistical analysis of data).

A research hypothesis is your proposed answer to your research question. The research hypothesis usually includes an explanation (‘ x affects y because …’).

A statistical hypothesis, on the other hand, is a mathematical statement about a population parameter. Statistical hypotheses always come in pairs: the null and alternative hypotheses. In a well-designed study , the statistical hypotheses correspond logically to the research hypothesis.

Cite this Scribbr article

If you want to cite this source, you can copy and paste the citation or click the ‘Cite this Scribbr article’ button to automatically add the citation to our free Reference Generator.

McCombes, S. (2022, May 06). How to Write a Strong Hypothesis | Guide & Examples. Scribbr. Retrieved 27 May 2024, from https://www.scribbr.co.uk/research-methods/hypothesis-writing/

Is this article helpful?

Shona McCombes

Other students also liked, operationalisation | a guide with examples, pros & cons, what is a conceptual framework | tips & examples, a quick guide to experimental design | 5 steps & examples.

- Privacy Policy

Home » What is a Hypothesis – Types, Examples and Writing Guide

What is a Hypothesis – Types, Examples and Writing Guide

Table of Contents

Definition:

Hypothesis is an educated guess or proposed explanation for a phenomenon, based on some initial observations or data. It is a tentative statement that can be tested and potentially proven or disproven through further investigation and experimentation.

Hypothesis is often used in scientific research to guide the design of experiments and the collection and analysis of data. It is an essential element of the scientific method, as it allows researchers to make predictions about the outcome of their experiments and to test those predictions to determine their accuracy.

Types of Hypothesis

Types of Hypothesis are as follows:

Research Hypothesis

A research hypothesis is a statement that predicts a relationship between variables. It is usually formulated as a specific statement that can be tested through research, and it is often used in scientific research to guide the design of experiments.

Null Hypothesis

The null hypothesis is a statement that assumes there is no significant difference or relationship between variables. It is often used as a starting point for testing the research hypothesis, and if the results of the study reject the null hypothesis, it suggests that there is a significant difference or relationship between variables.

Alternative Hypothesis

An alternative hypothesis is a statement that assumes there is a significant difference or relationship between variables. It is often used as an alternative to the null hypothesis and is tested against the null hypothesis to determine which statement is more accurate.

Directional Hypothesis

A directional hypothesis is a statement that predicts the direction of the relationship between variables. For example, a researcher might predict that increasing the amount of exercise will result in a decrease in body weight.

Non-directional Hypothesis

A non-directional hypothesis is a statement that predicts the relationship between variables but does not specify the direction. For example, a researcher might predict that there is a relationship between the amount of exercise and body weight, but they do not specify whether increasing or decreasing exercise will affect body weight.

Statistical Hypothesis

A statistical hypothesis is a statement that assumes a particular statistical model or distribution for the data. It is often used in statistical analysis to test the significance of a particular result.

Composite Hypothesis

A composite hypothesis is a statement that assumes more than one condition or outcome. It can be divided into several sub-hypotheses, each of which represents a different possible outcome.

Empirical Hypothesis

An empirical hypothesis is a statement that is based on observed phenomena or data. It is often used in scientific research to develop theories or models that explain the observed phenomena.

Simple Hypothesis

A simple hypothesis is a statement that assumes only one outcome or condition. It is often used in scientific research to test a single variable or factor.

Complex Hypothesis

A complex hypothesis is a statement that assumes multiple outcomes or conditions. It is often used in scientific research to test the effects of multiple variables or factors on a particular outcome.

Applications of Hypothesis

Hypotheses are used in various fields to guide research and make predictions about the outcomes of experiments or observations. Here are some examples of how hypotheses are applied in different fields:

- Science : In scientific research, hypotheses are used to test the validity of theories and models that explain natural phenomena. For example, a hypothesis might be formulated to test the effects of a particular variable on a natural system, such as the effects of climate change on an ecosystem.

- Medicine : In medical research, hypotheses are used to test the effectiveness of treatments and therapies for specific conditions. For example, a hypothesis might be formulated to test the effects of a new drug on a particular disease.

- Psychology : In psychology, hypotheses are used to test theories and models of human behavior and cognition. For example, a hypothesis might be formulated to test the effects of a particular stimulus on the brain or behavior.

- Sociology : In sociology, hypotheses are used to test theories and models of social phenomena, such as the effects of social structures or institutions on human behavior. For example, a hypothesis might be formulated to test the effects of income inequality on crime rates.

- Business : In business research, hypotheses are used to test the validity of theories and models that explain business phenomena, such as consumer behavior or market trends. For example, a hypothesis might be formulated to test the effects of a new marketing campaign on consumer buying behavior.

- Engineering : In engineering, hypotheses are used to test the effectiveness of new technologies or designs. For example, a hypothesis might be formulated to test the efficiency of a new solar panel design.

How to write a Hypothesis

Here are the steps to follow when writing a hypothesis:

Identify the Research Question

The first step is to identify the research question that you want to answer through your study. This question should be clear, specific, and focused. It should be something that can be investigated empirically and that has some relevance or significance in the field.

Conduct a Literature Review

Before writing your hypothesis, it’s essential to conduct a thorough literature review to understand what is already known about the topic. This will help you to identify the research gap and formulate a hypothesis that builds on existing knowledge.

Determine the Variables

The next step is to identify the variables involved in the research question. A variable is any characteristic or factor that can vary or change. There are two types of variables: independent and dependent. The independent variable is the one that is manipulated or changed by the researcher, while the dependent variable is the one that is measured or observed as a result of the independent variable.

Formulate the Hypothesis

Based on the research question and the variables involved, you can now formulate your hypothesis. A hypothesis should be a clear and concise statement that predicts the relationship between the variables. It should be testable through empirical research and based on existing theory or evidence.

Write the Null Hypothesis

The null hypothesis is the opposite of the alternative hypothesis, which is the hypothesis that you are testing. The null hypothesis states that there is no significant difference or relationship between the variables. It is important to write the null hypothesis because it allows you to compare your results with what would be expected by chance.

Refine the Hypothesis

After formulating the hypothesis, it’s important to refine it and make it more precise. This may involve clarifying the variables, specifying the direction of the relationship, or making the hypothesis more testable.

Examples of Hypothesis

Here are a few examples of hypotheses in different fields:

- Psychology : “Increased exposure to violent video games leads to increased aggressive behavior in adolescents.”

- Biology : “Higher levels of carbon dioxide in the atmosphere will lead to increased plant growth.”

- Sociology : “Individuals who grow up in households with higher socioeconomic status will have higher levels of education and income as adults.”

- Education : “Implementing a new teaching method will result in higher student achievement scores.”

- Marketing : “Customers who receive a personalized email will be more likely to make a purchase than those who receive a generic email.”

- Physics : “An increase in temperature will cause an increase in the volume of a gas, assuming all other variables remain constant.”

- Medicine : “Consuming a diet high in saturated fats will increase the risk of developing heart disease.”

Purpose of Hypothesis

The purpose of a hypothesis is to provide a testable explanation for an observed phenomenon or a prediction of a future outcome based on existing knowledge or theories. A hypothesis is an essential part of the scientific method and helps to guide the research process by providing a clear focus for investigation. It enables scientists to design experiments or studies to gather evidence and data that can support or refute the proposed explanation or prediction.

The formulation of a hypothesis is based on existing knowledge, observations, and theories, and it should be specific, testable, and falsifiable. A specific hypothesis helps to define the research question, which is important in the research process as it guides the selection of an appropriate research design and methodology. Testability of the hypothesis means that it can be proven or disproven through empirical data collection and analysis. Falsifiability means that the hypothesis should be formulated in such a way that it can be proven wrong if it is incorrect.

In addition to guiding the research process, the testing of hypotheses can lead to new discoveries and advancements in scientific knowledge. When a hypothesis is supported by the data, it can be used to develop new theories or models to explain the observed phenomenon. When a hypothesis is not supported by the data, it can help to refine existing theories or prompt the development of new hypotheses to explain the phenomenon.

When to use Hypothesis

Here are some common situations in which hypotheses are used:

- In scientific research , hypotheses are used to guide the design of experiments and to help researchers make predictions about the outcomes of those experiments.

- In social science research , hypotheses are used to test theories about human behavior, social relationships, and other phenomena.

- I n business , hypotheses can be used to guide decisions about marketing, product development, and other areas. For example, a hypothesis might be that a new product will sell well in a particular market, and this hypothesis can be tested through market research.

Characteristics of Hypothesis

Here are some common characteristics of a hypothesis:

- Testable : A hypothesis must be able to be tested through observation or experimentation. This means that it must be possible to collect data that will either support or refute the hypothesis.

- Falsifiable : A hypothesis must be able to be proven false if it is not supported by the data. If a hypothesis cannot be falsified, then it is not a scientific hypothesis.

- Clear and concise : A hypothesis should be stated in a clear and concise manner so that it can be easily understood and tested.

- Based on existing knowledge : A hypothesis should be based on existing knowledge and research in the field. It should not be based on personal beliefs or opinions.

- Specific : A hypothesis should be specific in terms of the variables being tested and the predicted outcome. This will help to ensure that the research is focused and well-designed.

- Tentative: A hypothesis is a tentative statement or assumption that requires further testing and evidence to be confirmed or refuted. It is not a final conclusion or assertion.

- Relevant : A hypothesis should be relevant to the research question or problem being studied. It should address a gap in knowledge or provide a new perspective on the issue.

Advantages of Hypothesis

Hypotheses have several advantages in scientific research and experimentation:

- Guides research: A hypothesis provides a clear and specific direction for research. It helps to focus the research question, select appropriate methods and variables, and interpret the results.

- Predictive powe r: A hypothesis makes predictions about the outcome of research, which can be tested through experimentation. This allows researchers to evaluate the validity of the hypothesis and make new discoveries.

- Facilitates communication: A hypothesis provides a common language and framework for scientists to communicate with one another about their research. This helps to facilitate the exchange of ideas and promotes collaboration.

- Efficient use of resources: A hypothesis helps researchers to use their time, resources, and funding efficiently by directing them towards specific research questions and methods that are most likely to yield results.

- Provides a basis for further research: A hypothesis that is supported by data provides a basis for further research and exploration. It can lead to new hypotheses, theories, and discoveries.

- Increases objectivity: A hypothesis can help to increase objectivity in research by providing a clear and specific framework for testing and interpreting results. This can reduce bias and increase the reliability of research findings.

Limitations of Hypothesis

Some Limitations of the Hypothesis are as follows:

- Limited to observable phenomena: Hypotheses are limited to observable phenomena and cannot account for unobservable or intangible factors. This means that some research questions may not be amenable to hypothesis testing.

- May be inaccurate or incomplete: Hypotheses are based on existing knowledge and research, which may be incomplete or inaccurate. This can lead to flawed hypotheses and erroneous conclusions.

- May be biased: Hypotheses may be biased by the researcher’s own beliefs, values, or assumptions. This can lead to selective interpretation of data and a lack of objectivity in research.

- Cannot prove causation: A hypothesis can only show a correlation between variables, but it cannot prove causation. This requires further experimentation and analysis.

- Limited to specific contexts: Hypotheses are limited to specific contexts and may not be generalizable to other situations or populations. This means that results may not be applicable in other contexts or may require further testing.

- May be affected by chance : Hypotheses may be affected by chance or random variation, which can obscure or distort the true relationship between variables.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

You may also like

Data Collection – Methods Types and Examples

Delimitations in Research – Types, Examples and...

Research Process – Steps, Examples and Tips

Research Design – Types, Methods and Examples

Institutional Review Board – Application Sample...

Evaluating Research – Process, Examples and...

What is a scientific hypothesis?

It's the initial building block in the scientific method.

Hypothesis basics

What makes a hypothesis testable.

- Types of hypotheses

- Hypothesis versus theory

Additional resources

Bibliography.

A scientific hypothesis is a tentative, testable explanation for a phenomenon in the natural world. It's the initial building block in the scientific method . Many describe it as an "educated guess" based on prior knowledge and observation. While this is true, a hypothesis is more informed than a guess. While an "educated guess" suggests a random prediction based on a person's expertise, developing a hypothesis requires active observation and background research.

The basic idea of a hypothesis is that there is no predetermined outcome. For a solution to be termed a scientific hypothesis, it has to be an idea that can be supported or refuted through carefully crafted experimentation or observation. This concept, called falsifiability and testability, was advanced in the mid-20th century by Austrian-British philosopher Karl Popper in his famous book "The Logic of Scientific Discovery" (Routledge, 1959).

A key function of a hypothesis is to derive predictions about the results of future experiments and then perform those experiments to see whether they support the predictions.

A hypothesis is usually written in the form of an if-then statement, which gives a possibility (if) and explains what may happen because of the possibility (then). The statement could also include "may," according to California State University, Bakersfield .

Here are some examples of hypothesis statements:

- If garlic repels fleas, then a dog that is given garlic every day will not get fleas.

- If sugar causes cavities, then people who eat a lot of candy may be more prone to cavities.

- If ultraviolet light can damage the eyes, then maybe this light can cause blindness.

A useful hypothesis should be testable and falsifiable. That means that it should be possible to prove it wrong. A theory that can't be proved wrong is nonscientific, according to Karl Popper's 1963 book " Conjectures and Refutations ."

An example of an untestable statement is, "Dogs are better than cats." That's because the definition of "better" is vague and subjective. However, an untestable statement can be reworded to make it testable. For example, the previous statement could be changed to this: "Owning a dog is associated with higher levels of physical fitness than owning a cat." With this statement, the researcher can take measures of physical fitness from dog and cat owners and compare the two.

Types of scientific hypotheses

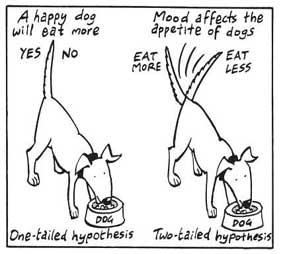

In an experiment, researchers generally state their hypotheses in two ways. The null hypothesis predicts that there will be no relationship between the variables tested, or no difference between the experimental groups. The alternative hypothesis predicts the opposite: that there will be a difference between the experimental groups. This is usually the hypothesis scientists are most interested in, according to the University of Miami .

For example, a null hypothesis might state, "There will be no difference in the rate of muscle growth between people who take a protein supplement and people who don't." The alternative hypothesis would state, "There will be a difference in the rate of muscle growth between people who take a protein supplement and people who don't."

If the results of the experiment show a relationship between the variables, then the null hypothesis has been rejected in favor of the alternative hypothesis, according to the book " Research Methods in Psychology " (BCcampus, 2015).

There are other ways to describe an alternative hypothesis. The alternative hypothesis above does not specify a direction of the effect, only that there will be a difference between the two groups. That type of prediction is called a two-tailed hypothesis. If a hypothesis specifies a certain direction — for example, that people who take a protein supplement will gain more muscle than people who don't — it is called a one-tailed hypothesis, according to William M. K. Trochim , a professor of Policy Analysis and Management at Cornell University.

Sometimes, errors take place during an experiment. These errors can happen in one of two ways. A type I error is when the null hypothesis is rejected when it is true. This is also known as a false positive. A type II error occurs when the null hypothesis is not rejected when it is false. This is also known as a false negative, according to the University of California, Berkeley .

A hypothesis can be rejected or modified, but it can never be proved correct 100% of the time. For example, a scientist can form a hypothesis stating that if a certain type of tomato has a gene for red pigment, that type of tomato will be red. During research, the scientist then finds that each tomato of this type is red. Though the findings confirm the hypothesis, there may be a tomato of that type somewhere in the world that isn't red. Thus, the hypothesis is true, but it may not be true 100% of the time.

Scientific theory vs. scientific hypothesis

The best hypotheses are simple. They deal with a relatively narrow set of phenomena. But theories are broader; they generally combine multiple hypotheses into a general explanation for a wide range of phenomena, according to the University of California, Berkeley . For example, a hypothesis might state, "If animals adapt to suit their environments, then birds that live on islands with lots of seeds to eat will have differently shaped beaks than birds that live on islands with lots of insects to eat." After testing many hypotheses like these, Charles Darwin formulated an overarching theory: the theory of evolution by natural selection.

"Theories are the ways that we make sense of what we observe in the natural world," Tanner said. "Theories are structures of ideas that explain and interpret facts."

- Read more about writing a hypothesis, from the American Medical Writers Association.

- Find out why a hypothesis isn't always necessary in science, from The American Biology Teacher.

- Learn about null and alternative hypotheses, from Prof. Essa on YouTube .

Encyclopedia Britannica. Scientific Hypothesis. Jan. 13, 2022. https://www.britannica.com/science/scientific-hypothesis

Karl Popper, "The Logic of Scientific Discovery," Routledge, 1959.

California State University, Bakersfield, "Formatting a testable hypothesis." https://www.csub.edu/~ddodenhoff/Bio100/Bio100sp04/formattingahypothesis.htm

Karl Popper, "Conjectures and Refutations," Routledge, 1963.

Price, P., Jhangiani, R., & Chiang, I., "Research Methods of Psychology — 2nd Canadian Edition," BCcampus, 2015.

University of Miami, "The Scientific Method" http://www.bio.miami.edu/dana/161/evolution/161app1_scimethod.pdf

William M.K. Trochim, "Research Methods Knowledge Base," https://conjointly.com/kb/hypotheses-explained/

University of California, Berkeley, "Multiple Hypothesis Testing and False Discovery Rate" https://www.stat.berkeley.edu/~hhuang/STAT141/Lecture-FDR.pdf

University of California, Berkeley, "Science at multiple levels" https://undsci.berkeley.edu/article/0_0_0/howscienceworks_19

Sign up for the Live Science daily newsletter now

Get the world’s most fascinating discoveries delivered straight to your inbox.

Earth from space: Ethereal algal vortex blooms at the heart of massive Baltic 'dead zone'

10 surprising things that are made from petroleum

Bright comet headed toward Earth could be visible with the naked eye

Most Popular

- 2 10 surprising things that are made from petroleum

- 3 Scientists just discovered an enormous lithium reservoir under Pennsylvania

- 4 Alaska's rivers are turning bright orange and as acidic as vinegar as toxic metal escapes from melting permafrost

- 5 Ancient Mycenaean armor is so good, it protected users in an 11-hour battle simulation inspired by the Trojan War

- 2 Deepest blue hole in the world discovered, with hidden caves and tunnels believed to be inside

- 3 10 surprising things that are made from petroleum

- 4 What's the highest place on Earth that humans live?

- 5 Why did Homo sapiens emerge in Africa?

- Manuscript Preparation

What is and How to Write a Good Hypothesis in Research?

- 4 minute read

- 318.4K views

Table of Contents

One of the most important aspects of conducting research is constructing a strong hypothesis. But what makes a hypothesis in research effective? In this article, we’ll look at the difference between a hypothesis and a research question, as well as the elements of a good hypothesis in research. We’ll also include some examples of effective hypotheses, and what pitfalls to avoid.

What is a Hypothesis in Research?

Simply put, a hypothesis is a research question that also includes the predicted or expected result of the research. Without a hypothesis, there can be no basis for a scientific or research experiment. As such, it is critical that you carefully construct your hypothesis by being deliberate and thorough, even before you set pen to paper. Unless your hypothesis is clearly and carefully constructed, any flaw can have an adverse, and even grave, effect on the quality of your experiment and its subsequent results.

Research Question vs Hypothesis

It’s easy to confuse research questions with hypotheses, and vice versa. While they’re both critical to the Scientific Method, they have very specific differences. Primarily, a research question, just like a hypothesis, is focused and concise. But a hypothesis includes a prediction based on the proposed research, and is designed to forecast the relationship of and between two (or more) variables. Research questions are open-ended, and invite debate and discussion, while hypotheses are closed, e.g. “The relationship between A and B will be C.”

A hypothesis is generally used if your research topic is fairly well established, and you are relatively certain about the relationship between the variables that will be presented in your research. Since a hypothesis is ideally suited for experimental studies, it will, by its very existence, affect the design of your experiment. The research question is typically used for new topics that have not yet been researched extensively. Here, the relationship between different variables is less known. There is no prediction made, but there may be variables explored. The research question can be casual in nature, simply trying to understand if a relationship even exists, descriptive or comparative.

How to Write Hypothesis in Research

Writing an effective hypothesis starts before you even begin to type. Like any task, preparation is key, so you start first by conducting research yourself, and reading all you can about the topic that you plan to research. From there, you’ll gain the knowledge you need to understand where your focus within the topic will lie.

Remember that a hypothesis is a prediction of the relationship that exists between two or more variables. Your job is to write a hypothesis, and design the research, to “prove” whether or not your prediction is correct. A common pitfall is to use judgments that are subjective and inappropriate for the construction of a hypothesis. It’s important to keep the focus and language of your hypothesis objective.

An effective hypothesis in research is clearly and concisely written, and any terms or definitions clarified and defined. Specific language must also be used to avoid any generalities or assumptions.

Use the following points as a checklist to evaluate the effectiveness of your research hypothesis:

- Predicts the relationship and outcome

- Simple and concise – avoid wordiness

- Clear with no ambiguity or assumptions about the readers’ knowledge

- Observable and testable results

- Relevant and specific to the research question or problem

Research Hypothesis Example

Perhaps the best way to evaluate whether or not your hypothesis is effective is to compare it to those of your colleagues in the field. There is no need to reinvent the wheel when it comes to writing a powerful research hypothesis. As you’re reading and preparing your hypothesis, you’ll also read other hypotheses. These can help guide you on what works, and what doesn’t, when it comes to writing a strong research hypothesis.

Here are a few generic examples to get you started.

Eating an apple each day, after the age of 60, will result in a reduction of frequency of physician visits.

Budget airlines are more likely to receive more customer complaints. A budget airline is defined as an airline that offers lower fares and fewer amenities than a traditional full-service airline. (Note that the term “budget airline” is included in the hypothesis.

Workplaces that offer flexible working hours report higher levels of employee job satisfaction than workplaces with fixed hours.

Each of the above examples are specific, observable and measurable, and the statement of prediction can be verified or shown to be false by utilizing standard experimental practices. It should be noted, however, that often your hypothesis will change as your research progresses.

Language Editing Plus

Elsevier’s Language Editing Plus service can help ensure that your research hypothesis is well-designed, and articulates your research and conclusions. Our most comprehensive editing package, you can count on a thorough language review by native-English speakers who are PhDs or PhD candidates. We’ll check for effective logic and flow of your manuscript, as well as document formatting for your chosen journal, reference checks, and much more.

- Research Process

Systematic Literature Review or Literature Review?

What is a Problem Statement? [with examples]

You may also like.

Page-Turner Articles are More Than Just Good Arguments: Be Mindful of Tone and Structure!

A Must-see for Researchers! How to Ensure Inclusivity in Your Scientific Writing

Make Hook, Line, and Sinker: The Art of Crafting Engaging Introductions

Can Describing Study Limitations Improve the Quality of Your Paper?

A Guide to Crafting Shorter, Impactful Sentences in Academic Writing

6 Steps to Write an Excellent Discussion in Your Manuscript

How to Write Clear and Crisp Civil Engineering Papers? Here are 5 Key Tips to Consider

The Clear Path to An Impactful Paper: ②

Input your search keywords and press Enter.

What Is a Hypothesis? (Science)

If...,Then...

Angela Lumsden/Getty Images

- Scientific Method

- Chemical Laws

- Periodic Table

- Projects & Experiments

- Biochemistry

- Physical Chemistry

- Medical Chemistry

- Chemistry In Everyday Life

- Famous Chemists

- Activities for Kids

- Abbreviations & Acronyms

- Weather & Climate

- Ph.D., Biomedical Sciences, University of Tennessee at Knoxville

- B.A., Physics and Mathematics, Hastings College

A hypothesis (plural hypotheses) is a proposed explanation for an observation. The definition depends on the subject.

In science, a hypothesis is part of the scientific method. It is a prediction or explanation that is tested by an experiment. Observations and experiments may disprove a scientific hypothesis, but can never entirely prove one.

In the study of logic, a hypothesis is an if-then proposition, typically written in the form, "If X , then Y ."

In common usage, a hypothesis is simply a proposed explanation or prediction, which may or may not be tested.

Writing a Hypothesis

Most scientific hypotheses are proposed in the if-then format because it's easy to design an experiment to see whether or not a cause and effect relationship exists between the independent variable and the dependent variable . The hypothesis is written as a prediction of the outcome of the experiment.

Null Hypothesis and Alternative Hypothesis

Statistically, it's easier to show there is no relationship between two variables than to support their connection. So, scientists often propose the null hypothesis . The null hypothesis assumes changing the independent variable will have no effect on the dependent variable.

In contrast, the alternative hypothesis suggests changing the independent variable will have an effect on the dependent variable. Designing an experiment to test this hypothesis can be trickier because there are many ways to state an alternative hypothesis.

For example, consider a possible relationship between getting a good night's sleep and getting good grades. The null hypothesis might be stated: "The number of hours of sleep students get is unrelated to their grades" or "There is no correlation between hours of sleep and grades."

An experiment to test this hypothesis might involve collecting data, recording average hours of sleep for each student and grades. If a student who gets eight hours of sleep generally does better than students who get four hours of sleep or 10 hours of sleep, the hypothesis might be rejected.

But the alternative hypothesis is harder to propose and test. The most general statement would be: "The amount of sleep students get affects their grades." The hypothesis might also be stated as "If you get more sleep, your grades will improve" or "Students who get nine hours of sleep have better grades than those who get more or less sleep."

In an experiment, you can collect the same data, but the statistical analysis is less likely to give you a high confidence limit.

Usually, a scientist starts out with the null hypothesis. From there, it may be possible to propose and test an alternative hypothesis, to narrow down the relationship between the variables.

Example of a Hypothesis

Examples of a hypothesis include:

- If you drop a rock and a feather, (then) they will fall at the same rate.

- Plants need sunlight in order to live. (if sunlight, then life)

- Eating sugar gives you energy. (if sugar, then energy)

- White, Jay D. Research in Public Administration . Conn., 1998.

- Schick, Theodore, and Lewis Vaughn. How to Think about Weird Things: Critical Thinking for a New Age . McGraw-Hill Higher Education, 2002.

- Null Hypothesis Examples

- Examples of Independent and Dependent Variables

- Difference Between Independent and Dependent Variables

- Definition of a Hypothesis

- Null Hypothesis Definition and Examples

- What Are the Elements of a Good Hypothesis?

- Six Steps of the Scientific Method

- What Are Examples of a Hypothesis?

- Independent Variable Definition and Examples

- Understanding Simple vs Controlled Experiments

- Scientific Method Flow Chart

- What Is a Testable Hypothesis?

- Scientific Method Vocabulary Terms

- What 'Fail to Reject' Means in a Hypothesis Test

- How To Design a Science Fair Experiment

- What Is an Experiment? Definition and Design

An official website of the United States government

The .gov means it’s official. Federal government websites often end in .gov or .mil. Before sharing sensitive information, make sure you’re on a federal government site.

The site is secure. The https:// ensures that you are connecting to the official website and that any information you provide is encrypted and transmitted securely.

- Publications

- Account settings

Preview improvements coming to the PMC website in October 2024. Learn More or Try it out now .

- Advanced Search

- Journal List

- J Korean Med Sci

- v.37(16); 2022 Apr 25

A Practical Guide to Writing Quantitative and Qualitative Research Questions and Hypotheses in Scholarly Articles

Edward barroga.

1 Department of General Education, Graduate School of Nursing Science, St. Luke’s International University, Tokyo, Japan.

Glafera Janet Matanguihan

2 Department of Biological Sciences, Messiah University, Mechanicsburg, PA, USA.

The development of research questions and the subsequent hypotheses are prerequisites to defining the main research purpose and specific objectives of a study. Consequently, these objectives determine the study design and research outcome. The development of research questions is a process based on knowledge of current trends, cutting-edge studies, and technological advances in the research field. Excellent research questions are focused and require a comprehensive literature search and in-depth understanding of the problem being investigated. Initially, research questions may be written as descriptive questions which could be developed into inferential questions. These questions must be specific and concise to provide a clear foundation for developing hypotheses. Hypotheses are more formal predictions about the research outcomes. These specify the possible results that may or may not be expected regarding the relationship between groups. Thus, research questions and hypotheses clarify the main purpose and specific objectives of the study, which in turn dictate the design of the study, its direction, and outcome. Studies developed from good research questions and hypotheses will have trustworthy outcomes with wide-ranging social and health implications.

INTRODUCTION

Scientific research is usually initiated by posing evidenced-based research questions which are then explicitly restated as hypotheses. 1 , 2 The hypotheses provide directions to guide the study, solutions, explanations, and expected results. 3 , 4 Both research questions and hypotheses are essentially formulated based on conventional theories and real-world processes, which allow the inception of novel studies and the ethical testing of ideas. 5 , 6

It is crucial to have knowledge of both quantitative and qualitative research 2 as both types of research involve writing research questions and hypotheses. 7 However, these crucial elements of research are sometimes overlooked; if not overlooked, then framed without the forethought and meticulous attention it needs. Planning and careful consideration are needed when developing quantitative or qualitative research, particularly when conceptualizing research questions and hypotheses. 4

There is a continuing need to support researchers in the creation of innovative research questions and hypotheses, as well as for journal articles that carefully review these elements. 1 When research questions and hypotheses are not carefully thought of, unethical studies and poor outcomes usually ensue. Carefully formulated research questions and hypotheses define well-founded objectives, which in turn determine the appropriate design, course, and outcome of the study. This article then aims to discuss in detail the various aspects of crafting research questions and hypotheses, with the goal of guiding researchers as they develop their own. Examples from the authors and peer-reviewed scientific articles in the healthcare field are provided to illustrate key points.

DEFINITIONS AND RELATIONSHIP OF RESEARCH QUESTIONS AND HYPOTHESES

A research question is what a study aims to answer after data analysis and interpretation. The answer is written in length in the discussion section of the paper. Thus, the research question gives a preview of the different parts and variables of the study meant to address the problem posed in the research question. 1 An excellent research question clarifies the research writing while facilitating understanding of the research topic, objective, scope, and limitations of the study. 5

On the other hand, a research hypothesis is an educated statement of an expected outcome. This statement is based on background research and current knowledge. 8 , 9 The research hypothesis makes a specific prediction about a new phenomenon 10 or a formal statement on the expected relationship between an independent variable and a dependent variable. 3 , 11 It provides a tentative answer to the research question to be tested or explored. 4

Hypotheses employ reasoning to predict a theory-based outcome. 10 These can also be developed from theories by focusing on components of theories that have not yet been observed. 10 The validity of hypotheses is often based on the testability of the prediction made in a reproducible experiment. 8

Conversely, hypotheses can also be rephrased as research questions. Several hypotheses based on existing theories and knowledge may be needed to answer a research question. Developing ethical research questions and hypotheses creates a research design that has logical relationships among variables. These relationships serve as a solid foundation for the conduct of the study. 4 , 11 Haphazardly constructed research questions can result in poorly formulated hypotheses and improper study designs, leading to unreliable results. Thus, the formulations of relevant research questions and verifiable hypotheses are crucial when beginning research. 12

CHARACTERISTICS OF GOOD RESEARCH QUESTIONS AND HYPOTHESES

Excellent research questions are specific and focused. These integrate collective data and observations to confirm or refute the subsequent hypotheses. Well-constructed hypotheses are based on previous reports and verify the research context. These are realistic, in-depth, sufficiently complex, and reproducible. More importantly, these hypotheses can be addressed and tested. 13

There are several characteristics of well-developed hypotheses. Good hypotheses are 1) empirically testable 7 , 10 , 11 , 13 ; 2) backed by preliminary evidence 9 ; 3) testable by ethical research 7 , 9 ; 4) based on original ideas 9 ; 5) have evidenced-based logical reasoning 10 ; and 6) can be predicted. 11 Good hypotheses can infer ethical and positive implications, indicating the presence of a relationship or effect relevant to the research theme. 7 , 11 These are initially developed from a general theory and branch into specific hypotheses by deductive reasoning. In the absence of a theory to base the hypotheses, inductive reasoning based on specific observations or findings form more general hypotheses. 10

TYPES OF RESEARCH QUESTIONS AND HYPOTHESES

Research questions and hypotheses are developed according to the type of research, which can be broadly classified into quantitative and qualitative research. We provide a summary of the types of research questions and hypotheses under quantitative and qualitative research categories in Table 1 .

Research questions in quantitative research

In quantitative research, research questions inquire about the relationships among variables being investigated and are usually framed at the start of the study. These are precise and typically linked to the subject population, dependent and independent variables, and research design. 1 Research questions may also attempt to describe the behavior of a population in relation to one or more variables, or describe the characteristics of variables to be measured ( descriptive research questions ). 1 , 5 , 14 These questions may also aim to discover differences between groups within the context of an outcome variable ( comparative research questions ), 1 , 5 , 14 or elucidate trends and interactions among variables ( relationship research questions ). 1 , 5 We provide examples of descriptive, comparative, and relationship research questions in quantitative research in Table 2 .

Hypotheses in quantitative research

In quantitative research, hypotheses predict the expected relationships among variables. 15 Relationships among variables that can be predicted include 1) between a single dependent variable and a single independent variable ( simple hypothesis ) or 2) between two or more independent and dependent variables ( complex hypothesis ). 4 , 11 Hypotheses may also specify the expected direction to be followed and imply an intellectual commitment to a particular outcome ( directional hypothesis ) 4 . On the other hand, hypotheses may not predict the exact direction and are used in the absence of a theory, or when findings contradict previous studies ( non-directional hypothesis ). 4 In addition, hypotheses can 1) define interdependency between variables ( associative hypothesis ), 4 2) propose an effect on the dependent variable from manipulation of the independent variable ( causal hypothesis ), 4 3) state a negative relationship between two variables ( null hypothesis ), 4 , 11 , 15 4) replace the working hypothesis if rejected ( alternative hypothesis ), 15 explain the relationship of phenomena to possibly generate a theory ( working hypothesis ), 11 5) involve quantifiable variables that can be tested statistically ( statistical hypothesis ), 11 6) or express a relationship whose interlinks can be verified logically ( logical hypothesis ). 11 We provide examples of simple, complex, directional, non-directional, associative, causal, null, alternative, working, statistical, and logical hypotheses in quantitative research, as well as the definition of quantitative hypothesis-testing research in Table 3 .

Research questions in qualitative research

Unlike research questions in quantitative research, research questions in qualitative research are usually continuously reviewed and reformulated. The central question and associated subquestions are stated more than the hypotheses. 15 The central question broadly explores a complex set of factors surrounding the central phenomenon, aiming to present the varied perspectives of participants. 15

There are varied goals for which qualitative research questions are developed. These questions can function in several ways, such as to 1) identify and describe existing conditions ( contextual research question s); 2) describe a phenomenon ( descriptive research questions ); 3) assess the effectiveness of existing methods, protocols, theories, or procedures ( evaluation research questions ); 4) examine a phenomenon or analyze the reasons or relationships between subjects or phenomena ( explanatory research questions ); or 5) focus on unknown aspects of a particular topic ( exploratory research questions ). 5 In addition, some qualitative research questions provide new ideas for the development of theories and actions ( generative research questions ) or advance specific ideologies of a position ( ideological research questions ). 1 Other qualitative research questions may build on a body of existing literature and become working guidelines ( ethnographic research questions ). Research questions may also be broadly stated without specific reference to the existing literature or a typology of questions ( phenomenological research questions ), may be directed towards generating a theory of some process ( grounded theory questions ), or may address a description of the case and the emerging themes ( qualitative case study questions ). 15 We provide examples of contextual, descriptive, evaluation, explanatory, exploratory, generative, ideological, ethnographic, phenomenological, grounded theory, and qualitative case study research questions in qualitative research in Table 4 , and the definition of qualitative hypothesis-generating research in Table 5 .

Qualitative studies usually pose at least one central research question and several subquestions starting with How or What . These research questions use exploratory verbs such as explore or describe . These also focus on one central phenomenon of interest, and may mention the participants and research site. 15

Hypotheses in qualitative research

Hypotheses in qualitative research are stated in the form of a clear statement concerning the problem to be investigated. Unlike in quantitative research where hypotheses are usually developed to be tested, qualitative research can lead to both hypothesis-testing and hypothesis-generating outcomes. 2 When studies require both quantitative and qualitative research questions, this suggests an integrative process between both research methods wherein a single mixed-methods research question can be developed. 1

FRAMEWORKS FOR DEVELOPING RESEARCH QUESTIONS AND HYPOTHESES

Research questions followed by hypotheses should be developed before the start of the study. 1 , 12 , 14 It is crucial to develop feasible research questions on a topic that is interesting to both the researcher and the scientific community. This can be achieved by a meticulous review of previous and current studies to establish a novel topic. Specific areas are subsequently focused on to generate ethical research questions. The relevance of the research questions is evaluated in terms of clarity of the resulting data, specificity of the methodology, objectivity of the outcome, depth of the research, and impact of the study. 1 , 5 These aspects constitute the FINER criteria (i.e., Feasible, Interesting, Novel, Ethical, and Relevant). 1 Clarity and effectiveness are achieved if research questions meet the FINER criteria. In addition to the FINER criteria, Ratan et al. described focus, complexity, novelty, feasibility, and measurability for evaluating the effectiveness of research questions. 14

The PICOT and PEO frameworks are also used when developing research questions. 1 The following elements are addressed in these frameworks, PICOT: P-population/patients/problem, I-intervention or indicator being studied, C-comparison group, O-outcome of interest, and T-timeframe of the study; PEO: P-population being studied, E-exposure to preexisting conditions, and O-outcome of interest. 1 Research questions are also considered good if these meet the “FINERMAPS” framework: Feasible, Interesting, Novel, Ethical, Relevant, Manageable, Appropriate, Potential value/publishable, and Systematic. 14

As we indicated earlier, research questions and hypotheses that are not carefully formulated result in unethical studies or poor outcomes. To illustrate this, we provide some examples of ambiguous research question and hypotheses that result in unclear and weak research objectives in quantitative research ( Table 6 ) 16 and qualitative research ( Table 7 ) 17 , and how to transform these ambiguous research question(s) and hypothesis(es) into clear and good statements.

a These statements were composed for comparison and illustrative purposes only.

b These statements are direct quotes from Higashihara and Horiuchi. 16

a This statement is a direct quote from Shimoda et al. 17

The other statements were composed for comparison and illustrative purposes only.

CONSTRUCTING RESEARCH QUESTIONS AND HYPOTHESES