
Types of Variables in Research | Definitions & Examples

Published on 19 September 2022 by Rebecca Bevans. Revised on 28 November 2022.

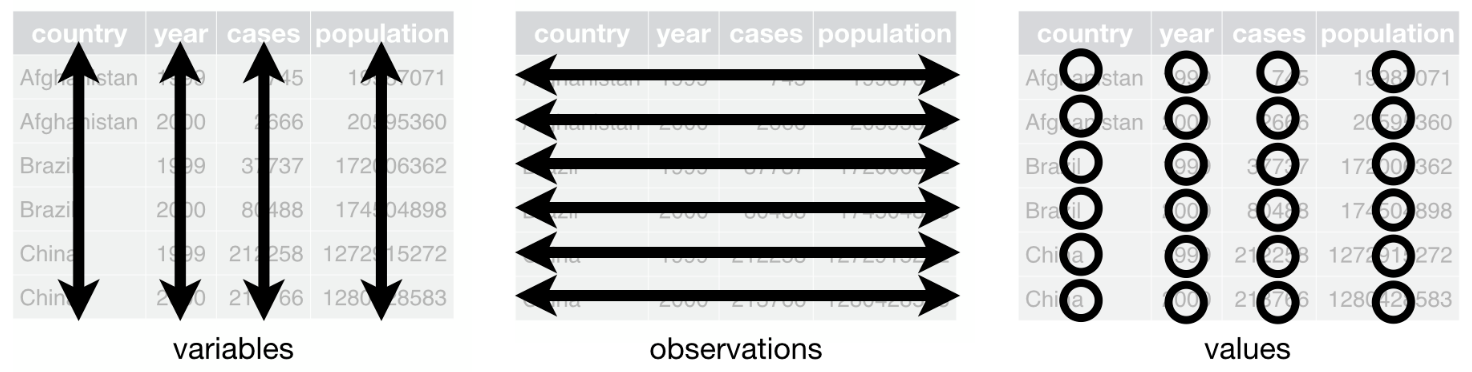

In statistical research, a variable is defined as an attribute of an object of study. Choosing which variables to measure is central to good experimental design.

You need to know which types of variables you are working with in order to choose appropriate statistical tests and interpret the results of your study.

You can usually identify the type of variable by asking two questions:

- What type of data does the variable contain?

- What part of the experiment does the variable represent?

Table of contents

- Types of data: quantitative vs categorical variables

- Parts of the experiment: independent vs dependent variables

- Other common types of variables

- Frequently asked questions about variables

Types of data: quantitative vs categorical variables

Data is a specific measurement of a variable – it is the value you record in your data sheet. Data is generally divided into two categories:

- Quantitative data represents amounts.

- Categorical data represents groupings.

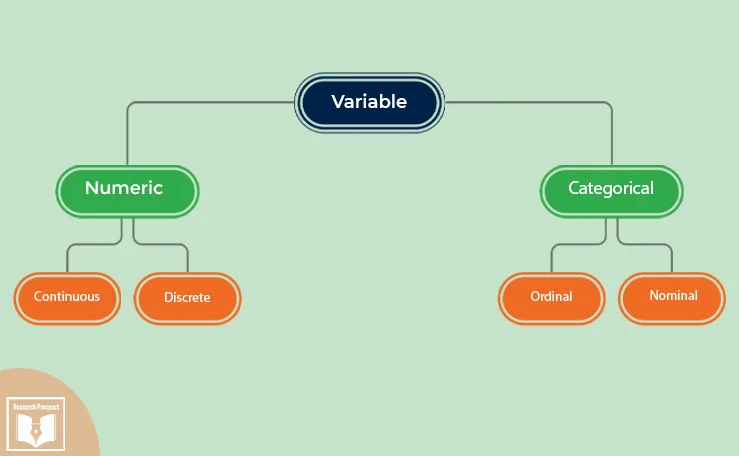

A variable that contains quantitative data is a quantitative variable; a variable that contains categorical data is a categorical variable. Each of these types of variable can be broken down into further types.

Quantitative variables

When you collect quantitative data, the numbers you record represent real amounts that can be added, subtracted, divided, etc. There are two types of quantitative variables: discrete and continuous.

Categorical variables

Categorical variables represent groupings of some kind. They are sometimes recorded as numbers, but the numbers represent categories rather than actual amounts of things.

There are three types of categorical variables: binary, nominal, and ordinal variables.

Note that a variable can sometimes work as more than one type. An ordinal variable can also be used as a quantitative variable if the scale is numeric and doesn’t need to be kept as discrete integers. For example, star ratings on product reviews are ordinal (1 to 5 stars), but the average star rating is quantitative.
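As a minimal sketch of the star-rating example, the Python snippet below uses a hypothetical list of ratings for one product. Counts per star level and the median respect the ordinal nature of each rating; taking the mean treats the 1 to 5 scale as numeric, which is the point at which the variable starts being used quantitatively.

```python
from collections import Counter
from statistics import mean, median

# Hypothetical star ratings for one product; each rating is an ordinal category (1 to 5).
ratings = [5, 4, 4, 3, 5, 2, 4]

# Ordinal summaries: how often each star level occurs, and the middle rating by rank.
counts = Counter(ratings)
middle = median(ratings)

# Averaging treats the scale as numeric, so the result is used as a quantitative value.
average = mean(ratings)

print(sorted(counts.items()))  # [(2, 1), (3, 1), (4, 3), (5, 2)]
print(middle)                  # 4
print(round(average, 2))       # 3.86
```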

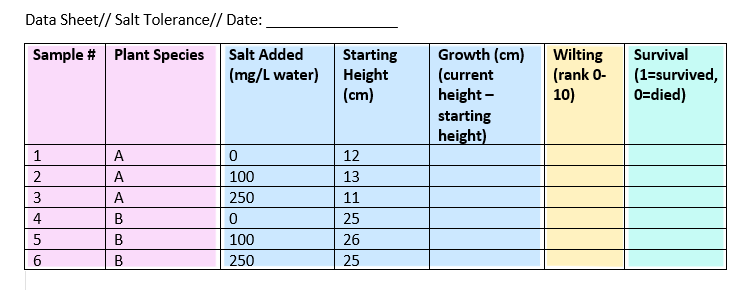

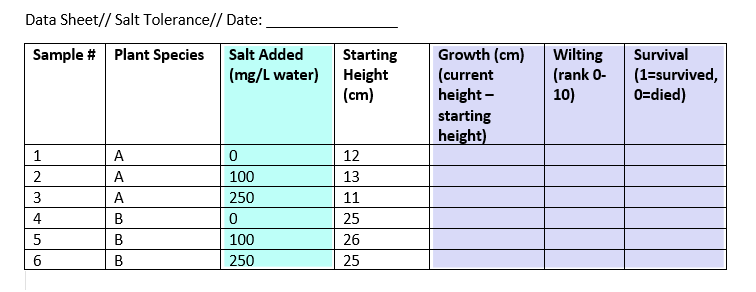

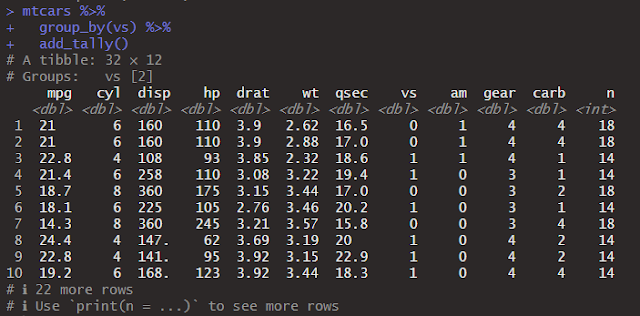

Example data sheet

To keep track of your salt-tolerance experiment, you make a data sheet where you record information about the variables in the experiment, like salt addition and plant health.

To gather information about plant responses over time, you can fill out the same data sheet every few days until the end of the experiment. This example sheet is colour-coded according to the type of variable: nominal, continuous, ordinal, and binary.


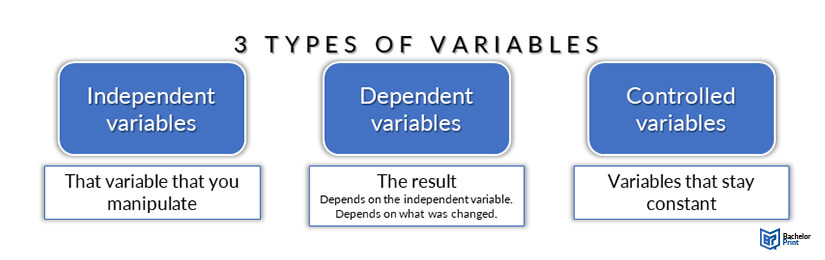

Parts of the experiment: independent vs dependent variables

Experiments are usually designed to find out what effect one variable has on another – in our example, the effect of salt addition on plant growth.

You manipulate the independent variable (the one you think might be the cause) and then measure the dependent variable (the one you think might be the effect) to find out what this effect might be.

You will probably also have variables that you hold constant (control variables) in order to focus on your experimental treatment.

In this experiment, we have one independent and three dependent variables.

The other variables in the sheet can’t be classified as independent or dependent, but they do contain data that you will need in order to interpret your dependent and independent variables.

What about correlational research?

When you do correlational research, the terms ‘dependent’ and ‘independent’ don’t apply, because you are not trying to establish a cause-and-effect relationship.

However, there might be cases where one variable clearly precedes the other (for example, rainfall leads to mud, rather than the other way around). In these cases, you may call the preceding variable (i.e., the rainfall) the predictor variable and the following variable (i.e., the mud) the outcome variable.

Other common types of variables

Once you have defined your independent and dependent variables and determined whether they are categorical or quantitative, you will be able to choose the correct statistical test.
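As a rough illustration of how the variable types narrow the choice, this Python sketch maps the types of the independent and dependent variables to a commonly used family of tests. The function name `suggest_test` and the mapping are simplifying assumptions for teaching purposes; in practice the choice also depends on the number of groups, the sample size, and whether assumptions such as normality hold.

```python
def suggest_test(independent: str, dependent: str, groups: int = 2) -> str:
    """Suggest a commonly used family of tests from variable types (simplified).

    independent, dependent: "categorical" or "quantitative".
    groups: number of levels of a categorical independent variable.
    """
    kinds = {"categorical", "quantitative"}
    if independent not in kinds or dependent not in kinds:
        raise ValueError("types must be 'categorical' or 'quantitative'")

    if independent == "categorical" and dependent == "quantitative":
        # Compare the mean of the dependent variable across groups.
        return "t-test" if groups == 2 else "ANOVA"
    if independent == "quantitative" and dependent == "quantitative":
        # Describe how one quantity changes with another.
        return "correlation or linear regression"
    if independent == "categorical" and dependent == "categorical":
        # Compare frequencies across combinations of categories.
        return "chi-square test of independence"
    # Quantitative predictor, categorical (e.g. binary) outcome.
    return "logistic regression"


# Salt added (quantitative) vs. plant height (quantitative):
print(suggest_test("quantitative", "quantitative"))
# Three fertilizer types (categorical) vs. crop biomass (quantitative):
print(suggest_test("categorical", "quantitative", groups=3))  # ANOVA
```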

But there are many other ways of describing variables that help with interpreting your results. Some useful types of variable are listed below.

Frequently asked questions about variables

A confounding variable is closely related to both the independent and dependent variables in a study. An independent variable represents the supposed cause, while the dependent variable is the supposed effect. A confounding variable is a third variable that influences both the independent and dependent variables.

Failing to account for confounding variables can cause you to wrongly estimate the relationship between your independent and dependent variables.
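A small simulation, written in Python with NumPy, shows how this can happen. It assumes a hypothetical confounder `z` that drives both `x` and `y`, while `x` has no direct effect on `y`. The raw correlation between `x` and `y` is clearly positive, but once the part of each variable explained by `z` is removed (a partial correlation), it falls to roughly zero. The coefficients are arbitrary choices for illustration.

```python
import numpy as np


def residuals(v, control):
    """Part of v left over after a least-squares fit on `control` (with intercept)."""
    design = np.column_stack([np.ones_like(control), control])
    coef, *_ = np.linalg.lstsq(design, v, rcond=None)
    return v - design @ coef


rng = np.random.default_rng(0)
n = 10_000
z = rng.normal(size=n)            # confounder
x = 0.8 * z + rng.normal(size=n)  # "independent" variable, driven by z
y = 0.8 * z + rng.normal(size=n)  # "dependent" variable, driven by z only (no x term)

raw_r = np.corrcoef(x, y)[0, 1]
partial_r = np.corrcoef(residuals(x, z), residuals(y, z))[0, 1]
print(f"raw r = {raw_r:.2f}, partial r controlling for z = {partial_r:.2f}")
```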

Discrete and continuous variables are two types of quantitative variables:

- Discrete variables represent counts (e.g., the number of objects in a collection).

- Continuous variables represent measurable amounts (e.g., water volume or weight).

You can think of independent and dependent variables in terms of cause and effect: an independent variable is the variable you think is the cause , while a dependent variable is the effect .

In an experiment, you manipulate the independent variable and measure the outcome in the dependent variable. For example, in an experiment about the effect of nutrients on crop growth:

- The independent variable is the amount of nutrients added to the crop field.

- The dependent variable is the biomass of the crops at harvest time.

Defining your variables, and deciding how you will manipulate and measure them, is an important part of experimental design.

Cite this Scribbr article


Bevans, R. (2022, November 28). Types of Variables in Research | Definitions & Examples. Scribbr. Retrieved 14 May 2024, from https://www.scribbr.co.uk/research-methods/variables-types/


Rebecca Bevans


Variables: Definition, Examples, Types of Variables in Research

What is a Variable?

Within the context of a research investigation, concepts are generally referred to as variables. A variable is, as the name implies, something that varies.

Examples of Variables

These are all examples of variables because each of these properties varies or differs from one individual to another.

- income and expenses,

- family size,

- country of birth,

- capital expenditure,

- class grades,

- blood pressure readings,

- preoperative anxiety levels,

- eye color, and

- vehicle type.

What is a Variable in Research?

A variable is any property, characteristic, number, or quantity that can increase or decrease over time or take on different values in different situations (as opposed to constants, such as π, that do not vary).

When conducting research, researchers often manipulate variables. For example, an experimenter might compare the effectiveness of four types of fertilizers.

In this case, the variable is the ‘type of fertilizer.’ A social scientist may examine the possible effect of early marriage on divorce. Here, early marriage is the variable.

A business researcher may find it useful to include the dividend in determining share prices. Here, the dividend is the variable.

Effectiveness, divorce, and share prices are also variables, because they vary in response to changes in fertilizer type, early marriage, and dividends.

11 Types of Variables in Research

Qualitative Variables

An important distinction is between qualitative and quantitative variables.

Qualitative variables are those that express a qualitative attribute, such as hair color, religion, race, gender, social status, method of payment, and so on. The values of a qualitative variable do not imply a meaningful numerical ordering.

The values of the variable ‘religion’ (Muslim, Hindu, etc.) differ qualitatively; no ordering of religions is implied. Qualitative variables are sometimes referred to as categorical variables.

For example, the variable sex has two distinct categories: ‘male’ and ‘female.’ Since the values of this variable are expressed in categories, we refer to this as a categorical variable.

Similarly, the place of residence may be categorized as urban and rural and thus is a categorical variable.

Categorical variables can be further described as nominal or ordinal.

Ordinal variables can be logically ordered or ranked higher or lower than one another, but they do not necessarily establish a numeric difference between categories. Examples include examination grades (A+, A, B+, etc.) and clothing sizes (extra large, large, medium, small).

Nominal variables are those that can neither be ranked nor logically ordered, such as religion, sex, etc.
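One way to see the difference in practice is how the two kinds of categories behave in code. In this Python sketch, a nominal variable is modelled as a plain `Enum`, which refuses ordering comparisons, and an ordinal variable as an `IntEnum`, whose integer codes express rank only. The class names and categories are illustrative assumptions.

```python
from enum import Enum, IntEnum


class Religion(Enum):
    """Nominal variable: the categories have no meaningful order."""
    MUSLIM = "Muslim"
    HINDU = "Hindu"
    CHRISTIAN = "Christian"


class ClothingSize(IntEnum):
    """Ordinal variable: categories can be ranked, but the codes are only ranks.

    IntEnum still allows arithmetic (LARGE - SMALL == 2), yet that difference
    is not a real amount, so it should not be interpreted quantitatively.
    """
    SMALL = 1
    MEDIUM = 2
    LARGE = 3
    EXTRA_LARGE = 4


# Ranking ordinal categories is meaningful.
print(ClothingSize.SMALL < ClothingSize.LARGE)  # True
print(sorted([ClothingSize.LARGE, ClothingSize.SMALL, ClothingSize.MEDIUM]))

# Plain Enum members do not support ordering, which matches a nominal variable.
try:
    Religion.MUSLIM < Religion.HINDU
    nominal_can_be_ranked = True
except TypeError:
    nominal_can_be_ranked = False
print(nominal_can_be_ranked)  # False
```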

A qualitative variable is a characteristic that cannot be measured numerically, but individuals can be categorized according to whether or not they possess it.

Quantitative Variables

Quantitative variables, also called numeric variables, are those variables that are measured in terms of numbers. A simple example of a quantitative variable is a person’s age.

Age can take on different values because a person can be 20 years old, 35 years old, and so on. Likewise, family size is a quantitative variable because a family might consist of one, two, or three members, and so on.

Each of these properties or characteristics varies or differs from one individual to another. Because these variables are expressed in numbers, we call them quantitative or sometimes numeric variables.

A quantitative variable is one for which the resulting observations are numeric and thus possess a natural ordering or ranking.

Discrete and Continuous Variables

Quantitative variables are further divided into two types: discrete and continuous.

Variables such as the number of children in a household or the number of defective items in a box are discrete variables, since they can take only separate, distinct values on the scale.

For example, a household could have three or five children, but not 4.52 children.

Other variables, such as ‘time required to complete an MCQ test’ and ‘waiting time in a queue in front of a bank counter,’ are continuous variables.

The time required in the above examples is a continuous variable, which could be, for example, 1.65 minutes or 1.6584795214 minutes.

Of course, the practicalities of measurement mean that most recorded values are not truly continuous, because every instrument measures to a limited precision.
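The distinction also shows up in how values are typically stored. In this Python sketch, household counts are whole numbers, while waiting times are drawn from a continuous interval and then rounded to two decimal places to mimic an instrument with limited precision. The values and the precision are hypothetical.

```python
import random

random.seed(1)

# Discrete variable: counts can only be whole numbers.
children_per_household = [0, 2, 3, 1, 5]

# Continuous variable: the actual waiting time (minutes) can be any value in an interval...
actual_waits = [random.uniform(0.5, 10.0) for _ in range(5)]

# ...but a clock that reads to 0.01 minutes can only record finitely many values.
recorded_waits = [round(t, 2) for t in actual_waits]

print(children_per_household)
for actual, recorded in zip(actual_waits, recorded_waits):
    print(f"actual {actual:.6f} min -> recorded {recorded:.2f} min")
```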

Discrete Variable

A discrete variable is restricted to certain values, usually (but not necessarily) whole numbers, such as family size and the number of defective items in a box. Discrete variables often result from enumeration or counting.

A few more examples are:

- The number of accidents in twelve months.

- The number of mobile cards sold in a store within seven days.

- The number of patients admitted to a hospital over a specified period.

- The number of new branches of a bank opened annually during 2001–2007.

- The number of weekly visits made by health personnel in the last 12 months.

Continuous Variable

A continuous variable may take on an infinite number of intermediate values along a specified interval. Examples are:

- The sugar level in the human body

- Blood pressure readings

- Temperature

- Height or weight of the human body

- Rate of bank interest

- Internal rate of return (IRR)

- Earning ratio (ER)

- Current ratio (CR)

No matter how close two observations might be, if the instrument of measurement is precise enough, a third observation can be found, falling between the first two.

A continuous variable generally results from measurement and can assume countless values in the specified range.

Dependent and Independent Variables

In many research settings, two specific classes of variables need to be distinguished from one another: independent variables and dependent variables.

Many research studies aim to reveal and understand the causes of underlying phenomena or problems with the ultimate goal of establishing a causal relationship between them.

Look at the following statements:

- Low intake of food causes underweight.

- Smoking enhances the risk of lung cancer.

- Level of education influences job satisfaction.

- Advertisement helps in sales promotion.

- The drug causes improvement of health problems.

- Nursing intervention causes more rapid recovery.

- Previous job experiences determine the initial salary.

- Blueberries slow down aging.

- The dividend per share determines share prices.

Each of the above statements involves an independent variable and a dependent variable. In the first example, ‘low intake of food’ is believed to have caused the ‘problem of being underweight.’

It is thus the so-called independent variable. Underweight is the dependent variable because we believe this ‘problem’ (the problem of being underweight) has been caused by ‘the low intake of food’ (the factor).

Similarly, smoking, level of education, advertisement, and dividend per share are independent variables, while lung cancer, job satisfaction, sales, and share prices are the corresponding dependent variables.

In general, an independent variable is manipulated by the experimenter or researcher, and its effects on the dependent variable are measured.

Independent Variable

The variable that is used to describe or measure the factor that is assumed to cause or at least to influence the problem or outcome is called an independent variable.

The definition implies that the experimenter uses the independent variable to describe or explain its influence or effect on the dependent variable.

Variability in the dependent variable is presumed to depend on variability in the independent variable.

Depending on the context, an independent variable is sometimes called a predictor variable, regressor, controlled variable, manipulated variable, explanatory variable, exposure variable (as used in reliability theory), risk factor (as used in medical statistics), feature (as used in machine learning and pattern recognition) or input variable.

The explanatory variable is preferred by some authors over the independent variable when the quantities treated as independent variables may not be statistically independent or independently manipulable by the researcher.

If the independent variable is referred to as an explanatory variable, then the term response variable is preferred by some authors for the dependent variable.

Dependent Variable

The variable used to describe or measure the problem or outcome under study is called a dependent variable.

In a causal relationship, the cause is the independent variable, and the effect is the dependent variable. If we hypothesize that smoking causes lung cancer, ‘smoking’ is the independent variable and cancer the dependent variable.

A business researcher may find it useful to include the dividend in determining the share prices. Here dividend is the independent variable, while the share price is the dependent variable.

The dependent variable is usually the variable the researcher is interested in understanding, explaining, or predicting.

In lung cancer research, the carcinoma is of real interest to the researcher, not smoking behavior per se. The independent variable is the presumed cause of, antecedent to, or influence on the dependent variable.

Depending on the context, a dependent variable is sometimes called a response variable, regressand, predicted variable, measured variable, explained variable, experimental variable, responding variable, outcome variable, output variable, or label.

An explained variable is preferred by some authors over the dependent variable when the quantities treated as dependent variables may not be statistically dependent.

If the dependent variable is referred to as an explained variable, then the term predictor variable is preferred by some authors for the independent variable.

Levels of an Independent Variable

If an experimenter compares an experimental treatment with a control treatment, then the independent variable (a type of treatment) has two levels: experimental and control.

If an experiment were to compare five types of diets, then the independent variable (type of diet) would have five levels.

In general, the number of levels of an independent variable is the number of experimental conditions.
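A short Python sketch of a diet experiment makes the link between levels and conditions concrete. The diet labels and weight-change values are hypothetical placeholders: each distinct value of the independent variable defines one experimental condition, and the dependent variable is summarized within each condition.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical records: (diet, weight change in kg). Diet is the independent variable.
records = [
    ("control", -0.2), ("low_carb", -1.5), ("low_fat", -1.1),
    ("control", 0.1), ("low_carb", -1.9), ("low_fat", -0.8),
]

# Each distinct diet is one level, i.e. one experimental condition.
levels = sorted({diet for diet, _ in records})
print(len(levels), levels)  # 3 ['control', 'low_carb', 'low_fat']

# Group the dependent variable (weight change) by level.
by_level = defaultdict(list)
for diet, change in records:
    by_level[diet].append(change)

for diet in levels:
    print(diet, round(mean(by_level[diet]), 2))
```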

Background Variable

In almost every study, we collect information such as age, sex, educational attainment, socioeconomic status, marital status, religion, place of birth, and the like. These variables are referred to as background variables.

These variables are often related to many independent variables, so they indirectly influence the problem. Hence they are called background variables.

The background variables should be measured if they are important to the study. However, we should try to keep the number of background variables as small as possible in the interest of economy.

Moderating Variable

In any statement of relationships of variables, it is normally hypothesized that in some way, the independent variable ‘causes’ the dependent variable to occur.

In simple relationships, all other variables are extraneous and are ignored.

In actual study situations, such a simple one-to-one relationship needs to be revised to take other variables into account to explain the relationship better.

This emphasizes the need to consider a second independent variable that is expected to have a significant contributory or contingent effect on the originally stated dependent-independent relationship.

Such a variable is termed a moderating variable.

Suppose you are studying the impact of field-based and classroom-based training on the work performance of health and family planning workers. You consider the type of training as the independent variable.

If you instead focus on the relationship between the age of the trainees and work performance, you might use ‘type of training’ as a moderating variable, since the age-performance relationship may differ between the two types of training.
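A common way to look for moderation is to check whether the slope of the dependent variable on the independent variable differs across levels of the moderator. This Python (NumPy) sketch simulates hypothetical trainee data in which the effect of age on a performance score is assumed to be stronger after field-based training than after classroom-based training. All numbers are invented, and fitting a separate line per group is only one approach; adding an interaction term to a single regression is another.

```python
import numpy as np

rng = np.random.default_rng(42)
n = 500

age = rng.uniform(20, 60, size=n)   # independent variable (years)
field = rng.integers(0, 2, size=n)  # moderator: 1 = field-based, 0 = classroom-based

# Assumed data-generating process: the age slope depends on the type of training.
true_slope = np.where(field == 1, 0.8, 0.2)
performance = 50 + true_slope * age + rng.normal(scale=5, size=n)

slopes = {}
for label, mask in [("classroom", field == 0), ("field", field == 1)]:
    # np.polyfit with deg=1 returns [slope, intercept].
    fitted_slope, _intercept = np.polyfit(age[mask], performance[mask], deg=1)
    slopes[label] = fitted_slope
    print(f"{label}: about {fitted_slope:.2f} points per year of age")
```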

Extraneous Variable

Most studies are concerned with identifying a single independent variable and measuring its effect on the dependent variable.

But still, several variables might conceivably affect our hypothesized independent-dependent variable relationship, thereby distorting the study. These variables are referred to as extraneous variables.

Extraneous variables are not necessarily part of the study. They exert a confounding effect on the dependent-independent relationship and thus need to be eliminated or controlled for.

An example may illustrate the concept of extraneous variables. Suppose we are interested in examining the relationship between the work status of mothers and breastfeeding duration.

In this instance, it is not unreasonable to presume that mothers’ level of education, which influences work status, might also affect breastfeeding duration.

Education is treated here as an extraneous variable. In any attempt to eliminate or control the effect of this variable, we may consider this variable a confounding variable.

An appropriate way of dealing with confounding variables is to follow the stratification procedure, which involves a separate analysis at each level of the confounding variable.

For this purpose, one can construct two cross-tables: one for illiterate mothers and the other for literate mothers.

Suppose we find a similar association between work status and duration of breastfeeding in both the groups of mothers. In that case, we conclude that mothers’ educational level is not a confounding variable.
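The stratification procedure can be sketched in Python as building one cross-table per level of the suspected confounder and comparing the association within each table. The records are a small hypothetical set, and the association is summarized as a simple difference in proportions; a real analysis would usually test each table formally (for example with a chi-square test) or pool the strata with a method such as Mantel-Haenszel.

```python
from collections import Counter

# Hypothetical records: (education, work_status, long_breastfeeding)
records = [
    ("illiterate", "working", True), ("illiterate", "working", False),
    ("illiterate", "not working", True), ("illiterate", "not working", True),
    ("literate", "working", False), ("literate", "working", True),
    ("literate", "not working", True), ("literate", "not working", True),
]


def crosstab(rows):
    """Count (work_status, long_breastfeeding) pairs within one stratum."""
    return Counter((work, long_bf) for _, work, long_bf in rows)


def share_long(table, work):
    """Proportion of mothers with long breastfeeding for one work status."""
    total = table[(work, True)] + table[(work, False)]
    return table[(work, True)] / total if total else float("nan")


differences = {}
for stratum in ("illiterate", "literate"):
    table = crosstab([r for r in records if r[0] == stratum])
    differences[stratum] = share_long(table, "not working") - share_long(table, "working")
    print(stratum, dict(table), f"difference = {differences[stratum]:.2f}")
```

In this toy data the difference is the same in both strata, so by the reasoning above education would not be treated as a confounder.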

Intervening Variable

Often an apparent relationship between two variables is caused by a third variable.

For example, variables X and Y may be highly correlated, but only because X causes the third variable, Z, which in turn causes Y. In this case, Z is the intervening variable.

An intervening variable theoretically affects the observed phenomena but cannot be seen, measured, or manipulated directly; its effects can only be inferred from the effects of the independent and moderating variables on the observed phenomena.

We might view motivation or counseling as the intervening variable in the work-status and breastfeeding relationship.

Motives, job satisfaction, responsibility, behavior, and justice are other examples of intervening variables.

Suppressor Variable

In many cases, we have good reasons to believe that the variables of interest have a relationship, but our data fail to establish any such relationship. Some hidden factors may suppress the true relationship between the two original variables.

Such a factor is referred to as a suppressor variable because it suppresses the relationship between the other two variables.

The suppressor variable suppresses the relationship by being positively correlated with one of the variables in the relationship and negatively correlated with the other. The true relationship between the two variables will reappear when the suppressor variable is controlled for.

For example, low age may pull education up but income down. In contrast, high age may pull income up but education down, effectively canceling the relationship between education and income unless age is controlled for.
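The age, education, and income example can be simulated in Python with NumPy. The sketch assumes standardized, hypothetical variables in which education genuinely raises income, while age lowers education and raises income. With these arbitrary coefficients the two paths cancel, so the raw correlation between education and income is close to zero; controlling for age (via a partial correlation) reveals a clear positive relationship.

```python
import numpy as np


def residuals(v, control):
    """Part of v left over after a least-squares fit on `control` (with intercept)."""
    design = np.column_stack([np.ones_like(control), control])
    coef, *_ = np.linalg.lstsq(design, v, rcond=None)
    return v - design @ coef


rng = np.random.default_rng(7)
n = 20_000
age = rng.normal(size=n)                           # suppressor (standardized age)
education = -age + rng.normal(size=n)              # younger people: more education
income = education + 2 * age + rng.normal(size=n)  # education and age both raise income

raw_r = np.corrcoef(education, income)[0, 1]
partial_r = np.corrcoef(residuals(education, age), residuals(income, age))[0, 1]
print(f"raw r = {raw_r:.2f}, partial r controlling for age = {partial_r:.2f}")
```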

4 Relationships Between Variables

In dealing with relationships between variables in research, we observe a variety of dimensions in these relationships.

The four dimensions discussed here are positive and negative relationships, symmetrical relationships, causal relationships, and linear and non-linear relationships.

Positive and Negative Relationship

Two or more variables may have a positive, negative, or no relationship. In the case of two variables, a positive relationship is one in which both variables vary in the same direction.

However, they are said to have a negative relationship when they vary in opposite directions.

When a change in one variable is not accompanied by any change in the other, we say that the variables in question are unrelated.

For example, if wage rates increase with job experience, the relationship between job experience and the wage rate is positive.

If an increase in an individual’s education level decreases their desire for additional children, the relationship is negative or inverse.

If the level of education has no bearing on this desire, we say that the variables ‘desire for additional children’ and ‘education’ are unrelated.

Strength of Relationship

Once it has been established that two variables are related, we want to ascertain how strongly they are related.

A common statistic for measuring the strength of a relationship is the correlation coefficient, symbolized by r. It is a unit-free measure lying between -1 and +1 inclusive, with zero signifying no linear relationship.

As far as predicting one variable from knowledge of the other is concerned, r = +1 means that one variable can be predicted from the other with 100% accuracy along a positive linear relationship, and r = -1 means 100% accuracy along a negative linear relationship.
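For reference, r can be computed directly from its definition: the covariance of the two variables divided by the product of their standard deviations. This plain-Python sketch (the function name `pearson_r` is our own) shows the two extreme values and also why r = 0 means only that there is no linear relationship: a perfect U-shaped relationship can still give r = 0.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient for two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("r is undefined when a variable does not vary")
    return sxy / sqrt(sxx * syy)


experience = [1, 2, 3, 4, 5]
print(pearson_r(experience, [10, 12, 14, 16, 18]))    # 1.0  (perfect positive linear)
print(pearson_r(experience, [18, 16, 14, 12, 10]))    # -1.0 (perfect negative linear)
print(pearson_r([-2, -1, 0, 1, 2], [4, 1, 0, 1, 4]))  # 0.0  (y = x**2: related, but not linearly)
```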

Symmetrical Relationship

So far, we have discussed only symmetrical relationships, in which a change in either variable is accompanied by a change in the other.

This relationship does not indicate which variable is the independent variable and which variable is the dependent variable.

In other words, you can label either of the variables as the independent variable.

Such a relationship is a symmetrical relationship. In an asymmetrical relationship, a change in variable X (say) is accompanied by a change in variable Y, but not vice versa.

The amount of rainfall, for example, may increase crop productivity, but productivity will not affect rainfall. This is an asymmetrical relationship.

Similarly, the relationship between smoking and lung cancer would be asymmetrical because smoking could cause cancer, but lung cancer could not cause smoking.

Causal Relationship

Establishing that two variables are related does not automatically mean that changes in one variable cause changes in the other.

It is, however, very difficult to establish the existence of causality between variables. While no one can ever be certain that variable A causes variable B, one can gather evidence that increases one’s belief that A leads to B.

In an attempt to do so, we seek the following evidence:

- Is there a relationship between A and B? When such evidence exists, it indicates a possible causal link between the variables.

- Is the relationship asymmetrical, so that a change in A results in a change in B but not vice versa? In other words, does A occur before B? If we find that B occurs before A, we can have little confidence that A causes B.

- Does a change in A result in a change in B regardless of the actions of other factors? Or, is it possible to eliminate other possible causes of B? Can one determine that C, D, and E (say) do not co-vary with B in a way that suggests possible causal connections?

Linear and Non-linear Relationship

A linear relationship is a straight-line relationship between two variables, in which one variable changes by a constant amount for each unit change in the other, regardless of whether the values are low, high, or intermediate.

This is in contrast with the non-linear (or curvilinear) relationships, where the rate at which one variable changes in value may differ for different values of the second variable.

Whether one variable is linearly related to another can be checked simply by plotting the Y values against the X values.

If the values, when plotted, appear to lie on a straight line, the existence of a linear relationship between X and Y is suggested.

Height and weight almost always have an approximately linear relationship, while age and fertility rates have a non-linear relationship.
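Besides inspecting a scatter plot, one simple numerical check is to fit a straight line and see whether adding a curvature term substantially reduces the error. This NumPy sketch uses hypothetical data: one series generated from a straight line plus noise and one from a curve that rises and then falls. Comparing residual sums of squares this way is an informal heuristic, not a formal test of linearity.

```python
import numpy as np


def sse(x, y, degree):
    """Sum of squared residuals of a least-squares polynomial fit."""
    coeffs = np.polyfit(x, y, deg=degree)
    return float(np.sum((y - np.polyval(coeffs, x)) ** 2))


rng = np.random.default_rng(3)
x = np.linspace(15, 45, 60)
noise = rng.normal(scale=1.0, size=x.size)

series = {
    "linear": 2.0 * x + 5 + noise,                 # straight-line relationship
    "curved": -0.05 * (x - 30) ** 2 + 20 + noise,  # rises, peaks at x = 30, then falls
}

ratios = {}
for name, y in series.items():
    # Close to 1: the curvature term adds little. Much smaller than 1: the data bend.
    ratios[name] = sse(x, y, 2) / sse(x, y, 1)
    print(f"{name}: SSE(quadratic) / SSE(straight line) = {ratios[name]:.2f}")
```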

Frequently Asked Questions about Variables

What is a variable within the context of a research investigation?

A variable, within the context of a research investigation, refers to concepts that vary. It can be any property, characteristic, number, or quantity that can increase or decrease over time or take on different values.

How is a variable used in research?

In research, a variable is any property or characteristic that can take on different values. Experiments often manipulate variables to compare outcomes. For instance, an experimenter might compare the effectiveness of different types of fertilizers, where the variable is the ‘type of fertilizers.’

What distinguishes qualitative variables from quantitative variables?

Qualitative variables express a qualitative attribute, such as hair color or religion, and do not imply a meaningful numerical ordering. Quantitative variables, on the other hand, are measured in terms of numbers, like a person’s age or family size.

How do discrete and continuous variables differ in terms of quantitative variables?

Discrete variables are restricted to certain values, often whole numbers, resulting from enumeration or counting, like the number of children in a household. Continuous variables can take on an infinite number of intermediate values along a specified interval, such as the time required to complete a test.

What are the roles of independent and dependent variables in research?

In research, the independent variable is manipulated by the researcher to observe its effects on the dependent variable. The independent variable is the presumed cause or influence, while the dependent variable is the outcome or effect that is being measured.

What is a background variable in a study?

Background variables are information collected in a study, such as age, sex, or educational attainment. These variables are often related to many independent variables and indirectly influence the main problem or outcome, hence they are termed background variables.

How does a suppressor variable affect the relationship between two other variables?

A suppressor variable can suppress or hide the true relationship between two other variables. It does this by being positively correlated with one of the variables and negatively correlated with the other. When the suppressor variable is controlled for, the true relationship between the two original variables can be observed.

Variables in Research | Types, Definition & Examples

Introduction


Variables are fundamental components of research that allow for the measurement and analysis of data. They can be defined as characteristics or properties that can take on different values. In research design, understanding the types of variables and their roles is crucial for developing hypotheses, designing methods, and interpreting results.

This article outlines the types of variables in research, including their definitions and examples, to provide a clear understanding of their use and significance in research studies. By categorizing variables into distinct groups based on their roles in research, their types of data, and their relationships with other variables, researchers can more effectively structure their studies and achieve more accurate conclusions.

A variable represents any characteristic, number, or quantity that can be measured or quantified. The term encompasses anything that can vary or change, ranging from simple concepts like age and height to more complex ones like satisfaction levels or economic status. Variables are essential in research as they are the foundational elements that researchers manipulate, measure, or control to gain insights into relationships, causes, and effects within their studies. They enable the framing of research questions, the formulation of hypotheses, and the interpretation of results.

Variables can be categorized based on their role in the study (such as independent and dependent variables), the type of data they represent (quantitative or categorical), and their relationship to other variables (like confounding or control variables). Understanding what constitutes a variable and the various variable types available is a critical step in designing robust and meaningful research.

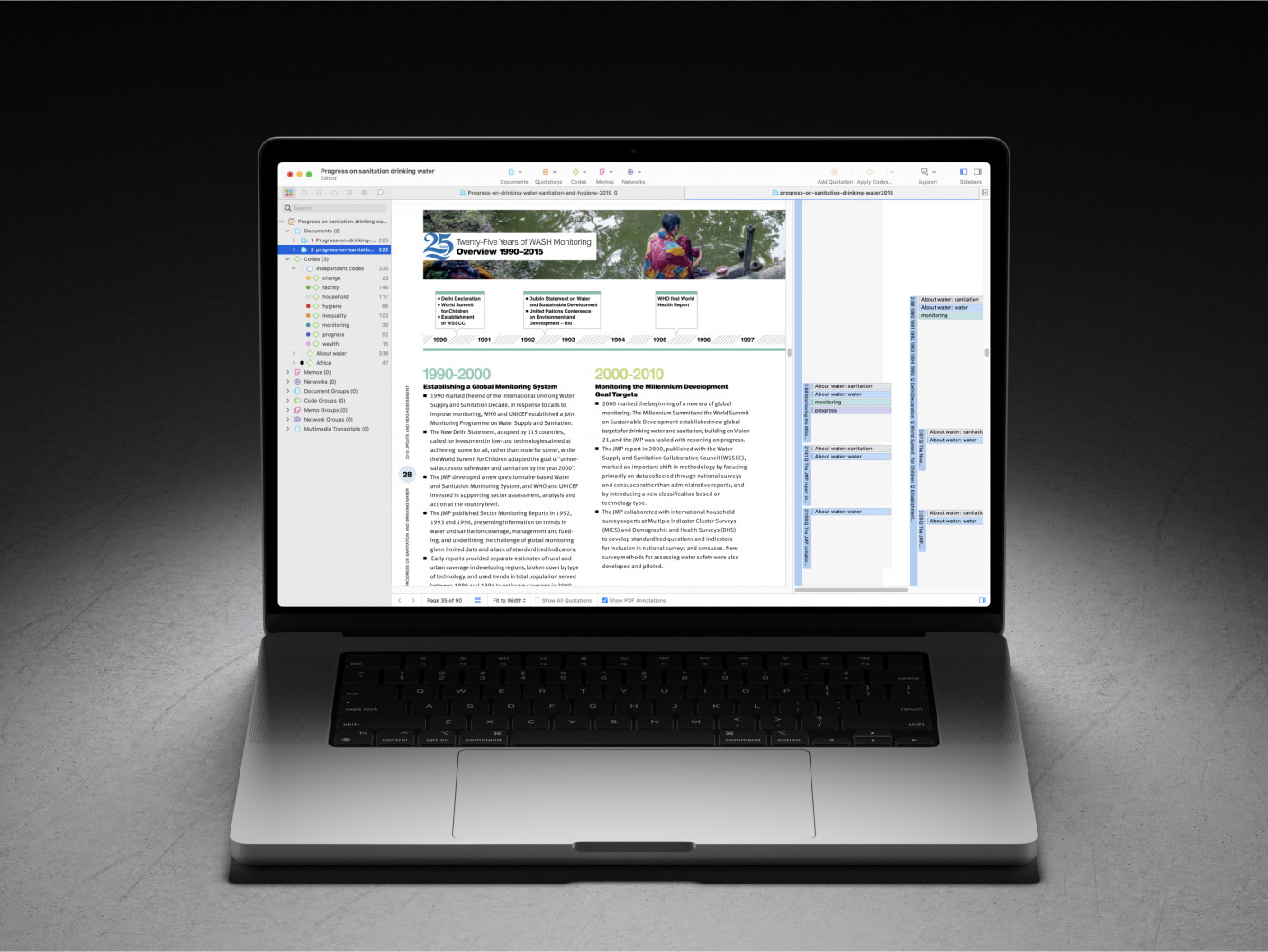


Variables are crucial components in research, serving as the foundation for data collection, analysis, and interpretation. They are attributes or characteristics that can vary among subjects or over time, and understanding their types is essential for any study. Variables can be broadly classified into five main types, each with its distinct characteristics and roles within research.

This classification helps researchers in designing their studies, choosing appropriate measurement techniques, and analyzing their results accurately. The five types of variables include independent variables, dependent variables, categorical variables, continuous variables, and confounding variables. These categories not only facilitate a clearer understanding of the data but also guide the formulation of hypotheses and research methodologies.

Independent variables

Independent variables are foundational to the structure of research, serving as the factors or conditions that researchers manipulate or vary to observe their effects on dependent variables. These variables are considered "independent" because their variation does not depend on other variables within the study. Instead, they are the cause or stimulus that directly influences the outcomes being measured. For example, in an experiment to assess the effectiveness of a new teaching method on student performance, the teaching method applied (traditional vs. innovative) would be the independent variable.

Selecting the independent variable is a critical step in research design, because it follows directly from the study's aim of establishing causality or association. Researchers must clearly define and control these variables so that observed changes in the dependent variable can be attributed to variations in the independent variable, which strengthens the validity of the results. In experimental research, the independent variable is what differentiates the control group from the experimental group, making a meaningful comparison possible.

Dependent variables

Dependent variables are the outcomes or effects that researchers aim to explore and understand in their studies. These variables are called "dependent" because their values depend on the changes or variations of the independent variables.

Essentially, they are the responses or results that are measured to assess the impact of the independent variable's manipulation. For instance, in a study investigating the effect of exercise on weight loss, the amount of weight lost would be considered the dependent variable, as it depends on the exercise regimen (the independent variable).

The identification and measurement of the dependent variable are crucial for testing the hypothesis and drawing conclusions from the research. It allows researchers to quantify the effect of the independent variable, providing evidence for causal relationships or associations. In experimental settings, the dependent variable is what is being tested and measured across different groups or conditions, enabling researchers to assess the efficacy or impact of the independent variable's variation.

To ensure accuracy and reliability, the dependent variable must be defined clearly and measured consistently across all participants or observations. This consistency helps in reducing measurement errors and increases the validity of the research findings. By carefully analyzing the dependent variables, researchers can derive meaningful insights from their studies, contributing to the broader knowledge in their field.

Categorical variables

Categorical variables, also known as qualitative variables, represent types or categories that are used to group observations. These variables divide data into distinct groups or categories that lack a numerical value but hold significant meaning in research. Examples of categorical variables include gender (male, female, other), type of vehicle (car, truck, motorcycle), or marital status (single, married, divorced). These categories help researchers organize data into groups for comparison and analysis.

Categorical variables can be further classified into two subtypes: nominal and ordinal. Nominal variables are categories without any inherent order or ranking among them, such as blood type or ethnicity. Ordinal variables, on the other hand, have a natural ranking or order among the categories, like levels of satisfaction (high, medium, low) or education level (high school, bachelor's, master's, doctorate).

Understanding and identifying categorical variables is crucial in research as it influences the choice of statistical analysis methods. Since these variables represent categories without numerical significance, researchers employ specific statistical tests designed for a nominal or ordinal variable to draw meaningful conclusions. Properly classifying and analyzing categorical variables allow for the exploration of relationships between different groups within the study, shedding light on patterns and trends that might not be evident with numerical data alone.
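As a rough illustration of why the nominal/ordinal distinction matters for analysis, the Python sketch below uses invented responses (the variable names and data are hypothetical). For a nominal variable such as blood type, counting frequencies and reporting the mode is meaningful; for an ordinal variable such as satisfaction, the stated order of the levels also makes a median category meaningful, even though the positions say nothing about how far apart the levels are.

```python
from collections import Counter

# Hypothetical survey responses, invented for illustration (not real data).
blood_types = ["A", "O", "B", "O", "AB", "O", "A"]         # nominal: no inherent order
satisfaction = ["low", "high", "medium", "high", "medium"]  # ordinal: ordered levels

# Nominal variable: frequencies and the mode are meaningful summaries.
blood_counts = Counter(blood_types)
blood_mode = blood_counts.most_common(1)[0][0]

# Ordinal variable: the order must be stated explicitly; positions encode
# rank only, not the distance between levels.
SATISFACTION_ORDER = ["low", "medium", "high"]

def ordinal_median(values, order):
    """Median category of an ordinal variable (lower median when n is even)."""
    ranks = sorted(order.index(v) for v in values)
    return order[ranks[(len(ranks) - 1) // 2]]

satisfaction_median = ordinal_median(satisfaction, SATISFACTION_ORDER)

print(blood_counts)         # Counter({'O': 3, 'A': 2, 'B': 1, 'AB': 1})
print(blood_mode)           # O
print(satisfaction_median)  # medium
```

Computing a mean of blood types would make no sense, which is exactly the kind of constraint the variable type places on the choice of analysis.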

Continuous variables

Continuous variables are quantitative variables that can take an infinite number of values within a given range. These variables are measured along a continuum and can represent very precise measurements. Examples of continuous variables include height, weight, temperature, and time. Because they can assume any value within a range, continuous variables allow for detailed analysis and a high degree of accuracy in research findings.

The ability to measure continuous variables at very fine scales makes them invaluable for many types of research, particularly in the natural and social sciences. For instance, in a study examining the effect of temperature on plant growth, temperature would be considered a continuous variable since it can vary across a wide spectrum and be measured to several decimal places.

When dealing with continuous variables, researchers typically use statistical methods designed for numeric data measured on a continuum, such as correlation and the various forms of regression analysis, which are suited to modeling nuanced relationships between variables. The precision of continuous variables improves the researcher's ability to detect patterns and trends within the data; combined with an appropriate study design, such as a controlled experiment, these methods can also support conclusions about causal relationships.

Confounding variables

Confounding variables are those that can create a false association between the independent and dependent variables (or distort a real one), potentially leading to incorrect conclusions about the relationship being studied. A confounder is an extraneous variable that influences both the supposed cause and the effect; if it is not accounted for in the study design or analysis, it can produce a misleading correlation.

Identifying and controlling for a confounding variable is crucial in research to ensure the validity of the findings. This can be achieved through various methods, including randomization, stratification, and statistical control. Randomization helps to evenly distribute confounding variables across study groups, reducing their potential impact. Stratification involves analyzing the data within strata or layers that share common characteristics of the confounder. Statistical control allows researchers to adjust for the effects of confounders in the analysis phase.
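To show how stratification can expose a confounder, here is a small sketch with invented counts (the exposure, outcome, and numbers are all hypothetical). Smoking is assumed to be linked to both coffee drinking and disease. Ignoring smoking, coffee drinkers appear to have a higher risk; comparing within each smoking stratum, the difference disappears.

```python
# Hypothetical counts (not real data).
# Each row: (smoking stratum, drinks coffee?, disease cases, people in group)
records = [
    ("smoker", True, 24, 80),
    ("smoker", False, 6, 20),
    ("non-smoker", True, 1, 20),
    ("non-smoker", False, 4, 80),
]

def risk(rows):
    """Proportion of people with the disease across the given rows."""
    return sum(r[2] for r in rows) / sum(r[3] for r in rows)

def risk_difference(rows):
    """Risk in coffee drinkers minus risk in non-drinkers."""
    exposed = [r for r in rows if r[1]]
    unexposed = [r for r in rows if not r[1]]
    return risk(exposed) - risk(unexposed)

# Crude comparison: ignores smoking entirely.
crude = risk_difference(records)

# Stratified comparison: drinkers vs non-drinkers within each smoking stratum.
stratified = {
    stratum: risk_difference([r for r in records if r[0] == stratum])
    for stratum in ("smoker", "non-smoker")
}

print(f"crude risk difference: {crude:.2f}")  # 0.15
for stratum, rd in stratified.items():
    print(f"{stratum}: {rd:.2f}")              # 0.00 in both strata
```

In a real study the stratum-specific estimates would usually be combined into a single adjusted estimate (for example with the Mantel-Haenszel method), which is one form of the statistical control described above.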

Properly addressing confounding variables strengthens the credibility of research outcomes by clarifying the direct relationship between the dependent and independent variables, thus providing more accurate and reliable results.

Beyond the primary categories of variables commonly discussed in research methodology, there exists a diverse range of other variables that play significant roles in the design and analysis of studies. Below is an overview of some of these variables, highlighting their definitions and roles within research studies:

- Discrete variables: A discrete variable is a quantitative variable that can take only specific, separate values, typically counts such as the number of children in a family or the number of cars in a parking lot.

- Categorical variables: A categorical variable sorts subjects or items into groups rather than measuring an amount. Categorical data includes nominal variables, which have no natural order (like country of origin), and ordinal variables, which do (such as education level).

- Predictor variables: Often used in statistical models, a predictor variable is used to forecast or predict the values of other variables, not necessarily with a causal implication.

- Outcome variables: These variables represent the results or outcomes that researchers aim to explain or predict through their studies. An outcome variable is central to understanding the effects of predictor variables.

- Latent variables: Not directly observable, latent variables are inferred from other, directly measured variables. Examples include constructs like intelligence or socioeconomic status.

- Composite variables: Created by combining multiple variables, composite variables can measure a concept more reliably or simplify the analysis. An example would be a composite happiness index derived from several survey questions.

- Preceding variables: These variables come before other variables in time or sequence, potentially influencing subsequent outcomes. Preceding variables are important in longitudinal studies because they help establish the order of events, which is one requirement for inferring causality.
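The composite happiness index mentioned above could be built in many ways; one simple approach, assumed here for illustration, is to reverse-score negatively worded items and then average the item scores. The item names, the 1 to 5 scale, and the responses below are hypothetical.

```python
from statistics import mean

# Hypothetical responses from one participant to four survey items (1-5 scale).
responses = {
    "enjoys_daily_life": 4,
    "feels_optimistic": 5,
    "satisfied_with_life": 4,
    "often_feels_sad": 2,  # negatively worded, so it is reverse-scored
}
REVERSE_SCORED = {"often_feels_sad"}
SCALE_MIN, SCALE_MAX = 1, 5

def item_score(name, value):
    """Reverse-score negative items (1<->5, 2<->4) so higher always means happier."""
    if name in REVERSE_SCORED:
        return SCALE_MIN + SCALE_MAX - value
    return value

# Composite variable: the mean of the (adjusted) item scores.
happiness_index = mean(item_score(k, v) for k, v in responses.items())
print(happiness_index)  # 4.25
```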


Types of Variables – A Comprehensive Guide

Published by Carmen Troy on August 14th, 2021. Revised on October 26, 2023.

A variable is any qualitative or quantitative characteristic that can change and have more than one value, such as age, height, weight, gender, etc.

Before conducting research, it’s essential to know what needs to be measured or analysed so that you can choose a suitable statistical test for your study’s findings.

In most cases, you can do this by identifying the key issues or variables related to your research’s main topic.

Example: Suppose you want to test whether the hybridisation of plants harms people’s health. Key variables could include agricultural techniques, type of soil, environmental factors, types of pesticides used, the process of hybridisation, the type of yield obtained after hybridisation, and the type of yield without hybridisation.

Variables are broadly categorised into:

- Independent variables

- Dependent variables

- Control variables

Independent Vs. Dependent Vs. Control Variable

In an experiment, the researcher looks for ways:

- To change the independent variables.

- To prevent the control variables from changing.

- To measure the dependent variables.

Note: The terms dependent and independent are generally not applied in correlational research, because it is not a controlled experiment: the researcher does not manipulate the variables but measures the association between two or more of them. When one variable is used to predict another, the two are called the predictor variable and the outcome variable.

Example: Correlation between investment (predictor variable) and profit (outcome variable)


Types of Variables Based on the Types of Data

Data refers to the information and statistics gathered to analyse a research topic. Data is broadly divided into two categories:

Quantitative/Numerical data is associated with the aspects of measurement, quantity, and extent.

Categorical data is associated with groupings.

A categorical (qualitative) variable consists of categorical data, and a quantitative variable consists of quantitative data.

Quantitative Variable

A quantitative variable is associated with measurement, quantity, and extent, answering questions such as “how many” or “how much”. It is analysed with statistical, mathematical, and computational techniques applied to numerical data, such as percentages and averages. Quantitative research is often conducted on a large sample of a population.

Example: Find out the weight of students of the fifth standard studying in government schools.

The quantitative variable can be further categorised into continuous and discrete.

Categorical Variable

A categorical variable places observations into categories, such as names or labels, rather than measuring an amount. For a nominal categorical variable, the categories differ in name but not in rank or degree: one level cannot be considered better or greater than another.

Example: Gender, brands, colors, zip codes

The categorical variable is further categorised into three types: binary, nominal, and ordinal.

Note: Sometimes, an ordinal variable is also treated as a quantitative variable. Ordinal data has an order, but the intervals between scale points may be uneven.

Example: The numbers on a star-rating scale rank reviews from below average to above average. They are also often treated as a quantitative variable, for example when counting how many stars were given or averaging the ratings.


Other Types of Variables

It’s important to understand the difference between dependent and independent variables and know whether they are quantitative or categorical to choose the appropriate statistical test.

Beyond these, there are many other types of variables that researchers distinguish between.


Frequently Asked Questions

What are the types of variables in research?

Types of variables in research include:

- Independent

- Confounding

- Categorical

- Extraneous

What is an independent variable?

An independent variable, often termed the predictor or explanatory variable, is the variable manipulated or categorized in an experiment to observe its effect on another variable, called the dependent variable. It’s the presumed cause in a cause-and-effect relationship, determining if changes in it produce changes in the observed outcome.

What is a variable?

In research, a variable is any attribute, quantity, or characteristic that can be measured or counted. It can take on various values, making it “variable.” Variables can be classified as independent (manipulated), dependent (observed outcome), or control (kept constant). They form the foundation for hypotheses, observations, and data analysis in studies.

What is a dependent variable?

A dependent variable is the outcome or response being studied in an experiment or investigation. It’s what researchers measure to determine the effect of changes in the independent variable. In a cause-and-effect relationship, the dependent variable is presumed to be influenced or caused by the independent variable.

What is a variable in programming?

In programming, a variable is a symbolic name for a storage location that holds data or values. It allows data storage and retrieval for computational operations. Variables have types, like integer or string, determining the nature of data they can hold. They’re fundamental in manipulating and processing information in software.
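As a small illustration in Python (chosen here only as an example language), a variable is a name bound to a value. In Python the type belongs to the value rather than to the name, so a name can be rebound to a value of a different type; statically typed languages such as Java or C instead fix a variable's type when it is declared.

```python
# A variable is a name that refers to a stored value.
patient_count = 3           # refers to an int value
smoking_status = "smoker"   # refers to a str value

print(type(patient_count).__name__)   # int
print(type(smoking_status).__name__)  # str

# In Python, the same name can later be rebound to a value of another type.
patient_count = "three"
print(type(patient_count).__name__)   # str
```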

What is a control variable?

A control variable in research is a factor that’s kept constant to ensure that it doesn’t influence the outcome. By controlling these variables, researchers can isolate the effects of the independent variable on the dependent variable, ensuring that other factors don’t skew the results or introduce bias into the experiment.

What is a controlled variable in science?

In science, a controlled variable is a factor that remains constant throughout an experiment. Keeping it constant helps ensure that observed changes in the dependent variable are due to the independent variable rather than to other factors. By keeping controlled variables consistent, researchers can maintain the experiment's validity and more accurately assess cause-and-effect relationships.

How many independent variables should an investigation have?

Ideally, an investigation should have one independent variable to clearly establish cause-and-effect relationships. Manipulating multiple independent variables simultaneously can complicate data interpretation.

However, in advanced research, experiments with multiple independent variables (factorial designs) are used, but they require careful planning to understand interactions between variables.


Types of Variable

All experiments examine some kind of variable(s). A variable is not only something that we measure, but also something that we can manipulate and something we can control for. To understand the characteristics of variables and how we use them in research, this guide is divided into three main sections. First, we illustrate the role of dependent and independent variables. Second, we discuss the difference between experimental and non-experimental research. Finally, we explain how variables can be characterised as either categorical or continuous.

Dependent and Independent Variables

An independent variable, sometimes called an experimental or predictor variable, is a variable that is being manipulated in an experiment in order to observe the effect on a dependent variable, sometimes called an outcome variable.

Imagine that a tutor asks 100 students to complete a maths test. The tutor wants to know why some students perform better than others. Whilst the tutor does not know the answer to this, she thinks that it might be because of two reasons: (1) some students spend more time revising for their test; and (2) some students are naturally more intelligent than others. As such, the tutor decides to investigate the effect of revision time and intelligence on the test performance of the 100 students. The dependent and independent variables for the study are:

Dependent Variable: Test Mark (measured from 0 to 100)

Independent Variables: Revision time (measured in hours); Intelligence (measured using IQ score)

The dependent variable is simply that: a variable that is dependent on one or more independent variables. In our case, the test mark that a student achieves is dependent on revision time and intelligence. Whilst revision time and intelligence (the independent variables) may (or may not) cause a change in the test mark (the dependent variable), the reverse is implausible. In other words, the number of hours a student spends revising and the student's IQ score may (or may not) change the test mark that the student achieves, but a change in a student's test mark has no bearing on how much that student revised or how intelligent they are (this simply doesn't make sense).

Therefore, the aim of the tutor's investigation is to examine whether these independent variables - revision time and IQ - result in a change in the dependent variable, the students' test scores. However, it is also worth noting that whilst this is the main aim of the experiment, the tutor may also be interested to know if the independent variables - revision time and IQ - are also connected in some way.

In the section on experimental and non-experimental research that follows, we find out a little more about the nature of independent and dependent variables.

Experimental and Non-Experimental Research

- Experimental research: In experimental research, the aim is to manipulate one or more independent variables and then examine the effect that this change has on one or more dependent variables. Because the independent variable(s) can be manipulated, experimental research has the advantage of enabling a researcher to identify cause and effect between variables. Consider our example of 100 students completing a maths test, where the dependent variable was the test mark (measured from 0 to 100) and the independent variables were revision time (measured in hours) and intelligence (measured using IQ score). Here, it would be possible to use an experimental design and manipulate the revision time of the students. The tutor could divide the students into two groups of 50 students each. The tutor could ask the students in "group one" not to do any revision, and the students in "group two" to do 20 hours of revision in the two weeks prior to the test. The tutor could then compare the marks that the students achieved.

- Non-experimental research: In non-experimental research, the researcher does not manipulate the independent variable(s). This is not to say that manipulation is always impossible, but it would be either impractical or unethical. For example, a researcher may be interested in the effect of illegal, recreational drug use (the independent variable(s)) on certain types of behaviour (the dependent variable(s)). However, whilst possible, it would be unethical to ask individuals to take illegal drugs in order to study what effect this had on certain behaviours. Instead, a researcher could ask both drug users and non-users to complete a questionnaire constructed to indicate the extent to which they exhibited certain behaviours. Whilst this does not allow the researcher to identify cause and effect between the variables, it is still possible to examine the association or relationship between them. In addition to understanding the difference between dependent and independent variables, and between experimental and non-experimental research, it is also important to understand the different characteristics of variables. This is discussed next.
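The tutor's split into two groups of 50 could be done in several ways. A common choice in experimental research, assumed here rather than stated in the example, is random assignment, which helps spread other characteristics (such as IQ) evenly across the groups. A minimal sketch with hypothetical student IDs:

```python
import random

# Hypothetical student IDs 1-100 (not data from the example).
students = list(range(1, 101))

def randomly_assign(participants, seed=None):
    """Shuffle a copy of the participants and split them into two equal groups."""
    rng = random.Random(seed)  # a fixed seed makes the allocation reproducible
    shuffled = participants[:]
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]

no_revision, twenty_hours = randomly_assign(students, seed=42)
print(len(no_revision), len(twenty_hours))  # 50 50
```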

Categorical and Continuous Variables

Categorical variables are also known as qualitative variables (some sources also call them discrete variables). Categorical variables can be further categorized as either nominal, ordinal or dichotomous.

- Nominal variables are variables that have two or more categories, but which do not have an intrinsic order. For example, a real estate agent could classify their types of property into distinct categories such as houses, condos, co-ops or bungalows. So "type of property" is a nominal variable with 4 categories called houses, condos, co-ops and bungalows. Of note, the different categories of a nominal variable can also be referred to as groups or levels of the nominal variable. Another example of a nominal variable would be classifying where people live in the USA by state. In this case there will be many more levels of the nominal variable (50 in fact).

- Dichotomous variables are nominal variables which have only two categories or levels. For example, if we were looking at gender, we would most probably categorize somebody as either "male" or "female". This is an example of a dichotomous variable (and also a nominal variable). Another example might be if we asked a person if they owned a mobile phone. Here, we may categorise mobile phone ownership as either "Yes" or "No". In the real estate agent example, if type of property had been classified as either residential or commercial then "type of property" would be a dichotomous variable.

- Ordinal variables are variables that have two or more categories, just like nominal variables, except that the categories can also be ordered or ranked. So if you asked someone if they liked the policies of the Democratic Party and they could answer either "Not very much", "They are OK" or "Yes, a lot", then you have an ordinal variable. Why? Because you have 3 categories, namely "Not very much", "They are OK" and "Yes, a lot", and you can rank them from the most positive (Yes, a lot), to the middle response (They are OK), to the least positive (Not very much). However, whilst we can rank the levels, we cannot assign a numerical value to them; we cannot say that "They are OK" is twice as positive as "Not very much", for example.

Continuous variables are also known as quantitative variables. Continuous variables can be further categorized as either interval or ratio variables.

- Interval variables are variables that can be measured along a continuum and have a numerical value, where equal differences represent equal amounts (for example, temperature measured in degrees Celsius or Fahrenheit). So the difference between 20°C and 30°C is the same as the difference between 30°C and 40°C. However, temperature measured in degrees Celsius or Fahrenheit is NOT a ratio variable.

- Ratio variables are interval variables with the added condition that 0 (zero) on the measurement scale indicates that there is none of that variable. So, temperature measured in degrees Celsius or Fahrenheit is not a ratio variable because 0°C does not mean there is no temperature. However, temperature measured in Kelvin is a ratio variable, as 0 Kelvin (often called absolute zero) indicates that there is no temperature whatsoever. Other examples of ratio variables include height, mass, distance and many more. The name "ratio" reflects the fact that ratios of measurements are meaningful. For example, a distance of 10 metres is twice the distance of 5 metres.
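The interval/ratio distinction can be checked with a little arithmetic. In this sketch (temperatures chosen arbitrarily), 20°C divided by 10°C gives 2, but the same two temperatures expressed in Fahrenheit give a different ratio, which shows that ratios on an interval scale depend on where zero happens to be placed. In Kelvin, which has a true zero, the ratio is physically meaningful.

```python
def celsius_to_fahrenheit(c):
    return c * 9 / 5 + 32

def celsius_to_kelvin(c):
    return c + 273.15

low_c, high_c = 10.0, 20.0

# Interval scales: the ratio changes when the zero point changes,
# so "twice as hot" is not meaningful in °C or °F.
print(high_c / low_c)                                                # 2.0
print(celsius_to_fahrenheit(high_c) / celsius_to_fahrenheit(low_c))  # 1.36 (68 / 50)

# Ratio scale: Kelvin has a true zero, so this ratio is meaningful (about 1.035).
print(celsius_to_kelvin(high_c) / celsius_to_kelvin(low_c))
```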

Ambiguities in classifying a type of variable

In some cases, the measurement scale for data is ordinal, but the variable is treated as continuous. For example, a Likert scale that contains five values (strongly agree, agree, neither agree nor disagree, disagree, and strongly disagree) is ordinal. However, where a Likert scale contains seven or more values (strongly agree, moderately agree, agree, neither agree nor disagree, disagree, moderately disagree, and strongly disagree), the underlying scale is sometimes treated as continuous (although whether you should do this is a matter of great dispute).
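The practical consequence of treating a Likert item as ordinal or continuous shows up in the summary statistics you report. In this sketch the responses are invented and the 1 to 5 coding is an assumption: the median relies only on the order of the codes, while the mean additionally assumes the codes are equally spaced.

```python
from statistics import mean, median

# Hypothetical responses to one five-point Likert item, coded
# 1 = strongly disagree ... 5 = strongly agree.
responses = [1, 2, 2, 4, 5, 5, 5]

# Treated as ordinal: the median depends only on the order of the codes.
print(median(responses))          # 4

# Treated as continuous: the mean assumes equal spacing between codes,
# e.g. that "disagree" -> "neutral" is the same step as "agree" -> "strongly agree".
print(round(mean(responses), 2))  # 3.43
```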

It is worth noting that how we categorise variables is somewhat of a choice. Whilst we categorised gender as a dichotomous variable (you are either male or female), social scientists may disagree with this, arguing that gender is a more complex variable with more than two levels, including levels like genderqueer, intersex and transgender. At the same time, some researchers would argue that a Likert scale, even with seven values, should never be treated as a continuous variable.


Types of Variables


CO-4: Distinguish among different measurement scales, choose the appropriate descriptive and inferential statistical methods based on these distinctions, and interpret the results.

CO-7: Use statistical software to analyze public health data.

Classifying Types of Variables

Learning objectives.

LO 4.1: Determine the type (categorical or quantitative) of a given variable.

LO 4.2: Classify a given variable as nominal, ordinal, discrete, or continuous.


Variables can be broadly classified into one of two types:

- Quantitative

- Categorical

Below we define these two main types of variables and provide further sub-classifications for each type.

Categorical variables take category or label values, and place an individual into one of several groups.

Categorical variables are often further classified as either:

- Nominal, when there is no natural ordering among the categories.

Common examples would be gender, eye color, or ethnicity.

- Ordinal, when there is a natural order among the categories, such as ranking scales or letter grades.

However, ordinal variables are still categorical and do not provide precise measurements.

Differences are not precisely meaningful. For example, if one student scores an A and another a B on an assignment, we cannot say precisely how far apart their scores are, only that an A is higher than a B.

Quantitative variables take numerical values, and represent some kind of measurement.

Quantitative variables are often further classified as either:

- Discrete, when the variable takes on a countable number of values.

Most often these variables indeed represent some kind of count such as the number of prescriptions an individual takes daily.

- Continuous, when the variable can take on any value in some range of values.

Our precision in measuring these variables is often limited by our instruments.

Units should be provided.

Common examples would be height (inches), weight (pounds), or time to recovery (days).

One special variable type occurs when a variable has only two possible values.

A variable is said to be Binary or Dichotomous when there are only two possible levels.

These variables can usually be phrased as a “yes/no” question. Whether or not someone is a smoker is an example of a binary variable.

Currently we are primarily concerned with classifying variables as either categorical or quantitative.

Sometimes, however, we will need to consider further and sub-classify these variables as defined above.

These concepts will be discussed and reviewed as needed but here is a quick practice on sub-classifying categorical and quantitative variables.


Example: medical records.

Let’s revisit the dataset showing medical records for a sample of patients.

In our example of medical records, there are several variables of each type:

- Age, Weight, and Height are quantitative variables.

- Race, Gender, and Smoking are categorical variables.

- Notice that the values of the categorical variable Smoking have been coded as the numbers 0 or 1.

It is quite common to code the values of a categorical variable as numbers, but you should remember that these are just codes.

They have no arithmetic meaning (i.e., it does not make sense to add, subtract, multiply, divide, or compare the magnitude of such values).

Usually, if such a coding is used, all categorical variables will be coded and we will tend to do this type of coding for datasets in this course.

- Sometimes, quantitative variables are divided into groups for analysis. In such a situation, although the original variable was quantitative, the variable analyzed is categorical.

A common example is to provide information about an individual’s Body Mass Index by stating whether the individual is underweight, normal, overweight, or obese.

This categorized BMI is an example of an ordinal categorical variable.

- Categorical variables are sometimes called qualitative variables, but in this course we’ll use the term “categorical.”
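The BMI example above can be sketched in code: BMI itself is a continuous quantitative variable (weight in kilograms divided by the square of height in metres), and cutting it at fixed thresholds produces an ordinal categorical variable. The thresholds below are the commonly used WHO adult cut-offs; the patient measurements are hypothetical.

```python
from bisect import bisect_right

# Commonly used WHO adult BMI cut-offs (kg/m^2): below 18.5 underweight,
# 18.5 to below 25 normal, 25 to below 30 overweight, 30 and above obese.
CUTOFFS = [18.5, 25.0, 30.0]
CATEGORIES = ["underweight", "normal", "overweight", "obese"]

def bmi(weight_kg, height_m):
    """Body Mass Index: a continuous quantitative variable."""
    return weight_kg / height_m ** 2

def bmi_category(value):
    """Collapse a BMI value into an ordinal categorical variable."""
    return CATEGORIES[bisect_right(CUTOFFS, value)]

# Hypothetical patients: (weight in kg, height in m)
patients = [(50.0, 1.70), (68.0, 1.75), (85.0, 1.80), (110.0, 1.72)]
for w, h in patients:
    value = bmi(w, h)
    print(f"{value:.1f} -> {bmi_category(value)}")
```

The categorized version is easier to communicate, but it discards information: two patients with BMIs of 25.1 and 29.9 land in the same category.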

Software Activity

LO 7.1: View a dataset in EXCEL, text editor, or other spreadsheet or statistical software.


Why Does the Type of Variable Matter?

The types of variables you are analyzing directly relate to the available descriptive and inferential statistical methods.

It is important to:

- assess how you will measure the effect of interest and

- know how this determines the statistical methods you can use.

As we proceed in this course, we will continually emphasize the types of variables that are appropriate for each method we discuss.

For example:

To compare the number of polio cases in the two treatment arms of the Salk polio vaccine trial, you could use:

- Fisher’s Exact Test

- Chi-Square Test

To compare blood pressures in a clinical trial evaluating two blood-pressure-lowering medications, you could use:

- Two-sample t-Test

- Wilcoxon Rank-Sum Test
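The pairing of variable type and method in these two examples can be expressed as a small lookup table. This is a simplified Python sketch covering only the two-independent-group comparisons named above; the `Outcome` enum and `candidate_tests` function are hypothetical names, and in practice the choice of test also depends on sample size, study design, and each test's assumptions.

```python
from enum import Enum


class Outcome(Enum):
    """Type of the outcome variable being compared between groups."""
    CATEGORICAL = "categorical"    # e.g. polio case: yes / no
    QUANTITATIVE = "quantitative"  # e.g. blood pressure in mmHg


# Candidate tests for comparing an outcome between TWO independent groups,
# taken from the examples above. Not an exhaustive decision procedure.
TWO_GROUP_TESTS = {
    Outcome.CATEGORICAL: ["Fisher's exact test", "Chi-square test"],
    Outcome.QUANTITATIVE: ["Two-sample t-test", "Wilcoxon rank-sum test"],
}


def candidate_tests(outcome: Outcome) -> list[str]:
    """Return a copy of the candidate tests for the given outcome type."""
    return list(TWO_GROUP_TESTS[outcome])


print(candidate_tests(Outcome.CATEGORICAL))   # Salk trial: polio case yes/no
print(candidate_tests(Outcome.QUANTITATIVE))  # blood pressure trial
```

Returning a copy keeps callers from accidentally modifying the shared table.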

(Optional) Great resource: UCLA Institute for Digital Research and Education, "What statistical analysis should I use?"

USC Libraries Research Guides: Organizing Your Social Sciences Research Paper

Independent and Dependent Variables


Definitions

Dependent Variable: The variable that depends on other factors that are measured. These variables are expected to change as a result of an experimental manipulation of the independent variable or variables. It is the presumed effect.

Independent Variable: The variable that is stable and unaffected by the other variables you are trying to measure. It refers to the condition of an experiment that is systematically manipulated by the investigator. It is the presumed cause.

Cramer, Duncan and Dennis Howitt. The SAGE Dictionary of Statistics. London: SAGE, 2004; Penslar, Robin Levin and Joan P. Porter. Institutional Review Board Guidebook: Introduction. Washington, DC: United States Department of Health and Human Services, 2010; "What are Dependent and Independent Variables?" Graphic Tutorial.

Identifying Dependent and Independent Variables

Don't feel bad if you are confused about which is the dependent variable and which is the independent variable in social and behavioral sciences research. However, it's important that you learn the difference, because framing a study using these variables is a common approach to organizing the elements of a social sciences research study in order to discover relevant and meaningful results. Specifically, it is important for these two reasons:

- You need to understand and be able to evaluate their application in other people's research.

- You need to apply them correctly in your own research.

A variable in research simply refers to a person, place, thing, or phenomenon that you are trying to measure in some way. The simplest way to tell a dependent variable from an independent one is to take the names literally: the dependent variable depends on the independent variable, not the other way around. You can test this with a simple exercise from the website Graphic Tutorial. Take the sentence, "The [independent variable] causes a change in [dependent variable] and it is not possible that [dependent variable] could cause a change in [independent variable]." Insert the names of your variables into the sentence in the way that makes the most sense. This will help you identify each type of variable. If you're still not sure, consult your professor before you begin to write.
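The Graphic Tutorial exercise amounts to filling the sentence in both directions and keeping the version that makes sense. Here is a minimal Python sketch; the function name and the example variables (amount of sleep, test score) are hypothetical, and deciding which sentence is plausible is still your judgment, not the code's.

```python
# The sentence template from the Graphic Tutorial exercise.
TEMPLATE = (
    "The {iv} causes a change in {dv} and it is not possible that "
    "{dv} could cause a change in {iv}."
)


def both_readings(var_a: str, var_b: str) -> tuple[str, str]:
    """Fill the template with each variable in the independent-variable slot."""
    return (
        TEMPLATE.format(iv=var_a, dv=var_b),
        TEMPLATE.format(iv=var_b, dv=var_a),
    )


for sentence in both_readings("amount of sleep", "test score"):
    print(sentence)
```

Here only the first sentence is plausible, so amount of sleep would be the independent variable and test score the dependent variable.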

Fan, Shihe. "Independent Variable." In Encyclopedia of Research Design. Neil J. Salkind, editor. (Thousand Oaks, CA: SAGE, 2010), pp. 592-594; "What are Dependent and Independent Variables?" Graphic Tutorial; Salkind, Neil J. "Dependent Variable." In Encyclopedia of Research Design. Neil J. Salkind, editor. (Thousand Oaks, CA: SAGE, 2010), pp. 348-349.

Structure and Writing Style

The process of examining a research problem in the social and behavioral sciences is often framed around methods of analysis that compare, contrast, correlate, average, or integrate relationships between or among variables. Techniques include associations, sampling, random selection, and blind selection. Designating the dependent and independent variables involves unpacking the research problem in a way that identifies a general cause and effect, and then classifying each variable as either independent or dependent.

The variables should be outlined in the introduction of your paper and explained in more detail in the methods section. There are no rules about the structure and style for writing about independent or dependent variables but, as with any academic writing, clarity and concision are most important.

After you have described the research problem and its significance in relation to prior research, explain why you have chosen to examine the problem using a method of analysis that investigates the relationships between or among independent and dependent variables. State what it is about the research problem that lends itself to this type of analysis. For example, if you are investigating the relationship between corporate environmental sustainability efforts [the independent variable] and dependent variables associated with measuring employee satisfaction at work using a survey instrument, you would first identify each variable and then provide background information about the variables. What is meant by "environmental sustainability"? Are you looking at a particular company [e.g., General Motors] or are you investigating an industry [e.g., the meat packing industry]? Why is employee satisfaction in the workplace important? How does a company make its employees aware of sustainability efforts, and why would a company even care that its employees know about these efforts?

Identify each variable for the reader and define each. In the introduction, this information can be presented in a paragraph or two when you describe how you are going to study the research problem. In the methods section, build on the literature review of prior studies about the research problem to describe the background of each variable in detail, breaking each down for measurement and analysis. For example, what activities do you examine that reflect a company's commitment to environmental sustainability? Levels of employee satisfaction can be measured by a survey that asks about things like volunteerism or a desire to stay at the company for a long time.
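Breaking a variable down for measurement means stating exactly how a score is produced. As a minimal Python sketch, suppose (hypothetically) that employee satisfaction is measured with two survey items, one on volunteerism and one on intention to stay, each answered on a 1 to 5 agreement scale, and that the dependent variable is the mean of the items. The item names, the scale, and the scoring rule are all assumptions for illustration.

```python
# Hypothetical survey items; each is answered on an assumed 1-5 agreement scale
# (1 = strongly disagree, 5 = strongly agree).
ITEMS = ("volunteers_for_company_events", "intends_to_stay_long_term")
SCALE_MIN, SCALE_MAX = 1, 5


def satisfaction_score(responses: dict[str, int]) -> float:
    """Operationalize satisfaction as the mean of one respondent's item answers."""
    values = []
    for item in ITEMS:
        value = responses[item]  # raises KeyError if an item was left unanswered
        if not SCALE_MIN <= value <= SCALE_MAX:
            raise ValueError(f"{item}={value} is outside {SCALE_MIN}-{SCALE_MAX}")
        values.append(value)
    return sum(values) / len(values)


print(satisfaction_score({"volunteers_for_company_events": 4,
                          "intends_to_stay_long_term": 5}))  # 4.5
```

Writing the scoring rule down this explicitly is also what lets another researcher replicate the measurement.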

Describe the variables and their application to the research problem in a way that gives the reader a clear understanding of the relationships between the variables and why they are important. This clarity also allows the study to be replicated in the future using the same variables applied in a different way.

Fan, Shihe. "Independent Variable." In Encyclopedia of Research Design. Neil J. Salkind, editor. (Thousand Oaks, CA: SAGE, 2010), pp. 592-594; "What are Dependent and Independent Variables?" Graphic Tutorial; "Case Example for Independent and Dependent Variables." ORI Curriculum Examples. U.S. Department of Health and Human Services, Office of Research Integrity; Salkind, Neil J. "Dependent Variable." In Encyclopedia of Research Design. Neil J. Salkind, editor. (Thousand Oaks, CA: SAGE, 2010), pp. 348-349; "Independent Variables and Dependent Variables." Karl L. Wuensch, Department of Psychology, East Carolina University [posted email exchange]; "Variables." Elements of Research. Dr. Camille Nebeker, San Diego State University.


- Last Updated: May 20, 2024 9:47 AM

- URL: https://libguides.usc.edu/writingguide


Types of Variables in Psychology Research

Examples of Independent and Dependent Variables

Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."


James Lacy, MLS, is a fact-checker and researcher.



Variables in psychology are things that can be changed or altered, such as a characteristic or value. Variables are generally used in psychology experiments to determine if changes to one thing result in changes to another.

Variables in psychology play a critical role in the research process. By systematically changing some variables in an experiment and measuring what happens as a result, researchers are able to learn more about cause-and-effect relationships.

The two main types of variables in psychology are the independent variable and the dependent variable. Both variables are important in the process of collecting data about psychological phenomena.

This article discusses different types of variables that are used in psychology research. It also covers how to operationalize these variables when conducting experiments.

Dependent and Independent Variables in Psychology

Students often report problems with identifying the independent and dependent variables in an experiment. While this task can become more difficult as the complexity of an experiment increases, in a psychology experiment:

- The independent variable is the variable that is manipulated by the experimenter. An example of an independent variable in psychology: In an experiment on the impact of sleep deprivation on test performance, sleep deprivation would be the independent variable. The experimenters would have some of the study participants be sleep-deprived while others would be fully rested.

- The dependent variable is the variable that is measured by the experimenter. In the previous example, the scores on the test performance measure would be the dependent variable.

So how do you differentiate between the independent and dependent variables? Start by asking yourself what the experimenter is manipulating. The things that change, either naturally or through direct manipulation by the experimenter, are generally the independent variables. Then ask what is being measured: the dependent variable is the one that the experimenter is measuring.
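In the sleep-deprivation example, the experimenter sets the independent variable by assigning each participant to a condition and then measures the dependent variable. Below is a minimal Python sketch of that flow, assuming simple random assignment to two equal-sized groups; the participant IDs, condition names, and function names are hypothetical.

```python
import random


def assign_conditions(participant_ids, seed=None):
    """Manipulate the independent variable: randomly split participants
    into a sleep-deprived group and a fully rested group."""
    rng = random.Random(seed)  # a fixed seed makes the assignment reproducible
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"sleep_deprived": shuffled[:half], "fully_rested": shuffled[half:]}


def group_means(groups, scores):
    """Summarize the dependent variable: mean test score within each condition.

    `scores` maps participant ID -> measured test score; every group is
    assumed to be non-empty."""
    return {
        condition: sum(scores[pid] for pid in ids) / len(ids)
        for condition, ids in groups.items()
    }


groups = assign_conditions(range(1, 11), seed=42)
print(groups)  # which IDs land in which condition depends on the seed
```

The condition a participant receives is decided by the experimenter (here, by the random draw), while the score fed into `group_means` is only observed; that asymmetry is what separates the two kinds of variable.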

Intervening Variables in Psychology

Intervening variables, also sometimes called intermediate or mediator variables, are factors that play a role in the relationship between two other variables. In the previous example, sleep problems in university students are often influenced by factors such as stress. As a result, stress might be an intervening variable that plays a role in how much sleep people get, which may then influence how well they perform on exams.

Extraneous Variables in Psychology

Independent and dependent variables are not the only variables present in many experiments. In some cases, extraneous variables may also play a role. This type of variable is one that may have an impact on the relationship between the independent and dependent variables.

For example, in the experiment on the effects of sleep deprivation on test performance, other factors such as age, gender, and academic background may have an impact on the results. In such cases, the experimenter will note the values of these extraneous variables so any impact can be controlled for.
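One simple way to control for a recorded extraneous variable is stratification: compare conditions only within groups that share the same value of that variable. The Python sketch below assumes that approach (regression adjustment is a common alternative) and uses hypothetical field names such as `age_band`, `condition`, and `score`.

```python
from collections import defaultdict


def stratified_means(records, condition_key, outcome_key, stratum_key):
    """Mean outcome for every (stratum, condition) pair.

    Comparing conditions within one stratum of an extraneous variable
    (for example, one age band) holds that variable constant in the comparison.
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for row in records:
        key = (row[stratum_key], row[condition_key])
        totals[key] += row[outcome_key]
        counts[key] += 1
    return {key: totals[key] / counts[key] for key in totals}


# Hypothetical rows for illustration only; these are not study results.
rows = [
    {"age_band": "18-21", "condition": "sleep_deprived", "score": 60},
    {"age_band": "18-21", "condition": "fully_rested", "score": 80},
    {"age_band": "22-25", "condition": "fully_rested", "score": 90},
]
print(stratified_means(rows, "condition", "score", "age_band"))
```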

There are two basic types of extraneous variables:

- Participant variables: These extraneous variables are related to the individual characteristics of each study participant that may impact how they respond. These factors can include background differences, mood, anxiety, intelligence, awareness, and other characteristics that are unique to each person.