Data Vault 2.0

How to model a many-to-many relationship in Data Vault, or what is a Record Tracking Satellite?

At times you need to model a many-to-many relationship that changes over time in Data Vault. For example, you may have HUB_CUSTOMER and HUB_ADDRESS. Several Customers may be associated with the same Address, and multiple Addresses can be associated with a single Customer. These associations can also change over time.

LINK_CUSTOMER_ADDRESS captures only the first occurrence of each relationship; it does not capture how the relationships change. LINK_CUSTOMER_ADDRESS will look as follows:

Note that the Link captures only the first appearance of the relationship and nothing about its effectivity. This is where you need the Record Tracking Satellite. A Record Tracking Satellite is a narrow table that keeps track of the Link hash keys (or Satellite hash keys) present in each load. In other words, it captures all the hash keys that are valid for that load. The Record Tracking Satellite for the many-to-many relationship above will look as follows:

This way you can capture the effectivity of Many-to-Many relationships that change over time.
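As a rough illustration of the idea, here is a minimal Python sketch of a Record Tracking Satellite. It assumes each load simply hands us the set of Link hash keys it contained; the function names, the hash key labels, and the sample loads are all made up for the example.

```python
from datetime import date

def record_tracking_rows(load_date, link_hashkeys_in_load):
    """Return one (LINK_HK, LOAD_DATE) row per hash key seen in this load."""
    return [(hk, load_date) for hk in sorted(set(link_hashkeys_in_load))]

def active_on(rts_rows, load_date):
    """Hash keys of the relationships that were present in the given load."""
    return {hk for hk, ld in rts_rows if ld == load_date}

# Hypothetical loads: the customer-address Link hash keys seen on each day.
loads = {
    date(2024, 1, 1): ["HK_CUST1_ADDR1", "HK_CUST2_ADDR1"],
    date(2024, 1, 2): ["HK_CUST1_ADDR2", "HK_CUST2_ADDR1"],
    date(2024, 1, 3): ["HK_CUST1_ADDR1", "HK_CUST2_ADDR1"],  # Cust1 back at Addr1
}

rts = []
for load_date, hashkeys in loads.items():
    rts.extend(record_tracking_rows(load_date, hashkeys))
```

The Link itself would hold only three rows here, but the tracking rows show that the Customer 1 / Address 1 relationship was absent on the second load and present again on the third.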

Data Vault Anti-pattern: Using Effectivity Satellites as SCD2

In Data Vault 2.0, Effectivity Satellites are artifacts used exclusively to track the temporal relevance of a relationship based on a Driving Key. As such, they hang from a Link table.

Effectivity Satellites are not the same as SCD2.

So what is an Effectivity Satellite?

For an Effectivity Satellite, a Driving Key needs to be defined. For example, say we have a Link between Opportunities and Accounts. The Account on an Opportunity can change over time. A common scenario is that the Opportunity is assigned to a Global Parent account (e.g. Seagate Technology), is later re-assigned to the Account Subsidiary (e.g. Lyve Labs), and is then re-assigned back to the Global Parent (Seagate Technology) at a later date.

This will be tracked in the Link as follows:

Note that the third step (111 switching back to Seagate Technology) is not captured as a new row in this Link, because the Link records only the first occurrence of a relationship.

An Effectivity Satellite can be used to track the effectivity (temporal relevance) of the Opportunity-to-Account relationships above.

- When the relationship is first recorded, only one record is inserted (highlighted in green).

- A change in a relationship must be tracked relative to one of its participants, the driver: we track the driver's changes against the other keys in the relationship. To do that, we end-date the current record and insert a new active record, i.e. two records are inserted (highlighted in red).
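The two rules above can be sketched in Python as follows. This is only one possible insert-only implementation, assuming a far-future "high date" marks an open record; the row layout, the function names, and the hash key labels are illustrative, not a prescribed structure.

```python
from datetime import date

HIGH_DATE = date(9999, 12, 31)  # assumed marker for an open-ended record

def latest_rows(eff_sat, driving_key):
    """Latest row (by load_date) per Link hash key for one driving key."""
    latest = {}
    for row in eff_sat:
        if row["driving_key"] != driving_key:
            continue
        seen = latest.get(row["link_hk"])
        if seen is None or row["load_date"] > seen["load_date"]:
            latest[row["link_hk"]] = row
    return latest

def active_link(eff_sat, driving_key):
    """Link hash key whose latest row is still open, or None."""
    for row in latest_rows(eff_sat, driving_key).values():
        if row["end_date"] == HIGH_DATE:
            return row["link_hk"]
    return None

def track(eff_sat, driving_key, link_hk, load_date):
    """Record the relationship seen in this load; return rows inserted."""
    active = [r for r in latest_rows(eff_sat, driving_key).values()
              if r["end_date"] == HIGH_DATE]
    if active and active[0]["link_hk"] == link_hk:
        return 0  # nothing changed for this driving key
    inserted = 0
    for old in active:
        # End-date the old relationship by inserting a closing row.
        eff_sat.append({**old, "end_date": load_date, "load_date": load_date})
        inserted += 1
    eff_sat.append({"driving_key": driving_key, "link_hk": link_hk,
                    "start_date": load_date, "end_date": HIGH_DATE,
                    "load_date": load_date})
    return inserted + 1

eff = []
counts = [
    track(eff, "111", "HK_111_SEAGATE", date(2024, 1, 1)),  # first recorded
    track(eff, "111", "HK_111_LYVE", date(2024, 2, 1)),     # re-assigned
    track(eff, "111", "HK_111_SEAGATE", date(2024, 3, 1)),  # switched back
]
```

Here the first load inserts one row and each later change inserts two, so the switch back to Seagate Technology is visible in the satellite even though the Link gains no new row.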


Data Vault Anti-pattern: Load Dates that are anything other than time of loading the Staging

Using the Load Date, we should be able to identify all the data that was loaded into the Data Vault in a particular batch. If the Load Date is something else, for example the load date assigned by the ETL tool, the entire batch in the Data Vault cannot be identified using the Load Date.
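A small Python sketch of why this matters, assuming rows are plain dictionaries and that `stamp_batch` and `rows_in_batch` are hypothetical helpers: when every row of a staging batch carries the same Load Date, the whole batch can be pulled back out with a single equality filter.

```python
from datetime import datetime

def stamp_batch(staged_rows, load_date):
    """Stamp every staged row with the one Load Date of this staging batch."""
    return [{**row, "LOAD_DATE": load_date} for row in staged_rows]

def rows_in_batch(dv_rows, load_date):
    """All Data Vault rows that arrived in the batch with this Load Date."""
    return [row for row in dv_rows if row["LOAD_DATE"] == load_date]

batch_1 = stamp_batch([{"CUSTOMER_NO": "C-1"}, {"CUSTOMER_NO": "C-2"}],
                      datetime(2024, 1, 1, 2, 0))
batch_2 = stamp_batch([{"CUSTOMER_NO": "C-3"}],
                      datetime(2024, 1, 2, 2, 0))
data_vault = batch_1 + batch_2

first_batch = rows_in_batch(data_vault, datetime(2024, 1, 1, 2, 0))
```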

Data Vault Anti-pattern: Using varchar to store the HashKeys and HashDiffs

Why would you do that? HashKeys and HashDiffs are binary values generated by a hashing algorithm such as MD5 or SHA-1. Store them as binary and you effectively halve the storage and double the I/O throughput compared with their hexadecimal text form. There is no need to convert them to characters and store them as VARCHAR.
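The size difference is easy to check with Python's standard `hashlib`. The business key below is made up; the point is only the length of the two representations of the same MD5 value.

```python
import hashlib

business_key = "CUSTOMER-0001"  # hypothetical business key
md5 = hashlib.md5(business_key.encode("utf-8"))

binary_key = md5.digest()     # raw bytes, fits a 16-byte binary column
text_key = md5.hexdigest()    # hexadecimal text, needs 32 characters

print(len(binary_key), len(text_key))  # prints "16 32"
```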

Data Vault Anti-pattern: Creating Hubs for Dependent Children

Dependent Children should not have their own Hubs. They are not Business Concepts and as such should not be a Business Key by themselves. They only make sense when associated with a Business concept.

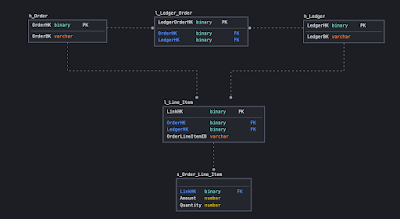

One example is the Line Items in an Order. A Line Item by itself does not make sense; it needs to be associated with an Order. This makes a Line Item a Dependent Child, i.e. it is not a Business Concept on its own.

One way to model the Dependent Child is to add it to the Link as follows:
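As a hedged sketch of what that means for the grain of the Link, the Python below treats a Link row as identified by the Hub keys plus the dependent child key. The Order/Product pairing, the column order, and the `load_link` helper are assumptions made for the example.

```python
def load_link(link_rows, staged):
    """Insert-only load: a Link row is identified by the Hub keys
    plus the dependent child key (the line item number)."""
    inserted = 0
    for order_no, product_code, line_item_no in staged:
        key = (order_no, product_code, line_item_no)
        if key not in link_rows:
            link_rows.add(key)
            inserted += 1
    return inserted

link_order_product = set()
inserted = load_link(link_order_product, [
    ("ORD-1", "PROD-A", 1),
    ("ORD-1", "PROD-A", 2),  # same order and product, different line item
    ("ORD-1", "PROD-B", 3),
])
reloaded = load_link(link_order_product, [("ORD-1", "PROD-A", 1)])
```

Without the line item number in the key, the first two staged rows would collapse into one Link row; with it, no separate Hub for line items is needed.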

Data Vault Anti-pattern: Implementing Business Rules at the Infomart Level

While it is tempting to implement Business Rules at the Infomart level, that is not where they should reside. They should reside in the Business Vault. This enables historisation of the Business Rules and introduces auditability. When a Business Rule changes, historisation makes it possible to go back in time and analyze the impact of the change. If the Business Rule were implemented at the Infomart level, there would be no history and thus no auditability. As a best practice, the Business Vault tables should be materialized physical TABLES instead of VIEWs.

Data Vault Anti-pattern: Using Historized Links to store Transactional data that does not change

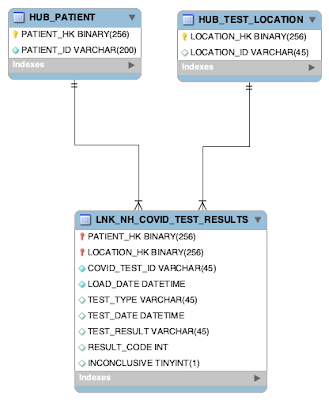

Transactional data that does not change (e.g. sensor data, stock trades, call center call logs, medical test results, event logs) should reside in a Non-historized Link (NHL), also known as a Transaction Link. There is no point in using a Historized Link to store data that cannot change. All of the attributes of the transaction can be stored within the NHL. Here is an example:

Note that PATIENT_HK, LOCATION_HK, COVID_TEST_ID, and LOAD_DATE form the Unique Key for the Non-historized Link. The descriptive attributes are stored in the Non-historized Link itself instead of in a SAT hanging from the Link.
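To make the unique key concrete, here is a small sketch using Python's built-in `sqlite3` module. The table name NHL_COVID_TEST and the TEST_RESULT column are assumptions for the example; only the four key columns come from the description above.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE NHL_COVID_TEST (
        PATIENT_HK    BLOB NOT NULL,
        LOCATION_HK   BLOB NOT NULL,
        COVID_TEST_ID TEXT NOT NULL,
        LOAD_DATE     TEXT NOT NULL,
        TEST_RESULT   TEXT,  -- descriptive attribute kept in the Link itself
        UNIQUE (PATIENT_HK, LOCATION_HK, COVID_TEST_ID, LOAD_DATE)
    )
""")

row = (b"\x01" * 16, b"\x02" * 16, "T-1001", "2024-01-01", "NEGATIVE")
conn.execute("INSERT INTO NHL_COVID_TEST VALUES (?, ?, ?, ?, ?)", row)

# Loading the same transaction again violates the unique key.
try:
    conn.execute("INSERT INTO NHL_COVID_TEST VALUES (?, ?, ?, ?, ?)", row)
    duplicate_rejected = False
except sqlite3.IntegrityError:
    duplicate_rejected = True

row_count = conn.execute("SELECT COUNT(*) FROM NHL_COVID_TEST").fetchone()[0]
```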

"Non-historised links are used when the data in the source should not be modified at any time"

Data Vault 2.0 Modeling Basics

Chief Technical Evangelist & Lead Strategic Advisor at Snowflake


In my last post, we looked at the need for an Agile Data Engineering solution, issues with some of the current data warehouse modeling approaches, the history of data modeling in general, and Data Vault specifically. This time we get into the technical details of what the Data Vault Model looks like and how you build one.

For my examples I will be using a simple Human Resources (HR) type model that most people should relate to (even if you have never worked with an HR model). In this post I will walk through how you get from the OLTP model to the Data Vault model.

Data Vault Prime Directive

One thing to get very clear up front is that, unlike many data warehouse implementations today, the Data Vault Method requires that we load data exactly as it exists in the source system. No edits, no changes, no application of soft business rules (including data cleansing).

Why? So that the Data Vault is 100% auditable.

If you alter the data on the way into the Data Vault, you break the ability to trace the data to the source in case of an audit because you cannot match the data warehouse data to source data. Remember your EDW (Enterprise Data Warehouse) is the enterprise store of historical data too. Once the data is purged from the source systems, your EDW may also be your Source of Record. So it is critical the data remain clean.

We have a saying in the Data Vault world: the DV is a source of FACTS, not a source of TRUTH (truth is often subjective & relative in the data world).

Now, you can alter the data downstream from the Data Vault when you build your Information Marts. I will discuss that in more detail in the 3rd and 4th articles.

For a Data Vault, the first thing you do is model the Hubs. Hubs are the core of any DV design. If done properly, Hubs are what allow you to integrate multiple source systems in your data warehouse. To do that, they must be source system agnostic. That means they must be based on true Business Keys (or meaningful natural keys) that are not tied to any one source system.

That means you should not use source system surrogate keys for identification. Hub keys must be based on an identifiable business element or elements.

What is an identifiable business element? It is a column (or set of columns) found in the systems that the business consistently uses to identify and locate the data. Regardless of source system, these elements must have the same semantic meaning (even though the names may vary from source to source). If you are very lucky, the source system model will have this defined for you in the form of alternate unique keys or indexes. If not, you will need to engage in data profiling and conversations with the business customers to figure out what the business keys are.

This is the most important aspect of Data Vault modeling. You must get this right if you intend to build an integrated enterprise data warehouse for your organization.

(To be honest, in order to really, really do this right, you should start by building a conceptual model based on business processes using business terminology. If you do that, the Data Vault model will be most obvious to you and it will not be based on any existing source system.)

As an example, look at these tables from a typical HR application. This is the source OLTP example we will work with.

Figure 1 – Source Model

The Primary Key (PK) on the LOCATION table is LOCATION_ID. But that is an integer surrogate key. It is not a good candidate for the Business Key.

Why? Because every source system can have an ID of 1, 2, 201, 5389, etc. It is just a number and has no meaning in the real world. Instead LOCATION_NAME is the likely candidate for the Business Key (BK). Looking in the database, we see that it has a unique index. Great! (If there were no unique indexes, we would have to talk to the business and/or query the data directly to find a unique set of columns to use.)

For the COUNTRIES table, the COUNTRY_ABBREV column will work as a Business Key, and for REGIONS, it would be the REGION_NAME.

Fundamentally, a Hub is a list of unique business keys.

A Hub table has a very simple structure. It contains:

- The Business Key column(s)

- The Load Date (LOAD_DTS)

- The Source for the record (REC_SRC)

New in DV 2.0, the Hub PK is a calculated field consisting of a Hash (often MD5) of the Business Key columns (more on that in a bit).

The Business Key must be a declared unique or alternate key constraint in the Hub. That means for each key there will be only one row in the Hub table, ever. It can be a compound key made up of more than one column.

The LOAD_DTS tells us the first time the data warehouse “knew” about that business key. So no matter how many loads you run, this row is created the first time and never dropped or updated.

The REC_SRC tells us which source system the row came from. If the value can come from multiple sources, this will tell us which source fed us the value first.

In the HUB_LOCATION table, LOCATION_NAME is the Business Key. It is a unique name used in the source systems to identify a location. It must have a unique constraint or index declared on it in the database to prevent duplicates from being entered and to facilitate faster query access.

What can you do with a single Hub table?

You can do data profiling and basic analysis on the business key. Answer questions like:

- How many locations do we have?

- How many source systems provide us location names?

- Are there data quality issues? Do we see Location Names that seem similar but are from different sources? (Hint: if you do, you may have a master data management issue.)
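To make the Hub rules and these questions concrete, here is a minimal Python sketch of an insert-only Hub load followed by the first two profiling questions. It assumes the business key is compared after trimming and upper-casing, and it uses a plain dictionary as the "table"; the source system names and locations are invented.

```python
from datetime import datetime

def load_hub(hub, staged_names, load_dts, rec_src):
    """Insert business keys the Hub has not seen; never update or delete."""
    inserted = 0
    for name in staged_names:
        business_key = name.strip().upper()  # assumed normalization
        if business_key not in hub:
            hub[business_key] = {"LOAD_DTS": load_dts, "REC_SRC": rec_src}
            inserted += 1
    return inserted

hub_location = {}
first = load_hub(hub_location, ["Seattle HQ", "London Office"],
                 datetime(2024, 1, 1), "HR_SYSTEM_A")
second = load_hub(hub_location, ["seattle hq ", "Tokyo Office"],
                  datetime(2024, 1, 2), "HR_SYSTEM_B")

# How many locations do we have, and how many sources fed us a key first?
location_count = len(hub_location)
source_count = len({row["REC_SRC"] for row in hub_location.values()})
```

The second load adds only one row: the Seattle key already exists, so its LOAD_DTS and REC_SRC keep the values from the first time the warehouse saw it.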

Hash-based Primary Keys

One of the innovations in DV 2.0 was the replacement of the standard integer surrogate keys with hash-based primary keys. This was to allow a DV solution to be deployed, at least in part, on a Hadoop solution. Hadoop systems do not have surrogate key generators like a modern RDBMS, but you can generate an MD5 Hash. With a Hash Key in Hadoop and one in your RDBMS, you can “logically” join this data (with the right tools of course). (Plus sequence generators and counters can become a bottleneck on some systems at very high volumes and degrees of parallelism.)

Here is an example of Oracle code to create the HUB_LOCATION_KEY:

dbms_obfuscation_toolkit.md5(upper(trim(location_name)))

For a Hub and source Stage tables, we apply an MD5 (or other) hash calculation to the Business Key columns. So if you have so much data coming so fast that you cannot load it quickly into your database, one option is to build stage tables (really copies of source tables) on Hadoop so you do not lose the data. As you load those files, the hash calculation is applied to the Business Key and added as a new piece of data during the load. Then when it is time to load to your database you can compare the Hash Key on Hadoop to the Hash Key in your Hub table to see if the data is loaded already or not. (Note: how to load a data vault is too much for a blog post; please look at the new Data Vault book or the online training class for details).
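For readers who do not work in Oracle, a roughly equivalent calculation in Python might look like the sketch below. It uses the standard `hashlib` module; the function name is made up, and note that Python's `strip()` removes all surrounding whitespace while Oracle's `TRIM` removes only spaces by default, so the two are not guaranteed to agree on every input.

```python
import hashlib

def hub_location_key(location_name):
    """MD5 of the trimmed, upper-cased business key, as raw 16 bytes."""
    normalized = location_name.strip().upper()
    return hashlib.md5(normalized.encode("utf-8")).digest()

# The key computed while staging (for example on Hadoop) can be compared
# with the key already stored in the Hub to see if the row is loaded.
staged_key = hub_location_key("  Seattle HQ ")
hub_key = hub_location_key("SEATTLE HQ")
already_loaded = staged_key == hub_key
```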

Links are the key to flexibility and scalability in the Data Vault modeling technique. They are modeled in such a way as to allow for changes and additions to the model over time by providing the ability to easily add new objects and relationships without having to change existing structures or load routines.

In a Data Vault model, all source data relationships (i.e., foreign keys) and events are represented as Links. One of the foundational rules in DV is that Hubs can have no FKs, so to represent the joins between Hub concepts, we must use a Link table. The purpose of the Link is to capture and record the relationship of data elements at the lowest possible grain. Other examples of Links include transactions and hierarchies (because in reality those are the intersection of a bunch of Hubs too).

A Link is therefore an intersection of business keys. It contains the columns that represent the business keys from the related Hubs. A Link must have more than one parent table. There must be at least two Hubs, but, as in the case of a transaction, a Link may be composed of many Hubs. A Link table's grain is defined by the number of parent keys it contains (very similar to a Fact table in dimensional modeling).

Like a Hub, the Link is also technically a simple structure. It contains:

- A Link PK (Hash Key)

- The PKs from the parent Hubs – used for lookups

- The Business Key column(s) – new feature in DV 2.0

In Figure 3 you see the results of converting the FK to REGIONS from the COUNTRIES table (Figure 1) into a Link table. The PK column LNK_REGION_COUNTRY_KEY is a hash key calculated against the Business Key columns from the contributing Hubs. This gives us a unique key for every combination of Country and Region that may be fed to us by the source systems.

In Oracle that might look like:

dbms_obfuscation_toolkit.md5(upper(trim(country_abbrev))||'^'|| upper(trim(region_name)))
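The '^' delimiter in that expression matters. The Python sketch below (the `link_key` helper and the sample keys are made up) shows that without a delimiter two different key combinations can concatenate to the same string and therefore hash to the same Link key.

```python
import hashlib

def link_key(*business_keys, delimiter="^"):
    """MD5 over trimmed, upper-cased business keys joined by a delimiter."""
    normalized = [bk.strip().upper() for bk in business_keys]
    joined = delimiter.join(normalized)
    return hashlib.md5(joined.encode("utf-8")).hexdigest()

# With the delimiter, "AB^C" and "A^BC" are different inputs.
with_delim_a = link_key("AB", "C")
with_delim_b = link_key("A", "BC")

# Without it, both combinations collapse to "ABC".
no_delim_a = link_key("AB", "C", delimiter="")
no_delim_b = link_key("A", "BC", delimiter="")
```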

Just as in the Hubs, the Link records only the first time that the relationship appears in the DV.

In addition, the Link contains the PKs from the parent Hubs (which should be declared as an alternate unique key or index). This makes up the natural key for the Link.

New to DV 2.0 is the inclusion of the text business key columns from the parent Hubs. Yes, this is a specific de-normalization.

Why? For query performance when you want to extract data from the Data Vault (more on this in part 3 & 4 of this series). Depending on your platform, you may consider adding a unique key constraint or index on these columns as well.

Why are Links Many-to-Many?

Links are intersection tables and so, by design, they represent a many-to-many relationship. Since a FK is usually 1:M, you may ask why it is done this way.

One word: Flexibility!

Remember that part of the goal of the Data Vault is to store only the facts and avoid reengineering (refactoring) even if the business rules or source systems change. So if you did your business modeling right, the only thing that might change would be the cardinality of a relationship. Now granted it is not likely that one Region will ever be in two Countries, but if that rule changed or you get a new source system that allows it, the 1:M might become a M:M. With a Link, we can handle either with no change to the design or the load process!

That is dynamic adaptability. That is an agile design. (Maximize the amount of work not done.)

Using this type of design pattern allows us to more quickly adapt and absorb business rule and source system changes while minimizing the need to re-engineer the Data Vault. Less work. Less time. No re-testing. Less money!

Satellites (or Sats for short) are where all the big action is in a Data Vault. These structures are where all the descriptive (i.e., non-key) columns go, plus this is where the Change Data Capture (CDC) is done and history is stored. The structure and concept are very much like a Type 2 Slowly Changing Dimension.

To accomplish this function, the Primary Key for a Sat contains two parts: the PK from its Parent Hub (or Link) plus the LOAD_DTS. So every time we load the DV and find new records or changed records, we insert those records into the Sats and give them a timestamp. (On a side note, this structure also means that a DV is real-time-ready in that you can load whenever and as often as you need as long as you set the LOAD_DTS correctly.)

This is the only structure in the core Data Vault that has a two-part key. That is as complicated as it gets from a structure perspective.

Of course, a Sat must also have the REC_SRC column for auditability. REC_SRC will tell us the source of each row of data in the Sat.

Important Note: The REC_SRC in a Sat does NOT have to be the same as that in the parent Hub or Link. Remember that Hubs and Links record the source of the concept key or relationship the first time the DV sees it. Subsequent loads may find different sources provide different descriptive information at different times for one single Hub record (don't forget that we are integrating systems too).

You may have noticed that not all the columns in Figure 1 ended up in the Hubs or the Link tables that we have looked at.

Where do they go? They go in the Sats.

Figure 4 – Hubs and Links with Sats

In Figure 4, look at SAT_LOCATIONS. There you see all the address columns from the original table LOCATIONS (Figure 1). Likewise in SAT_COUNTRIES you see COUNTRY_NAME and in SAT_REGIONS you see REGION_ID (remember that was the source system PK but NOT the Business Key, so it goes here so we can trace back to the source if needed).

Use of HASH_DIFF columns for Change Data Capture (CDC)

Another innovation that came with DV 2.0 is the use of a Hash-based column for determining if a record in the source has changed from what was previously loaded into the Data Vault. We call that a Hash Diff (for difference). Every Sat must have this column to be DV 2.0 compliant.

So, how this works is that you first calculate a Hash on the combination of all of the descriptive (non-meta data) columns in the Sat.

Examples for Oracle for the three Sats in Figure 4 are:

SAT_REGIONS.HASH_DIFF = dbms_obfuscation_toolkit.md5(TO_CHAR(region_id))

SAT_COUNTRIES.HASH_DIFF = dbms_obfuscation_toolkit.md5(TRIM(country_name))

SAT_LOCATIONS.HASH_DIFF = dbms_obfuscation_toolkit.md5( TRIM(street_address) || '^' || TRIM(city) || '^' || TRIM(state_province) || '^' || TRIM(postal_code))

Now when you get ready to do another load, you must calculate a Hash on the inbound values (using exactly the same formula) and then compare that to the HASH_DIFF column in the Sat for the most recent row (i.e., from the last load date) for the same Hub Business Key. If the Hash calculation is different, then you create a new row. If not, you do nothing (which is way faster).
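The compare-then-insert step described above can be sketched in Python like this. It is a simplified illustration, not a full load routine: the Sat is a list of dictionaries, attribute values are passed as a dictionary in a fixed column order, and `hash_diff` and `load_sat` are hypothetical helper names.

```python
import hashlib
from datetime import datetime

def hash_diff(*descriptive_values):
    """MD5 over the trimmed descriptive columns joined by '^'."""
    joined = "^".join((v or "").strip() for v in descriptive_values)
    return hashlib.md5(joined.encode("utf-8")).hexdigest()

def load_sat(sat_rows, parent_hk, load_dts, rec_src, attributes):
    """Insert a new Sat row only if the HASH_DIFF differs from the most
    recent row for the same parent key. Returns True when a row is added."""
    new_diff = hash_diff(*attributes.values())
    history = [r for r in sat_rows if r["PARENT_HK"] == parent_hk]
    latest = max(history, key=lambda r: r["LOAD_DTS"], default=None)
    if latest is not None and latest["HASH_DIFF"] == new_diff:
        return False  # unchanged: do nothing
    sat_rows.append({"PARENT_HK": parent_hk, "LOAD_DTS": load_dts,
                     "REC_SRC": rec_src, "HASH_DIFF": new_diff,
                     **attributes})
    return True

sat_locations = []
address = {"STREET_ADDRESS": "1 Main St", "CITY": "Seattle",
           "STATE_PROVINCE": "WA", "POSTAL_CODE": "98101"}

results = [
    load_sat(sat_locations, "HK1", datetime(2024, 1, 1), "HR", address),
    load_sat(sat_locations, "HK1", datetime(2024, 1, 2), "HR", address),
    load_sat(sat_locations, "HK1", datetime(2024, 1, 3), "HR",
             {**address, "CITY": "Tacoma"}),
]
```

Only the first load and the load with the changed city add rows, so the Sat ends up with two rows for this parent key, each identified by the parent key plus LOAD_DTS.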

This is exactly what you do when you build a Type 2 SCD (Slowly Changing Dimension).

The difference is that instead of comparing every single column in the feed to every single column in the Sat, you only have to compare one column: the HASH_DIFF. (See my white paper to learn how we did it in DV 1.0.)

So which do you think is faster on a very wide table?

Comparing one column vs. 50 columns (or several hundred columns)?

In fact, since all the HASH_DIFFs are the same size, the speed of the comparison allows you to scale to wider tables without the CDC process slowing you down.

Is it overkill for a small, narrow table? Probably.

But if you want a standards-based, repeatable approach, you apply the same rules regardless. Plus if you follow the patterns, you can automate the generation of the design and load processes (or buy software that does it for you).

Another Note: There are no updates! We never overwrite data in a Data Vault! We only insert new data. That allows us to keep a clean audit trail.

So there you have the basics (and then some) of what makes up a raw Data Vault Data Model and you have seen some of the innovations from Data Vault 2.0.

Just remember this list:

- Hubs = Business Keys

- Links = Associations / Transactions

- Satellites = Descriptors

Hubs make it business driven and allow for integration across systems.

Links give you the flexibility to absorb structural and business rule changes without re-engineering (and therefore without reloading any data).

Sats give you the adaptability to record history at any interval you want plus unquestionable auditability and traceability to your source systems.

All together you get agility, flexibility, adaptability, auditability, scalability, and speed to market.

What more could a data warehouse architect want?

Want more in-depth details? Check out LearnDataVault.com

Until next time!

Kent Graziano

Want to know more about Kent Graziano? Go to his blog, Twitter profile, or LinkedIn profile.


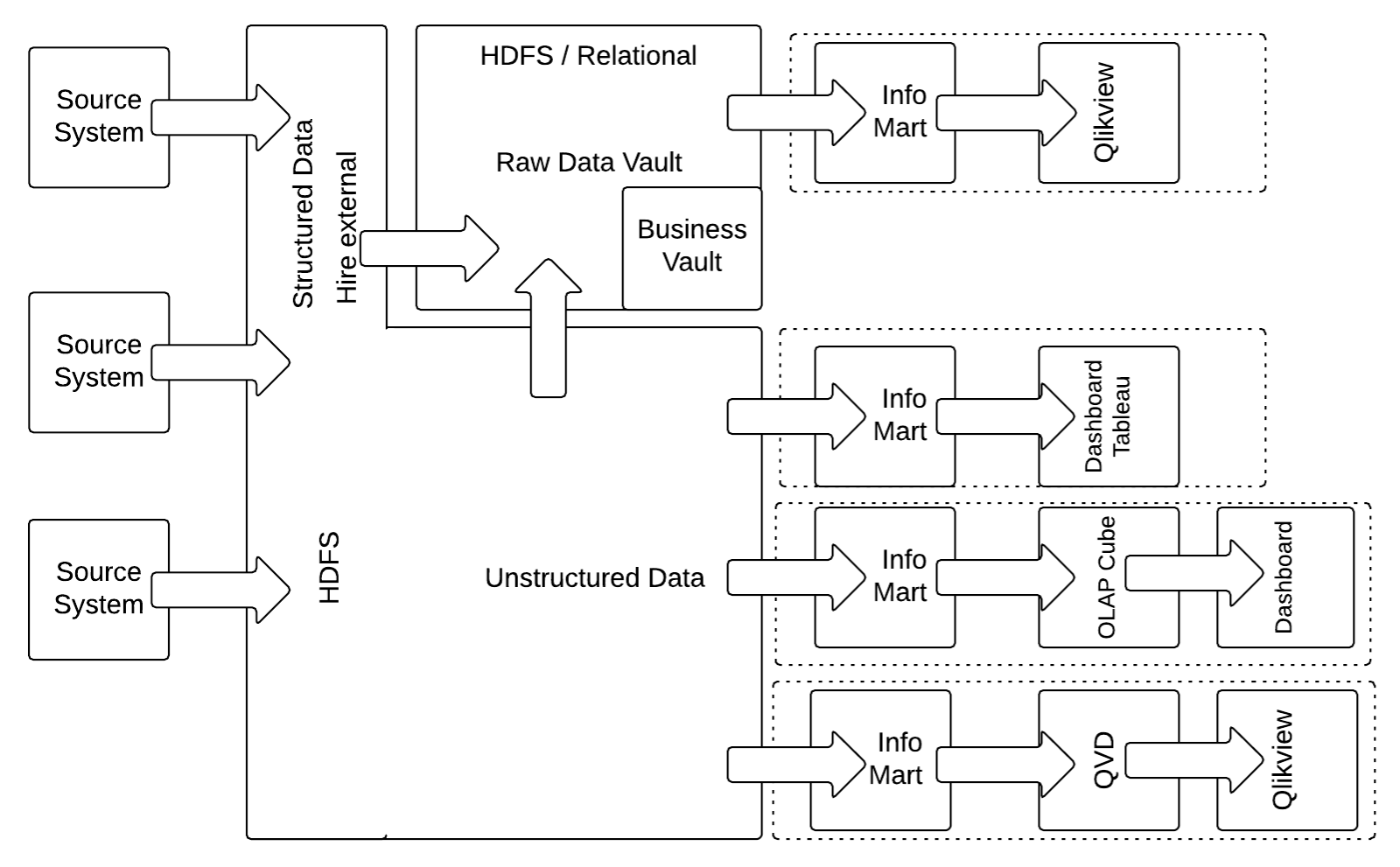

Hybrid Architecture in Data Vault 2.0

Business users expect their data warehouse systems to load and prepare more and more data, in terms of variety, volume, and velocity. The workload placed on typical data warehouse environments also keeps increasing, especially once the initial version of the warehouse has become a success with its first users. Scalability therefore has multiple dimensions. Last month we talked about Satellites, which play an important role in scalability. Now we explain how to combine structured and unstructured data with a hybrid architecture.

LOGICAL DATA VAULT 2.0 ARCHITECTURE

The Data Vault 2.0 architecture is based on three layers: the staging area which collects the raw data from the source systems, the enterprise data warehouse layer, modeled as a Data Vault 2.0 model, and the information delivery layer with information marts as star schemas and other structures. The architecture supports both batch loading of source systems and real-time loading from the enterprise service bus (ESB) or any other service-oriented architecture (SOA).

The following diagram shows the most basic logical Data Vault 2.0 architecture:

In this case, structured data from source systems is first loaded into the staging area in order to reduce the operational and performance burden on the operational source systems. It is then loaded unmodified into the Raw Data Vault, which represents the Enterprise Data Warehouse layer. After the data has been loaded into this Data Vault model (with hubs, links, and satellites), business rules are applied in the Business Vault on top of the data in the Raw Data Vault. Once the business logic is applied, both the Raw Data Vault and the Business Vault are joined and restructured into the business model for information delivery in the information marts. Business users access the information in the information marts through dashboard or reporting applications.

The architecture allows the business rules in the Business Vault to be implemented using a mix of technologies, such as SQL-based virtualization (typically SQL views) and external tools such as business rule management systems (BRMS).

But it is also possible to integrate unstructured NoSQL database systems into this architecture. Due to the platform independence of Data Vault 2.0, NoSQL can be used for every data warehouse layer, including the staging area, the enterprise data warehouse layer, and information delivery. Therefore, the NoSQL database could be used as a staging area and load data into the relational Data Vault layer. However, it could also be integrated both ways with the Data Vault layer via a hashed business key. In this case, it would become a hybrid solution and information marts would consume data from both environments.

HYBRID ARCHITECTURE

The standard Data Vault 2.0 architecture in figure 1 focuses on structured data. Because more and more enterprise data is semi-structured or unstructured, the recommended best practice for a new enterprise data warehouse is to use a hybrid architecture based on a Hadoop cluster, as shown in the next figure:

In this modification of the architecture from the previous section, the relational staging area is replaced by an HDFS-based staging area which captures all unstructured and structured data. While capturing structured data on HDFS appears to be overhead at first glance, this strategy actually reduces the burden on the source system by making sure that the source data is always extracted, regardless of any structural changes. The data is then extracted using Apache Drill, Hive External or similar technologies.

It is also possible to store the Raw Data Vault and the Business Vault (the structured data in the Data Vault model) on Hive Internal.

How to Get Updates and Support

Please send inquiries and feature requests to [email protected].

For Data Vault training and on-site training inquiries, please contact [email protected] or register at www.scalefree.com.

To support the creation of Visual Data Vault drawings in Microsoft Visio, a stencil is implemented that can be used to draw Data Vault models. The stencil is available at www.visualdatavault.com.


© 2024 Scalefree International GmbH Imprint | Privacy Policy | Terms and Conditions


GDP up by 0.3% in both the euro area and the EU

In the first quarter of 2024, seasonally adjusted GDP increased by 0.3% in both the euro area and the EU, compared with the previous quarter, according to a preliminary flash estimate published by Eurostat, the statistical office of the European Union. In the fourth quarter of 2023, GDP had declined by 0.1% in the euro area and had remained stable in the EU.

These preliminary GDP flash estimates are based on data sources that are incomplete and subject to further revisions.

Compared with the same quarter of the previous year, seasonally adjusted GDP increased by 0.4% in the euro area and by 0.5% in the EU in the first quarter of 2024, after +0.1% in the euro area and +0.2% in the EU in the previous quarter.

Among the Member States for which data are available for the first quarter of 2024, Ireland (+1.1%) recorded the highest increase compared to the previous quarter, followed by Latvia, Lithuania and Hungary (all +0.8%). Sweden (-0.1%) was the only Member State that recorded a decrease compared to the previous quarter. The year-on-year growth rates were positive for nine countries and negative for four.

The next estimates for the first quarter of 2024 will be released on 15 May 2024.

Notes for users

The reliability of GDP flash estimates was tested by dedicated working groups and revisions of subsequent estimates are continuously monitored. Further information can be found on the Eurostat website.

With this preliminary flash estimate, euro area and EU GDP figures for earlier quarters are not revised.

All figures presented in this release may be revised with the GDP t+45 flash estimate scheduled for 15 May 2024 and subsequently by Eurostat’s regular estimates of GDP and main aggregates (including employment) scheduled for 7 June 2024 and 19 July 2024.

The preliminary flash estimate of the first quarter of 2024 GDP growth presented in this release is based on the data of 18 Member States, covering 95% of euro area GDP and 94% of EU GDP.

Release schedule

Comprehensive estimates of European main aggregates (including GDP and employment) are based on countries' regular transmissions and published around 65 and 110 days after the end of each quarter. To improve the timeliness of key indicators, Eurostat also publishes flash estimates for GDP (after around 30 and 45 days) and employment (after around 45 days). Their compilation is based on estimates provided by EU Member States on a voluntary basis.

This news release presents preliminary flash estimates for euro area and EU after around 30 days.

Methods and definitions

European quarterly national accounts are compiled in accordance with the European System of Accounts 2010 (ESA 2010).

Gross domestic product (GDP) at market prices measures the production activity of resident production units. Growth rates are based on chain-linked volumes.

Two statistical working papers present the preliminary GDP flash methodology for the European estimates and Member States estimates.

The method used for compilation of European GDP is the same as for previous releases.

Geographical information

Euro area (EA20): Belgium, Germany, Estonia, Ireland, Greece, Spain, France, Croatia, Italy, Cyprus, Latvia, Lithuania, Luxembourg, Malta, the Netherlands, Austria, Portugal, Slovenia, Slovakia and Finland.

European Union (EU27): Belgium, Bulgaria, Czechia, Denmark, Germany, Estonia, Ireland, Greece, Spain, France, Croatia, Italy, Cyprus, Latvia, Lithuania, Luxembourg, Hungary, Malta, the Netherlands, Austria, Poland, Portugal, Romania, Slovenia, Slovakia, Finland and Sweden.

For more information

Website section on national accounts, and specifically the page on quarterly national accounts

Database section on national accounts and metadata on quarterly national accounts

Statistics Explained articles on measuring quarterly GDP and presentation of updated quarterly estimates

Country specific metadata

Country specific metadata on the recording of Ukrainian refugees in main aggregates of national accounts

European System of Accounts 2010

Euro indicators dashboard

Release calendar for Euro indicators

European Statistics Code of Practice

Get in touch

Media requests

Eurostat Media Support

Phone: (+352) 4301 33 408

Further information on data

Thierry COURTEL

Julio Cesar CABECA

Data Vault Anti-pattern: Using Effectivity Satellites as SCD2. In Data Vault 2.0, Effectivity Satellites are artifacts used exclusively to track the temporal relevance of a relationship based on a Driving Key. As such, they hang from a Link table. Effectivity Satellites are not the same as SCD2.

This article starts a blog series regarding the Data Vault 2.0 concept to build scalable business intelligence solutions that provide a new approach for building a modern data analytics platform. Data Vault 2.0 contains all necessary components to accomplish enterprise vision in Data Warehousing and Information Delivery.

Outlook. In this article, we have described how to implement Data Vault 2.0 on Databricks. The platform provides all necessary tools and services to build a data analytics platform based on the Data Vault 2.0 principles that also works at scale. In the next article, we will look at Data Science with Azure Synapse Analytics and Data Vault 2.0.

Welcome to a quick introductory look at what the Data Vault 2.0 Implementation has to offer. Our Implementation Standards are built on years of best practices that help you manage, measure, identify, and optimize everything from the way people work, down to the data models that are generated. No other standards in the world can get you there as ...

With the ever-changing landscape of source systems, modeling requirements, and data acquisition and integration options, the Data Vault 2.0 model provides the necessary patterns to adapt to these…

A 44-minute introductory discussion on what Data Vault 2.0 is and how it works as a solution for your EDW, BI, and Analytics programs. 0:00-00:31 Dan Linstedt I...

Data Vault 2.0 Model Benefits. The model carries significant benefits when built properly. These benefits include scalability (to the petabyte ranges and beyond), and are backed by set-logic mathematics, Big O notation, Discrete Math, and more. This ensures not only the stability of the model, but the longevity when built according to the ...

Kent Graziano is a recognized industry expert, leader, trainer, and published author in the areas of data modeling, data warehousing, data architecture, and various Oracle tools (like Oracle Designer and Oracle SQL Developer Data Modeler). A certified Data Vault Master, Data Vault 2.0 Practitioner (CDVP2), and an Oracle ACE Director with over 30 years experience in the Information Technology ...

Introduction. In the previous articles of this blog series, we introduced Data Vault 2.0 as a concept for designing data analytics platforms, and introduced both the Data Vault 2.0 reference architecture and the real-time architecture. These architectures have one thing in common: they are set up and further developed by the data warehouse team.

Understanding how your organization can mature in its ways-of-working (WoW) is vital to your future agility success. The Data Vault 2.0 methodology is backed by Lean-Six Sigma, TQM, and CMMI best practices, which provide a foundational level for measuring, monitoring, and optimizing your WoW along the way.

Conclusion. Data Vault 2.0 stands out as a versatile, robust solution to the evolving challenges of data warehousing. Its modular, flexible nature adeptly addresses the needs for diverse data ...

To support the creation of Visual Data Vault drawings in Microsoft Visio, a stencil is implemented that can be used to draw Data Vault models. The stencil is available at www.visualdatavault.com. Data Vault 2.0 is often assumed to be only a modeling technique. A whole BI solution composed of agile methodology, architecture, implementation, and ...

Description. Data Vault is an innovative modeling technique invented by Dan Linstedt to simplify data integration from multiple sources. It offers auditability and design flexibility to cope with data from the heterogeneous information systems that support most business demands today. It is designed to deliver an Enterprise Data Warehouse while ...

This article is authored by Michael Olschimke, co-founder and CEO at Scalefree International GmbH, and co-authored with Dmytro Polishchuk, Senior BI Consultant from Scalefree. The technical review is done by Ian Clarke and Naveed Hussain, GBBs (Cloud Scale Analytics) for EMEA at Microsoft. The last article in this blog series discussed the basic entity types in Data Vault 2.0: hubs, links and ...

Effectively Integrate People, Process and Technology. Data Vault 2.0 is a system of business intelligence that represents a major evolution in the already successful Data Vault architecture. It has been extended beyond the Data Warehouse component to include a model capable of dealing with cross-platform data persistence, multi-latency, multi ...

HYBRID ARCHITECTURE. The standard Data Vault 2.0 architecture in figure 1 focuses on structured data. Because more and more enterprise data is semi-structured or unstructured, the recommended best practice for a new enterprise data warehouse is to use a hybrid architecture based on a Hadoop cluster, as shown in the next figure: Figure 2: Hybrid ...

If you want to learn about Data Vault 2.0, your best starting point is the book Building a Scalable Data Warehouse with Data Vault 2.0 (see details below). Why do Data Vault 2.0 and dbt integrate well? The Data Vault 2.0 method uses a small set of standard building blocks to model your data warehouse (Hubs, Links and Satellites in the Raw ...

Data Vault 2.0 is the prescriptive, industry-standard methodology for you to turn raw data into actionable intelligence, leading to tangible business outcomes. Follow our proactive, proven recipe, and transform your raw data into information that will allow you to produce the results that your business finds most valuable.

Data Vault 2.0 is a system of business intelligence that comprises architecture, modeling and methodology. It goes beyond traditional data warehousing approaches to provide an agile, scalable, repeatable system for data and analytics delivery. Automation helps teams to cut the complexity and time in delivering and operating data vaults.

It follows the layered approach as the conceptual Data Vault 2.0 architecture: Data sources deliver data either in batches or real-time (or both). Depending on the delivery cycle, the data is either directly loaded into the Azure Data Lake (batches) or first accepted by the Event Hub (real-time).

Data Vault 2.0 is the new specification. ... Six Sigma, SDLC, etc..), the architecture (amongst others an input layer (data stage, called persistent staging area in Data Vault 2.0) and a presentation layer (data mart), and handling of data quality services and master data services), and the model. Within the methodology, the implementation of ...

Overview. In the first quarter of 2024, seasonally adjusted GDP increased by 0.3% in both the euro area and the EU, compared with the previous quarter, according to a preliminary flash estimate published by Eurostat, the statistical office of the European Union. In the fourth quarter of 2023, GDP had declined by 0.1% in the euro area and had remained stable in the EU. These preliminary GDP ...

This Raw Data Vault could later be used to create reports, dashboards, and other data-centric solutions. Note that, while more complete, this article does not describe the full capabilities of Data Vault 2.0. Data Vault 2.0 shows its full strength when data from multiple data sources must be integrated.

In this webinar, we explore the benefits and reasons for adopting data vault 2.0 to customer experience as a result of embracing the Data Vault 2.0 method. The benefits are business, technical, and financial, applying to many different industries. Mr. Strange also discusses how organizations seek value from their data projects.