
Machine Learning, Modeling, and Simulation: Engineering Problem-Solving in the Age of AI

Demystify machine learning through computational engineering principles and applications in this two-course program from MIT

Submit your information to discover what makes this machine learning program different and how you'll learn with MIT xPRO.

A HANDS-ON APPROACH TO ENGINEERING PROBLEM-SOLVING

The advent of big data, cloud computing, and machine learning is revolutionizing how many professionals approach their work. These technologies offer exciting new ways for engineers to tackle real-world challenges. But engineers with little experience in data science or modern computational methods might feel left behind.

This two-course online certificate program brings a hands-on approach to understanding the computational tools used in modern engineering problem-solving.

Leveraging the rich experience of the faculty at the MIT Center for Computational Science and Engineering (CCSE), this program connects your science and engineering skills to the principles of machine learning and data science. With an emphasis on the application of these methods, you will put these new skills into practice in real time.

5 weeks per course

AFTER THIS PROGRAM, YOU WILL:

Learn how to simulate complex physical processes in your work using discretization methods and numerical algorithms.

Assess and respond to cost-accuracy tradeoffs in simulation and optimization, and make decisions about how to deploy computational resources.

Understand optimization techniques and their fundamental role in machine learning.

Practice real-world forecasting and risk assessment using probabilistic methods.

Recognize the limitations of machine learning and what MIT researchers are doing to resolve them.

Learn about current research in machine learning at the MIT CCSE and how it might impact your work in the future.

COURSES IN THIS PROGRAM

Machine Learning, Modeling, and Simulation Principles

Course 1 of 2 in the Machine Learning, Modeling, and Simulation online program

Applying Machine Learning to Engineering and Science

Course 2 of 2 in the Machine Learning, Modeling, and Simulation online program

The MIT xPRO Learning Experience

We pair innovative pedagogy with world-class faculty.

LEARN BY DOING

Practice processes and methods through simulations, assessments, case studies, and tools.

LEARN FROM OTHERS

Connect with an international community of professionals interested in solving complex problems.

LEARN ON DEMAND

Access all of the content online and watch videos on the go.

REFLECT AND APPLY

Bring your new skills to your organization, through examples from technical work environments and ample prompts for reflection.

DEMONSTRATE YOUR SUCCESS

Earn a Professional Certificate and Continuing Education Units (CEUs) from MIT.

LEARN FROM THE BEST

Access cutting-edge, research-based multimedia content developed by MIT professors and industry experts.

THIS PROGRAM IS FOR YOU IF...

You have a bachelor's degree in engineering (e.g., mechanical, civil, aerospace, chemical, materials, nuclear, biological, electrical, etc.) or the physical sciences.

You have proficient knowledge of college-level mathematics including differential calculus, linear algebra, and statistics.

You have some experience with MATLAB®. Programming experience is not necessary, but knowledge of MATLAB® is very useful.

Your industry is or will be impacted by machine learning.

Prepare for this program with free online resources.

JUSTIFY YOUR PROFESSIONAL DEVELOPMENT

Many companies offer professional development benefits to their employees, but sometimes starting the conversation is the hardest part of the process.

Use these talking points, stats, and email template to advocate for your professional development through MIT xPRO's online professional certificate program Machine Learning, Modeling, and Simulation: Engineering Problem-Solving in the Age of AI.

What Learners Are Saying

MIT xPRO learners are not only scientists, engineers, technicians, managers, and consultants – they are change agents. They take the initiative, push boundaries, and define the future.

Vivian D'Souza, Model Based Systems Analysis Engineer at Dana Incorporated

"The course was a fantastic blend of concepts and practical applications. Professor Youssef's content is unmatched to other similar courses that I've tried, and not to mention his enthusiasm for the topic is contagious."

Rachael Naoum, Product Definition Engineer at Dassault Systems

"I loved this course. At first, I was a bit intimidated, it's been a while since I've done any hardcore math. However, the layout of this course made it super easy to follow along with all the concepts and I felt very well-guided throughout the graded assignments. I like how the lectures are broken into short videos for each topic, making it easy to replay and digest. Very well-thought-out course."

Tolga Kaya, Professor of Electrical and Computer Engineering at Sacred Heart University

"This course allowed me to dig deeper [into] the foundations of machine learning and the underlying mechanism of the main algorithms that are used. As a MATLAB user, I particularly appreciated the utilization of MATLAB instead of straight black box python libraries."

Bill Bear, Agile Transformation Coach

"Great course for learning the concepts and methods behind machine learning! The course was prepared and delivered in a thoughtful way that provided good challenges and plenty of helpful information. This is just the kind of result that I have come to expect with MIT xPRO."

Juharasha Shaik, Senior Staff Software Engineer at Visa

"This course has helped me gain more understanding of the various algorithms that can be applied to the problems that we face during data analysis and modeling. I definitely recommend this course [for a] great understanding of the available tools/algorithms/methods to analyze various use cases and help model the solution."

MIT FACULTY

Youssef M. Marzouk

Faculty Co-Director of MIT Center of Computational Engineering, Professor of Aeronautics & Astronautics and Director of Aerospace Computational Design Laboratory, MIT

George Barbastathis

Professor of Mechanical Engineering, MIT

Heather Kulik

Associate Professor of Chemical Engineering, MIT

John Williams

Professor of Civil & Environmental Engineering, MIT

Themistoklis Sapsis

Associate Professor of Mechanical & Ocean Engineering, MIT

Markus Buehler

McAfee Professor of Engineering & Head, Department of Civil & Environmental Engineering, MIT

Richard Braatz

Edwin R. Gilliland Professor of Chemical Engineering, MIT

Justin Solomon

Associate Professor of Electrical Engineering and Computer Science, MIT

Laurent Demanet

Professor of Applied Mathematics & Director of MIT's Earth Resources Laboratory

NOT READY TO ENROLL?

Watch a free demo video.

Machine Learning offers important new capabilities for solving today’s complex problems, but it’s not a panacea. To get beyond the hype, engineers and scientists must discern how and where machine learning tools are the best option — and where they are not.

Submit your information in the form above and watch a short demo video on the online program — what makes it different from other machine learning courses, what you'll learn, and how you will learn it.

Propel Your Career On Your Terms

Technology is accelerating at an unprecedented pace, causing disruption across all levels of business. Tomorrow’s leaders must demonstrate technical expertise as well as leadership acumen to maintain a technical edge over the competition while driving innovation in an ever-changing environment.

MIT uniquely understands this challenge and how to solve it with decades of experience developing technical professionals. MIT xPRO’s online learning programs leverage vetted content from world-renowned experts to make learning accessible anytime, anywhere. Designed using cutting-edge research in the neuroscience of learning, MIT xPRO programs are application focused, helping professionals build their skills on the job.

Embrace change. Enhance your skill set. Keep learning. MIT xPRO is with you each step of the way.

Introduction to Computation and Programming Using Python, third edition

With application to computational modeling and understanding data.

by John V. Guttag

ISBN: 9780262542364

Pub date: January 5, 2021

Publisher: The MIT Press

664 pp., 7 x 9 in, 140

ISBN: 9780262363433

Pub date: March 2, 2021

eTextbook rental

Description

The new edition of an introduction to the art of computational problem solving using Python.

This book introduces students with little or no prior programming experience to the art of computational problem solving using Python and various Python libraries, including numpy, matplotlib, random, pandas, and sklearn. It provides students with skills that will enable them to make productive use of computational techniques, including some of the tools and techniques of data science for using computation to model and interpret data as well as substantial material on machine learning.

The book is based on an MIT course and was developed for use not only in a conventional classroom but in a massive open online course (MOOC). It contains material suitable for a two-semester introductory computer science sequence.

This third edition has expanded the initial explanatory material, making it a gentler introduction to programming for the beginner, with more programming examples and many more “finger exercises.” A new chapter shows how to use the Pandas package for analyzing time series data. All the code has been rewritten to make it stylistically consistent with the PEP 8 standards. Although it covers such traditional topics as computational complexity and simple algorithms, the book focuses on a wide range of topics not found in most introductory texts, including information visualization, simulations to model randomness, computational techniques to understand data, and statistical techniques that inform (and misinform) as well as two related but relatively advanced topics: optimization problems and dynamic programming. The book also includes a Python 3 quick reference guide.

All of the code in the book and an errata sheet are available on the book's web page on the MIT Press website.

John V. Guttag is the Dugald C. Jackson Professor of Computer Science and Electrical Engineering at MIT.

Additional Material

Table of Contents

Introduction to Computer Science and Programming edX Course

Author's Website with Code and Errata

Author Video - MIT, edX, and OCW Courses

Introduction to Computer Science and Programming OpenCourseWare

Author Video - Accessibility at Different Levels

Author Video - New Chapters and Sections

Author Video - Use of the Book in Courses

What is Computational Thinking?

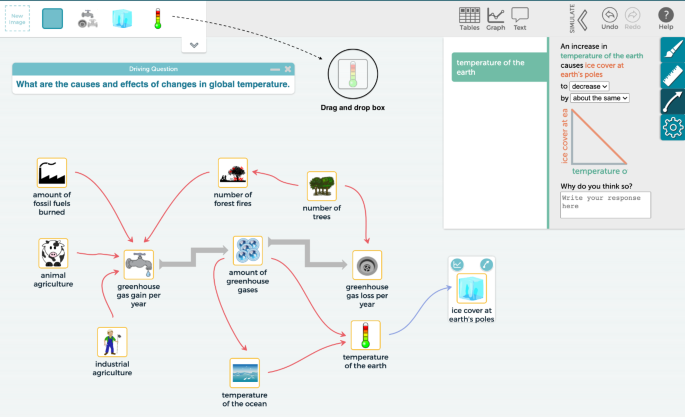

Computational thinking is an interrelated set of skills and practices for solving complex problems, a way to learn topics in many disciplines, and a necessity for fully participating in a computational world.

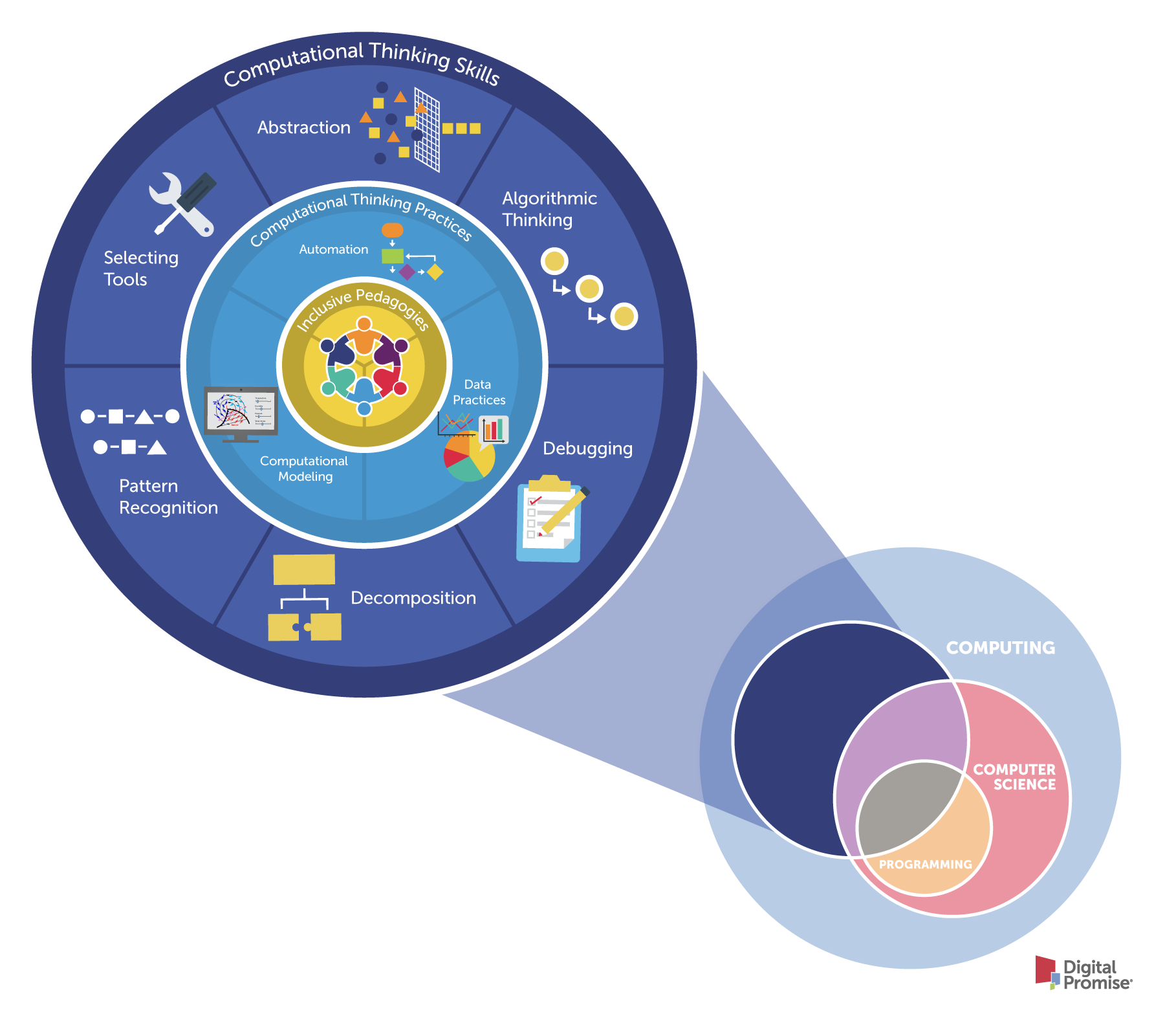

Many different terms are used when talking about computing, computer science, computational thinking, and programming. Computing encompasses the skills and practices in both computer science and computational thinking. While computer science is an individual academic discipline, computational thinking is a problem-solving approach that integrates across activities, and programming is the practice of developing a set of instructions that a computer can understand and execute, as well as debugging, organizing, and applying that code to appropriate problem-solving contexts. The skills and practices requiring computational thinking are broader, leveraging concepts and skills from computer science and applying them to other contexts, such as core academic disciplines (e.g. arts, English language arts, math, science, social studies) and everyday problem solving. For educators integrating computational thinking into their classrooms, we believe computational thinking is best understood as a series of interrelated skills and competencies.

Figure 1. The relationship between computer science (CS), computational thinking (CT), programming and computing.

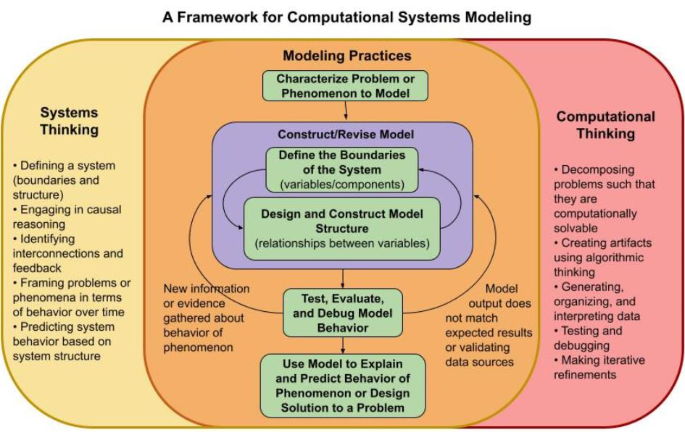

In order to integrate computational thinking into K-12 teaching and learning, educators must define what students need to know and be able to do to be successful computational thinkers. Our recommended framework has three concentric circles.

- Computational thinking skills, in the outermost circle, are the cognitive processes necessary to engage with computational tools to solve problems. These skills are the foundation for any computational problem solving and should be integrated into early learning opportunities in K-3.

- Computational thinking practices, in the middle circle, combine multiple computational skills to solve an applied problem. Students in the older grades (4-12) may use these practices to develop artifacts such as a computer program, data visualization, or computational model.

- Inclusive pedagogies, in the innermost circle, are strategies for engaging all learners in computing, connecting applications to students’ interests and experiences, and providing opportunities to acknowledge and combat biases and stereotypes within the computing field.

Figure 2. A framework for computational thinking integration.

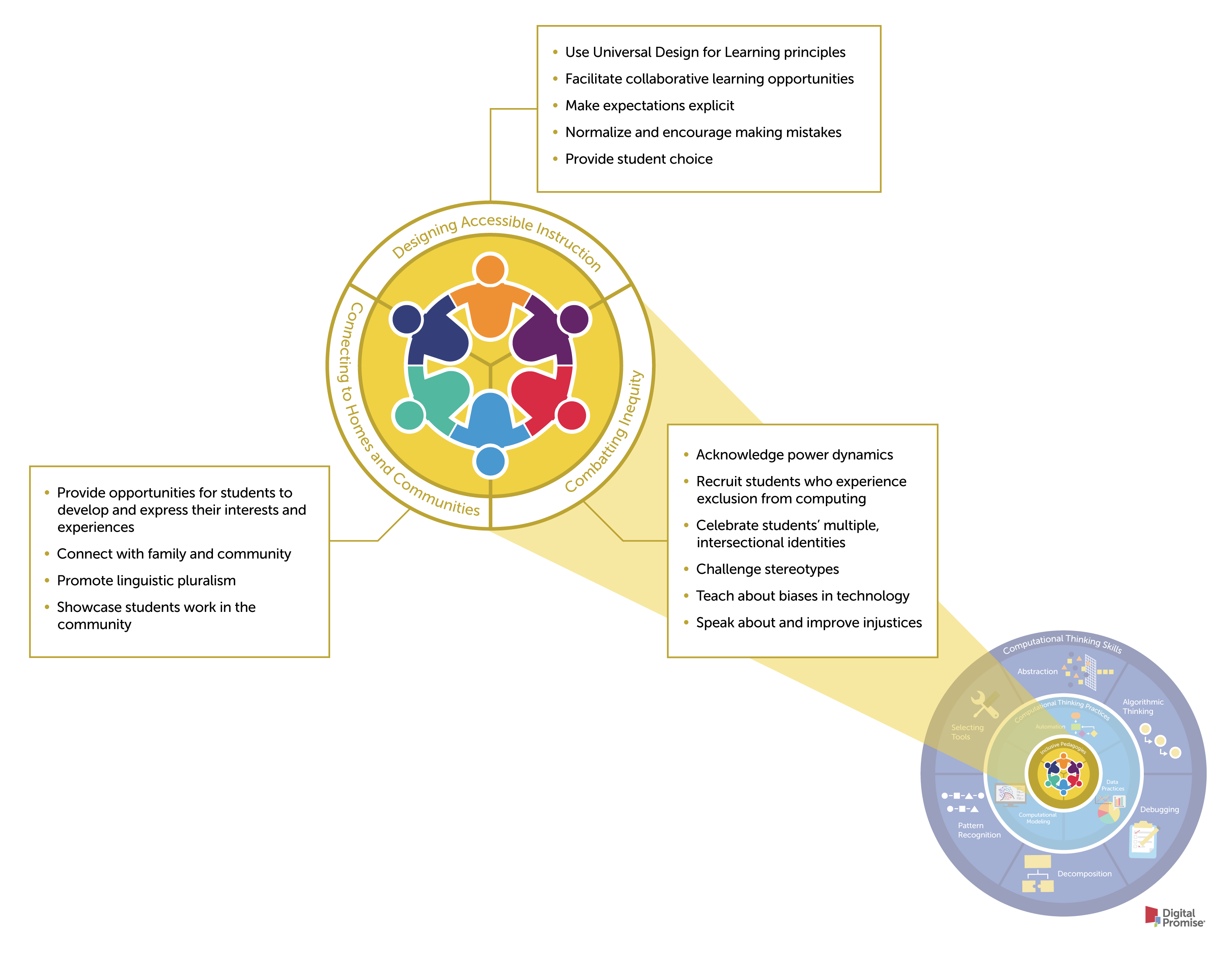

What does inclusive computational thinking look like in a classroom? In the image below, we provide examples of inclusive computing pedagogies in the classroom. The pedagogies are divided into three categories to emphasize different pedagogical approaches to inclusivity. Designing Accessible Instruction refers to strategies teachers should use to engage all learners in computing. Connecting to Students’ Interests, Homes, and Communities refers to drawing on the experiences of students to design learning experiences that are connected with their homes, communities, interests and experiences to highlight the relevance of computing in their lives. Acknowledging and Combating Inequity refers to a teacher supporting students to recognize and take a stand against the oppression of marginalized groups in society broadly and specifically in computing. Together these pedagogical approaches promote a more inclusive computational thinking classroom environment, life-relevant learning, and opportunities to critique and counter inequalities. Educators should attend to each of the three approaches as they plan and teach lessons, especially related to computing.

Figure 3. Examples of inclusive pedagogies for teaching computing in the classroom adapted from Israel et al., 2017; Kapor Center, 2021; Madkins et al., 2020; National Center for Women & Information Technology, 2021b; Paris & Alim, 2017; Ryoo, 2019; CSTeachingTips, 2021

Micro-credentials for computational thinking

A micro-credential is a digital certificate that verifies an individual’s competence in a specific skill or set of skills. To earn a micro-credential, teachers submit evidence of student work from classroom activities, as well as documentation of lesson planning and reflection.

Because the integration of computational thinking is new to most teachers, micro-credentials can be a useful tool for professional learning and/or credentialing pathways. Digital Promise has created micro-credentials for Computational Thinking Practices. These micro-credentials are framed around practices because the degree to which students have built foundational skills cannot be assessed until they are manifested through the applied practices.

Visit Digital Promise’s micro-credential platform to find out more and start earning micro-credentials today!

Competency framework

Conceptual framework of the PILA Computational Problem Solving module

What is computational problem solving?

‘Computational problem solving’ is the iterative process of developing computational solutions to problems. Computational solutions are expressed as logical sequences of steps (i.e. algorithms), where each step is precisely defined so that it can be expressed in a form that can be executed by a computer. Much of the process of computational problem solving is thus oriented towards finding ways to use the power of computers to design new solutions or execute existing solutions more efficiently.

Using computation to solve problems requires the ability to think in a certain way, which is often referred to as ‘computational thinking’. The term originally referred to the capacity to formulate problems as a defined set of inputs (or rules) producing a defined set of outputs. Today, computational thinking has been expanded to include thinking with many levels of abstractions (e.g. reducing complexity by removing unnecessary information), simplifying problems by decomposing them into parts and identifying repeated patterns, and examining how well a solution scales across problems.

Why is computational problem solving important and useful?

Computers and the technologies they enable play an increasingly central role in jobs and everyday life. Being able to use computers to solve problems is thus an important competence for students to develop in order to thrive in today’s digital world. Even people who do not plan a career in computing can benefit from developing computational problem solving skills because these skills enhance how people understand and solve a wide range of problems beyond computer science.

This skillset can be connected to multiple domains of education, and particularly to subjects like science, technology, engineering or mathematics (STEM) and the social sciences. Computing has revolutionised the practices of science, and the ability to use computational tools to carry out scientific inquiry is quickly becoming a required skillset in the modern scientific landscape. As a consequence, teachers who are tasked with preparing students for careers in these fields must understand how this competence develops and can be nurtured. At school, developing computational problem solving skills should be an interdisciplinary activity that involves creating media and other digital artefacts to design, execute, and communicate solutions, as well as to learn about the social and natural world through the exploration, development and use of computational models.

Is computational problem solving the same as knowing a programming language?

A programming language is an artificial language used to write instructions (i.e. code) that can be executed by a computer. However, writing computer code requires many skills beyond knowing the syntax of a specific programming language. Effective programmers must be able to apply the general practices and concepts involved in computational thinking and problem solving. For example, programmers have to understand the problem at hand, explore how it can be simplified, and identify how it relates to other problems they have already solved. Thus, computational problem solving is a skillset that can be employed in different human endeavours, including programming. When employed in the context of programming, computational problem solving ensures that programmers can use their knowledge of a programming language to solve problems effectively and efficiently.

Students can develop computational problem solving skills without the use of a technical programming language (e.g. JavaScript, Python). In the PILA module, the focus is not on whether students can read or use a certain programming language, but rather on how well students can use computational problem solving skills and practices to solve problems (i.e. to “think” like a computer scientist).

How is computational problem solving assessed in PILA?

Computational problem solving is assessed in PILA by asking students to work through dynamic problems in open-ended digital environments where they have to interpret, design, or debug computer programs (i.e. sequences of code in a visual format). PILA provides ‘learning assessments’, which are assessment experiences that include resources and structured support (i.e. scaffolds) for learning. During these experiences, students iteratively develop programs using various forms of support, such as tutorials, automated feedback, hints and worked examples. The assessments are cumulative, asking students to use what they practiced in earlier tasks when completing successive, more complex tasks.

To ensure that the PILA module focuses on foundational computational problem solving skills and that the material is accessible to all secondary school students no matter their knowledge of programming languages, the module includes an assessment application, ‘Karel World’, that employs an accessible block-based visual programming language. Block-based environments prevent syntax errors while still retaining the concepts and practices that are foundational to programming. These environments work well to introduce novices to programming and help develop their computational problem solving skills, and can be used to generate a wide spectrum of problems from very easy to very hard.

What is assessed in the PILA module on computational problem solving?

Computational problem solving skills

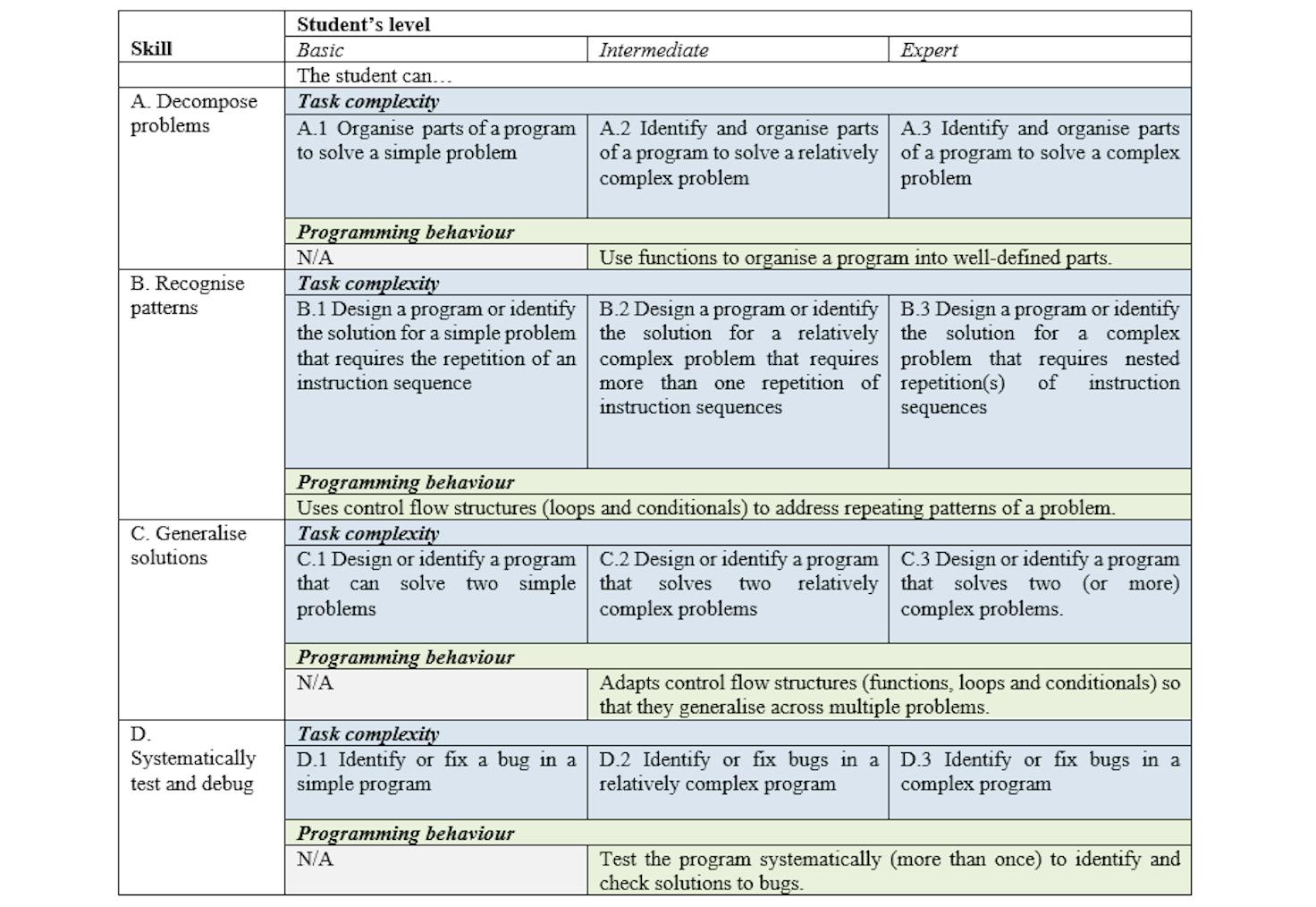

The module assesses the following set of complementary problem solving skills, which are distinct yet are often used together in order to create effective and efficient solutions to complex problems:

• Decompose problems

Decomposition is the act of breaking down a problem goal into a set of smaller, more manageable sub-goals that can be addressed individually. The sub-goals can be further broken down into more fine-grained sub-goals to reach the granularity necessary for solving the entire problem.

• Recognise and address patterns

Pattern recognition refers to the ability to identify elements that repeat within a problem and can thus be solved through the same operations. Addressing repeating patterns means instructing a computer to iterate given operations until the desired result is achieved. This requires identifying the repeating instructions and defining the conditions governing the duration of the repetition.

• Generalise solutions

Generalisation is the thinking process of identifying similarities or common differences across problems to define problem categories. Generalising a solution produces programs that work across similar problems through the use of ‘abstractions’, such as blocks of organised, reusable sequence(s) of instructions.

• Systematically test and debug

Solving a complex computational problem is an adaptive process that follows iterative cycles of ideation, testing, debugging, and further development. Computational problem solving involves systematically evaluating the state of one’s own work, identifying when and how a given operation requires fixing, and implementing the needed corrections.
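As a rough illustration of how the four skills above fit together, the following Python sketch solves a made-up task: counting the stones in a small grid world. The task, the grid representation, and the function names `count_row`, `count_world`, and `check` are assumptions chosen for illustration and are not taken from the PILA module, which uses a block-based language rather than Python.

```python
def count_row(row):
    """Sub-goal (decomposition): count the stones in one row.

    A row is assumed to be a list of per-cell stone counts.
    """
    total = 0
    for cell in row:  # repeating pattern: the same operation on every cell
        total += cell
    return total


def count_world(world):
    """Overall goal, built from the row-level sub-goal.

    Generalisation: the same function works for a world of any size,
    because it is written against the list-of-rows abstraction rather
    than one specific grid.
    """
    total = 0
    for row in world:
        total += count_row(row)
    return total


def check():
    """Systematic testing: start with the smallest cases, then combine."""
    assert count_row([]) == 0
    assert count_row([1, 0, 2]) == 3
    assert count_world([[1, 0], [0, 4], [2, 2]]) == 9
    return True
```

Testing the row-level function before the world-level one mirrors the iterative cycle described above: a failure in `count_world` after `count_row` has passed points to the composition step, not the sub-goal.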

Programming concepts

In order to apply these skills to the programming tasks presented in the module, students have to master the below set of programming concepts. These concepts can be isolated but are more often used in concert to solve computational problems:

• Sequences

Sequences are lists of step-by-step instructions that are carried out consecutively and specify the behavior or action that should be produced. In Karel World, for example, students learn to build a sequence of block commands to instruct a turtle to move around the world, avoiding barriers (e.g. walls) and performing certain actions (e.g. pick up or place stones).

• Conditionals

Conditional statements allow a specific set of commands to be carried out only if certain criteria are met. For example, in Karel World, the turtle can be instructed to pick up stones ‘if stones are present’.

• Loops

To create more concise and efficient instructions, loops can communicate an action or set of actions that are repeated under a certain condition. The repeat command indicates that a given action (e.g. place stone) should be repeated a fixed number of times (e.g. 9 times). A loop could also include a set of commands that repeat as long as a Boolean condition is true, such as ‘while stones are present’.

• Functions

Creating a function helps organise a program by abstracting longer, more complex pieces of code into one single step. By removing repetitive areas of code and assigning higher-level steps, functions make it easier to understand and reason about the various steps of the program, as well as facilitate its use by others. A simple example in Karel World is the function that instructs the turtle to ‘turn around’, which consists of turning left twice.
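The concepts described above (sequences, conditionals, loops, and functions) can be sketched in text form with a toy turtle written in Python. This is only an illustrative approximation: Karel World itself is block-based, and the `Turtle` class, its method names, and the coordinate conventions below are hypothetical, not the application's actual commands.

```python
from dataclasses import dataclass, field

# Headings listed so that each left turn advances one index:
# north -> west -> south -> east -> north.
DIRECTIONS = [(0, 1), (-1, 0), (0, -1), (1, 0)]


@dataclass
class Turtle:
    """Hypothetical stand-in for the turtle; not the Karel World API."""
    x: int = 0
    y: int = 0
    heading: int = 3  # index into DIRECTIONS; 3 means facing east
    picked: int = 0   # number of stones picked up so far
    stones: dict = field(default_factory=dict)  # (x, y) -> stone count

    def turn_left(self):
        self.heading = (self.heading + 1) % 4

    def move(self):
        dx, dy = DIRECTIONS[self.heading]
        self.x += dx
        self.y += dy

    def stones_present(self):
        return self.stones.get((self.x, self.y), 0) > 0

    def pick_stone(self):
        self.stones[(self.x, self.y)] -= 1
        self.picked += 1

    def place_stone(self):
        # Assumes an unlimited supply of stones to place.
        self.stones[(self.x, self.y)] = self.stones.get((self.x, self.y), 0) + 1


def turn_around(t):
    """Function: 'turn around' consists of turning left twice."""
    t.turn_left()
    t.turn_left()


def demo():
    t = Turtle(stones={(2, 0): 3})
    # Sequence: two consecutive moves east.
    t.move()
    t.move()
    # Conditional: pick up a stone only 'if stones are present'.
    if t.stones_present():
        t.pick_stone()
    # Condition-controlled loop: 'while stones are present'.
    while t.stones_present():
        t.pick_stone()
    # Function call, then a counted loop: repeat 'place stone' 9 times.
    turn_around(t)
    for _ in range(9):
        t.place_stone()
    return t
```

Wrapping the two left turns in `turn_around` is the kind of abstraction the text describes: the calling code reads as one higher-level step.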

How is student performance evaluated in the PILA module?

Student performance in the module is evaluated through rubrics. The rubrics are structured in levels that succinctly describe how students progress in their mastery of the computational problem solving skills and associated concepts. The levels in the rubric (see Table 1) are defined by the complexity of the problems that are presented to the students (simple, relatively complex or complex) and by the behaviours students are expected to exhibit while solving the problem (e.g., using functions, conducting tests). Each problem in the module is mapped to one or more skills (the rows in the rubric) and classified according to its complexity (the columns in the rubric). Solving a problem in the module and performing a set of expected programming operations thus provide evidence that supports the claims about the student presented in the rubric. The more problems at a given cell of the rubric the student solves, the more conclusive the evidence that the student has reached the level corresponding to that cell.

Please note: the rubric is updated as feedback is received from teachers on the clarity and usefulness of the descriptions.

Table 1. Rubric for computational problem solving skills
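The mapping of problems to rubric cells described above can be pictured as a simple tally. The sketch below is a guess at the bookkeeping only: the problem identifiers, the skill labels, and the `evidence_by_cell` function are invented for illustration, and the source does not describe how PILA actually stores or scores this evidence.

```python
from collections import Counter

# Hypothetical problem catalogue: each problem maps to one or more skills
# (rubric rows) and exactly one complexity level (rubric column).
PROBLEMS = {
    "p1": (["decompose"], "simple"),
    "p2": (["decompose", "patterns"], "relatively complex"),
    "p3": (["patterns"], "relatively complex"),
}


def evidence_by_cell(solved_ids, problems=PROBLEMS):
    """Count solved problems per (skill, complexity) rubric cell."""
    cells = Counter()
    for pid in solved_ids:
        skills, complexity = problems[pid]
        for skill in skills:
            cells[(skill, complexity)] += 1
    return cells
```

A higher count in a cell corresponds to the text's point that more solved problems at that cell give more conclusive evidence for that level. The sketch does not decide when a level counts as reached.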

Learning management skills

The performance of students on the PILA module depends not just on their mastery of computational problem solving skills and concepts, but also on their capacity to effectively manage their work in the digital learning environment. The complex tasks included in the module invite students to monitor, adapt and reflect on their understanding and progress. The assessment will capture data on students’ ability to regulate these aspects of their own work and will communicate to teachers the extent to which their students can:

• Use resources

PILA tasks provide resources such as worked examples that students can refer to as they build their own solution. Students use resources effectively when they recognise that they have a knowledge gap or need help after repeated failures and proceed to accessing a learning resource.

• Adapt to feedback

As students work through a PILA assessment, they receive different types of automated feedback (e.g. ‘not there yet’, ‘error: front is blocked’, ‘try using fewer blocks’). Students who can successfully adapt are able to perform actions that are consistent with the feedback, for example inserting a repetition block in their program after the feedback ‘try using fewer blocks’.

• Evaluate own performance

In the assessment experiences designed by experts in PILA, the final task is a complex, open challenge. Upon completion of this task, students are asked to evaluate their own performance and this self-assessment is compared with their actual performance on the task.

• Stay engaged

The assessment will also collect information on the extent to which students are engaged throughout the assessment experience. Evidence on engagement is collected through questions that are included in a survey at the end of the assessment, and through information on students’ use of time and number of attempts.

Learn about computational problem solving-related learning trajectories:

- Rich, K. M., Strickland, C., Binkowski, T. A., Moran, C., & Franklin, D. (2017). K-8 Learning Trajectories Derived from Research Literature: Sequence, Repetition, Conditionals. Proceedings of the 2017 ACM Conference on International Computing Education Research, 182–190.

- Rich, K. M., Strickland, C., Binkowski, T. A., & Franklin, D. (2019). A K-8 Debugging Learning Trajectory Derived from Research Literature. Proceedings of the 50th ACM Technical Symposium on Computer Science Education, 745–751. https://doi.org/10.1145/3287324.3287396

- Rich, K. M., Binkowski, T. A., Strickland, C., & Franklin, D. (2018). Decomposition: A K-8 Computational Thinking Learning Trajectory. Proceedings of the 2018 ACM Conference on International Computing Education Research - ICER ’18, 124–132. https://doi.org/10.1145/3230977.3230979

Learn about the connection between computational thinking and STEM education:

- Weintrop, D., Beheshti, E., Horn, M., Orton, K., Jona, K., Trouille, L., & Wilensky, U. (2015). Defining Computational Thinking for Mathematics and Science Classrooms. Journal of Science Education and Technology, 25(1), 127–147. doi:10.1007/s10956-015-9581-5

Learn how students apply computational problem solving to Scratch:

- Brennan, K., & Resnick, M. (2012). Using artifact-based interviews to study the development of computational thinking in interactive media design. Paper presented at annual American Educational Research Association meeting, Vancouver, BC, Canada.

© Organisation for Economic Co-operation and Development

Computational Mathematics Bachelor of Science Degree

RIT’s computational mathematics major emphasizes problem-solving using mathematical models to identify solutions in business, science, engineering, and more.

Accelerated Bachelor’s/Master’s Available

Co-op/Internship Encouraged

STEM-OPT Visa Eligible

Outcomes Rate of RIT Graduates from this degree

Average First-Year Salary of RIT Graduates from this degree

Ranking for Mathematicians on Best Business Jobs List, U.S. News & World Report, 2020

Overview for Computational Mathematics BS

Why Major in Computational Mathematics at RIT?

- Learn by Doing: Gain experience through an experiential learning component of the program approved by the School of Mathematical Sciences.

- Real World Experience: With RIT’s cooperative education and internship program you'll earn more than a degree. You’ll gain practical hands-on experience that sets you apart.

- Strong Career Paths: Recent computational mathematics graduates are employed at Carbon Black, iCitizen, Amazon, National Security Agency, KJT Group, Department of Defense, and Hewlett Packard.

What is Computational Mathematics?

Computational mathematics, or computational and applied mathematics, focuses on using numerical methods and algorithms to solve mathematical problems and perform mathematical computations with the aid of computers. It bridges the gap between theoretical mathematics and practical applications in various fields, including science, engineering, finance, and more.

RIT’s Computational Mathematics Major

The computational mathematics bachelor's degree combines the beauty and logic of mathematics with the application of today’s fastest and most powerful computers. At RIT, you get the solid foundation in both mathematics and computational methods that you need to be successful in the field or in graduate school.

RIT’s computational mathematics major uses computers as problem-solving tools to come up with mathematical solutions to real-world problems in engineering, operations research, economics, business, and other areas of science.

Computational Mathematics Degree Curriculum

The skills you learn in the computational mathematics degree can be applied to everyday life, from computing security and telecommunication networking to routes for school buses and delivery companies. The degree provides computational mathematics courses such as:

- Differential equations

- Graph theory

- Abstract and linear algebra

- Mathematical modeling

- Numerical analysis

Students are required to complete an experiential learning component of the program, as approved by the School of Mathematical Sciences. Students are encouraged to participate in research opportunities or cooperative education experiences. You will gain extensive computing skills through a number of high-level programming, system design, and other computer science courses.

Furthering Your Education in Computational Mathematics

Combined Accelerated Bachelor’s/Master’s Degrees

Today’s careers require advanced degrees grounded in real-world experience. RIT’s Combined Accelerated Bachelor’s/Master’s Degrees enable you to earn both a bachelor’s and a master’s degree in as little as five years of study, all while gaining the valuable hands-on experience that comes from co-ops, internships, research, study abroad, and more.

+1 MBA: Students who enroll in a qualifying undergraduate degree have the opportunity to add an MBA to their bachelor’s degree after their first year of study, depending on their program. Learn how the +1 MBA can accelerate your learning and position you for success.

Next Steps to Enroll

From deposit to housing, view the first five steps for accepted first-year students.

What’s next for accepted students

Careers and Experiential Learning

Cooperative Education

What’s different about an RIT education? It’s the career experience you gain by completing cooperative education and internships with top companies in every single industry. You’ll earn more than a degree. You’ll gain real-world career experience that sets you apart. It’s exposure–early and often–to a variety of professional work environments, career paths, and industries.

Co-ops and internships take your knowledge and turn it into know-how. Science co-ops include a range of hands-on experiences, from co-ops and internships and work in labs to undergraduate research and clinical experience in health care settings. These opportunities provide the hands-on experience that enables you to apply your scientific, math, and health care knowledge in professional settings while you make valuable connections between classwork and real-world applications.

Although cooperative education is optional for computational mathematics students, it may be used to fulfill the experiential learning component of the program. Students have worked in a variety of settings on problem-solving teams with engineers, biologists, computer scientists, physicists, and marketing specialists.

National Labs Career Events and Recruiting

The Office of Career Services and Cooperative Education offers National Labs and federally-funded Research Centers from all research areas and sponsoring agencies a variety of options to connect with and recruit students. Students connect with employer partners to gather information on their laboratories and explore co-op, internship, research, and full-time opportunities. These national labs focus on scientific discovery, clean energy development, national security, technology advancements, and more. Recruiting events include our university-wide Fall Career Fair, on-campus and virtual interviews, information sessions, 1:1 networking with lab representatives, and a National Labs Resume Book available to all labs.

Featured Profiles

A Beacon of Public Leadership at RIT Wins Newman Civic Fellowship

Student Nidhi Baindur was awarded a Newman Civic Fellowship for her role as a change-maker and public problem-solver at RIT.

Artificial Intelligence, Mathematics, and Designing Mini Protein Drugs

David Longo ’10 (computational math)

David Longo ’10, CEO of Ordaōs, shares his experience at RIT and explains how computational mathematics allowed him to think outside the box to come up with advanced solutions.

Computational Mathematics and a Future in Cryptography

Keegan Kresge ’22 (computational mathematics)

Keegan Kresge loves math and programming, making him the perfect fit for cryptography. After completing his degree in computational mathematics, he plans to work at the Department of Defense.

Curriculum for 2023-2024 for Computational Mathematics BS

Current Students: See Curriculum Requirements

Computational Mathematics, BS degree, typical course sequence

Please see General Education Curriculum (GE) for more information.

(WI) Refers to a writing intensive course within the major.

Please see Wellness Education Requirement for more information. Students completing bachelor's degrees are required to complete two different Wellness courses.

† Three of the program electives must be MATH or STAT courses with course numbers of at least 250, and either Graph Theory (MATH-351) or Numerical Linear Algebra (MATH-412) must be one of the three courses. Three of the program elective courses must be chosen from SWEN-261, MATH-305, ISTE-470, CMPE-570, EEEE-346, EEEE-547, (ISEE-301 or MATH-301), BIOL-235, BIOL-470, PHYS-377, ENGL-581, IGME-386, and CSCI courses numbered at least 250.

‡ Students will satisfy this requirement by taking either University Physics I (PHYS-211) and University Physics II (PHYS-212) or General & Analytical Chemistry I and Lab (CHMG-141/145) and General & Analytical Chemistry II and Lab (CHMG-142/146) or General Biology I and Lab (BIOL-101/103) and General Biology II and Lab (BIOL-102/104).

§ Students are required to complete an experiential learning component of the program: MATH-501 Experiential Learning Requirement in Mathematics, as approved by the School of Mathematics and Statistics. Students are urged to fulfill this requirement by participating in research opportunities or co-op experiences; students can also fulfill this requirement by taking MATH-500 Senior Capstone in Mathematics as a program elective.

Combined Accelerated Bachelor's/Master's Degrees

The curriculum below outlines the typical course sequence(s) for combined accelerated degrees available with this bachelor's degree.

Computational Mathematics, BS degree/Applied and Computational Mathematics (thesis option), MS degree, typical course sequence

Computational Mathematics, BS degree/Applied and Computational Mathematics (project option), MS degree, typical course sequence

Computational Mathematics, BS degree/Computer Science, MS degree, typical course sequence

Admissions and Financial Aid

This program is STEM designated when studying on campus and full time.

First-Year Admission

A strong performance in a college preparatory program is expected. This includes:

- 4 years of English

- 3 years of social studies and/or history

- 4 years of mathematics is required and must include algebra, geometry, algebra 2/trigonometry, and pre-calculus. Calculus is preferred.

- 2-3 years of science is required and must include chemistry or physics; both are recommended.

Transfer Admission

Transfer course recommendations without associate degree: Courses in liberal arts, physics, math, and chemistry

Appropriate associate degree programs for transfer: AS degree in liberal arts with math/science option

Learn How to Apply

Financial Aid and Scholarships

100% of all incoming first-year and transfer students receive aid.

RIT’s personalized and comprehensive financial aid program includes scholarships, grants, loans, and campus employment programs. When all these are put to work, your actual cost may be much lower than the published estimated cost of attendance.

Nathan Cahill

Nathaniel Barlow

Undergraduate Research Opportunities

Many students join research teams and engage in research projects starting as early as their first year. Participation in undergraduate research leads to the development of real-world skills, enhanced problem-solving techniques, and broader career opportunities. Our students have opportunities to travel to national conferences for presentations and also become contributing authors on peer-reviewed manuscripts. Explore the variety of mathematics and statistics undergraduate research projects happening across the university.

Latest News

July 20, 2023

AI has secured a footing in drug discovery. Where does it go from here?

PharmaVoice talks to David Longo ’10 (computational mathematics), CEO of drug design company Ordaos, about artificial intelligence in drug development.

June 20, 2023

Sign-Speak joins AWS Impact Accelerator

The Rochester Beacon features Nikolas Kelly '20 (supply chain management), co-founder and chief product officer of Sign-Speak, and Nicholas Wilkins '19 (computational mathematics), '19 MS (computer science).

May 17, 2023

RIT students awarded international fellowships and scholarships

Several RIT students from a variety of colleges and academic disciplines have been awarded prestigious international fellowships and scholarships.

Modeling a Problem-Solving Approach Through Computational Thinking for Teaching Programming


- Open access

- Published: 03 August 2020

Fostering computational thinking through educational robotics: a model for creative computational problem solving

- Morgane Chevalier (ORCID: orcid.org/0000-0002-9115-1992)

- Christian Giang

- Alberto Piatti

- Francesco Mondada

International Journal of STEM Education, volume 7, Article number: 39 (2020)


Educational robotics (ER) is increasingly used in classrooms to implement activities aimed at fostering the development of students’ computational thinking (CT) skills. Though previous works have proposed different models and frameworks to describe the underlying concepts of CT, very few have discussed how ER activities should be implemented in classrooms to effectively foster CT skill development. Particularly, there is a lack of operational frameworks, supporting teachers in the design, implementation, and assessment of ER activities aimed at CT skill development. The current study therefore presents a model that allows teachers to identify relevant CT concepts for different phases of ER activities and aims at helping them to appropriately plan instructional interventions. As an experimental validation, the proposed model was used to design and analyze an ER activity aimed at overcoming a problem that is often observed in classrooms: the trial-and-error loop, i.e., an over-investment in programming with respect to other tasks related to problem-solving.

Two groups of primary school students participated in an ER activity using the educational robot Thymio. While one group completed the task without any imposed constraints, the other was subjected to an instructional intervention developed based on the proposed model. The results suggest that (i) a non-instructional approach for educational robotics activities (i.e., unlimited access to the programming interface) promotes a trial-and-error behavior; (ii) a scheduled blocking of the programming interface fosters cognitive processes related to problem understanding, idea generation, and solution formulation; (iii) progressively adjusting the blocking of the programming interface can help students in building a well-settled strategy to approach educational robotics problems and may represent an effective way to provide scaffolding.

Conclusions

The findings of this study provide initial evidence on the need for specific instructional interventions on ER activities, illustrating how teachers could use the proposed model to design ER activities aimed at CT skill development. However, future work should investigate whether teachers can effectively take advantage of the model for their teaching activities. Moreover, other intervention hypotheses have to be explored and tested in order to demonstrate a broader validity of the model.

Introduction

Educational robotics (ER) activities are becoming increasingly popular in classrooms. Among others, ER activities have been praised for the development of important twenty-first century skills such as creativity (Eguchi, 2014; Negrini & Giang, 2019; Romero, Lepage, & Lille, 2017) and collaboration (Denis & Hubert, 2001; Giang et al., 2019). Due to its increasing popularity, ER is also often used to implement activities aimed at fostering CT skills of students. Such activities usually require students to practice their abilities in problem decomposition, abstraction, algorithm design, debugging, iteration, and generalization, representing six main facets of CT (Shute, Sun, & Asbell-Clarke, 2017). Indeed, previous works have argued that ER can be considered an appropriate tool for the development of CT skills (Bers, Flannery, Kazakoff, & Sullivan, 2014; Bottino & Chioccariello, 2014; Catlin & Woollard, 2014; Chalmers, 2018; Eguchi, 2016; Leonard et al., 2016; Miller & Nourbakhsh, 2016).

Nevertheless, studies discussing how to implement ER activities for CT skills development in classrooms still appear to be scarce. The latest meta-analyses carried out on ER and CT (Hsu, Chang, & Hung, 2018; Jung & Won, 2018; Shute et al., 2017) have mentioned only four works between 2006 and 2018 that elaborated how ER activities should be implemented in order to foster CT skills in K-5 education (particularly for grades 3 and 4, i.e., for students between 8 and 10 years old). Another recent work (Ioannou & Makridou, 2018) has shown that there are currently only nine empirical investigations at the intersection of ER and CT in K-12. Among the recommendations for researchers that were presented in this work, the authors stated that it is important to “work on a practical framework for the development of CT through robotics.” A different study (Atmatzidou & Demetriadis, 2016) has pointed out that there is a lack of “explicit teacher guidance on how to organize a well-guided ER activity to promote students’ CT skills.”

In the meta-analysis of Shute et al. (2017), the authors reviewed the state of the art of existing CT models and concluded that the existing models were inconsistent, causing “problems in designing interventions to support CT learning.” They therefore synthesized the information and proposed a new CT model represented by the six main facets mentioned above (Shute et al., 2017). Though the authors suggested that this model may provide a framework to guide assessment and support of CT skills, the question remains whether teachers can take advantage of such models and put them into practice. In order to support teachers in the design, implementation, and assessment of activities addressing these CT components, it can be presumed that more operational frameworks are needed. Particularly, such frameworks should provide ways to identify specific levers that teachers can adjust to promote the development of CT skills of students in ER activities.

To address this issue, the present work aims at providing an operational framework for ER activities that takes into consideration two main aspects of CT, computation and creativity, embedded in the context of problem-solving situations. The objective of the present study is to obtain a framework that allows teachers to effectively design ER activities for CT development, anticipate what could occur in class during the activities, and accordingly plan specific interventions. Moreover, such a framework could potentially allow teachers to assess the activities in terms of CT competencies developed by the students.

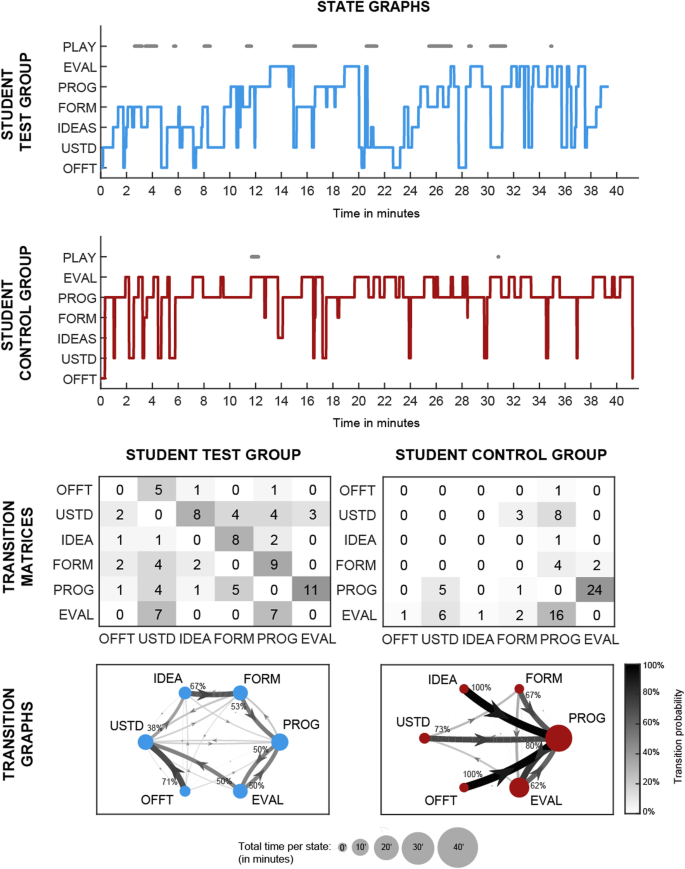

To verify the usefulness of the proposed framework, it has been used to design and analyze an ER activity aimed at overcoming a situation that is often observed in classrooms: the trial-and-error loop. It represents an over-investment in programming with respect to other problem-solving tasks during ER activities. In the current study, an ER activity has been developed and proposed to two groups of primary school pupils: a test group and a control group, each performing the same task under different experimental conditions. The students were recorded during the activity and the videos have been analyzed by two independent evaluators to study the effectiveness of instructional interventions designed according to the proposed framework and implemented in the experimental condition for the test group.

In the following section, past works at the intersection of ER and CT are summarized. This is followed by the presentation of the creative computational problem-solving (CCPS) model for ER activities aimed at CT skills development. Subsequently, the research questions addressed in this study are described, as well as the methods for the experimental validation of this study. This is followed by the presentation of the experimental results and a discussion on these findings. The paper finally concludes with a summary on the possible implications and the limitations of the study.

What three meta-analyses at the crossroads of ER and CT have shown

The idea of using robots in classrooms has a long history; indeed, much time has passed since the idea was first promoted by Papert in the late 1970s (Papert, 1980). On the other hand, the use of ER to foster CT skills development appears to be more recent: it was in 2006 that Jeannette Wing introduced the expression “computational thinking” to the educational context (Wing, 2006). It is therefore not surprising that only three meta-analyses have examined the studies conducted on this topic between 2006 and 2017 (Hsu et al., 2018; Jung & Won, 2018; Shute et al., 2017).

In the first meta-analysis (Jung & Won, 2018), Jung and Won describe a systematic and thematic review on existing ER literature (n = 47) for K-5 education. However, only four out of the 47 analyzed articles related ER to CT and only two of them (Bers et al., 2014; Sullivan, Bers, & Mihm, 2017) conveyed specific information about how to teach and learn CT, yet limited to K-2 education (with the Kibo robot and the TangibleK platform).

In a second meta-analysis (Hsu et al., 2018), Hsu et al. conducted a meta-review on CT in academic journals (n = 120). As a main result, the authors concluded that “CT has mainly been applied to activities of program design and computer science.” This focus on programming seems to be common and has been questioned before. Among others, it has been argued that CT competencies consist of more than just skills related to programming.

A similar conclusion was found in the third meta-analysis (Shute et al., 2017). Shute et al. conducted a review of the literature on CT in K-16 education (n = 45) and stated that “considering CT as knowing how to program may be too limiting.” Nevertheless, programming and CT seem to be interlinked. The authors suggested that “CT skills are not the same as programming skills (Ioannidou, Bennett, Repenning, Koh, & Basawapatna, 2011), but being able to program is one benefit of being able to think computationally (Israel, Pearson, Tapia, Wherfel, & Reese, 2015).”

According to the findings of these three recent meta-analyses, it appears that there is still a lack of studies focusing on how ER can be used for CT skills development. Except for two studies that specifically describe how to teach and learn CT with ER in K-2 education, operational frameworks guiding the implementation of ER activities for students, especially those aged between 8 and 10 years old (i.e., grades 3 and 4), are still scarce. It also emerges that in the past, activities aimed at CT development have focused too much on the programming aspects. However, CT competences go beyond pure coding skills, and ER activities should therefore be designed accordingly.

CT development with ER is more than just programming a robot

Because robots can be programmed, ER has often been considered a relevant medium for CT skill development. However, many researchers have also argued that CT is not only programming. As illustrated by Li et al. (2020), CT should be considered “as a model of thinking that is more about thinking than computing” (p. 4). In the work of Bottino and Chioccariello (2014), the authors recall what Papert claimed about the use of robots (Papert, 1980). Programming concrete objects such as robots supports students’ active learning, as robots can “provide immediate feedback and concept reification.” The programming activity is thus not the only one that is important for CT skills development. Instead, evaluating (i.e., testing and observing) can be considered equally important. In the 5c21 framework for CT competency of Romero et al. (2017), both activities therefore represent separate components: create a computer program (COMP5) and evaluation and iterative improvement (COMP6).

While the evaluation of a solution after programming appears to be natural for most ER activities, it seems that activities prior to programming often receive far less attention. Indeed, it is also relevant to explore what activities are required before programming, that is to say, before translating an algorithm into a programming language for execution by a robot. Several efforts have shown that different activities can be carried out before the programming activity (Giannakoulas & Xinogalos, 2018; Kazimoglu, Kiernan, Bacon, & MacKinnon, 2011, 2012). For instance, puzzle games such as Lightbot (Yaroslavski, 2014) can be used to convey basic concepts needed before programming (Giannakoulas & Xinogalos, 2018). In another work (Kazimoglu et al., 2012), a careful effort has been made to map the task to be done by the student (with a virtual bot) onto the cognitive activity it implies. This is how the authors supported the CT skills of students before programming. The integration of such instructional initiatives prior to programming is usually aimed at introducing fundamental concepts necessary for the programming activities. Indeed, code literacy (COMP3) and technological system literacy (COMP4) have been described as two other components in the framework of Romero et al. (2017), and they have been considered important prerequisites for the use of programmable objects.

But even if students meet these prerequisites, there are other important processes that they should go through prior to the creation of executable code. The two following components in the framework of Romero et al. are related to these processes: problem identification (COMP1) and organize and model the situation (COMP2). However, it appears that in the design of ER activities, these aspects are often not given enough attention. In a classroom environment, robots and computers often attract students’ attention to such an extent that the students tend to dive into a simple trial-and-error approach instead of developing proper solution strategies. Due to the prompt feedback of the machine, students receive an immediate validation of their strategy, reinforcing their perception of controllability (Viau, 2009), but this also causes them to easily enter a trial-and-error loop (Shute et al., 2017). In many different learning situations, however, researchers have shown that a pure trial-and-error approach may limit skill development (Antle, 2013; Sadik, Leftwich, & Nadiruzzaman, 2017; Tsai, Hsu, & Tsai, 2012). In the context of inquiry-based science learning, Bumbacher et al. have shown that students who were instructed to follow a Predict-Observe-Explain (POE) strategy, forcing them to take breaks between actions, gained better conceptual understanding than students who used the same manipulative environment without any instructions (Bumbacher, Salehi, Wieman, & Blikstein, 2018). The strategic use of pauses has also been investigated by Perez et al. (2017) in the context of students who worked with a virtual lab representing a DC circuit construction kit. The authors argued that strategic pauses can represent opportunities for reflection and planning and are highly associated with productive learning. A similar approach has been discussed by Dillenbourg (2013), who introduced a paper token to a tangible platform to prevent students from running simulations without reflection. Only when students gave the teacher a satisfactory answer about the predicted behavior of the platform were they given the paper token to execute the simulations.

However, to this day such instructional interventions have not been applied to activities involving ER. As a matter of fact, many ER activities are conducted without any specific instructional guidance.

As elaborated before, the development of CT skills with ER should involve students in different phases that occur prior as well as after the creation of programming code. While most of the time, the evaluation of a solution after programming appears to be natural, the phases required prior to programming are usually less emphasized. These preceding phases, however, incorporate processes related to many important facets of CT and should therefore be equally addressed. The following section therefore introduces a model for ER activities that allows teachers to identify all relevant phases related to different CT skills. Based on this model, teachers may accordingly plan instructional interventions to foster the development of such CT skills.

The CCPS model

Educational robotics systems for the development of CT skills

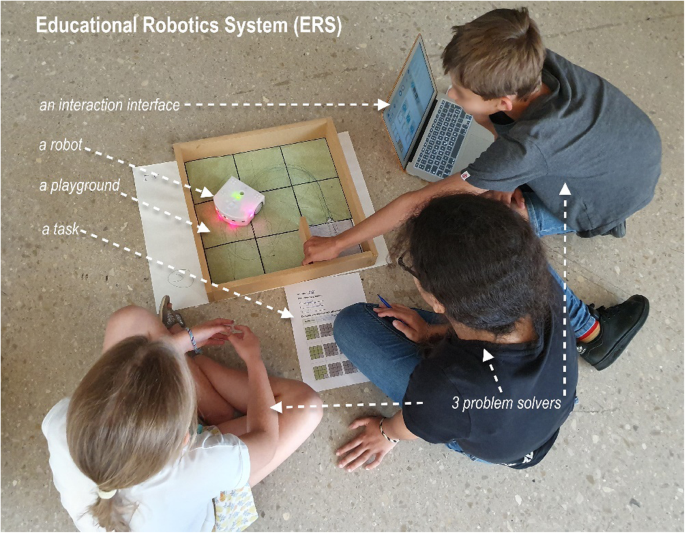

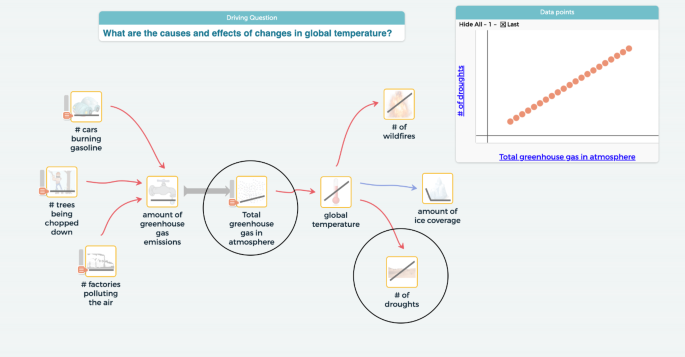

Educational robotics activities are typically based on three main components: one or more educational robots, an interaction interface allowing the user to communicate with the robot, and one or more tasks to be solved on a playground (Fig. 1).

Fig. 1 Example of an educational robotics (ER) activity. The figure exemplifies a typical situation encountered in ER activities. One or more problem solvers work on a playground and confront a problem situation involving an Educational Robotics System (ERS), consisting of one or more robots, an interaction interface, and one or more tasks to be solved

This set of components is fundamental to any kind of ER activity and has been previously referred to as an Educational Robotics System (ERS) by Giang, Piatti, and Mondada (2019). When an ERS is used for the development of CT skills, the given tasks are often formulated as open-ended problems that need to be solved. These problems are usually statements requiring the modification of a given perceptual reality (virtual or concrete) through a creative act in order to satisfy a set of conditions. In most cases, a playground relates to the environment (offering the range of possibilities) in which the problem is embedded. The modification can consist of the creation of a new entity or the realization of an event inside the playground, or of the acquisition of new knowledge about the playground itself. A modification that satisfies the conditions of the problem is called a solution. The problem solver is a human (or a group of humans) who is able to understand and interpret the given problem, to create ideas for its resolution, to use the interaction interface to transform these ideas into a behavior executed by the robot, and to evaluate the solution represented by the behavior of the robot. The language of the problem solver and the language of the robot are usually different. While the (human) problem solver’s language consists of natural languages (both oral and written), graphical or iconic representations, and other perceptual semiotic registers, the (artificial) language of the robot consists of formal languages (i.e., machine languages, binary code). Consequently, the problem should be stated in the problem solver’s language, while the solution has to be implemented in the robot’s language. For the problem solver, the robot’s language is a sort of foreign language that he/she should know in order to communicate with the robot. On the other hand, the robot usually does not communicate directly with the problem solver but generates a modification of the playground that the problem solver can perceive through his/her senses. To facilitate the interaction, the robot embeds a sort of translator between the robot’s language and the problem solver’s language. Indeed, graphical or text programming languages allow part of the language of the robot to be shown and written in iconic representations that can be directly perceived by the problem solver.

Combining creative problem solving and computational problem solving

It has often been claimed that CT is a competence related to the process of problem solving that is contextualized in “computational” situations (Barr & Stephenson, 2011; Dierbach, 2012; Haseski, Ilic, & Tugtekin, 2018; Perkovic, Settle, Hwang, & Jones, 2010; Weintrop et al., 2016). These processes involve in particular the understanding of a given problem situation, the design of a solution, and the implementation in executable code. At the same time, some researchers have pointed out that the development of CT competencies also involves a certain creative act (Brennan & Resnick, 2012; DeSchryver & Yadav, 2015; Repenning et al., 2015; Romero et al., 2017; Shute et al., 2017). This perspective refers to creative problem solving which involves “a series of distinct mental operations such as collecting information, defining problems, generating ideas, developing solutions, and taking action” (Puccio, 1999). In a different context, creative problem solving has been described as a cooperative iterative process (Lumsdaine & Lumsdaine, 1994) involving different persons with different mindsets and thinking modes and consisting of five phases: problem definition (detective and explorer), idea generation (artist), idea synthesis (engineer), idea evaluation (judge), and solution implementation (producer) (Lumsdaine & Lumsdaine, 1994).

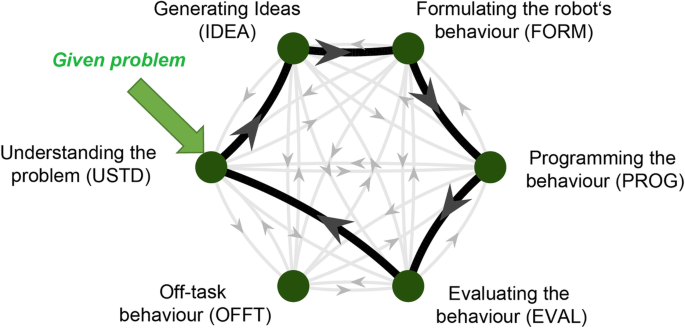

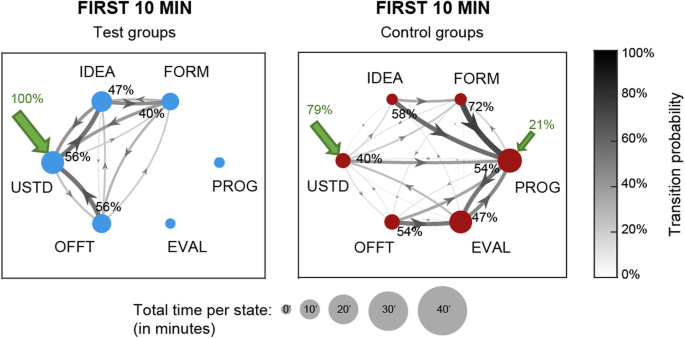

The creative computational problem solving (CCPS) model presented in the current study represents a hybrid model combining these two perspectives and adapting them to the context of ERS. Similar to the model of Lumsdaine and Lumsdaine (1994), the proposed model involves the definition of different phases and iterations. However, while Lumsdaine and Lumsdaine’s model describes the interactions between different human actors, each taking a specific role in the problem-solving process, this model considers the fact that different human actors interact with one or more artificial actors, i.e., the robot(s), to implement the solution. The CCPS model is a structure of five phases, in which transitions are possible, at any moment, from any phase to any other (Fig. 2).

Phases and transitions of the CCPS model. The graph illustrates the six different phases (green dots) that students pass through when working on ER activities and all the possible transitions between them (gray arrows). The theoretically most efficient problem-solving cycle is highlighted in black. The cycle usually starts with a given problem situation that needs first to be understood by the problem solver (green arrow)

The first three phases of the model can be related to the initial phases of the creative problem-solving model presented in the work of Puccio (1999): understanding the problem, generating ideas, and planning for action (i.e., solution finding, acceptance-finding). While the first two phases (understanding the problem and generating ideas) are very similar to Puccio’s model, the third phase in this model (formulating the robot’s behavior) is influenced by the fact that the action should be performed by an artificial agent (i.e., a robot). On the other hand, the last two phases of this model can be related to computational problem solving: the fourth phase (programming the behavior) describes the creation of executable code for the robot and the fifth phase (evaluating the solution) consists in the evaluation of the execution of the code (i.e., the robot’s behavior).

The phases of the CCPS model

Based on the conceptual framework of ERS (Giang, Piatti, & Mondada, 2019), the CCPS model describes the different phases that students should go through when ERS is used for CT skills development (Fig. 2).

It is a structure of five main phases that theoretically, in the most effective case, are completed one after the other and then repeated iteratively.

Understanding the problem (USTD)

In this phase, the problem solver identifies the given problem (see COMP1 in the 5c21 framework of Romero et al. (2017)) through abstraction and decomposition (Shute et al., 2017) in order to identify the desired modification of the playground. Here, abstraction is considered as the process of “identifying and extracting relevant information to define main ideas” (Hsu et al., 2018). This phase takes as input the given problem situation, usually expressed in the language of the problem solver (e.g., natural language, graphical representations). The completion of this phase is considered successful if the problem solver identifies an unambiguous transformation of the playground that has to be performed by the robot. The output of the phase is the description of the required transformation of the playground.

Generating ideas (IDEA)

The problem solver sketches one or more behavior ideas for the robot that could satisfy the conditions given in the problem, i.e., modify the playground in the desired way. This phase requires a creative act, i.e., “going from intention to realization” (Duchamp, 1967). The input to this phase is the description of the transformation of the playground that has to be performed by the robot. The phase is completed successfully when one or more behaviors are sketched that have the potential of inducing the desired transformation of the playground. The sketches of the different behaviors are the output of this phase.

Formulating the behavior (FORM)

A behavior idea is transformed into a formulation of the robot’s behavior while considering the physical constraints of the playground and by mobilizing the knowledge related to the characteristics of the robot (see COMP4 in Romero et al. (2017)). To do so, the problem solver has to organize and model the situation efficiently (as in COMP2 in Romero et al. (2017)). The input to this phase is the sketch of a behavior, selected among those produced in the preceding phase. The phase is performed successfully when the behavior sketch is transformed into a complete formulation of the robot’s behavior. The behavior formulation is expressed as algorithms in the problem solver’s language, describing “logical and ordered instructions for rendering a solution to the problem” (Shute et al., 2017). This is considered the output of this phase.

Programming the behavior (PROG)

In this phase, the problem solver creates a program (see COMP5 in Romero et al. (2017)) to transform the behavior formulation into a behavior expressed by the robot. Prerequisites for succeeding in this phase are the necessary computer science literacy and the knowledge of the specific programming language of the robot or its interface, respectively (see COMP3 in Romero et al. (2017)). Moreover, this phase serves for debugging (Shute et al., 2017), allowing the problem solver to revise previous implementations. The input to this phase is the robot’s behavior expressed in the problem solver’s language. The phase is performed successfully when the formulated behavior of the robot is completely expressed in the robot’s language and executed. The output of this phase is the programmed behavior in the robot’s language and its execution so that, once the robot is introduced to the playground, it results in a transformation of the playground.

Evaluating the solution (EVAL)

While the robot performs a modification of the playground according to the programmed behavior, the problem solver observes the realized modification of the playground and evaluates its correspondence to the conditions of the problem and its adequacy in general. As described in Lumsdaine and Lumsdaine (1994), the problem solver acts as a “judge” in this phase. The input to this phase is the transformation of the playground observed by the problem solver. The observed transformation is compared with the conditions expressed in the given problem. Then, the problem solver has to decide if the programmed behavior can be considered an appropriate solution of the problem, or if it has to be refined, corrected, or completely redefined. This phase is therefore crucial to identify the next step of iteration (see Shute et al. (2017) and COMP2 in Romero et al. (2017)). As a result, the transitions in the CCPS model can either be terminated or continued through a feedback transition to one of the other phases.
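The five phase descriptions above each name an input and an output, and the output of one phase serves as the input of the next. The following minimal sketch records that chain as a small data structure. The class name `Phase`, the list name `CCPS_PIPELINE`, and the short artifact labels are illustrative assumptions that paraphrase the text; they are not part of the original model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Phase:
    code: str      # phase abbreviation used in the text
    consumes: str  # paraphrased input of the phase
    produces: str  # paraphrased output of the phase


# Labels are shortened paraphrases of the phase descriptions (assumption).
CCPS_PIPELINE = [
    Phase("USTD", "problem situation", "required transformation"),
    Phase("IDEA", "required transformation", "behavior sketches"),
    Phase("FORM", "behavior sketches", "behavior formulation"),
    Phase("PROG", "behavior formulation", "executed program"),
    Phase("EVAL", "executed program", "decision to stop or iterate"),
]


def chain_is_consistent(phases):
    """Check that each phase consumes what the preceding phase produces."""
    return all(a.produces == b.consumes for a, b in zip(phases, phases[1:]))


if __name__ == "__main__":
    for phase in CCPS_PIPELINE:
        print(f"{phase.code}: {phase.consumes} -> {phase.produces}")
    print("consistent chain:", chain_is_consistent(CCPS_PIPELINE))
```

Writing the phases this way makes the sequential dependency explicit: skipping a phase means that the next one starts without the artifact it is supposed to work on.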

Finally, an additional sixth phase, called off-task behavior (OFFT), was included to account for situations where the problem solver is not involved in the problem-solving process. This phase was not considered a priori; however, the experiments with students showed that off-task behavior is part of the reality in classrooms and should therefore be included in the model. Moreover, in reality, transitions between phases do not necessarily occur in the order presented. Therefore, the model also accounts for transitions between non-adjacent phases as well as for transitions into the off-task behavior phase (light arrows in Fig. 2). In order to facilitate the presentation of these transitions, the matrix representation of the model is introduced hereafter (Fig. 3).

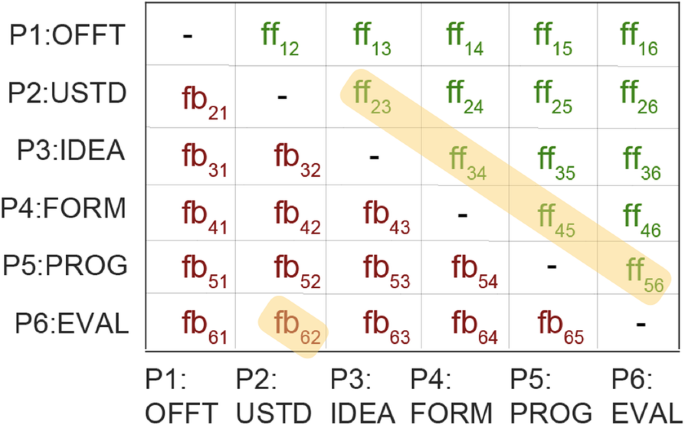

Matrix representation of the CCPS model. The figure depicts all phases of the CCPS model and transitions between them using a matrix representation. The rows i of the matrix describe the phases from which a transition is outgoing, while the columns j describe the phases towards which the transition is made (e.g., ff_23 describes the transition from the phase USTD towards the phase IDEA). In this representation, feedforward transitions (i.e., transitions from a phase to one of the subsequent ones) are on the upper triangular matrix (green). Feedback transitions (i.e., transitions from one phase to one of the preceding ones) are on the lower triangular matrix (red). Self-transitions (i.e., remaining in a phase) are not considered in this representation (dashes). The theoretically most efficient problem-solving cycle is highlighted in yellow (ff_23–ff_34–ff_45–ff_56–fb_62)

In this representation, a feedforward (ff) is the transition from a phase to any of the subsequent ones and a feedback (fb) is the transition from a phase to any of the preceding ones. Consequently, ff_ij denotes the feedforward from phase i to phase j, where i < j, and fb_ij denotes the feedback from phase i to phase j, where j < i. With six states, there are in theory 15 possible feedforward (upper triangular matrix) and 15 possible feedback transitions (lower triangular matrix), as represented in Fig. 3. Although some of the transitions seem meaningless, they might nevertheless be observed in reality and are therefore kept in the model. For instance, it seems that the transition ff_36 would not be possible, since a solution can only be evaluated if it has been programmed. However, especially in the context of group work, it might be possible that a generated idea is immediately followed by the evaluation of a solution that was implemented by another student going through the programming phase. Finally, it can be assumed that feedback transitions usually respond to instabilities in previous phases. In this model, special emphasis is therefore placed on the cycle considering the transitions ff_23-ff_34-ff_45-ff_56-fb_62 (highlighted in yellow in Fig. 3), which, as presented before, corresponds to the theoretically most efficient cycle within the CCPS model.
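The counting argument above can be checked with a short sketch. It assumes the phase numbering implied by the transition labels in the text (USTD = 2, IDEA = 3, EVAL = 6); the positions of OFFT (1), FORM (4), and PROG (5) are inferred by elimination and from the order of the phases. The function name `label` and the label format without separator (e.g., `ff23`) are illustrative choices.

```python
from itertools import permutations

# Phase numbering inferred from the transition labels in the text (assumption).
PHASES = {1: "OFFT", 2: "USTD", 3: "IDEA", 4: "FORM", 5: "PROG", 6: "EVAL"}


def label(i: int, j: int) -> str:
    """Return 'ff<i><j>' for a feedforward (i < j) or 'fb<i><j>' for a feedback (j < i)."""
    if i == j:
        raise ValueError("self-transitions are not part of the representation")
    return f"{'ff' if i < j else 'fb'}{i}{j}"


# All ordered pairs of distinct phases, i.e., every off-diagonal matrix cell.
transitions = [label(i, j) for i, j in permutations(PHASES, 2)]
feedforward = [t for t in transitions if t.startswith("ff")]
feedback = [t for t in transitions if t.startswith("fb")]

# Theoretically most efficient cycle: USTD -> IDEA -> FORM -> PROG -> EVAL -> USTD.
ideal_path = [2, 3, 4, 5, 6, 2]
ideal_cycle = [label(i, j) for i, j in zip(ideal_path, ideal_path[1:])]

if __name__ == "__main__":
    print(len(feedforward), "feedforward and", len(feedback), "feedback transitions")
    print("most efficient cycle:", "-".join(ideal_cycle))
```

With six phases there are 6 × 5 = 30 ordered pairs of distinct phases, half above and half below the diagonal, which matches the 15 feedforward and 15 feedback transitions stated in the text. The closing step from EVAL back to USTD goes from phase 6 to phase 2 and is therefore a feedback.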

Research question

As presented before, one common situation encountered in ER activities is the trial-and-error loop that in the CCPS model corresponds to an over-emphasis on feedforward and feedback transitions between PROG and EVAL phases. However, this condition might not be the most favorable for CT skill development, since the remaining phases (USTD, IDEA, and FORM) of the CCPS model are neglected. Consequently, this leads to the following research question: In a problem-solving activity involving educational robotics, how can the activation of all the CT processes related to the CCPS model be encouraged? In order to foster transitions towards all phases, the current study suggests expressly generating a USTD-IDEA-FORM loop upstream so that students do not enter the PROG-EVAL loop without being able to leave it. To do so, a temporary blocking of the PROG phase (i.e., blocking the access to the programming interface) is proposed as an instructional intervention. Based on the findings of similar approaches implemented for inquiry-based learning (Bumbacher et al., 2018; Dillenbourg, 2013; Perez et al., 2017), the main idea is to introduce strategic pauses for the students to reinforce the three phases preceding the PROG phase. However, creating one loop to replace another is not a sustainable solution. With time, it is also important to adjust the instructional intervention into a “partial blocking,” so that students can progressively advance in the problem-solving process. At a later stage, students should therefore be allowed to use the programming interface (i.e., enter the PROG phase); however, they should not be allowed to run their code on the robot, to prevent them from entering the trial-and-error loop between PROG and EVAL. Based on these instructional interventions, the current study aims at addressing the following research sub-questions:

Does a non-instructional approach for ER activities (i.e., unlimited access to the programming interface) promote a trial-and-error approach?

Does a blocking of the programming interface foster cognitive processes related to problem understanding, idea generation, and solution formulation? Does a partial blocking (i.e., the possibility to use the programming interface without executing the code on the robot) help students to gradually advance in the problem-solving process?

The resulting operational hypotheses are as follows:

Compared to the control group, the students subject to the blocking of the programming interface (total then partial) will activate all the CT processes of the CCPS model.

Compared to the test group, the students not subject to the blocking of the programming interface will mostly activate the PROG-EVAL phases of the CT processes of the CCPS model.

To test these hypotheses, an experiment was set up with test groups that were subject to blocking of the programming interface and control groups that had free access to it.
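One way to make the trial-and-error hypothesis concrete is to count transitions in a coded sequence of phases and to compute the share that falls into the PROG-EVAL loop. The sketch below is only an illustration under stated assumptions: the two phase sequences are invented for the example, and the function names `transition_counts` and `prog_eval_share` are hypothetical. It does not reproduce the analysis procedure of the study.

```python
from collections import Counter


def transition_counts(sequence):
    """Count transitions between consecutive phases, ignoring self-transitions."""
    return Counter((a, b) for a, b in zip(sequence, sequence[1:]) if a != b)


def prog_eval_share(sequence):
    """Fraction of all transitions that go from PROG to EVAL or from EVAL to PROG."""
    counts = transition_counts(sequence)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    loop = counts[("PROG", "EVAL")] + counts[("EVAL", "PROG")]
    return loop / total


# Invented example sequences (not data from the study).
trial_and_error = ["USTD", "PROG", "EVAL", "PROG", "EVAL", "PROG", "EVAL"]
full_cycle = ["USTD", "IDEA", "FORM", "PROG", "EVAL",
              "USTD", "IDEA", "FORM", "PROG", "EVAL"]

if __name__ == "__main__":
    print(f"trial-and-error share: {prog_eval_share(trial_and_error):.2f}")
    print(f"full-cycle share:      {prog_eval_share(full_cycle):.2f}")
```

In the invented trial-and-error sequence, five of the six transitions stay within the PROG-EVAL loop, whereas in the full-cycle sequence only two of nine do. A measure of this kind would distinguish the two behaviors described by the hypotheses.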

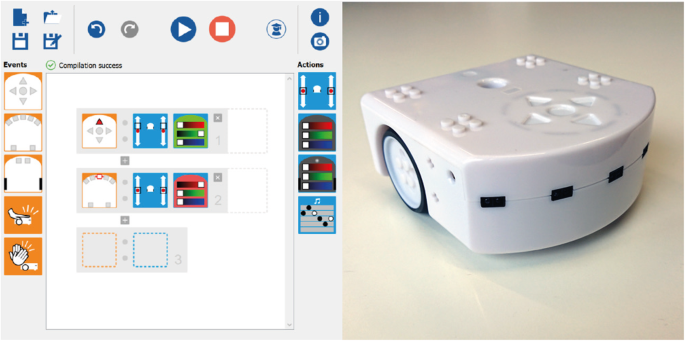

The proposed CCPS model was evaluated in a research study with 29 primary school students (for details see “Participants” subsection). In groups of 2–3 students, the participants were asked to solve the robot lawnmower mission with the Thymio robot. In this activity, the robot has to be programmed in such a way that it drives autonomously around a lawn area, covering as much of the area as possible. Based on the CCPS model and the presented instructional interventions, two different experimental conditions were implemented for the activity, and the students were randomly and equally assigned to each condition. The activities of all groups were recorded on video, and the recordings were subsequently analyzed by two independent evaluators.

The robot lawnmower mission

The playground of the robot lawnmower mission consists of a fenced lawn area of 45 cm × 45 cm (Fig. 4).

Playground of the robot lawnmower mission with Thymio. The playground consists of a wooden fence surrounding an area (45 cm × 45 cm) representing the lawn. One of the nine squares represents the garage, the starting position of the mission (left). The task is to program the Thymio robot so that it passes over all eight lawn squares while avoiding any collisions with the fence (right)