
IEEE/CAA Journal of Automatica Sinica

- JCR Impact Factor: 11.8, Top 4% (SCI Q1); CiteScore: 17.6, Top 3% (Q1); Google Scholar h5-index: 77, Top 5

Advancements in Humanoid Robots: A Comprehensive Review and Future Prospects

DOI: 10.1109/JAS.2023.124140

- Yuchuang Tong

- Haotian Liu

- Zhengtao Zhang

Yuchuang Tong (Member, IEEE) received the Ph.D. degree in mechatronic engineering from the State Key Laboratory of Robotics, Shenyang Institute of Automation (SIA), Chinese Academy of Sciences (CAS) in 2022. She is currently an Assistant Professor with the Institute of Automation, Chinese Academy of Sciences. Her research interests include humanoid robots, robot control, and human-robot interaction. Dr. Tong has authored more than ten publications in journals and conference proceedings in these areas. She received the Best Paper Award at the 2020 International Conference on Robotics and Rehabilitation Intelligence, the Dean’s Award for Excellence of CAS, and the CAS Outstanding Doctoral Dissertation award.

Haotian Liu received the B.Sc. degree in traffic equipment and control engineering from Central South University in 2021. He is currently a Ph.D. candidate in control science and control engineering at the CAS Engineering Laboratory for Industrial Vision and Intelligent Equipment Technology, Institute of Automation, Chinese Academy of Sciences (IACAS) and University of Chinese Academy of Sciences (UCAS). His research interests include robotics, intelligent control, and machine learning.

Zhengtao Zhang (Member, IEEE) received the B.Sc. degree in automation from the China University of Petroleum in 2004, the M.Sc. degree in detection technology and automatic equipment from the Beijing Institute of Technology in 2007, and the Ph.D. degree in control science and engineering from the Institute of Automation, Chinese Academy of Sciences in 2010. He is currently a Professor with the CAS Engineering Laboratory for Industrial Vision and Intelligent Equipment Technology, IACAS. His research interests include industrial vision inspection and intelligent robotics.

- Corresponding author: Yuchuang Tong, e-mail: [email protected] ; Zhengtao Zhang, e-mail: [email protected]

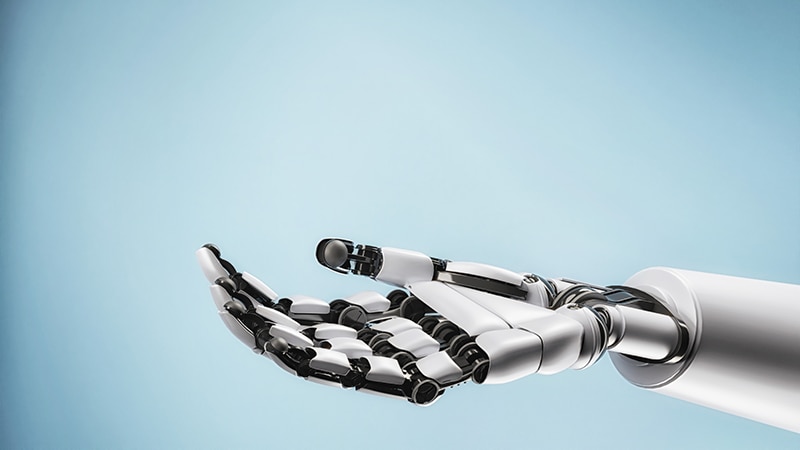

- Accepted Date: 2023-11-26

This paper provides a comprehensive review of the current status, advancements, and future prospects of humanoid robots, highlighting their significance in driving the evolution of next-generation industries. By analyzing various research endeavors and key technologies, encompassing body structure, control and decision-making, and perception and interaction, a holistic overview of the current state of humanoid robot research is presented. Furthermore, emerging challenges in the field are identified, emphasizing the necessity for a deeper understanding of biological motion mechanisms, improved structural design, enhanced material applications, advanced drive and control methods, and efficient energy utilization. The integration of bionics, brain-inspired intelligence, mechanics, and control is underscored as a promising direction for the development of advanced humanoid robotic systems. This paper serves as an invaluable resource, offering insightful guidance to researchers in the field, while contributing to the ongoing evolution and potential of humanoid robots across diverse domains.

Keywords:

- Future trends and challenges

- humanoid robots

- human-robot interaction

- key technologies

- potential applications


Figures (7) / Tables (5)


- The current state, advancements and future prospects of humanoid robots are outlined

- Fundamental techniques including structure, control, learning and perception are investigated

- This paper highlights the potential applications of humanoid robots

- This paper outlines future trends and challenges in humanoid robot research

- Copyright © 2022 IEEE/CAA Journal of Automatica Sinica


- E-mail: [email protected] Tel: +86-10-82544459, 10-82544746

- Address: 95 Zhongguancun East Road, Haidian District, Beijing 100190, China


- Figure 1. Historical progression of humanoid robots.

- Figure 2. The mapping knowledge domain of humanoid robots. (a) Co-citation analysis; (b) Country and institution analysis; (c) Cluster analysis of keywords.

- Figure 3. Number of papers published each year.

- Figure 4. Research status of humanoid robots.

- Figure 5. Comparison of child-size and adult-size humanoid robots.

- Figure 6. Potential applications of humanoid robots.

- Figure 7. Key technologies of humanoid robots.


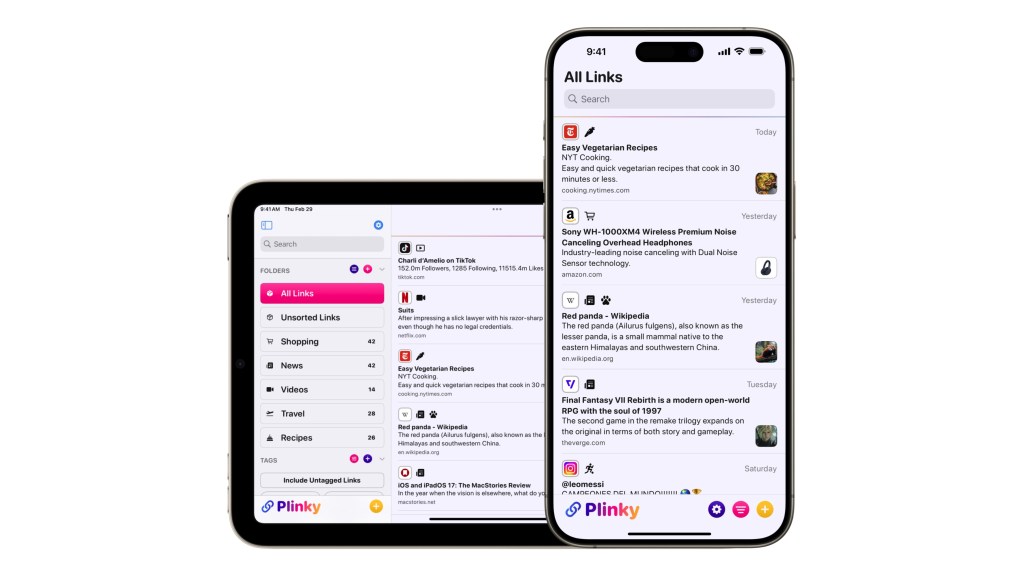

Ieee spectrum, follow ieee spectrum, support ieee spectrum, enjoy more free content and benefits by creating an account, saving articles to read later requires an ieee spectrum account, the institute content is only available for members, downloading full pdf issues is exclusive for ieee members, downloading this e-book is exclusive for ieee members, access to spectrum 's digital edition is exclusive for ieee members, following topics is a feature exclusive for ieee members, adding your response to an article requires an ieee spectrum account, create an account to access more content and features on ieee spectrum , including the ability to save articles to read later, download spectrum collections, and participate in conversations with readers and editors. for more exclusive content and features, consider joining ieee ., join the world’s largest professional organization devoted to engineering and applied sciences and get access to all of spectrum’s articles, archives, pdf downloads, and other benefits. learn more →, join the world’s largest professional organization devoted to engineering and applied sciences and get access to this e-book plus all of ieee spectrum’s articles, archives, pdf downloads, and other benefits. learn more →, access thousands of articles — completely free, create an account and get exclusive content and features: save articles, download collections, and talk to tech insiders — all free for full access and benefits, join ieee as a paying member., humanoid robots are getting to work, humanoids from agility robotics and seven other companies vie for jobs.

Agility Robotics’ Digit carries an empty tote to a conveyor in an Amazon research and development warehouse.

Ten years ago, at the DARPA Robotics Challenge (DRC) Trial event near Miami, I watched the most advanced humanoid robots ever built struggle their way through a scenario inspired by the Fukushima nuclear disaster. A team of experienced engineers controlled each robot, and overhead safety tethers kept them from falling over. The robots had to demonstrate mobility, sensing, and manipulation—which, with painful slowness, they did.

These robots were clearly research projects, but DARPA has a history of catalyzing technology with a long-term view. The DARPA Grand and Urban Challenges for autonomous vehicles, in 2005 and 2007, formed the foundation for today’s autonomous taxis. So, after the DRC ended in 2015 with several of the robots successfully completing the entire final scenario, the obvious question was: When would humanoid robots make the transition from research project to commercial product?

This article is part of our special report Top Tech 2024.

The answer seems to be 2024, when a handful of well-funded companies will be deploying their robots in commercial pilot projects to figure out whether humanoids are really ready to get to work.

One of the robots that made an appearance at the DRC Finals in 2015 was called ATRIAS, developed by Jonathan Hurst at the Oregon State University Dynamic Robotics Laboratory. In 2015, Hurst cofounded Agility Robotics to turn ATRIAS into a human-centric, multipurpose, and practical robot called Digit. Approximately the same size as a human, Digit stands 1.75 meters tall (about 5 feet, 9 inches), weighs 65 kilograms (about 140 pounds), and can lift 16 kg (about 35 pounds). Agility is now preparing to produce a commercial version of Digit at massive scale, and the company sees its first opportunity in the logistics industry, where Digit will start doing some of the jobs in which humans are essentially acting like robots already.

Are humanoid robots useful?

“We spent a long time working with potential customers to find a use case where our technology can provide real value, while also being scalable and profitable,” Hurst says. “For us, right now, that use case is moving e-commerce totes.” Totes are standardized containers that warehouses use to store and transport items. As items enter or leave the warehouse, empty totes need to be continuously moved from place to place. It’s a vital job, and even in highly automated warehouses, much of that job is done by humans.

Agility says that in the United States, there are currently several million people working at tote-handling tasks, and logistics companies are having trouble keeping positions filled, because in some markets there are simply not enough workers available. Furthermore, the work tends to be dull, repetitive, and stressful on the body. “The people doing these jobs are basically doing robotic jobs,” says Hurst, and Agility argues that these people would be much better off doing work that’s more suited to their strengths. “What we’re going to have is a shifting of the human workforce into a more supervisory role,” explains Damion Shelton, Agility Robotics’ CEO. “We’re trying to build something that works with people,” Hurst adds. “We want humans for their judgment, creativity, and decision-making, using our robots as tools to do their jobs faster and more efficiently.”

For Digit to be an effective warehouse tool, it has to be capable, reliable, safe, and financially sustainable for both Agility and its customers. Agility is confident that all of this is possible, citing Digit’s potential relative to the cost and performance of human workers. “What we’re encouraging people to think about,” says Shelton, “is how much they could be saving per hour by being able to allocate their human capital elsewhere in the building.” Shelton estimates that a typical large logistics company spends at least US $30 per employee-hour for labor, including benefits and overhead. The employee, of course, receives much less than that.

Agility is not yet ready to provide pricing information for Digit, but we’re told that it will cost less than $250,000 per unit. Even at that price, if Digit achieves Agility’s goal of a minimum of 20,000 working hours (five years of two shifts of work per day), the robot’s hourly rate comes to $12.50. A service contract would likely add a few dollars per hour to that. “You compare that against human labor doing the same task,” Shelton says, “and as long as it’s apples to apples in terms of the rate that the robot is working versus the rate that the human is working, you can decide whether it makes more sense to have the person or the robot.”
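The $12.50 figure is straight-line amortization: purchase price divided by lifetime working hours. A minimal sketch, assuming no financing, energy, downtime, or resale value; the 250 working days per year and 8-hour shifts are our assumptions for how "five years of two shifts of work per day" reaches 20,000 hours, and the $3/hour service fee is a hypothetical stand-in for "a few dollars per hour".

```python
def working_hours(years: int, days_per_year: int, shifts_per_day: int, hours_per_shift: int) -> int:
    """Total operating hours over the robot's service life."""
    return years * days_per_year * shifts_per_day * hours_per_shift


def amortized_hourly_cost(unit_price: float, lifetime_hours: int, service_per_hour: float = 0.0) -> float:
    """Purchase price spread evenly over lifetime hours, plus an optional per-hour service fee."""
    if lifetime_hours <= 0:
        raise ValueError("lifetime_hours must be positive")
    return unit_price / lifetime_hours + service_per_hour


# Assumption: 250 working days per year, two 8-hour shifts per day.
hours = working_hours(years=5, days_per_year=250, shifts_per_day=2, hours_per_shift=8)
print(hours)                                   # 20000
print(amortized_hourly_cost(250_000, hours))   # 12.5
# Hypothetical service contract adding $3 per hour:
print(amortized_hourly_cost(250_000, hours, service_per_hour=3.0))  # 15.5
```

Following Shelton's caveat, such an hourly figure is only comparable with a human labor rate when the robot and the person complete the task at the same pace.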

Agility’s robot won’t be able to match the general capability of a human, but that’s not the company’s goal. “Digit won’t be doing everything that a person can do,” says Hurst. “It’ll just be doing that one process-automated task,” like moving empty totes. In these tasks, Digit is able to keep up with (and in fact slightly exceed) the speed of the average human worker, when you consider that the robot doesn’t have to accommodate the needs of a frail human body.

Amazon’s experiments with warehouse robots

The first company to put Digit to the test is Amazon. In 2022, Amazon invested in Agility as part of its Industrial Innovation Fund, and late last year Amazon started testing Digit at its robotics research and development site near Seattle, Wash. Digit will not be lonely at Amazon—the company currently has more than 750,000 robots deployed across its warehouses, including legacy systems that operate in closed-off areas as well as more modern robots that have the necessary autonomy to work more collaboratively with people. These newer robots include autonomous mobile robotic bases like Proteus, which can move carts around warehouses, as well as stationary robot arms like Sparrow and Cardinal, which can handle inventory or customer orders in structured environments. But a robot with legs will be something new.

“What’s interesting about Digit is because of its bipedal nature, it can fit in spaces a little bit differently,” says Emily Vetterick, director of engineering at Amazon Global Robotics, who is overseeing Digit’s testing. “We’re excited to be at this point with Digit where we can start testing it, because we’re going to learn where the technology makes sense.”

Where two legs make sense has been an ongoing question in robotics for decades. Obviously, in a world designed primarily for humans, a robot with a humanoid form factor would be ideal. But balancing dynamically on two legs is still difficult for robots, especially when those robots are carrying heavy objects and are expected to work at a human pace for tens of thousands of hours. When is it worthwhile to use a bipedal robot instead of something simpler?


“The use case for Digit that I’m really excited about is empty tote recycling,” Vetterick says. “We already automate this task in a lot of our warehouses with a conveyor, a very traditional automation solution, and we wouldn’t want a robot in a place where a conveyor works. But a conveyor has a specific footprint, and it’s conducive to certain types of spaces. When we start to get away from those spaces, that’s where robots start to have a functional need to exist.”

The need for a robot doesn’t always translate into the need for a robot with legs, however, and a company like Amazon has the resources to build its warehouses to support whatever form of robotics or automation it needs. Its newer warehouses are indeed built that way, with flat floors, wide aisles, and other environmental considerations that are particularly friendly to robots with wheels.

“The building types that we’re thinking about [for Digit] aren’t our new-generation buildings. They’re older-generation buildings, where we can’t put in traditional automation solutions because there just isn’t the space for them,” says Vetterick. She describes the organized chaos of some of these older buildings as including narrower aisles with roof supports in the middle of them, and areas where pallets, cardboard, electrical cord covers, and ergonomics mats create uneven floors. “Our buildings are easy for people to navigate,” Vetterick continues. “But even small obstructions become barriers that a wheeled robot might struggle with, and where a walking robot might not.” Fundamentally, that’s the advantage bipedal robots offer relative to other form factors: They can quickly and easily fit into spaces and workflows designed for humans. Or at least, that’s the goal.

Vetterick emphasizes that the Seattle R&D site deployment is only a very small initial test of Digit’s capabilities. Having the robot move totes from a shelf to a conveyor across a flat, empty floor is not reflective of the use case that Amazon ultimately would like to explore. Amazon is not even sure that Digit will turn out to be the best tool for this particular job, and for a company so focused on efficiency, only the best solution to a specific problem will find a permanent home as part of its workflow. “Amazon isn’t interested in a general-purpose robot,” Vetterick explains. “We are always focused on what problem we’re trying to solve. I wouldn’t want to suggest that Digit is the only way to solve this type of problem. It’s one potential way that we’re interested in experimenting with.”

The idea of a general-purpose humanoid robot that can assist people with whatever tasks they may need is certainly appealing, but as Amazon makes clear, the first step for companies like Agility is to find enough value performing a single task (or perhaps a few different tasks) to achieve sustainable growth. Agility believes that Digit will be able to scale its business by solving Amazon’s empty tote-recycling problem, and the company is confident enough that it’s preparing to open a factory in Salem, Ore. At peak production, the plant will be capable of manufacturing 10,000 Digit robots per year.

A menagerie of humanoids

Agility is not alone in its goal to commercially deploy bipedal robots in 2024. At least seven other companies are also working toward this goal, with hundreds of millions of dollars of funding backing them. Among them, 1X, Apptronik, Figure, Sanctuary, Tesla, and Unitree all have commercial humanoid robot prototypes.

Despite an influx of money and talent into commercial humanoid robot development over the past two years, there have been no recent fundamental technological breakthroughs that will substantially aid these robots’ development. Sensors and computers are capable enough, but actuators remain complex and expensive, and batteries struggle to power bipedal robots for the length of a work shift.

There are other challenges as well, including creating a robot that’s manufacturable with a resilient supply chain and developing the service infrastructure to support a commercial deployment at scale. The biggest challenge by far is software. It’s not enough to simply build a robot that can do a job—that robot has to do the job with the kind of safety, reliability, and efficiency that will make it desirable as more than an experiment.

There’s no question that Agility Robotics and the other companies developing commercial humanoids have impressive technology, a compelling narrative, and an enormous amount of potential. Whether that potential will translate into humanoid robots in the workplace now rests with companies like Amazon, which seem cautiously optimistic. It would be a fundamental shift in how repetitive labor is done. And now, all the robots have to do is deliver.

This article appears in the January 2024 print issue as “Year of the Humanoid.”


Evan Ackerman is a senior editor at IEEE Spectrum . Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.


Technical Committee for Humanoid Robotics

Humanoid robotics is an emerging and challenging research field, which has received significant attention in recent years and will continue to play a central role in robotics research and in many applications of the 21st century. Regardless of the application area, one of the common problems tackled in humanoid robotics is understanding human-like information processing and the underlying mechanisms the human brain uses in dealing with the real world.

Ambitious goals have been set for the future of humanoid robotics. Humanoid robots are expected to serve as companions and assistants for humans in daily life and as ultimate helpers in man-made and natural disasters. RoboCup’s stated goal is that by 2050 a team of humanoid robot soccer players will win against the winner of the most recent World Cup. DARPA recently announced the next Grand Challenge in robotics: building robots that do things like humans in a world made for humans.

Considerable progress has been made in humanoid research, resulting in a number of humanoid robots able to move and perform well-designed tasks. Over the past decade, humanoid research has produced an encouraging spectrum of science and technology that has led to highly advanced humanoid mechatronic systems endowed with rich and complex sensorimotor capabilities. Of major importance for the advancement of the field is, without doubt, the availability of reproducible humanoid robot systems, which in recent years have served as common hardware and software platforms for humanoid research. Universities, research institutions, and companies have produced many visible technical innovations and remarkable results.

The major activities of the TC are reflected in the firmly established annual IEEE-RAS International Conference on Humanoid Robots, which is the internationally recognized prime event of the humanoid robotics community. The conference is sponsored by the IEEE Robotics and Automation Society. The level of interest in humanoid robotics research continues to grow, as evidenced by the increasing number of papers submitted to this conference. For more information, please visit the official website of the Humanoids TC: http://www.humanoid-robotics.org


Title: The MIT Humanoid Robot: Design, Motion Planning, and Control for Acrobatic Behaviors

Abstract: Demonstrating acrobatic behavior of a humanoid robot such as flips and spinning jumps requires systematic approaches across hardware design, motion planning, and control. In this paper, we present a new humanoid robot design, an actuator-aware kino-dynamic motion planner, and a landing controller as part of a practical system design for highly dynamic motion control of the humanoid robot. To achieve the impulsive motions, we develop two new proprioceptive actuators and experimentally evaluate their performance using our custom-designed dynamometer. The actuator's torque, velocity, and power limits are reflected in our kino-dynamic motion planner by approximating the configuration-dependent reaction force limits and in our dynamics simulator by including actuator dynamics along with the robot's full-body dynamics. For the landing control, we effectively integrate model-predictive control and whole-body impulse control by connecting them in a dynamically consistent way to accomplish both long-horizon optimal control and high-bandwidth full-body dynamics-based feedback. The actuators' torque output over the entire motion is validated against a velocity-torque model that includes battery voltage droop and back-EMF voltage. With the carefully designed hardware and control framework, we successfully demonstrate dynamic behaviors such as back flips, front flips, and spinning jumps in our realistic dynamics simulation.
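The abstract mentions checking torque output against a velocity-torque model that includes back-EMF and battery voltage droop. As a sketch only, and not the authors' model, assume an idealized DC-motor equivalent (winding resistance, torque constant equal to the back-EMF constant in SI units, lossless gearbox, fixed driver current limit) and a battery modeled as an open-circuit voltage behind an internal resistance. The torque available at a given joint speed can then be bounded as follows; every parameter value and function name here is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ActuatorParams:
    """Hypothetical actuator parameters (illustrative values, not the MIT Humanoid's)."""
    k_t: float         # torque constant [N*m/A]; equals the back-EMF constant [V*s/rad] in SI units
    r_winding: float   # winding resistance [ohm]
    gear_ratio: float  # motor speed / output speed (lossless gearbox assumed)
    i_peak: float      # driver current limit [A]


def bus_voltage(v_open_circuit: float, r_battery: float, i_total: float) -> float:
    """Battery terminal voltage after droop across its internal resistance."""
    return v_open_circuit - r_battery * i_total


def max_motoring_torque(p: ActuatorParams, omega_out: float, v_bus: float) -> float:
    """Largest output torque in the direction of motion at output speed omega_out [rad/s]."""
    back_emf = p.k_t * abs(omega_out) * p.gear_ratio
    i_voltage_limited = (v_bus - back_emf) / p.r_winding  # current the remaining voltage can drive
    i_available = max(0.0, min(p.i_peak, i_voltage_limited))
    return p.gear_ratio * p.k_t * i_available


def torque_feasible(p: ActuatorParams, tau_out: float, omega_out: float, v_bus: float) -> bool:
    """Check one (torque, speed) sample of a planned trajectory against the limits above."""
    if tau_out * omega_out <= 0.0:
        # Braking or stall: back-EMF does not oppose the current, so only the current limit binds.
        return abs(tau_out) <= p.gear_ratio * p.k_t * p.i_peak
    return abs(tau_out) <= max_motoring_torque(p, omega_out, v_bus)


params = ActuatorParams(k_t=0.125, r_winding=0.25, gear_ratio=8.0, i_peak=32.0)
v = bus_voltage(24.0, 0.0625, 80.0)  # 19.0 V while the whole robot draws 80 A
for w in (10.0, 16.0, 20.0):
    print(w, max_motoring_torque(params, w, v))
# 10.0 32.0   (current-limited)
# 16.0 12.0   (back-EMF limited)
# 20.0 0.0    (back-EMF exceeds the bus voltage)
```

The point of including droop is visible in the numbers: the same actuator that delivers its full 32 N·m at 10 rad/s has nothing left at 20 rad/s once a heavy total current draw pulls the bus from 24 V down to 19 V.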


Editorial: Humanoid Robots for Real-World Applications

- 1 CNRS-AIST Joint Robotics Laboratory (JRL), IRL3218, National Institute of Advanced Industrial Science and Technology (AIST), Tsukuba, Japan

- 2 Electrical and Computer Engineering Department, Université de Sherbrooke, Sherbrooke, QC, Canada

- 3 Florida Institute for Human and Machine Cognition, Pensacola, FL, United States

Editorial on the Research Topic Humanoid Robots for Real-World Applications

Since Honda introduced the P2 in 1996, numerous humanoid robots have been developed around the world, and research and development of various fundamental technologies, including bipedal walking, have been conducted. At the same time, attempts have been made to apply humanoid robots to various applications such as plant maintenance, telemedicine, vehicle operation, home security, construction, aircraft manufacturing, disaster response, evaluation of assistive devices, and entertainment.

Humanoid robots have an anthropomorphic body, and their major advantage is that they can move within environments designed for humans and use tools and vehicles designed for humans without modification. It is hoped that these advantages will free people to focus on more creative activities, with humanoids taking over work in harsh environments, hazardous tasks, and low-value-added tasks that people are currently forced to perform because existing fixed, wheeled, or crawler-type robots cannot handle them.

In addition, because a human-shaped machine that moves like a human naturally attracts people, humanoid robots can be expected to entertain and comfort people by interacting with them. It is also easier for humans to understand a robot’s “intention” when it is expressed through body language. This is related to the avatar application introduced later.

Despite these expectations, even today, more than 25 years after the announcement of P2, no humanoid robot has been put to practical use outside of R&D and communication applications. This is because there is no need for humanoid robots in structured environments such as conventional factories, where existing robots can easily be applied, while the technology is still too immature for humanoid robots to work in environments so unstructured that existing robots cannot handle them.

This Research Topic introduces two efforts to improve the basic capabilities of humanoid robots and one effort to apply humanoid robots to remote services, with the aim of practical applications of humanoid robots.

Until now, almost all humanoid robots have used a method in which the joints are accurately position-controlled and the position commands are updated using joint velocities calculated by inverse kinematics. Recently, methods that update the position commands from joint accelerations computed by inverse dynamics, as well as methods that control the joint torques directly, have come into use. Ramuzat et al. implemented these three approaches on the same hardware platform and clarified the advantages and disadvantages of each. The method combining position control and inverse kinematics was found to be the least computationally intensive, while the torque-control method was confirmed to have advantages in smoothness of trajectory tracking, energy consumption, and passivity. Recent improvements in computer performance have made it possible to perform inverse dynamics-based torque control at 1 kHz, and torque control may become the mainstream approach to joint control in the future.
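To make the first of these approaches concrete: the joint position command is integrated from joint velocities produced by differential inverse kinematics. A minimal sketch for a hypothetical two-link planar arm, assuming an ideal joint position servo (measured angles equal the commands), a 1 kHz update (dt = 1 ms), and damped least squares as the IK solver. Link lengths, gain, and damping are illustrative choices, not values from Ramuzat et al.

```python
import math

LINK_1, LINK_2 = 0.4, 0.3  # hypothetical link lengths [m]


def forward_kinematics(q):
    """End-effector position (x, y) of a planar two-link arm."""
    x = LINK_1 * math.cos(q[0]) + LINK_2 * math.cos(q[0] + q[1])
    y = LINK_1 * math.sin(q[0]) + LINK_2 * math.sin(q[0] + q[1])
    return x, y


def jacobian(q):
    """2x2 Jacobian d(x, y)/d(q0, q1)."""
    s1, c1 = math.sin(q[0]), math.cos(q[0])
    s12, c12 = math.sin(q[0] + q[1]), math.cos(q[0] + q[1])
    return [[-LINK_1 * s1 - LINK_2 * s12, -LINK_2 * s12],
            [LINK_1 * c1 + LINK_2 * c12, LINK_2 * c12]]


def dls_joint_velocity(J, v, damping=0.01):
    """Damped least squares: q_dot = J^T (J J^T + damping^2 I)^-1 v."""
    a = J[0][0] ** 2 + J[0][1] ** 2 + damping ** 2
    b = J[0][0] * J[1][0] + J[0][1] * J[1][1]
    d = J[1][0] ** 2 + J[1][1] ** 2 + damping ** 2
    det = a * d - b * b
    w0 = (d * v[0] - b * v[1]) / det   # (J J^T + damping^2 I)^-1 v, first entry
    w1 = (-b * v[0] + a * v[1]) / det  # second entry
    return [J[0][0] * w0 + J[1][0] * w1, J[0][1] * w0 + J[1][1] * w1]


def track_target(target, q0, gain=5.0, dt=0.001, steps=2000):
    """Integrate IK joint velocities into position commands at 1/dt Hz."""
    q_cmd = list(q0)
    for _ in range(steps):
        # Ideal position servo assumed: the measured joint angles equal q_cmd.
        x, y = forward_kinematics(q_cmd)
        v_des = [gain * (target[0] - x), gain * (target[1] - y)]
        q_dot = dls_joint_velocity(jacobian(q_cmd), v_des)
        q_cmd = [q_cmd[i] + dt * q_dot[i] for i in range(2)]  # new position command
    return q_cmd


q = track_target(target=(0.45, 0.35), q0=(0.3, 0.8))
print(forward_kinematics(q))  # close to (0.45, 0.35)
```

The other two approaches differ in what the controller computes each cycle (joint accelerations from inverse dynamics, or joint torques sent directly to the drives); this sketch covers only the velocity-level update.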

Multi-contact technology is essential to enable humanoid robots to move in unstructured environments where it is difficult for existing robots to operate. By actively bringing various parts of the body into contact with the environment, humanoid robots can move through confined spaces that are inaccessible to wheeled robots with large footprints. One of the basic functions in multi-contact motion generation is the Posture Generator, which computes joint angles that realize a given set of contacts without colliding with the environment or with the robot itself. Because it is called frequently during multi-contact motion generation, it must be computationally fast. In the past, collision avoidance was often incorporated into the inverse kinematics solver as inequality constraints. However, when many obstacles are in close proximity, as in narrow passages, the number of constraints grows and computation slows down. Rossini et al. tackled this problem by proposing a method that generates collision-free postures using an adaptive random velocity vector generator, and showed that it is particularly effective in narrow environments.
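The idea of escaping tight spots with randomized joint velocities can be illustrated on a toy problem. The sketch below is a hypothetical simplification, not Rossini et al.'s algorithm: a two-link planar arm must place its tip on a target point (standing in for a contact) while avoiding one circular obstacle. Collisions are checked directly instead of being added to the IK solver as inequality constraints, and when the nominal step toward the target would collide, a random velocity vector is added whose magnitude doubles after each blocked attempt and halves after each successful nominal step. Link lengths, gains, and the scaling rule are illustrative assumptions.

```python
import math
import random

LINK_1, LINK_2 = 0.4, 0.3  # hypothetical link lengths [m]


def joint_positions(q):
    """Base, elbow, and tip positions of a planar two-link arm."""
    elbow = (LINK_1 * math.cos(q[0]), LINK_1 * math.sin(q[0]))
    tip = (elbow[0] + LINK_2 * math.cos(q[0] + q[1]),
           elbow[1] + LINK_2 * math.sin(q[0] + q[1]))
    return (0.0, 0.0), elbow, tip


def segment_point_distance(a, b, p):
    """Shortest distance from point p to the segment a-b."""
    ab = (b[0] - a[0], b[1] - a[1])
    ap = (p[0] - a[0], p[1] - a[1])
    t = (ab[0] * ap[0] + ab[1] * ap[1]) / (ab[0] ** 2 + ab[1] ** 2)
    t = max(0.0, min(1.0, t))
    return math.dist((a[0] + t * ab[0], a[1] + t * ab[1]), p)


def in_collision(q, center, radius):
    """True if either link passes through the circular obstacle."""
    base, elbow, tip = joint_positions(q)
    return (segment_point_distance(base, elbow, center) < radius
            or segment_point_distance(elbow, tip, center) < radius)


def attraction_velocity(q, target, gain=50.0):
    """Jacobian-transpose joint velocity pulling the tip toward the target contact point."""
    _, _, tip = joint_positions(q)
    ex, ey = gain * (target[0] - tip[0]), gain * (target[1] - tip[1])
    s1, c1 = math.sin(q[0]), math.cos(q[0])
    s12, c12 = math.sin(q[0] + q[1]), math.cos(q[0] + q[1])
    return [(-LINK_1 * s1 - LINK_2 * s12) * ex + (LINK_1 * c1 + LINK_2 * c12) * ey,
            -LINK_2 * s12 * ex + LINK_2 * c12 * ey]


def generate_posture(q0, target, center, radius, dt=0.01, tol=1e-3,
                     max_iters=5000, seed=0):
    """Toy posture search: nominal IK steps plus adaptive random velocity perturbations."""
    if in_collision(q0, center, radius):
        raise ValueError("initial posture must be collision-free")
    rng = random.Random(seed)
    q, scale = list(q0), 0.1  # scale: magnitude of the random velocity [rad/s]
    for _ in range(max_iters):
        if math.dist(joint_positions(q)[2], target) < tol:
            return q, True
        v = attraction_velocity(q, target)
        candidate = [q[i] + dt * v[i] for i in range(2)]
        if not in_collision(candidate, center, radius):
            q, scale = candidate, max(0.1, 0.5 * scale)  # nominal step worked: shrink noise
            continue
        angle = rng.uniform(0.0, 2.0 * math.pi)  # random direction in joint space
        v = [v[0] + scale * math.cos(angle), v[1] + scale * math.sin(angle)]
        candidate = [q[i] + dt * v[i] for i in range(2)]
        if not in_collision(candidate, center, radius):
            q = candidate
        else:
            scale = min(10.0, 2.0 * scale)  # still blocked: widen the random search
    return q, False


q, reached = generate_posture((0.3, 0.8), (0.45, 0.35), center=(0.5, 0.15), radius=0.05)
print(reached, in_collision(q, (0.5, 0.15), 0.05))  # second value is always False
```

Only collision-free candidates are ever accepted, so every intermediate posture is valid by construction; whether the target is reached depends on the obstacle layout and the iteration budget.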

Due in part to the influence of COVID-19, the use of avatar robots to provide remote services has attracted much attention in recent years. Baba et al. compared the performance and perceived workload of face-to-face service delivery and service delivery via avatars in a public space. They found no significant difference in performance, but interestingly found that the perceived workload was smaller when the service was provided via an avatar robot.

Further research and development are needed to enable humanoid robots to autonomously perform tasks that are currently difficult for other robots, and achieving this goal is expected to take more time. To promote the industrialization of humanoid robots as early as possible, deploying them as avatar robots is thought to be an effective approach. Using the robot as an avatar will enable humans to perform harsh or dangerous tasks while remaining in a safe and comfortable environment. Moreover, remote operation will allow humans to compensate for the robot’s insufficient abilities, such as advanced situational awareness and higher-level decision making. By starting the industrialization of humanoid robots in this manner and using them in real-world settings every day, a virtuous cycle can be expected to emerge in which costs fall while reliability and autonomy improve.

Author Contributions

FK drafted the manuscript. WS and RG made substantial intellectual contributions to the manuscript. All authors approved it for publication.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Keywords: humanoid robots, industrial robots, collaborative robots, avatar robot, whole-body control, posture generation

Citation: Kanehiro F, Suleiman W and Griffin R (2022) Editorial: Humanoid Robots for Real-World Applications. Front. Robot. AI 9:938775. doi: 10.3389/frobt.2022.938775

Received: 08 May 2022; Accepted: 17 May 2022; Published: 07 June 2022.

Edited and reviewed by:

Copyright © 2022 Kanehiro, Suleiman and Griffin. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Fumio Kanehiro

This article is part of the Research Topic

Humanoid Robots for Real-World Applications

Top 22 Humanoid Robots in Use Right Now

While many humanoid robots are still in the prototype phase or other early stages of development, a few have moved beyond research and development in the last few years, entering the real world as bartenders, concierges, deep-sea divers and companions for older adults. Some work in warehouses and factories, assisting humans in logistics and manufacturing. Others seem to offer more novelty and awe than anything else, conducting orchestras and greeting guests at conferences.


While more humanoid robots are being introduced into the world and making a positive impact in industries like logistics, manufacturing, healthcare and hospitality, their use is still limited, and development costs are high.

That said, the sector is expected to grow. According to research firm MarketsandMarkets, the humanoid robot market was valued at $1.8 billion in 2023 and is predicted to grow to more than $13 billion over the next five years. That growth will be fueled by advanced humanoid robots with greater AI capabilities and human-like features that can take on more duties in the service industry, education and healthcare.

In light of recent investments, complex humanoid robots may arrive sooner rather than later. For instance, AI robotics company Figure and ChatGPT-maker OpenAI formed a partnership that’s backed by investors like Jeff Bezos. Under the deal, OpenAI will likely adapt its GPT language models to suit the needs of Figure’s robots. Microchip manufacturer Nvidia also revealed plans for Project GR00T, which aims to develop a general-purpose foundation model for humanoid robots. These announcements come in the wake of Elon Musk and Tesla introducing the humanoid robot Optimus in 2022, although that robot remains in the production phase.

How Are Humanoid Robots Being Used?

- Hospitality: Some humanoid robots, like KIME, pour and serve drinks and snacks to customers at self-contained kiosks in Spain. Others work as hotel concierges and in other customer-facing roles.

- Education: The humanoid robots NAO and Pepper work with students in educational settings, creating content and teaching programming.

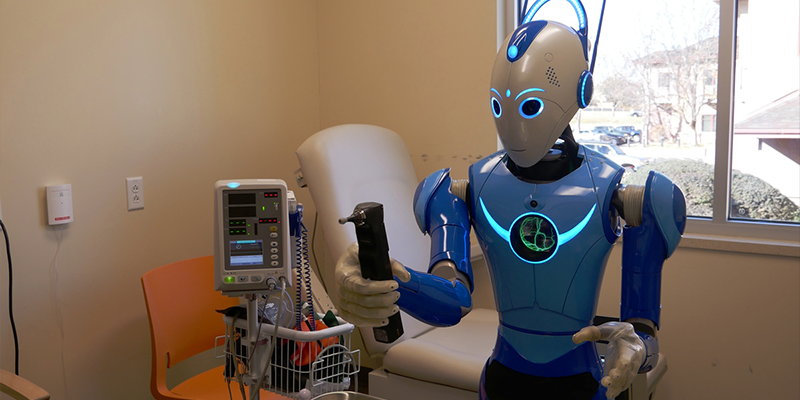

- Healthcare: Other humanoid robots are providing services in healthcare settings, like communicating patient information and measuring vital signs.

But before companies can fully unleash their humanoid robots, pilot programs testing their ability to safely work and collaborate alongside human counterparts on factory floors, warehouses and elsewhere will have to be conducted.

It’s unclear how well humanoid robots will integrate into society and how readily humans will accept their help. While some people will see the proliferation of these robots as creepy, dangerous or as unneeded competition in the labor market, potential benefits like increased efficiency and safety may outweigh many of the perceived drawbacks.

Either way, humanoid robots are poised to have a tremendous impact, and fortunately, there are already some among us that we can look to for guidance. Here are a few examples of the top humanoid robots working in our world today.

Examples of Humanoid Robots

Ameca (Engineered Arts)

Engineered Arts’ latest and most advanced humanoid robot is Ameca, which the company bills as a development platform where AI and machine learning systems can be tested. Featuring sensors that can track movement across an entire room, along with face recognition and multiple-voice recognition capabilities, Ameca interacts naturally with humans and detects emotions and age. Ameca can communicate common expressions like astonishment and surprise, and gestures like yawning and shrugging.

More on the future of robotics 35 Robotics Companies on the Forefront of Innovation

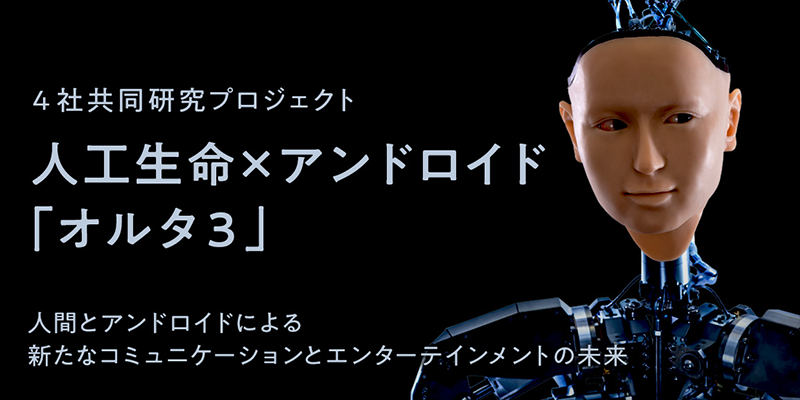

Alter 3 (Osaka University and mixi)

Dubbed Alter 3, the latest humanoid robot from Osaka University and mixi is powered by an artificial neural network and has an ear for music. Earlier iterations of Alter sang in an opera. Alter 3, which has enhanced sensors and improved expressiveness and vocalization systems for singing, went even further in 2020 by conducting an orchestra at the New National Theater in Tokyo and taking part in other live performances.

ARMAR-6 (Karlsruhe Institute of Technology)

ARMAR-6 is a humanoid robot developed by researchers at the Karlsruhe Institute of Technology in Germany to work in industrial settings. Capable of using drills, hammers and other tools, ARMAR-6 also features AI technology allowing it to learn how to grasp objects and hand them to human co-workers. It’s also able to take on maintenance duties like wiping down surfaces and even has the ability to ask for help when needed.

Apollo (Apptronik)

Apptronik’s Apollo builds on the company’s experience with previous robots, including its 2022 humanoid robot Astro. Able to carry up to 55 pounds, Apollo is designed to work in plants and warehouses and may expand into industries like retail and construction. An impact zone allows the robot to stop moving when it detects nearby moving objects, and swappable batteries that last four hours each keep Apollo productive.

Atlas (Boston Dynamics)

Atlas is a leaping, backflipping humanoid robot designed by Boston Dynamics that uses depth sensors for real-time perception and model-predictive control technology to improve motion. Measuring 5 feet tall and weighing 196 pounds, Atlas has three onboard computers and 28 hydraulic joints and can move at more than 5 miles per hour. Built with 3D-printed parts, Atlas is used by company roboticists as a research and design tool to increase human-like agility and coordination. In April 2024, Boston Dynamics announced plans to retire the hydraulic Atlas in favor of a new electric version that the company says will be stronger and have a broader range of motion.

Beomni (Beyond Imagination)

Created by Beyond Imagination, the humanoid robot Beomni is controlled remotely by “human pilots” wearing virtual reality headsets and other wearable devices like gloves, while AI helps Beomni learn tasks so that one day it can become autonomous. In 2022, Beyond Imagination CEO and co-founder Harry Kloor told Built In that he’s hopeful Beomni will transform the care older adults receive, while taking over more tedious and dangerous jobs in other industries. The company also agreed to supply 1,000 humanoid robots over five years to SELF Labs as part of a simulated farm game.

Digit (Agility Robotics)

Already capable of unloading trailers and moving packages, Digit, a humanoid robot from Agility Robotics, is poised to take on even more tedious tasks. With fully functioning limbs, Digit is able to crouch and squat to pick up objects, adjusting its center of gravity depending on size and weight, while surface plane-reading sensors help it find the most efficient path and circumvent whatever’s in its way. In 2019, Agility partnered with automaker Ford to test autonomous package delivery, and in 2022, the company raised $150 million from Amazon and other companies to help Digit enter the workforce.

Jiajia (University of Science and Technology of China)

Developed by researchers from the University of Science and Technology of China (USTC), Jiajia is the first humanoid robot to come out of China. Researchers spent three years developing Jiajia. Chen Xiaoping, who led the team behind the robot, told reporters during Jiajia’s 2016 unveiling that he and his team would soon work to make Jiajia capable of crying and laughing, The Independent reports. According to Mashable, its human-like appearance was modeled after five students from USTC.

KIME (Macco Robotics)

KIME, Macco Robotics’ humanoid robotic bartender, serves beer, coffee, wine, snacks, salads and more. Each KIME kiosk can dispense 253 items per hour and features a touchscreen and app-enabled ordering, plus a built-in payment system. Though unable to dispense the sage advice of a seasoned bartender, KIME can recognize its regular customers and pour two beers every six seconds.

More on Robotic Innovation Exoskeleton Suits: 20 Real-Life Examples

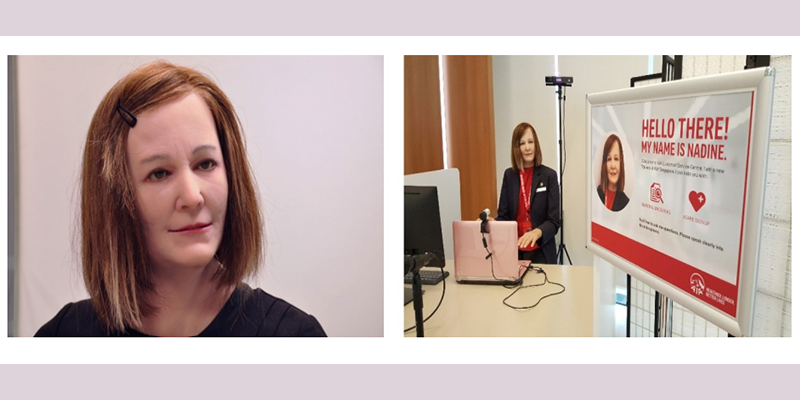

Nadine (Nanyang Technological University)

Researchers from Nanyang Technological University in Singapore developed Nadine, a humanoid social robot with realistic skin, hair, facial expressions and upper-body movements that can work in a variety of settings. According to researchers, Nadine can recognize faces, speech, gestures and objects. It even features an affective system that models Nadine’s personality, emotions and mood. So far, Nadine has worked in customer service and led a bingo game for a group of older adults in Singapore.

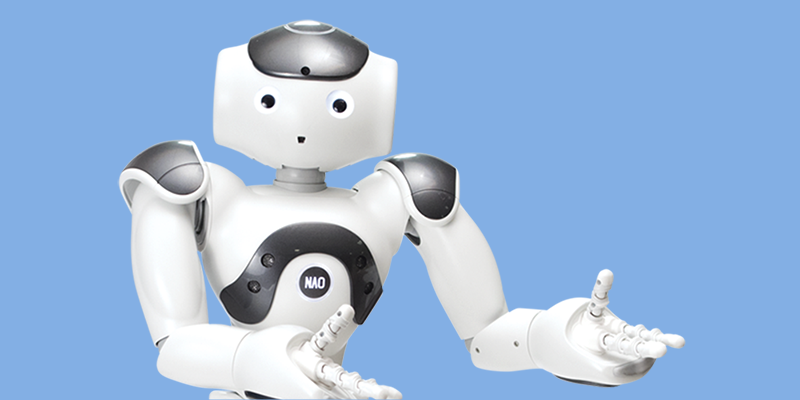

NAO (Softbank Robotics)

Softbank Robotics’ first humanoid robot, NAO, works as an assistant for companies and organizations in industries ranging from healthcare to education. Only 2 feet tall, NAO features two 2D cameras for object recognition, four directional microphones and speakers, and seven touch sensors to better interact with people and its surroundings. Able to speak and converse in 20 languages, NAO helps create content and teach programming in classrooms and works as an assistant and patient service representative in healthcare settings.

OceanOne (Stanford Robotics Lab)

OceanOne, a diving humanoid robot from the Stanford Robotics Lab, explores shipwrecks. On its maiden voyage in 2016, OceanOne ventured into the Mediterranean Sea off the coast of France to explore the wreck of La Lune, one of King Louis XIV’s ships, which sank in 1664. Its latest iteration, OceanOneK, can dive even deeper, reaching depths of 1,000 meters. Featuring haptic feedback and AI, OceanOneK can operate tools and other equipment and has already explored underwater wreckage of planes and ships.

Pepper (Softbank Robotics)

Pepper is another humanoid robot from Softbank Robotics that works in classrooms and healthcare settings. Unlike NAO, however, Pepper can recognize faces and track human emotions. Pepper has worked as a hotel concierge and has been used to support contactless care and communication for older adults during the pandemic. A professional baseball team in Japan even used a squad of Peppers to cheer on its players when the pandemic kept the team’s human fans at home.

Promobot (Promobot)

Promobot is a customizable humanoid robot that can work as a brand ambassador, concierge, tour guide, medical assistant and in other service-oriented roles. Equipped with facial recognition and chat functions, Promobot can issue keycards, scan and auto-fill documents, and print guest passes and receipts. As a concierge, Promobot integrates with a building’s security system and can recognize the faces of the building’s residents. At hotels, it can check guests in, and in healthcare settings, it can measure key health indicators like blood sugar and blood oxygen levels.

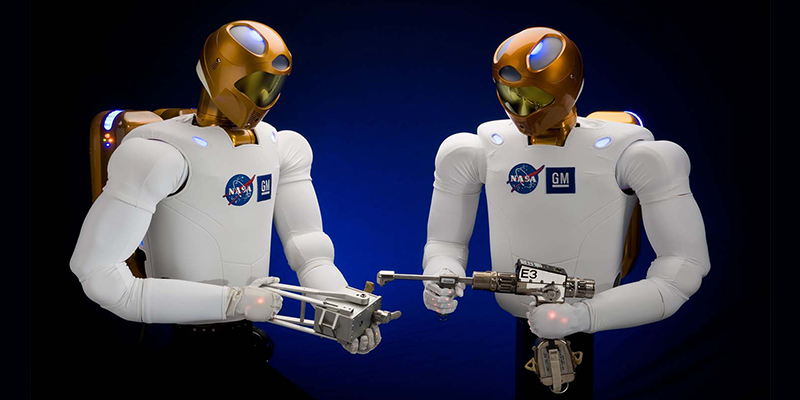

Robonaut 2 (NASA and General Motors)

Developed by NASA and General Motors, Robonaut 2 is a humanoid robot that works alongside human counterparts in space and on the factory floor. More than a decade ago, Robonaut 2 became the first humanoid robot to enter space, and it worked as an assistant on the International Space Station until 2018, when it returned to Earth for repairs. Today, Robonaut 2 is inspiring other innovations and advancements in robotics, like the RoboGlove and Aquanaut from the ocean robotics company Nauticus.

RoboThespian (Engineered Arts)

Another humanoid robot from Engineered Arts is RoboThespian, which features telepresence software that allows humans to speak remotely through the robot. With automated eye contact and micro-facial expressions, RoboThespian can perform for crowds and work in places like the Kennedy Space Center, where it answers curious visitors’ questions about the Hubble Space Telescope.

Sophia (Hanson Robotics)

Hanson Robotics’ AI-powered humanoid robot Sophia has traveled the world, graced the cover of Cosmopolitan Magazine and addressed the United Nations. One of the more widely known humanoid robots, Sophia can process visual, emotional and conversational data to better interact with humans. Sophia has made multiple appearances on The Tonight Show, where she challenged host Jimmy Fallon to a game of rock-paper-scissors, expressed her disdain for nacho cheese and sang a short duet with Fallon. Sophia sees herself as “a personification of our dreams for the future of AI, as well as a framework for advanced AI and robotics research, and an agent for exploring human-robot experience in service and entertainment applications.”

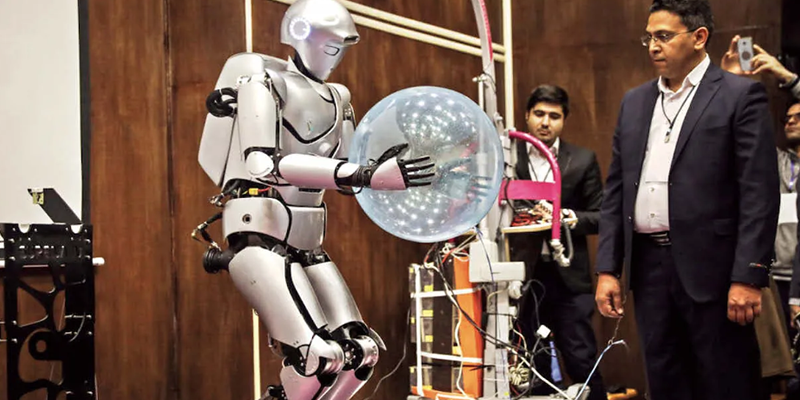

Surena IV (University of Tehran)

Able to grab a water bottle, pose for a selfie and write its own name on a whiteboard, Surena IV is the latest humanoid robot from the University of Tehran. IEEE Spectrum reports that Surena IV has improved tracking capabilities and new hands that allow it to use power tools. It can also adjust the angle and position of its feet, improving its ability to navigate uneven terrain.


T-HR3 (Toyota)

Billed as a “remote avatar robot,” Toyota’s humanoid robot T-HR3 is controlled by humans clad in wearable devices. Introduced in 2017, T-HR3 aims to help the automaker expand its mobility services, according to Tomohisa Moridaira, the T-HR3 development team leader. Toyota envisions that T-HR3 will one day help around the house and assist in childcare, nursing and construction.

Walker (UBTECH Robotics)

With improved hand-eye coordination and autonomous navigation, Walker, a humanoid service robot by UBTECH Robotics, is able to safely climb stairs and balance on one leg. Robotics and Automation News reports that Walker is able to serve tea, water flowers and use a vacuum, showing off just how helpful this humanoid robot could be around the house.

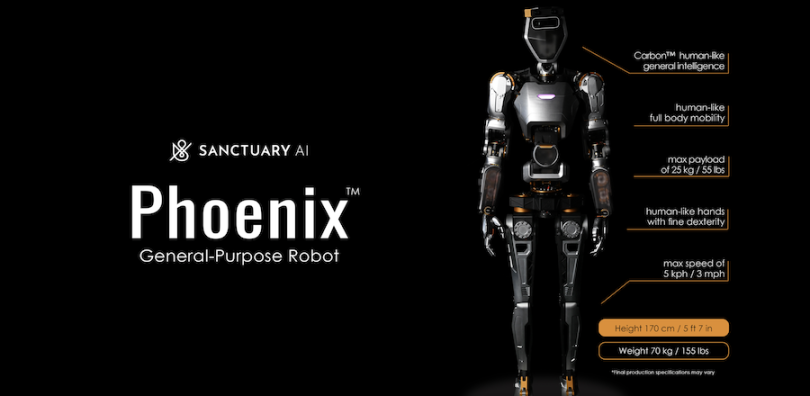

Phoenix (Sanctuary AI)

Sanctuary AI’s sixth-generation robot, Phoenix, is equipped with human-like hands and can lift up to 55 pounds, making it useful for various roles in sectors facing labor shortages. Because Phoenix can be controlled, supervised and trained by humans, it is not limited to specific tasks and can complete work in a variety of settings.

EVE (1X)

1X claims to be the first company to send an AI-powered humanoid robot into the workforce. Its robot EVE has strong grippers for hands, cameras that support panoramic vision and two wheels for mobility. Most importantly, EVE uses AI to learn new tasks and improve based on past experience. With these abilities, EVE is on pace to spread into industries like retail, logistics and even commercial security.

Frequently Asked Questions

What are humanoid robots?

Humanoid robots look like humans and mimic human motions and actions to perform various tasks. Some humanoid robots even use materials that resemble human features, like skin and eyes, to appear friendlier.

What are humanoid robots used for?

Humanoid robots are often used for customer service roles, including concierges, bartenders and greeters. Because of their human shape, humanoid robots can also assist with handling and carrying materials in warehouses and factories.



Perception for Humanoid Robots

- Open access

- Published: 28 November 2023

- Volume 4, pages 127–140 (2023)


- Arindam Roychoudhury¹ (ORCID: orcid.org/0009-0001-7016-0523)

- Shahram Khorshidi¹

- Subham Agrawal¹

- Maren Bennewitz¹,²


Purpose of Review

In the field of humanoid robotics, perception plays a fundamental role in enabling robots to interact seamlessly with humans and their surroundings, leading to improved safety, efficiency and user experience. This study investigates the perception modalities and techniques employed in humanoid robots, including visual, auditory and tactile sensing, by exploring recent state-of-the-art approaches for perceiving and understanding the internal state, the environment, objects and human activities.

Recent Findings

Internal state estimation relies on proprioceptive sensing and makes extensive use of Bayesian filtering methods and of optimization techniques based on maximum a posteriori (MAP) formulations. In external environment understanding, with an emphasis on robustness and adaptability to dynamic, unforeseen environmental changes, the recent research discussed in this study has focused largely on multi-sensor fusion and machine learning rather than on hand-crafted, rule-based systems. Human-robot interaction methods have established the importance of contextual information representation and memory for understanding human intentions.

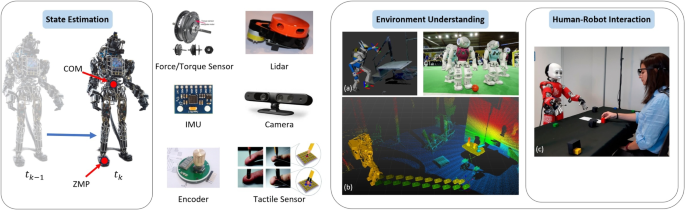

This review summarizes the recent developments and trends in the field of perception in humanoid robots. Three main areas of application are identified, namely, internal state estimation, external environment estimation, and human-robot interaction. The applications of diverse sensor modalities in each of these areas are considered, and recent significant works are discussed.


Introduction

Perception is of paramount importance for robots to establish a model of their internal state as well as of the external environment. These models allow the robot to perform its task safely, efficiently and accurately. Perception is facilitated by various types of sensors that gather both proprioceptive and exteroceptive information. Humanoid robots, especially mobile ones, make perception particularly difficult: mounted sensors are subject to jerky and unstable motions because of the large number of degrees of freedom afforded by the many articulated joints on a humanoid’s body, e.g., in the legs, hip, manipulator arms or neck.

For the purposes of this survey, we organize perception in humanoid robots into three broad yet overlapping areas: state estimation for balance and joint configurations; environment understanding for navigation, mapping and manipulation; and human-robot interaction for successful integration into a shared human workspace (see Fig. 1). For each area, we discuss the popular applications, the challenges and the recent methods used to overcome them.

Internal state estimation is a critical aspect of autonomous systems, particularly humanoid robots, where it addresses low-level stability and dynamics and also supports higher-level tasks such as localization, mapping and navigation. Legged robot locomotion is particularly challenging given the inherently underactuated dynamics and the intermittent switching of contacts with the ground during motion.

The application of external environment understanding has a very broad scope in humanoid robotics but can be roughly divided into navigation and manipulation. Navigation refers to moving the bipedal base from one location to another without collision, thereby leaving the configuration of the external environment unchanged. Manipulation, on the other hand, is where the humanoid changes the physical configuration of its environment using its end-effectors.

It could be argued that human-robot interaction (HRI) is a subset of environment understanding. However, we separate the two areas based on their ultimate goals: the goal of environment understanding is to interact with inanimate objects, while the goal of HRI is to interact with humans. The challenges posed are different, though similar principles may be reused. Human detection, gesture and activity recognition, teleoperation, object handover and collaborative actions, and social communication are some of the main areas where perception is used in HRI.

Fig. 1 Perception for humanoid robots split into three principal areas. Left: state estimation, used to estimate derived quantities such as the CoM and ZMP from sensors such as the IMU and joint encoders. Right: environment understanding, which has a very broad scope ranging from localization and mapping to environment segmentation for planning, among other application areas; human-robot interaction is closely related but deals exclusively with human beings rather than inanimate objects. Center: some sensors that aid perception in humanoid robots. Sources for labeled images: (a) [1], (b) [2] and (c) [3]

State Estimation

Recent work on humanoid and legged robot locomotion control has focused extensively on state-feedback approaches [4]. Legged robots have highly nonlinear dynamics and need high-frequency (\(1\,\mathrm{kHz}\)) and low-latency (\(<1\,\mathrm{ms}\)) feedback for robust and adaptive control, which adds complexity to the design and development of reliable estimators for the base and centroidal states and for contact detection.

Challenges in State Estimation

Perceived data is often noisy and biased, and these errors are magnified in derived quantities. For instance, joint velocities tend to be noisier than joint positions because they are obtained by numerically differentiating joint encoder values. Rotella et al. [5] developed a method to determine the joint velocities and accelerations of a humanoid robot using link-mounted Inertial Measurement Units (IMUs), resulting in less noise and delay than filtered velocities from numerical differentiation. An effective approach to mitigating biased IMU measurements is to explicitly include the biases as estimated states in the estimation framework [6, 7].
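The bias-augmentation idea can be illustrated with a deliberately small example: a single tilt axis, a gyro with an unknown constant bias, and a noisy absolute angle measurement such as accelerometer-derived tilt. The state is \([\theta, b]\); the gyro rate minus \(b\) drives the prediction and only \(\theta\) is measured, so the bias becomes observable through the mismatch between the integrated rate and the measured angle. This is a minimal linear Kalman filter sketch; the class name, noise values and sample rate are assumptions, and the cited works use full 3D EKFs.

```python
import random


class BiasAwareTiltFilter:
    """Linear Kalman filter with state [tilt angle, gyro bias] (illustrative sketch)."""

    def __init__(self, dt, q_angle=1e-5, q_bias=1e-8, r_meas=1e-3):
        self.dt = dt
        self.x = [0.0, 0.0]                # [angle (rad), gyro bias (rad/s)]
        self.P = [[1.0, 0.0], [0.0, 1.0]]  # state covariance
        self.q_angle, self.q_bias, self.r = q_angle, q_bias, r_meas

    def predict(self, gyro_rate):
        dt = self.dt
        angle, bias = self.x
        # Integrate the bias-corrected rate; the bias is modeled as a slow random walk.
        self.x = [angle + (gyro_rate - bias) * dt, bias]
        # P <- F P F^T + Q, with F = [[1, -dt], [0, 1]]
        (p00, p01), (p10, p11) = self.P
        self.P = [
            [p00 - dt * (p01 + p10) + dt * dt * p11 + self.q_angle, p01 - dt * p11],
            [p10 - dt * p11, p11 + self.q_bias],
        ]

    def update(self, measured_angle):
        # H = [1, 0]: only the angle is measured (e.g. accelerometer-derived tilt).
        (p00, p01), (p10, p11) = self.P
        s = p00 + self.r
        k0, k1 = p00 / s, p10 / s
        innovation = measured_angle - self.x[0]
        self.x = [self.x[0] + k0 * innovation, self.x[1] + k1 * innovation]
        # P <- (I - K H) P
        self.P = [[(1 - k0) * p00, (1 - k0) * p01],
                  [p10 - k1 * p00, p11 - k1 * p01]]


def simulate(true_bias=0.05, dt=0.01, steps=5000, seed=0):
    """Robot standing still: the gyro reads only its bias plus noise."""
    rng = random.Random(seed)
    filt = BiasAwareTiltFilter(dt)
    true_angle = 0.0
    for _ in range(steps):
        filt.predict(true_bias + rng.gauss(0.0, 0.01))
        filt.update(true_angle + rng.gauss(0.0, 0.03))
    return filt.x[1]


if __name__ == "__main__":
    print(f"estimated gyro bias: {simulate():.3f} rad/s (simulated value 0.050)")
```

With the robot standing still, the estimate should settle close to the simulated bias of 0.05 rad/s, after which the bias-corrected gyro rate no longer makes the angle drift between measurements.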

The high dimensionality of humanoids makes it computationally expensive to formulate a single filter for the entire state. As an alternative, Xinjilefu et al. [8] proposed decoupling the full state into several independent state vectors and used separate filters to estimate the pelvis state and the joint dynamics.

To account for kinematic modeling errors such as joint backlash and link flexibility, Xinjilefu et al. [9] introduced a method using a Linear Inverted Pendulum Model (LIPM) with an offset that represents the modeling error in the Center of Mass (CoM) position and/or external forces. Bae et al. [10] proposed a CoM kinematics estimator that includes a spring and damper in the LIPM to compensate for modeling errors. To address link flexibility in the humanoid exoskeleton Atalante, Vigne et al. [11] decomposed the full state estimation problem into several independent attitude estimation problems, each corresponding to a given flexibility and a specific IMU, relying only on dependable and easily accessible geometric parameters of the system rather than on the dynamic model.
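For reference, the standard LIPM along one horizontal axis relates the CoM position \(c_x\), the ZMP \(p_x\), the constant CoM height \(z_c\) and gravity \(g\). As an illustrative assumption (not necessarily the exact formulation of [9] or [10]), a constant offset \(\delta_x\) added to the CoM position is, under this model, equivalent to a constant horizontal external force \(f_x\) acting at the CoM of a robot of mass \(m\), which is why a single offset term can absorb either kind of error:

\[
\ddot{c}_x = \frac{g}{z_c}\,(c_x - p_x)
\]

\[
\ddot{c}_x = \frac{g}{z_c}\,(c_x + \delta_x - p_x) = \frac{g}{z_c}\,(c_x - p_x) + \frac{f_x}{m}, \qquad f_x = \frac{m\,g}{z_c}\,\delta_x
\]

In an estimator, \(\delta_x\) would typically be appended to the state and modeled as slowly varying.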

In the remainder of this section, we classify recent work on state estimation into three main categories [12]: proprioceptive state estimation, which primarily involves filtering methods that fuse high-frequency proprioceptive sensor data; multi-sensor fusion filtering, which integrates exteroceptive sensor modalities into the filtering process; and multi-sensor fusion with state smoothing, which employs techniques that leverage the entire history of sensor measurements to refine the estimated states.

Finally, Table 1 lists the open-source state estimation software available from the reviewed literature.

Proprioceptive State Estimation

Proprioceptive sensors provide measurements of the robot’s internal state. They are commonly used to compute leg odometry, which yields a pose estimate that drifts over time. For comprehensive reviews of the evolution of proprioceptive filters for leg odometry, refer to [22] and [23].
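A minimal planar sketch of a single leg-odometry step, assuming that the stance foot does not slip, that forward kinematics already gives the stance-foot position in the base frame, and that the heading comes from another source such as the IMU. The function names and the 2D simplification are hypothetical.

```python
import math


def rotate(yaw, v):
    """Rotate a 2D vector by yaw (rad)."""
    c, s = math.cos(yaw), math.sin(yaw)
    return (c * v[0] - s * v[1], s * v[0] + c * v[1])


def leg_odometry_step(base_xy, yaw_prev, yaw_now, foot_prev_b, foot_now_b):
    """Propagate the planar base position assuming the stance foot stays fixed.

    foot_*_b: stance-foot position in the base frame, from forward kinematics
    yaw_*:    base heading, assumed to be supplied externally (e.g. by an IMU)
    """
    # foot_world = base_world + R(yaw) @ foot_base, and foot_world is constant,
    # so base_now = base_prev + R(yaw_prev) @ foot_prev_b - R(yaw_now) @ foot_now_b
    f_prev = rotate(yaw_prev, foot_prev_b)
    f_now = rotate(yaw_now, foot_now_b)
    return (base_xy[0] + f_prev[0] - f_now[0],
            base_xy[1] + f_prev[1] - f_now[1])


if __name__ == "__main__":
    # The stance foot sweeps 0.2 m backwards in the base frame, so the base moved forward.
    print(leg_odometry_step((0.0, 0.0), 0.0, 0.0, (0.1, -0.1), (-0.1, -0.1)))
```

The example should report a base displacement of 0.2 m along x. Chaining such steps integrates encoder noise, kinematic model errors and any foot slip, which is why the resulting pose drifts and is fused with the IMU or exteroceptive sensors in the works discussed below.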

Base State Estimation

In humanoid robots, the focus is on estimating the position, velocity and orientation of the “base” frame, typically located at the pelvis. Recent state estimation approaches in this field often fuse IMU measurements and leg odometry.

The work by Bloesch [6] was a decisive step in introducing a base state estimator for legged robots using a quaternion-based Extended Kalman Filter (EKF). This method made no assumptions about the robot’s gait, the number of legs or the terrain structure, and it included the absolute positions of the foot contact points and the IMU bias terms in the estimated state. Rotella et al. [7] extended it to humanoid platforms by considering the full foot plate and adding foot orientation to the state vector. Both works showed that, as long as at least one foot remains in contact with the ground, the absolute base velocity, the roll and pitch angles, and the IMU biases are observable. Other formulations of base state estimation using only proprioceptive sensing can be found in [16, 24] and [25].

Centroidal State Estimation

Centroidal states in humanoid robots include the CoM position, linear and angular momentum, and their derivatives. The CoM serves as a vital control variable for stability and robust humanoid locomotion, making accurate estimation of centroidal states crucial in control system design for humanoid robots.

When the full 6-axis contact wrench is not directly available to the estimator, e.g., when the robot’s gauge sensors measure only the contact normal force, some works have used simplified dynamics models such as the LIPM [26].

Piperakis et al. [27] presented an EKF that estimates centroidal variables by fusing joint encoders, the IMU and foot force-sensitive resistors, later also including visual odometry [13]. They formulated the estimator on the nonlinear Zero Moment Point (ZMP) dynamics, which capture the coupling between the dynamics in the frontal and lateral planes. Their results showed better performance than a Kalman filter formulation based on the LIPM.
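To make the contrast with the LIPM concrete: neglecting the rate of change of the centroidal angular momentum and assuming flat ground at height zero (simplifying assumptions for this sketch, not necessarily those of [27]), moment balance about the ZMP gives, for a CoM at \((c_x, c_y, c_z)\),

\[
p_x = c_x - \frac{c_z\,\ddot{c}_x}{\ddot{c}_z + g}, \qquad p_y = c_y - \frac{c_z\,\ddot{c}_y}{\ddot{c}_z + g}
\]

Both axes share the vertical terms \(c_z\) and \(\ddot{c}_z + g\), so they no longer decouple once the CoM height varies. Holding the height constant, \(c_z = z_c\) and \(\ddot{c}_z = 0\), recovers the LIPM:

\[
\ddot{c}_x = \frac{g}{z_c}\,(c_x - p_x)
\]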

Mori et al. [28] proposed a centroidal state estimation framework for a humanoid robot based on real-time inertial parameter identification, using only the robot’s proprioceptive sensors (IMU, foot Force/Torque (F/T) sensors and joint encoders) and a sequential least-squares method. They conducted successful experiments in which the robot’s mass properties were deliberately altered, demonstrating the robustness of their framework to dynamic inertia changes.
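Sequential (recursive) least squares can be sketched on the simplest inertial parameter, the total mass \(m\), identified from the vertical momentum balance \(f_z = m(\ddot{c}_z + g)\), where \(f_z\) is the summed normal force from the foot F/T sensors. This scalar model, the forgetting factor, the noise levels and the assumption that \(\ddot{c}_z\) is measured exactly are illustrative only; [28] identifies a richer set of inertial parameters.

```python
import random


class ScalarRLS:
    """Recursive least squares for y = phi * theta, with exponential forgetting."""

    def __init__(self, theta0=0.0, p0=1e3, forgetting=0.99):
        self.theta = theta0    # parameter estimate
        self.p = p0            # estimate variance (large = uninformed prior)
        self.lam = forgetting  # < 1 lets the estimate follow parameter changes

    def update(self, phi, y):
        gain = self.p * phi / (self.lam + phi * self.p * phi)
        self.theta += gain * (y - phi * self.theta)
        self.p = (self.p - gain * phi * self.p) / self.lam
        return self.theta


def identify_mass(steps=2000, g=9.81, seed=0):
    """Identify total mass from f_z = m * (a_z + g); a payload is added halfway."""
    rng = random.Random(seed)
    rls = ScalarRLS()
    for k in range(steps):
        true_mass = 50.0 if k < steps // 2 else 55.0
        acc_z = rng.uniform(-2.0, 2.0)               # vertical CoM acceleration (m/s^2)
        phi = acc_z + g                              # regressor
        f_z = true_mass * phi + rng.gauss(0.0, 5.0)  # summed foot normal force (N), noisy
        rls.update(phi, f_z)
    return rls.theta


if __name__ == "__main__":
    print(f"identified mass: {identify_mass():.2f} kg")
```

The forgetting factor below 1 is what lets the estimate follow the simulated 5 kg payload change; with a forgetting factor of exactly 1, old data would never be discounted and the estimate would settle between the two masses.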

Using 6-axis F/T sensors on the feet, Rotella et al. [29] exploited the momentum dynamics of the robot to estimate the centroidal quantities. Their nonlinear observability analysis demonstrated that either the biases or the external wrench is observable. In a different approach, Carpentier et al. [30] proposed a frequency analysis of the information sources used to estimate the CoM position, later extended to the CoM acceleration and the derivative of the angular momentum [31]. They introduced a complementary filtering technique that fuses various measurements, including the ZMP position, sensed contact forces and a geometry-based reconstruction of the CoM from joint encoders, according to their reliability in the respective spectral bandwidths.
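The complementary-filtering principle can be sketched for a single CoM axis with two hypothetical sources: a kinematic CoM that is unbiased but noisy, trusted at low frequency, and a CoM acceleration (for example, horizontal contact force divided by mass) that is smooth but biased, trusted at high frequency after double integration. The second-order structure, the crossover \(\omega_c\) and all signals are assumptions for illustration; [30, 31] derive the spectral split from their own analysis of the ZMP, force and kinematic measurements.

```python
import math
import random


def complementary_com(kin_positions, accelerations, dt, omega_c=2.0):
    """Second-order complementary filter for one CoM axis.

    Low frequencies follow the kinematic CoM; high frequencies follow the
    double-integrated acceleration.
    """
    k1, k2 = 2.0 * omega_c, omega_c ** 2  # critically damped crossover near omega_c (rad/s)
    x_hat, v_hat = kin_positions[0], 0.0
    estimates = [x_hat]
    for k in range(1, len(kin_positions)):
        # Predict from t_{k-1} to t_k with the previous acceleration sample.
        v_hat += accelerations[k - 1] * dt
        x_hat += v_hat * dt
        # Correct toward the kinematic CoM at t_k.
        err = kin_positions[k] - x_hat
        v_hat += k2 * err * dt
        x_hat += k1 * err * dt
        estimates.append(x_hat)
    return estimates


def demo(dt=0.005, steps=4000, seed=0):
    rng = random.Random(seed)
    w = 2.0 * math.pi * 0.5                                      # 0.5 Hz lateral sway
    t = [k * dt for k in range(steps)]
    truth = [0.05 * math.sin(w * tk) for tk in t]
    kin = [x + rng.gauss(0.0, 0.01) for x in truth]              # noisy, unbiased
    acc = [-0.05 * w * w * math.sin(w * tk) + 0.01 for tk in t]  # smooth, biased
    est = complementary_com(kin, acc, dt)

    def rms(series):  # error over the second half, after the start-up transient
        pairs = list(zip(series, truth))[steps // 2:]
        return math.sqrt(sum((a - b) ** 2 for a, b in pairs) / len(pairs))

    return rms(kin), rms(est)


if __name__ == "__main__":
    raw, fused = demo()
    print(f"RMS error, kinematic CoM only: {raw:.4f} m; complementary filter: {fused:.4f} m")
```

With these numbers the fused estimate should show a clearly lower RMS error than the raw kinematic CoM. The remaining error is dominated by the acceleration bias \(b\), which this filter structure leaves as a constant offset of roughly \(b/\omega_c^2\).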

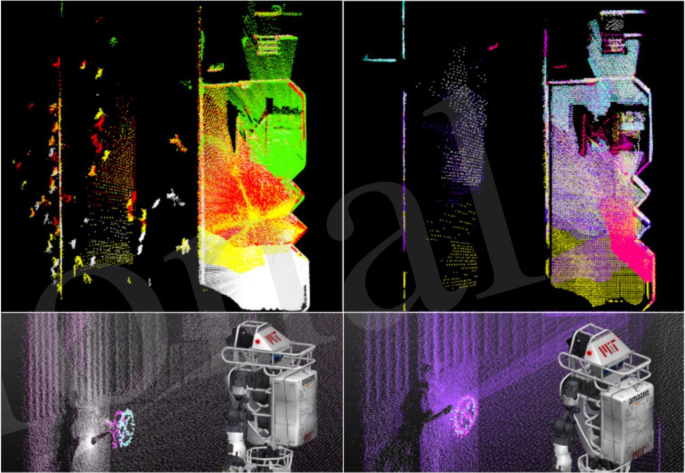

Fig. 2 State estimation with multi-sensor filtering, integrating LiDAR for drift correction and localization. Top row: filtering people from the raw point cloud. Bottom row: state estimation and localization with iterative closest point correction on the filtered point cloud. From [12]

Contact Detection and Estimation

Foot contact detection plays a crucial role in locomotion control, gait planning and proprioceptive state estimation in humanoid robots. Recent approaches fall into two main groups: those that directly use measured ground reaction wrenches, and those that integrate kinematics and dynamics to infer the contact status by estimating the ground reaction forces. Fallon et al. [2] employed a Schmitt trigger with a 3-axis foot F/T sensor to classify contact forces and used a simple state machine to determine the most reliable foot for kinematic measurements. Piperakis et al. [13] adopted a similar approach using pressure sensors on the foot.
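A Schmitt trigger is thresholding with hysteresis: contact is declared only above a high threshold and released only below a lower one, so a normal force hovering near a single threshold does not make the contact flag chatter. A minimal sketch; the class name and the 150 N and 50 N thresholds are hypothetical and would depend on the robot’s weight and sensors.

```python
class SchmittContactDetector:
    """Binary foot-contact flag from the measured normal force, with hysteresis."""

    def __init__(self, on_threshold=150.0, off_threshold=50.0):
        if off_threshold >= on_threshold:
            raise ValueError("off_threshold must be below on_threshold")
        self.on_threshold = on_threshold    # N, force needed to declare contact
        self.off_threshold = off_threshold  # N, force below which contact is released
        self.in_contact = False

    def update(self, normal_force):
        if not self.in_contact and normal_force > self.on_threshold:
            self.in_contact = True
        elif self.in_contact and normal_force < self.off_threshold:
            self.in_contact = False
        return self.in_contact


if __name__ == "__main__":
    detector = SchmittContactDetector()
    forces = [0.0, 100.0, 160.0, 120.0, 60.0, 40.0, 100.0]
    print([detector.update(f) for f in forces])
```

For this force sequence, the flag switches on at 160 N, stays on through 120 N and 60 N, and switches off only at 40 N; the final 100 N sample does not re-trigger contact because it is below the on-threshold.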

Rotella et al. [32] presented an unsupervised method for estimating contact states using fuzzy clustering on proprioceptive sensor data alone (foot F/T and IMU sensing), outperforming traditional approaches based on the measured normal force. By adding joint encoders to the proprioceptive sensing, Piperakis et al. [20] proposed an unsupervised learning framework for gait phase estimation that proved effective for walking gaits on uneven, rough terrain. They also developed a deep learning framework that uses F/T and IMU sensing in each leg to determine contact state probabilities [33]; its generalizability and accuracy were demonstrated on different robotic platforms. Furthermore, Maravgakis et al. [34] introduced a probabilistic contact detection model using only IMU sensors mounted on the end effectors. Their approach estimated the contact state of the feet without requiring training data or ground-truth labels.

Another active research field in humanoid robots is monitoring and identifying contact points on the robot’s body. Common approaches rely on proprioceptive sensing for contact localization and identification. Flacco et al. [35] proposed using an internal residual of the external momentum to isolate and identify single contacts, as well as to detect additional contacts at known locations. Manuelli et al. [36] introduced a contact particle filter for detecting and localizing external contacts using only proprioceptive sensing, such as 6-axis F/T sensors, which efficiently handles up to 3 contacts. Vorndamme et al. [37] developed a real-time method for multi-contact detection using 6-axis F/T sensors distributed along the kinematic chain, capable of handling up to 5 contacts. Vezzani et al. [38] proposed a memory unscented particle filter algorithm for real-time 6-degree-of-freedom (DoF) tactile localization using contact point measurements from tactile sensors.
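The momentum-residual idea can be illustrated on a single joint. With generalized momentum \(p = M\dot{q}\), the residual \(r = K_I\big(p(t) - p(0) - \int_0^t (\tau - g(q) + r)\,ds\big)\) satisfies \(\dot{r} = K_I(\tau_{ext} - r)\), so it is a first-order filtered estimate of the external torque built from the commanded torque, joint velocity and the model, without acceleration or force measurements. The pendulum, controller, gain and function name are assumptions for this sketch; with a configuration-dependent inertia, the integrand also contains the term \(C^\top(q,\dot{q})\,\dot{q}\).

```python
import math


def momentum_residual_demo(tau_ext=2.0, k_i=50.0, dt=0.001, steps=3000):
    """1-DoF pendulum with an unknown external torque applied after 1 s.

    Returns (residual just before the contact, residual at the end).
    """
    mass, length, grav = 1.0, 0.5, 9.81
    inertia = mass * length ** 2          # constant, so there is no Coriolis term
    q, qd = 0.2, 0.0                      # joint angle (rad) and velocity (rad/s)
    p0 = inertia * qd                     # initial generalized momentum
    integral, r, r_before = 0.0, 0.0, 0.0
    for k in range(steps):
        ext = tau_ext if k * dt >= 1.0 else 0.0       # hidden from the observer
        gravity = mass * grav * length * math.sin(q)  # gravity torque g(q)
        tau = gravity - 5.0 * qd - 20.0 * (q - 0.2)   # hypothetical PD + gravity compensation
        # Observer: integrate the model-predicted momentum rate plus the current residual.
        integral += (tau - gravity + r) * dt
        # Plant: semi-implicit Euler on inertia * qdd = tau - g(q) + tau_ext.
        qd += (tau - gravity + ext) / inertia * dt
        q += qd * dt
        # Residual r = K_I * (p - p0 - integral), which obeys r_dot = K_I * (tau_ext - r).
        r = k_i * (inertia * qd - p0 - integral)
        if k * dt < 1.0:
            r_before = r
    return r_before, r


if __name__ == "__main__":
    before, after = momentum_residual_demo()
    print(f"residual before contact: {before:.4f} N*m, after: {after:.4f} N*m")
```

The residual should stay near zero before the simulated contact and converge to the 2 N·m external torque afterwards, with a time constant of about \(1/K_I\).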

Multi-Sensor Fusion Filtering

One drawback of base state estimation using proprioceptive sensing is that drift accumulates over time due to sensor noise. This drift is unacceptable when controlling highly dynamic motions, so it is typically compensated for by integrating exteroceptive sensor modalities such as cameras, depth cameras and LiDAR.

Fallon et al. [2] proposed a drift-free base pose estimation method that incorporates LiDAR sensing into a high-rate EKF estimator, using a Gaussian particle filter for laser scan localization. Although their framework eliminated the drift, it required a pre-generated map as input. Piperakis et al. [39] introduced a robust Gaussian EKF that detects and handles outliers in visual/LiDAR measurements for humanoid walking in dynamic environments. To address state estimation challenges in real-world scenarios, Camurri et al. [12] presented Pronto, a modular open-source state estimation framework for legged robots (Fig. 2). It combines proprioceptive and exteroceptive sensing, such as stereo vision and LiDAR, in a loosely coupled EKF approach.
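The loosely coupled pattern can be reduced to one dimension: a high-rate prediction driven by leg-odometry velocity with a small bias, corrected by occasional absolute position fixes that stand in for LiDAR localization results. The function name, rates and noise levels are assumptions, and a full estimator such as the one in [12] uses a 3D EKF with a much richer state.

```python
import random


def fuse_odometry_with_fixes(dt=0.002, steps=10000, fix_every=250, seed=0):
    """1D loosely coupled filter: 500 Hz odometry prediction, 2 Hz absolute fixes.

    Returns (dead-reckoning error, fused error) in metres at the final step.
    """
    rng = random.Random(seed)
    q_step, r_fix = 1e-6, 0.02 ** 2  # process variance per step, fix variance (m^2)
    true_x = dead_reckoned = x_hat = 0.0
    p = 0.0                          # start from a known initial position
    for k in range(1, steps + 1):
        v_true = 0.5                                   # walking at 0.5 m/s
        true_x += v_true * dt
        v_odom = v_true + 0.02 + rng.gauss(0.0, 0.05)  # biased, noisy leg odometry
        dead_reckoned += v_odom * dt
        # Prediction with odometry: the uncertainty grows at every step.
        x_hat += v_odom * dt
        p += q_step
        if k % fix_every == 0:
            # Correction with an absolute fix (stand-in for a LiDAR localization result).
            z = true_x + rng.gauss(0.0, 0.02)
            gain = p / (p + r_fix)
            x_hat += gain * (z - x_hat)
            p *= 1.0 - gain
    return abs(dead_reckoned - true_x), abs(x_hat - true_x)


if __name__ == "__main__":
    drift, fused = fuse_odometry_with_fixes()
    print(f"dead reckoning error: {drift:.3f} m, fused error: {fused:.3f} m")
```

Over the simulated 20 s walk, dead reckoning drifts by roughly 0.4 m (the 0.02 m/s velocity bias integrated over time), while the fused estimate should stay within a few centimetres of the true position.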

Multi-Sensor Fusion with State Smoothing

So far, we have explored Bayesian filtering methods for sensor fusion and state estimation. However, as the number of states and measurements increases, their computational complexity becomes a limitation. Recent advances in computing power and nonlinear solvers have popularized nonlinear iterative maximum a posteriori (MAP) optimization techniques, such as factor graph optimization.
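Under Gaussian noise, MAP estimation over a factor graph becomes a weighted least-squares problem. The sketch below assumes four 1-D poses, a prior on the first pose, biased odometry factors between consecutive poses, and one strong absolute measurement on the last pose; all values, weights and helper names are hypothetical. Because every factor is linear here, a single Gauss-Newton step (solving the normal equations) reaches the optimum. Frameworks such as those cited handle nonlinear 3D poses and iterate.

```python
def solve_linear(H, g):
    """Solve H x = g by Gaussian elimination with partial pivoting."""
    n = len(g)
    A = [row[:] + [g[i]] for i, row in enumerate(H)]  # augmented matrix
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(A[r][c]))
        A[c], A[piv] = A[piv], A[c]
        for r in range(c + 1, n):
            f = A[r][c] / A[c][c]
            for j in range(c, n + 1):
                A[r][j] -= f * A[c][j]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):                   # back substitution
        s = sum(A[r][j] * x[j] for j in range(r + 1, n))
        x[r] = (A[r][n] - s) / A[r][r]
    return x


def map_estimate(n, factors):
    """MAP over 1-D poses; each factor is (coeffs, measurement, weight).

    coeffs maps pose index -> coefficient, so a factor encodes the residual
    sum(c * x[i]) - measurement with information weight `weight`.
    """
    H = [[0.0] * n for _ in range(n)]                # information matrix
    g = [0.0] * n                                    # information vector
    for coeffs, z, w in factors:
        for i, ci in coeffs.items():
            g[i] += w * ci * z
            for j, cj in coeffs.items():
                H[i][j] += w * ci * cj
    return solve_linear(H, g)


factors = [({0: 1.0}, 0.0, 100.0)]                                  # prior on x0
factors += [({i: -1.0, i + 1: 1.0}, 1.1, 1.0) for i in range(3)]   # odometry
factors += [({3: 1.0}, 3.0, 100.0)]                                 # absolute fix on x3
x = map_estimate(4, factors)
```

Integrating the odometry alone would place the last pose at 3.3; the joint optimization spreads the 0.3 discrepancy over the weakly weighted odometry factors, so all four poses end up close to 0, 1, 2, 3.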

To address the issue of visual tracking loss in visual factor graphs, Hartley et al. [ 40 ] introduced a factor graph framework that integrated forward kinematic and pre-integrated contact factors. The work was extended by incorporating the influence of contact switches and associated uncertainties [ 41 ]. Both works showed that the fusion of contact information with IMU and vision data provides a reliable odometry system for legged robots.

Solà et al. [ 18 ] presented an open-source modular estimation framework for mobile robots based on factor graphs. Their approach offers systematic methods to handle the complexities of multi-sensor systems with asynchronous data sources running at different frequencies. This framework was evaluated on state estimation for legged robots and on landmark-based visual-inertial SLAM for humanoids by Fourmy et al. [ 26 ].

Environment Understanding

Environment understanding is a critical area of research for humanoid robots, enabling them to effectively navigate through and interact with complex and dynamic environments. This field can be broadly classified into two key categories: 1. localization, navigation and planning for the mobile base, and 2. object manipulation and grasping.

Perception in Localization, Navigation and Planning

Localization focuses on precisely and continuously estimating the robot’s position and orientation relative to its environment. Planning and navigation involve generating optimal paths and trajectories for the robot to reach its desired destination while avoiding obstacles and considering task-specific constraints.

Localization, Mapping and SLAM

Localization and SLAM (simultaneous localization and mapping) rely primarily on exteroceptive sensors such as cameras and laser scanners, but often additionally use encoders and IMUs to improve estimation accuracy.

Localization

Indoor environments are usually considered structured, characterized by the presence of well-defined, repeatable and often geometrically consistent objects. Landmarks can be uniquely identified by encoded vectors obtained from visual sensors such as depth or RGB cameras. This allows the robot to build a visual map of the environment and then localize by comparing newly observed landmarks against a database, i.e., via object or landmark identification. In recent years, handcrafted image features such as SIFT and SURF, and feature dictionaries such as the Bag-of-Words (BoW) model, have been superseded in landmark representation by features learned from large example sets, usually by variants of artificial neural networks such as convolutional neural networks (CNNs). CNNs have also outperformed classifiers such as support vector machines (SVMs) at inference [ 42 , 43 ]. However, CNN architectures are evolving rapidly, and several competing designs exist. Ovalle-Magallanes et al. [ 44 ] performed a comparative study of four such networks and successfully localized in a visual map with them.
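The database lookup described above can be sketched as nearest-neighbor matching of descriptor vectors. In this sketch the 4-D descriptors, landmark names, poses and the similarity threshold are all hypothetical placeholders; in practice the descriptors would be produced by a CNN or a BoW model and would have hundreds of dimensions.

```python
import math


def cosine(a, b):
    """Cosine similarity between two descriptor vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb)


def localize(query, database, min_similarity=0.8):
    """Return the stored pose of the best-matching landmark, or None."""
    best_pose, best_sim = None, min_similarity
    for descriptor, pose in database:
        s = cosine(query, descriptor)
        if s >= best_sim:
            best_pose, best_sim = pose, s
    return best_pose


# (descriptor, (x [m], y [m], yaw [rad])) pairs; all values are hypothetical
database = [
    ([0.9, 0.1, 0.0, 0.2], (0.0, 0.0, 0.0)),   # "door" landmark
    ([0.1, 0.8, 0.3, 0.0], (4.0, 1.5, 1.57)),  # "shelf" landmark
    ([0.0, 0.2, 0.9, 0.4], (2.0, 6.0, 3.14)),  # "window" landmark
]
pose = localize([0.12, 0.75, 0.35, 0.05], database)
```

Here the query matches the "shelf" entry, so the robot adopts that stored pose as its location estimate. The threshold makes the system return `None` rather than a wrong pose when nothing in the map looks similar enough.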

The RoboCup Soccer League is popular in humanoid research because of the visual identification and localization challenges it presents. Examples of real-time, CNN-based ball detection approaches using RGB cameras developed specifically for RoboCup include [ 45 , 46 ] and [ 47 ]. Cruz et al. [ 48 ] additionally estimated player poses, goal locations and other key pitch features using intensity images alone. Because of the limited on-board computational power of these humanoids, others have used additional fast, low-power mobile GPU boards such as the NVIDIA Jetson to aid inference [ 47 , 49 ].

Unstructured and semi-structured environments are encountered outdoors and in hazardous or disaster-rescue scenarios. They lack reliably trackable features, have unpredictable lighting conditions, and make gathering training data difficult. Thus, instead of relying on features, researchers have focused on raw point clouds or on combining different sensor modalities to navigate such environments. Starr et al. [ 50 ] presented a sensor fusion approach that combined long-wavelength infrared stereo vision and a spinning LiDAR for accurate rangefinding in smoke-obscured environments. Nobili et al. [ 51 ] successfully localized robots equipped with a limited field-of-view LiDAR in a semi-structured environment. They proposed a novel strategy for tuning outlier filtering based on point cloud overlap, which achieved good localization results in the DARPA Robotics Challenge Finals. Raghavan et al. [ 52 ] presented simultaneous odometry and mapping that fused LiDAR with kinematic-inertial data from an IMU, joint encoders, and foot F/T sensors while navigating a disaster environment.

SLAM extends localization with map construction and loop closure, in which the robot must re-identify a place it visited in the past, match it to its current surroundings, and adjust its pose history and recorded landmark locations accordingly. A humanoid robot intended to share human workspaces must cope with moving objects, both fast and slow, that can disrupt its mapping and localization. Recent work on SLAM has therefore focused on handling dynamic obstacles in visual scenes. While sensor fusion remains the most popular approach [ 53 , 54 ], purely visual approaches have also been proposed, such as [ 55 ], which introduced a dense RGB-D SLAM solution that used optical flow residuals to achieve accurate and efficient dynamic/static segmentation for camera tracking and background reconstruction. Zhang et al. [ 56 ] took a more direct approach, employing deep-learning-based human detection and graph-based segmentation to separate moving humans from the static environment. They further presented a benchmark dedicated to SLAM in dynamic environments [ 57 ], which includes RGB-D data acquired from an on-board camera on the HRP-4 humanoid robot, along with other sensor data. Adapting publicly available SLAM solutions and tailoring them for humanoid use is not uncommon. Sewtz et al. [ 58 ] adapted ORB-SLAM [ 59 ] to a multi-camera setup on DLR’s Rollin’ Justin system, while Ginn et al. [ 60 ] adapted it to iGus, a midsize humanoid platform, keeping computational demands low.
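The core idea of flow-residual segmentation can be sketched in a few lines. This is a simplified illustration, not the dense RGB-D method of [ 55 ]: it assumes the flow that a static scene would produce under the estimated camera motion (`predicted_flow`) is already available, and it labels a pixel dynamic when the observed flow deviates from that prediction by more than a hypothetical threshold.

```python
import math


def segment_dynamic(observed_flow, predicted_flow, threshold=1.0):
    """Label each pixel True (dynamic) if its flow residual exceeds threshold.

    observed_flow / predicted_flow: 2-D lists of (u, v) pixel displacements;
    predicted_flow is what a static scene would produce under the estimated
    camera motion (a hypothetical input here).
    """
    mask = []
    for obs_row, pred_row in zip(observed_flow, predicted_flow):
        mask.append([
            math.hypot(ou - pu, ov - pv) > threshold   # residual magnitude [px]
            for (ou, ov), (pu, pv) in zip(obs_row, pred_row)
        ])
    return mask


# Camera panning predicts a uniform (2, 0) px flow; a walking person adds
# vertical motion in the middle column.
pred = [[(2.0, 0.0)] * 3 for _ in range(2)]
obs = [[(2.1, 0.0), (2.0, 3.0), (1.9, 0.1)],
       [(2.0, 0.0), (2.0, 2.8), (2.0, 0.0)]]
mask = segment_dynamic(obs, pred)
```

Only pixels labeled static would then be used for camera tracking and background reconstruction. In a full system the ego-motion estimate and the segmentation are refined jointly, since moving pixels also corrupt the motion estimate.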

Navigation and Planning

Navigation and planning algorithms use perception information to generate a safe, optimal and reactive path, considering obstacles, terrain, and other constraints.

Local Planning

Local planning, or reactive navigation, is generally concerned with real-time local decision-making and control, allowing the robot to respond actively to perceived changes in the environment and adjust its movements accordingly. Especially in highly controlled applications, rule-based, perception-driven navigation remains popular and yields state-of-the-art performance in terms of both time demands and task accomplishment. Bista et al. [ 61 ] achieved real-time indoor navigation by representing the environment as a set of key RGB images and deriving a control law from line segments and feature points shared by the current image and nearby key images. Regier et al. [ 62 ] determined appropriate actions from a predefined set of mappings between object classes and actions, using a CNN to classify objects in monocular RGB images. Ferro et al. [ 63 ] integrated information from a monocular camera, joint encoders, and an IMU into a collision-free visual servo control scheme. Juang et al. [ 64 ] developed a line follower that inferred forward, lateral and angular velocity commands from monocular RGB images using path curvature estimation and PID control. Magassouba et al. [ 65 ] introduced an aural servo framework based on auditory perception, which links robot motions directly to low-level auditory features through a feedback loop.
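A line follower of the kind described above can be sketched as a PID loop on the image-derived lateral offset plus a curvature-dependent speed limit. This is a hypothetical illustration, not the controller of [ 64 ]: it omits the lateral velocity command, assumes offset and curvature are already extracted from the image, and uses an assumed unicycle model and gains for the closed-loop check.

```python
import math


class PID:
    """Textbook PID controller with a finite-difference derivative term."""

    def __init__(self, kp, ki, kd, dt):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral = 0.0
        self.prev_error = None

    def update(self, error):
        self.integral += error * self.dt
        deriv = 0.0 if self.prev_error is None else (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * deriv


def velocity_command(offset, curvature, pid, v_max=0.3, k_curv=2.0):
    """Map line offset [m] and path curvature [1/m] to (forward v, angular w)."""
    v = v_max / (1.0 + k_curv * abs(curvature))  # slow down in tight bends
    w = pid.update(-offset)                       # steer back toward the line
    return v, w


def simulate_line_following(y0=0.2, dt=0.01, steps=3000):
    """Closed loop on a hypothetical unicycle: y = lateral offset [m],
    theta = heading error [rad] relative to a straight line."""
    pid = PID(kp=4.0, ki=0.0, kd=3.0, dt=dt)
    y, theta = y0, 0.0
    for _ in range(steps):
        v, w = velocity_command(y, curvature=0.0, pid=pid)
        y += v * math.sin(theta) * dt
        theta += w * dt
    return y


final_offset = simulate_line_following()
```

With these gains the linearized offset dynamics are a damped second-order system, so a 0.2 m initial offset decays to near zero within the simulated 30 s. The integral gain is left at zero here because a straight line produces no steady-state bias in this model.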

A diverse array of classifiers has also been used to learn navigation schemes from perception information; their generalization capability allows adaptation to unforeseen obstacles and events in the environment. Abiyev et al. [ 66 ] presented a vision-based path-finding algorithm that used an SVM to segment captured images into free and occupied areas. Lobos-Tsunekawa et al. [ 67 ] and Silva et al. [ 68 ] proposed deep-learning-based visual (RGB) navigation systems for humanoid robots that achieved real-time performance. The former used a reinforcement learning (RL) system with an actor-critic architecture, while the latter used a decision tree of deep neural networks deployed on a soccer-playing robot.

Global Planning

Global planning algorithms take long-term objectives into account and optimize movements to minimize costs, maximize efficiency, or achieve a specific outcome, based on a perceived model of the environment.
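As a concrete instance of cost-minimizing planning over a perceived environment model, the sketch below runs A* on a 4-connected occupancy grid. The grid itself is a hypothetical stand-in for a map built from the robot's sensors; A* is used here only as a familiar example of a global planner, not as the method of any cited work.

```python
import heapq


def astar(grid, start, goal):
    """A* on a 4-connected occupancy grid (0 = free, 1 = occupied)."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):  # Manhattan distance: admissible for unit-cost 4-connectivity
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]           # entries: (f = g + h, g, cell)
    came_from = {start: None}
    cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:                         # reconstruct path back to start
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > cost[cell]:
            continue                             # stale heap entry
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < cost.get(nxt, float("inf")):
                    cost[nxt] = ng
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (ng + h(nxt), ng, nxt))
    return None                                  # goal unreachable


grid = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
path = astar(grid, (0, 0), (2, 0))
```

The wall in the middle row forces the planner around its open end, giving the path (0,0) → (0,3) → (2,3) → (2,0). Humanoid planners typically replace the unit step cost with terrain- and stability-aware costs.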

Footstep Planning is a crucial part of humanoid locomotion and has attracted substantial research interest in its own right. Recent works exhibit two primary perception-related trends. The first gives humanoids the ability to rapidly perceive changes in the environment and react through fast re-planning. The second seeks to segment and/or classify uneven terrain to find stable 6-DoF footholds for highly versatile navigation.

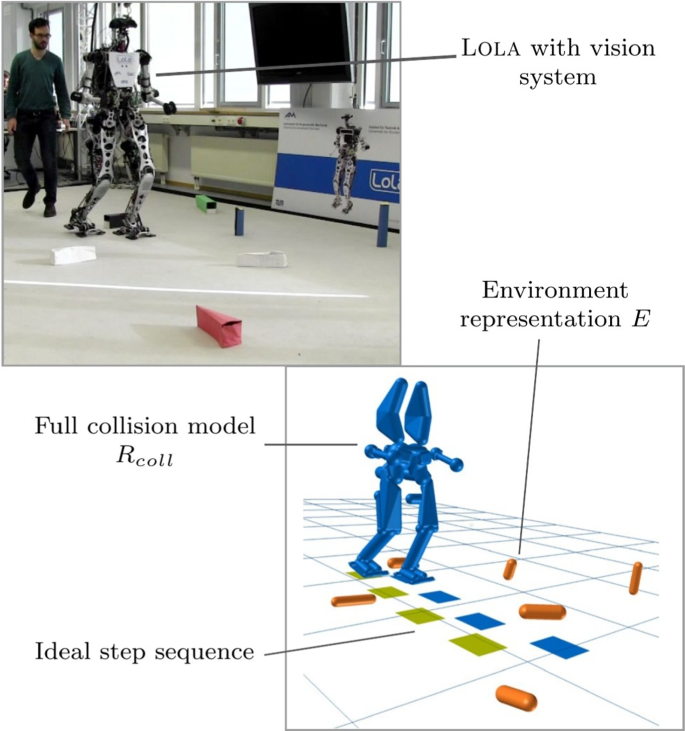

Footstep planning on the humanoid Lola from [ 69 ]. Top left: the robot’s vision system and a human causing a disturbance. Bottom right: the collision model with geometric obstacle approximations.

Tanguy et al. [ 54 ] proposed a model predictive control (MPC) scheme that fused visual SLAM and proprioceptive F/T sensors for accurate state estimation. This enabled rapid reaction to external disturbances through adaptive stepping, leading to balance recovery and improved localization accuracy. Hildebrandt et al. [ 69 ] used the point cloud from an RGB-D camera to model obstacles as swept-sphere volumes (SSVs) and step-able surfaces as convex polygons for real-time reactive footstep planning on the Lola humanoid robot. Their system could handle rough terrain as well as external disturbances such as pushes (see Fig. 3). Others have also used geometric primitives to aid footstep planning, such as surface patches for foothold representation [ 70 , 71 ], environment segmentation into step-able regions such as 2D plane segments embedded in 3D space [ 72 , 73 ], or polygonal ground projections of obstacles [ 74 ]. Suryamurthy et al. [ 75 ] used a CNN on RGB images to assign pixel-wise terrain labels and rugosity measures for footstep planning on a CENTAURO robot.
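Representing step-able surfaces as convex polygons makes foothold validation cheap. The sketch below is a hypothetical illustration, not the planner of [ 69 ]: it assumes an axis-aligned rectangular foot (no yaw) and a surface polygon already projected to 2D in counter-clockwise order. Because both the foot and the surface are convex, checking the four foot corners is sufficient.

```python
def inside_convex(point, polygon):
    """True if point lies inside or on a convex polygon given counter-clockwise."""
    px, py = point
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Negative cross product: point is to the right of this CCW edge
        if (x2 - x1) * (py - y1) - (y2 - y1) * (px - x1) < 0:
            return False
    return True


def foothold_is_valid(center, half_length, half_width, surface, margin=0.0):
    """Check all four corners of an axis-aligned foot rectangle (hypothetical
    foot model) against a step-able convex surface polygon."""
    cx, cy = center
    hl, hw = half_length + margin, half_width + margin
    corners = [(cx + dx, cy + dy) for dx in (-hl, hl) for dy in (-hw, hw)]
    return all(inside_convex(c, surface) for c in corners)


# Hypothetical 1.0 m x 0.6 m step surface, vertices in CCW order [m]
surface = [(0.0, 0.0), (1.0, 0.0), (1.0, 0.6), (0.0, 0.6)]
ok = foothold_is_valid((0.5, 0.3), 0.12, 0.05, surface)
too_close_to_edge = foothold_is_valid((0.95, 0.3), 0.12, 0.05, surface)
```

The `margin` parameter inflates the foot to keep a safety distance from surface edges, a common way to absorb perception and tracking errors.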

Whole Body Planning in humanoid robots involves the coordinated planning and control of the robot’s entire body to achieve an objective. Coverage planning is a subset of whole body planning in which a minimal sequence of whole-body robot poses is estimated to completely explore a 3D space with robot-mounted visual sensors [ 76 , 77 ]. Target finding is a special case of coverage planning in which exploration stops once the target is found [ 78 , 79 ]. These concepts are primarily related to view planning in computer vision. In other applications, Wang et al. [ 80 ] presented a method for trajectory planning and formation building of a robot fleet using local positions estimated from onboard optical sensors, and Liu et al. [ 81 ] presented a temporal planning approach for choreographing dancing robots in response to music sensed through microphones.
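Choosing a minimal set of viewing poses that covers a space is an instance of set cover. The sketch below uses the standard greedy heuristic, which is guaranteed to be within a logarithmic factor of the optimal number of views; it is not the method of the cited works. The candidate views and their visibility sets are hypothetical, whereas a real planner would compute them by ray casting from reachable whole-body poses.

```python
def greedy_view_selection(candidate_views, cells_to_cover):
    """Greedy set cover: pick views until every target cell is observed.

    candidate_views: dict view_name -> set of cells visible from that pose
    (hypothetical visibility sets; a real planner would ray-cast them).
    """
    uncovered = set(cells_to_cover)
    chosen = []
    while uncovered:
        # Pick the view that reveals the most still-unseen cells
        best = max(candidate_views, key=lambda v: len(candidate_views[v] & uncovered))
        gain = candidate_views[best] & uncovered
        if not gain:
            raise ValueError("remaining cells are not visible from any view")
        chosen.append(best)
        uncovered -= gain
    return chosen


views = {"A": {1, 2, 3}, "B": {3, 4}, "C": {4, 5, 6}, "D": {1, 6}}
plan = greedy_view_selection(views, range(1, 7))
```

For target finding, the same loop would simply terminate as soon as the cell containing the target becomes observed.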

Perception in Grasping and Manipulation

Manipulation and grasping in humanoid robots involve the ability to interact with objects of varying shapes, sizes, and weights, and to perform dexterous manipulation tasks using sensor-equipped end-effectors that provide visual or tactile feedback for grip adjustment.

Grasp Planning

Grasp planning is a lower-level task focused specifically on determining the optimal sequence of manipulator poses to grasp an object securely and effectively. Visual information is used both to find grasping locations and as feedback for minimizing the difference between the target grasp pose and the current end-effector pose.
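Using vision as feedback to close the gap between the target grasp pose and the current end-effector pose can be sketched as a proportional position-based servo loop. This minimal sketch assumes that a hypothetical vision system reports both positions every cycle, that the arm tracks Cartesian velocity commands perfectly, and that orientation is ignored; the gain, cycle time and tolerance are illustrative values.

```python
def servo_to_grasp(p_ee, p_target, gain=1.0, dt=0.05, steps=200, tol=1e-3):
    """Position-based visual servoing sketch: each cycle the (hypothetical)
    vision system reports the end-effector and target positions, and the arm
    is commanded with a Cartesian velocity proportional to the error."""
    p = list(p_ee)
    for k in range(steps):
        error = [t - c for t, c in zip(p_target, p)]
        if max(abs(e) for e in error) < tol:
            return p, k                                 # converged after k cycles
        velocity = [gain * e for e in error]            # v = lambda * e
        p = [c + v * dt for c, v in zip(p, velocity)]   # ideal velocity tracking
    return p, steps


target = [0.5, -0.1, 0.35]                              # grasp position [m]
final_position, cycles = servo_to_grasp([0.3, 0.0, 0.2], target)
```

Each cycle shrinks the error by the factor (1 − gain·dt), so convergence is exponential. Because the error is re-measured every cycle, calibration errors between the camera and the arm are partly compensated, which is the main appeal of closing the loop through vision.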

Schmidt et al. [ 82 ] used a CNN trained on object depth images and pre-generated analytic grasp plans to synthesize grasp solutions. The method generated full end-effector poses that were not limited to the camera view direction. Vezzani et al. [ 83 ] modeled the shape and volume of the target object, captured by stereo vision, in real time using superquadric functions, which allowed grasping even when parts of the object were occluded. Vicente et al. [ 84 ] and Nguyen [ 85 ] focused on achieving accurate hand-eye coordination in humanoids equipped with stereo vision. The former compensated for kinematic calibration errors between the robot’s internal hand model and captured images using particle-based optimization, while the latter trained a deep neural network predictor to estimate the robot arm’s joint configuration. In [ 86 ], a combination of CNNs and dense conditional random fields (CRFs) was proposed to infer action possibilities on an object (affordances) from RGB images.
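The superquadric representation mentioned above rests on the standard inside-outside function, sketched here for an origin-centered, axis-aligned superquadric with semi-axes a1, a2, a3 and shape exponents e1, e2. The object pose that a real model would also estimate is omitted for brevity, and the example dimensions are hypothetical. Fitting adjusts these parameters so that observed surface points lie near F = 1.

```python
def superquadric_F(point, a, eps):
    """Inside-outside function of an origin-centered, axis-aligned superquadric.

    a = (a1, a2, a3) semi-axes, eps = (e1, e2) shape exponents.
    F < 1: inside, F == 1: on the surface, F > 1: outside.
    """
    # Absolute values avoid complex results from fractional powers of negatives
    x, y, z = (abs(c) for c in point)
    a1, a2, a3 = a
    e1, e2 = eps
    xy = (x / a1) ** (2.0 / e2) + (y / a2) ** (2.0 / e2)
    return xy ** (e2 / e1) + (z / a3) ** (2.0 / e1)


size = (0.05, 0.03, 0.1)   # hypothetical semi-axes of a small box-like object [m]
on_surface = superquadric_F((0.05, 0.0, 0.0), size, (1.0, 1.0))     # ellipsoid
near_corner = (0.04, 0.025, 0.09)
box_value = superquadric_F(near_corner, size, (0.1, 0.1))           # box-like
ellipsoid_value = superquadric_F(near_corner, size, (1.0, 1.0))
```

With e1 = e2 = 1 the function reduces to the ellipsoid equation, while small exponents produce box-like shapes. The same near-corner point is therefore inside the box-like superquadric but outside the ellipsoid, which illustrates how two exponents let one compact model span many everyday object shapes.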

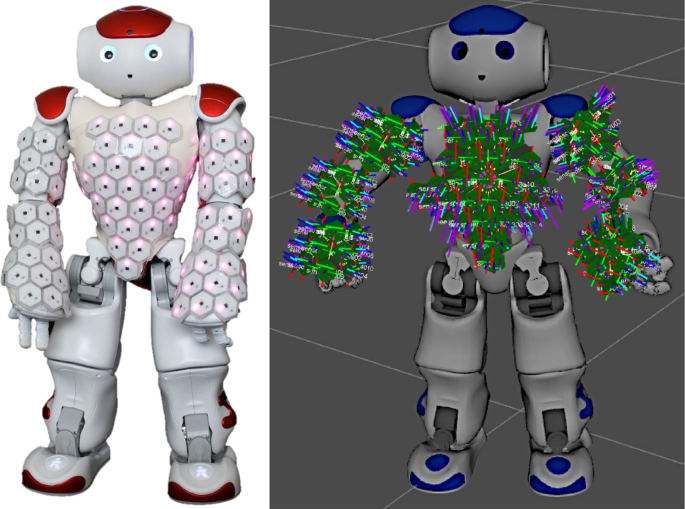

Left: A Nao humanoid equipped with artificial skin cells on the chest, hand, forearm, and upper arm. Right: Visualization of the skin cell coordinate frames on the Nao. Figure taken from [ 87 ]