What Is Primary Data and Secondary Data in Research Methodology

When it comes to research methodology, primary data and secondary data are essential components of the process. What is primary data and secondary data in research methodology?

Primary data is information collected through direct observation or experimentation, while secondary data is existing knowledge obtained from sources such as books, reports, and surveys. Understanding how to collect both primary and secondary data can be a challenge for R&D teams looking for insights into their projects.

In this blog post, we will explore what exactly these two types of research entail, how they should be collected in order to get the best results possible, how to analyze your findings, and how to apply those results to your project.

By understanding more about primary and secondary data in research methodology, you can ensure that any decisions made regarding an innovation project are well-informed ones!


What is Primary Data?


Primary data is information that has been collected directly from its original source. It is original and unique to the research project or study being conducted, as opposed to secondary data which has already been gathered and published by someone else.

Primary data can be collected through a variety of methods such as surveys, interviews, focus groups, observations, experiments, and more.

This type of data can be qualitative or quantitative in nature and provides insight into a particular issue or problem being studied. It is often used in research projects to gain an understanding of people’s opinions, behaviors, attitudes, and preferences on various topics.

The types of primary data depend on the method used for collecting it. Common types include survey responses (quantitative for closed questions, qualitative for open-ended ones), interview transcripts (qualitative), observation notes (usually qualitative), and experiment results (usually quantitative).

Other examples include photographs taken during fieldwork trips or video recordings made during interviews with participants in a study.

Using primary data offers several advantages over relying solely on secondary sources when conducting research.

First off, it allows researchers to collect their own unique set of information that may not have been available before. This gives them greater control over what they are studying as well as how they interpret their findings.

Additionally, primary sources can provide more accurate results for the question at hand, since the researcher controls how the data is collected and it has not passed through someone else's interpretation.

Lastly, using primary sources also helps ensure that any potential ethical issues related to collecting personal information are addressed prior to the beginning of the project – something which isn’t always possible with secondary sources!

Despite all these benefits associated with using primary sources, there are some drawbacks too.

One major disadvantage is cost. Primary data collection can become quite expensive, especially if it is poorly planned!

Another downside relates to accuracy. Because less time typically goes into verifying each data source, mistakes may occur more frequently, resulting in unreliable conclusions.

Key Takeaway: Primary data is a valuable source of information for research as it allows researchers to collect their own unique set of information that may not have been available before.

What is primary data and secondary data in research methodology?

Primary data can be gathered through surveys, interviews, focus groups, and experiments. It provides an accurate picture of the subject being studied since it has not been altered or influenced by other sources.

Secondary data is information that has already been collected and stored in a database. Examples of secondary data include census records, government statistics, published journal articles, and public opinion polls.

Secondary data can provide valuable insights into the topic being studied but may not always be up-to-date or reliable due to its age or source material.

There are several methods available for collecting primary and secondary data including surveys, interviews, focus groups, and experiments as well as online resources such as databases and archives.

Surveys are one of the most common methods used to collect primary data. They involve asking specific questions of a group of people who have agreed to participate in the survey process.

Interviews are another popular method used to gather primary information. They involve having an interviewer ask questions face-to-face with participants who have agreed to take part in the interview process.

Focus groups allow researchers to gain insight into specific topics by gathering together small groups of individuals who share similar interests or experiences so that their opinions can be discussed openly among each other during a moderated session.

Experiments are often used when conducting scientific research. They involve manipulating variables within controlled conditions while measuring results over time.

Online resources such as databases and archives offer access to large amounts of existing secondary information which can then be analyzed further if needed.

One challenge associated with collecting both primary and secondary data is obtaining accurate responses from participants.

Another issue can arise when certain types of datasets are heavily biased (e.g., political opinion polls), which makes it difficult for researchers to interpret results accurately.

Additionally, there may be privacy concerns, depending on the nature of the personal details required for the research (e.g., medical studies).

How to ensure reliable results when collecting both primary and secondary datasets?

First, make sure your sample size is large enough.

Secondly, try to avoid using biased sources like political opinion polls.

Third, check all relevant privacy laws prior to starting any project involving the collection of personal details.

Lastly, double-check the accuracy and validity of all your findings before drawing final conclusions.

Key Takeaway: Collecting reliable primary and secondary data for research projects requires careful consideration of various factors. Researchers should ensure an adequate sample size, avoid biased sources, check relevant privacy laws, and double-check accuracy before drawing conclusions.
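What counts as an "adequate" sample size depends on the study. As a rough illustration for surveys that estimate a proportion, the sketch below uses Cochran's formula with an optional finite-population correction. This formula is not mentioned in the post itself; it is a common rule of thumb, and the function name, parameters and default values (95% confidence, worst-case proportion of 0.5) are assumptions chosen for the example.

```python
import math

def sample_size_for_proportion(margin_of_error, z=1.96, expected_proportion=0.5, population=None):
    """Rule-of-thumb sample size for estimating a proportion (Cochran's formula).

    z=1.96 corresponds to roughly 95% confidence; expected_proportion=0.5 is
    the most conservative (largest-sample) assumption.
    """
    n = (z ** 2) * expected_proportion * (1 - expected_proportion) / (margin_of_error ** 2)
    if population is not None:
        # Finite-population correction: small populations need fewer responses.
        n = n / (1 + (n - 1) / population)
    return math.ceil(n)

# Hypothetical scenarios: +/-5% margin of error, unlimited vs. 2,000-person population.
print(sample_size_for_proportion(0.05))
print(sample_size_for_proportion(0.05, population=2000))
```

Tightening the margin of error raises the required sample quickly, which is one reason primary data collection can become expensive.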

The first step in analyzing primary and secondary research results is to identify the key points from each study. This includes understanding what was studied, who participated in the study, how it was conducted, and any other relevant information about the study’s methodology.

Once this information has been gathered, it can be used to draw conclusions about the findings. Additionally, researchers should compare their own findings with those of other studies on similar topics to gain a more comprehensive understanding of their topic area.

Analyzing primary and secondary research results can be challenging when studies differ in sample size or methodology, because their findings are then hard to compare directly.

It is also difficult to determine which findings are reliable since some studies may have methodological flaws that could affect their accuracy or validity.

Additionally, interpreting qualitative data can be especially challenging since there is often no clear-cut answer when examining subjective responses from participants in a survey or interview setting.

Finally, researchers must take care not to make assumptions based on limited evidence as this could lead them astray from accurate interpretations of their results.

Primary data is collected through surveys, interviews, experiments, or observations while secondary data is obtained from existing sources such as books, journals, newspapers, and websites. Collecting both types of data requires careful planning and execution to ensure accuracy and reliability.

Analyzing the results of primary and secondary research can help identify trends in the industry that could be used to inform decisions or strategies for innovation teams.

Are you an R&D or innovation team looking for a solution to help centralize data sources and provide rapid time to insights? Look no further than Cypris. Our platform is designed specifically for teams like yours, providing easy access to primary and secondary data research so that your team can make the most informed decisions possible.

With our streamlined approach, there’s never been a better way to maximize efficiency in the pursuit of groundbreaking ideas!


Library Guides

Dissertations 4: methodology: methods.


Primary & Secondary Sources, Primary & Secondary Data

When describing your research methods, you can start by stating what kind of secondary and, if applicable, primary sources you used in your research. Explain why you chose such sources, how well they served your research, and identify possible issues encountered using these sources.

Definitions

There is some confusion on the use of the terms primary and secondary sources, and primary and secondary data. The confusion is also due to disciplinary differences (Lombard 2010). Whilst you are advised to consult the research methods literature in your field, we can generalise as follows:

Secondary sources

Secondary sources normally include the literature (books and articles) with the experts' findings, analysis and discussions on a certain topic (Cottrell, 2014, p123). Secondary sources often interpret primary sources.

Primary sources

Primary sources are "first-hand" information such as raw data, statistics, interviews, surveys, law statutes and law cases. Even literary texts, pictures and films can be primary sources if they are the object of research (rather than, for example, documentaries reporting on something else, in which case they would be secondary sources). The distinction between primary and secondary sources sometimes lies on the use you make of them (Cottrell, 2014, p123).

Primary data

Primary data are data (primary sources) you directly obtained through your empirical work (Saunders, Lewis and Thornhill 2015, p316).

Secondary data

Secondary data are data (primary sources) that were originally collected by someone else (Saunders, Lewis and Thornhill 2015, p316).

Comparison between primary and secondary data

Use

Virtually all research will use secondary sources, at least as background information.

Often, especially at the postgraduate level, it will also use primary sources - secondary and/or primary data. The engagement with primary sources is generally appreciated, as less reliant on others' interpretations, and closer to 'facts'.

The use of primary data, as opposed to secondary data, demonstrates the researcher's effort to do empirical work and find evidence to answer her specific research question and fulfill her specific research objectives. Thus, primary data contribute to the originality of the research.

Ultimately, you should state in this section of the methodology:

What sources and data you are using and why (how they are going to help you answer the research question and/or test the hypothesis).

If using primary data, why you employed certain strategies to collect them.

What the advantages and disadvantages of your strategies to collect the data are (also refer to the research in your field and the research methods literature).

Quantitative, Qualitative & Mixed Methods

The methodology chapter should reference your use of quantitative research, qualitative research and/or mixed methods. The following is a description of each along with their advantages and disadvantages.

Quantitative research

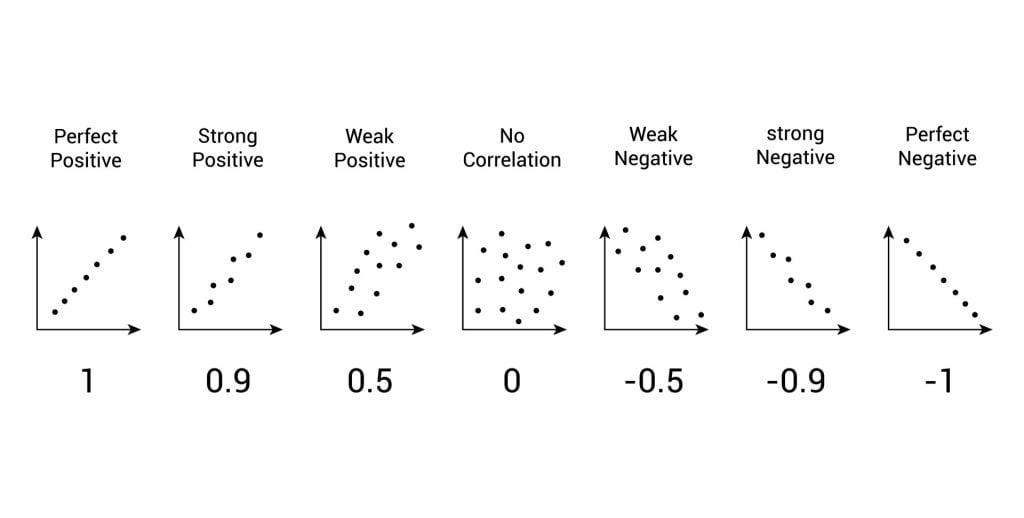

Quantitative research uses numerical data (quantities) deriving, for example, from experiments, closed questions in surveys, questionnaires, structured interviews or published data sets (Cottrell, 2014, p93). It normally processes and analyses this data using quantitative analysis techniques like tables, graphs and statistics to explore, present and examine relationships and trends within the data (Saunders, Lewis and Thornhill, 2015, p496).
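As a minimal sketch of what "statistics to explore relationships and trends" can look like in practice, the example below summarises two numerical variables and computes their Pearson correlation. The data are invented for illustration, and the variable names and the choice of Pearson's r are assumptions rather than anything prescribed by the cited authors.

```python
from statistics import mean, stdev

# Hypothetical closed-question survey data: hours of training received
# and a 1-10 satisfaction rating from the same five respondents.
training_hours = [2, 4, 6, 8, 10]
satisfaction = [3, 5, 6, 8, 9]

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length samples."""
    mx, my = mean(xs), mean(ys)
    covariance = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    spread_x = sum((x - mx) ** 2 for x in xs)
    spread_y = sum((y - my) ** 2 for y in ys)
    return covariance / (spread_x * spread_y) ** 0.5

print("mean hours:", mean(training_hours), "sd:", round(stdev(training_hours), 2))
print("mean satisfaction:", mean(satisfaction), "sd:", round(stdev(satisfaction), 2))
print("correlation:", round(pearson_r(training_hours, satisfaction), 3))
```

A value of r close to 1 suggests the two variables rise together in this sample; it does not by itself show that one causes the other.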

Qualitative research

Qualitative research is generally undertaken to study human behaviour and psyche. It uses methods like in-depth case studies, open-ended survey questions, unstructured interviews, focus groups, or unstructured observations (Cottrell, 2014, p93). The nature of the data is subjective, and also the analysis of the researcher involves a degree of subjective interpretation. Subjectivity can be controlled for in the research design, or has to be acknowledged as a feature of the research. Subject-specific books on (qualitative) research methods offer guidance on such research designs.

Mixed methods

Mixed-method approaches combine both qualitative and quantitative methods, and therefore combine the strengths of both types of research. Mixed methods have gained popularity in recent years.

When undertaking mixed-methods research you can collect the qualitative and quantitative data either concurrently or sequentially. If sequentially, you can for example, start with a few semi-structured interviews, providing qualitative insights, and then design a questionnaire to obtain quantitative evidence that your qualitative findings can also apply to a wider population (Specht, 2019, p138).

Ultimately, your methodology chapter should state:

Whether you used quantitative research, qualitative research or mixed methods.

Why you chose such methods (and refer to research method sources).

Why you rejected other methods.

How well the method served your research.

The problems or limitations you encountered.

Doug Specht, Senior Lecturer at the Westminster School of Media and Communication, explains mixed methods research in the following video:

LinkedIn Learning Video on Academic Research Foundations: Quantitative

The video covers the characteristics of quantitative research, and explains how to approach different parts of the research process, such as creating a solid research question and developing a literature review. It goes over the elements of a study, explains how to collect and analyze data, and shows how to present your data in written and numeric form.


Some Types of Methods

There are several methods you can use to get primary data. To reiterate, the choice of the methods should depend on your research question/hypothesis.

Whatever methods you will use, you will need to consider:

why did you choose one technique over another? What were the advantages and disadvantages of the technique you chose?

what was the size of your sample? Who made up your sample? How did you select your sample population? Why did you choose that particular sampling strategy?

ethical considerations (see also tab...)

safety considerations

validity

feasibility

recording

procedure of the research (see box procedural method...).
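To make the question about sampling strategy concrete, the sketch below contrasts two common strategies, simple random sampling and proportional stratified sampling, on an invented population. The function names, the population and the 10% sampling fraction are all hypothetical and serve only to illustrate the difference.

```python
import random
from collections import defaultdict

def simple_random_sample(population, n, seed=0):
    """Every unit has the same chance of selection."""
    rng = random.Random(seed)  # fixed seed so the draw can be reproduced
    return rng.sample(population, n)

def stratified_sample(population, stratum_of, fraction, seed=0):
    """Draw the same fraction from each stratum so subgroups stay represented."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for unit in population:
        strata[stratum_of(unit)].append(unit)
    sample = []
    for members in strata.values():
        k = max(1, round(len(members) * fraction))
        sample.extend(rng.sample(members, k))
    return sample

# Hypothetical population: 80 undergraduates (UG) and 20 postgraduates (PG).
population = [("UG", i) for i in range(80)] + [("PG", i) for i in range(20)]

srs = simple_random_sample(population, 10)
strat = stratified_sample(population, lambda unit: unit[0], 0.10)

print("simple random PG count:", sum(1 for level, _ in srs if level == "PG"))
print("stratified PG count:", sum(1 for level, _ in strat if level == "PG"))
```

The stratified draw always contains two postgraduates here, whereas the simple random draw may contain more, fewer or none. Whichever strategy you use, recording the seed or the selection procedure helps others replicate the sample.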

Check Stella Cottrell's book Dissertations and Project Reports: A Step by Step Guide for some succinct yet comprehensive information on most methods (the following account draws mostly on her work). Check a research methods book in your discipline for more specific guidance.

Experiments

Experiments are useful to investigate cause and effect, when the variables can be tightly controlled. They can test a theory or hypothesis in controlled conditions. Experiments do not prove or disprove a hypothesis; instead, they support or fail to support it. When using the empirical and inductive method it is not possible to achieve conclusive results. The results may only be valid until falsified by other experiments and observations.


Observations

Observational methods are useful for in-depth analyses of behaviours in people, animals, organisations, events or phenomena. They can test a theory or products in real life or simulated settings. They are generally a qualitative research method.

Questionnaires and surveys

Questionnaires and surveys are useful to gain opinions, attitudes, preferences, understandings on certain matters. They can provide quantitative data that can be collated systematically; qualitative data, if they include opportunities for open-ended responses; or both qualitative and quantitative elements.

Interviews

Interviews are useful to gain rich, qualitative information about individuals' experiences, attitudes or perspectives. With interviews you can follow up immediately on responses for clarification or further details. There are three main types of interviews: structured (following a strict pattern of questions, which expect short answers), semi-structured (following a list of questions, with the opportunity to follow up the answers with improvised questions), and unstructured (following a short list of broad questions, where the respondent can lead more of the conversation) (Specht, 2019, p142).

This short video on qualitative interviews discusses best practices and covers qualitative interview design, preparation and data collection methods.

Focus groups

In this case, a group of people (normally, 4-12) is gathered for an interview where the interviewer asks questions to such group of participants. Group interactions and discussions can be highly productive, but the researcher has to beware of the group effect, whereby certain participants and views dominate the interview (Saunders, Lewis and Thornhill 2015, p419). The researcher can try to minimise this by encouraging involvement of all participants and promoting a multiplicity of views.

This video focuses on strategies for conducting research using focus groups.

Check out the guidance on online focus groups by Aliaksandr Herasimenka, which is attached at the bottom of this text box.

Case study

Case studies are often a convenient way to narrow the focus of your research by studying how a theory or literature fares with regard to a specific person, group, organisation, event or other type of entity or phenomenon you identify. Case studies can be researched using other methods, including those described in this section. Case studies give in-depth insights on the particular reality that has been examined, but may not be representative of what happens in general, they may not be generalisable, and may not be relevant to other contexts. These limitations have to be acknowledged by the researcher.

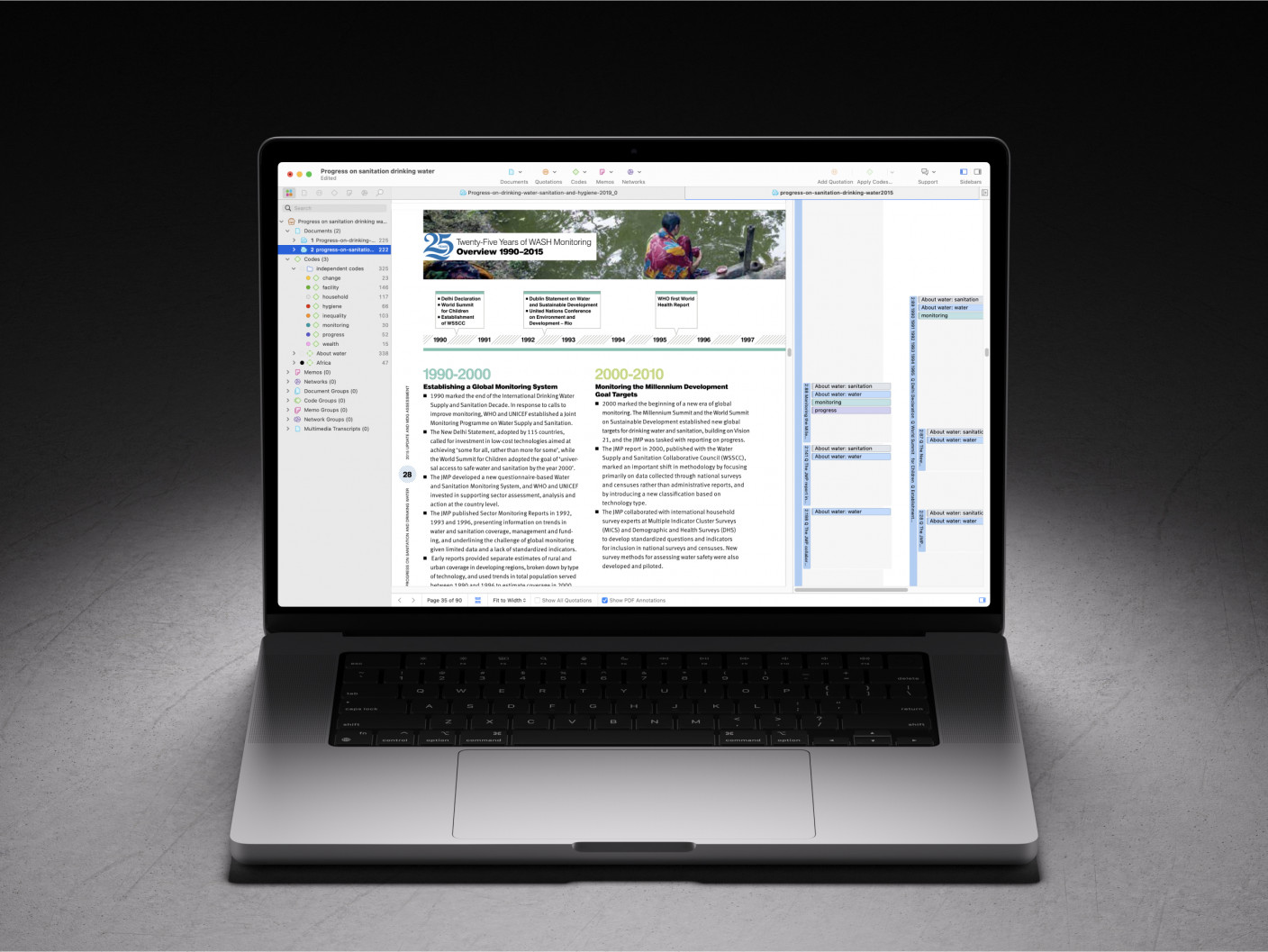

Content analysis

Content analysis consists in the study of words or images within a text. In its broad definition, texts include books, articles, essays, historical documents, speeches, conversations, advertising, interviews, social media posts, films, theatre, paintings or other visuals. Content analysis can be quantitative (e.g. word frequency) or qualitative (e.g. analysing intention and implications of the communication). It can detect propaganda, identify intentions of writers, and can see differences in types of communication (Specht, 2019, p146). Check this page on collecting, cleaning and visualising Twitter data.
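The quantitative side of content analysis can be sketched in a few lines. The example below counts word frequencies in a short invented text after removing a handful of common words. The stop-word list, the sample text and the function name are assumptions made for illustration; a real study would use a fuller stop-word list and a documented tokenisation rule.

```python
import re
from collections import Counter

# A deliberately tiny, hypothetical stop-word list.
STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "it", "we", "our"}

def word_frequencies(text, top_n=3):
    """Return the top_n most frequent non-stop-words as (word, count) pairs."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(word for word in words if word not in STOPWORDS)
    return counts.most_common(top_n)

# Invented example text.
speech = (
    "We will cut taxes. Taxes hurt families, and families deserve better. "
    "Our plan puts families first."
)

print(word_frequencies(speech, top_n=2))
```

Frequency counts like these only show what is emphasised; interpreting why it is emphasised is the qualitative part of the analysis.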

Extra links and resources:

Research Methods

A clear and comprehensive overview of research methods by Emerald Publishing. It includes: crowdsourcing as a research tool; mixed methods research; case study; discourse analysis; grounded theory; repertory grid; ethnographic method and participant observation; interviews; focus group; action research; analysis of qualitative data; survey design; questionnaires; statistics; experiments; empirical research; literature review; secondary data and archival materials; data collection.

Doing your dissertation during the COVID-19 pandemic

Resources providing guidance on doing dissertation research during the pandemic: online research methods; secondary data sources; webinars, conferences and podcasts.

- Virtual Focus Groups: guidance on managing virtual focus groups

5 Minute Methods Videos

The following are a series of useful videos that introduce research methods in five minutes. These resources have been produced by lecturers and students with the University of Westminster's School of Media and Communication.

Case Study Research

Research Ethics

Quantitative Content Analysis

Sequential Analysis

Qualitative Content Analysis

Thematic Analysis

Social Media Research

Mixed Method Research

Procedural Method

In this part, provide an accurate, detailed account of the methods and procedures that were used in the study or the experiment (if applicable!).

Include specifics about participants, sample, materials, design and methods.

If the research involves human subjects, then include a detailed description of who and how many participated along with how the participants were selected.

Describe all materials used for the study, including equipment, written materials and testing instruments.

Identify the study's design and any variables or controls employed.

Write out the steps in the order that they were completed.

Indicate what participants were asked to do, how measurements were taken and any calculations made to raw data collected.

Specify statistical techniques applied to the data to reach your conclusions.

Provide evidence that you incorporated rigor into your research. This is the quality of being thorough and accurate and considers the logic behind your research design.

Highlight any drawbacks that may have limited your ability to conduct your research thoroughly.

You have to provide details to allow others to replicate the experiment and/or verify the data, to test the validity of the research.

Bibliography

Cottrell, S. (2014). Dissertations and project reports: a step by step guide. Hampshire, England: Palgrave Macmillan.

Lombard, E. (2010). Primary and secondary sources. The Journal of Academic Librarianship, 36(3), 250-253.

Saunders, M.N.K., Lewis, P. and Thornhill, A. (2015). Research Methods for Business Students. New York: Pearson Education.

Specht, D. (2019). The Media And Communications Study Skills Student Guide. London: University of Westminster Press.


- Last Updated: Sep 14, 2022 12:58 PM

- URL: https://libguides.westminster.ac.uk/methodology-for-dissertations


Primary vs secondary research – what’s the difference?

Find out how primary and secondary research are different from each other, and how you can use them both in your own research program.

Primary vs secondary research: in a nutshell

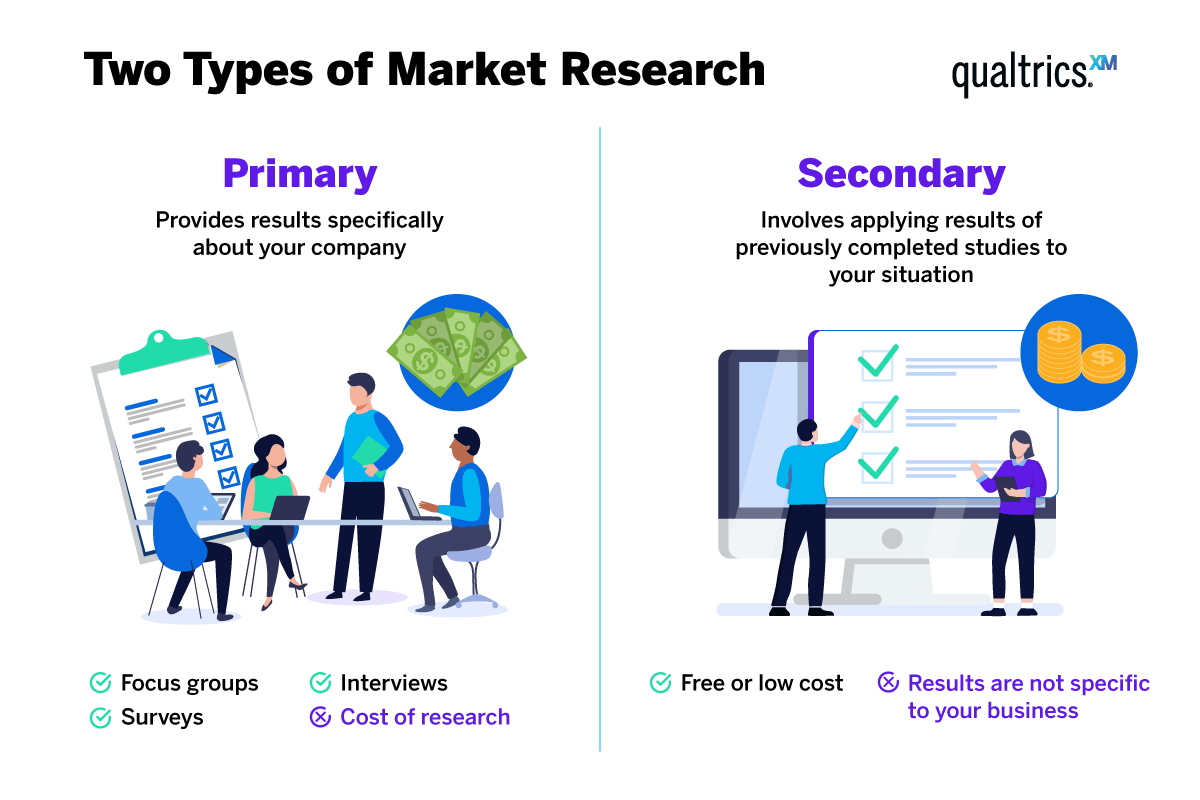

The essential difference between primary and secondary research lies in who collects the data.

- Primary research definition:

When you conduct primary research, you’re collecting data by doing your own surveys or observations.

- Secondary research definition:

In secondary research, you’re looking at existing data from other researchers, such as academic journals, government agencies or national statistics.


When to use primary vs secondary research

Primary research and secondary research both offer value in helping you gather information.

Each research method can be used alone to good effect. But when you combine the two research methods, you have the ingredients for a highly effective market research strategy. Most research combines some element of both primary methods and secondary source consultation.

So assuming you’re planning to do both primary and secondary research – which comes first? Counterintuitive as it sounds, it’s more usual to start your research process with secondary research, then move on to primary research.

Secondary research can prepare you for collecting your own data in a primary research project. It can give you a broad overview of your research area, identify influences and trends, and may give you ideas and avenues to explore that you hadn’t previously considered.

Given that secondary research can be done quickly and inexpensively, it makes sense to start your primary research process with some kind of secondary research. Even if you’re expecting to find out what you need to know from a survey of your target market, taking a small amount of time to gather information from secondary sources is worth doing.

Primary research

Primary market research is original research carried out when a company needs timely, specific data about something that affects its success or potential longevity.

Primary research data collection might be carried out in-house by a business analyst or market research team within the company, or it may be outsourced to a specialist provider, such as an agency or consultancy. While outsourcing primary research involves a greater upfront expense, it’s less time consuming and can bring added benefits such as researcher expertise and a ‘fresh eyes’ perspective that avoids the risk of bias and partiality affecting the research data.

Primary research gives you recent data from known primary sources about the particular topic you care about, but it does take a little time to collect that data from scratch, rather than finding secondary data via an internet search or library visit.

Primary research involves two forms of data collection:

- Exploratory research: This type of primary research is carried out to determine the nature of a problem that hasn’t yet been clearly defined. For example, a supermarket wants to improve its poor customer service and needs to understand the key drivers behind the customer experience issues. It might do this by interviewing employees and customers, or by running a survey program or focus groups.

- Conclusive research: This form of primary research is carried out to solve a problem that the exploratory research – or other forms of primary data – has identified. For example, say the supermarket’s exploratory research found that employees weren’t happy. Conclusive research went deeper, revealing that the manager was rude, unreasonable, and difficult, making the employees unhappy and resulting in a poor employee experience which in turn led to less than excellent customer service. Thanks to the company’s choice to conduct primary research, a new manager was brought in, employees were happier and customer service improved.

Examples of primary research

All of the following are forms of primary research data.

- Customer satisfaction survey results

- Employee experience pulse survey results

- NPS rating scores from your customers

- A field researcher’s notes

- Data from weather stations in a local area

- Recordings made during focus groups

Primary research methods

There are a number of primary research methods to choose from, and they are already familiar to most people. The ones you choose will depend on your budget, your time constraints, your research goals and whether you’re looking for quantitative or qualitative data.

Surveys

A survey can be carried out online, offline, face to face or via other media such as phone or SMS. It’s relatively cheap to do, since participants can self-administer the questionnaire in most cases. You can automate much of the process if you invest in good quality survey software.

Interviews

Primary research interviews can be carried out face to face, over the phone or via video calling. They’re more time-consuming than surveys, and they require the time and expense of a skilled interviewer and a dedicated room, phone line or video calling setup. However, a personal interview can provide a very rich primary source of data based not only on the participant’s answers but also on the observations of the interviewer.

Focus groups

A focus group is an interview with multiple participants at the same time. It often takes the form of a discussion moderated by the researcher. As well as taking less time and fewer resources than a series of one-to-one interviews, a focus group can benefit from the interactions between participants, which bring out more ideas and opinions. However, this can also lead to conversations going off on a tangent, which the moderator must be able to skilfully manage by guiding the group back to the relevant topic.

Secondary research

Secondary research is research that has already been done by someone else prior to your own research study.

Secondary research is generally the best place to start any research project as it will reveal whether someone has already researched the same topic you’re interested in, or a similar topic that helps lay some of the groundwork for your research project.

Even if your preliminary secondary research doesn’t turn up a study similar to your own research goals, it will still give you a stronger knowledge base that you can use to strengthen and refine your research hypothesis. You may even find some gaps in the market you didn’t know about before.

The scope of secondary research resources is extremely broad. Here are just a few of the places you might look for relevant information.

Books and magazines

A public library can turn up a wealth of data in the form of books and magazines – and it doesn’t cost a penny to consult them.

Market research reports

Secondary research from professional research agencies can be highly valuable, as you can be confident the data collection methods and data analysis will be sound.

Scholarly journals, often available in reference libraries

Articles in peer-reviewed journals have been examined by experts in the relevant field, meaning there has been an extra layer of oversight and careful consideration of the data points before publication.

Government reports and studies

Public domain data, such as census data, can provide relevant information for your research project, not least in choosing the appropriate research population for a primary research method. If the information you need isn’t readily available, try contacting the relevant government agencies.

White papers

Businesses often produce white papers as a means of showcasing their expertise and value in their field. White papers can be helpful in secondary research methods, although they may not be as carefully vetted as academic papers or public records.

Trade or industry associations

Associations may have secondary data that goes back a long way and offers a general overview of a particular industry. This data collected over time can be very helpful in laying the foundations of your particular research project.

Private company data

Some businesses may offer their company data to those conducting research in return for fees or with explicit permissions. However, if a business has data that’s closely relevant to yours, it’s likely they are a competitor and may flat out refuse your request.


Examples of secondary research data

These are all forms of secondary research data in action:

- A newspaper report quoting statistics sourced by a journalist

- Facts from primary research articles quoted during a debate club meeting

- A blog post discussing new national figures on the economy

- A company consulting previous research published by a competitor

Secondary research methods

Literature reviews

A core part of the secondary research process, involving data collection and constructing an argument around multiple sources. A literature review involves gathering information from a wide range of secondary sources on one topic and summarizing them in a report or in the introduction to primary research data.

Content analysis

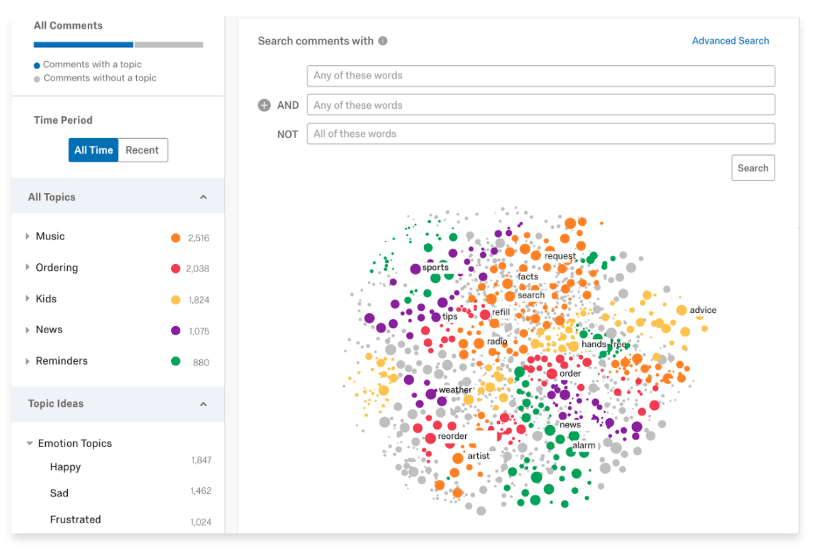

This systematic approach is widely used in social science disciplines. It uses codes for themes, tropes or key phrases which are tallied up according to how often they occur in the secondary data. The results help researchers to draw conclusions from qualitative data.
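As a rough illustration of the tallying step, the sketch below counts how many text snippets mention each code. The codebook, its keywords and the snippets are all invented for illustration, and simple keyword matching stands in for the judgment a human coder would normally apply.

```python
from collections import Counter

# Hypothetical codebook: each code (theme) maps to keywords that signal it.
CODEBOOK = {
    "cost": ["price", "expensive", "cheap"],
    "trust": ["reliable", "trust"],
}


def tally_codes(documents, codebook):
    """Count how many documents mention each code at least once."""
    counts = Counter()
    for text in documents:
        lowered = text.lower()
        for code, keywords in codebook.items():
            if any(word in lowered for word in keywords):
                counts[code] += 1
    return counts


# Made-up snippets standing in for secondary source material.
documents = [
    "The product is too expensive for small clinics.",
    "Staff trust the supplier, and the price is fair.",
    "Delivery was slow.",
]
tallies = tally_codes(documents, CODEBOOK)
```

The resulting counts are only as good as the codebook, so in practice the codes are usually refined over several passes through the material.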

Data analysis using digital tools

You can analyze large volumes of data using software that can recognize and categorize natural language. More advanced tools will even be able to identify relationships and semantic connections within the secondary research materials.

Comparing primary vs secondary research

We’ve established that both primary research and secondary research have benefits for your business, and that there are major differences in terms of the research process, the cost, the research skills involved and the types of data gathered. But is one of them better than the other?

The answer largely depends on your situation. Whether primary or secondary research wins out in your specific case depends on the particular topic you’re interested in and the resources you have available. The positive aspects of one method might be enough to sway you, or the drawbacks – such as a lack of credible evidence already published, as might be the case in very fast-moving industries – might make one method totally unsuitable.

Here’s an at-a-glance look at the features and characteristics of primary vs secondary research, illustrating some of the key differences between them.

What are the pros and cons of primary research?

Primary research provides original data and allows you to pinpoint the issues you’re interested in and collect data from your target market – with all the effort that entails.

Benefits of primary research:

- Tells you what you need to know, nothing irrelevant

- Yours exclusively – once acquired, you may be able to sell primary data or use it for marketing

- Teaches you more about your business

- Can help foster new working relationships and connections between silos

- Primary research methods can provide upskilling opportunities – employees gain new research skills

Limitations of primary research:

- Lacks context from other research on related subjects

- Can be expensive

- Results aren’t ready to use until the project is complete

- Any mistakes you make in research design or implementation could compromise your data quality

- May not have lasting relevance – although it could fulfill a benchmarking function if things change

What are the pros and cons of secondary research?

Secondary research relies on secondary sources, which can be both an advantage and a drawback. After all, other people are doing the work, but they’re also setting the research parameters.

Benefits of secondary research:

- It’s often low cost or even free to access in the public domain

- Supplies a knowledge base for researchers to learn from

- Data is complete, has been analyzed and checked, saving you time and costs

- It’s ready to use as soon as you acquire it

Limitations of secondary research:

- May not provide enough specific information

- Conducting a literature review in a well-researched subject area can become overwhelming

- No added value from publishing or re-selling your research data

- Results are inconclusive – you’ll only ever be interpreting data from another organization’s experience, not your own

- Details of the research methodology are unknown

- May be out of date – always check carefully when the original research was conducted


Afr J Emerg Med, v.10(Suppl 2); 2020

Acquiring data in medical research: A research primer for low- and middle-income countries

Vicken Totten

a Kaweah Delta Health Care District (KDHCD), KDHCD Department of Emergency Medicine, Visalia, CA, USA

Erin L. Simon

b Cleveland Clinic Akron General, Department of Emergency Medicine, Akron, OH, USA

Mohammad Jalili

c Department of Emergency Medicine, Tehran University of Medical Sciences, Tehran, Iran

Hendry R. Sawe

d Muhimbili University of Health and Allied Sciences, Dar es Salaam, Tanzania

Without data, there is no new knowledge generated. There may be interesting speculation, new paradigms or theories, but without data gathered from the universe, as representative of the truth in the universe as possible, there will be no new knowledge. Therefore, it is important to become excellent at collecting, collating and correctly interpreting data. Pre-existing and new data sources are discussed; variables are discussed, and sampling methods are covered. The importance of a detailed protocol and research manual is emphasized. Data collectors and data collection forms, both electronic and paper-based, are discussed. Ensuring subject privacy must be balanced against ensuring appropriate data retention.

African relevance

- To get good quality information you first need good quality data.

- Data collection systematically and reproducibly gathers and measures variables to answer research questions.

- Good data is a result of a well thought out study protocol.

The International Federation for Emergency Medicine global health research primer

This paper forms part 9 of a series of ‘how to’ papers, commissioned by the International Federation for Emergency Medicine. It describes data sources, variables, sampling methods, data collection and the value of a clear data protocol. We have also included additional tips and pitfalls that are relevant to emergency medicine researchers.

Data collection is the process of systematically and reproducibly gathering and measuring variables in order to answer research questions, test hypotheses, or evaluate outcomes.

Data is not information. To get good quality information you first need good quality data, then you must curate, analyse and interpret it. Data is comprised of variables. Data collection begins with determining which variables are required, followed by the selection of a sample from a certain population. After that, a data collection tool is used to collect the variables from the selected sample, which is then converted into a data spreadsheet or database. The analysis is done on the database.

Sometimes you gather data yourself. Sometimes you analyse data others collected for different purposes. Ideally, you collect a universal sample, that is, 100%. In real life, you get a limited sample. Preferably, it will be a truly random sample with enough power to answer your question. Unfortunately, you may have to settle for consecutive or convenience sampling. Ideally, your data collectors would be blinded to the outcome of interest, to prevent bias. However, real life is full of biases. Imperfect data may be better than no data; you can often get useful information from imperfect data. Remember the enemy of good is perfect.

Why is good data important?

Acquiring data is the most important step in a research study. The best design with bad data is useless. Bad design produces bad data. The most sophisticated analysis cannot be performed without data; analysing bad data produces erroneous results. Analysis can never be better than the quality of the data on which it was run. Good data has integrity. Data integrity is paramount to learning “Truth in the Universe”. Good data is as complete and as clean, as you can reasonably make it. Clean data ‘has integrity’ when the variables access as much relevant information as possible, and in the same way for each subject.

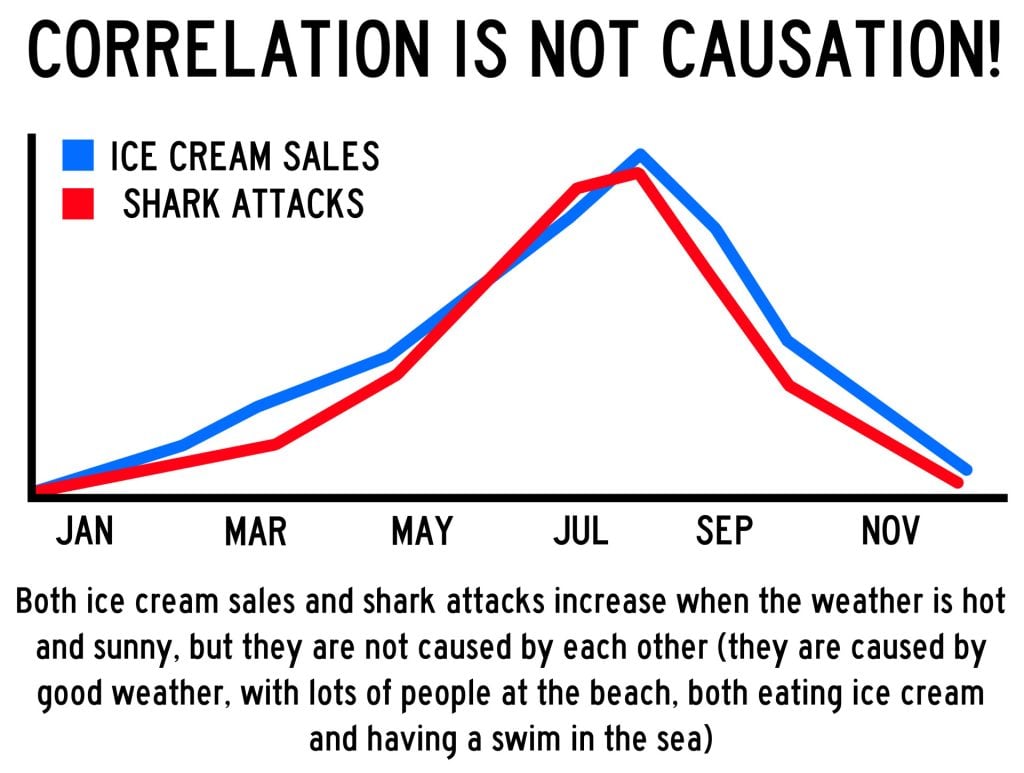

Some information is very hard to get. You may have to use proxy variables for what you really want to know. A proxy variable is a variable that is not in itself directly relevant, but that serves in place of an unobservable or immeasurable variable. In order for a variable to be a good proxy, it must have a close correlation, not necessarily linear, with the variable of interest. One example for the variable of a specific illness might be a medication list.

Consequences of bad data include an inability to answer the research question; inability to replicate or validate the study; distorted findings and wasted resources; compromised knowledge and even harm to subjects.

Ensure data quality

Good data is a result of a well-thought-out study protocol, which is the written plan for the study. Good planning is the most cost-effective way to ensure data integrity. Good planning is documented by a thorough and detailed protocol, with a comprehensive procedures manual. Poorly written manuals risk incomplete or inconsistent collection of data, in other words, ‘bad data’. The manual should include rigorous, step-by-step instructions on how to administer tests or collect the data. It should cover the ‘who’ (the subject and the researcher); the ‘when’ (the timing), the ‘how’ (methods), and the ‘what’ (a complete listing of variables to be collected). There should also be an identified mechanism to document any changes in procedures that may evolve over the course of the investigation. The study design should be reproducible: so that the protocol can be followed by any other researcher. All data needs to be gathered in the same way. Test (trial-run) your manual before you start your study. If data is collected by several people, make sure there is a sufficient degree of inter-rater reliability.
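One common way to quantify inter-rater reliability for categorical ratings is Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The text above does not name a specific statistic, so the choice of kappa, the function name and the example ratings below are assumptions made purely for illustration.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who labelled the same subjects."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must label the same non-empty set of subjects")
    n = len(rater_a)
    # Observed agreement: share of subjects given the same label by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal counts, summed over labels.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1 - expected)


# Made-up ratings of ten records ("y" = condition present, "n" = absent).
rater_1 = ["y", "y", "n", "n", "y", "n", "y", "n", "n", "y"]
rater_2 = ["y", "y", "n", "n", "y", "n", "n", "n", "n", "y"]
kappa = cohens_kappa(rater_1, rater_2)
```

Here the raters agree on nine of ten records and chance agreement is 0.5, so kappa works out to 0.8. What counts as a "sufficient" value is a judgment the protocol should state in advance.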

To get good data, your sample needs to be representative of the population. For others to apply your results, you need to characterize your population, so others can decide if your conclusions are relevant to their population (see Sampling section, below).

Data integrity demands you supervise your study, making sure it is complete and accurate. You may wish to do interim analyses. Keep copies! Keep both the raw data and the data sheets, for the length of time required by law or by Good Research Practice in your country. This will protect you from accusations of falsification of data.

In real life, you may have to deal with any number of sampling and data collection biases. Some of these biases can be measured statistically. Regardless, all the limitations you can think of should be written in your limitations section. The best design you can practically use gives you the best data you can reasonably get. Remember, “you cannot fix with statistics what you fouled up by design.”

Before you acquire your first datum, consider: Do you have a developed protocol and a research manual? Have you sought Ethics Board approval? Do you have an informed consent? Do you have a plan to protect the subject's confidentiality? Do you have a plan for data analysis? Where will you safely store and protect the data? If you have collaborators, have you established, in writing, who owns the data, and who has the right to analyse and publish it?

Types of data: qualitative vs. quantitative data

Numerical data is generally called quantitative; if in words or sentences, it is qualitative. Medical research historically has focused on quantitative methods. Generally, quantitative research is cheaper, easier to gather and easier to analyse. For purposes of this chapter, we will focus on quantitative research.

Qualitative research is about words, sentences, sounds, feeling, emotions, colours and other elements that are non-quantifiable. It requires human intellect to extract themes from the sentences, evaluate the fit of the data to the themes, and to draw the implications of the themes. Primary sources for qualitative data include open ended surveys, interviews, and public meetings. Qualitative research is more common in politics and the social sciences, and will not be further discussed here, except to refer you to other sources.

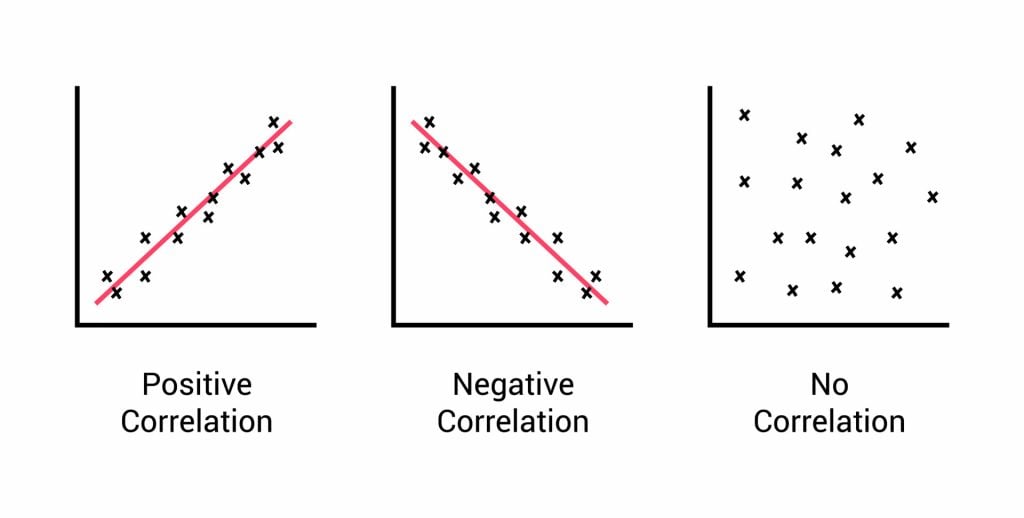

Quantitative research can include questionnaires with closed-ended questions (open-ended questions belong in qualitative research). The data is transformed into numbers and will be analysed with parametric and non-parametric statistical tests. In general, you will derive a mean, mode and median; you will calculate probabilities, and run correlations and regressions in order to draw conclusions.
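The descriptive statistics mentioned here can be computed with Python's standard `statistics` module. The sketch below uses invented blood pressure readings; the variable names are hypothetical.

```python
import statistics

# Hypothetical systolic blood pressure readings (mmHg) for nine subjects.
readings = [118, 122, 122, 130, 135, 122, 140, 128, 126]

summary = {
    "mean": statistics.mean(readings),      # arithmetic average
    "median": statistics.median(readings),  # middle value once sorted
    "mode": statistics.mode(readings),      # most frequent value
}
```

For these readings the mean is 127, the median 126 and the mode 122. A gap between mean and median is often a first hint that the distribution is skewed, which in turn affects the choice between parametric and non-parametric tests.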

Sources of data: primary vs secondary data

To answer a research question, there are many potential sources of data. Two main categories are primary data and secondary data. Primary data is newly collected data; it can be gathered directly from people's responses (surveys), or from their biometrics (blood pressure, weight, blood tests, etc.). It is still considered primary data if you gather data that was collected for other (medical) purposes by extracting the data from medical records. Medical records can be a rich source of data, but data extraction by hand takes a lot of time.

Secondary data already exists; it has already been published or compiled. There are extant local, regional, national and international databases such as Trauma Registries, Disease-specific Registries, Public Health Data, government statistics, and World Health Organization data. Locally, your hospital or clinic may already keep statistics on any number of topics. Combining information from disparate databases may sometimes yield interesting results. For example, in the US, the Centers for Disease Control and Prevention keeps databases of reportable diseases, accidents, causes of death and much more. The US Geological Survey reports the average elevation of American cities. Combining the two databases revealed that, even when gun ownership, drug and alcohol use were statistically controlled for, there was a linear correlation between altitude and suicide rates [ 2 ]. Reno et al. reviewed the existing medical literature (also secondary data), confirmed the correlation and concluded that the mechanisms have yet to be elucidated [ 3 ].

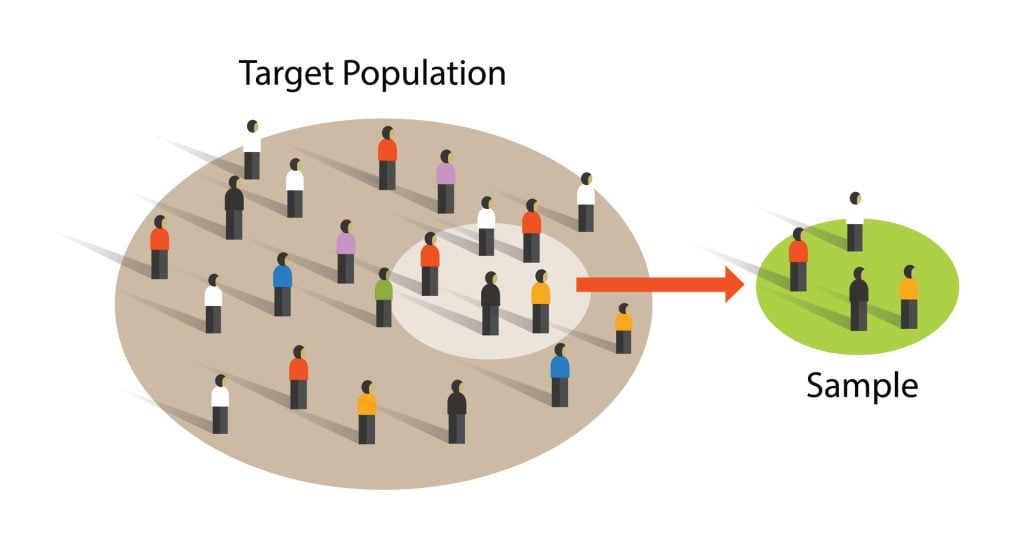

Collecting good data is often the hardest part of research. Ideally, you would want to collect 100% of the data (universal sampling to reflect the target population). One example would be ‘all elderly persons with gout’. In real life, you have access to only a subset of the target population (the accessible population). Further, in your study you will be limited to a subset of the accessible population (the study population). Again, in the ideal world, that limited sample would be truly random, and have enough power to answer your question. You can find free random number generators online. In real life, you may have to settle for consecutive or convenience sampling. Of the two, consecutive sampling has less bias. Sometimes it is important to balance your groups. You may have 2 or 3 treatments (or interventions) and want to have an equal number of each kind. So, you create blocks whose size is a small multiple of the number of treatments, and you randomize within each block. Each time a block is filled, you are assured that you have the right balance of subjects. Blocks are often in groups of six, eight or 12. This is called balanced allocation.
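A minimal sketch of balanced (block) allocation follows. The function name, its parameters and the treatment labels are hypothetical; a real trial would normally use a validated randomization service and keep the schedule concealed from recruiters.

```python
import random


def block_randomize(treatments, n_blocks, repeats_per_block=2, seed=None):
    """Return an allocation list built from shuffled, balanced blocks.

    Each block holds every treatment `repeats_per_block` times, so the
    groups are equal in size whenever a block is completed.
    """
    rng = random.Random(seed)
    allocation = []
    for _ in range(n_blocks):
        block = list(treatments) * repeats_per_block
        rng.shuffle(block)  # random order inside the block only
        allocation.extend(block)
    return allocation


# Three hypothetical treatments in blocks of six (3 treatments x 2 repeats).
schedule = block_randomize(["A", "B", "C"], n_blocks=4, seed=42)
```

Subjects are assigned in the order of `schedule` as they enrol. Fixing the seed makes the schedule reproducible for audit, at the cost of making it predictable to anyone who knows the seed.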

If you must get only a convenience sample – for example because you only have a single data gatherer and can get data only when that person is available – you should, at a minimum, try to get some simple demographics from times when the data gatherer is not available, to see if subjects at that other time are systematically different. For example, if you are looking at injuries, people who are injured when drinking on a Friday night might be systematically different from people who are injured on their way to work on a Monday morning. If you can only collect injury data in the morning, your results will be biased.

Variables are the bits of data you collect. They change from subject to subject and describe the subject numerically. Age (or year of birth); gender; ethnic group or tribe; and geographic location are commonly called simple demographic variables and should be collected and reported for most populations.

Continuous variables are quantified on a continuous scale, such as body weight. Discrete variables use a scale whose units are limited to integers (such as the number of cigarettes smoked per day). Discrete variables that have many possible values can resemble continuous variables in statistical analysis and be equivalent for the purpose of designing measurements. A good general rule is to prefer continuous variables, because they carry more information and improve statistical efficiency (more study power and a smaller required sample size).

Categorical variables are those not suitable for quantification. They are often measured by classifying them into categories. If there are two possible values (dead or alive), they are dichotomous. If there are more than two categories, they can be classified according to the type of information they provide (polytomous).

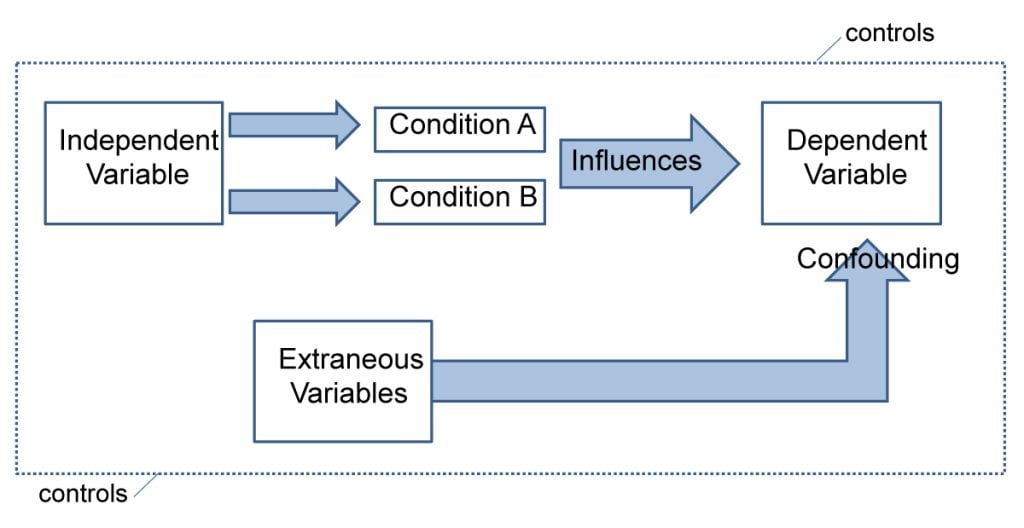

Research variables are either predictor (independent) or outcome (dependent) variables. The predictor variables might include such things as “Diabetes, Yes/No”, “Age over 65 — Yes/No”, and “diagnosis of hypertension” (again, Yes/No). The respective outcome might be “lower limb amputation” or “death within 10 years”. Your question might have been, “How much additional risk of amputation does a diagnosis of hypertension add in a person with diabetes?”

Before analysis, variables are coded into numbers and entered into a database. Your Research Manual should describe how to code all the data. When the variables are binary, (male/female; alive/dead) coding them into “0” and “1” makes analysing the data much easier (“1” versus “2” makes it harder). The easiest variables for computers to analyse are binary. In other words, “0” or “1”. Such variables are Yes/No; True/False; Male/Female; 65 or over / under 65, etc. The next easiest are ordinal integers: 1, 2, 3, etc. You might create ordinal numbers from categories (0–9; 10–19; 20–29 years of age, etc.), but in order to be ordinal, they require an obvious sequence. Categorical variables do not have an intrinsic order. “Green” “Brown” and “Orange” are non-ordinal, categorical variables. It is possible to transform categorical variables into binary variables, by making columns where only one of the answers is marked with a “1” (if that variable is present) and all the others are marked “0”. The form of the variables and their distribution will determine the type of statistical analysis possible. Data which must be transformed or cleaned is more prone to error in the cleaning or transformation process.
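The coding rules described above can be sketched as follows. The records, the `eye_colour` variable and the code tables are invented for illustration; in a real study they would come from the research manual.

```python
# Hypothetical raw records as they might appear on a data collection form.
records = [
    {"sex": "female", "age": 34, "eye_colour": "green"},
    {"sex": "male", "age": 67, "eye_colour": "brown"},
    {"sex": "female", "age": 19, "eye_colour": "orange"},
]

SEX_CODES = {"male": 0, "female": 1}     # binary variable coded 0/1
COLOURS = ["green", "brown", "orange"]   # categorical, no intrinsic order


def code_record(record):
    """Turn one raw record into numeric columns ready for analysis."""
    coded = {
        "sex": SEX_CODES[record["sex"]],
        "age_65_plus": 1 if record["age"] >= 65 else 0,
        # Ordinal age band: 0 for 0-9 years, 1 for 10-19, 2 for 20-29, ...
        "age_band": record["age"] // 10,
    }
    # One indicator column per colour; exactly one of them is 1.
    for colour in COLOURS:
        coded[f"colour_{colour}"] = 1 if record["eye_colour"] == colour else 0
    return coded


coded_records = [code_record(r) for r in records]
```

Looking values up in a fixed table, rather than typing codes by hand, means an unexpected value such as a misspelt category raises an error instead of silently entering the database.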

There are alternative ways to get similar information. For example, if you wanted to know the HIV status of each of your subjects, you could either test each one, or you could ask them. The tests cost more; however, they are less likely to give biased results. How you gather each variable will depend on your resources and will inform the limitations of your study.

Precision of a variable is the degree to which it is reproducible with nearly the same value each time it is measured. Precision has a very important influence on the power of a study. The more precise a measurement, the greater the statistical power of a given sample size to estimate mean values and test your hypotheses. In order to minimize random error in your data, and increase the precision of measurements, you should standardize your measurement methods; train your observers; refine any instruments you may use (such as calibrating instruments); automate instruments when possible (automated blood pressure cuff instead of manual); and repeat your measurements.

Accuracy of the variable is the degree to which it actually represents what it is intended to (Truth in the Universe). This influences the validity of the study. Accuracy is impacted by systematic error (bias). The greater the error, the less accurate the variable. Three common biases are: observer bias (how the measurement is reported); instrument bias (faulty function of an instrument); and subject bias (bad reporting or recall of the measurement by the study subject).

Validity is the degree to which a measurement represents the phenomenon of interest. When validating an abstract concept, search the literature or consult with experts so you can find an already validated data collection instrument (such as a questionnaire). This allows your results to be comparable to prior studies in the same area and strengthens your study methods.

Research manual

Simple research with limited resources does not need a research manual, just a protocol. Nor is there much need if the primary investigator is the only data gatherer and analyser. However, if several persons gather data, it is important that the data be gathered the same way each time.

Prevention is the most cost-effective activity that will ensure the integrity of data collection. A detailed and comprehensive research manual will standardize data collection. Poorly written manuals are vague and ambiguous.

The research manual is based on your protocol. The manual should spell out every step of the data collection process. It should include the name of each variable and specific details about how each variable should be collected. Contingencies should be written down. For example: “If the patient does not have a left arm, the blood pressure may be taken on the right arm. If the patient has no arms, leg blood pressures may be recorded, but put an ‘*’ beside the reading.” The manual should also include every step of the coding process. The coding manual should describe the name of each variable, and how it should be coded. Both the coder and the statistician will want to refer to that section. The coding section should describe how each variable will be entered into the database. Test the manual to make sure everyone understands it the same way.

Think about various ways a plan can go wrong. Write them down, with preferred solutions. There will always be unexpected changes. They should be added into the manual on a continuing basis. An on-going section where questions, problems and their solutions are all recorded will increase the integrity of your research.

Data collection methods

Before you start data collection, you need to ask yourself what data you are going to collect and how you are going to collect them. Which data, and the amount of data to be collected needs to be defined clearly. Different people (including several data collectors) should have a similar understanding of each variable and how it is measured. Otherwise, the data cannot be relied on. Furthermore, the decision to collect a piece of data needs to be justified. The amount of data collected for the study should be sufficient. A common mistake is to collect too much data without actually knowing what will be done with it. Researchers should identify essential data elements and eliminate those that may seem interesting but are not central to the study hypothesis. Collection of the latter type of data places an unnecessary burden on both the study participants and data collectors.

Different data collection approaches which are commonly used in the conduct of clinical research include questionnaire surveys, patient self-reported data, proxy/informant information, hospital and ambulatory medical records, as well as the collection and analysis of biologic samples. Each of these methods has its own advantages and disadvantages.

Surveys are conducted through administration of standardized or home-grown questionnaires, where participants are asked to respond to a set of questions as yes/no, or perhaps on a Likert type scale. Sometimes open-ended responses are elicited.

Medical records can be important sources of high-quality data and may be used either as the only source of data, or as a complement to information collected through other instruments. Unfortunately, due to the non-standardized nature of data collection, information contained in the medical records may be conflicting or of questionable accuracy. Moreover, the extent of documentation by different providers can vary significantly. These issues can make the construction or use of key study variables very difficult.

Biological materials collected from study participants, as well as various imaging modalities, are increasingly being used in clinical research. Collection and imaging need to be performed under standardized conditions, and ethical implications should be considered.

Data collection tool

You may need to collect information on paper. If you do, it is useful to have the actual code which should be entered into the computerized database written on the forms themselves (as well as in the manual). If you have access to an electronic database such as REDCap (a web-based application developed by Vanderbilt University to capture data for clinical research and create databases and projects [ 4 ]), you can enter the data directly as you get them (male; female) and the database will automatically convert the data into code. This reduces transcribing errors. Another common electronic option is Excel, which can also be used to manipulate the data. In spite of the advantages of recording data electronically, such as directly into REDCap or Excel, there are advantages to collecting and keeping the original data on paper. Paper data collection forms can be saved for audit or quality control. Furthermore, paper records cannot be remotely hacked. Moreover, if the anonymous electronic database is compromised or corrupted, you can re-create your database.

Data collectors

Good data collectors are worth gold. If they are thorough and ethical, you will get great data. If not, your data may be unusable. Make sure they understand research ethics, the need for protection of human subjects, and the privacy of data. Ideally, your data collectors would be blinded to the outcome of interest, to prevent bias. It is ok to blind data collectors to the research question, but they need to understand that collecting every variable the same way for each subject is essential to data integrity.

Data gatherers should be trained in advance of collecting any data. They need to understand informed consent and have the time to explain a study to the satisfaction of the subjects. The importance of conducting a dry run in an attempt to anticipate and address issues that can arise during data collection cannot be over-stated. It would even be worthwhile to pilot the research manual, to learn if everyone understands it the same way.

Data storage

Data collection, done right, protects the confidentiality of the subject as well as the data. Data must also be stored safely and securely. It is reasonable to back up your data in a different, secure location. You do not want to go to all the trouble of creating a protocol and collecting your data, only to lose it, or have no way to analyse it!

There are many reasons to keep your data safe and secure. Obviously, you do not want to lose your data. You may wish to use the data again. For example, you may wish to combine it with other data for a different study. An additional reason is that you do not want your subjects to risk a ‘loss of privacy’. Still another reason is that institutions and governments may require you to store data for a specified number of years. Know how long you must keep your data. Keep it in a locked cabinet in a secure room, or behind an institutional firewall.

Furthermore, if you keep a cipher , that is, a connector between a subject and their study number, keep that cipher separate from the research data. That way, even if someone learns that subject 302 has an embarrassing condition, they will not know who subject 302 really is.
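A minimal sketch of this separation is shown below. The function name, the three-digit study number range and the example subjects are assumptions for illustration, and Python's `random` module is used only for simplicity; it is not a cryptographic tool.

```python
import random


def pseudonymize(subjects, seed=None):
    """Split identifiable records into a cipher (key) and de-identified data.

    `subjects` is a list of dicts that each contain a "name" field plus
    study variables. The two returned structures should be stored apart.
    """
    rng = random.Random(seed)
    # Draw unique study numbers at random so they reveal nothing about
    # enrolment order or identity.
    study_numbers = rng.sample(range(100, 1000), k=len(subjects))
    cipher = {}          # study number -> identity; keep locked away separately
    research_data = []   # what analysts see: no names
    for number, subject in zip(study_numbers, subjects):
        cipher[number] = subject["name"]
        variables = {k: v for k, v in subject.items() if k != "name"}
        research_data.append({"study_number": number, **variables})
    return cipher, research_data


# Made-up subjects for illustration.
subjects = [
    {"name": "Subject One", "age": 41, "diabetes": 1},
    {"name": "Subject Two", "age": 58, "diabetes": 0},
]
cipher, research_data = pseudonymize(subjects, seed=1)
```

Only `research_data` would go into the analysis database; `cipher` would be stored in a different, access-controlled location.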

These days, almost everyone has access to computers and programs, locally or ‘in the cloud’. For statistical analysis, you will need to have your data in electronic form. If you started with paper, consider double entry (two data extractors for each record, then compare the two) for greater accuracy.
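The comparison step of double entry can be sketched as below. The record IDs, variable names and values are invented; the function simply lists every field where the two entries disagree so that it can be checked against the paper form.

```python
def compare_double_entry(entry_a, entry_b):
    """List the fields where two independent entries of the same records differ.

    Both arguments map a record ID to a dict of variable -> value.
    Returns (record_id, variable, value_a, value_b) tuples to resolve
    against the paper form.
    """
    discrepancies = []
    for record_id in sorted(set(entry_a) | set(entry_b)):
        row_a = entry_a.get(record_id, {})
        row_b = entry_b.get(record_id, {})
        for variable in sorted(set(row_a) | set(row_b)):
            value_a, value_b = row_a.get(variable), row_b.get(variable)
            if value_a != value_b:
                discrepancies.append((record_id, variable, value_a, value_b))
    return discrepancies


# Hypothetical entries typed by two different data extractors.
first_pass = {101: {"age": 34, "sbp": 120}, 102: {"age": 67, "sbp": 145}}
second_pass = {101: {"age": 34, "sbp": 102}, 102: {"age": 67, "sbp": 145}}
mismatches = compare_double_entry(first_pass, second_pass)
```

In this example the only mismatch is the transposed blood pressure for record 101. A record or variable missing from one entry shows up with `None` on that side.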

Tips on this topic and pitfalls to avoid

Hazard: no research manual

- No identified mechanism to document changes in procedures that may evolve over the course of the investigation.

- Vague description of data collection instruments to be used in lieu of rigorous step-by-step instructions on administering tests

- Only a partial listing of variables to be collected

- Forgetting to put instructions on the data collection sheet about how to code the data when transferring to an electronic medium.

Hazard: no assistant training

- Failure to adequately train data collectors

- Failure to do a Dry Run/Failure to try enrolling a mock subject

- Uncertainty about when, how and who should review gathered data.

Hazard: failure to understand data management

- Data should be easy to understand, and the protocol good enough that another researcher can repeat the study.

- Data audit: keep raw data and collected data

- Failure to keep backups

Annotated bibliography

- 1. RCR Data Acquisition and Management. This online book is pretty comprehensive. http://ccnmtl.columbia.edu/projects/rcr/rcr_data/foundation/ (Accessed 2019 June 23)

- 2. Qualitative research – Wikipedia: en.wikipedia.org/wiki/Qualitative_research (Accessed 2019 June 23) – this is a good overview with references so you can delve deeper if you wish.

- 3. Qualitative Research: Definition, Types, Methods and Examples: https://www.questionpro.com/blog/qualitative-research-methods/ (Accessed 2019 June 23) – this is a good overview with references so you can delve deeper if you wish.

- 4. Qualitative Research Methods: A Data Collector's Field Guide: https://course.ccs.neu.edu/is4800sp12/resources/qualmethods.pdf (Accessed 2019 June 23) – another on-line resource about data collection.

Additional reading about statistical variables

1. Types of Variables in Statistics and Research: A List of Common and Uncommon Types of Variables. https://www.statisticshowto.datasciencecentral.com/probability-and-statistics/types-of-variables/

2. Research Variables: Dependent, Independent, Control, Extraneous & Moderator. https://study.com/academy/lesson/research-variables-dependent-independent-control-extraneous-moderator.html

3. Knatterud GL, Rockhold FW, George SL, Barton FB, Davis CE, Fairweather WR, Honohan T, Mowery R, O'Neill R. (1998). Guidelines for quality assurance in multicenter trials: a position paper. Controlled Clinical Trials, 19:477–493.

4. Whitney CW, Lind BK, Wahl PW. (1998). Quality assurance and quality control in longitudinal studies. Epidemiologic Reviews, 20(1):71–80.

Additional relevant information to consider

Consider who owns the data before and after collection (this brings up questions of consent, privacy, sponsorship and data-sharing, most of which are beyond the scope of this paper).

Authors' contribution

Authors contributed as follows to the conception or design of the work; the acquisition, analysis, or interpretation of data for the work; and drafting the work or revising it critically for important intellectual content: ES contributed 70%; VT, MJ and HS contributed 10% each. All authors approved the version to be published and agreed to be accountable for all aspects of the work.

Declaration of competing interest

The authors declared no conflicts of interest.

Primary Data: Definition, Examples & Collection Methods

Introduction

- What is meant by primary data?

- What is the difference between primary and secondary data?

- What are examples of primary data?

- Primary data collection methods

- Advantages of primary data collection

- Disadvantages of primary data collection

- Ethical considerations for primary data

Understanding the type of data being analyzed is crucial for drawing accurate conclusions in qualitative research. Collecting primary data directly from the source offers unique insights that can benefit researchers in various fields.

This article provides a comprehensive guide on primary data, illustrating its definition, how it stands apart from secondary data, pertinent examples, and the common methods employed in the primary data collection process. Additionally, we will explore the advantages and disadvantages associated with primary data acquisition.

What is meant by primary data?

Primary data refers to information that is collected firsthand by the researcher for a specific research purpose. Unlike secondary data, which is already available and has been collected for some other objective, primary data is raw and unprocessed, offering fresh insights directly related to the research question at hand. This type of data is gathered through various methods such as surveys, interviews, experiments, and observations, allowing researchers to obtain tailored and precise information.

The main characteristic of primary data is its relevancy to the specific study. Since it is collected with the research objectives and questions in mind, it directly addresses the issues or hypotheses under investigation. This direct connection enhances the validity and accuracy of the research findings, as the data is not diluted or missing important information relevant to the research question.

Moreover, primary data provides the most current information available, making it especially valuable in fast-changing fields or situations where timely data is crucial. By analyzing primary data, researchers can draw unique conclusions and develop original insights that contribute significantly to their field of study.

What is the difference between primary and secondary data?

Understanding the distinction between primary and secondary data is fundamental in the realm of research, as it influences the research design, methodology, and analysis. Primary data is information collected firsthand for a specific research purpose. It is original and unprocessed, providing new insights directly relevant to the researcher's questions or objectives. Common methods of collecting data from primary sources include observations, surveys, interviews, and experiments, each allowing the researcher to gather specific, targeted information.

Conversely, secondary data refers to information that was collected by someone else for a different purpose and is subsequently used by a researcher for a new study. This data can come from existing sources such as academic journals, government reports, historical records, or previous research studies. While secondary data is invaluable for providing context, background, and supporting evidence, it may not be as precisely tailored to the specific research questions as primary data.

The key differences between these two types of data also extend to their advantages and disadvantages concerning accessibility, cost, and time. Primary data is typically more time-consuming and expensive to collect but offers specificity and relevance that is unmatched by secondary data. On the other hand, secondary data is usually more accessible and less costly, as it leverages existing information, although it may not align perfectly with the current research needs and might be outdated or less specific.

In terms of accuracy and reliability, primary data allows for greater control over the quality and methodology of the data collected, reflecting the current scenario accurately. However, secondary data's reliability depends on the original data collection's accuracy and the context in which it was gathered, which might not be fully verifiable by the new researcher.

Synthesizing primary and secondary data

While primary and secondary data each have distinct roles in research, synthesizing both types can provide a more comprehensive understanding of the research topic. Integrating primary data with secondary data allows researchers to contextualize their firsthand findings within the broader literature and existing knowledge.

This approach can enhance the depth and relevance of the research, providing a more nuanced analysis that leverages the detailed, current insights of primary data alongside the extensive, contextual background of secondary data.

For example, primary data might offer detailed consumer behavior insights, which researchers can then compare with broader market trends or historical data from secondary sources. This synthesis can reveal patterns, corroborate findings, or identify anomalies, enriching the research's analytical value and implications.

Ultimately, combining primary and secondary data helps build a robust research framework, enabling a more informed and comprehensive exploration of the research question.

What are examples of primary data?

Primary data collection is a cornerstone of research in the social sciences, providing firsthand insights that are crucial for understanding complex human behaviors and societal structures. This direct approach to data gathering allows researchers to uncover rich, context-specific information.

The following subsections highlight examples of primary data across various social science disciplines, showcasing the versatility and depth of these research methods.

Economic behaviors in market research

Market research within economics often relies on primary data to understand consumer preferences, spending habits, and decision-making processes. For instance, a study may collect primary data through surveys or interviews to gauge consumer reactions to a new product or service.

This information can reveal economic behaviors, such as price sensitivity and brand loyalty, offering valuable insights for businesses and policymakers.

Voting patterns in political science

In political science, researchers collect primary data to analyze voting patterns and political engagement. Through exit polls and surveys conducted during elections, researchers can obtain firsthand accounts of voter preferences and motivations.

This data is pivotal in understanding the dynamics of electoral politics, voter turnout, and the influence of campaign strategies on public opinion.

Cultural practices in anthropology

Anthropologists gather primary data to explore cultural practices and beliefs, often through ethnographic studies. By immersing themselves in a community, researchers can directly observe rituals, social interactions, and traditions.

For example, a study might focus on marriage ceremonies, food customs, or religious practices within a particular culture, providing in-depth insights into the community's way of life.

Social interactions in sociology

Sociologists utilize primary data to investigate the intricacies of social interactions and societal structures. Observational studies, for instance, can reveal how individuals behave in group settings, how social norms are enforced, and how social hierarchies influence behavior.

By analyzing these interactions within settings like schools, workplaces, or public spaces, sociologists can uncover patterns and dynamics that shape social life.

Primary data collection methods

Primary data collection is an integral aspect of research, enabling investigators to gather fresh, relevant data directly related to their study objectives. This direct engagement provides rich, nuanced insights that are critical for in-depth analysis. Selecting the appropriate data collection method is pivotal, as it influences the study's overall design, data quality, and conclusiveness.

Below are some of the different types of primary data utilized across various research disciplines, each offering unique benefits and suited to different research needs.

Surveys

In-person and online surveys collect data from a large audience efficiently. By utilizing structured questionnaires, researchers can gather data on a wide range of topics, such as attitudes, preferences, behaviors, or factual information.

Surveys can be distributed through various channels, including online platforms, phone, mail, or in-person, allowing for flexibility in reaching diverse populations.

Interviews

Interviews provide an in-depth look into the respondents' perspectives, experiences, or opinions. They can range from highly structured formats to open-ended, conversational styles, depending on the research goals.

Interviews are particularly valuable for exploring complex issues, understanding personal narratives, and gaining detailed insights that are not easily captured through other methods.

Focus groups

Focus groups involve guided discussions with a small group of participants, allowing researchers to explore collective views, uncover trends in perceptions, and stimulate debate on a specific topic.

This method is particularly useful for generating rich qualitative data, understanding group dynamics, and identifying variations in opinions across different demographic groups.

Observations

Observational research involves systematically watching and recording behaviors and interactions in their natural context. It can be conducted in various settings, such as schools, workplaces, or public areas, providing authentic insights into real-world behaviors.

The observation method can be either participant, where the observer is involved in the activities, or non-participant, where the researcher observes without interaction.

Experiments

Experiments are a fundamental method in scientific research, allowing researchers to control variables and measure effects accurately.

By manipulating certain factors and observing the outcomes, experiments can establish causal relationships, providing a robust basis for testing hypotheses and drawing conclusions.

Case studies

Case studies offer an in-depth examination of a particular instance or phenomenon, often involving a comprehensive analysis of individuals, organizations, events, or other entities.

This method is particularly suited to exploring new or complex issues, providing detailed contextual analysis, and uncovering underlying mechanisms or principles.

Ethnography

As a key method in anthropology, ethnography involves extended observation of a community or culture, often through fieldwork. Researchers immerse themselves in the environment, participating in and observing daily life to gain a deep understanding of social practices, norms, and values.

Ethnography is invaluable for exploring cultural phenomena, understanding community dynamics, and providing nuanced interpretations of social behavior.

Advantages of primary data collection

Primary data collection is a fundamental aspect of research, offering distinct advantages that enhance the quality and relevance of study findings. By gathering high-quality primary data firsthand, researchers can obtain specific, up-to-date information that directly addresses their research questions or hypotheses. This section explores four key advantages of primary data collection, highlighting how it contributes to robust and insightful research outcomes.

Specificity

One of the most significant advantages of primary data collection is its specificity. Data gathered firsthand is tailored specifically to the research question or hypothesis, ensuring that the information is directly relevant and applicable to the study's objectives. This level of specificity enhances the precision of the research, allowing for a more targeted analysis and reducing the likelihood of extraneous variables influencing the results.