Engineering: The Literature Review Process

- How to Use This Guide

- 1. What is a Literature Review?

- 2. Precision vs Retrieval

- 3. Equip Your Tool Box

- 4. What to look for

- 5. Where to Look for it

- 6. How to Look for it

- 7. Keeping Current

- 8. Your TBR (To Be Read) Pile

Tips for getting through that TBR pile, how to read a research article, and how to use a literature matrix.

- 9. Writing Tips

- 10. Checklist

You've been searching the literature and gathering documents: lots of documents. Your project folder in your citation manager is bulging, and if you've printed out those documents, the pile is huge.

Here's some advice on how to manage all this reading material.

- Tips For Getting Through Your TBR Pile

- Using a Literature Matrix

- Set Goals: Decide the date by which you need to finish your reading list. How many days away is that from today? How many documents are on your list? If you've got 200 articles to read and you want to finish within a month, you'll need to read 6-7 articles each day, or about 50 each week.

- Schedule Reading "Appointments" on Your Calendar: Don't leave this up to chance. Make an appointment with yourself as often as needed (an hour or two each day, several times a week, whatever you need). Then stick to it; don't cancel your reading time for other activities.

- Always Have Your Reading Available: You never know when you might get the "gift of time," so whether you prefer digital or print, carry the items you need to read with you. Whether you're riding the bus, waiting for someone to arrive for an appointment, standing in line, or riding that exercise bike, use these short breaks to pull out the next item in your reading pile.
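The "Set Goals" arithmetic can be checked with a few lines of Python. This is a minimal sketch. It assumes "a month" means 30 days, and the function name `reading_pace` and the example dates are hypothetical, chosen only to reproduce the 200-article example.

```python
from __future__ import annotations

import math
from datetime import date


def reading_pace(num_docs: int, deadline: date, today: date) -> dict[str, float]:
    """Work out how many documents per day and per week you must read to meet a deadline."""
    days_left = (deadline - today).days  # "How many days away is that from today?"
    if days_left <= 0:
        raise ValueError("deadline must be after today")
    per_day = num_docs / days_left
    return {
        "days_left": days_left,
        "per_day": per_day,
        "per_day_rounded_up": math.ceil(per_day),  # you can't read 2/3 of an article
        "per_week": per_day * 7,
    }


# The guide's example: 200 articles in about a month (hypothetical dates, 30 days apart).
pace = reading_pace(200, deadline=date(2024, 3, 31), today=date(2024, 3, 1))
print(pace)
```

With 30 days left, this comes to about 6.7 articles a day (7 if rounded up), or roughly 47 a week. That is consistent with the guide's "6-7 articles each day or about 50 each week."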

The following articles recommend ways to read and digest a research article:

- Keshav, S. How to Read a Paper. University of Waterloo (Ontario, Canada), August 2, 2013. "The key idea is that you should read the paper in up to three passes, instead of starting at the beginning and plowing your way to the end. Each pass accomplishes specific goals and builds upon the previous pass: The first pass gives you a general idea about the paper. The second pass lets you grasp the paper’s content, but not its details. The third pass helps you understand the paper in depth."

- Ling, Charles X. and Yang, Qiang. Crafting Your Research Future: A Guide to Successful Master’s and Ph.D. Degrees in Science & Engineering. Morgan & Claypool, 2012. Synthesis Lectures on Engineering #18. Section 3.3, "How to Read Papers," advises which parts of a journal article to read and what concepts to look for.

- Purugganan, Mary and Hewitt, Jan. How to Read a Scientific Article. Rice University, Cain Project in Engineering and Professional Communication, 2004. "Reading a scientific article is a complex task. The worst way to approach this task is to treat it like the reading of a textbook ..."

In your review you will need to "group" the literature that you've found. As you'll see in the next section, you can group by theme, method, topic, chronology, and other issues. To keep track of what each document says and which ones have similar information, create a literature matrix. The matrix will consist of a table, with each row representing a specific document. Each column in the table will represent different issues, topics, themes, etc. Once the table is filled out and you re-sort by the different columns, the patterns in the literature will stand out.
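A literature matrix is simply a table, so any spreadsheet works. For readers who prefer code, here is a minimal Python sketch of the same idea. Each row is a document, each column is an issue, and re-sorting or grouping by a column surfaces the patterns. The documents, column names and values below are hypothetical placeholders, not real papers. The helper names `sort_by` and `group_by` are likewise illustrative.

```python
from collections import defaultdict

# Hypothetical placeholder rows: one row per document, one column per issue/theme.
matrix = [
    {"doc": "Paper A (2019)", "method": "survey", "theme": "usability", "year": 2019},
    {"doc": "Paper B (2021)", "method": "experiment", "theme": "performance", "year": 2021},
    {"doc": "Paper C (2018)", "method": "survey", "theme": "performance", "year": 2018},
    {"doc": "Paper D (2022)", "method": "case study", "theme": "usability", "year": 2022},
]


def sort_by(rows, column):
    """Re-sort the matrix by one column, like clicking a spreadsheet header."""
    return sorted(rows, key=lambda row: row[column])


def group_by(rows, column):
    """Collect the documents that share the same value in a given column."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[column]].append(row["doc"])
    return dict(groups)


# Chronological view of the literature.
for row in sort_by(matrix, "year"):
    print(row["year"], row["doc"])

# Grouping by method or theme shows which documents cover similar ground.
print(group_by(matrix, "method"))
print(group_by(matrix, "theme"))
```

Grouping by "method" puts Papers A and C together as surveys. Grouping by "theme" pairs A with D (usability) and B with C (performance). Those are the kinds of clusters a review can be organized around.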

Here are some articles that show how to build a matrix.

- Writing a Literature Review and Using a Synthesis Matrix. VCU/NCSU

- Literature Review: Synthesizing Multiple Sources. VCU/IUPUI. See the literature review grid on page 2.


- Last updated: Jan 2, 2024 8:27 AM

- URL: https://libguides.asu.edu/engineeringlitreview


University of Illinois Chicago


Engineering: How to read a scientific paper


Structure of a Technical Paper

How to Read a Scientific Paper

- How to Read a Paper: A short work on how to read academic papers, organized as an academic paper. Some of the advice on doing a literature survey works better in the author's field (CS), but most of the material works for everyone.

- How to Read a Research Paper: Part of an assignment on reading academic papers for a CS class. It describes some useful strategies and sets out expectations for the time and effort involved.

- How to Read Scientific Papers Without Reading Every Word: A blog post that gives a similar but differently worded take on the same issue.

- How to Read Mathematics: An article discussing how to approach reading a math article or chapter.

Health Promotion Practice. (2020). How to Read a Scholarly Article [Infographic].

http://healthpromotionpracticenotes.com/2020/07/new-tool-how-to-read-a-scholarly-article-infographic/

Assistant Professor and Reference & Liaison Librarian (STEM)


- Last Updated: Jun 17, 2024 4:44 PM

- URL: https://researchguides.uic.edu/engineering


Infographic: How to read a scientific paper

April 5, 2021 | 3 min read

By Natalia Rodriguez

Mastering this skill can help you excel at research, peer review – and writing your own papers

Much of a scientist’s work involves reading research papers, whether it’s to stay up to date in their field, advance their scientific understanding, review manuscripts, or gather information for a project proposal or grant application. Because scientific articles are different from other texts, like novels or newspaper stories, they should be read differently.

Research papers follow the well-known IMRD format: an abstract followed by the Introduction, Methods, Results and Discussion. They contain many cross-references and tables, as well as supplementary material such as data sets, lab protocols and gene sequences. All of these features can make them dense and complex. Learning to understand them effectively is a matter of practice.

You can use ScienceDirect’s recommendations service to find other articles related to the work you’re reading. Once you've registered, the recommendations engine uses an adaptive algorithm to learn your research interests. It can then find related content from our database of more than 3,800 journals and over 37,000 book titles. The more often you sign in, the better it gets to know you, and the more relevant the recommendations you'll receive.

Reading a scientific paper should not be done in a linear way (from beginning to end); instead, it should be done strategically and with a critical mindset, questioning both your understanding and the findings. Sometimes you will have to go backwards and forwards, take notes and keep multiple tabs open in your browser.

Lenny Rhine. “How to Read a Scientific Paper,” Research4Life Training Portal

Valerie Matarese, PhD (Ed.). “Using strategic, critical reading of research papers to teach scientific writing,” Supporting Research Writing: Roles and Challenges in Multilingual Settings, Chandos Publishing, Elsevier (2012)

Allen H. Renear, PhD, and Carole L. Palmer, PhD. “Strategic Reading, Ontologies, and the Future of Scientific Publishing,” Science (2009)

Angel Borja, PhD. “11 steps to structuring a science paper editors will take seriously,” Elsevier Connect (June 24, 2014)

Mary Purugganan, PhD, and Jan Hewitt, PhD. “How to Read a Scientific Article,” Cain Project in Engineering and Professional Communication, Rice University

“How to Read and Review a Scientific Journal Article,” Writing Studio, Duke University

Robert Siegel, PhD. “Reading Scientific Papers,” Stanford University

Related resources

Elsevier Researcher Academy: Free e-learning modules developed by global experts; career guidance and advice; research news on our blog.

Research4Life Training Portal: A platform with free downloadable resources for researchers. The Authorship Skills section contains 10 modules, including how to read and write scientific papers, intellectual property and web bibliography, along with hands-on activity workbooks.

Career Advice portal of Elsevier Connect: Stories include tips for publishing in an international journal, how to succeed in a PhD program, and how to make your mark in the world of science.


How To: Read an Academic Paper

To start the course, you’re going to be reading a bunch of papers, so a good place to start is with this question: how do you read an academic paper? In some ways, that’s putting the cart before the horse, because to read a paper you first need to find it. We’ll assume you’re starting off with the course library, though, so finding something to get started with should be easy.

How Not to Read an Academic Paper

This prompt might sound silly. How do you read an academic paper? Well, you start on page 1, and read to the last page, right?

You could do that, but what you’ll find is that you spend a lot of time on the far right side of the curve of diminishing returns. So you don’t want to just start on page 1 and read to the last page. What should you do instead? To answer that, it helps to understand a little about how most academic papers are organized.

How Academic Papers are Organized

Most academic papers follow something like this structure:

- Abstract: A high-level summary of the entire paper

- Introduction and Background: What the problem or question is and where it came from

- Related Work: What others have done in this space, likely specifically contextualizing why the authors’ solution is necessary

- Solution: What the authors did to address the problem or answer the question

- Methodology: How the authors evaluated their solution

- Results: The results of evaluating the solution

- Analysis: The authors’ interpretation of the results

- Conclusion: The limitations of the work and what the authors will do next

There is lots of variation there, of course. Pure research papers don’t really have a “Solution” section since they’re often not building something. If a paper was based exclusively on doing some surveys or interviews, for example, it likely would jump straight to methodology. Similarly, there are papers that don’t do much evaluation: they propose a design of some tool or theory, but the contribution is the idea itself.

Generally, though, you can map most papers onto something resembling this structure.

Intention Matters: Getting Started

What you do next depends on why you’re reading a particular paper. What you’re seeking to get out of it matters.

Early in the class, you’re probably just trying to get a high-level view of lots of work going on in a field. For that: start by reading the Abstract. Sometimes, that will be all you need from that paper. That’s ok. You may find that once you settle on an area you want to explore, you might just read a bunch of abstracts to get a view of the field.

When you’re just starting out, though, you probably want to go just a bit deeper. You want to use this paper as an anchor for further exploration. Read the Introduction next. See what problem they’re setting up and how they approach it. Then, jump to the conclusion. See what they found. Oftentimes, this is enough. Then, if you want to find more papers like this one, jump back to the related work section and see what you might want to read next. Your goal here is just to get a feel for what they did and why: once you know what a bunch of people have done and why they’ve done it, you can start to position your own work and take a deeper dive.

For the first couple weeks of this class, that’s about where you’ll stay: you want to get a broad look at what lots of people are working on and start to understand the overall trajectories of the field. You don’t need to get too far into the details of how they did stuff or how they know it worked.

Intention Matters: Zooming In

Once you’re comfortable zooming in on an area, though, your intention shifts a little bit. Now it’s less “Know what others are doing” and more “See what needs to be done”. With that change in intention comes a slight change in how you read. Now, you want to focus a little more on the Future Work and/or Limitations sections of the paper. What do the authors say still needs to be done? That could be more work to expand on the current state of the field, or it could be work to resolve limitations in the existing study. For example, imagine a tool was tested with 15 middle school students and found to be good for learning: does that hold true when tested with 150 students in a less controlled environment? Those are the kinds of things that come next.

At this point, you may also want to finally visit the methodology section, especially if there’s a paper whose conclusions you disagree with. You want to find out how they came to their conclusions, and see if there’s an alternate explanation for their results. Your follow-up work then might be to test that alternate explanation.

In any case, your goal here is to figure out exactly how your new work is going to map to the work that’s already been done: it might fill in some holes, push some boundaries, or even refute existing ideas.

So Why Is It There?

You might be wondering: if I really only need to read the introduction and conclusion, why is the rest of the stuff even there? Here, it’s important to remember that as someone doing research in these areas, you aren’t the original target audience of this publication. The target audience was the academic community in which it was published, and the goal of the paper was to convince that community that the conclusions of the paper are valid and properly scoped. The methodology, raw results, etc. are all in service of that goal: to convince the community that the paper’s conclusions are believable.

You’re welcome to read those areas, too, and come to your own conclusions, and as suggested above, if you’re working on something super-similar to what someone else has done, you probably want to do that. But for the vast majority of your reading, it’s usually sufficient to know, “This paper was selected for publication by this respected venue after a rigorous process of peer review.” That basically says, “If it’s good enough for them, it’s good enough for me.”

You Can Put the Cart Before the Horse

This entire write-up is written from the perspective of someone coming into this world with no prior ideas and looking for a problem to solve. However, many of you already have some ideas. You might want to build an intelligent tutoring system for your daughter’s Algebra class, or research whether bring-your-own-technology initiatives improve learning outcomes in disadvantaged areas.

None of the above should suggest that you can’t do that. Rather, your existing ideas just give you a clearer anchor on where to start. If you already know what you’d like to work on, start with that area of the literature, and read with a particular eye toward developing your own idea. It’s a near-certainty that others have done something like what you want to do, but they may have done it in a different domain, a different grade level, with a different technology, etc. If you already know what you want to do, then your goal for this phase of the class is to find out how to put your ideas in the context of the community, as well as to make sure you’re building off whatever lessons have already been learned.

The biggest mistake people make in this class is to assume that their ideas are totally new, and therefore they do not need to look at what others have done. This is never true. Even if your idea is very different from others, there are analogues to others’ work from which you can likely learn. And even if your idea somehow is totally new, you need to be able to explain what makes it new, which requires understanding what others have done.

But I’m getting ahead of myself. We’ll talk about this more when we talk about how to find additional sources later in the week.

Research vs. Tools

You may notice much of this applies to academic research, but many of y’all are looking at developing tools or courseware. The same principles apply, however. You’re looking for what others have done in an area to get a general feel, and then you’re zooming in to your specific competitors or collaborators. So, the skillset we’re describing generally transfers. The big difference in research is that other researchers are usually far more open about reporting their results, and peer review keeps claims a bit more honest. So, developing these skills in academia is a great exercise even if you’re planning on taking a more business-oriented route: the skills are the same, but the business world is more guarded in the data it makes available.

In fact, in many ways, these skills are even more applicable to the business world. If you ever take a class on Entrepreneurship or go through a startup incubator, one of the lessons they’ll drill into your head is that if you think no one has done your idea before, then you haven’t looked hard enough. Nearly every idea has been explored; the question is always: what are you going to do better, or different?

But Don’t Take My Word For It…

Reading academic papers is a well-explored topic, and others have their own takes. If you want more on this, I recommend starting with UBC’s How to Read an Academic Paper video. It’s pretty similar to what I’ve written here, but with a more narrative visual style, so it’s a bit more approachable and digestible.

Then, I’d read Adam Ruben’s “How to read a scientific paper”, in particular his “10 Stages of Reading a Scientific Paper”. It won’t really help you that much, but it’ll reassure you that you’re not alone in finding this somewhat intimidating.

And then I’d read Elisabeth Pain’s “How to (seriously) read a scientific paper”. She asked several scientists how they approach it. I recommend this article because it also gives you a broader diversity of perspectives: maybe something else would work better for you. I’d also recommend William Griswold’s “How to Read an Engineering Research Paper”, especially for more design- and implementation-oriented papers rather than user research and testing papers (thanks to Chu for suggesting this one!).

You’ll rarely read entire papers. Instead, read just enough to get what you need. If you’re just trying to get a high-level feel for the work, read abstracts and conclusions. If you’re trying to understand the field as a whole, read the introduction and related work. If you’re trying to specifically position your work relative to a certain paper, read the methodology, analysis, future work, and limitations sections.
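The rule of thumb above boils down to a lookup from reading goal to sections. The minimal Python sketch below encodes it. The goal labels and the function name `sections_to_read` are hypothetical. Real papers will not always use these exact section names, so treat the mapping as a starting point, not a fixed recipe.

```python
from __future__ import annotations

# Hypothetical goal labels mapped to the sections the paragraph above suggests reading.
SECTIONS_BY_GOAL = {
    "high-level feel": ["Abstract", "Conclusion"],
    "understand the field": ["Introduction", "Related Work"],
    "position my work": ["Methodology", "Analysis", "Future Work", "Limitations"],
}


def sections_to_read(goal: str) -> list[str]:
    """Return the sections worth reading for a given goal; raise on an unknown goal."""
    try:
        return SECTIONS_BY_GOAL[goal]
    except KeyError:
        raise ValueError(f"unknown goal {goal!r}; choose from {sorted(SECTIONS_BY_GOAL)}") from None


print(sections_to_read("understand the field"))
```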

Next time, we’ll talk about how to find papers to read.


The future of reading

Rebecca Silverman is an expert in how humans learn to read.

It’s a complex process, she says. First we must connect letters and sounds to decode words in texts. Researchers know a lot about the decoding process and how to teach it. But, beyond that, we must also comprehend what the words in texts are conveying. Comprehension is complex, and researchers know much less about the comprehension process and how to teach it, Silverman tells host Russ Altman on this episode of Stanford Engineering’s The Future of Everything podcast.

Related: Rebecca Silverman, associate professor of education

[00:00:00] Rebecca Silverman: One of the hardest things that kids learn to do in school, um, that is actually not very natural for them and is a, it's kind of a huge hurdle for them to overcome and actually allows them to access everything else in school. You know, math has word problems in it, science they need to read the textbook, social studies they need to, you know, understand source documents so that in order for them to access the rest of education, um, so you really need to be able to unlock this very complex problem of learning to read.

[00:00:37] Russ Altman: This is Stanford Engineering's The Future of Everything, and I'm your host, Russ Altman. If you enjoy The Future of Everything, please follow or subscribe wherever you get your podcasts. This will guarantee that you'll never miss an episode.

[00:00:49] Today, Rebecca Silverman will tell us that learning to read is hard. First you need to learn to decode the letters and turn them into words. Then you need to comprehend what's being said. It turns out that that comprehension part is the hardest. It's the future of reading.

[00:01:06] Before I get started, please remember to follow the podcast if you're not doing so already. And if you're listening on Spotify, hit the bell icon. This ensures that you'll get alerted to the new episodes. And as I love to say, you won't miss the future of anything.

[00:01:22] Now, reading is key to life and to learning. We read all the time, we see signs, we automatically read the words that are presented to us. But most of us learned how to read as a kid, and there's huge variability in that experience. Some kids learn very early, remarkably early. Others take some time, others may struggle with it for much longer periods of time. Different cultures have different challenges in learning how to read because they have different letters. Some cultures have easy letters, some cultures have more difficult to read symbols. And that can affect the speed at which people learn to read.

[00:02:04] But really, reading is not just, yes, I can read, no, I can't read. It's a lifelong experience of getting better and better at decoding the words and then understanding what the author is trying to tell you.

[00:02:16] Well, Rebecca Silverman is a professor of education at Stanford University. She's an expert at literacy, child development, and how people learn how to read. She studies this from the perspective of the students, of the teachers, of the families, and of the technologies that are becoming available to help kids and others learn how to read.

[00:02:37] Rebecca, how should we think about reading? Like, it's more than just looking at letters and coming up with a word. I think, and your research has shown this. So, as we start this conversation, how should I think about the process of reading and acquiring the ability to read?

[00:02:55] Rebecca Silverman: So, I think as adults, many of us, uh, think of reading as just almost second nature. It's just something we can't, we almost can't help but read the signs on the street. We can't help but, you know, read the magazine covers in the, the grocery aisle. Um, but when we think about kids learning to read or even adults who've never read before, it is an incredibly complex task. And so, I think the way we should think about it is one of the hardest things that kids learn to do in school, um, that is actually not very natural for them and is a, it's kind of a huge hurdle for them to overcome. Um, and actually allows them to access everything else in school.

[00:03:37] You know, math has word problems in it, science, they need to read the textbook, social studies, they need to, you know, understand source documents. So that in order for them to access the rest of education, um, they really need to be able to unlock this very complex problem of learning to read.

[00:03:53] Russ Altman: And it's intimately connected, I believe, and I think you've written about this, it's connected to, um, their understanding of like narrative and stories. It's, not just decoding single words, but it becomes decoding sentences at a time. So how do you break down the elements of reading when you try to study it kind of scientifically?

[00:04:14] Rebecca Silverman: Yeah, so research has, um, gelled on this. It's a very simple model of reading. It's kind of a way to think about reading as the combination of decoding and linguistic comprehension. So decoding is putting together those letters and sounds, linguistic comprehension is understanding the meanings of the words and concepts that, you know, those sounds create. And so when you put those together, you've got reading, essentially.

[00:04:40] What we know and what we, um, have figured out pretty well is how to teach the connecting letters and sounds. Um, it's a fairly, um, constrained process. You know, there's only so many letters, only so many sounds we need to learn. Um, and once we've done that, we become automatic and we can decode. Um, the comprehension part of it is a lot more challenging. Um, the way that we put together words into sentences, the way that, um, words mean different things in different contexts, all of that is incredibly complex, um, and takes children a lifetime to learn. I mean, we're still learning words even to this day, you know, new words are being invented as we speak.

[00:05:18] So, um, all of that, you know, creates a huge kind of challenge. And one of the things that, I think one of the things that's that we've discovered in education is that reading comprehension is one of the most difficult things to change in terms of being able to do an intervention and change that that trajectory for kids.

[00:05:34] So we're still trying to figure it out. We've got the decoding down pretty well. We kind of understand what we're doing there. There's a lot less consensus on the comprehend, the linguistic comprehension part of things.

[00:05:44] Russ Altman: Yeah, so tell me more about that because I'm not even sure I know what it means to say, um, you would change somebody's reading comprehension. Do you mean that their depth of understanding of the text that they're reading? And when you say it's difficult to change, do you mean over time? Um, if they're not great at it, it's harder to get them to become great at it?

[00:06:04] Rebecca Silverman: Right. So with decoding, um, when we're teaching letters and sounds, we can teach those in a fairly linear trajectory. Um, we can do that over a fairly, um, constrained period of time. Um, and then once kids know that, they become fluent and they take off. With reading comprehension, um, you're involving things like understanding word meaning, understanding how words change, um, in different contexts, understanding how sentences are put together, how those sentences combine to make paragraphs. How those paragraphs combine to make larger text, um, and then you need text structure, you need background knowledge, you need all of this stuff to put those ideas together. Um, and that, intervening on that, you know, kind of when, if kids have challenges with that, um, it, we can't do it in a few weeks. We can't do it even in a few months. Uh, for many children, we need to focus on this year after year after year to give them support in understanding how language, uh, connects and is constructed in order to make meaning in text. So it's a big project.

[00:07:06] Russ Altman: Makes perfect sense. And I even see it, you know, teaching undergraduates at Stanford University how to write, that they come in with very different abilities to make the mappings that you were just discussing.

[00:07:17] Rebecca Silverman: Yeah, absolutely.

[00:07:18] Russ Altman: And it is hard to move them, although we can move them, that's why we're teachers. We're committed to the idea that we can help our students get better. Okay, my head is exploding with questions, so I want to go back to a few basic things. How much variability do we see in the acquisition of reading, uh, in typical children?

[00:07:36] So I'm, of course there will be children with disabilities, and I don't know if you want to include them or put them aside. But for children who don't have obvious disabilities, how much variability do we see and what are the sources of those variability?

[00:07:47] Rebecca Silverman: So we see huge variability. Some children, um, we estimate like roughly five, uh, to twenty percent of kids learn to read fairly quickly. They comprehend things fairly easily. Um, another say, forty, thirty, thirty to forty percent of kids, they struggle a little bit, but they need some instruction. And then you've got a good, you know, twenty to thirty percent of the kids who really have difficulty. Um, and those difficulties could come from decoding, could come from linguistic comprehension. It could also come from a variety of other things like their memory ability, their, um, their executive functioning ability, um, their motivation, their engagement, their, their, uh, socioemotional, uh, context. So there's so many things that go into, um, what it takes to become a reader that, um, the individual variation is quite large.

[00:08:38] And so, um, when my daughter entered kindergarten, she didn't know how to read very well. Um, she was learning her letters and sounds like most kindergartners, but there was a kid in her class of the same sort of socioeconomic background, um, who was reading chapter books. You know, this is in kindergarten. So, you know, you just think of the range that is in any given class, any given, group of kids and it's huge and it's a huge challenge for teachers, too.

[00:09:04] Russ Altman: I'm sure it is, and do they have an, uh, a knowledge about how much of this is environmental versus kind of inborn, and, and I don't even know how relevant that question is but I’m sure people have shown some interest in it.

[00:09:15] Rebecca Silverman: Yeah, absolutely, and it's kind of like a nature-nurture interaction, you know, there's a certain extent to which um kids are born with certain, you know, linguistic abilities, certain memory capabilities, those kinds of things are gonna feed into their ability to learn to read.

[00:09:26] And then there's the environmental part, you know, how much exposure do they have to text? How much language do they have in their environment? Um, how many conversations are they having about books, all of these things? And so when you combine those two things, you get lots of different combinations. And so that's why we can't, you know, we can't say just because somebody is in this environment, that they're gonna be this kind of reader. Or just because they're born this way they're going to be this kind of reader. It's really the interaction between the two.

[00:09:55] Russ Altman: What about cross culturally? Do we see the same kind of timing and acquisition of reading skills? I'm thinking of, you know, Chinese and Hebrew and then European languages. They're all very different even in terms of what the letters look like and left to right, right to left, whether they're pictures or whether they're phonetic. Tell me about the global picture of reading.

[00:10:15] Rebecca Silverman: Sure. So, um, those two basic components that I talked about, the decoding and the language comprehension, um, we found that those are consistent across languages and cultures. So those are kind of basic building blocks, basic elements.

[00:10:29] However, the trajectory of those things is going to be different across languages. So some languages are what we call very transparent. They, um, the letters kind of clearly match the sounds and so kids don't spend a whole lot of time on the decoding part and can move very quickly to the comprehension part of things. That would be a language like Finnish, for example. Children in Finland learn, learn to read, learn the decoding part pretty quickly because that language is very transparent. Um, other languages are much more opaque. English is very opaque, um, and because we borrow, uh, language combinations and spellings from all kinds of languages. So it takes much longer, um, for kids in, that are speaking English, um, to learn to read.

[00:11:14] And then you have, um, I think in an even more difficult level, um, when you have kids who are learning, uh, symbols and putting those symbols to sounds, that's an even more opaque uh, kind of language. And takes perhaps longer for kids to learn to read and write with characters. So you've got this kind of trajectory, but the same basic components are there across the languages.

[00:11:35] Russ Altman: What are the present research challenges that an expert like you is focusing on? Like there's so much of this, there's so many ways to attack this. So how do you choose to spend your time? What are the big questions that you ask in your, in your work?

[00:11:49] Rebecca Silverman: So the two probably biggest things I think about are, how do we identify which kids are having difficulty specifically with either decoding or language comprehension or both? Um, and so in that space, I think about how do we develop measures to identify these kids? How do we develop systems to identify these kids? And then on the other hand, it's once we've identified them, how do we support them through intervention?

[00:12:15] And so, um, different kids are going to need different things. They might need more of the decoding, more of the language comprehension. And then when we get to the language comprehension, for kids who have difficulty with that, um, really, how do we break that down for them? How do we support them in understanding all of the things that they need to know in order to be able to understand text?

[00:12:36] Um, and so in some of the intervention work that I do, uh, we kind of break down language. You know, what do words mean? Um, how are words built? Uh, how are sentences built? Um, and then we go all the way out to a very macro level. How do we think about ideas? How do we, um, debate different perspectives? Uh, and so even fourth and fifth graders are doing this like really big work just like college kids, um, in order to become readers.

[00:13:03] Russ Altman: Okay. So that, those are great questions and maybe we can go back and just take them, uh, one at a time. So the first one, the first challenge is identifying, uh, the kids who might have challenges. How do we do this? What is our, what is the state of our ability? I mean, I remember, you know, growing up, it was, you know, this was a long time ago. And even as a first grader, I could tell that this was very coarse. You know, it was like jets and turtles and rabbits or whatever, and like, that was it. And, um, it was thinly veiled how much confidence the teacher had in each of our abilities to read. Uh, have we gotten any better?

[00:13:38] Rebecca Silverman: We have, um, we have, particularly with the decoding. There are really good measures that help us identify kids who are going to struggle with that, either because they have a hard time, uh, discerning the sounds of the language or because they have a hard time matching those sounds to letters.

[00:13:52] We're, we have good measures of that. Um, I think we're still at a point where we're trying to make sure that all schools are using those measures and using them consistently. So there's sort of this like more systemic and policy level, um, aspect to education. Um, the measures of language comprehension, uh, we're much further behind.

[00:14:11] And so we have a much harder time, um, figuring out is it, for example, because a child has a, uh, disability in the language comprehension area, or is it because maybe they speak multiple languages and haven't quite figured out English yet, for example. So we have a lot more, uh, of a challenge and a problem to solve in terms of how do we capture and how do we identify kids with comprehension-based difficulties.

[00:14:38] Russ Altman: Do they tend to be, um, and forgive me for being so simplistic, but like read this passage and answer questions? Is that still where we are?

[00:14:46] Rebecca Silverman: Yeah, absolutely. And sometimes it's broken down a little bit more into like, what does this word mean? And you've got some multiple-choice options. But we're pretty, uh, we're pretty basic when it comes to how to measure those things right now. And so I think coming up with new ways to do that is really, uh, is really going to be important in the future.

[00:15:03] Russ Altman: I had a very strange experience as a child where one of my teachers after a standardized test of reading came up to me and said, Russ, you're a much better reader than I thought. And even as a kid, I could tell he was saying, it was my English teacher, I could tell he was saying, I thought you were not so good at reading and didn't understand. But evidently this test is indicating that you do. And I remember being confused about what signals I was sending in class that he kind of thought I was, uh, not very strong even. And he was so surprised by these test results. Uh, it's just like seventh grade. And it was, he was a great teacher.

[00:15:42] So I, this is a much bigger tech discussion. But I want to ask you about technology and the role of technology. Um, uh, is there data about the differences of reading on paper, on a tablet, on a computer. Uh, as an old guy, I am extremely aware that I am so much better with paper, and for serious things that I really care about, I know it kills trees, but I just have to print things out, and I don't know if that's just an anecdote, or if there's actually data about this.

[00:16:12] Rebecca Silverman: Yeah, so there is research that looks at the complexity of reading, um, in a, uh, on paper versus in a digital environment. And part of what you need to consider is that the actual act of reading in those two, um, contexts is very different. On paper, you're going, you know, you're turning pages. You can't really move around as much, uh, as easily. Um, whereas on a digital text, you kind of need to understand, um, parts about like the digital nature, like that you can move very easily from one place to another. Sometimes there's, you know, little pop-outs or hypertext that you need to pay attention to in order to understand what's going on. Um, so yes, uh, reading in a digital realm is more difficult, um, and can be harder for kids. But it's something that kids can learn and it's something that we can teach them to do better.

[00:17:00] Um, so I would say that, you know, when we're thinking about the world in the future. I think kids will be reading on e-readers and on, online and that kind of thing. And so part of our job is to teach them about how that might be similar and different from reading on paper.

[00:17:15] Russ Altman: And I take it that most kids these days are still learning on paper.

[00:17:19] Rebecca Silverman: Not necessarily. I mean to some extent, yes, they're learning on paper first. But they quickly move to e-readers in a lot of contexts. Um, there's been, particularly in the pandemic, there was a lot of exposure to technology. And so now there's a lot of, um, tools where kids can access books online, um, which allows them to have this whole other library outside of their school that they can access. Um, and so kids are reading online at a much earlier age and, um, and much more consistently than they ever had in the past.

[00:17:49] Russ Altman: This is the Future of Everything with Russ Altman, more with Rebecca Silverman next.

[00:18:08] Welcome back to The Future of Everything. I'm Russ Altman and I'm speaking with my guest professor Rebecca Silverman, about how people learn how to read.

[00:18:15] In the last segment, we went over some of the key challenges in reading, decoding the words, comprehending the words, and a little bit about some of the technologies and techniques that are used to identify when it's going well and when it's a problem.

[00:18:29] In this segment, Rebecca will tell us about the role of technology in learning. She'll tell us about the role of families and family life, and she'll talk about teachers, the ones to whom many of these responsibilities fall in the end.

[00:18:42] Rebecca, I wanted to ask you a little bit more about technology. It's everywhere. We're seeing a boom in AI. I'm guessing that this is affecting readers, new readers, new learners, and their teachers a lot. So, how is technology doing with the, in the reading industry?

[00:18:58] Rebecca Silverman: So, especially in the last few years, there's been a huge boom in educational technology, specifically focused on literacy. Um, and so one of the things that we see is, um, parents, families using a lot more technology in their homes, teachers using and accessing a lot more technology. Um, I think one issue is that not all of these technologies have been explored in research. So we don't know exactly how all of them, uh, play out. What we do know is that, um, we need to teach kids how to use them and how to use them appropriately, um, in order to get the learning that we want from them. Um, we also know that some content is better than other content, so content that's built for specific purposes and that have really thought about how learners learn and instructional design, those tools are going to be better than tools that, you know, are just fun.

[00:19:47] Russ Altman: Yes.

[00:19:47] Rebecca Silverman: Um, and, uh, we also know that some tools do seem to have, uh, really positive effects and have had studies that have shown that specifically, and this will tie back to what we were talking about before in the area of decoding. We know what it means to teach decoding, and we've kind of figured that out in a digital world as well. Less so on the language comprehension side of things.

[00:20:07] Russ Altman: Yeah, the comprehension seems to be a holy grail that has come up now a couple of times, as we really are, that's an area of focus in the future, it sounds like.

[00:20:15] Rebecca Silverman: Absolutely, and trying to figure out, there have been studies of how, um, say, instructional agents, in, um, in a virtual world might be able to support, uh, learners in thinking about text or thinking about, um, you know, words and meaning in text. And so that's an area of, um, of kind of future growth. But I think that's definitely a realm, uh, of exploration in the future.

[00:20:38] Russ Altman: Great. And then what about, uh, what about families? You mentioned families just now in your answer, that families are bringing tools into the home and new learners are reading or being exposed to these. Let's talk a little bit about the greater role of families. You know, people are always told it's critical to read to your kid, like even maybe even in utero, but certainly ex-utero. You know, we want to be sitting down, um, you know, even with kids who don't talk yet and then having them look at the pictures and look at the words. Um, what's understood about that process and what are the best practices and the best advice these days about, uh, new parents, uh, and their child?

[00:21:13] Rebecca Silverman: So, I think some of the same things are still there. We want to talk to kids, we want to read to kids. I think, um, what's important for families to know is that they should do that in the way that is most comfortable for them, whether culturally or linguistically. So, if families speak a certain language in the home and kids might learn a different language at school, that's fine. Speak to them in their home language. What we know is that developing that home language is crucially important for kids developing, um, their language and literacy skills later on. Um, and also, um, in ways that are culturally appropriate, you know, some families do more storytelling than book reading, and that's okay. That's still setting up the same fundamental functions of, you know, using language to make meaning, um, and to, uh, use for communication. And so I think…

[00:21:58] Russ Altman: That's great news. It's actually great news that telling stories and an oral tradition, you shouldn't feel bad about doing that. It's still contributing potentially even to the comprehension, in fact, definitely to the comprehension part of the, uh, ledger.

[00:22:13] Rebecca Silverman: Absolutely. I think parents a lot of times feel like, you know, oh, I should be doing it this way. And it feels unnatural to them. It feels like they're engaging with their kids in a way that's not comfortable for them. And I think I would say to parents, you know, go back to what makes sense for you and your family. And most of the time that's going to set kids up for success in school.

[00:22:31] Russ Altman: You know, speaking about families, I want to ask a slightly off-track question. But it was one that I had written down and I really wanted to know the answer, which is sometimes people, uh, for whatever reason in their life haven't learned how to read as a kid and they take on the learning of reading as an adult. Um, we know for languages, like that there's a golden period where kids can learn languages, like speaking a new language very easily and that becomes much harder. Is it the same situation for reading or is it different?

[00:22:59] Rebecca Silverman: So, reading is going to be hard no matter, you know, when we do it. So it's going to be hard as an adult. It's going to be hard as an adult because it feels like we're doing something that, you know, kids can learn to do. And so in that sense, it is going to feel harder. But it's the same task, especially if, um, adults have already developed a lot of the, um, vocabulary skills and language skills that would help them understand things. Then what they're doing is really just figuring out how to break the code, how to connect letters and sounds. Um, and so it's really fundamentally the same task. And, um, you know, as long as adults kind of recognize that it's going to be harder because they're older and it feels, uh, you know, like something a child should be able to do, they can learn, um, to read.

[00:23:43] Russ Altman: Great. So this is really good news. And it's not like language. They, you're always in the game for learning how to be a reader.

[00:23:51] Rebecca Silverman: Absolutely.

[00:23:52] Russ Altman: So that's great news. Okay. Well, we've gone twenty-one minutes and we've hardly talked about teachers. But I think it's time. You've been a teacher of students, of small children. Tell me how this world is for teachers. I mean, I guess it's kind of, as you said, it's kind of first grade, maybe in kindergarten, but certainly in first grade, that becomes a main focus. And, you know, these are six-year-olds, there's so many things going on. I guess, my first question is just to paint a picture for what it's like to be that teacher whose job it is to get this to start to happen.

[00:24:25] Rebecca Silverman: Sure. So, I mean, one thing is that I think that the range of teachers who are kind of focusing on literacy is much, much larger. So, we think about early childhood teachers, teachers who are teaching two-year-olds, three-year-olds, and how they're already starting to think about how to support kids’ foundational literacy skills. Um, all the way up through elementary school and into middle and high school, you have teachers thinking about literacy. Um, the thing is because reading and literacy is so complex and we talked about that earlier, um, teachers need to know a lot about what it takes to help kids become better readers. And so when they, uh, when they're thinking about what are all the skills that I need to focus on, how do I focus on them best? What does the research say? That's a huge task for teachers, and we spend a lot of time in teacher education doing that. Um, then, especially for elementary, early childhood and elementary school teachers, you also think about all of the other things that they need to be doing. They need to be doing classroom management, math, science, social studies, social, emotional skills, all of those things.

[00:25:23] It's an incredibly tough job to be a teacher, and particularly in this day and age when the stressors are there, um, you know, we're coming out of a pandemic. There's a lot of, um challenges that kids and teachers face. It's a very challenging job and unfortunately we're losing a lot of teachers because of that. Um, so I think in the future we need to figure out ways to recruit teachers, train teachers, support teachers throughout their teaching profession so that we have the educators we need to support the children who are going to be the future of our generation.

[00:25:54] Russ Altman: And I'm guessing, and you can tell me if I'm right, that a teacher in those early childhood years also has to assess each individual student. And like, if they have fifteen, twenty, twenty-five students in the class, they kind of have to have a personalized program for each student. And then you mentioned all the other things that they're thinking about.

[00:26:11] So what kind of support, like what is the training that teachers are getting and how do they learn about the new things that you're doing that might change the way they do their, their job because of new findings and like, what's effective and what's not effective? Do they do kind of, I guess, a professional ongoing education? Do they specialize? I don't remember first grade and second grade teachers like coming in for math or coming in for reading. It was just, at least a long time ago, it was one teacher for the day. So I'm just trying to understand, how do they keep abreast and how do they even begin to come up with personalized programs for all the different students?

[00:26:50] Rebecca Silverman: Yeah, I think we've got a long way to come, and earlier we were talking about technology and like how AI might become involved. I think those are ways that we can support teachers with technology. Um, using assessments to generate, um, uh, suggestions for teachers. I think that's, um, that's one way we can help them.

[00:27:06] But, but for now, it usually is one teacher in a room with twenty kids all day long doing all the subjects. Um, I don't think that's a great plan long term. I think actually having teachers specialize would be a much better way to go. Um, I'm a huge proponent of having, you know, a literacy specialist who knows the ins and outs of literacy and can kind of direct the other teachers in how to support literacy. Same for math, same for other areas. And I think in that way, the act of being able to look at data, uh, figure out what kids need, what are their strengths, what are their needs in the area of a specific skill like literacy, and then differentiating would be a lot more manageable. Right now, it's just a huge task.

[00:27:44] Russ Altman: So I guess my final question is, you mentioned AI a minute ago. Are you generally optimistic about the outlook for AI to help in this situation? Cause I could imagine you coming in and saying, it's a nightmare. So, where are we on the nightmare to, um, you know, solution to all of our problems in this context for things like AI, ChatGPT that talks, things like that.

[00:28:07] Rebecca Silverman: Yeah, so I think we're in the infancy of where we will end up. I think there is a lot of promise for how, um, AI and other, uh, technological approaches can be used to help us use the information we have, use the research that we have. And apply it more quickly and efficiently for supporting kids.

[00:28:27] I think right now we're kind of a long way from that. So there's a lot of work to be done to get from point A to point B. But I do think there's a lot of promise there. I think we have to as researchers, as, uh, developers in the field, we need to be very careful though, to really keep in mind that, um, we need to have human eyes on this.

[00:28:47] Russ Altman: Yes.

[00:28:48] Rebecca Silverman: We need to think like teachers. We need to think about what works in particular contexts, um, in kind of checking the AI.

[00:28:55] Russ Altman: Yeah.

[00:28:55] Rebecca Silverman: To make sure that we're not just using data randomly without context and input.

[00:29:00] Russ Altman: Yeah, it sounds like a partnership of the humans and the teachers with the AI is the prudent way to go forward. And it would be kind of a disaster scenario to have, you know, to throw a tablet at a kid and leave the room and expect good things to happen.

[00:29:14] Rebecca Silverman: Yeah, and, and we've done that, and it doesn't work. So just giving kids technology isn't going to help, just giving teachers technology isn't going to help. It's really the interplay between the two, and I think we have work to do in that area.

[00:29:29] Russ Altman: Thanks to Rebecca Silverman. That was the future of reading.

[00:29:34] You've been listening to The Future of Everything and I'm your host, Russ Altman. With more than 250 episodes in our back catalog, you can listen to interesting conversations on our broad diversity of topics. And they're ready whenever you are. If you're enjoying the show, please consider leaving it a 5-star rating and some comments about why you love it. That will help the show grow, and it'll help other people discover it. You can connect with me on X or Twitter, @RBaltman, and you can connect with Stanford Engineering @StanfordENG.

Purdue Online Writing Lab Purdue OWL® College of Liberal Arts

Writing in Engineering

This page is brought to you by the OWL at Purdue University. When printing this page, you must include the entire legal notice.

Copyright ©1995-2018 by The Writing Lab & The OWL at Purdue and Purdue University. All rights reserved. This material may not be published, reproduced, broadcast, rewritten, or redistributed without permission. Use of this site constitutes acceptance of our terms and conditions of fair use.

Collection 12 March 2023

Top 100 in Engineering - 2022

This collection highlights our most downloaded* engineering papers published in 2022. Featuring authors from around the world, these papers showcase valuable research from an international community.

You can also view the top papers across various subject areas here.

*Data obtained from SN Insights, which is based on Digital Science's Dimensions.

Generation mechanism and prediction of an observed extreme rogue wave

- Johannes Gemmrich

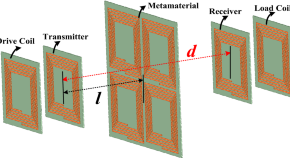

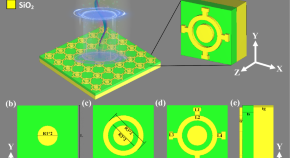

Wireless power transfer system with enhanced efficiency by using frequency reconfigurable metamaterial

- Dongyong Shan

- Haiyue Wang

- Junhua Zhang

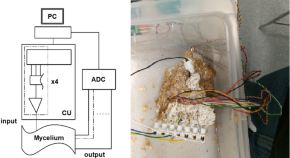

Mining logical circuits in fungi

- Nic Roberts

- Andrew Adamatzky

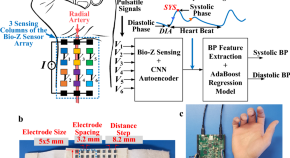

Cuffless blood pressure monitoring from a wristband with calibration-free algorithms for sensing location based on bio-impedance sensor array and autoencoder

- Bassem Ibrahim

- Roozbeh Jafari

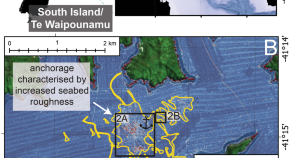

The footprint of ship anchoring on the seafloor

- Sally J. Watson

- Geoffroy Lamarche

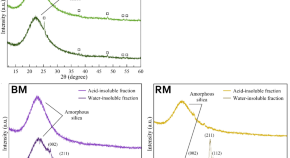

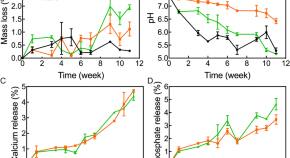

Dental tissue remineralization by bioactive calcium phosphate nanoparticles formulations

- Andrei Cristian Ionescu

- Lorenzo Degli Esposti

- Eugenio Brambilla

Neuromorphic chip integrated with a large-scale integration circuit and amorphous-metal-oxide semiconductor thin-film synapse devices

- Mutsumi Kimura

- Yuki Shibayama

- Yasuhiko Nakashima

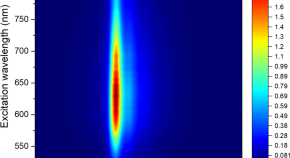

Near-infrared photoluminescence of Portland cement

- Sergei M. Bachilo

- R. Bruce Weisman

Investigating physical and mechanical properties of nest soils used by mud dauber wasps from a geotechnical engineering perspective

- Joon S. Park

- Noura S. Saleh

- Nathan P. Lord

Control of blood glucose induced by meals for type-1 diabetics using an adaptive backstepping algorithm

- Rasoul Zahedifar

- Ali Keymasi Khalaji

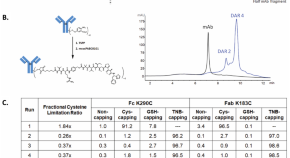

Cysteine metabolic engineering and selective disulfide reduction produce superior antibody-drug-conjugates

- Renée Procopio-Melino

- Frank W. Kotch

- Xiaotian Zhong

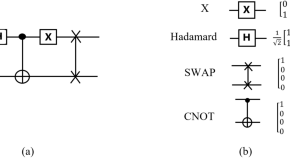

An optimizing method for performance and resource utilization in quantum machine learning circuits

- Tahereh Salehi

- Mariam Zomorodi

- Vahid Salari

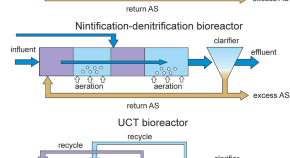

The structure of microbial communities of activated sludge of large-scale wastewater treatment plants in the city of Moscow

- Shahjahon Begmatov

- Alexander G. Dorofeev

- Andrey V. Mardanov

Characterization of 3D-printed PLA parts with different raster orientations and printing speeds

- Mohammad Reza Khosravani

- Filippo Berto

- Tamara Reinicke

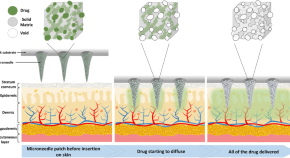

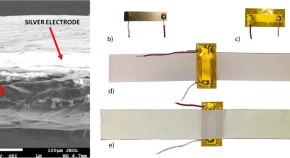

Hard polymeric porous microneedles on stretchable substrate for transdermal drug delivery

- Aydin Sadeqi

- Sameer Sonkusale

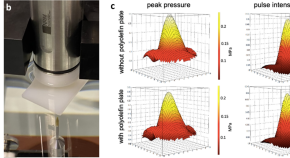

Ultrasounds induce blood–brain barrier opening across a sonolucent polyolefin plate in an in vitro isolated brain preparation

- Laura Librizzi

- Francesco Prada

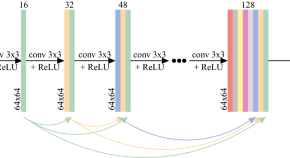

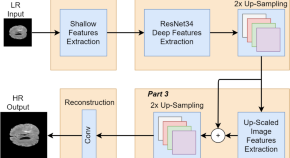

Deep learning-based single image super-resolution for low-field MR brain images

- M. L. de Leeuw den Bouter

- G. Ippolito

Effective treatment of aquaculture wastewater with mussel/microalgae/bacteria complex ecosystem: a pilot study

- Yongchao Li

- Weifeng Guo

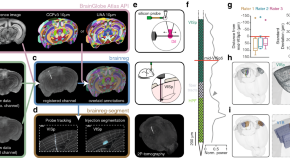

Ultrasound-guided femoral approach for coronary angiography and interventions in the porcine model

- Grigorios Tsigkas

- Georgios Vasilagkos

- Periklis Davlouros

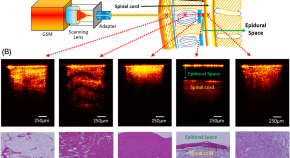

Epidural anesthesia needle guidance by forward-view endoscopic optical coherence tomography and deep learning

- Qinggong Tang

PLA/Hydroxyapatite scaffolds exhibit in vitro immunological inertness and promote robust osteogenic differentiation of human mesenchymal stem cells without osteogenic stimuli

- Marcela P. Bernardo

- Bruna C. R. da Silva

- Antonio Sechi

Atrial fibrillation prediction by combining ECG markers and CMR radiomics

- Esmeralda Ruiz Pujadas

- Zahra Raisi-Estabragh

- Karim Lekadir

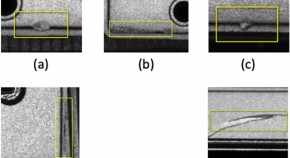

Small object detection method with shallow feature fusion network for chip surface defect detection

- Haixin Huang

- Xueduo Tang

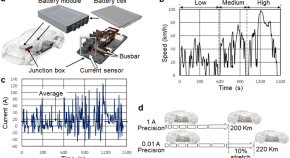

High-precision robust monitoring of charge/discharge current over a wide dynamic range for electric vehicle batteries using diamond quantum sensors

- Yuji Hatano

- Jaewon Shin

- Mutsuko Hatano

Driver drowsiness estimation using EEG signals with a dynamical encoder–decoder modeling framework

- Sadegh Arefnezhad

- James Hamet

- Ali Yousefi

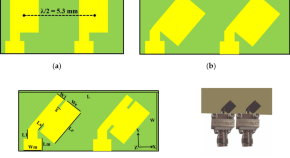

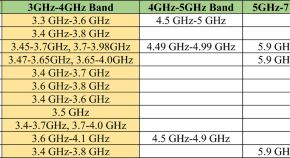

A compact two elements MIMO antenna for 5G communication

- Ashfaq Ahmad

- Dong-you Choi

- Sadiq Ullah

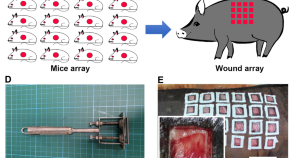

Skin wound healing assessment via an optimized wound array model in miniature pigs

- Ting-Yung Kuo

- Chao-Cheng Huang

- Lynn L. H. Huang

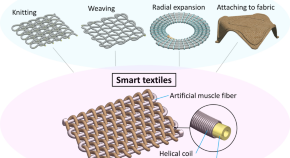

Smart textiles using fluid-driven artificial muscle fibers

- Phuoc Thien Phan

- Mai Thanh Thai

- Thanh Nho Do

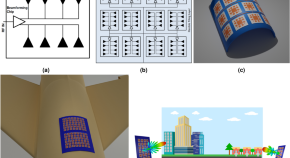

Tile-based massively scalable MIMO and phased arrays for 5G/B5G-enabled smart skins and reconfigurable intelligent surfaces

- Manos M. Tentzeris

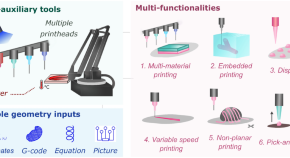

A hackable, multi-functional, and modular extrusion 3D printer for soft materials

- Iek Man Lei

- Yan Yan Shery Huang

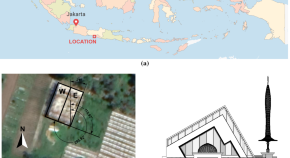

Techno-economic analysis of rooftop solar power plant implementation and policy on mosques: an Indonesian case study

- Fadhil Ahmad Qamar

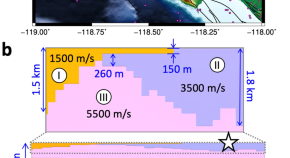

Seismic wave simulation using a 3D printed model of the Los Angeles Basin

- Sunyoung Park

- Changsoo Shin

- Robert W. Clayton

Banana stem and leaf biochar as an effective adsorbent for cadmium and lead in aqueous solution

- Gaoxiang Li

- Xinxian Long

Potential risk assessment for safe driving of autonomous vehicles under occluded vision

- Denggui Wang

- Jincao Zhou

Natural quantum reservoir computing for temporal information processing

- Yudai Suzuki

- Naoki Yamamoto

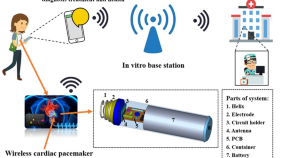

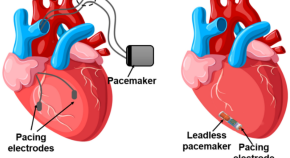

A compact and miniaturized implantable antenna for ISM band in wireless cardiac pacemaker system

- Li Gaosheng

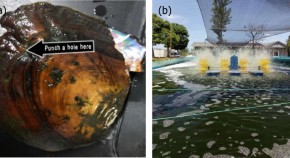

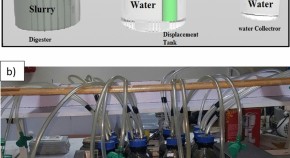

Experimental and simulation analysis of biogas production from beverage wastewater sludge for electricity generation

- Anteneh Admasu

- Wondwossen Bogale

- Yedilfana Setarge Mekonnen

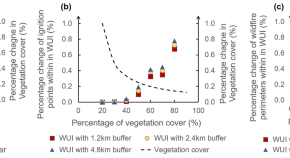

Mapping the wildland-urban interface in California using remote sensing data

- Tirtha Banerjee

Design and implementation of a terahertz lens-antenna for a photonic integrated circuits based THz systems

- Shihab Al-Daffaie

- Alaa Jabbar Jumaah

- Thomas Kusserow

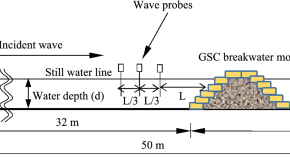

Preliminary investigation on stability and hydraulic performance of geotextile sand container breakwaters filled with sand and cement

- Kiran G. Shirlal

Detection of volatile organic compounds using mid-infrared silicon nitride waveguide sensors

- Junchao Zhou

- Diana Al Husseini

- Pao Tai Lin

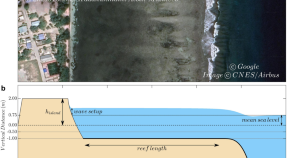

Coastal flooding and mean sea-level rise allowances in atoll island

- Angel Amores

- Marta Marcos

- Jochen Hinkel

Integration of life cycle assessment and life cycle costing for the eco-design of rubber products

- Yahong Dong

- Yating Zhao

- Guangyi Lin

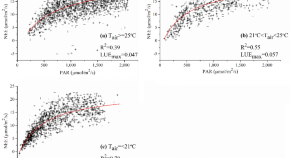

Estimating mangrove forest gross primary production by quantifying environmental stressors in the coastal area

- Yuhan Zheng

- Wataru Takeuchi

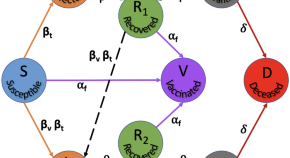

COVID-19 waves: variant dynamics and control

- Abhishek Dutta

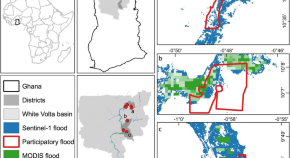

Increased flooded area and exposure in the White Volta river basin in Western Africa, identified from multi-source remote sensing data

- Chengxiu Li

- Jadunandan Dash

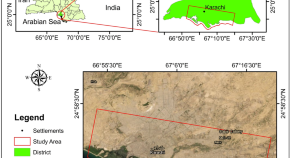

Sentinel-1A for monitoring land subsidence of coastal city of Pakistan using Persistent Scatterers In-SAR technique

- Muhammad Afaq Hussain

- Zhanlong Chen

A Lab-in-a-Fiber optofluidic device using droplet microfluidics and laser-induced fluorescence for virus detection

- Helen E. Parker

- Sanghamitra Sengupta

- Fredrik Laurell

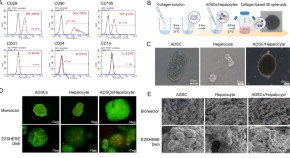

Transplantation of 3D adipose-derived stem cell/hepatocyte spheroids alleviates chronic hepatic damage in a rat model of thioacetamide-induced liver cirrhosis

- Yu Chiuan Wu

- Guan Xuan Wu

- Shyh Ming Kuo

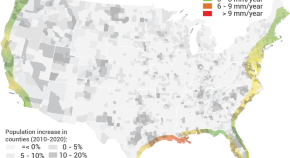

Hidden costs to building foundations due to sea level rise in a changing climate

- Mohamed A. Abdelhafez

- Bruce Ellingwood

- Hussam Mahmoud

Mapping native and non-native vegetation in the Brazilian Cerrado using freely available satellite products

- Kennedy Lewis

- Fernanda de V. Barros

- Lucy Rowland

Graphene-based metasurface solar absorber design with absorption prediction using machine learning

- Juveriya Parmar

- Shobhit K. Patel

- Vijay Katkar

Experimental study of reasonable mesh size of geogrid reinforced tailings

- Lidong Liang

Stromal-vascular fraction and adipose-derived stem cell therapies improve cartilage regeneration in osteoarthritis-induced rats

- Wan-Ting Yang

- Chun-Yen Ke

- Ru-Ping Lee

Planar ultrasonic transducer based on a metasurface piezoelectric ring array for subwavelength acoustic focusing in water

- Hyunggyu Choi

- Yong Tae Kim

Sample-efficient parameter exploration of the powder film drying process using experiment-based Bayesian optimization

- Kohei Nagai

- Takayuki Osa

- Keisuke Nagato

Predicting the splash of a droplet impinging on solid substrates

- Yukihiro Yonemoto

- Kanta Tashiro

- Tomoaki Kunugi

Designing a new alginate-fibrinogen biomaterial composite hydrogel for wound healing

- Marjan Soleimanpour

- Samaneh Sadat Mirhaji

- Ali Akbar Saboury

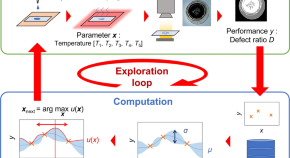

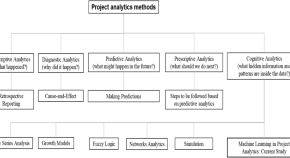

Machine learning in project analytics: a data-driven framework and case study

- Shahadat Uddin

- Stephen Ong

Multi-state MRAM cells for hardware neuromorphic computing

- Piotr Rzeszut

- Jakub Chȩciński

- Tomasz Stobiecki

Flexible, self-powered sensors for estimating human head kinematics relevant to concussions

- Henry Dsouza

- Juan Pastrana

- Nelson Sepúlveda

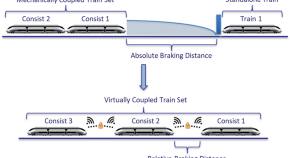

Technical feasibility analysis and introduction strategy of the virtually coupled train set concept

- Sebastian Stickel

- Moritz Schenker

- Javier Goikoetxea

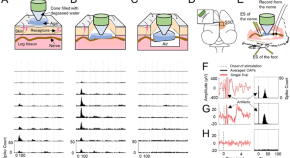

Ultrasound does not activate but can inhibit in vivo mammalian nerves across a wide range of parameters

- Hongsun Guo

- Sarah J. Offutt

- Hubert H. Lim

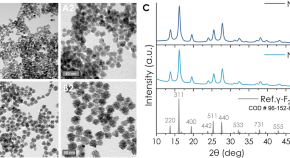

Iron oxide nanoflowers encapsulated in thermosensitive fluorescent liposomes for hyperthermia treatment of lung adenocarcinoma

- Maria Theodosiou

- Elias Sakellis

- Eleni Efthimiadou

Piezoelectric energy harvester with double cantilever beam undergoing coupled bending-torsion vibrations by width-splitting method

- Jiawen Song

- Guihong Sun

- Xuejun Zheng

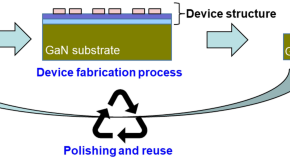

Laser slice thinning of GaN-on-GaN high electron mobility transistors

- Atsushi Tanaka

- Ryuji Sugiura

- Hiroshi Amano

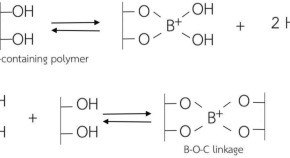

Superabsorbent cellulose-based hydrogels cross-linked with borax

- Supachok Tanpichai

- Farin Phoothong

- Anyaporn Boonmahitthisud

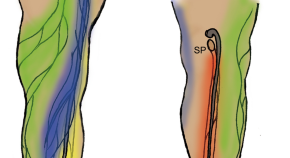

A new severity classification of lower limb secondary lymphedema based on lymphatic pathway defects in an indocyanine green fluorescent lymphography study

- Akira Shinaoka

- Kazuyo Kamiyama

- Yoshihiro Kimata

Vagus nerve stimulation using a miniaturized wirelessly powered stimulator in pigs

- Iman Habibagahi

- Mahmoud Omidbeigi

- Aydin Babakhani

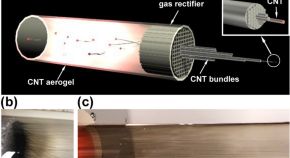

One step fabrication of aligned carbon nanotubes using gas rectifier

- Toshihiko Fujimori

- Daiji Yamashita

- Jun-ichi Fujita

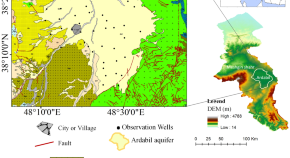

Use of InSAR data for measuring land subsidence induced by groundwater withdrawal and climate change in Ardabil Plain, Iran

- Zahra Ghorbani

- Ali Khosravi

Bioinspired gelatin based sticky hydrogel for diverse surfaces in burn wound care

- Benu George

- Nitish Bhatia

- Suchithra T. V.

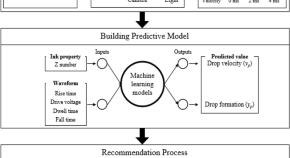

The design of an inkjet drive waveform using machine learning

- Seongju Kim

- Sungjune Jung

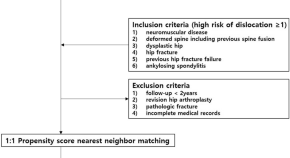

The usefulness of dual mobility cups in primary total hip arthroplasty patients at a risk of dislocation

- Nam Hoon Moon

- Won Chul Shin

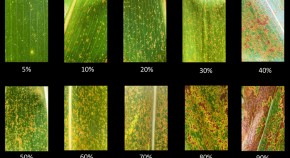

Phenomic data-facilitated rust and senescence prediction in maize using machine learning algorithms

- Aaron J. DeSalvio

- Thomas Isakeit

Polarization and angular insensitive bendable metamaterial absorber for UV to NIR range

- Md Mizan Kabir Shuvo

- Md Imran Hossain

- Mohammad Tariqul Islam

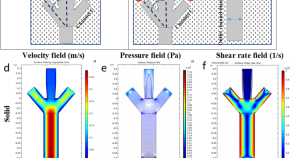

Numerical analysis on the effects of microfluidic-based bioprinting parameters on the microfiber geometrical outcomes

- Ahmadreza Zaeri

- Robert C. Chang

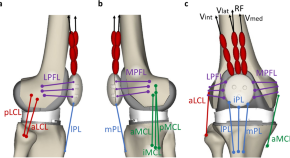

Kinematics and kinetics comparison of ultra-congruent versus medial-pivot designs for total knee arthroplasty by multibody analysis

- Giovanni Putame

- Mara Terzini

- Cristina Bignardi

A new generative adversarial network for medical images super resolution

- Waqar Ahmad

- Shoaib Azmat

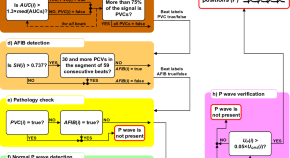

Reliable P wave detection in pathological ECG signals

- Lucie Saclova

- Andrea Nemcova

- Marina Ronzhina

Gain and isolation enhancement of a wideband MIMO antenna using metasurface for 5G sub-6 GHz communication systems

- Md. Mhedi Hasan

- Md. Shabiul Islam

Design and implementation of compact dual-band conformal antenna for leadless cardiac pacemaker system

- Deepti Sharma

- Binod Kumar Kanaujia

- Ladislau Matekovits

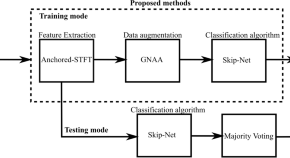

Enhancing the decoding accuracy of EEG signals by the introduction of anchored-STFT and adversarial data augmentation method

- Muhammad Saif-ur-Rehman

- Christian Klaes

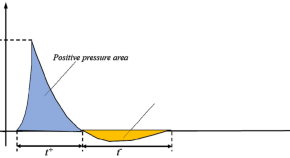

Propagation rules of shock waves in confined space under different initial pressure environments

Wave attenuation through forests under extreme conditions

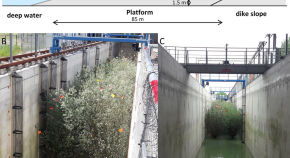

- Bregje K. van Wesenbeeck

- Guido Wolters

- Tjeerd J. Bouma

Multi-fidelity information fusion with concatenated neural networks

- Suraj Pawar

- Trond Kvamsdal

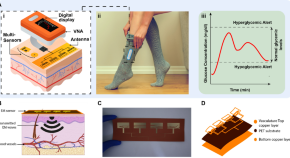

Wearable flexible body matched electromagnetic sensors for personalized non-invasive glucose monitoring

- Jessica Hanna

- Youssef Tawk

- Assaad A. Eid

Portable magnetic resonance imaging of patients indoors, outdoors and at home

- Teresa Guallart-Naval

- José M. Algarín

- Joseba Alonso

Combined effects of body posture and three-dimensional wing shape enable efficient gliding in flying lizards

- Pranav C. Khandelwal

- Tyson L. Hedrick

Road damage detection algorithm for improved YOLOv5

- Zhenyu Zhang

A pavement distresses identification method optimized for YOLOv5s

- Sudong Wang

Regeneration of collagen fibrils at the papillary dermis by reconstructing basement membrane at the dermal–epidermal junction

- Shunsuke Iriyama

- Satoshi Amano

Bio-actuated microvalve in microfluidics using sensing and actuating function of Mimosa pudica

- Yusufu Aishan

- Shun-ichi Funano

Uncovering emergent phenotypes in endothelial cells by clustering of surrogates of cardiovascular risk factors

- Iguaracy Pinheiro-de-Sousa

- Miriam H. Fonseca-Alaniz

- Jose E. Krieger

Degenerative joint disease induced by repeated intra-articular injections of monosodium urate crystals in rats as investigated by translational imaging

- Nathalie Accart

- Janet Dawson

- Nicolau Beckmann

High gain DC/DC converter with continuous input current for renewable energy applications

- Arafa S. Mansour

- AL-Hassan H. Amer

- Mohamed S. Zaky

Sensitive asprosin detection in clinical samples reveals serum/saliva correlation and indicates cartilage as source for serum asprosin

- Yousef A. T. Morcos

- Steffen Lütke

- Gerhard Sengle

Scalp attached tangential magnetoencephalography using tunnel magneto-resistive sensors

- Akitake Kanno

- Nobukazu Nakasato

3D modelling and simulation of the dispersion of droplets and drops carrying the SARS-CoV-2 virus in a railway transport coach

- Patrick Armand

- Jérémie Tâche

Accurate determination of marker location within whole-brain microscopy images

- Adam L. Tyson

- Mateo Vélez-Fort

- Troy W. Margrie

Must-read papers on prompt-based tuning for pre-trained language models.

thunlp/PromptPapers

PromptPapers

We have released an open-source prompt-learning toolkit; check out OpenPrompt!

We strongly encourage researchers who want to promote their work to the community to open a pull request updating their paper's information (see the contributing details).

Effective adaptation of pre-trained models can be probed from different perspectives. Prompt-learning focuses more on organizing the training procedure and unifying different tasks, while delta tuning (parameter-efficient methods) offers another direction, based on the specific optimization of pre-trained models. Check out DeltaPapers!

Must-read papers on prompt-based tuning for pre-trained language models. The paper list is mainly maintained by Ning Ding and Shengding Hu. Watch this repository for the latest updates!

Introduction

This is a paper list about prompt-based tuning for large-scale pre-trained language models. Unlike traditional fine-tuning, which adds an explicit classifier on top of the model, prompt-based tuning directly uses the pre-trained model to perform its pre-training task (for example, filling in a masked token) for classification or regression.
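As a rough illustration of how prompt-based tuning reuses the pre-training task for classification, here is a minimal sketch. It wraps an input in a cloze-style template and maps each class label to a label word (a "verbalizer"). The predicted class is the one whose label word scores highest at the [MASK] position. Everything here is an assumption for illustration: the template string, the label words, and `toy_scorer` are hypothetical. `toy_scorer` only counts cue words as a stand-in for a real masked language model's scores at [MASK].

```python
from typing import Callable, Dict

# Hypothetical cloze template: the input is followed by a sentence with a [MASK] slot.
TEMPLATE = "{text} It was [MASK]."

# Hypothetical verbalizer: maps each class label to the word expected at [MASK].
VERBALIZER: Dict[str, str] = {"positive": "great", "negative": "terrible"}


def wrap(text: str) -> str:
    """Turn a raw input into a cloze-style prompt using the template."""
    return TEMPLATE.format(text=text)


def classify(text: str, mask_word_score: Callable[[str, str], float]) -> str:
    """Return the label whose label word gets the highest score at [MASK].

    `mask_word_score(prompt, word)` stands in for a pre-trained masked LM's
    score for `word` at the [MASK] position; no classifier head is added.
    """
    prompt = wrap(text)
    scores = {label: mask_word_score(prompt, word) for label, word in VERBALIZER.items()}
    return max(scores, key=scores.get)


def toy_scorer(prompt: str, word: str) -> float:
    """Hypothetical stand-in for a masked LM: counts cue words hinting at each label word."""
    cues = {"great": ["love", "excellent"], "terrible": ["hate", "awful"]}
    lowered = prompt.lower()
    return float(sum(lowered.count(cue) for cue in cues[word]))


print(classify("I love this movie.", toy_scorer))  # positive
```

In a real system, `toy_scorer` would be replaced by the masked language model's prediction scores at [MASK]. The template and the verbalizer are then the main design choices left to tune.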

This section contains papers that give an overview of general trends in recent natural language processing with big (pre-trained) models.

OpenPrompt: An Open-source Framework for Prompt-learning. Preprint.

Ning Ding, Shengding Hu, Weilin Zhao, Yulin Chen, Zhiyuan Liu, Hai-Tao Zheng, Maosong Sun. [ pdf ] [ project ], 2021.11

Pre-Trained Models: Past, Present and Future. Preprint.

Xu Han, Zhengyan Zhang, Ning Ding, Yuxian Gu, Xiao Liu, Yuqi Huo, Jiezhong Qiu, Yuan Yao, Ao Zhang, Liang Zhang, Wentao Han, Minlie Huang, Qin Jin, Yanyan Lan, Yang Liu, Zhiyuan Liu, Zhiwu Lu, Xipeng Qiu, Ruihua Song, Jie Tang, Ji-Rong Wen, Jinhui Yuan, Wayne Xin Zhao, Jun Zhu. [ pdf ], 2021.6

Pre-train, Prompt, and Predict: A Systematic Survey of Prompting Methods in Natural Language Processing. Preprint.

Pengfei Liu, Weizhe Yuan, Jinlan Fu, Zhengbao Jiang, Hiroaki Hayashi, Graham Neubig. [ pdf ] [ project ], 2021.7

Paradigm Shift in Natural Language Processing. Machine Intelligence Research.

Tianxiang Sun, Xiangyang Liu, Xipeng Qiu, Xuanjing Huang. [ pdf ] [ project ], 2021.9

This section contains pilot works that may have contributed to the prevalence of the prompt-learning paradigm.

Neil Houlsby, Andrei Giurgiu, Stanislaw Jastrzebski, Bruna Morrone, Quentin de Laroussilhe, Andrea Gesmundo, Mona Attariyan, Sylvain Gelly. [ pdf ], [ project ], 2019.6

Fabio Petroni, Tim Rocktäschel, Patrick Lewis, Anton Bakhtin, Yuxiang Wu, Alexander H. Miller, Sebastian Riedel. [ pdf ], [ project ], 2019.9

Zhengbao Jiang, Frank F. Xu, Jun Araki, Graham Neubig. [ pdf ], [ project ], 2019.11

Tom B. Brown, Benjamin Mann, Nick Ryder, Melanie Subbiah, Jared Kaplan, Prafulla Dhariwal, Arvind Neelakantan, Pranav Shyam, Girish Sastry, Amanda Askell, Sandhini Agarwal, Ariel Herbert-Voss, Gretchen Krueger, Tom Henighan, Rewon Child, Aditya Ramesh, Daniel M. Ziegler, Jeffrey Wu, Clemens Winter, Christopher Hesse, Mark Chen, Eric Sigler, Mateusz Litwin, Scott Gray, Benjamin Chess, Jack Clark, Christopher Berner, Sam McCandlish, Alec Radford, Ilya Sutskever, Dario Amodei. [ pdf ], [ website ], 2020.5

Yulong Chen, Yang Liu, Li Dong, Shuohang Wang, Chenguang Zhu, Michael Zeng, Yue Zhang. [ pdf ], 2022.2

This section contains explorations of the basic aspects of prompt tuning, such as templates, verbalizers, and training paradigms.

Timo Schick, Hinrich Schütze. [ pdf ], [ project ], 2020.1

Timo Schick, Hinrich Schütze. [ pdf ], [ project ], 2020.9

Taylor Shin, Yasaman Razeghi, Robert L. Logan IV, Eric Wallace, Sameer Singh. [ pdf ], [ website ], 2020.10

Timo Schick, Helmut Schmid, Hinrich Schütze. [ pdf ], [ project ], 2020.12

Tianyu Gao, Adam Fisch, Danqi Chen. [ pdf ], [ project ], 2020.12

Xiang Lisa Li, Percy Liang. [ pdf ], [ project ], 2021.1

Laria Reynolds, Kyle McDonell. [ pdf ], 2021.2

Derek Tam, Rakesh R Menon, Mohit Bansal, Shashank Srivastava, Colin Raffel. [ pdf ], 2021.3

Xiao Liu, Yanan Zheng, Zhengxiao Du, Ming Ding, Yujie Qian, Zhilin Yang, Jie Tang. [ pdf ], [ project ], 2021.3

Brian Lester, Rami Al-Rfou, Noah Constant. [ pdf ], [ project ], 2021.4

Guanghui Qin, Jason Eisner. [ pdf ], [ project ], 2021.4

Zexuan Zhong, Dan Friedman, Danqi Chen. [ pdf ], [ project ], 2021.4

Robert L. Logan IV, Ivana Balažević, Eric Wallace, Fabio Petroni, Sameer Singh, Sebastian Riedel. [ pdf ], 2021.6

Karen Hambardzumyan, Hrant Khachatrian, Jonathan May. [ pdf ], [ project ], 2021.6

Xu Han, Weilin Zhao, Ning Ding, Zhiyuan Liu, Maosong Sun. [ pdf ], 2021.5

Yi Sun*, Yu Zheng*, Chao Hao, Hangping Qiu. [ pdf ], [ project ], 2021.9

Jason Wei, Maarten Bosma, Vincent Y. Zhao, Kelvin Guu, Adams Wei Yu, Brian Lester, Nan Du, Andrew M. Dai, Quoc V. Le. [ pdf ], 2021.9

Yuxian Gu*, Xu Han*, Zhiyuan Liu, Minlie Huang. [ pdf ], 2021.9