500+ Computer Science Research Topics

Computer Science is a constantly evolving field that has transformed the way we live and work. As new technologies emerge, they open up research opportunities in areas such as artificial intelligence, machine learning, cybersecurity, data analytics, and computer networks. In this post, we list a wide range of research topics in Computer Science, from programming languages and algorithm design to emerging areas such as blockchain, quantum computing, and virtual reality. Whether you are a student or a professional, use the list below as a starting point for finding a research topic in this rapidly expanding field.

Computer Science Research Topics

The Computer Science research topics are as follows:

- Using machine learning to detect and prevent cyber attacks

- Developing algorithms for optimized resource allocation in cloud computing

- Investigating the use of blockchain technology for secure and decentralized data storage

- Developing intelligent chatbots for customer service

- Investigating the effectiveness of deep learning for natural language processing

- Developing algorithms for detecting and removing fake news from social media

- Investigating the impact of social media on mental health

- Developing algorithms for efficient image and video compression

- Investigating the use of big data analytics for predictive maintenance in manufacturing

- Developing algorithms for identifying and mitigating bias in machine learning models

- Investigating the ethical implications of autonomous vehicles

- Developing algorithms for detecting and preventing cyberbullying

- Investigating the use of machine learning for personalized medicine

- Developing algorithms for efficient and accurate speech recognition

- Investigating the impact of social media on political polarization

- Developing algorithms for sentiment analysis in social media data

- Investigating the use of virtual reality in education

- Developing algorithms for efficient data encryption and decryption

- Investigating the impact of technology on workplace productivity

- Developing algorithms for detecting and mitigating deepfakes

- Investigating the use of artificial intelligence in financial trading

- Developing algorithms for efficient database management

- Investigating the effectiveness of online learning platforms

- Developing algorithms for efficient and accurate facial recognition

- Investigating the use of machine learning for predicting weather patterns

- Developing algorithms for efficient and secure data transfer

- Investigating the impact of technology on social skills and communication

- Developing algorithms for efficient and accurate object recognition

- Investigating the use of machine learning for fraud detection in finance

- Developing algorithms for efficient and secure authentication systems

- Investigating the impact of technology on privacy and surveillance

- Developing algorithms for efficient and accurate handwriting recognition

- Investigating the use of machine learning for predicting stock prices

- Developing algorithms for efficient and secure biometric identification

- Investigating the impact of technology on mental health and well-being

- Developing algorithms for efficient and accurate language translation

- Investigating the use of machine learning for personalized advertising

- Developing algorithms for efficient and secure payment systems

- Investigating the impact of technology on the job market and automation

- Developing algorithms for efficient and accurate object tracking

- Investigating the use of machine learning for predicting disease outbreaks

- Developing algorithms for efficient and secure access control

- Investigating the impact of technology on human behavior and decision making

- Developing algorithms for efficient and accurate sound recognition

- Investigating the use of machine learning for predicting customer behavior

- Developing algorithms for efficient and secure data backup and recovery

- Investigating the impact of technology on education and learning outcomes

- Developing algorithms for efficient and accurate emotion recognition

- Investigating the use of machine learning for improving healthcare outcomes

- Developing algorithms for efficient and secure supply chain management

- Investigating the impact of technology on cultural and societal norms

- Developing algorithms for efficient and accurate gesture recognition

- Investigating the use of machine learning for predicting consumer demand

- Developing algorithms for efficient and secure cloud storage

- Investigating the impact of technology on environmental sustainability

- Developing algorithms for efficient and accurate voice recognition

- Investigating the use of machine learning for improving transportation systems

- Developing algorithms for efficient and secure mobile device management

- Investigating the impact of technology on social inequality and access to resources

- Machine learning for healthcare diagnosis and treatment

- Machine Learning for Cybersecurity

- Machine learning for personalized medicine

- Cybersecurity threats and defense strategies

- Big data analytics for business intelligence

- Blockchain technology and its applications

- Human-computer interaction in virtual reality environments

- Artificial intelligence for autonomous vehicles

- Natural language processing for chatbots

- Cloud computing and its impact on the IT industry

- Internet of Things (IoT) and smart homes

- Robotics and automation in manufacturing

- Augmented reality and its potential in education

- Data mining techniques for customer relationship management

- Computer vision for object recognition and tracking

- Quantum computing and its applications in cryptography

- Social media analytics and sentiment analysis

- Recommender systems for personalized content delivery

- Mobile computing and its impact on society

- Bioinformatics and genomic data analysis

- Deep learning for image and speech recognition

- Digital signal processing and audio processing algorithms

- Cloud storage and data security in the cloud

- Wearable technology and its impact on healthcare

- Computational linguistics for natural language understanding

- Cognitive computing for decision support systems

- Cyber-physical systems and their applications

- Edge computing and its impact on IoT

- Machine learning for fraud detection

- Cryptography and its role in secure communication

- Cybersecurity risks in the era of the Internet of Things

- Natural language generation for automated report writing

- 3D printing and its impact on manufacturing

- Virtual assistants and their applications in daily life

- Cloud-based gaming and its impact on the gaming industry

- Computer networks and their security issues

- Cyber forensics and its role in criminal investigations

- Machine learning for predictive maintenance in industrial settings

- Augmented reality for cultural heritage preservation

- Human-robot interaction and its applications

- Data visualization and its impact on decision-making

- Cybersecurity in financial systems and blockchain

- Computer graphics and animation techniques

- Biometrics and its role in secure authentication

- Cloud-based e-learning platforms and their impact on education

- Natural language processing for machine translation

- Machine learning for predictive maintenance in healthcare

- Cybersecurity and privacy issues in social media

- Computer vision for medical image analysis

- Natural language generation for content creation

- Cybersecurity challenges in cloud computing

- Human-robot collaboration in manufacturing

- Data mining for predicting customer churn

- Artificial intelligence for autonomous drones

- Cybersecurity risks in the healthcare industry

- Machine learning for speech synthesis

- Edge computing for low-latency applications

- Virtual reality for mental health therapy

- Quantum computing and its applications in finance

- Biomedical engineering and its applications

- Cybersecurity in autonomous systems

- Machine learning for predictive maintenance in transportation

- Computer vision for object detection in autonomous driving

- Augmented reality for industrial training and simulations

- Cloud-based cybersecurity solutions for small businesses

- Natural language processing for knowledge management

- Machine learning for personalized advertising

- Cybersecurity in supply chain management

- Cybersecurity risks in the energy sector

- Computer vision for facial recognition

- Natural language processing for social media analysis

- Machine learning for sentiment analysis in customer reviews

- Explainable Artificial Intelligence

- Quantum Computing

- Blockchain Technology

- Human-Computer Interaction

- Natural Language Processing

- Cloud Computing

- Robotics and Automation

- Augmented Reality and Virtual Reality

- Cyber-Physical Systems

- Computational Neuroscience

- Big Data Analytics

- Computer Vision

- Cryptography and Network Security

- Internet of Things

- Computer Graphics and Visualization

- Artificial Intelligence for Game Design

- Computational Biology

- Social Network Analysis

- Bioinformatics

- Distributed Systems and Middleware

- Information Retrieval and Data Mining

- Computer Networks

- Mobile Computing and Wireless Networks

- Software Engineering

- Database Systems

- Parallel and Distributed Computing

- Human-Robot Interaction

- Intelligent Transportation Systems

- High-Performance Computing

- Cyber-Physical Security

- Deep Learning

- Sensor Networks

- Multi-Agent Systems

- Human-Centered Computing

- Wearable Computing

- Knowledge Representation and Reasoning

- Adaptive Systems

- Brain-Computer Interface

- Health Informatics

- Cognitive Computing

- Cybersecurity and Privacy

- Internet Security

- Cybercrime and Digital Forensics

- Cloud Security

- Cryptocurrencies and Digital Payments

- Machine Learning for Natural Language Generation

- Cognitive Robotics

- Neural Networks

- Semantic Web

- Image Processing

- Cyber Threat Intelligence

- Secure Mobile Computing

- Cybersecurity Education and Training

- Privacy Preserving Techniques

- Cyber-Physical Systems Security

- Virtualization and Containerization

- Machine Learning for Computer Vision

- Network Function Virtualization

- Cybersecurity Risk Management

- Information Security Governance

- Intrusion Detection and Prevention

- Biometric Authentication

- Machine Learning for Predictive Maintenance

- Security in Cloud-based Environments

- Cybersecurity for Industrial Control Systems

- Smart Grid Security

- Software Defined Networking

- Quantum Cryptography

- Security in the Internet of Things

- Natural language processing for sentiment analysis

- Blockchain technology for secure data sharing

- Developing efficient algorithms for big data analysis

- Cybersecurity for internet of things (IoT) devices

- Human-robot interaction for industrial automation

- Image recognition for autonomous vehicles

- Social media analytics for marketing strategy

- Quantum computing for solving complex problems

- Biometric authentication for secure access control

- Augmented reality for education and training

- Intelligent transportation systems for traffic management

- Predictive modeling for financial markets

- Cloud computing for scalable data storage and processing

- Virtual reality for therapy and mental health treatment

- Data visualization for business intelligence

- Recommender systems for personalized product recommendations

- Speech recognition for voice-controlled devices

- Mobile computing for real-time location-based services

- Neural networks for predicting user behavior

- Genetic algorithms for optimization problems

- Distributed computing for parallel processing

- Internet of things (IoT) for smart cities

- Wireless sensor networks for environmental monitoring

- Cloud-based gaming for high-performance gaming

- Social network analysis for identifying influencers

- Autonomous systems for agriculture

- Robotics for disaster response

- Data mining for customer segmentation

- Computer graphics for visual effects in movies and video games

- Virtual assistants for personalized customer service

- Natural language understanding for chatbots

- 3D printing for manufacturing prototypes

- Artificial intelligence for stock trading

- Machine learning for weather forecasting

- Biomedical engineering for prosthetics and implants

- Cybersecurity for financial institutions

- Machine learning for energy consumption optimization

- Computer vision for object tracking

- Natural language processing for document summarization

- Wearable technology for health and fitness monitoring

- Internet of things (IoT) for home automation

- Reinforcement learning for robotics control

- Big data analytics for customer insights

- Machine learning for supply chain optimization

- Natural language processing for legal document analysis

- Artificial intelligence for drug discovery

- Computer vision for object recognition in robotics

- Data mining for customer churn prediction

- Autonomous systems for space exploration

- Robotics for agriculture automation

- Machine learning for predicting earthquakes

- Natural language processing for sentiment analysis in customer reviews

- Big data analytics for predicting natural disasters

- Internet of things (IoT) for remote patient monitoring

- Blockchain technology for digital identity management

- Machine learning for predicting wildfire spread

- Computer vision for gesture recognition

- Natural language processing for automated translation

- Big data analytics for fraud detection in banking

- Internet of things (IoT) for smart homes

- Robotics for warehouse automation

- Machine learning for predicting air pollution

- Natural language processing for medical record analysis

- Augmented reality for architectural design

- Big data analytics for predicting traffic congestion

- Machine learning for predicting customer lifetime value

- Developing algorithms for efficient and accurate text recognition

- Natural Language Processing for Virtual Assistants

- Natural Language Processing for Sentiment Analysis in Social Media

- Explainable Artificial Intelligence (XAI) for Trust and Transparency

- Deep Learning for Image and Video Retrieval

- Edge Computing for Internet of Things (IoT) Applications

- Data Science for Social Media Analytics

- Cybersecurity for Critical Infrastructure Protection

- Natural Language Processing for Text Classification

- Quantum Computing for Optimization Problems

- Machine Learning for Personalized Health Monitoring

- Computer Vision for Autonomous Driving

- Blockchain Technology for Supply Chain Management

- Machine Learning for Personalized Marketing

- Big Data Analytics for Financial Fraud Detection

- Cybersecurity for Cloud Security Assessment

- Artificial Intelligence for Natural Language Understanding

- Blockchain Technology for Decentralized Applications

- Virtual Reality for Cultural Heritage Preservation

- Natural Language Processing for Named Entity Recognition

- Machine Learning for Customer Churn Prediction

- Big Data Analytics for Social Network Analysis

- Cybersecurity for Intrusion Detection and Prevention

- Artificial Intelligence for Robotics and Automation

- Virtual Reality for Rehabilitation and Therapy

- Natural Language Processing for Text Summarization

- Machine Learning for Credit Risk Assessment

- Big Data Analytics for Fraud Detection in Healthcare

- Cybersecurity for Internet Privacy Protection

- Artificial Intelligence for Game Design and Development

- Blockchain Technology for Decentralized Social Networks

- Virtual Reality for Marketing and Advertising

- Natural Language Processing for Opinion Mining

- Machine Learning for Anomaly Detection

- Big Data Analytics for Predictive Maintenance in Transportation

- Cybersecurity for Network Security Management

- Artificial Intelligence for Personalized News and Content Delivery

- Blockchain Technology for Cryptocurrency Mining

- Virtual Reality for Architectural Design and Visualization

- Machine Learning for Automated Image Captioning

- Big Data Analytics for Stock Market Prediction

- Cybersecurity for Biometric Authentication Systems

- Artificial Intelligence for Human-Robot Interaction

- Blockchain Technology for Smart Grids

- Virtual Reality for Sports Training and Simulation

- Natural Language Processing for Question Answering Systems

- Machine Learning for Sentiment Analysis in Customer Feedback

- Big Data Analytics for Predictive Maintenance in Manufacturing

- Cybersecurity for Cloud-Based Systems

- Artificial Intelligence for Automated Journalism

- Blockchain Technology for Intellectual Property Management

- Virtual Reality for Therapy and Rehabilitation

- Natural Language Processing for Language Generation

- Machine Learning for Customer Lifetime Value Prediction

- Big Data Analytics for Predictive Maintenance in Energy Systems

- Cybersecurity for Secure Mobile Communication

- Artificial Intelligence for Emotion Recognition

- Blockchain Technology for Digital Asset Trading

- Virtual Reality for Automotive Design and Visualization

- Natural Language Processing for Semantic Web

- Machine Learning for Fraud Detection in Financial Transactions

- Big Data Analytics for Social Media Monitoring

- Cybersecurity for Cloud Storage and Sharing

- Artificial Intelligence for Personalized Education

- Blockchain Technology for Secure Online Voting Systems

- Virtual Reality for Cultural Tourism

- Natural Language Processing for Chatbot Communication

- Machine Learning for Medical Diagnosis and Treatment

- Big Data Analytics for Environmental Monitoring and Management

- Cybersecurity for Cloud Computing Environments

- Virtual Reality for Training and Simulation

- Big Data Analytics for Sports Performance Analysis

- Artificial Intelligence for Traffic Management and Control

- Blockchain Technology for Smart Contracts

- Machine Learning for Image and Video Recognition

- Blockchain Technology for Digital Asset Management

- Virtual Reality for Entertainment and Gaming

- Natural Language Processing for Opinion Mining in Online Reviews

- Machine Learning for Customer Relationship Management

- Cybersecurity for Network Traffic Analysis and Monitoring

- Artificial Intelligence for Natural Language Generation

- Blockchain Technology for Supply Chain Transparency and Traceability

- Virtual Reality for Design and Visualization

- Natural Language Processing for Speech Recognition

- Machine Learning for Recommendation Systems

- Big Data Analytics for Customer Segmentation and Targeting

- Cybersecurity for Biometric Authentication

- Artificial Intelligence for Human-Computer Interaction

- Blockchain Technology for Decentralized Finance (DeFi)

- Virtual Reality for Tourism and Cultural Heritage

- Machine Learning for Cybersecurity Threat Detection and Prevention

- Big Data Analytics for Healthcare Cost Reduction

- Cybersecurity for Data Privacy and Protection

- Blockchain Technology for Cryptocurrency and Blockchain Security

- Virtual Reality for Real Estate Visualization

- Natural Language Processing for Question Answering

- Big Data Analytics for Financial Markets Prediction

- Cybersecurity for Cloud-Based Machine Learning Systems

- Artificial Intelligence for Personalized Advertising

- Blockchain Technology for Digital Identity Verification

- Virtual Reality for Cultural and Language Learning

- Natural Language Processing for Semantic Analysis

- Machine Learning for Business Forecasting

- Big Data Analytics for Social Media Marketing

- Artificial Intelligence for Content Generation

- Blockchain Technology for Smart Cities

- Virtual Reality for Historical Reconstruction

- Natural Language Processing for Knowledge Graph Construction

- Big Data Analytics for Traffic Optimization

- Artificial Intelligence for Social Robotics

- Blockchain Technology for Healthcare Data Management

- Virtual Reality for Disaster Preparedness and Response

- Natural Language Processing for Multilingual Communication

- Machine Learning for Emotion Recognition

- Big Data Analytics for Human Resources Management

- Cybersecurity for Mobile App Security

- Artificial Intelligence for Financial Planning and Investment

- Blockchain Technology for Energy Management

- Virtual Reality for Cultural Preservation and Heritage

- Big Data Analytics for Healthcare Management

- Cybersecurity in the Internet of Things (IoT)

- Artificial Intelligence for Predictive Maintenance

- Computational Biology for Drug Discovery

- Virtual Reality for Mental Health Treatment

- Machine Learning for Sentiment Analysis in Social Media

- Human-Computer Interaction for User Experience Design

- Cloud Computing for Disaster Recovery

- Quantum Computing for Cryptography

- Intelligent Transportation Systems for Smart Cities

- Cybersecurity for Autonomous Vehicles

- Artificial Intelligence for Fraud Detection in Financial Systems

- Social Network Analysis for Marketing Campaigns

- Cloud Computing for Video Game Streaming

- Machine Learning for Speech Recognition

- Augmented Reality for Architecture and Design

- Natural Language Processing for Customer Service Chatbots

- Machine Learning for Climate Change Prediction

- Big Data Analytics for Social Sciences

- Artificial Intelligence for Energy Management

- Virtual Reality for Tourism and Travel

- Cybersecurity for Smart Grids

- Machine Learning for Image Recognition

- Augmented Reality for Sports Training

- Natural Language Processing for Content Creation

- Cloud Computing for High-Performance Computing

- Artificial Intelligence for Personalized Medicine

- Virtual Reality for Architecture and Design

- Augmented Reality for Product Visualization

- Natural Language Processing for Language Translation

- Cybersecurity for Cloud Computing

- Artificial Intelligence for Supply Chain Optimization

- Blockchain Technology for Digital Voting Systems

- Virtual Reality for Job Training

- Augmented Reality for Retail Shopping

- Natural Language Processing for Sentiment Analysis in Customer Feedback

- Cloud Computing for Mobile Application Development

- Artificial Intelligence for Cybersecurity Threat Detection

- Blockchain Technology for Intellectual Property Protection

- Virtual Reality for Music Education

- Machine Learning for Financial Forecasting

- Augmented Reality for Medical Education

- Natural Language Processing for News Summarization

- Cybersecurity for Healthcare Data Protection

- Artificial Intelligence for Autonomous Robots

- Virtual Reality for Fitness and Health

- Machine Learning for Natural Language Understanding

- Augmented Reality for Museum Exhibits

- Natural Language Processing for Chatbot Personality Development

- Cloud Computing for Website Performance Optimization

- Artificial Intelligence for E-commerce Recommendation Systems

- Blockchain Technology for Supply Chain Traceability

- Virtual Reality for Military Training

- Augmented Reality for Advertising

- Natural Language Processing for Chatbot Conversation Management

- Cybersecurity for Cloud-Based Services

- Artificial Intelligence for Agricultural Management

- Blockchain Technology for Food Safety Assurance

- Virtual Reality for Historical Reenactments

- Machine Learning for Cybersecurity Incident Response

- Secure Multiparty Computation

- Federated Learning

- Internet of Things Security

- Blockchain Scalability

- Quantum Computing Algorithms

- Explainable AI

- Data Privacy in the Age of Big Data

- Adversarial Machine Learning

- Deep Reinforcement Learning

- Online Learning and Streaming Algorithms

- Graph Neural Networks

- Automated Debugging and Fault Localization

- Mobile Application Development

- Software Engineering for Cloud Computing

- Cryptocurrency Security

- Edge Computing for Real-Time Applications

- Natural Language Generation

- Virtual and Augmented Reality

- Computational Biology and Bioinformatics

- Internet of Things Applications

- Robotics and Autonomous Systems

- Explainable Robotics

- 3D Printing and Additive Manufacturing

- Distributed Systems

- Parallel Computing

- Data Center Networking

- Data Mining and Knowledge Discovery

- Information Retrieval and Search Engines

- Network Security and Privacy

- Cloud Computing Security

- Data Analytics for Business Intelligence

- Neural Networks and Deep Learning

- Reinforcement Learning for Robotics

- Automated Planning and Scheduling

- Evolutionary Computation and Genetic Algorithms

- Formal Methods for Software Engineering

- Computational Complexity Theory

- Bio-inspired Computing

- Computer Vision for Object Recognition

- Automated Reasoning and Theorem Proving

- Natural Language Understanding

- Machine Learning for Healthcare

- Scalable Distributed Systems

- Sensor Networks and Internet of Things

- Smart Grids and Energy Systems

- Software Testing and Verification

- Web Application Security

- Wireless and Mobile Networks

- Computer Architecture and Hardware Design

- Digital Signal Processing

- Game Theory and Mechanism Design

- Evolutionary Robotics

- Quantum Machine Learning

- Computational Social Science

- Explainable Recommender Systems

- Artificial Intelligence and its applications

- Cloud computing and its benefits

- Cybersecurity threats and solutions

- Internet of Things and its impact on society

- Virtual and Augmented Reality and their uses

- Blockchain Technology and its potential in various industries

- Web Development and Design

- Digital Marketing and its effectiveness

- Big Data and Analytics

- Software Development Life Cycle

- Game Development and its growth

- Network Administration and Maintenance

- Machine Learning and its uses

- Data Warehousing and Mining

- Computer Architecture and Design

- Computer Graphics and Animation

- Quantum Computing and its potential

- Data Structures and Algorithms

- Computer Vision and Image Processing

- Robotics and its applications

- Operating Systems and their functions

- Information Theory and Coding

- Compiler Design and Optimization

- Computer Forensics and Cyber Crime Investigation

- Distributed Computing and its significance

- Artificial Neural Networks and Deep Learning

- Cloud Storage and Backup

- Programming Languages and their significance

- Computer Simulation and Modeling

- Computer Networks and their types

- Information Security and its types

- Computer-based Training and eLearning

- Medical Imaging and its uses

- Social Media Analysis and its applications

- Human Resource Information Systems

- Computer-Aided Design and Manufacturing

- Multimedia Systems and Applications

- Geographic Information Systems and their uses

- Computer-Assisted Language Learning

- Mobile Device Management and Security

- Data Compression and its types

- Knowledge Management Systems

- Text Mining and its uses

- Cyber Warfare and its consequences

- Wireless Networks and their advantages

- Computer Ethics and its importance

- Computational Linguistics and its applications

- Autonomous Systems and Robotics

- Information Visualization and its importance

- Geographic Information Retrieval and Mapping

- Business Intelligence and its benefits

- Digital Libraries and their significance

- Artificial Life and Evolutionary Computation

- Computer Music and its types

- Virtual Teams and Collaboration

- Computer Games and Learning

- Semantic Web and its applications

- Electronic Commerce and its advantages

- Multimedia Databases and their significance

- Computer Science Education and its importance

- Computer-Assisted Translation and Interpretation

- Ambient Intelligence and Smart Homes

- Autonomous Agents and Multi-Agent Systems

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

Research Topics & Ideas: CompSci & IT

50+ Computer Science Research Topic Ideas To Fast-Track Your Project

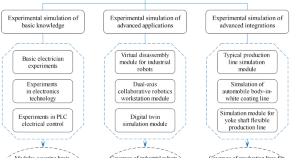

Finding and choosing a strong research topic is the critical first step when it comes to crafting a high-quality dissertation, thesis or research project. If you’ve landed on this post, chances are you’re looking for a computer science-related research topic, but aren’t sure where to start. Here, we’ll explore a variety of CompSci & IT-related research ideas and topic thought-starters, including algorithms, AI, networking, database systems, UX, information security and software engineering.

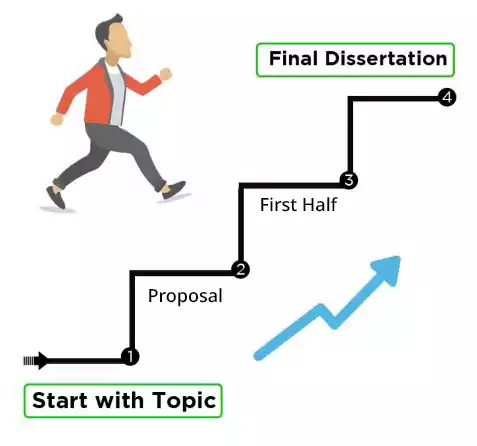

NB – This is just the start…

The topic ideation and evaluation process has multiple steps. In this post, we’ll kickstart the process by sharing some research topic ideas within the CompSci domain. This is the starting point, but to develop a well-defined research topic, you’ll need to identify a clear and convincing research gap, along with a well-justified plan of action to fill that gap.

If you’re new to the oftentimes perplexing world of research, or if this is your first time undertaking a formal academic research project, be sure to check out our free dissertation mini-course. In it, we cover the process of writing a dissertation or thesis from start to end. Be sure to also sign up for our free webinar that explores how to find a high-quality research topic.

Overview: CompSci Research Topics

- Algorithms & data structures

- Artificial intelligence (AI)

- Computer networking

- Database systems

- Human-computer interaction

- Information security (IS)

- Software engineering

- Examples of CompSci dissertations & theses

Topics & Ideas: Algorithms & Data Structures

- An analysis of neural network algorithms’ accuracy for processing consumer purchase patterns

- A systematic review of the impact of graph algorithms on data analysis and discovery in social media network analysis

- An evaluation of machine learning algorithms used for recommender systems in streaming services

- A review of approximation algorithm approaches for solving NP-hard problems

- An analysis of parallel algorithms for high-performance computing of genomic data

- The influence of data structures on optimal algorithm design and performance in Fintech

- A survey of algorithms applied in internet of things (IoT) systems in supply-chain management

- A comparison of streaming algorithm performance for the detection of elephant flows

- A systematic review and evaluation of machine learning algorithms used in facial pattern recognition

- Exploring the performance of a decision tree-based approach for optimizing stock purchase decisions

- Assessing the importance of complete and representative training datasets in agricultural machine learning-based decision-making

- A Comparison of Deep learning algorithms performance for structured and unstructured datasets with “rare cases”

- A systematic review of noise reduction best practices for machine learning algorithms in geoinformatics.

- Exploring the feasibility of applying information theory to feature extraction in retail datasets.

- Assessing the use case of neural network algorithms for image analysis in biodiversity assessment

Topics & Ideas: Artificial Intelligence (AI)

- Applying deep learning algorithms for speech recognition in speech-impaired children

- A review of the impact of artificial intelligence on decision-making processes in stock valuation

- An evaluation of reinforcement learning algorithms used in the production of video games

- An exploration of key developments in natural language processing and how they impacted the evolution of chatbots

- An analysis of the ethical and social implications of artificial intelligence-based automated marking

- The influence of large-scale GIS datasets on artificial intelligence and machine learning developments

- An examination of the use of artificial intelligence in orthopaedic surgery

- The impact of explainable artificial intelligence (XAI) on transparency and trust in supply chain management

- An evaluation of the role of artificial intelligence in financial forecasting and risk management in cryptocurrency

- A meta-analysis of deep learning algorithm performance in predicting cyber attacks in schools

Topics & Ideas: Networking

- An analysis of the impact of 5G technology on internet penetration in rural Tanzania

- Assessing the role of software-defined networking (SDN) in modern cloud-based computing

- A critical analysis of network security and privacy concerns associated with Industry 4.0 investment in healthcare

- Exploring the influence of cloud computing on security risks in fintech

- An examination of the use of network function virtualization (NFV) in telecom networks in South America

- Assessing the impact of edge computing on network architecture and design in IoT-based manufacturing

- An evaluation of the challenges and opportunities in 6G wireless network adoption

- The role of network congestion control algorithms in improving network performance on streaming platforms

- An analysis of network coding-based approaches for data security

- Assessing the impact of network topology on network performance and reliability in IoT-based workspaces

Topics & Ideas: Database Systems

- An analysis of big data management systems and technologies used in B2B marketing

- The impact of NoSQL databases on data management and analysis in smart cities

- An evaluation of the security and privacy concerns of cloud-based databases in financial organisations

- Exploring the role of data warehousing and business intelligence in global consultancies

- An analysis of the use of graph databases for data modelling and analysis in recommendation systems

- The influence of the Internet of Things (IoT) on database design and management in the retail grocery industry

- An examination of the challenges and opportunities of distributed databases in supply chain management

- Assessing the impact of data compression algorithms on database performance and scalability in cloud computing

- An evaluation of the use of in-memory databases for real-time data processing in patient monitoring

- Comparing the effects of database tuning and optimization approaches in improving database performance and efficiency in omnichannel retailing

Topics & Ideas: Human-Computer Interaction

- An analysis of the impact of mobile technology on human-computer interaction prevalence in adolescent men

- An exploration of how artificial intelligence is changing human-computer interaction patterns in children

- An evaluation of the usability and accessibility of web-based systems for CRM in the fast fashion retail sector

- Assessing the influence of virtual and augmented reality on consumer purchasing patterns

- An examination of the use of gesture-based interfaces in architecture

- Exploring the impact of ease of use in wearable technology on geriatric users

- Evaluating the ramifications of gamification in the Metaverse

- A systematic review of user experience (UX) design advances associated with Augmented Reality

- Comparing end-user perceptions of natural language processing algorithms for automated customer response

- Analysing the impact of voice-based interfaces on purchase practices in the fast food industry

Topics & Ideas: Information Security

- A bibliometric review of current trends in cryptography for secure communication

- An analysis of secure multi-party computation protocols and their applications in cloud-based computing

- An investigation of the security of blockchain technology in patient health record tracking

- A comparative study of symmetric and asymmetric encryption algorithms for instant text messaging

- A systematic review of secure data storage solutions used for cloud computing in the fintech industry

- An analysis of intrusion detection and prevention systems used in the healthcare sector

- Assessing security best practices for IoT devices in political offices

- An investigation into the role social media played in shifting regulations related to privacy and the protection of personal data

- A comparative study of digital signature scheme adoption in property transfers

- An assessment of the security of wireless communication systems used in tertiary institutions

Topics & Ideas: Software Engineering

- A study of agile software development methodologies and their impact on project success in pharmacology

- Investigating the impacts of software refactoring techniques and tools in blockchain-based developments

- A study of the impact of DevOps practices on software development and delivery in the healthcare sector

- An analysis of software architecture patterns and their impact on the maintainability and scalability of cloud-based offerings

- A study of the impact of artificial intelligence and machine learning on software engineering practices in the education sector

- An investigation of software testing techniques and methodologies for subscription-based offerings

- A review of software security practices and techniques for protecting against phishing attacks from social media

- An analysis of the impact of cloud computing on the rate of software development and deployment in the manufacturing sector

- Exploring the impact of software development outsourcing on project success in multinational contexts

- An investigation into the effect of poor software documentation on app success in the retail sector

CompSci & IT Dissertations/Theses

While the ideas we’ve presented above are a decent starting point for finding a CompSci-related research topic, they are fairly generic and non-specific. So, it helps to look at actual dissertations and theses to see how this all comes together.

Below, we’ve included a selection of research projects from various CompSci-related degree programs to help refine your thinking. These are actual dissertations and theses, written as part of Master’s and PhD-level programs, so they can provide some useful insight as to what a research topic looks like in practice.

- An array-based optimization framework for query processing and data analytics (Chen, 2021)

- Dynamic Object Partitioning and replication for cooperative cache (Asad, 2021)

- Embedding constructural documentation in unit tests (Nassif, 2019)

- PLASA | Programming Language for Synchronous Agents (Kilaru, 2019)

- Healthcare Data Authentication using Deep Neural Network (Sekar, 2020)

- Virtual Reality System for Planetary Surface Visualization and Analysis (Quach, 2019)

- Artificial neural networks to predict share prices on the Johannesburg stock exchange (Pyon, 2021)

- Predicting household poverty with machine learning methods: the case of Malawi (Chinyama, 2022)

- Investigating user experience and bias mitigation of the multi-modal retrieval of historical data (Singh, 2021)

- Detection of HTTPS malware traffic without decryption (Nyathi, 2022)

- Redefining privacy: case study of smart health applications (Al-Zyoud, 2019)

- A state-based approach to context modeling and computing (Yue, 2019)

- A Novel Cooperative Intrusion Detection System for Mobile Ad Hoc Networks (Solomon, 2019)

- HRSB-Tree for Spatio-Temporal Aggregates over Moving Regions (Paduri, 2019)

Looking at these titles, you can probably pick up that the research topics here are quite specific and narrowly focused, compared to the generic ones presented earlier. This is an important thing to keep in mind as you develop your own research topic. That is to say, to create a top-notch research topic, you must be precise and target a specific context with specific variables of interest. In other words, you need to identify a clear, well-justified research gap.

Fast-Track Your Research Topic

If you’re still feeling a bit unsure about how to find a research topic for your Computer Science dissertation or research project, check out our Topic Kickstarter service.


CS 261: Research Topics in Operating Systems (2021)

Some links to papers are links to the ACM’s site. You may need to use the Harvard VPN to get access to the papers via those links. Alternate links will be provided.

Meeting 1 (1/26): Overview

Operating system architectures

Meeting 2 (1/28): Multics and Unix

“Multics: The first seven years”, Corbató FJ, Saltzer JH, and Clingen CT (1972)

“Protection in an information processing utility”, Graham RM (1968)

“The evolution of the Unix time-sharing system”, Ritchie DM (1984)

Additional resources

The Multicians web site for additional information on Multics, including extensive stories and Multics source code.

Technical: The Multics input/output system, Feiertag RJ and Organick EI, for a description of Multics I/O to contrast with Unix I/O.

Unix and Multics, Tom Van Vleck.

… I remarked to Dennis that easily half the code I was writing in Multics was error recovery code. He said, "We left all that stuff out. If there's an error, we have this routine called panic() , and when it is called, the machine crashes, and you holler down the hall, 'Hey, reboot it.'"

The Louisiana State Trooper Story

The IBM 7094 and CTSS

This describes the history of the system that preceded Multics, CTSS (the Compatible Time Sharing System). It also contains one of my favorite stories about the early computing days: “IBM had been very generous to MIT in the fifties and sixties, donating or discounting its biggest scientific computers. When a new top of the line 36-bit scientific machine came out, MIT expected to get one. In the early sixties, the deal was that MIT got one 8-hour shift, all the other New England colleges and universities got a shift, and the third shift was available to IBM for its own use. One use IBM made of its share was yacht handicapping: the President of IBM raced big yachts on Long Island Sound, and these boats were assigned handicap points by a complicated formula. There was a special job deck kept at the MIT Computation Center, and if a request came in to run it, operators were to stop whatever was running on the machine and do the yacht handicapping job immediately.”

Using Ring 5, Randy Saunders.

"All Multics User functions work in Ring 5." I have that EMail (from Dave Bergum) framed on my wall to this date. … All the documentation clearly states that system software has ring brackets of [1,5,5] so that it runs equally in both rings 4 and 5. However, the PL/I compiler creates segments with ring brackets of [4,4,4] by default. … I found each and every place CNO had fixed a program without resetting the ring brackets correctly. It started out 5 a day, and in 3 months it was down to one a week.

Bell System Technical Journal 57(6) Part 2: Unix Time-sharing System (July–August 1978)

This volume contains some of the first broadly accessible descriptions of Unix. Individual articles are available on archive.org. As of late January 2021, you can buy a physical copy on Amazon for $2,996. Interesting articles include Thompson on Unix implementation, Ritchie’s retrospective, and several articles on actual applications, especially document preparation.
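The ring-bracket arithmetic in Randy Saunders’s “Using Ring 5” story can be made concrete with a small model. This is a simplified sketch, assuming the commonly described Multics rules for a segment with brackets [r1,r2,r3]: writable from rings 0 to r1, readable from rings 0 to r2, executable without a ring change from rings r1 to r2, and callable through a gate from rings r2+1 to r3 (the call then runs in ring r2). Access-control lists, gate entry-point checks and outward calls are ignored, and the names `RingBrackets` and `call_ring` are hypothetical. It shows why a program left with the PL/I default [4,4,4] stops working for a ring-5 user, while [1,5,5] runs unchanged in rings 4 and 5.

```python
from typing import NamedTuple, Optional


class RingBrackets(NamedTuple):
    """Hypothetical model of Multics ring brackets [r1, r2, r3], with r1 <= r2 <= r3."""
    r1: int
    r2: int
    r3: int


def can_write(b: RingBrackets, ring: int) -> bool:
    # Write bracket: rings 0..r1
    return ring <= b.r1


def can_read(b: RingBrackets, ring: int) -> bool:
    # Read bracket: rings 0..r2
    return ring <= b.r2


def call_ring(b: RingBrackets, ring: int) -> Optional[int]:
    """Ring the called code runs in when invoked from `ring`, or None if this model denies the call."""
    if b.r1 <= ring <= b.r2:
        return ring   # inside the execute bracket: runs in the caller's ring
    if b.r2 < ring <= b.r3:
        return b.r2   # gate extension: inward call through a gate, runs in ring r2
    return None       # above r3 (no access) or below r1 (outward call, not modelled here)


system_software = RingBrackets(1, 5, 5)  # brackets the documentation prescribes
pli_default = RingBrackets(4, 4, 4)      # what the PL/I compiler produced by default

for ring in (4, 5):
    print(ring, call_ring(system_software, ring), call_ring(pli_default, ring))
```

Running it prints one line per ring: from ring 4 both bracket settings execute in ring 4, but from ring 5 only the [1,5,5] segment runs, while the [4,4,4] segment is denied (`None`), which matches the breakage the story describes.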

Meeting 3 (2/2): Microkernels

“The nucleus of a multiprogramming system”, Brinch Hansen P (1970).

“Toward real microkernels”, Liedtke J (1996).

“Are virtual machine monitors microkernels done right?”, Hand S, Warfield A, Fraser K, Kotsovinos E, Magenheimer DJ (2005).

Supplemental reading

“Improving IPC by kernel design”, Liedtke J (1993). Article introducing the first microbenchmark-performant microkernel.

“Are virtual machine monitors microkernels done right?”, Heiser G, Uhlig V, LeVasseur J (2006).

“From L3 to seL4: What have we learnt in 20 years of L4 microkernels?”, Elphinstone K, Heiser G (2013).

- Retained: Minimality as key design principle.

- Replaced: Synchronous IPC augmented with (seL4, NOVA, Fiasco.OC) or replaced by (OKL4) asynchronous notification.

- Replaced: Physical by virtual message registers.

- Abandoned: Long IPC.

- Replaced: Thread IDs by port-like IPC endpoints as message destinations.

- Abandoned: IPC timeouts in seL4, OKL4.

- Abandoned: Clans and chiefs.

- Retained: User-level drivers as a core feature.

- Abandoned: Hierarchical process management.

- Multiple approaches: Some L4 kernels retain the model of recursive address-space construction, while seL4 and OKL4 originate mappings from frames.

- Added: User-level control over kernel memory in seL4, kernel memory quota in Fiasco.OC.

- Unresolved: Principled, policy-free control of CPU time.

- Unresolved: Handling of multicore processors in the age of verification.

- Replaced: Process kernel by event kernel in seL4, OKL4 and NOVA.

- Abandoned: Virtual TCB addressing.

- … Abandoned: C++ for seL4 and OKL4.

Meeting 4 (2/4): Exokernels

“Exterminate all operating system abstractions”, Engler DR, Kaashoek MF (1995).

“Exokernel: an operating system architecture for application-level resource management”, Engler DR, Kaashoek MF, O’Toole J (1995).

“The nonkernel: a kernel designed for the cloud”, Ben-Yehuda M, Peleg O, Ben-Yehuda OA, Smolyar I, Tsafrir D (2013).

“Application performance and flexibility on exokernel systems”, Kaashoek MF, Engler DR, Ganger GR, Briceño HM, Hunt R, Mazières D, Pinckney T, Grimm R, Jannotti J, Mackenzie K (1997).

Particularly worth reading is section 4, Multiplexing Stable Storage, which contains one of the most overcomplicated designs for stable storage imaginable. It’s instructive: if your principles end up here, might there be something wrong with your principles?

“Fast and flexible application-level networking on exokernel systems”, Ganger GR, Engler DR, Kaashoek MF, Briceño HM, Hunt R, Pinckney T (2002).

Particularly worth reading is section 8, Discussion: “The construction and revision of the Xok/ExOS networking support came with several lessons and controversial design decisions.”

Meeting 5 (2/9): Security

“EROS: A fast capability system”, Shapiro JS, Smith JM, Farber DJ (1999).

“Labels and event processes in the Asbestos operating system”, Vandebogart S, Efstathopoulos P, Kohler E, Krohn M, Frey C, Ziegler D, Kaashoek MF, Morris R, Mazières D (2007).

This paper covers too much ground. On the first read, skip sections 4–6.

Meeting 6 (2/11): I/O

“Arrakis: The operating system is the control plane” (PDF), Peter S, Li J, Zhang I, Ports DRK, Woos D, Krishnamurthy A, Anderson T, Roscoe T (2014)

“The IX Operating System: Combining Low Latency, High Throughput, and Efficiency in a Protected Dataplane”, Belay A, Prekas G, Primorac M, Klimovic A, Grossman S, Kozyrakis C, Bugnion E (2016); read Sections 1–4 first (return to the rest if you have time)

“I'm Not Dead Yet!: The Role of the Operating System in a Kernel-Bypass Era”, Zhang I, Liu J, Austin A, Roberts ML, Badam A (2019)

- “The multikernel: A new OS architecture for scalable multicore systems”, Baumann A, Barham P, Dagand PE, Harris T, Isaacs R, Peter S, Roscoe T, Schüpbach A, Singhania A (2009); this describes the Barrelfish system on which Arrakis is based

Meeting 7 (2/16): Speculative designs

From least to most speculative:

“Unified high-performance I/O: One Stack to Rule Them All” (PDF), Trivedi A, Stuedi P, Metzler B, Pletka R, Fitch BG, Gross TR (2013)

“The Case for Less Predictable Operating System Behavior” (PDF), Sun R, Porter DE, Oliveira D, Bishop M (2015)

“Quantum operating systems”, Corrigan-Gibbs H, Wu DJ, Boneh D (2017)

“Pursue robust indefinite scalability”, Ackley DH, Cannon DC (2013)

Meeting 8 (2/18): Log-structured file system

“The Design and Implementation of a Log-Structured File System”, Rosenblum M, Ousterhout J (1992)

“Logging versus Clustering: A Performance Evaluation”

- Read the abstract of the paper; scan further if you’d like

- Then poke around the linked critiques

Meeting 9 (2/23): Consistency

“Generalized file system dependencies”, Frost C, Mammarella M, Kohler E, de los Reyes A, Hovsepian S, Matsuoka A, Zhang L (2007)

“Application crash consistency and performance with CCFS”, Sankaranarayana Pillai T, Alagappan R, Lu L, Chidambaram V, Arpaci-Dusseau AC, Arpaci-Dusseau RH (2017)

Meeting 10 (2/25): Transactions and speculation

“Rethink the sync”, Nightingale EB, Veeraraghavan K, Chen PM, Flinn J (2006)

“Operating system transactions”, Porter DE, Hofmann OS, Rossbach CJ, Benn E, Witchel E (2009)

Meeting 11 (3/2): Speculative designs

“Can We Store the Whole World's Data in DNA Storage?”

“A tale of two abstractions: The case for object space”

“File systems as processes”

“Preserving hidden data with an ever-changing disk”

More, if you’re hungry for it

- “Breaking Apart the VFS for Managing File Systems”

Virtualization

Meeting 14 (3/11): Virtual machines and containers

“Xen and the Art of Virtualization”, Barham P, Dragovic B, Fraser K, Hand S, Harris T, Ho A, Neugebauer R, Pratt I, Warfield A (2003)

“Blending containers and virtual machines: A study of Firecracker and gVisor”, Anjali, Caraza-Harter T, Swift MM (2020)

Meeting 15 (3/18): Virtual memory and virtual devices

“Memory resource management in VMware ESX Server”, Waldspurger CA (2002)

“Opportunistic flooding to improve TCP transmit performance in virtualized clouds”, Gamage S, Kangarlou A, Kompella RR, Xu D (2011)

Meeting 16 (3/23): Speculative designs

“The Best of Both Worlds with On-Demand Virtualization”, Kooburat T, Swift M (2011)

“The NIC is the Hypervisor: Bare-Metal Guests in IaaS Clouds”, Mogul JC, Mudigonda J, Santos JR, Turner Y (2013)

“vPipe: One Pipe to Connect Them All!”, Gamage S, Kompella R, Xu D (2013)

“Scalable Cloud Security via Asynchronous Virtual Machine Introspection”, Rajasekaran S, Ni Z, Chawla HS, Shah N, Wood T (2016)

Distributed systems

Meeting 17 (3/25): Distributed systems history

“Grapevine: an exercise in distributed computing”, Birrell AD, Levin R, Schroeder MD, Needham RM (1982)

“Implementing remote procedure calls”, Birrell AD, Nelson BJ (1984)

Skim: “Time, clocks, and the ordering of events in a distributed system”, Lamport L (1978)

Meeting 18 (3/30): Paxos

“Paxos made simple”, Lamport L (2001)

“Paxos made live: an engineering perspective”, Chandra T, Griesemer R, Redstone J (2007)

“In search of an understandable consensus algorithm”, Ongaro D, Ousterhout J (2014)

- Adrian Colyer’s consensus series links to ten papers, especially:

- “Raft Refloated: Do we have consensus?”, Howard H, Schwarzkopf M, Madhavapeddy A, Crowcroft J (2015)

- A later update from overlapping authors: “Paxos vs. Raft: Have we reached consensus on distributed consensus?”, Howard H, Mortier R (2020)

- “Understanding Paxos”, notes by Paul Krzyzanowski (2018); includes some failure examples

- One-slide Paxos pseudocode, Robert Morris (2014)

Meeting 19 (4/1): Review of replication results

Meeting 20 (4/6): Project discussion

Meeting 21 (4/8): Industrial consistency

“Scaling Memcache at Facebook”, Nishtala R, Fugal H, Grimm S, Kwiatkowski M, Lee H, Li HC, McElroy R, Paleczny M, Peek D, Saab P, Stafford D, Tung T, Venkataramani V (2013)

“Millions of Tiny Databases”, Brooker M, Chen T, Ping F (2020)

Meeting 22 (4/13): Short papers and speculative designs

“Scalability! But at what COST?”, McSherry F, Isard M, Murray DG (2015)

“What bugs cause production cloud incidents?”, Liu H, Lu S, Musuvathi M, Nath S (2019)

“Escape Capsule: Explicit State Is Robust and Scalable”, Rajagopalan S, Williams D, Jamjoom H, Warfield A (2013)

“Music-defined networking”, Hogan M, Esposito F (2018)

- Too networking-centric for us, but fun: “Delay is Not an Option: Low Latency Routing in Space”, Handley M (2018)

- A useful taxonomy: “When Should The Network Be The Computer?”, Ports DRK, Nelson J (2019)

Meeting 23 (4/20): The M Group

“All File Systems Are Not Created Equal: On the Complexity of Crafting Crash-Consistent Applications”, Pillai TS, Chidambaram V, Alagappan R, Al-Kiswany S, Arpaci-Dusseau AC, Arpaci-Dusseau RH (2014)

“Crash Consistency Validation Made Easy”, Jiang Y, Chen H, Qin F, Xu C, Ma X, Lu J (2016)

Meeting 24 (4/22): NVM and Juice

“Persistent Memcached: Bringing Legacy Code to Byte-Addressable Persistent Memory”, Marathe VJ, Seltzer M, Byan S, Harris T

“NVMcached: An NVM-based Key-Value Cache”, Wu X, Ni F, Zhang L, Wang Y, Ren Y, Hack M, Shao Z, Jiang S (2016)

“Cloudburst: stateful functions-as-a-service”, Sreekanti V, Wu C, Lin XC, Schleier-Smith J, Gonzalez JE, Hellerstein JM, Tumanov A (2020)

- Adrian Colyer’s take

Meeting 25 (4/27): Scheduling

- “The Linux Scheduler: A Decade of Wasted Cores”, Lozi JP, Lepers B, Funston J, Gaud F, Quéma V, Fedorova A (2016)

Cyber risk and cybersecurity: a systematic review of data availability

- Open access

- Published: 17 February 2022

- Volume 47, pages 698–736 (2022)


- Frank Cremer

- Barry Sheehan (ORCID: orcid.org/0000-0003-4592-7558)

- Michael Fortmann

- Arash N. Kia

- Martin Mullins

- Finbarr Murphy

- Stefan Materne

69k Accesses

72 Citations

42 Altmetric

Explore all metrics

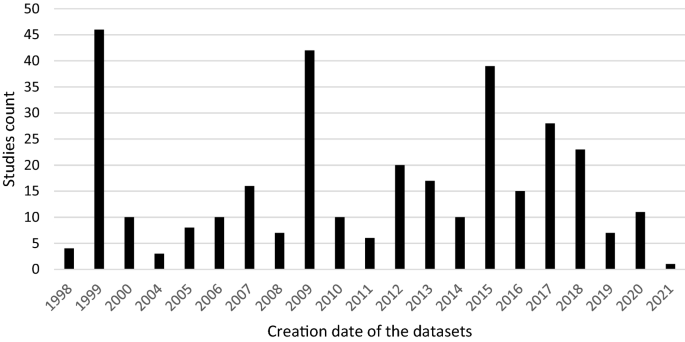

Cybercrime is estimated to have cost the global economy just under USD 1 trillion in 2020, indicating an increase of more than 50% since 2018. With the average cyber insurance claim rising from USD 145,000 in 2019 to USD 359,000 in 2020, there is a growing necessity for better cyber information sources, standardised databases, mandatory reporting and public awareness. This research analyses the extant academic and industry literature on cybersecurity and cyber risk management with a particular focus on data availability. From a preliminary search resulting in 5219 peer-reviewed cyber studies, the application of the systematic methodology resulted in 79 unique datasets. We posit that the lack of available data on cyber risk poses a serious problem for stakeholders seeking to tackle this issue. In particular, we identify a lacuna in open databases that undermines collective endeavours to better manage this set of risks. The resulting data evaluation and categorisation will support cybersecurity researchers and the insurance industry in their efforts to comprehend, metricise and manage cyber risks.


Introduction

Globalisation, digitalisation and smart technologies have escalated the propensity and severity of cybercrime. Whilst it is an emerging field of research and industry, the importance of robust cybersecurity defence systems has been highlighted at the corporate, national and supranational levels. The impacts of inadequate cybersecurity are estimated to have cost the global economy USD 945 billion in 2020 (Maleks Smith et al. 2020). Cyber vulnerabilities pose significant corporate risks, including business interruption, breach of privacy and financial losses (Sheehan et al. 2019). Despite the increasing relevance for the international economy, the availability of data on cyber risks remains limited. The reasons for this are many. Firstly, it is an emerging and evolving risk; therefore, historical data sources are limited (Biener et al. 2015). It could also be due to the fact that, in general, institutions that have been hacked do not publish the incidents (Eling and Schnell 2016). The lack of data poses challenges for many areas, such as research, risk management and cybersecurity (Falco et al. 2019). The importance of this topic is demonstrated by the announcement of the European Council in April 2021 that a centre of excellence for cybersecurity will be established to pool investments in research, technology and industrial development. The goal of this centre is to increase the security of the internet and other critical network and information systems (European Council 2021).

This research takes a risk management perspective, focusing on cyber risk and considering the role of cybersecurity and cyber insurance in risk mitigation and risk transfer. The study reviews the existing literature and open data sources related to cybersecurity and cyber risk. This is the first systematic review of data availability in the general context of cyber risk and cybersecurity. By identifying and critically analysing the available datasets, this paper supports the research community by aggregating, summarising and categorising all available open datasets. In addition, further information on datasets is attached to provide deeper insights and support stakeholders engaged in cyber risk control and cybersecurity. Finally, this research paper highlights the need for open access to cyber-specific data, without price or permission barriers.

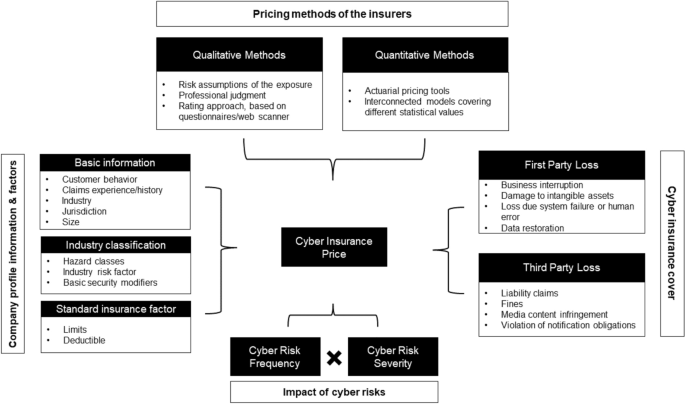

The identified open data can support cyber insurers in their efforts on sustainable product development. To date, traditional risk assessment methods have been untenable for insurance companies due to the absence of historical claims data (Sheehan et al. 2021). These high levels of uncertainty mean that cyber insurers are more inclined to overprice cyber risk cover (Kshetri 2018). Combining external data with insurance portfolio data therefore seems to be essential to improve the evaluation of the risk and thus lead to risk-adjusted pricing (Bessy-Roland et al. 2021). This argument is also supported by the fact that some re/insurers reported that they are working to improve their cyber pricing models (e.g. by creating or purchasing databases from external providers) (EIOPA 2018). Figure 1 provides an overview of pricing tools and factors considered in the estimation of cyber insurance based on the findings of EIOPA (2018) and the research of Romanosky et al. (2019). The term cyber risk refers to all cyber risks and their potential impact.

Figure 1: An overview of the current cyber insurance informational and methodological landscape, adapted from EIOPA (2018) and Romanosky et al. (2019)
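The difference between simple pricing and the risk-adjusted pricing that better data would enable can be sketched in a few lines. This is purely illustrative and not a model from EIOPA (2018), Romanosky et al. (2019) or any insurer: the expected-loss figure, the loading, the industry factors, the size and deductible adjustments, and the multiplicative form are all hypothetical assumptions. A flat rate charges every insured the same premium derived from one expected-loss figure; the risk-adjusted version scales that figure by firm-specific factors such as revenue, industry and deductible.

```python
from dataclasses import dataclass


@dataclass
class Firm:
    revenue_usd: float
    industry: str
    deductible_usd: float


# Hypothetical inputs, chosen only for illustration
EXPECTED_LOSS_USD = 20_000.0   # one portfolio-wide expected annual loss
LOADING = 0.3                  # expense/profit loading on top of expected loss
INDUSTRY_FACTOR = {"healthcare": 1.5, "retail": 1.2, "manufacturing": 1.0}


def flat_rate_premium() -> float:
    # Same premium for every insured, from a single expected-loss figure
    return EXPECTED_LOSS_USD * (1 + LOADING)


def risk_adjusted_premium(firm: Firm) -> float:
    # Scale the base expected loss by firm-specific (hypothetical) factors
    size_factor = (firm.revenue_usd / 10_000_000) ** 0.5          # larger firms, larger exposure
    industry_factor = INDUSTRY_FACTOR.get(firm.industry, 1.0)      # unknown industries get 1.0
    deductible_credit = max(0.5, 1 - firm.deductible_usd / 100_000)  # higher deductible, lower premium
    expected_loss = EXPECTED_LOSS_USD * size_factor * industry_factor * deductible_credit
    return expected_loss * (1 + LOADING)


clinic = Firm(revenue_usd=10_000_000, industry="healthcare", deductible_usd=0)
shop = Firm(revenue_usd=10_000_000, industry="retail", deductible_usd=50_000)
print(round(flat_rate_premium()), round(risk_adjusted_premium(clinic)), round(risk_adjusted_premium(shop)))
```

With these made-up numbers, the healthcare firm without a deductible pays more than the flat rate, while a retailer of the same size with a USD 50,000 deductible pays less; the point is only that firm-level data changes the premium, not the particular values.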

Besides the advantage of risk-adjusted pricing, the availability of open datasets helps companies benchmark their internal cyber posture and cybersecurity measures. The research can also help to improve risk awareness and corporate behaviour. Many companies still underestimate their cyber risk (Leong and Chen 2020). For policymakers, this research offers starting points for a comprehensive recording of cyber risks. Although in many countries, companies are obliged to report data breaches to the respective supervisory authority, this information is usually not accessible to the research community. Furthermore, the economic impact of these breaches is usually unclear.

As well as the cyber risk management community, this research also supports cybersecurity stakeholders. Researchers are provided with an up-to-date overview of available datasets drawn from the peer-reviewed literature, showing where these datasets have been used. For example, this includes datasets that have been used to evaluate the effectiveness of countermeasures in simulated cyberattacks or to test intrusion detection systems. This shortens the otherwise time-consuming search for suitable datasets and ensures a comprehensive review of those available. Through the dataset descriptions, researchers and industry stakeholders can compare and select the most suitable datasets for their purposes. In addition, it is possible to combine the datasets from one source in the context of cybersecurity or cyber risk. This supports efficient and timely progress in cyber risk research and is beneficial given the dynamic nature of cyber risks.

Cyber risks are defined as “operational risks to information and technology assets that have consequences affecting the confidentiality, availability, and/or integrity of information or information systems” (Cebula et al. 2014). Prominent cyber risk events include data breaches and cyberattacks (Agrafiotis et al. 2018). The increasing exposure and potential impact of cyber risk have been highlighted in recent industry reports (e.g. Allianz 2021; World Economic Forum 2020). Cyberattacks on critical infrastructures are ranked 5th in the World Economic Forum's Global Risk Report. Ransomware, malware and distributed denial-of-service (DDoS) are examples of the evolving modes of a cyberattack. One example is the ransomware attack on the Colonial Pipeline, which shut down the 5,500-mile pipeline system that delivers 2.5 million barrels of fuel per day and critical liquid fuel infrastructure from oil refineries to states along the U.S. East Coast (Brower and McCormick 2021). These and other cyber incidents have led the U.S. to strengthen its cybersecurity and introduce, among other things, a public body to analyse major cyber incidents and make recommendations to prevent a recurrence (Murphey 2021a). Another example of the scope of cyberattacks is the NotPetya ransomware in 2017. The damage amounted to USD 10 billion, as the ransomware exploited a vulnerability in the Windows system, allowing it to spread independently worldwide in the network (GAO 2021). In the same year, the ransomware WannaCry was launched by cybercriminals. The cyberattack on Windows software took user data hostage in exchange for Bitcoin cryptocurrency (Smart 2018). The victims included the National Health Service in Great Britain. As a result, ambulances were redirected to other hospitals because of information technology (IT) systems failing, leaving people in need of urgent assistance waiting. It has been estimated that the attack led to 19,000 cancelled treatment appointments and losses of GBP 92 million (Field 2018). Throughout the COVID-19 pandemic, ransomware attacks increased significantly, as working-from-home arrangements increased vulnerability (Murphey 2021b).

Besides cyberattacks, data breaches can also cause high costs. Under the General Data Protection Regulation (GDPR), companies are obliged to protect personal data and safeguard the data protection rights of all individuals in the EU. The GDPR allows data protection authorities in each country to impose sanctions and fines on organisations they find in breach. “For data breaches, the maximum fine can be €20 million or 4% of global turnover, whichever is higher” (GDPR.EU 2021). Data breaches often involve large amounts of sensitive data accessed without authorisation by external parties, and they are therefore considered important for information security because of their far-reaching impact (Goode et al. 2017). A data breach is defined as a “security incident in which sensitive, protected, or confidential data are copied, transmitted, viewed, stolen, or used by an unauthorized individual” (Freeha et al. 2021). Depending on the amount of data involved, the damage caused by a data breach can be significant: for the largest breaches, the average cost reaches USD 392 million (IBM Security 2020).
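The quoted fine rule is simply the larger of two quantities. A minimal sketch in Python, assuming turnover is given as a single annual figure in euros; the function name `gdpr_max_fine_eur` is hypothetical, and the result is only the ceiling of the fine, not the amount an authority would actually impose.

```python
def gdpr_max_fine_eur(global_turnover_eur: float) -> float:
    """Ceiling of a GDPR fine under the quoted rule:
    EUR 20 million or 4% of global turnover, whichever is higher.
    Hypothetical helper; assumes turnover is one annual figure in euros."""
    four_percent = 4 * global_turnover_eur / 100
    return max(20_000_000.0, four_percent)


# Turnover of EUR 100 million: 4% is EUR 4 million, so the EUR 20 million term applies.
print(gdpr_max_fine_eur(100_000_000))    # 20000000.0
# Turnover of EUR 2 billion: 4% is EUR 80 million, which exceeds EUR 20 million.
print(gdpr_max_fine_eur(2_000_000_000))  # 80000000.0
```

The break-even point is a turnover of EUR 500 million (4% of which is EUR 20 million); below it the fixed amount dominates, above it the percentage does.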

This research paper reviews the existing literature and open data sources related to cybersecurity and cyber risk, focusing on the datasets used to improve academic understanding and advance the current state of the art in cybersecurity. Furthermore, important information about the available datasets is presented (e.g. use cases), and a plea is made for open data and the standardisation of cyber risk data for academic comparability and replication. The remainder of the paper is structured as follows. The next section describes related work on cybersecurity and cyber risks. The third section outlines the review method and process. The fourth section presents the results for the identified literature. Further discussion is presented in the penultimate section, and the final section concludes.

Related work

Due to the significance of cyber risks, several literature reviews have been conducted in this field. Eling (2020) reviewed the existing academic literature on cyber risk and cyber insurance from an economic perspective. A total of 217 papers containing the term ‘cyber risk’ were identified and classified into different categories. The review identifies open research questions, showing that research on cyber risks is still in its infancy because of their dynamic and emerging nature. Furthermore, the author highlights that particular focus should be placed on the exchange of information between public and private actors. An improved information flow could help to measure the risk more accurately, thus making cyber risks more insurable and helping risk managers to determine the right level of cyber risk for their company. In the context of cyber insurance data, Romanosky et al. (2019) analysed the underwriting process for cyber insurance and revealed how cyber insurers understand and assess cyber risks. For this research, they examined 235 publicly available American cyber insurance policies and looked at three components (coverage, application questionnaires and pricing). The authors found that many insurers used very simple, flat-rate pricing (based on a single calculation of expected loss), while others used more parameters in the calculation, such as the asset value of the company (or company revenue), standard insurance metrics (e.g. deductible, limits) and the industry. This is in keeping with Eling (2020), who states that more data could help to measure cyber risk more accurately and thus make it more insurable. Similar research on cyber insurance and data was conducted by Nurse et al. (2020). The authors examined cyber insurance practitioners' perceptions and the challenges they face in collecting and using data.
In addition, they identified gaps where further data is needed. The authors concluded that cyber insurance is still in its infancy and that several questions remain unanswered (for example, cyber valuation, risk calculation and recovery). They also pointed out that a better understanding of data collection and use in cyber insurance would be invaluable for future research and practice. Bessy-Roland et al. (2021) came to a similar conclusion. They proposed a multivariate Hawkes framework to model and predict the frequency of cyberattacks, using a public dataset with characteristics of data breaches affecting U.S. industry. In their conclusion, the authors argue that although an insurer has better knowledge of its own cyber losses, this knowledge is based on a small dataset, so combining it with external data sources seems essential to improve the assessment of cyber risks.
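To make the idea of a multivariate Hawkes model concrete, the sketch below computes the conditional intensity of each component, i.e. the instantaneous expected event rate given the events observed so far. It assumes exponential excitation kernels with a single shared decay rate β, a common textbook choice; this is not necessarily the specification fitted by Bessy-Roland et al. (2021), and the function name and all parameter values are hypothetical:

$$\lambda_i(t) = \mu_i + \sum_{j}\sum_{t_k^j < t} \alpha_{ij}\, e^{-\beta (t - t_k^j)}$$

```python
import math


def hawkes_intensity(t, baseline, alpha, beta, events):
    """Conditional intensity lambda_i(t) of every component i of a
    multivariate Hawkes process with exponential kernels.

    baseline: mu_i, the background event rate of component i
    alpha:    alpha[i][j], the jump in component i caused by an event in j
    beta:     decay rate of the excitation (shared by all pairs here)
    events:   iterable of (event_time, component_index) pairs
    """
    intensity = list(baseline)
    for s, j in events:
        if s < t:  # only strictly earlier events excite the process
            decay = math.exp(-beta * (t - s))
            for i in range(len(intensity)):
                intensity[i] += alpha[i][j] * decay
    return intensity


# Hypothetical example: components 0 and 1 could stand for two breach types.
# One type-0 event at time 1.0 raises both intensities at time 2.0.
mu = [0.5, 0.2]
alpha = [[0.3, 0.1],
         [0.2, 0.4]]
print(hawkes_intensity(2.0, mu, alpha, beta=1.0, events=[(1.0, 0)]))  # approx. [0.610, 0.274]
```

Each past event adds a bump that decays over time, and the off-diagonal entries of `alpha` let one event type raise the rate of another; this self- and cross-excitation is what motivates Hawkes processes for event series that arrive in bursts.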

Several systematic reviews have been published in the area of cybersecurity (Kruse et al. 2017; Lee et al. 2020; Loukas et al. 2013; Ulven and Wangen 2021). In these papers, the authors concentrated on a specific area or sector in the context of cybersecurity. This paper adds to this extant literature by focusing on data availability and its importance to risk management and insurance stakeholders. With a focus on healthcare and cybersecurity, Kruse et al. (2017) conducted a systematic literature review. The authors identified 472 articles with the keywords ‘cybersecurity and healthcare’ or ‘ransomware’ in the databases Cumulative Index of Nursing and Allied Health Literature, PubMed and ProQuest. Articles were eligible for this review if they satisfied three criteria: (1) they were published between 2006 and 2016, (2) the full-text version of the article was available, and (3) the article was published in a peer-reviewed or scholarly journal. The authors found that technological development and federal policies (in the U.S.) are the main factors exposing the health sector to cyber risks. Loukas et al. (2013) conducted a review focusing on cyber risks and cybersecurity in emergency management. The authors provided an overview of cyber risks in the communication, sensor, information management and vehicle technologies used in emergency management and showed areas for which the literature still offers no solution. Similarly, Ulven and Wangen (2021) reviewed the literature on cybersecurity risks in higher education institutions, using the keywords ‘cyber’, ‘information threats’ or ‘vulnerability’ in connection with the terms ‘higher education’, ‘university’ or ‘academia’. A similar literature review with a focus on Internet of Things (IoT) cybersecurity was conducted by Lee et al. (2020).
The review revealed that qualitative approaches focus on high-level frameworks, and quantitative approaches to cybersecurity risk management focus on risk assessment and quantification of cyberattacks and impacts. In addition, the findings presented a four-step IoT cyber risk management framework that identifies, quantifies and prioritises cyber risks.

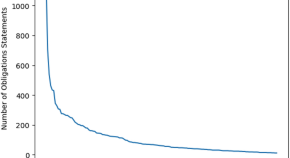

Datasets are an essential part of cybersecurity research, as the following works underline. Ilhan Firat et al. (2021) examined various cybersecurity datasets in detail. The study was motivated by the fact that, with the proliferation of the internet and smart technologies, the modes of cyberattack are also evolving. To prevent such attacks, however, they must first be detected; the dissemination and further development of cybersecurity datasets is therefore critical. In their work, the authors reviewed studies of datasets used in intrusion detection systems. Khraisat et al. (2019) also identified a need for new datasets in the context of cybersecurity. The researchers presented a taxonomy of current intrusion detection systems, a comprehensive review of notable recent work, and an overview of the datasets commonly used for assessment purposes. In their conclusion, the authors noted that new datasets are needed because most machine-learning techniques are trained and evaluated on old datasets. These datasets do not contain new and comprehensive information and are partly derived from datasets from 1999. The authors noted that the core of this issue is the availability and quality of new public datasets. How data are made available, used, created and shared was also investigated by Zheng et al. (2018). The researchers analysed 965 cybersecurity research papers published between 2012 and 2016. They created a taxonomy of the types of data that are created and shared and then analysed the data collected via datasets. The researchers concluded that while datasets are recognised as valuable for cybersecurity research, the proportion of publicly available datasets is limited.

The main contributions of this review and what differentiates it from previous studies can be summarised as follows. First, as far as we can tell, it is the first work to summarise all available datasets on cyber risk and cybersecurity in the context of a systematic review and present them to the scientific community and cyber insurance and cybersecurity stakeholders. Second, we investigated, analysed, and made available the datasets to support efficient and timely progress in cyber risk research. And third, we enable comparability of datasets so that the appropriate dataset can be selected depending on the research area.

Methodology

Process and eligibility criteria

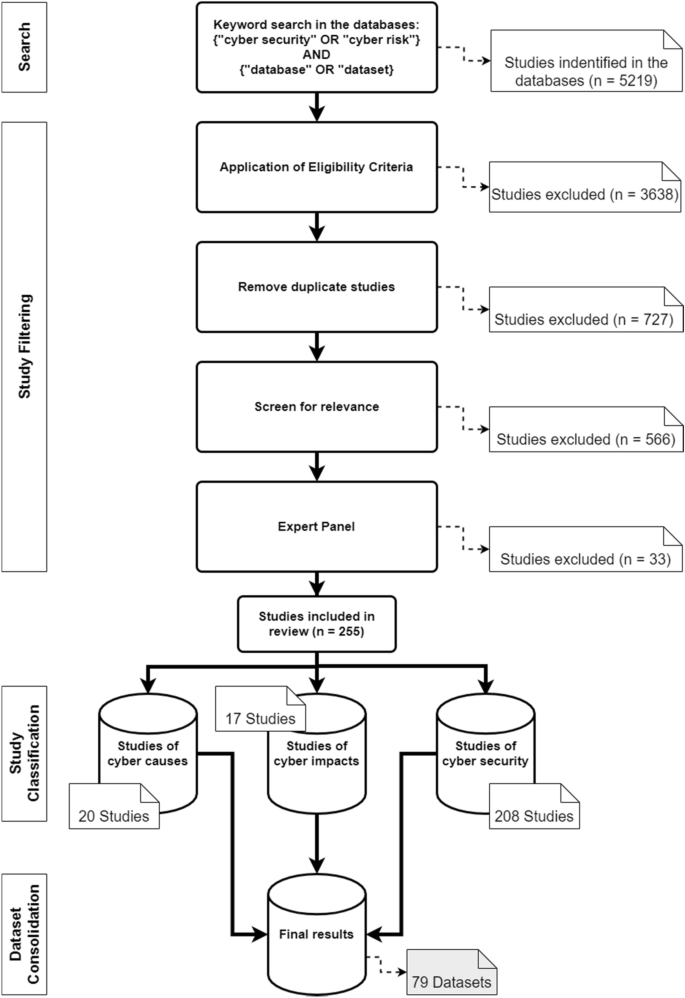

The structure of this systematic review is inspired by the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) framework (Page et al. 2021), and the search was conducted from 3 to 10 May 2021. Because cyber risks and their countermeasures develop continuously, only articles published in the last 10 years were considered. In addition, only articles written in English and published in peer-reviewed journals were included. As a final criterion, only articles that make use of one or more cybersecurity or cyber risk datasets met the inclusion criteria. Specifically, these studies presented new or existing datasets, used them to develop methods or to verify new results, or analysed them in an economic context and pointed out their effects. The criterion was fulfilled if the abstract clearly stated that one or more datasets were used. A detailed explanation of this selection criterion can be found in the ‘Study selection’ section.
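The inclusion criteria above can be read as a conjunction of filters that a search hit must pass. A minimal sketch in Python under stated assumptions: the `Record` fields are hypothetical, ‘the last 10 years’ is taken to mean publication in 2011 or later relative to the May 2021 search, and the keyword test on the abstract is only a crude stand-in for the reviewers' judgement, which the source does not describe as automated.

```python
from dataclasses import dataclass

# Assumption: "the last 10 years" relative to the May 2021 search.
EARLIEST_YEAR = 2011
# Hypothetical keyword proxy for "the abstract clearly states a dataset was used".
DATASET_TERMS = ("dataset", "data set")


@dataclass
class Record:
    """One search hit; field names are illustrative, not a database schema."""
    title: str
    year: int
    language: str
    peer_reviewed_journal: bool
    abstract: str


def is_eligible(rec: Record) -> bool:
    """True only if every inclusion criterion holds."""
    mentions_dataset = any(term in rec.abstract.lower() for term in DATASET_TERMS)
    return (rec.year >= EARLIEST_YEAR
            and rec.language == "English"
            and rec.peer_reviewed_journal
            and mentions_dataset)


def screen(records):
    """Keep the records that pass all criteria, preserving their order."""
    return [rec for rec in records if is_eligible(rec)]


hits = [
    Record("A", 2019, "English", True, "We release a new dataset of breaches."),
    Record("B", 2008, "English", True, "We analyse a data set of attacks."),
    Record("C", 2020, "German", True, "Wir nutzen einen Datensatz."),
    Record("D", 2020, "English", False, "Conference paper using a dataset."),
    Record("E", 2018, "English", True, "A conceptual framework for cyber risk."),
]
print([rec.title for rec in screen(hits)])  # ['A']
```

Each hypothetical record fails exactly one criterion except ‘A’, which makes it easy to see which filter removes which hit.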

Information sources

To cover a complete spectrum of literature, various databases were queried to collect relevant literature on cybersecurity and cyber risks. Because related articles are spread across multiple databases, the literature search was limited, for simplicity, to the following four databases: IEEE Xplore, Scopus, SpringerLink and Web of Science. This is similar to other literature reviews addressing cyber risks or cybersecurity, including Sardi et al. (2021), Franke and Brynielsson (2014), Lagerström (2019), Eling and Schnell (2016) and Eling (2020). In this paper, all databases used in the aforementioned works were considered. However, only two of these studies used all of the databases listed. The IEEE Xplore database contains electrical engineering, computer science and electronics work from over 200 journals and three million conference papers (IEEE 2021). Scopus includes 23,400 peer-reviewed journals from more than 5000 international publishers in science, engineering, medicine, social sciences and humanities (Scopus 2021). SpringerLink contains 3742 journals and indexes over 10 million scientific documents (SpringerLink 2021). Finally, Web of Science indexes over 9200 journals across scientific disciplines (Science 2021).