
Data Interpretation – Process, Methods and Questions

Data Interpretation

Definition:

Data interpretation refers to the process of making sense of data by analyzing and drawing conclusions from it. It involves examining data in order to identify patterns, relationships, and trends that can help explain the underlying phenomena being studied. Data interpretation can be used to make informed decisions and solve problems across a wide range of fields, including business, science, and social sciences.

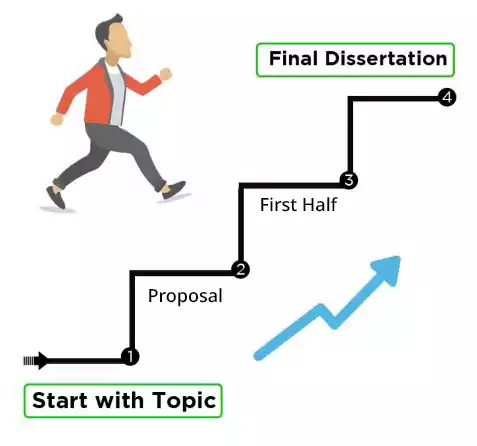

Data Interpretation Process

Here are the steps involved in the data interpretation process:

- Define the research question: The first step in data interpretation is to clearly define the research question. This will help you to focus your analysis and ensure that you are interpreting the data in a way that is relevant to your research objectives.

- Collect the data: The next step is to collect the data. This can be done through a variety of methods such as surveys, interviews, observation, or secondary data sources.

- Clean and organize the data: Once the data has been collected, it is important to clean and organize it. This involves checking for errors, inconsistencies, and missing data. Data cleaning can be a time-consuming process, but it is essential to ensure that the data is accurate and reliable.

- Analyze the data: The next step is to analyze the data. This can involve using statistical software or other tools to calculate summary statistics, create graphs and charts, and identify patterns in the data.

- Interpret the results: Once the data has been analyzed, it is important to interpret the results. This involves looking for patterns, trends, and relationships in the data. It also involves drawing conclusions based on the results of the analysis.

- Communicate the findings: The final step is to communicate the findings. This can involve creating reports, presentations, or visualizations that summarize the key findings of the analysis. It is important to communicate the findings in a way that is clear and concise, and that is tailored to the audience’s needs.
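The clean, analyze and interpret steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the survey records, the 1 to 5 score range and the 3.5 "satisfied" threshold are all hypothetical, and the function names `clean`, `analyze` and `interpret` are illustrative rather than part of any library.

```python
from statistics import mean, median

# Hypothetical raw survey responses: (respondent_id, satisfaction score as text).
RAW = [("r1", "4"), ("r2", "5"), ("r3", ""), ("r4", "five"), ("r5", "3"), ("r2", "5")]

def clean(records):
    """Drop duplicate respondents (keeping the first record), missing values,
    and non-numeric or out-of-range scores."""
    seen, cleaned = set(), []
    for rid, score in records:
        if rid in seen:
            continue  # duplicate respondent
        seen.add(rid)
        if score.strip().isdigit() and 1 <= int(score) <= 5:
            cleaned.append(int(score))
    return cleaned

def analyze(scores):
    """Compute simple summary statistics."""
    return {"n": len(scores), "mean": mean(scores), "median": median(scores)}

def interpret(summary, threshold=3.5):
    """Turn the summary into a plain-language finding (threshold is an assumption)."""
    verdict = "satisfied" if summary["mean"] >= threshold else "not clearly satisfied"
    return (f"Based on {summary['n']} valid responses, customers appear "
            f"{verdict} (mean {summary['mean']:.2f}).")

scores = clean(RAW)
summary = analyze(scores)
print(interpret(summary))
```

Keeping each step in its own function mirrors the process: if cleaning rules change, the analysis and interpretation steps do not need to.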

Types of Data Interpretation

There are various types of data interpretation techniques used for analyzing and making sense of data. Here are some of the most common types:

Descriptive Interpretation

This type of interpretation involves summarizing and describing the key features of the data. This can involve calculating measures of central tendency (such as mean, median, and mode), measures of dispersion (such as range, variance, and standard deviation), and creating visualizations such as histograms, box plots, and scatterplots.
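As a small illustration of these descriptive measures, Python's standard `statistics` module computes them directly. The sales figures are hypothetical, and the sample (n - 1) versions of variance and standard deviation are used.

```python
import statistics

# Hypothetical sample: daily sales counts for one week.
sales = [12, 15, 15, 18, 20, 22, 45]

summary = {
    "mean": statistics.mean(sales),
    "median": statistics.median(sales),
    "mode": statistics.mode(sales),
    "range": max(sales) - min(sales),
    "variance": statistics.variance(sales),  # sample variance (n - 1 denominator)
    "std_dev": statistics.stdev(sales),      # sample standard deviation
}
for name, value in summary.items():
    print(f"{name:>8}: {value:.2f}")
```

The gap between the mean (21) and the median (18) is itself an interpretive signal: the single large value of 45 pulls the mean upward, so the median is the more representative "typical day" here.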

Inferential Interpretation

This type of interpretation involves making inferences about a larger population based on a sample of the data. This can involve hypothesis testing, where you test a hypothesis about a population parameter using sample data, or confidence interval estimation, where you estimate a range of values for a population parameter based on sample data.
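Confidence interval estimation can be sketched as follows. This assumes a normal (z) approximation, which is reasonable for larger samples; for small samples a t critical value is more appropriate. The sleep-hours sample and the function name `mean_confidence_interval` are hypothetical.

```python
import math
from statistics import NormalDist, mean, stdev

def mean_confidence_interval(sample, confidence=0.95):
    """Approximate CI for a population mean using the normal (z) approximation."""
    n = len(sample)
    m = mean(sample)
    se = stdev(sample) / math.sqrt(n)                # standard error of the mean
    z = NormalDist().inv_cdf(0.5 + confidence / 2)   # about 1.96 for 95%
    return m - z * se, m + z * se

# Hypothetical sample: hours of sleep reported by 50 survey respondents.
sample = [6.5, 7.0, 7.5, 8.0, 6.0] * 10
low, high = mean_confidence_interval(sample)
print(f"95% CI for mean sleep: {low:.2f} to {high:.2f} hours")
```

The interval is centred on the sample mean; asking for more confidence (for example 0.99) produces a wider interval.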

Predictive Interpretation

This type of interpretation involves using data to make predictions about future outcomes. This can involve building predictive models using statistical techniques such as regression analysis, time-series analysis, or machine learning algorithms.
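A minimal predictive model is a straight-line trend fitted by ordinary least squares and then extrapolated one period ahead. The demand figures and the helper name `fit_line` are hypothetical, and extrapolation assumes the past trend continues.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope

# Hypothetical monthly demand (units) for months 1..6.
months = [1, 2, 3, 4, 5, 6]
demand = [110, 120, 128, 141, 150, 161]
intercept, slope = fit_line(months, demand)
forecast = intercept + slope * 7  # extrapolate one month ahead
print(f"trend: {slope:.1f} units/month, month-7 forecast: {forecast:.0f}")
```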

Exploratory Interpretation

This type of interpretation involves exploring the data to identify patterns and relationships that were not previously known. This can involve data mining techniques such as clustering analysis, principal component analysis, or association rule mining.
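Clustering analysis can be illustrated with a basic one-dimensional k-means (Lloyd's algorithm). This is a teaching sketch, not a production implementation: the customer spend values and the function name `kmeans_1d` are hypothetical, and it assumes 2 <= k <= the number of values.

```python
def kmeans_1d(values, k, iterations=100):
    """Basic k-means (Lloyd's algorithm) for one-dimensional data; assumes 2 <= k <= len(values)."""
    values = sorted(values)
    # Start from k evenly spaced values of the sorted data.
    centroids = [values[i * (len(values) - 1) // (k - 1)] for i in range(k)]
    clusters = []
    for _ in range(iterations):
        # Assignment step: put each value in the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda c: abs(v - centroids[c]))
            clusters[nearest].append(v)
        # Update step: move each centroid to the mean of its cluster (keep it if empty).
        new_centroids = [sum(c) / len(c) if c else centroids[i] for i, c in enumerate(clusters)]
        if new_centroids == centroids:
            break  # converged: assignments will no longer change
        centroids = new_centroids
    return centroids, clusters

# Hypothetical annual spend (in dollars) for seven customers.
spend = [120, 950, 135, 160, 900, 150, 1010]
centroids, clusters = kmeans_1d(spend, k=2)
print(centroids)
print(clusters)
```

The algorithm separates low spenders from high spenders without being told those groups exist, which is the kind of previously unknown pattern exploratory interpretation looks for.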

Causal Interpretation

This type of interpretation involves identifying causal relationships between variables in the data. This can involve experimental designs, such as randomized controlled trials, or observational studies analyzed with methods such as regression analysis or propensity score matching.
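The key ingredient of a randomized controlled trial is random assignment: because chance alone decides who is treated, the groups are balanced on average in both observed and unobserved characteristics, so a later difference in outcomes can be attributed to the treatment. A minimal sketch, with hypothetical participant IDs and a hypothetical helper `randomize`:

```python
import random

def randomize(participants, seed=None):
    """Randomly split participants into equal-sized treatment and control groups."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]

participants = [f"p{i}" for i in range(1, 11)]  # hypothetical participant IDs
treatment, control = randomize(participants, seed=42)
print("treatment:", treatment)
print("control:  ", control)
```

Fixing the seed makes the assignment reproducible for auditing; in a real trial the seed would be recorded before the study begins.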

Data Interpretation Methods

There are various methods for data interpretation that can be used to analyze and make sense of data. Here are some of the most common methods:

Statistical Analysis

This method involves using statistical techniques to analyze the data. Statistical analysis can involve descriptive statistics (such as measures of central tendency and dispersion), inferential statistics (such as hypothesis testing and confidence interval estimation), and predictive modeling (such as regression analysis and time-series analysis).

Data Visualization

This method involves using visual representations of the data to identify patterns and trends. Data visualization can involve creating charts, graphs, and other visualizations, such as heat maps or scatterplots.

Text Analysis

This method involves analyzing text data, such as survey responses or social media posts, to identify patterns and themes. Text analysis can involve techniques such as sentiment analysis, topic modeling, and natural language processing.
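Sentiment analysis in its simplest form counts positive and negative words. The tiny word lists below are hypothetical; real analyses use much larger, validated lexicons or trained models, and this approach ignores negation and sarcasm.

```python
import re

# Tiny hypothetical sentiment lexicon.
POSITIVE = {"good", "great", "love", "fast", "helpful"}
NEGATIVE = {"bad", "slow", "broken", "hate", "rude"}

def sentiment(text):
    """Score text as (#positive words - #negative words) and label it."""
    words = re.findall(r"[a-z']+", text.lower())
    # Summing booleans counts the words found in each lexicon.
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    label = "positive" if score > 0 else "negative" if score < 0 else "neutral"
    return score, label

responses = [
    "Great service and fast delivery, love it",
    "The app is slow and the checkout is broken",
    "Delivery arrived on Tuesday",
]
for r in responses:
    print(sentiment(r), "-", r)
```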

Machine Learning

This method involves using algorithms to identify patterns in the data and make predictions or classifications. Machine learning can involve techniques such as decision trees, neural networks, and random forests.

Qualitative Analysis

This method involves analyzing non-numeric data, such as interviews or focus group discussions, to identify themes and patterns. Qualitative analysis can involve techniques such as content analysis, grounded theory, and narrative analysis.

Geospatial Analysis

This method involves analyzing spatial data, such as maps or GPS coordinates, to identify patterns and relationships. Geospatial analysis can involve techniques such as spatial autocorrelation, hot spot analysis, and clustering.
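Spatial autocorrelation is commonly measured with global Moran's I, which compares each area's deviation from the mean with its neighbours' deviations. The sketch below assumes a simple layout (six hypothetical blocks in a row, each neighbouring the next) and binary weights; the helper names are illustrative.

```python
def morans_i(values, weights):
    """Global Moran's I for values with a spatial weights matrix weights[i][j]."""
    n = len(values)
    avg = sum(values) / n
    dev = [v - avg for v in values]
    w_total = sum(sum(row) for row in weights)
    num = sum(weights[i][j] * dev[i] * dev[j] for i in range(n) for j in range(n))
    den = sum(d * d for d in dev)
    return (n / w_total) * (num / den)

def line_adjacency(n):
    """Binary contiguity weights for n areas in a row: each area neighbours the next."""
    return [[1 if abs(i - j) == 1 else 0 for j in range(n)] for i in range(n)]

# Hypothetical incident counts for six adjacent blocks along one street.
clustered = [1, 2, 3, 4, 5, 6]
alternating = [1, 6, 1, 6, 1, 6]
w = line_adjacency(6)
print(round(morans_i(clustered, w), 3))
print(round(morans_i(alternating, w), 3))
```

Positive values indicate that similar values sit next to each other (clustering); negative values indicate that neighbours tend to differ. Under no spatial autocorrelation the expected value is -1/(n - 1), not exactly zero.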

Applications of Data Interpretation

Data interpretation has a wide range of applications across different fields, including business, healthcare, education, social sciences, and more. Here are some examples of how data interpretation is used in different applications:

- Business: Data interpretation is widely used in business to inform decision-making, identify market trends, and optimize operations. For example, businesses may analyze sales data to identify the most popular products or customer demographics, or use predictive modeling to forecast demand and adjust pricing accordingly.

- Healthcare: Data interpretation is critical in healthcare for identifying disease patterns, evaluating treatment effectiveness, and improving patient outcomes. For example, healthcare providers may use electronic health records to analyze patient data and identify risk factors for certain diseases or conditions.

- Education: Data interpretation is used in education to assess student performance, identify areas for improvement, and evaluate the effectiveness of instructional methods. For example, schools may analyze test scores to identify students who are struggling and provide targeted interventions to improve their performance.

- Social sciences: Data interpretation is used in social sciences to understand human behavior, attitudes, and perceptions. For example, researchers may analyze survey data to identify patterns in public opinion or use qualitative analysis to understand the experiences of marginalized communities.

- Sports: Data interpretation is increasingly used in sports to inform strategy and improve performance. For example, coaches may analyze performance data to identify areas for improvement or use predictive modeling to assess the likelihood of injuries or other risks.

When to use Data Interpretation

Data interpretation is used to make sense of complex data and to draw conclusions from it. It is particularly useful when working with large datasets or when trying to identify patterns or trends in the data. Data interpretation can be used in a variety of settings, including scientific research, business analysis, and public policy.

In scientific research, data interpretation is often used to draw conclusions from experiments or studies. Researchers use statistical analysis and data visualization techniques to interpret their data and to identify patterns or relationships between variables. This can help them to understand the underlying mechanisms of their research and to develop new hypotheses.

In business analysis, data interpretation is used to analyze market trends and consumer behavior. Companies can use data interpretation to identify patterns in customer buying habits, to understand market trends, and to develop marketing strategies that target specific customer segments.

In public policy, data interpretation is used to inform decision-making and to evaluate the effectiveness of policies and programs. Governments and other organizations use data interpretation to track the impact of policies and programs over time, to identify areas where improvements are needed, and to develop evidence-based policy recommendations.

In general, data interpretation is useful whenever large amounts of data need to be analyzed and understood in order to make informed decisions.

Data Interpretation Examples

Here are some real-world examples of data interpretation:

- Social media analytics: Social media platforms generate vast amounts of data every second, and businesses can use this data to analyze customer behavior, track sentiment, and identify trends. Data interpretation in social media analytics involves analyzing data in real time to identify patterns and trends that can help businesses make informed decisions about marketing strategies and customer engagement.

- Healthcare analytics: Healthcare organizations use data interpretation to analyze patient data, track outcomes, and identify areas where improvements are needed. Real-time data interpretation can help healthcare providers make quick decisions about patient care, such as identifying patients who are at risk of developing complications or adverse events.

- Financial analysis: Real-time data interpretation is essential for financial analysis, where traders and analysts need to make quick decisions based on changing market conditions. Financial analysts use data interpretation to track market trends, identify opportunities for investment, and develop trading strategies.

- Environmental monitoring: Real-time data interpretation is important for environmental monitoring, where data is collected from various sources such as satellites, sensors, and weather stations. Data interpretation helps to identify patterns and trends that can help predict natural disasters, track changes in the environment, and inform decision-making about environmental policies.

- Traffic management: Real-time data interpretation is used for traffic management, where traffic sensors collect data on traffic flow, congestion, and accidents. Data interpretation helps to identify areas where traffic congestion is high, and helps traffic management authorities make decisions about road maintenance, traffic signal timing, and other strategies to improve traffic flow.

Data Interpretation Questions

Sample data interpretation questions:

- Medical: What is the correlation between a patient’s age and their risk of developing a certain disease?

- Environmental Science: What is the trend in the concentration of a certain pollutant in a particular body of water over the past 10 years?

- Finance: What is the correlation between a company’s stock price and its quarterly revenue?

- Education: What is the trend in graduation rates for a particular high school over the past 5 years?

- Marketing: What is the correlation between a company’s advertising budget and its sales revenue?

- Sports: What is the trend in the number of home runs hit by a particular baseball player over the past 3 seasons?

- Social Science: What is the correlation between a person’s level of education and their income level?

In order to answer these questions, you would need to analyze and interpret the data using statistical methods, graphs, and other visualization tools.
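Several of these questions ask for a correlation. As a minimal sketch, the Pearson correlation coefficient can be computed from deviations around the mean; the advertising and sales figures below are hypothetical, and Python 3.10+ also provides `statistics.correlation` for the same quantity.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical quarterly figures (in $ thousands) for the marketing question.
ad_budget = [10, 12, 15, 18, 20]
sales = [100, 110, 130, 150, 155]
r = pearson_r(ad_budget, sales)
print(f"r = {r:.3f}")
```

A value of r close to 1 indicates a strong positive linear relationship, but on its own it does not show that advertising causes the sales increase.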

Purpose of Data Interpretation

The purpose of data interpretation is to make sense of complex data by analyzing and drawing insights from it. The process of data interpretation involves identifying patterns and trends, making comparisons, and drawing conclusions based on the data. The ultimate goal of data interpretation is to use the insights gained from the analysis to inform decision-making.

Data interpretation is important because it allows individuals and organizations to:

- Understand complex data: Data interpretation helps individuals and organizations to make sense of complex data sets that would otherwise be difficult to understand.

- Identify patterns and trends: Data interpretation helps to identify patterns and trends in data, which can reveal important insights about the underlying processes and relationships.

- Make informed decisions: Data interpretation provides individuals and organizations with the information they need to make informed decisions based on the insights gained from the data analysis.

- Evaluate performance: Data interpretation helps individuals and organizations to evaluate their performance over time and to identify areas where improvements can be made.

- Communicate findings: Data interpretation allows individuals and organizations to communicate their findings to others in a clear and concise manner, which is essential for informing stakeholders and making changes based on the insights gained from the analysis.

Characteristics of Data Interpretation

Here are some characteristics of data interpretation:

- Contextual: Data interpretation is always contextual, meaning that the interpretation of data is dependent on the context in which it is analyzed. The same data may have different meanings depending on the context in which it is analyzed.

- Iterative: Data interpretation is an iterative process, meaning that it often involves multiple rounds of analysis and refinement as more data becomes available or as new insights are gained from the analysis.

- Subjective: Data interpretation is often subjective, as it involves the interpretation of data by individuals who may have different perspectives and biases. It is important to acknowledge and address these biases when interpreting data.

- Analytical: Data interpretation involves the use of analytical tools and techniques to analyze and draw insights from data. These may include statistical analysis, data visualization, and other data analysis methods.

- Evidence-based: Data interpretation is evidence-based, meaning that it is based on the data and the insights gained from the analysis. It is important to ensure that the data used in the analysis is accurate, relevant, and reliable.

- Actionable: Data interpretation is actionable, meaning that it provides insights that can be used to inform decision-making and to drive action. The ultimate goal of data interpretation is to use the insights gained from the analysis to improve performance or to achieve specific goals.

Advantages of Data Interpretation

Data interpretation has several advantages, including:

- Improved decision-making: Data interpretation provides insights that can be used to inform decision-making. By analyzing data and drawing insights from it, individuals and organizations can make informed decisions based on evidence rather than intuition.

- Identification of patterns and trends: Data interpretation helps to identify patterns and trends in data, which can reveal important insights about the underlying processes and relationships. This information can be used to improve performance or to achieve specific goals.

- Evaluation of performance: Data interpretation helps individuals and organizations to evaluate their performance over time and to identify areas where improvements can be made. By analyzing data, organizations can identify strengths and weaknesses and make changes to improve their performance.

- Communication of findings: Data interpretation allows individuals and organizations to communicate their findings to others in a clear and concise manner, which is essential for informing stakeholders and making changes based on the insights gained from the analysis.

- Better resource allocation: Data interpretation can help organizations allocate resources more efficiently by identifying areas where resources are needed most. By analyzing data, organizations can identify areas where resources are being underutilized or where additional resources are needed to improve performance.

- Improved competitiveness: Data interpretation can give organizations a competitive advantage by providing insights that help to improve performance, reduce costs, or identify new opportunities for growth.

Limitations of Data Interpretation

Data interpretation has some limitations, including:

- Limited by the quality of data: The quality of data used in data interpretation can greatly impact the accuracy of the insights gained from the analysis. Poor quality data can lead to incorrect conclusions and decisions.

- Subjectivity: Data interpretation can be subjective, as it involves the interpretation of data by individuals who may have different perspectives and biases. This can lead to different interpretations of the same data.

- Limited by analytical tools: The analytical tools and techniques used in data interpretation can also limit the accuracy of the insights gained from the analysis. Different analytical tools may yield different results, and some tools may not be suitable for certain types of data.

- Time-consuming: Data interpretation can be a time-consuming process, particularly for large and complex data sets. This can make it difficult to quickly make decisions based on the insights gained from the analysis.

- Incomplete data: Data interpretation can be limited by incomplete data sets, which may not provide a complete picture of the situation being analyzed. Incomplete data can lead to incorrect conclusions and decisions.

- Limited by context: Because interpretation depends on the context in which data is analyzed, the same data can support different conclusions in different settings, and findings from one context may not transfer to another.

Difference between Data Interpretation and Data Analysis

Data interpretation and data analysis are two different but closely related processes in data-driven decision-making.

Data analysis refers to the process of inspecting and examining data using statistical and computational methods to derive insights and conclusions from it. It involves cleaning, transforming, and modeling the data to uncover patterns, relationships, and trends that can help in understanding the underlying phenomena.

Data interpretation, on the other hand, refers to the process of making sense of the findings from the data analysis by contextualizing them within the larger problem domain. It involves identifying the key takeaways from the data analysis, assessing their relevance and significance to the problem at hand, and communicating the insights in a clear and actionable manner.

In short, data analysis is about uncovering insights from the data, while data interpretation is about making sense of those insights and translating them into actionable recommendations.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

8 Types of Data Analysis

The different types of data analysis include descriptive, diagnostic, exploratory, inferential, predictive, causal, mechanistic and prescriptive. Here’s what you need to know about each one.

Data analysis is an aspect of data science and data analytics that is all about analyzing data for different kinds of purposes. The data analysis process involves inspecting, cleaning, transforming and modeling data to draw useful insights from it.

What Are the Different Types of Data Analysis?

- Descriptive analysis

- Diagnostic analysis

- Exploratory analysis

- Inferential analysis

- Predictive analysis

- Causal analysis

- Mechanistic analysis

- Prescriptive analysis

With its multiple facets, methodologies and techniques, data analysis is used in a variety of fields, including business, science and social science, among others. As businesses thrive under the influence of technological advancements in data analytics, data analysis plays a huge role in decision-making, providing a better, faster and more efficacious system that minimizes risks and reduces human biases.

That said, there are different kinds of data analysis, each suited to different goals. We’ll examine each one below.

Two Camps of Data Analysis

Data analysis can be divided into two camps, according to the book R for Data Science:

- Hypothesis Generation — This involves looking deeply at the data and combining your domain knowledge to generate hypotheses about why the data behaves the way it does.

- Hypothesis Confirmation — This involves using a precise mathematical model to generate falsifiable predictions with statistical sophistication to confirm your prior hypotheses.

Types of Data Analysis

Data analysis can be separated and organized into types, arranged in increasing order of complexity.

1. Descriptive Analysis

The goal of descriptive analysis is to describe or summarize a set of data. Here’s what you need to know:

- Descriptive analysis is the very first analysis performed in the data analysis process.

- It generates simple summaries about samples and measurements.

- It involves common, descriptive statistics like measures of central tendency, variability, frequency and position.

Descriptive Analysis Example

Take the Covid-19 statistics page on Google, for example. The line graph is a pure summary of the cases/deaths, a presentation and description of the population of a particular country infected by the virus.

Descriptive analysis is the first step in analysis where you summarize and describe the data you have using descriptive statistics, and the result is a simple presentation of your data.
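The bullet list above also mentions measures of frequency and position. A minimal sketch using Python's standard library, with hypothetical respondent ages: a frequency table by decade and quartile cut points. Note that quartile values depend on the interpolation method; the "inclusive" method is used here.

```python
from collections import Counter
from statistics import quantiles

# Hypothetical ages of ten survey respondents.
ages = [22, 25, 25, 30, 31, 35, 40, 41, 45, 60]

# Frequency: how many respondents fall in each decade band (20s, 30s, ...).
frequency = Counter(age // 10 * 10 for age in ages)

# Position: quartile cut points (25th, 50th, 75th percentiles).
q1, q2, q3 = quantiles(ages, n=4, method="inclusive")

print("frequency by decade:", dict(sorted(frequency.items())))
print(f"quartiles: Q1={q1}, median={q2}, Q3={q3}, IQR={q3 - q1}")
```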

2. Diagnostic Analysis

Diagnostic analysis seeks to answer the question “Why did this happen?” by taking a more in-depth look at data to uncover subtle patterns. Here’s what you need to know:

- Diagnostic analysis typically comes after descriptive analysis, taking initial findings and investigating why certain patterns in data happen.

- Diagnostic analysis may involve analyzing other related data sources, including past data, to reveal more insights into current data trends.

- Diagnostic analysis is ideal for further exploring patterns in data to explain anomalies.

Diagnostic Analysis Example

A footwear store wants to review its website traffic levels over the previous 12 months. Upon compiling and assessing the data, the company’s marketing team finds that June experienced above-average levels of traffic while July and August witnessed slightly lower levels of traffic.

To find out why this difference occurred, the marketing team takes a deeper look. Team members break down the data to focus on specific categories of footwear. They discover that in June, pages featuring sandals and other beach-related footwear received a high number of views, while these numbers dropped in July and August.

Marketers may also review other factors like seasonal changes and company sales events to see if other variables could have contributed to this trend.
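The drill-down in this example can be sketched as two views of the same records: totals by month (the descriptive view) and views per category within each month (the diagnostic view). The page-view numbers and helper names below are hypothetical.

```python
from collections import defaultdict

# Hypothetical page-view records: (month, footwear category, views).
page_views = [
    ("Jun", "sandals", 9000), ("Jun", "sneakers", 4000), ("Jun", "boots", 1000),
    ("Jul", "sandals", 5000), ("Jul", "sneakers", 4100), ("Jul", "boots", 900),
    ("Aug", "sandals", 4500), ("Aug", "sneakers", 4200), ("Aug", "boots", 1100),
]

def totals_by(records, key_index):
    """Sum views grouped by the field at key_index (0 = month, 1 = category)."""
    totals = defaultdict(int)
    for record in records:
        totals[record[key_index]] += record[2]
    return dict(totals)

def breakdown(records, month):
    """Views per category for a single month."""
    return {cat: views for m, cat, views in records if m == month}

print(totals_by(page_views, 0))  # descriptive view: traffic per month
for month in ("Jun", "Jul", "Aug"):
    print(month, breakdown(page_views, month))  # diagnostic drill-down
```

In this made-up data, the 4,000-view drop from June to July is entirely accounted for by the sandals category, which is the kind of explanation diagnostic analysis aims to surface.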

3. Exploratory Analysis (EDA)

Exploratory analysis involves examining or exploring data and finding relationships between variables that were previously unknown. Here’s what you need to know:

- EDA helps you discover relationships between measures in your data. These relationships are not evidence of causation, as captured by the phrase “Correlation doesn’t imply causation.”

- It’s useful for discovering new connections and forming hypotheses. It drives design planning and data collection.

Exploratory Analysis Example

Climate change is an increasingly important topic as the global temperature has gradually risen over the years. One example of exploratory data analysis on climate change is to take the rise in temperature from 1950 to 2020 alongside measures of human activity and industrialization, and look for relationships in the data. For example, you might track the number of factories, cars on the road and airplane flights over that period to see how each correlates with the rise in temperature.

Exploratory analysis explores data to find relationships between measures without identifying the cause. It’s most useful when formulating hypotheses.

4. Inferential Analysis

Inferential analysis involves using a small sample of data to infer information about a larger population of data.

Statistical modeling itself is about using a small amount of information to extrapolate and generalize to a larger group. Here’s what you need to know:

- Inferential analysis involves using estimates from a sample that is representative of a population, and attaches a measure of uncertainty, such as a standard error, to those estimates.

- The accuracy of inference depends heavily on your sampling scheme. If the sample isn’t representative of the population, the generalization will be inaccurate. This problem is known as sampling bias.

Inferential Analysis Example

The idea of drawing an inference about the population at large from a smaller sample is intuitive. Many statistics you see in the media and on the internet are inferential: estimates about a population based on a small sample. For example, a psychological study on the benefits of sleep might involve 500 participants. At follow-up, participants who slept seven to nine hours reported better overall attention spans and well-being, while those who slept less or more than that range reported reduced attention spans and energy. The 500 participants are a tiny fraction of the 7 billion people in the world, so any conclusion about people in general is an inference about the larger population.

Inferential analysis uses a smaller sample to generalize about a larger group and to generate analysis and predictions.

5. Predictive Analysis

Predictive analysis involves using historical or current data to find patterns and make predictions about the future. Here’s what you need to know:

- The accuracy of the predictions depends on the input variables.

- Accuracy also depends on the types of models. A linear model might work well in some cases, and in other cases it might not.

- Using a variable to predict another one doesn’t denote a causal relationship.

Predictive Analysis Example

The 2020 US election is a popular topic, and many models are built to predict the winning candidate. FiveThirtyEight did this to forecast the 2016 and 2020 elections. Predictive analysis for an election requires input variables such as historical polling data, trends and current polling data in order to return a good prediction. Something as large as an election wouldn’t use just a linear model, but a complex model with certain tunings to best serve its purpose.

Predictive analysis takes data from the past and present to make predictions about the future.

6. Causal Analysis

Causal analysis looks at cause-and-effect relationships between variables and is focused on finding the cause of a correlation. Here’s what you need to know:

- To find the cause, you have to question whether the observed correlations driving your conclusion are valid. Just looking at the surface data won’t help you discover the hidden mechanisms underlying the correlations.

- Causal analysis is applied in randomized studies focused on identifying causation.

- Causal analysis is the gold standard in data analysis and scientific studies where the cause of a phenomenon must be extracted and singled out, like separating wheat from chaff.

- Good data is hard to find and requires expensive research and studies. These studies are analyzed in aggregate (multiple groups), and the observed relationships are just average effects (mean) of the whole population. This means the results might not apply to everyone.

Causal Analysis Example

Say you want to test whether a new drug improves human strength and focus. To do that, you run a randomized controlled trial: participants are randomly assigned to receive either the new drug or a placebo, and both groups complete tests of strength, focus and attention. Comparing the two groups allows you to observe how the drug affects the outcome.

Causal analysis is about finding out the causal relationship between variables, and examining how a change in one variable affects another.
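Once a trial like the drug example has run, one simple way to estimate the effect is the difference in group means, with a permutation test to ask how often random relabelling of the pooled outcomes would produce a difference at least that large. The scores, seed and function names below are hypothetical, and this is one of several valid analyses rather than the method the example prescribes.

```python
import random

def difference_in_means(treated, control):
    """Mean outcome of the treated group minus mean outcome of the control group."""
    return sum(treated) / len(treated) - sum(control) / len(control)

def permutation_p_value(treated, control, n_permutations=10_000, seed=0):
    """Two-sided permutation test for the difference in means."""
    rng = random.Random(seed)
    observed = abs(difference_in_means(treated, control))
    pooled = list(treated) + list(control)
    k = len(treated)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # random relabelling of who was "treated"
        if abs(difference_in_means(pooled[:k], pooled[k:])) >= observed:
            extreme += 1
    return (extreme + 1) / (n_permutations + 1)

# Hypothetical focus-test scores (0-100) from a small randomized trial.
drug = [78, 82, 85, 80, 88, 84]
placebo = [72, 75, 70, 78, 74, 73]
effect = difference_in_means(drug, placebo)
p = permutation_p_value(drug, placebo)
print(f"estimated effect: {effect:.1f} points, permutation p = {p:.4f}")
```

Because assignment was random, a small p-value suggests the difference is unlikely to be due to chance alone; it still reflects an average effect across participants.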

7. Mechanistic Analysis

Mechanistic analysis is used to understand exact changes in variables that lead to other changes in other variables. Here’s what you need to know:

- It’s applied in the physical and engineering sciences, in situations that require high precision and leave little room for error, where the only noise in the data is measurement error.

- It’s designed to understand a biological or behavioral process, the pathophysiology of a disease or the mechanism of action of an intervention.

Mechanistic Analysis Example

Many graduate-level research projects and complex topics are suitable examples, but to put it in simple terms, say an experiment is done to simulate safe and effective nuclear fusion to power the world. A mechanistic analysis of the study would entail precisely controlling and manipulating variables, with highly accurate measurements of both the variables and the desired outcomes. It’s this intricate and meticulous approach to big topics that allows for scientific breakthroughs and the advancement of society.

Mechanistic analysis is in some ways a predictive analysis, but modified to tackle studies that require high precision and meticulous methodologies for physical or engineering science.

8. Prescriptive Analysis

Prescriptive analysis compiles insights from other previous data analyses and determines actions that teams or companies can take to prepare for predicted trends. Here’s what you need to know:

- Prescriptive analysis may come right after predictive analysis, but it may involve combining many different data analyses.

- Companies need advanced technology and plenty of resources to conduct prescriptive analysis. AI systems that process data and adjust automated tasks are an example of the technology required to perform prescriptive analysis.

Prescriptive Analysis Example

Prescriptive analysis is pervasive in everyday life, driving the curated content users consume on social media. On platforms like TikTok and Instagram, algorithms can apply prescriptive analysis to review past content a user has engaged with and the kinds of behaviors they exhibited with specific posts. Based on these factors, an algorithm seeks out similar content that is likely to elicit the same response and recommends it on a user’s personal feed.
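The "review past engagement, recommend similar content" loop described above can be illustrated with a simplified content-based ranking: build a tag profile from posts the user engaged with, then rank candidate posts by cosine similarity to that profile. This is a hypothetical sketch; the tags, post names and functions are made up, and platform algorithms such as TikTok's or Instagram's are far more complex and not public.

```python
import math
from collections import Counter

def cosine(a, b):
    """Cosine similarity between two sparse tag-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def recommend(engaged_posts, candidates, top_n=2):
    """Rank candidate posts by similarity to the tags of posts the user engaged with."""
    profile = Counter(tag for post in engaged_posts for tag in post)
    scored = [(cosine(profile, Counter(tags)), name) for name, tags in candidates.items()]
    return [name for score, name in sorted(scored, reverse=True)[:top_n]]

# Hypothetical engagement history and candidate posts, described by tags.
history = [["cooking", "pasta"], ["cooking", "baking"], ["travel", "italy"]]
candidates = {
    "pasta_tutorial": ["cooking", "pasta", "italy"],
    "bread_recipe": ["cooking", "baking"],
    "car_review": ["cars", "reviews"],
}
print(recommend(history, candidates))
```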

When to Use the Different Types of Data Analysis

- Descriptive analysis summarizes the data at hand and presents your data in a comprehensible way.

- Diagnostic analysis takes a more detailed look at data to reveal why certain patterns occur, making it a good method for explaining anomalies.

- Exploratory data analysis helps you discover correlations and relationships between variables in your data.

- Inferential analysis is for generalizing about a larger population from a smaller sample of data.

- Predictive analysis helps you make predictions about the future with data.

- Causal analysis emphasizes finding the cause of a correlation between variables.

- Mechanistic analysis is for measuring the exact changes in some variables that lead to changes in other variables.

- Prescriptive analysis combines insights from different data analyses to develop a course of action teams and companies can take to capitalize on predicted outcomes.

A few important tips to remember about data analysis include:

- Correlation doesn’t imply causation.

- EDA helps discover new connections and form hypotheses.

- Accuracy of inference depends on the sampling scheme.

- A good prediction depends on the right input variables.

- A simple linear model with enough data usually does the trick.

- Using a variable to predict another doesn’t denote causal relationships.

- Good data is hard to find, and producing it requires expensive research.

- Study results are computed in aggregate: they describe average effects and might not apply to everyone.
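The first tip, that correlation doesn't imply causation, can be demonstrated with a small simulation. In the sketch below a hidden variable `z` drives both `x` and `y`, while `x` has no effect on `y` at all; `x` and `y` still come out clearly correlated (close to 0.5 by construction), and the correlation vanishes once `z`'s contribution is removed. The data are simulated; in real studies a confounder like `z` is usually unobserved.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(xs, ys))
    sx = sum((a - mx) ** 2 for a in xs) ** 0.5
    sy = sum((b - my) ** 2 for b in ys) ** 0.5
    return cov / (sx * sy)


rng = random.Random(42)  # fixed seed so the run is reproducible
n = 10_000
z = [rng.gauss(0, 1) for _ in range(n)]  # hidden confounder
x = [zi + rng.gauss(0, 1) for zi in z]   # x depends only on z
y = [zi + rng.gauss(0, 1) for zi in z]   # y depends only on z, never on x

r_xy = pearson(x, y)  # theoretical value: 0.5
# Remove z's contribution: what is left of x and y is independent noise
r_resid = pearson([a - c for a, c in zip(x, z)], [b - c for b, c in zip(y, z)])
print(round(r_xy, 2), round(r_resid, 2))
```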


Chapter 4 – Data Analysis and Discussion (example)

Disclaimer: This is not a sample of our professional work. The paper has been produced by a student. Opinions, suggestions, recommendations and results in this piece are those of the author and should not be taken as our company’s views.

Type of Academic Paper – Dissertation Chapter

Academic Subject – Marketing

Word Count – 2964 words

Reliability Analysis

Before any further analysis was conducted, the reliability of the data was assessed using Cronbach’s alpha. The reliability analysis was performed on the complete questionnaire data, and Cronbach’s alpha was found to be 0.922, as shown in Table 4.1 below. The complete output of the reliability analysis is given in the appendix.

Table 4.1: Reliability Analysis (N=200)

Cronbach’s alpha values of 0.7 or above are conventionally regarded as acceptable, and values above 0.9 as excellent. The alpha of 0.922 therefore indicates excellent internal consistency across the 29 items of the questionnaire, supporting the use of these items in further analysis.
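For k items, Cronbach’s alpha is α = k/(k - 1) × (1 - Σσᵢ² / σ²_total), where σᵢ² is the variance of item i and σ²_total is the variance of respondents’ total scores. The sketch below computes it in plain Python for a respondents-by-items matrix. It is a minimal illustration of the standard formula, not the SPSS procedure used in the chapter, and the toy response matrix is made up.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a respondents x items matrix given as a list of rows.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    Sample variances are used; the ratio is identical with population variances.
    """
    k = len(responses[0])  # number of items (questions)
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    items = list(zip(*responses))  # transpose: one tuple of answers per item
    sum_item_var = sum(variance(item) for item in items)
    total_scores = [sum(row) for row in responses]  # each respondent's scale total
    return (k / (k - 1)) * (1 - sum_item_var / variance(total_scores))


# Hypothetical toy data: 3 respondents answering 2 Likert items
toy = [[1, 1], [2, 3], [3, 2]]
print(round(cronbach_alpha(toy), 3))  # 0.667
```

Alpha rises as items covary more strongly relative to their individual variances; perfectly redundant items give exactly 1.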

Frequency Distribution Analysis

First, a frequency distribution analysis of the demographic variables was performed in SPSS to identify the demographic composition of the respondents. Section 1 of the questionnaire contained five demographic questions covering gender, age group, annual income, marital status, and education level. The frequency distribution results in Table 4.2 below show that there were 200 respondents in total, of whom 50% were male and 50% female. The sample was therefore balanced by gender, with males and females equally represented.

Moreover, respondents were grouped into three age bands: ‘20-35’, ‘36-60’ and ‘Above 60’. Of the respondents, 39% were in the ‘20-35’ group, 56.5% in the ‘36-60’ group, and the remaining 4.5% in the ‘Above 60’ group.

Furthermore, annual income (in GBP) was divided into four categories: 13% of the respondents had an income ‘up to 30000’, 27% between ‘31000 to 50000’, 52.5% between ‘51000 to 100000’, and 7.5% ‘Above 100000’. This suggests that most of the respondents had an annual income between 51000 and 100000 GBP.

The frequency distribution analysis also indicated that 61% of the respondents were single and 39% were married, as shown in Table 4.2, so most respondents were single. Education level was analyzed using four categories: diploma, graduate, master, and doctorate. The results showed that 37% of the respondents were diploma holders, 46% were graduates, 16% had a master’s degree, and only 2% had a doctorate. Most respondents were therefore either graduates or diploma holders.

Table 4.2: Frequency Distribution of the Demographic Characteristics of the Respondents (N=200)
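The chapter’s frequency tables were produced in SPSS; the same counts-and-percentages logic can be sketched in Python with `collections.Counter`. The marital-status and age-group lists below are rebuilt from the counts reported in this chapter (122 single and 78 married; 78, 113 and 9 respondents per age group), not from the raw survey data, which is not available here. The helper name `frequency_table` is illustrative.

```python
from collections import Counter


def frequency_table(values):
    """Return {category: (count, percent)} ordered from most to least frequent."""
    counts = Counter(values)
    n = len(values)
    return {cat: (c, 100 * c / n) for cat, c in counts.most_common()}


# Reconstructed from the reported counts (N = 200)
marital = ["Single"] * 122 + ["Married"] * 78
age_group = ["20-35"] * 78 + ["36-60"] * 113 + ["Above 60"] * 9

for label, data in (("Marital status", marital), ("Age group", age_group)):
    print(label)
    for cat, (count, pct) in frequency_table(data).items():
        print(f"  {cat}: {count} ({pct:.1f}%)")
```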

Multiple Regression Analysis

The hypotheses were tested using multiple linear regression analysis to determine which of the independent variables had a significant positive effect on the customer loyalty of five-star hotel brands. The results of the regression analysis are summarized in Table 4.3 below; the complete SPSS output is given in the appendix.

Table 4.3: Multiple regression analysis showing the predictive values of the independent variables (brand image, corporate identity, public relation, perceived quality, and trustworthiness) on customer loyalty (N=200)

Predictors: (Constant), Trustworthiness, Public Relation, Brand Image, Corporate Identity, Perceived Quality. Dependent Variable: Customer Loyalty.

The significance value (p-value) of the ANOVA was 0.000 (i.e., p < 0.001), as shown in the table above, which is less than 0.05. This indicates that the regression model fits the data significantly. Moreover, the adjusted R-squared value was 0.897, indicating that the model’s predictors explained 89.7% of the variation in customer loyalty.
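The model-fit values discussed above (R² and adjusted R²) come from an ordinary least squares fit. A minimal NumPy sketch of that computation follows, assuming the predictors are held in an n × p array. Adjusted R² is 1 - (1 - R²)(n - 1)/(n - p - 1), which penalizes adding predictors. The demo data are synthetic, not the chapter’s survey data, and the chapter’s actual results come from SPSS.

```python
import numpy as np


def fit_ols(X, y):
    """OLS with an intercept; returns (coefficients, R^2, adjusted R^2).

    coefficients[0] is the constant; the rest follow the column order of X.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, p = X.shape  # n observations, p predictors
    if n - p - 1 <= 0:
        raise ValueError("need more observations than predictors + 1")
    design = np.column_stack([np.ones(n), X])  # prepend the constant term
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    residuals = y - design @ beta
    ss_res = float(residuals @ residuals)
    ss_tot = float(((y - y.mean()) ** 2).sum())
    r2 = 1 - ss_res / ss_tot
    adj_r2 = 1 - (1 - r2) * (n - 1) / (n - p - 1)  # penalizes extra predictors
    return beta, r2, adj_r2


# Synthetic demo: 200 "respondents", 5 predictors, only two of which matter
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(200, 5))
y_demo = 1.0 + X_demo @ np.array([0.5, 0.0, 0.0, 1.0, 0.0]) + rng.normal(scale=0.5, size=200)
beta, r2, adj_r2 = fit_ols(X_demo, y_demo)
print(np.round(beta, 3), round(r2, 3), round(adj_r2, 3))
```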

Furthermore, the significance of each of the five predictors’ effects on customer loyalty was assessed from its sig. value. A predictor’s effect is considered significant if its sig. value is below 0.05; for a sample of this size, this corresponds approximately to an absolute t-statistic greater than 2. The variable ‘brand image’ had a sig. value of 0.046, ‘corporate identity’ 0.482, ‘public relation’ 0.400, ‘perceived quality’ 0.000, and ‘trustworthiness’ 0.652.
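The rule of thumb that |t| > 2 roughly matches sig. < 0.05 comes from the two-sided p-value of the t-statistic. With 200 respondents and 5 predictors, the residual degrees of freedom are 200 - 5 - 1 = 194, where the t-distribution is very close to the standard normal. The sketch below therefore uses a normal approximation via Python’s `statistics.NormalDist`; it is an approximation, not the exact t-test that SPSS reports.

```python
from statistics import NormalDist


def two_sided_p(t: float) -> float:
    """Approximate two-sided p-value for a t-statistic using the standard normal.

    Reasonable when residual degrees of freedom are large (here 194).
    """
    return 2 * (1 - NormalDist().cdf(abs(t)))


for t in (1.5, 1.96, 2.0, 3.0):
    print(t, round(two_sided_p(t), 4))
```

The p-value crosses 0.05 at |t| ≈ 1.96, which is why "greater than 2" works as a slightly conservative shortcut.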


Hypotheses Assessment

Based on the regression analysis, brand image and perceived quality were found to have a significant positive effect on customer loyalty, whereas corporate identity, public relations, and trustworthiness had no significant effect. Therefore, hypotheses H1 and H4 were accepted, while hypotheses H2, H3, and H5 were rejected, as indicated in Table 4.4.
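The accept/reject decisions follow mechanically from comparing each reported sig. value with 0.05. The sketch below encodes that rule using the values reported above. The mapping of H1 to H5 onto the five predictors is an assumption based on the order in which the predictors are listed (it is consistent with H1 and H4 being accepted). A sig. value reported as 0.000 is SPSS rounding and means p < 0.0005.

```python
ALPHA = 0.05  # significance threshold used in the chapter

# Reported sig. values; the H-number mapping assumes the listing order above
reported = {
    "H1": ("brand image", 0.046),
    "H2": ("corporate identity", 0.482),
    "H3": ("public relation", 0.400),
    "H4": ("perceived quality", 0.000),
    "H5": ("trustworthiness", 0.652),
}

decisions = {h: ("accepted" if p < ALPHA else "rejected") for h, (_, p) in reported.items()}

for h, (name, p) in reported.items():
    print(f"{h} ({name}): sig. = {p:.3f} -> {decisions[h]}")
```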

Table 4.4: Hypothesis Assessment Summary (N=200)

The insignificant variables (corporate identity, public relation, and trustworthiness) were then excluded from model equation 1, giving the following final equation:

$$\text{Customer loyalty} = \alpha + 0.074\,(\text{Brand image}) + 0.991\,(\text{Perceived quality}) + \varepsilon$$

The equation suggests that, holding the other predictor constant, a 1-unit increase in brand image is associated with a 0.074-unit increase in customer loyalty, whereas a 1-unit increase in perceived quality is associated with a 0.991-unit increase.
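Because the intercept α is not reported, the final equation can only be used to compute changes in predicted customer loyalty, where α cancels out. The sketch below does that arithmetic with the two reported coefficients; the function and constant names are illustrative.

```python
# Coefficients from the final equation. The intercept (alpha) is not reported,
# so only *changes* in predicted loyalty are computed; alpha cancels out.
B_BRAND_IMAGE = 0.074
B_PERCEIVED_QUALITY = 0.991


def predicted_change(d_brand_image: float, d_perceived_quality: float) -> float:
    """Change in predicted customer loyalty for given changes in the two predictors."""
    return B_BRAND_IMAGE * d_brand_image + B_PERCEIVED_QUALITY * d_perceived_quality


print(predicted_change(1, 0))  # effect of +1 unit of brand image alone
print(predicted_change(0, 1))  # effect of +1 unit of perceived quality alone
print(predicted_change(1, 1))  # both together
```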

Cross Tabulation Analysis

To explore the results further, the demographic variables were cross-tabulated against the respondents’ answers to the customer loyalty questions using SPSS. Each of the five demographic variables (gender, age group, annual income, marital status, and education level) was cross-tabulated against the five customer loyalty questions to examine how customer loyalty toward UK five-star hotels differs across demographic groups. The results of the cross-tabulation analysis, together with bar charts presenting them graphically, are given in the appendix.
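A cross-tabulation counts respondents for every combination of two categorical variables; row percentages then let groups of different sizes be compared on an equal footing. A minimal pure-Python sketch follows. The example rebuilds the question 1 gender split reported in the next subsection (57 of 100 males and 80 of 100 females answering ‘extremely agree’); all other answers are lumped into a placeholder ‘Other’ category, because the full breakdown is only in the appendix.

```python
from collections import Counter, defaultdict


def crosstab_row_percent(rows, cols):
    """Cross-tabulate two categorical variables and return row percentages.

    rows, cols: equal-length sequences (e.g. gender and the answer to one item).
    Result: {row_category: {col_category: percent_of_that_row}}
    """
    table = defaultdict(Counter)
    for r, c in zip(rows, cols):
        table[r][c] += 1
    result = {}
    for r, counts in table.items():
        total = sum(counts.values())  # respondents in this row group
        result[r] = {c: 100 * k / total for c, k in counts.items()}
    return result


# Question 1 ("I stay at one hotel"), rebuilt from the reported percentages
gender = ["Male"] * 100 + ["Female"] * 100
q1 = ["Extremely agree"] * 57 + ["Other"] * 43 + ["Extremely agree"] * 80 + ["Other"] * 20

for group, pcts in crosstab_row_percent(gender, q1).items():
    print(group, pcts)
```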

Cross Tabulation of Gender against Customer Loyalty

Gender was cross-tabulated against questions 1 to 5 of the questionnaire to identify differences between male and female respondents regarding customer loyalty toward UK five-star hotels. Of the 100 males, 57% extremely agreed that they stay at one hotel, compared with 80% of the 100 females. Females were therefore more likely than males to agree that they stay at one hotel, suggesting they are more loyal to their respective hotel brands.

The cross-tabulation results further indicated that, of the 100 males, 53% agreed that they always say positive things about their hotel brand to other people, whereas 77% of the 100 females extremely agreed. Females were therefore in stronger agreement than males that they always say positive things about their hotel brand to other people.

Of the 100 males, 53% extremely agreed that they recommend their hotel brand to others, compared with 74% of the 100 females. This result also suggests that females are more willing than males to recommend their hotel brand to others.

Moreover, 54% of the 100 males extremely agreed that they don’t seek alternative hotel brands, compared with 79% of the 100 females. Females were therefore more likely than males to agree that they don’t seek alternative hotel brands, and were again found to be more loyal.

Furthermore, 56% of the 100 male respondents extremely agreed that they would continue to go to the same hotel irrespective of price, compared with 79% of the 100 females. Females were therefore more likely than males to agree that they would keep going to the same hotel regardless of price, again indicating greater loyalty.

After cross-tabulating gender against the responses to the five customer loyalty questions, females were found to be more loyal customers of five-star hotel brands than males: they agreed more strongly that they stay at one hotel, always say positive things about their hotel brand to other people, recommend their hotel brand to others, don’t seek alternative hotel brands, and would continue to go to the same hotel irrespective of price.

Cross Tabulation of Age Group against Customer Loyalty

Next, the second demographic variable, ‘age group’, was cross-tabulated against questions 1 to 5 of the questionnaire to identify differences in customer loyalty between age groups. Of the 78 respondents aged 20 to 35, 61.5% extremely agreed that they stay at one hotel, compared with 72.6% of the 113 respondents aged 36 to 60. Of the 9 respondents above 60 years of age, 77.8% agreed that they always stay at one hotel. This indicates that customers in the 36-60 and above-60 age groups were more loyal to their hotel brands, as they were keener to stay with their respective hotel brand.

Content removed…

Cross Tabulation of Annual Income against Customer Loyalty

The third demographic variable, ‘annual income’, was cross-tabulated against questions 1 to 5 of the questionnaire to identify which customers were most loyal at each annual income level. Of the 26 respondents with an annual income up to 30000 GBP, 84.6% extremely agreed that they always stay at one hotel, while 98.1% of the 54 respondents earning 31000 to 50000 GBP agreed. Of the 105 respondents earning 51000 to 100000 GBP, 49.5% extremely agreed, and of the respondents earning above 100000 GBP, 66.7% (10 respondents) agreed that they always stay at one hotel. This indicates that customers with an annual income of 31000 to 50000 GBP were more loyal to their hotel brands than customers at other income levels.

Cross Tabulation of Marital Status against Customer Loyalty

Furthermore, the fourth demographic variable, ‘marital status’, was cross-tabulated against questions 1 to 5 of the questionnaire to understand the difference between married and unmarried respondents regarding customer loyalty toward UK five-star hotels. Of the 122 single respondents, 59.8% extremely agreed that they stay at one hotel, whereas around 82% of the 78 married respondents agreed. Married customers were therefore more loyal to their hotel brands than unmarried customers, as they more often prefer to stay with one hotel brand.

Continuing with the cross-tabulation results, 55.7% of the 122 single respondents extremely agreed that they always say positive things about their hotel brand to other people, compared with 79.5% of the 78 married respondents. Married customers therefore showed more customer loyalty, agreeing more strongly than single customers that they always speak positively about their hotel brand to other people.

Cross Tabulation of Education Level against Customer Loyalty

Subsequently, the fifth demographic variable, ‘education level’, was cross-tabulated against questions 1 to 5 of the questionnaire to identify which customers were most loyal at each education level. Of the diploma holders, 67.6% (50 respondents) extremely agreed that they always stay at one hotel, compared with 69.6% (64) of the graduates, 68.8% (22) of the respondents with a master’s degree, and 50% (2) of the respondents with a doctorate. This indicates that graduates were more loyal than customers with a diploma, master’s degree, or doctorate.

Moreover, 66.2% of the diploma holders extremely agreed that they always say positive things about their hotel brand to other people, compared with 64.1% of the graduates and 65.5% of the respondents with a master’s degree, while 50% of the respondents with a doctorate agreed with the statement. On this item, therefore, diploma holders showed the strongest agreement, closely followed by respondents with a master’s degree.


In this subsection, the findings of this study are compared and contrasted with the literature to identify which past studies support them. Based on the regression analysis, this study suggests that brand image can have a significant positive effect on the customer loyalty of five-star hotels in the UK. This finding is supported by Heung et al. (1996), who also suggested that a hotel’s brand image can play a vital role in maintaining a high rate of customer loyalty.

Moreover, this study found perceived quality to be the second factor with a significant positive effect on customer loyalty. Perceived quality was evaluated based on service quality, comfort, staff courtesy, customer satisfaction, and service quality expectations. Research by Tat and Raymond (2000) supports this finding: they found that staff service quality affects customer loyalty and the level of satisfaction. Teas (1994) also found that service quality affects customer loyalty, and additionally that staff empathy (staff courtesy) towards customers can affect it. The research of Rowley and Dawes (1999) likewise supports this finding, showing that users’ expectations about the quality and nature of services affect customer loyalty. Finally, Oberoi and Hales (1990) found that the quality of staff service affects customer loyalty, in agreement with the present study’s findings.

Summary of the Findings

- Brand image was found to have a significant positive effect on customer loyalty; customer loyalty is therefore likely to increase as brand image improves.

- Corporate identity was found to have no significant effect on customer loyalty; there is therefore no evidence that customer loyalty increases with corporate identity.

- Public relations was found to have no significant effect on customer loyalty; there is therefore no evidence that customer loyalty increases with public relations.

- Perceived quality was found to have a significant positive effect on customer loyalty; customer loyalty is therefore likely to increase as perceived quality improves.

- Trustworthiness was found to have no significant effect on customer loyalty; there is therefore no evidence that customer loyalty increases with trustworthiness.

- Female customers were found to be more loyal to five-star hotel brands than male customers.

- Customers aged 36 to 60 were more loyal to their hotel brands than customers aged 20 to 35 or above 60.

- Customers with an annual income of 31000 to 50000 GBP were more loyal to their respective hotel brands than those with an annual income below 31000 or above 50000 GBP.

- Married respondents were more loyal toward UK five-star hotel brands than unmarried respondents.

- Customers with bachelor’s or master’s degrees were more loyal than customers with a diploma or doctorate.



Frequently Asked Questions

How to write the results chapter of a dissertation.

To write the Results chapter of a dissertation:

- Present findings objectively.

- Use tables, graphs, or charts for clarity.

- Refer to research questions/hypotheses.

- Provide sufficient details.

- Avoid interpretation; save that for the Discussion chapter.


- Neuroendocrinology and Autonomic Nervous System

- Neuroscientific Techniques

- Sensory and Motor Systems

- Browse content in Physics

- Astronomy and Astrophysics

- Atomic, Molecular, and Optical Physics

- Biological and Medical Physics

- Classical Mechanics

- Computational Physics

- Condensed Matter Physics

- Electromagnetism, Optics, and Acoustics

- History of Physics

- Mathematical and Statistical Physics

- Measurement Science

- Nuclear Physics

- Particles and Fields

- Plasma Physics

- Quantum Physics

- Relativity and Gravitation

- Semiconductor and Mesoscopic Physics

- Browse content in Psychology

- Affective Sciences

- Clinical Psychology

- Cognitive Psychology

- Cognitive Neuroscience

- Criminal and Forensic Psychology

- Developmental Psychology

- Educational Psychology

- Evolutionary Psychology

- Health Psychology

- History and Systems in Psychology

- Music Psychology

- Neuropsychology

- Organizational Psychology

- Psychological Assessment and Testing

- Psychology of Human-Technology Interaction

- Psychology Professional Development and Training

- Research Methods in Psychology

- Social Psychology

- Browse content in Social Sciences

- Browse content in Anthropology

- Anthropology of Religion

- Human Evolution

- Medical Anthropology

- Physical Anthropology

- Regional Anthropology

- Social and Cultural Anthropology

- Theory and Practice of Anthropology

- Browse content in Business and Management

- Business Strategy

- Business Ethics

- Business History

- Business and Government

- Business and Technology

- Business and the Environment

- Comparative Management

- Corporate Governance

- Corporate Social Responsibility

- Entrepreneurship

- Health Management

- Human Resource Management

- Industrial and Employment Relations

- Industry Studies

- Information and Communication Technologies

- International Business

- Knowledge Management

- Management and Management Techniques

- Operations Management

- Organizational Theory and Behaviour

- Pensions and Pension Management

- Public and Nonprofit Management

- Strategic Management

- Supply Chain Management

- Browse content in Criminology and Criminal Justice

- Criminal Justice

- Criminology

- Forms of Crime

- International and Comparative Criminology

- Youth Violence and Juvenile Justice

- Development Studies

- Browse content in Economics

- Agricultural, Environmental, and Natural Resource Economics

- Asian Economics

- Behavioural Finance

- Behavioural Economics and Neuroeconomics

- Econometrics and Mathematical Economics

- Economic Systems

- Economic History

- Economic Methodology

- Economic Development and Growth

- Financial Markets

- Financial Institutions and Services

- General Economics and Teaching

- Health, Education, and Welfare

- History of Economic Thought

- International Economics

- Labour and Demographic Economics

- Law and Economics

- Macroeconomics and Monetary Economics

- Microeconomics

- Public Economics

- Urban, Rural, and Regional Economics

- Welfare Economics

- Browse content in Education

- Adult Education and Continuous Learning

- Care and Counselling of Students

- Early Childhood and Elementary Education

- Educational Equipment and Technology

- Educational Strategies and Policy

- Higher and Further Education

- Organization and Management of Education

- Philosophy and Theory of Education

- Schools Studies

- Secondary Education

- Teaching of a Specific Subject

- Teaching of Specific Groups and Special Educational Needs

- Teaching Skills and Techniques

- Browse content in Environment

- Applied Ecology (Social Science)

- Climate Change

- Conservation of the Environment (Social Science)

- Environmentalist Thought and Ideology (Social Science)

- Natural Disasters (Environment)

- Social Impact of Environmental Issues (Social Science)

- Browse content in Human Geography

- Cultural Geography

- Economic Geography

- Political Geography

- Browse content in Interdisciplinary Studies

- Communication Studies

- Museums, Libraries, and Information Sciences

- Browse content in Politics

- African Politics

- Asian Politics

- Chinese Politics

- Comparative Politics

- Conflict Politics

- Elections and Electoral Studies

- Environmental Politics

- Ethnic Politics

- European Union

- Foreign Policy

- Gender and Politics

- Human Rights and Politics

- Indian Politics

- International Relations

- International Organization (Politics)

- International Political Economy

- Irish Politics

- Latin American Politics

- Middle Eastern Politics

- Political Methodology

- Political Communication

- Political Philosophy

- Political Sociology

- Political Behaviour

- Political Economy

- Political Institutions

- Political Theory

- Politics and Law

- Politics of Development

- Public Administration

- Public Policy

- Quantitative Political Methodology

- Regional Political Studies

- Russian Politics

- Security Studies

- State and Local Government

- UK Politics

- US Politics

- Browse content in Regional and Area Studies

- African Studies

- Asian Studies

- East Asian Studies

- Japanese Studies

- Latin American Studies

- Middle Eastern Studies

- Native American Studies

- Scottish Studies

- Browse content in Research and Information

- Research Methods

- Browse content in Social Work

- Addictions and Substance Misuse

- Adoption and Fostering

- Care of the Elderly

- Child and Adolescent Social Work

- Couple and Family Social Work

- Direct Practice and Clinical Social Work

- Emergency Services

- Human Behaviour and the Social Environment

- International and Global Issues in Social Work

- Mental and Behavioural Health

- Social Justice and Human Rights

- Social Policy and Advocacy

- Social Work and Crime and Justice

- Social Work Macro Practice

- Social Work Practice Settings

- Social Work Research and Evidence-based Practice

- Welfare and Benefit Systems

- Browse content in Sociology

- Childhood Studies

- Community Development

- Comparative and Historical Sociology

- Economic Sociology

- Gender and Sexuality

- Gerontology and Ageing

- Health, Illness, and Medicine

- Marriage and the Family

- Migration Studies

- Occupations, Professions, and Work

- Organizations

- Population and Demography

- Race and Ethnicity

- Social Theory

- Social Movements and Social Change

- Social Research and Statistics

- Social Stratification, Inequality, and Mobility

- Sociology of Religion

- Sociology of Education

- Sport and Leisure

- Urban and Rural Studies

- Browse content in Warfare and Defence

- Defence Strategy, Planning, and Research

- Land Forces and Warfare

- Military Administration

- Military Life and Institutions

- Naval Forces and Warfare

- Other Warfare and Defence Issues

- Peace Studies and Conflict Resolution

- Weapons and Equipment

- < Previous chapter

- Next chapter >

31 Interpretation In Qualitative Research: What, Why, How

Allen Trent, College of Education, University of Wyoming

Jeasik Cho, Department of Educational Studies, University of Wyoming

- Published: 02 September 2020

This chapter addresses a wide range of concepts related to interpretation in qualitative research, examines the meaning and importance of interpretation in qualitative inquiry, and explores the ways in which methodology, data, and the self/researcher as instrument interact with and affect interpretive processes. The chapter also presents a series of strategies for qualitative researchers engaged in the process of interpretation. It closes with a framework designed to inform qualitative researchers' interpretations. The framework attends to six key qualitative research concepts: transparency, reflexivity, analysis, validity, evidence, and literature. Four questions frame the chapter: What is interpretation, and why are interpretive strategies important in qualitative research? How do methodology, data, and the researcher/self affect interpretation in qualitative research? How do qualitative researchers engage in the process of interpretation? And in what ways can a framework for interpretation strategies support qualitative researchers across multiple methodologies and paradigms?

“All human knowledge takes the form of interpretation.” In this seemingly simple statement, the late German philosopher Walter Benjamin asserted that all knowledge is mediated and constructed. In doing so, he situated himself as an interpretivist: one who believes that human subjectivity (individuals’ characteristics, feelings, opinions, and experiential backgrounds) affects observations, the analysis of those observations, and the resulting constructions of knowledge and truth. Hammersley (2013) noted,