Dissertations / Theses on the topic 'Human-computer interaction'

Consult the top 50 dissertations / theses for your research on the topic 'Human-computer interaction.'

Jackson, Samuel. "Sustainability in Computer Science, Human-Computer Interaction, and Interaction Design." Scholarship @ Claremont, 2016. http://scholarship.claremont.edu/cmc_theses/1329.

Fleury, Rosanne. "Gender and human-computer interaction." Thesis, National Library of Canada = Bibliothèque nationale du Canada, 2000. http://www.collectionscanada.ca/obj/s4/f2/dsk2/ftp03/MQ50310.pdf.

Li, QianQian. "Human-Computer Interaction: Security Aspects." Doctoral thesis, Università degli studi di Padova, 2018. http://hdl.handle.net/11577/3427166.

Sayago, Barrantes Sergio. "Human-computer interaction with older people." Doctoral thesis, Universitat Pompeu Fabra, 2009. http://hdl.handle.net/10803/7560.

Laberge, Dominic. "Visual tracking for human-computer interaction." Thesis, University of Ottawa (Canada), 2003. http://hdl.handle.net/10393/26504.

Abowd, Gregory Dominic. "Formal aspects of human-computer interaction." Thesis, University of Oxford, 1991. http://ethos.bl.uk/OrderDetails.do?uin=uk.bl.ethos.232812.

Ramsay, Judith Easton. "Measuring and facilitating human-computer interaction." Thesis, University of Glasgow, 1992. http://ethos.bl.uk/OrderDetails.do?uin=uk.bl.ethos.281957.

Roast, Christopher Richard. "Executing models in human computer interaction." Thesis, University of York, 1993. http://ethos.bl.uk/OrderDetails.do?uin=uk.bl.ethos.335778.

Westerman, Stephen J. "Individual differences in human-computer interaction." Thesis, Aston University, 1993. http://publications.aston.ac.uk/10853/.

Frisk, Henrik. "Improvisation, computers and interaction: rethinking human-computer interaction through music." Malmö: Malmö Academy of Music, Lund University, 2008. http://www.lu.se/o.o.i.s?id=12588&postid=1239899.

Drewes, Heiko. "Eye Gaze Tracking for Human Computer Interaction." Diss., LMU, 2010. http://nbn-resolving.de/urn:nbn:de:bvb:19-115914.

Bao, Leiming, and Chunyan Sun. "Human-Computer Interaction in a Smart House." Thesis, Högskolan Kristianstad, Sektionen för hälsa och samhälle, 2012. http://urn.kb.se/resolve?urn=urn:nbn:se:hkr:diva-9475.

Bourges-Waldegg, Paula. "Handling cultural factors in human-computer interaction." Thesis, University of Derby, 1998. http://hdl.handle.net/10545/310928.

Bär, Nina. "Human-Computer Interaction And Online Users’ Trust." Doctoral thesis, Universitätsbibliothek Chemnitz, 2014. http://nbn-resolving.de/urn:nbn:de:bsz:ch1-qucosa-149685.

Tzanavari, Aimilia. "User modeling for intelligent human-computer interaction." Thesis, University of Bristol, 2001. http://ethos.bl.uk/OrderDetails.do?uin=uk.bl.ethos.364961.

Sparrell, Carlton James. "Coverbal iconic gesture in human-computer interaction." Thesis, Massachusetts Institute of Technology, 1993. http://hdl.handle.net/1721.1/62327.

Oliveira, Victor Adriel de Jesus. "Designing tactile vocabularies for human-computer interaction." Biblioteca Digital de Teses e Dissertações da UFRGS, 2014. http://hdl.handle.net/10183/99335.

Farbiak, Peter. "Správa projektů z oblasti Human-Computer Interaction." Master's thesis, Vysoké učení technické v Brně. Fakulta informačních technologií, 2012. http://www.nusl.cz/ntk/nusl-236579.

Leiva, Torres Luis Alberto. "Diverse Contributions to Implicit Human-Computer Interaction." Doctoral thesis, Universitat Politècnica de València, 2012. http://hdl.handle.net/10251/17803.

Nápravníková, Hana. "Human-Computer Interaction - spolupráce člověka a počítače." Master's thesis, Vysoká škola ekonomická v Praze, 2017. http://www.nusl.cz/ntk/nusl-359070.

Watkinson, Neil Stephen. "The evaluation of dynamic human-computer interaction." Thesis, Loughborough University, 1991. https://dspace.lboro.ac.uk/2134/7031.

Wache, Julia. "Implicit Human-computer Interaction: Two complementary Approaches." Doctoral thesis, Università degli studi di Trento, 2016. https://hdl.handle.net/11572/368869.

Wache, Julia. "Implicit Human-computer Interaction: Two complementary Approaches." Doctoral thesis, University of Trento, 2016. http://eprints-phd.biblio.unitn.it/1803/1/Wache_thesis.pdf.

Mohamedally, Dean. "Constructionism through Mobile Interactive Knowledge Elicitation (MIKE) in human-computer interaction." Thesis, City University London, 2006. http://ethos.bl.uk/OrderDetails.do?uin=uk.bl.ethos.433674.

Truyenque, Michel Alain Quintana. "A Computer Vision Application for Hand-Gestures Human Computer Interaction." Pontifícia Universidade Católica do Rio de Janeiro, 2005. http://www.maxwell.vrac.puc-rio.br/Busca_etds.php?strSecao=resultado&nrSeq=6585@1.

Drugge, Mikael. "Wearable computer interaction issues in mediated human to human communication." Licentiate thesis, Luleå : Luleå Univ. of Technology, 2004. http://epubl.luth.se/1402-1757/2004/42.

Erdem, Ibrahim Aykut. "Vision-based Human-computer Interaction Using Laser Pointer." Master's thesis, METU, 2003. http://etd.lib.metu.edu.tr/upload/1128776/index.pdf.

Pallotta, Vincenzo. "Cognitive language engineering towards robust human-computer interaction." Lausanne, 2002. http://library.epfl.ch/theses/?display=detail&nr=2630.

Van, den Bergh Michael. "Visual body pose analysis for human-computer interaction." Konstanz Hartung-Gorre, 2010. http://d-nb.info/1000839370/04.

Gerken, Jens. "Longitudinal Research in Human-Computer Interaction." Konstanz: Bibliothek der Universität Konstanz, 2011. http://d-nb.info/1017933847/34.

Azad, Minoo. "A proto-pattern language for human-computer interaction." Thesis, National Library of Canada = Bibliothèque nationale du Canada, 2000. http://www.collectionscanada.ca/obj/s4/f2/dsk1/tape3/PQDD_0025/MQ52376.pdf.

Costanza, Enrico. "Subtle, intimate interfaces for mobile human computer interaction." Thesis, Massachusetts Institute of Technology, 2006. http://hdl.handle.net/1721.1/37387.

Britton, Brent Cabot James. "Enhancing computer-human interaction with animated facial expressions." Thesis, Massachusetts Institute of Technology, 1991. http://hdl.handle.net/1721.1/64856.

Radüntz, Thea. "Biophysiological Mental-State Monitoring during Human-Computer Interaction." Doctoral thesis, Humboldt-Universität zu Berlin, 2021. http://dx.doi.org/10.18452/23026.

Trendafilov, Dari. "An information-theoretic account of human-computer interaction." Thesis, University of Glasgow, 2017. http://theses.gla.ac.uk/8614/.

Alshaali, Saif. "Human-computer interaction : lessons from theory and practice." Thesis, University of Southampton, 2011. https://eprints.soton.ac.uk/210545/.

Martín-Albo, Simón Daniel. "Contributions to Pen & Touch Human-Computer Interaction." Doctoral thesis, Universitat Politècnica de València, 2016. http://hdl.handle.net/10251/68482.

Obrist, Marianna. "DIY HCI do-it-yourself human computer interaction." Saarbrücken VDM Verlag Dr. Müller, 2007. http://d-nb.info/991461355/04.

Garrido, Piedad, Jesús Tramullas, Manuel Coll, Francisco Martínez, and Inmaculada Plaza. "XTM-DITA structure at Human-Computer Interaction Service." Universidad de Castilla-La Mancha, 2008. http://hdl.handle.net/10150/106152.

Wheeldon, Alan. "Improving human computer interaction in intelligent tutoring systems." Thesis, Queensland University of Technology, 2007. https://eprints.qut.edu.au/16587/1/Alan_Wheeldon_Thesis.pdf.

Wheeldon, Alan. "Improving human computer interaction in intelligent tutoring systems." Queensland University of Technology, 2007. http://eprints.qut.edu.au/16587/.

King, William Joseph. "Toward the human-computer dyad." Thesis, University of Washington, 2002. http://hdl.handle.net/1773/10325.

Herrera, Acuna Raul. "Advanced computer vision-based human computer interaction for entertainment and software development." Thesis, Kingston University, 2014. http://eprints.kingston.ac.uk/29884/.

Hamette, Patrick de la. "Embedded stereo vision systems for mobile human-computer interaction." Zürich: ETH, 2008. http://e-collection.ethbib.ethz.ch/show?type=diss&nr=18075.

Genc, Serkan. "Vision-based Hand Interface Systems In Human Computer Interaction." Phd thesis, METU, 2010. http://etd.lib.metu.edu.tr/upload/12611700/index.pdf.

Cermak-Sassenrath, Daniel. "The logic of play in everyday human-computer interaction." Universität Potsdam, 2010. http://opus.kobv.de/ubp/volltexte/2010/4272/.

Schels, Martin. "Multiple classifier systems in human-computer interaction." Ulm: Universität Ulm. Fakultät für Ingenieurwissenschaften und Informatik, 2015. http://d-nb.info/1076828493/34.

Limerick, Hannah. "Investigating the sense of agency in human-computer interaction." Thesis, University of Bristol, 2016. http://ethos.bl.uk/OrderDetails.do?uin=uk.bl.ethos.715757.

Surie, Dipak. "An agent-centric approach to implicit human-computer interaction." Thesis, Umeå universitet, Institutionen för datavetenskap, 2005. http://urn.kb.se/resolve?urn=urn:nbn:se:umu:diva-52476.

Raisamo, Roope. "Multimodal human-computer interaction: a constructive and empirical study." Tampere, Finland: University of Tampere, 1999. http://acta.uta.fi/pdf/951-44-4702-6.pdf.

Bachelor & master theses in the research field of human-computer interaction

We offer thesis topics for bachelor and master level of the study programmes media informatics, computer science, software engineering, and cognitive systems.

Below you can find an up-to-date list of topic suggestions. Of course, we are also open to discussing proposals by students within our research field. Some of the descriptions are only visible from inside the campus network.

If you are interested, please get in touch directly with a research associate of the research group.

For further questions about how to conceptualise and write a thesis, please take a look at the FAQ section.

Overview of currently available thesis topics (bachelor level)

Overview of currently available thesis topics (master level)

Details about individual topics

Adaptive Autonomous Vehicle Driving Style

Supervisor: Annika Stampf

Level: Bachelor / Master

Description:

The aim of this thesis is to investigate how trust and acceptance are influenced when the driving style of an autonomous vehicle is adapted to the current state of the user. A prototype should be implemented in Unity and a user study should be conducted.

Anthropomorphism in Highly Automated Vehicles

The aim of this work is to investigate which anthropomorphic features can be used in in-vehicle interfaces (such as physiological signals, e.g. a heartbeat, or a nudge to the driver from the vehicle). The identified features should be implemented prototypically in a VR environment with Unity. Subsequently, a user study should be conducted to evaluate whether these features have a positive impact on passengers' trust in highly automated vehicles (HAVs).

Balancing Access and Acceptance: Exploring the Intersection of Personalization and Social Norms in Automotive Interface Design for the Visually Impaired

Supervisor: Max Rädler

Level: Master

This thesis explores the use of Bayesian optimization to design accessible interfaces for autonomous vehicles, focusing on the visually impaired. As autonomous driving evolves, offering new levels of independence, the challenge arises in designing interfaces that do not inadvertently disclose the user's disability in shared settings. This research aims to incorporate Bayesian optimization into vehicle interior design, creating personalized environments while considering social acceptance as a crucial factor. The feasibility of these designs will be assessed through a user study, exploring the balance between accessibility and social discretion.

Breaking the Rebound: Exploring Strategies for Sustainable Consumption

Supervisor: Albin Zeqiri

Level: Bachelor & Master

The efficiency paradox (also known as the "rebound effect") is the concept that increases in resource efficiency can lead to higher consumption, which offsets the environmental benefits. For example, energy-efficient light bulbs consume less energy per hour of use, but leaving them on for longer periods can reduce or even cancel the overall savings. Without addressing this issue, sustainable transitions will remain challenging, and environmental problems will persist or even worsen. The goal of theses on this topic is to design and evaluate countermeasures that mitigate these effects. The scope of the thesis is adapted to the bachelor or master level.
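The light bulb example can be made concrete with a little arithmetic. The sketch below is only an illustration: the wattages and usage hours are made-up numbers, and `energy_kwh` and `rebound_effect` are hypothetical helper names, not part of any thesis material. It expresses the rebound as the share of the expected savings that is lost to increased use.

```python
def energy_kwh(power_w: float, hours: float) -> float:
    """Energy used by a device of the given power over the given hours, in kWh."""
    return power_w * hours / 1000


def rebound_effect(expected_savings: float, actual_savings: float) -> float:
    """Share of the expected savings that is offset by changed behaviour.

    0.0 means no rebound, 1.0 means the savings are fully cancelled,
    and values above 1.0 mean consumption ended up higher than before.
    """
    if expected_savings <= 0:
        raise ValueError("expected_savings must be positive")
    return (expected_savings - actual_savings) / expected_savings


if __name__ == "__main__":
    # Hypothetical numbers: a 60 W bulb is replaced by a 10 W bulb.
    old = energy_kwh(60, 1000)        # old bulb, 1000 hours per year
    new_same_use = energy_kwh(10, 1000)
    new_more_use = energy_kwh(10, 1500)  # the new bulb is left on longer

    expected = old - new_same_use     # savings if behaviour stayed the same
    actual = old - new_more_use       # savings after the change in behaviour
    print(f"rebound: {rebound_effect(expected, actual):.0%}")
```

With these assumed numbers, a tenth of the expected savings is lost; a countermeasure would aim to push that share towards zero.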

Calm Technology and Digital Detox - How to nudge people towards a healthier technology usage

Supervisor: Luca-Maxim Meinhardt

Description

The first thing we do when we get up and the last thing we do before we go to sleep is look at our phone to check for new notifications and search for new content on social media. Unfortunately, our dependence on technology is already so deeply integrated into our habits that we do not even realize how often we use our phones. On the one hand, this addiction harms us by making us less focused, as notifications distract us. Additionally, social media is said to contribute to shorter attention spans. On the other hand, technology usage also harms our face-to-face social interactions. For example, some people focus on their phones instead of engaging in a conversation, decreasing its quality. Recent trends in wearable technology, such as smartwatches and AR glasses, might even worsen this behavior. Therefore, new methods and interactions must be found to nudge people towards healthier technology usage and change their habits unconsciously.

Constant motion stimulus for peripheral vision to create Unconscious Notifications

Description: Human peripheral vision provides us with information without shifting our focus from the primary task. This is particularly interesting since peripheral vision demands less cognitive load, so information positioned at the edge of the field of view can be received unconsciously without drawing attention. A prominent example is driving, where even a short moment of inattention can end in a serious accident. However, even the daily struggle with loud, attention-drawing smartphone notifications that distract from work might be solved calmly with peripheral displays. Augmented Reality glasses are key to enabling this concept since they provide us with wearable displays attached to our view.

Design Through My Eyes: Supporting Designers with a Vision Impairment Simulator Using Eye-Tracking

This thesis aims to create a desktop overlay simulation tool for visual impairment, designed to aid developers and designers in understanding the unique needs and experiences of visually impaired individuals. The project will involve developing a prototype simulation that can be applied across various design tasks, followed by a usability study to assess its effectiveness and impact on creating more accessible environments.

Exploring Integration of Personal Context into Eco-Visualizations

Environmental labels play a significant role in shaping our behavior towards the environment. Understanding the meaning of eco-visualizations can help consumers make informed and sustainable purchase decisions. However, current eco-visualizations are often complex and difficult to comprehend, leading to a lack of action and confusion among consumers. The Awareness-Behavior Gap describes this issue. Personalized eco-visualizations tailored to individual behavior patterns and lifestyles could be a solution to this problem. This project aims to develop concepts, prototypes, and solutions to integrate personalized context into eco-visualizations and evaluate their effectiveness in user studies.

Extensive Viewing for Language Learning

Supervisor: Tobias Wagner

Description: The goal of this project is to develop a technology-enhanced learning tool that supports language learners in learning new vocabulary through watching TV shows and movies. You will evaluate the system in terms of usability and explore its effects on learners’ learning success and motivation.

Get Up, Stand Up - Put Down Your Phone - How to turn smartphone sessions into a sporty activity.

Supervisor: Luca Meinhardt and Jana Funke

Description: Based on literature research and related work, this thesis will aim for a solution to boost our self-control in phone usage. Our goal is to help people establish healthier smartphone usage by nudging them to do sports instead of browsing the phone.

Hello Me! – How Similarity and Mimicry of In-Vehicle Assistants Affect Trust in Highly Automated Vehicles

The aim of this work is to design and prototypically implement an in-vehicle avatar that can adapt to the appearance of a passenger, for example by using DeepFake. The avatar should also be able to mimic the passenger. Subsequently, a user study should be conducted to evaluate whether similarity and mimicry have a positive impact on passengers' trust in highly automated vehicles.

Immersive VR Guardians - Improving VR Gameplay through user-centered safety system design

Supervisor: Annalisa Degenhard

Description: Have you ever played a VR game? Whenever you reach the boundary of your real-world play area (which quickly happens in average households), a grid will appear in front of you and will probably ruin your illusion of being in that fantastic VR world. The goal of this project is to enhance built-in guardian systems for VR. To achieve this, you will focus on certain aspects of such systems and conduct a structured analysis to find out how these aspects could be improved to increase user experience. Possible aspects that you may focus on are the sensory representation of collision warnings, collision prevention systems, or innovative customization mechanisms to match such systems with various VR worlds. Your goal will be to optimize guardian systems in terms of presence, usability, and safety in order to provide better VR experiences.

Implicit Interaction Concepts for Highly Automated Vehicles

The aim of this work is to design new implicit in-vehicle interaction concepts for highly automated vehicles (HAVs). These concepts should be implemented with Unity (in a driving simulator or VR environment). Subsequently, a user study should be conducted to evaluate whether those concepts have a positive influence on passengers’ trust, acceptance, and user experience in HAVs.

Is this real? Understanding the perception of virtual worlds and how to manipulate it

Supervisor: Annalisa Degenhard

The aim of this thesis is to explore the phenomenon of presence in virtual reality. A literature analysis should be conducted. By designing a suitable virtual environment a hypothesis on the behavior of presence will be tested in a user study. The goal is to get insights into how users perceive virtual environments and to draw conclusions on how they should be designed to improve the experience of virtual reality.

Lost in translation – Enhancing the explainability of online translators

Description: Imagine that you need to translate a document into a foreign language that you are not familiar with. You will probably look for an online translator such as Google Translate or DeepL and just copy the translator’s output. But how can you trust this translation? How can you be sure that the translation is precisely what you are trying to say? Prominent examples are sayings that might have no meaning if translated word by word. But since you are not familiar with the language, you cannot verify whether the online translator grasped the hidden meaning behind the saying. Current online translators lack this explainability. They provide multiple alternatives for the translated phrase, but there is no explanation of why these alternatives are shown and how well they suit the input text.

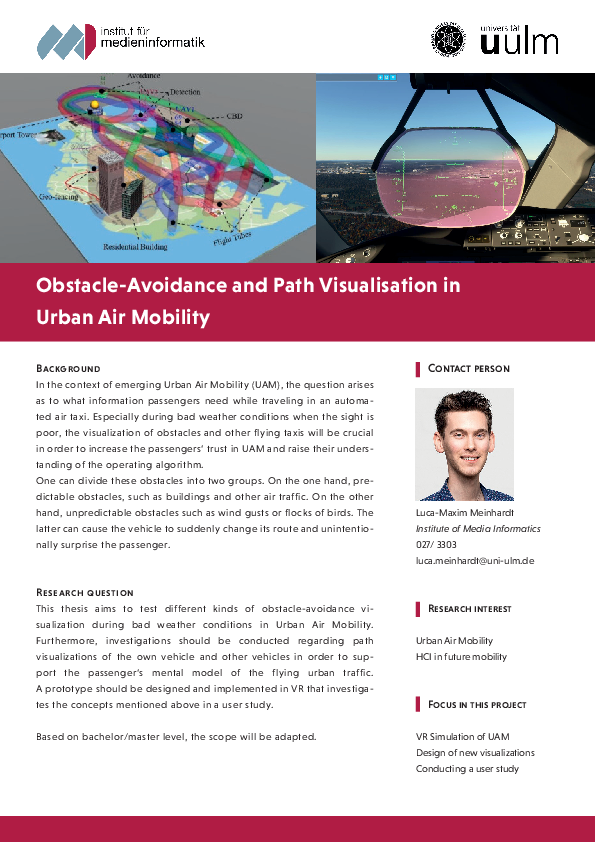

Obstacle-Avoidance and Path Visualisation in Urban Air Mobility

Description: This thesis aims to test different kinds of obstacle-avoidance visualization during bad weather conditions in Urban Air Mobility. Furthermore, path visualizations of the passenger's own vehicle and of other vehicles should be investigated in order to support the passenger's mental model of the flying urban traffic. A prototype should be designed and implemented in VR to investigate these concepts in a user study.

Perceived Safety in Piloting for Urban Air Mobility

Description: Urban Air Mobility (UAM) is attracting increasing interest as a form of future mobility. Unlike conventional transportation such as buses and trains, flying taxis will not be limited to predefined routes, thus avoiding transportation delays. Hence, the vision is to create a network of flying vehicles operating in metropolitan areas to cover short and medium distances. Experts predict that the first UAM vehicles will carry passengers in the mid-2020s. In fact, the first crewed flight of the German start-up Volocopter took place successfully in Singapore in 2019. UAM will shift air mobility from the current mass transportation to a relatively private ride with 2-4 passengers, which creates interesting new aspects for HCI research since the focus is on the passengers.

Sustainability-in-Design: Reframing the Human-Technology Relationship

Goals of theses in this area cover the development of new, interactive concepts that introduce living materials or even microorganisms into hardware and map their respective needs to various device functionalities. The scope is adapted to the bachelor or master level.

Talk to Me: Voice User Interfaces in Highly Automated Vehicles for People Who Are Visually Impaired

This thesis explores the integration of voice assistance and screen reader technologies in autonomous vehicle interfaces to enhance usability for visually impaired users. With advancements in autonomous driving providing increased independence for the blind and visually impaired, the focus here is on determining how these assistive technologies can be adapted for automotive use. This involves developing various prototypes of speech interfaces and assistance systems. The effectiveness and user-friendliness of these prototypes will be assessed through workshops or studies involving visually impaired participants, aiming to ensure that all vehicle controls are accessible and comprehensible.

Tell me More: Empowering the Visually Impaired with Situation Awareness

This thesis focuses on utilizing auditory technologies such as 3D Sound and Earcons in autonomous vehicles to aid visually impaired individuals. With significant advancements in autonomous driving, these technologies can enhance situational awareness by conveying essential road information. This project will implement these auditory methods in Unity's virtual reality environment and evaluate their effectiveness through a workshop with visually impaired participants. The study aims to develop practical design guidelines based on the findings and existing literature.

Driving Change: Approaches to Support Green Mobility Habits

Level: Bachelor

Goals of theses in this area cover the development of new, interactive concepts that explicitly or implicitly induce behavior change regarding mobility choices. Theses include reviewing relevant literature as well as implementing and evaluating the developed concepts. The scope is adapted to the bachelor or master level.

Seeing through the Haze: Preventing Perceptual Manipulations in Mixed Reality

The aim of this thesis is to develop strategies that mitigate the effect of dark patterns in mixed reality (MR). This includes reviewing and categorizing relevant literature as well as implementing and evaluating the developed concepts. The scope is adapted to the bachelor or master level.

Funding Partners Publications Awards

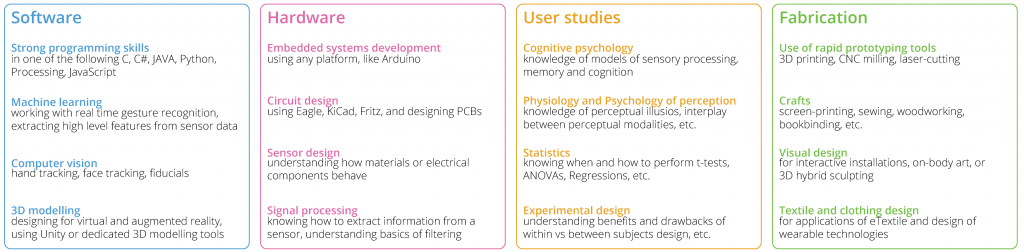

Topics for bachelor and master theses

If you are interested in finishing your Bachelor or Master studies with a thesis in Human-Computer Interaction, you are welcome to contact us. Our research projects are a rich source of ideas. In general, writing a thesis with a focus on HCI will require you to take a user-centred perspective and to apply adequate methods, such as involving users during design and evaluation. At the same time, you will have the chance to work with the latest technology, such as Augmented Reality Glasses, Gaze-Based Interaction, Multi-Device Interaction, Voice Assistants, or other novel interaction techniques.

Our research topics are concerned with Collaborative Work Spaces and Human-Robot Interaction. Take a look at our research project pages - there is always room for a bachelor or master thesis.

Do you have your own idea? Please let us know! We are always looking for new topics to expand into.

Human-computer interaction: recently published documents

Project-based learning in human–computer interaction: a service‐dominant logic approach

Purpose: This study aims to propose a service-dominant logic (S-DL)-informed framework for teaching innovation in the context of human–computer interaction (HCI) education involving large industrial projects.

Design/methodology/approach: This study combines S-DL from the field of marketing with experiential and constructivist learning to enable value co-creation as the primary method of connecting diverse actors within the service ecology. The approach aligns with the current conceptualization of central university activities as a triad of research, education and innovation.

Findings: The teaching framework based on the S-DL enabled ongoing improvements to the course (a project-based, bachelor’s-level HCI course in the computer science department), easier management of stakeholders and learning experiences through students’ participation in real-life projects. The framework also helped to provide an understanding of how value co-creation works and brought a new dimension to HCI education.

Practical implications: The proposed framework and the authors’ experience described herein, along with examples of projects, can be helpful to educators designing and improving project-based HCI courses. It can also be useful for partner companies and organizations to realize the potential benefits of collaboration with universities. Decision-makers in industry and academia can benefit from these findings when discussing approaches to addressing sustainability issues.

Originality/value: While HCI has successfully contributed to innovation, HCI education has made only moderate efforts to include innovation as part of the curriculum. The proposed framework considers multiple service ecosystem actors and covers a broader set of co-created values for the involved partners and society than just learning benefits.

Recommender Systems: Past, Present, Future

The origins of modern recommender systems date back to the early 1990s when they were mainly applied experimentally to personal email and information filtering. Today, 30 years later, personalized recommendations are ubiquitous and research in this highly successful application area of AI is flourishing more than ever. Much of the research in the last decades was fueled by advances in machine learning technology. However, building a successful recommender system requires more than a clever general-purpose algorithm. It requires an in-depth understanding of the specifics of the application environment and the expected effects of the system on its users. Ultimately, making recommendations is a human-computer interaction problem, where a computerized system supports users in information search or decision-making contexts. This special issue contains a selection of papers reflecting this multi-faceted nature of the problem and puts open research challenges in recommender systems to the forefront. It features articles on the latest learning technology, reflects on the human-computer interaction aspects, reports on the use of recommender systems in practice, and it finally critically discusses our research methodology.

Research on the Construction of Human-Computer Interaction System Based on a Machine Learning Algorithm

In this paper, we use machine learning algorithms to conduct in-depth research and analysis on the construction of human-computer interaction systems and propose a simple and effective method for extracting salient features based on contextual information. The method can retain the dynamic and static information of gestures intact, which results in a richer and more robust feature representation. Secondly, this paper proposes a dynamic planning algorithm based on feature matching, which uses the consistency and accuracy of feature matching to measure the similarity of two frames and then uses a dynamic planning algorithm to find the optimal matching distance between two gesture sequences. The algorithm ensures the continuity and accuracy of the gesture description and makes full use of the spatiotemporal location information of the features. The features and limitations of common motion target detection methods in motion gesture detection and common machine learning tracking methods in gesture tracking are first analyzed, and then, the kernel correlation filter method is improved by designing a confidence model and introducing a scale filter, and finally, comparison experiments are conducted on a self-built gesture dataset to verify the effectiveness of the improved method. During the training and validation of the model by the corpus, the complementary feature extraction methods are ablated and learned, and the corresponding results obtained are compared with the three baseline methods. But due to this feature, GMMs are not suitable when users want to model the time structure. It has been widely used in classification tasks. By using the kernel function, the support vector machine can transform the original input set into a high-dimensional feature space. After experiments, the speech emotion recognition method proposed in this paper outperforms the baseline methods, proving the effectiveness of complementary feature extraction and the superiority of the deep learning model. The speech is used as the input of the system, and the emotion recognition is performed on the input speech, and the corresponding emotion obtained is successfully applied to the human-computer dialogue system in combination with the online speech recognition method, which proves that the speech emotion recognition applied to the human-computer dialogue system has application research value.
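The abstract does not spell out its "dynamic planning algorithm", but finding an optimal matching distance between two sequences of frames is the kind of problem usually solved with dynamic programming. The sketch below is not the paper's method: it uses classic dynamic time warping as a stand-in, with plain Euclidean distance between per-frame feature vectors in place of the feature-matching similarity the abstract mentions. The names `frame_distance` and `dtw_distance` are hypothetical.

```python
import math
from typing import Sequence

Frame = Sequence[float]  # one feature vector per frame (assumed representation)


def frame_distance(a: Frame, b: Frame) -> float:
    """Dissimilarity of two frames; Euclidean distance is an assumed stand-in."""
    return math.dist(a, b)


def dtw_distance(seq_a: Sequence[Frame], seq_b: Sequence[Frame]) -> float:
    """Minimum cumulative frame distance over all monotonic alignments."""
    n, m = len(seq_a), len(seq_b)
    if n == 0 or m == 0:
        raise ValueError("both sequences must contain at least one frame")

    # cost[i][j] = best alignment cost of the first i frames of seq_a
    # with the first j frames of seq_b.
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = frame_distance(seq_a[i - 1], seq_b[j - 1])
            cost[i][j] = d + min(
                cost[i - 1][j],      # seq_a advances, seq_b frame is reused
                cost[i][j - 1],      # seq_b advances, seq_a frame is reused
                cost[i - 1][j - 1],  # both sequences advance
            )
    return cost[n][m]


if __name__ == "__main__":
    slow = [(0.0, 0.0), (0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
    fast = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
    print(dtw_distance(slow, fast))
```

Because frames may be reused during alignment, the same gesture performed at different speeds can still receive a small distance, which is why this family of algorithms is commonly applied to gesture sequences.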

Human–Computer Interaction-Oriented African Literature and African Philosophy Appreciation

African literature has played a major role in changing and shaping perceptions about African people and their way of life for the longest time. Unlike western cultures that are associated with advanced forms of writing, African literature is oral in nature, meaning it has to be recited and even performed. Although Africa has an old tribal culture, African philosophy is a new and strange idea among us. Although the problem of “universality” of African philosophy actually refers to the question of whether Africa has heckling of philosophy in the Western sense, obviously, the philosophy bred by Africa’s native culture must be acknowledged. Therefore, the human–computer interaction-oriented (HCI-oriented) method is proposed to appreciate African literature and African philosophy. To begin with, a physical object of tablet-aid is designed, and a depth camera is used to track the user’s hand and tablet-aid and then map them to the virtual scene, respectively. Then, a tactile redirection method is proposed to meet the user’s requirement of tactile consistency in head-mounted display virtual reality environment. Finally, electroencephalogram (EEG) emotion recognition, based on multiscale convolution kernel convolutional neural networks, is proposed to appreciate the reflection of African philosophy in African literature. The experimental results show that the proposed method has a strong immersion and a good interactive experience in navigation, selection, and manipulation. The proposed HCI method is not only easy to use, but also improves the interaction efficiency and accuracy during appreciation. In addition, the simulation of EEG emotion recognition reveals that the accuracy of emotion classification in 33-channel is 90.63%, almost close to the accuracy of the whole channel, and the proposed algorithm outperforms three baselines with respect to classification accuracy.

Wearable devices in diving: A systematic review (Preprint)

BACKGROUND Wearable devices have grown enormously in importance in recent years. While wearables have generally been well studied, they have not yet been discussed in the underwater environment. OBJECTIVE This systematic review aimed to search the scientific literature for wearables used in underwater operation, to make a comprehensive map of their capabilities and features, and to discuss the general direction of development. METHODS In September 2021, we conducted an extensive keyword search of the existing literature in the largest databases. Only articles that described a wearable or device that can be used in diving were included. Only peer-reviewed articles in English were considered. RESULTS In the 36 relevant studies that were found, four device categories could be identified: safety devices, underwater communication devices, head-up displays and underwater human-computer interaction devices. CONCLUSIONS The possibilities and challenges of the respective technologies were considered and evaluated separately. Underwater communication has the most significant influence on future developments. Another topic that has not received enough attention is human-computer interaction.

Analyzing the mental states of the sports student based on augmentative communication with human–computer interaction

Recognition of facial expressions and its application to human computer interaction

Physical education system and training framework based on human–computer interaction for augmentative and alternative communication

Enhancing the human-computer interaction through the application of artificial intelligence, machine learning, and data mining

Applications of human-computer interaction for improving ERP usability in education systems

Emerging human-computer interaction interfaces : a categorizing framework for general computing

Vision based hand gesture recognition for human computer interaction: a survey

- Published: 06 November 2012

- Volume 43, pages 1–54 (2015)

- Siddharth S. Rautaray &

- Anupam Agrawal

As computers become more pervasive in society, facilitating natural human–computer interaction (HCI) will have a positive impact on their use. Hence, there has been growing interest in the development of new approaches and technologies for bridging the human–computer barrier. The ultimate aim is to bring HCI to a regime where interactions with computers are as natural as interactions between humans, and to this end, incorporating gestures in HCI is an important research area. Gestures have long been considered an interaction technique that can potentially deliver more natural, creative and intuitive methods for communicating with our computers. This paper provides an analysis of comparative surveys done in this area. The use of hand gestures as a natural interface serves as a motivating force for research in gesture taxonomies, their representations and recognition techniques, and software platforms and frameworks, which are discussed briefly in this paper. The paper focuses on the three main phases of hand gesture recognition, i.e. detection, tracking and recognition. Different applications that employ hand gestures for efficient interaction are discussed under core and advanced application domains. This paper also provides an analysis of the existing literature on gesture recognition systems for human–computer interaction by categorizing it under different key parameters. It further discusses the advances needed to improve present hand gesture recognition systems so that they can be widely used for efficient human–computer interaction in the future. The main goal of this survey is to provide researchers in the field of gesture-based HCI with a summary of progress achieved to date and to help identify areas where further research is needed.
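The three phases named in the abstract (detection, tracking, recognition) can be pictured with a minimal sketch. This is only an illustration under strong assumptions, not the method of the survey or of any system it reviews: frames are assumed to be already thresholded into binary masks, and the function names `detect_hand`, `track_hand` and `recognize_gesture`, the centroid-based detector, and the swipe rule are all hypothetical.

```python
from typing import List, Optional, Tuple

# Assumption: each frame is a binary mask, 1 = pixel classified as hand.
Frame = List[List[int]]
Point = Tuple[float, float]


def detect_hand(frame: Frame) -> Optional[Point]:
    """Detection: return the (x, y) centroid of hand pixels, or None if absent."""
    xs, ys = [], []
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            if value:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return sum(xs) / len(xs), sum(ys) / len(ys)


def track_hand(frames: List[Frame]) -> List[Point]:
    """Tracking: link per-frame detections into a trajectory, skipping misses."""
    detections = (detect_hand(frame) for frame in frames)
    return [point for point in detections if point is not None]


def recognize_gesture(trajectory: List[Point], min_shift: float = 1.0) -> str:
    """Recognition: label the trajectory by its net horizontal displacement."""
    if len(trajectory) < 2:
        return "none"
    dx = trajectory[-1][0] - trajectory[0][0]
    if dx >= min_shift:
        return "swipe_right"
    if dx <= -min_shift:
        return "swipe_left"
    return "static"


if __name__ == "__main__":
    # A one-row "video" in which the hand pixel moves from left to right.
    frames = [[[1, 0, 0, 0]], [[0, 1, 0, 0]], [[0, 0, 0, 1]]]
    print(recognize_gesture(track_hand(frames)))
```

Real systems replace each stage with something far more robust (for example colour or depth segmentation for detection, filtering for tracking, and a trained classifier for recognition); the sketch only shows how the output of one phase feeds the next.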

A Forge.NET (2012) http://www.aforgenet.com/framework/

Alijanpour N, Ebrahimnezhad H, Ebrahimi A (2008) Inner distance based hand gesture recognition for devices control. In: International conference on innovations in information technology, pp 742–746

Alon J, Athitsos V, Yuan Q, Sclaroff S (2005) Simultaneous localization and recognition of dynamic hand gestures. In: IEEE workshop on motion and video computing (WACV/MOTION’05), pp 254–260

Alon J, Athitsos V, Yuan Q, Sclaroff S (2009) A unified framework for gesture recognition and spatiotemporal gesture segmentation. IEEE Trans Pattern Analy Mach Intell 31(9): 1685–1699

Article Google Scholar

Alpern M, Minardo K (2003) Developing a car gesture interface for use as a secondary task. In: CHI ’03 extended abstracts on human factors in computing systems. ACM Press, pp 932–933

Andrea C (2001) Dynamic time warping for offline recognition of a small gesture vocabulary. In: Proceedings of the IEEE ICCV workshop on recognition, analysis, and tracking of faces and gestures in real-time systems, July–August, p 83

Appenrodt J, Handrich S, Al-Hamadi A, Michaelis B (2010) Multi stereo camera data fusion for fingertip detection in gesture recognition systems. In: International conference of soft computing and pattern recognition (SoCPaR), 2010, pp 35–40

Argyros A, Lourakis MIA (2004a) Real-time tracking of multiple skin-colored objects with a possibly moving camera. In: Proceedings of the European conference on computer vision, Prague, pp 368–379

Argyros A, Lourakis MIA (2004b) 3D tracking of skin-colored regions by a moving stereoscopic observer. Appl Opt 43(2): 366–378

Argyros A, Lourakis MIA (2006) Binocular hand tracking and reconstruction based on 2D shape matching. In: Proceedings of the international conference on pattern recognition (ICPR), Hong-Kong

Bandera JP, Marfil R, Bandera A, Rodríguez JA, Molina-Tanco L, Sandoval F (2009) Fast gesture recognition based on a two-level representation. Pattern Recogn Lett 30: 1181–1189

Bao J, Song A, Guo Y, Tang H (2011) Dynamic hand gesture recognition based on SURF tracking. In: International conference on electric information and control engineering (ICEICE), pp 338–341

Baxter J (2000) A model of inductive bias learning. J Artif Intell Res 12: 149–198

MathSciNet MATH Google Scholar

Bellarbi A, Benbelkacem S, Zenati-Henda N, Belhocine M (2011) Hand gesture interaction using color-based method for Tabletop interfaces. In: IEEE 7th international symposium on intelligent signal processing (WISP), pp 1–6

Belongie S, Malik J, Puzicha J (2002) Shape matching and object recognition using shape contexts. IEEE Trans Pattern Anal Mach Intell 24(4): 509–522

Berci N, Szolgay P (2007) Vision based human–machine interface via hand gestures. In: 18th European conference on circuit theory and design (ECCTD 2007), pp 496–499

Bergh M, Gool L (2011) Combining RGB and ToF cameras for real-time 3D hand gesture interaction. In: Workshop on applications of computer vision (WACV), IEEE, pp 66–72

Bergh MV, Meier EK, Bosch’e F, Gool LV (2009) Haarlet-based hand gesture recognition for 3D interaction, workshop on applications of computer vision (WACV), pp 1–8

Bernardes J, Nakamura R, Tori R (2009) Design and implementation of a flexible hand gesture command interface for games based on computer vision. In: 8th Brazilian symposium on digital games and entertainment, pp 64–73

Berry G (1998) Small-wall, a multimodal human computer intelligent interaction test bed with applications, Dept. of ECE, University of Illinois at Urbana-Champaign, MS thesis

Bhuyan MK, Ghoah D, Bora PK (2006) A framework for hand gesture recognition with applications to sign language. In: Annual IEEE India conference, pp 1–6

Bimbo AD, Landucci L, Valli A (2006) Multi-user natural interaction system based on real-time hand tracking and gesture recognition. In: 18th International conference on pattern recognition (ICPR’06), pp 55–58

Binh ND, Ejima T (2006) A new approach dedicated to hand gesture recognition. In: 5th IEEE international conference on cognitive informatics (ICCI’06), pp 62–67

Birdal A, Hassanpour R (2008) Region based hand gesture recognition. In: 16th International conference in central Europe on computer graphics, visualization and computer vision, pp 1–7

Birk H, Moeslund TB, Madsen CB (1997) Real-time recognition of hand alphabet gestures using principal component analysis. In: Proceedings of the Scandinavian conference on image analysis, Lappeenranta

Blake A, North B, Isard M (1999) Learning multi-class dynamics. In: Proceedings advances in neural information processing systems (NIPS), vol 11, pp 389–395

Bolt RA, Herranz E (1992) Two-handed gesture in multi-modal natural dialog. In: Proceedings of the 5th annual ACM symposium on user interface software and technology, ACM Press, pp 7–14

Boulay B (2007) Human posture recognition for behavior understanding. PhD thesis, Universit’e de Nice-Sophia Antipolis

Bourke A, O’Brien J, Lyons G (2007) Evaluation of a threshold-based tri-axial accelerometer fall detection algorithm. Gait & Posture 26(2):194–199. http://www.sciencedirect.com/science/article/B6T6Y-4MBCJHV-1/2/f87e4f1c82f3f93a3a5692357e3fe00c

Bowden R, Zisserman A, Kadir T, Brady M (2003) Vision based interpretation of natural sign languages. In: Exhibition at ICVS03: the 3rd international conference on computer vision systems. ACM Press, pp 1–2

Bradski G (1998) Real time face and object tracking as a component of a perceptual user interface. In: IEEE workshop on applications of computer vision. Los Alamitos, California, pp 214–219

Bradski G, Kaehler A (2008) Learning OpenCV, O‘Reilly, pp 337–341

Bretzner L, Laptev I, Lindeberg T (2002) Hand gesture recognition using multi-scale colour features, hierarchical models and particle filtering. In: Fifth IEEE international conference on automatic face and gesture recognition, pp 405–410. doi: 10.1109/AFGR.2002.1004190

Buchmann V, Violich S, Billinghurst M, Cockburn A (2004) Fingartips: gesture based direct manipulation in augmented reality. In: 2nd international conference on computer graphics and interactive techniques, ACM Press, pp 212–221

Burges CJC (1998) A tutorial on support vector machines for pattern recognition. Kluwer, Boston, pp 1–43

Google Scholar

Cao X, Balakrishnan R (2003) Visionwand: interaction techniques for large displays using a passive wand tracked in 3d. In: ‘UIST ’03: proceedings of the 16th annual ACM symposium on User Interface software and technology. ACM Press, New York, pp 173–182

Chai D, Ngan K (1998) Locating the facial region of a head and-shoulders color image. In: IEEE international conference on automatic face and gesture recognition, pp 124–129, Piscataway

Chalechale A, Naghdy G (2007) Visual-based human–machine interface using hand gestures. In: 9th International symposium on signal processing and its applications (ISSPA 2007), pp 1–4

Chalechale A, Safaei F, Naghdy G, Premaratn P (2005) Hand gesture selection and recognition for visual-based human–machine interface. In: IEEE international conference on electro information technology, pp 1–6

Chang CC (2006) Adaptive multiple sets of CSS features for hand posture recognition. Neuro Comput 69: 2017–2025

Charniak E (1993) Statistical language learning. MIT Press, Cambridge

Chatty S, Lecoanet P (1996) Pen computing for air traffic control. In: Proceedings of the SIGCHI conference on Human factors in computing systems, ACM Press, pp 87–94

Chaudhary A, Raheja JL, Das K, Raheja S (2011) Intelligent approaches to interact with machines using hand gesture recognition in natural way: a survey. Int J Comput Sci Eng Survey (IJCSES) 2(1): 122–133

Chen YT, Tseng KT (2007) Developing a multiple-angle hand gesture recognition system for human machine interactions. In: 33rd annual conference of the IEEE industrial electronics society (IECON), pp 489–492

Chen Q, Georganas ND, Petriu EM (2007) Real-time vision-based hand gesture recognition using Haar-like features. In: Conference on instrumentation and measurement technology (IMTC 2007), pp 1–6

Chen Q, Georganas ND, Petriu M (2008) Hand gesture recognition using Haar-like features and a stochastic context-free grammar. IEEE Trans Instrum Meas 57(8): 1562–1571

Cheng J, Xie X, Bian W, Tao D (2012) Feature fusion for 3D hand gesture recognition by learning a shared hidden space. Pattern Recogn Lett 33: 476–484

Choras RS (2009) Hand shape and hand gesture recognition. In: IEEE symposium on industrial electronics and applications (ISIEA 2009), pp 145–149

Chung WK, Wu X, Xu Y (2009) A real time hand gesture recognition based on Haar wavelet representation. In: International conference on robotics and biomimetics, Bangkok, pp 336–341

Cohen PR, Johnston M, McGee D, Oviatt S, Pittman J, Smith I, Chen L, Clow J (1997) Quickset: multimodal interaction for distributed applications. In: Proceedings of the fifth ACM international conference on Multimedia, ACM Press, pp 31–40

Conci N, Ceresato P, De Natale FGB (2007) Natural human–machine interface using an interactive virtual blackboard. In: IEEE international conference on image processing, pp 181–184

Cootes TF, Taylor CJ (1992) Active shape models smart snakes. In: British machine vision conference, pp 266–275

Cootes TF, Taylor CJ, Cooper DH, Graham J (1995) Active shape models—their training and applications. Comput Vis Image Underst 61(1): 38–59

Corera S, Krishnarajah N (2011) Capturing hand gesture movement: a survey on tools techniques and logical considerations. In: Proceedings of Chi Sparks 2011 HCI research, innovation and implementation, Arnhem, Netherlands. http://proceedings.chi-sparks.nl/documents/Education-Gestures/FP-35-AC-EG.pdf

Cote M, Payeur P, Comeau G (2006) Comparative study of adaptive segmentation techniques for gesture analysis in unconstrained environments. In: IEEE international workshop on imagining systems and techniques, pp 28–33

Crowley JL, Jolle Coutaz FB (2000) Perceptual user interfaces: things that see. Commun ACM 43(3): 54–64

Crowley J, Berard F, Coutaz J (1995) Finger tracking as an input device for augmented reality. In: International workshop on gesture and face recognition, Zurich

Cui Y, Weng J (1996) Hand sign recognition from intensity image sequences with complex background. In: Proceedings of the IEEE computer vision and pattern recognition (CVPR), pp 88–93

Cui Y, Swets D, Weng J (1995) Learning-based hand sign recognition using shoslf-m. In: International workshop on automatic face and gesture recognition, Zurich, pp 201–206

Cutler R, Turk M (1998) View-based interpretation of real-time optical flow for gesture recognition. In: Proceedings of the international conference on face and gesture recognition. IEEE Computer Society, Washington, pp 416–421

Darrell T, Essa I, Pentland A (1996) Task-specific gesture analysis in real-time using interpolated views. IEEE Trans Pattern Anal Mach Intell 18(12): 1236–1242

Davis JW, Vaks S (2001) A perceptual user interface for recognizing head gesture acknowledgements. In: Proceedings of the 2001 workshop on perceptive user interfaces. ACM Press, pp 1–7

De Tan T, Geo ZM (2011) Research of hand positioning and gesture recognition based on binocular vision. In: EEE international symposium on virtual reality innovation 2011, pp 311–315

Deng LY, Lee DL, Keh HC, Liu YJ (2010) Shape context based matching for hand gesture recognition. In: IET international conference on frontier computing. Theory, technologies and applications, pp 436–444

Derpanis KG (2004) A review of vision-based hand gestures. http://cvr.yorku.ca/members/gradstudents/kosta/publications/file_Gesture_review.pdf

Derpanis KG (2005) Mean shift clustering, Lecture Notes. http://www.cse.yorku.ca/~kosta/CompVis_Notes/mean_shift.pdf

Du H, Xiong W, Wang Z (2011) Modeling and interaction of virtual hand based on virtools. In: International conference on multimedia technology (ICMT), pp 416–419

Eamonn K, Pazzani MJ (2001) Derivative dynamic time warping. In: First international SIAM international conference on data mining, Chicago

Elmezain M, Al-Hamadi A, Michaelis B (2009) Hand trajectory-based gesture spotting and recognition using HMM. In: 16th IEEE international conference on image processing (ICIP 2009), pp 3577–3580

Elmezain M, Al-Hamadi A, Sadek S, Michaelis M (2010) Robust methods for hand gesture spotting and recognition using hidden Markov models and conditional random fields. In: IEEE international symposium on signal processing and information technology (ISSPIT), pp 133–136

EyeSight’s (2012) http://www.eyesight-tech.com/

Eyetoy (2003) http://asia.gamespot.com/eyetoy-play/

Fang G, Gao W, Zhao D (2003) Large vocabulary sign language recognition based on hierarchical decision trees. In: Proceedings of the 5th international conference on multimodal interfaces. ACM Press, pp 125–131

Fang Y, Wang K, Cheng J, Lu H (2007) A real-time hand gesture recognition method. In: IEEE international conference on multimedia and expo, pp 995–998

Ferscha A, Resmerita S, Holzmann C, Reichor M (2005) Orientation sensing for gesture-based interaction with smart artifacts. Comput Commun 28: 1552–1563

Forsberg A, Dieterich M, Zeleznik R (1998) The music notepad. In: Proceedings of the 11th annual ACM symposium on user interface software and technology, ACM Press, pp 203–210

Francois R, Medioni G (1999) Adaptive color background modeling for real-time segmentation of video streams. In: International conference on imaging science, systems, and technology, Las Vegas, pp 227–232

Freeman W, Weissman C (1995) Television control by hand gestures. In: International workshop on automatic face and gesture recognition, Zurich, pp 179–183

Freeman W, Tanaka K, Ohta J, Kyuma K (1996) Computer vision for computer games. In: Proceedings of the second international conference on automatic face and gesture recognition, pp 100–105

Freund Y, Schapire R (1997) A decision-theoretic generalization of on-line learning and an application to boosting. J Comput Syst Sci 55(1): 119–139

Article MathSciNet MATH Google Scholar

Friedman J, Hastie T, Tibshiranim R (2000) Additive logistic regression: a statistical view of boosting. Ann Stat 28(2): 337–374

Article MATH Google Scholar

Gandy M, Starner T, Auxier J, Ashbrook D (2000) The gesture pendant: a self illuminating, wearable, infrared computer vision system for home automation control and medical monitoring. In: ‘4th IEEE international symposium on wearable computers, IEEE Computer Society, pp 87–94

Gastaldi G, Pareschi A, Sabatini SP, Solari F, Bisio GM (2005) A man-machine communication system based on the visual analysis of dynamic gestures. In: IEEE international conference on image processing (ICIP 2005), pp 397–400

Gavrila DM, Davis LS (1995) Towards 3-d model-based tracking and recognition of human movement: multi-view approach. In: IEEE international workshop on automatic face- and gesture recognition. IEEE Computer Society, Zurich, pp 272–277

Ge SS, Yang Y, Lee TH (2006) Hand gesture recognition and tracking based on distributed locally linear embedding. In: IEEE conference on robotics, automation and mechatronics, pp 1–6

Ge SS, Yang Y, Lee TH (2008) Hand gesture recognition and tracking based on distributed locally linear embedding. Image Vis Comput 26:1607–1620

GestureTek (2008) http://www.gesturetek.com/

Gorce MDL, Fleet DJ, Paragios N (2011) Model-based 3D hand pose estimation from monocular video. IEEE Trans Pattern Anal Mach Intell 33(9): 1793–1805

Goza SM, Ambrose RO, Diftler MA, Spain IM (2004) Telepresence control of the nasa/darpa robonaut on a mobility platform. In: Conference on human factors in computing systems. ACM Press, pp 623–629

Graetzel C, Fong TW, Grange S, Baur C (2004) A non-contact mouse for surgeon-computer interaction. Technol Health Care 12(3): 245–257

Habib HA, Mufti M (2006) Real time mono vision gesture based virtual keyboard system. IEEE Trans Consumer Electron 52(4):1261–1266

Hackenberg G, McCall R, Broll W (2011) Lightweight palm and finger tracking for real-time 3D gesture control. In: IEEE virtual reality conference (VR), pp 19–26

Hall ET (1973) The silent language. Anchor Books. ISBN-13: 978-0385055499

HandGKET (2011) https://sites.google.com/site/kinectapps/kinect

HandVu (2003) http://www.movesinstitute.org/~kolsch/HandVu/HandVu.html

Hardenberg CV, Berard F (2001) Bare-hand human–computer interaction. Proceedings of the ACM workshop on perceptive user interfaces. ACM Press, pp 113–120

He GF, Kang SK, Song WC, Jung ST (2011) Real-time gesture recognition using 3D depth camera. In: 2nd International conference on software engineering and service science (ICSESS), pp 187–190

Heap T, Hogg D (1996) Towards 3D hand tracking using a deformable model. In: IEEE international conference automatic face and gesture recognition, Killington, pp 140–145

Henia OB, Bouakaz S (2011) 3D Hand model animation with a new data-driven method. In: Workshop on digital media and digital content management, IEEE, pp 72–76

Ho MF, Tseng CY, Lien CC, Huang CL (2011) A multi-view vision- based hand motion capturing system. Pattern Recogn 44: 443–453

Holzmann GJ (1925) Finite state machine: Ebook. http://www.spinroot.com/spin/Doc/Book91_PDF/F1.pdf

Hossain M, Jenkin M (2005) Recognizing hand-raising gestures using HMM. In: 2nd Canadian conference on computer and robot vision (CRV’05), pp 405–412

Howe LW, Wong F, Chekima A (2008) Comparison of hand segmentation methodologies for hand gesture recognition. In: International symposium on information technology (ITSim 2008), pp 1–7

Hsieh CC, Liou DH, Lee D (2010) A real time hand gesture recognition system using motion history image. In: 2nd International conference on signal processing systems (ICSPS), pp 394–398

Hu K, Canavan S, Yin L (2010) Hand pointing estimation for human computer interaction based on two orthogonal-views. In: International conference on pattern recognition 2010, pp 3760–3763

Huang S, Hong J (2011) Moving object tracking system based on camshift and Kalman filter. In: International conference on consumer electronics, communications and networks (CECNet), pp 1423–1426

Huang D, Tang W, Ding Y, Wan T, Wu X, Chen Y (2011a) Motion capture of hand movements using stereo vision for minimally invasive vascular interventions. In: Sixth international conference on image and graphics, pp 737–742

Huang DY, Hu WC, Chang SH (2011b) Gabor filter-based hand-pose angle estimation for hand gesture recognition under varying illumination. Expert Syst Appl 38(5):6031–6042

Iannizzotto G, Villari M, Vita L (2001) Hand tracking for human-computer interaction with gray level visual glove: turning back to the simple way. In: Workshop on perceptive user interfaces, ACM digital library, ISBN 1-58113-448-7

Ibarguren A, Maurtua I, Sierra B (2010) Layered architecture for real time sign recognition: hand gesture and movement. Eng Appl Artif Intell 23: 1216–1228

iGesture (2012) http://www.igesture.org/

Ionescu D, Ionescu B, Gadea C, Islam S (2011a) A multimodal interaction method that combines gestures and physical game controllers. In: Proceedings of 20th international conference on computer communications and networks (ICCCN), IEEE, pp 1–6

Ionescu D, Ionescu B, Gadea C, Islam S (2011b) An intelligent gesture interface for controlling TV sets and set-top boxes. In: 6th IEEE international symposium on applied computational intelligence and informatics, pp 159–164

Isard M, Blake A (1998) Condensation—conditional density propagation for visual tracking. Int J Comput Vis 29(1): 5–28

Joslin C, Sawah AE, Chen Q, Georganas N (2005) Dynamic gesture recognition. In: Conference on instrumentation and measurement technology, pp 1706–1711

Ju SX, Black MJ, Minneman S, Kimber D (1997) Analysis of gesture and action in technical talks for video indexing, Technical report, American Association for Artificial Intelligence. AAAI Technical Report SS-97-03

Juang CF, Ku KC (2005) A recurrent fuzzy network for fuzzy temporal sequence processing and gesture recognition. IEEE Trans Syst Man Cybern Part B Cybern 35(4): 646–658

Juang CF, Ku KC, Chen SK (2005) Temporal hand gesture recognition by fuzzified TSK-type recurrent fuzzy network. In: International joint conference on neural networks, pp 1848–1853

Just A, Marcel S (2009) A comparative study of two state-of-the-art sequence processing techniques for hand gesture recognition. Comput Vis Image Underst 113: 532–543

Kalman RE (1960) A new approach to linear filtering and prediction problems. Trans ASME J Basic Eng 82: 35–42

Kampmann M (1998) Segmentation of a head into face, ears, neck and hair for knowledge-based analysis-synthesis coding of video-phone sequences. In: Proceedings of the international conference on image processing (ICIP), vol 2, Chicago, pp 876–880

Kanniche MB (2009) Gesture recognition from video sequences. PhD Thesis, University of Nice

Kanungo T, Mount DM, Netanyahu NS, Piatko CD, Silverman R, Wu AY (2002) An efficient k-means clustering algorithm: analysis and implementation. IEEE Trans Pattern Anal Mach Intell 24(7): 881–892

Kapralos B, Hogue A, Sabri H (2007) Recognition of hand raising gestures for a remote learning application. In: Eight international workshop on image analysis for multimedia interactive services (WIAMIS’07), pp 1–4

Karam M (2006) A framework for research and design of gesture-based human computer interactions. PhD Thesis, University of Southampton

Keogh E, Ratanamahatana CA (2005) Exact indexing of dynamic time warping. Knowl Inf Syst 7(3): 358–386

Kevin NYY, Ranganath S, Ghosh D (2004) Trajectory modeling in gesture recognition using cybergloves and magnetic trackers. In: TENCON 2004. IEEE region 10 conference, pp 571–574

Konrad T, Demirdjian D, Darrell T (2003) Gesture + play: full-body interaction for virtual environments. In: ‘CHI ’03 extended abstracts on human factors in computing systems. ACM Press, pp 620–621

Kurata T, Okuma T, Kourogi M, Sakaue K (2001) The hand mouse: GMM hand-color classification and mean shift track-ing. In: International workshop on recognition, analysis and tracking of faces and gestures in real-time systems, Vancouver, pp 119–124

Kuzmanić A, Zanchi V (2007) Hand shape classification using DTW and LCSS as similarity measures for vision-based gesture recognition system. In: International conference on “Computer as a Tool (EUROCON 2007)”, pp 264–269

Laptev I, Lindeberg T (2001) Tracking of multi-state hand models using particle filtering and a hierarchy of multi-scale image features. In: Proceedings of the sScale-space’01, volume 2106 of Lecture Notes in Computer Science, p 63

Lee DH, Hong KS (2010) Game interface using hand gesture recognition. In: 5th international conference on computer sciences and convergence information technology (ICCIT), pp 1092–1097

Lee H-K, Kim JH (1999) An hmm-based threshold model approach for gesture recognition. IEEE Trans Pattern Anal Mach Intell 21(10): 961–973

Lee J, Kunii TL (1995) Model-based analysis of hand posture. IEEE Comput Graphics Appl 15(5): 77–86

Lee D, Park Y (2009) Vision-based remote control system by motion detection and open finger counting. IEEE Trans Consumer Electron 55(4): 2308–2313

Lenman S, Bretzner L, Thuresson B (2002) Using marking menus to develop command sets for computer vision based hand gesture interfaces. In: Proceedings of the second Nordic conference on human–computer interaction, ACM Press, pp 239–242

Li F, Wechsler H (2005) Open set face recognition using transduction. IEEE Trans Pattern Anal Mach Intell 27(11): 1686–1697

Li S, Zhang H (2004) Multi-view face detection with ^oat-boost. IEEE Trans Pattern Anal Mach Intell 26(9): 1112–1123

Liang R-H, Ouhyoung M (1996) A sign language recognition system using hidden Markov model and context sensitive search. In: Proceedings of the ACM symposium on virtual reality software and technology’96, ACM Press, pp 59–66

Licsar A, Sziranyi T (2005) User-adaptive hand gesture recognition system with interactive training. Image Vis Comput 23: 1102–1114

Lin SY, Lai YC, Chan LW, Hung YP (2010) Real-time 3D model-based gesture tracking for multimedia control. In: International conference on pattern recognition, pp 3822–3825

Liu N, Lovell BC (2005) Hand gesture extraction by active shape models. In: Proceedings of the digital imaging computing: techniques and applications (DICTA 2005), pp 1–6

Liu Y, Zhang P (2009) Vision-based human–computer system using hand gestures. In: International conference on computational intelligence and security, pp 529–532

Liu Y, Gan Z, Sun Y (2008) Static hand gesture recognition and its application based on support vector machines. In: Ninth ACIS international conference on software engineering, artificial intelligence, networking, and parallel/distributed computing, pp 517–521

Lloyd S (1982) Least squares quantization in PCM. IEEE Trans Inf Theory 28(2): 129–137

Lu W-L, Little JJ (2006) Simultaneous tracking and action recognition using the pca-hog descriptor. In: The 3rd Canadian conference on computer and robot vision, 2006. Quebec, pp 6–13

Lumsden J, Brewster S (2003) A paradigm shift: alternative interaction techniques for use with mobile & wearable devices. In: Proceedings of the 2003 conference of the centre for advanced studies conference on collaborative research. IBM Press, pp 197–210

Luo Q, Kong X, Zeng G, Fan J (2008) Human action detection via boosted local motion histograms. Mach Vis Appl. doi: 10.1007/s00138-008-0168-5

MacQueen J (1967) Some methods for classification and analysis of multivariate observations. In: The proceedings of the fifth Berkeley symposium on mathematical statistics and probability, vol 1, pp 281–297

Malassiotis S, Strintzis MG (2008) Real-time hand posture recognition using range data. Image Vis Comput 26: 1027–1037

Mammen JP, Chaudhuri S, Agrawal T (2001) Simultaneous tracking of both hands by estimation of erroneous observations. In: Proceedings of the British machine vision conference (BMVC), Manchester

Martin J, Devin V, Crowley J (1998) Active hand tracking. In: IEEE conference on automatic face and gesture recognition, Nara, Japan, pp 573–578

MATLAB (2012) http://www.mathworks.in/products/matlab/

McNeill D (1992) Hand and mind: what gestures reveal about thought. University Of Chicago Press. ISBN: 9780226561325

Mgestyk (2009) http://www.mgestyk.com/

Microsoft Kinect (2012) http://www.microsoft.com/en-us/kinectforwindows/

Mitra S, Acharya T (2007) Gesture recognition: a survey. IEEE Trans Syst Man Cybern (SMC)Part C Appl Rev 37(3): 311–324

Modler P, Myatt T (2008) Recognition of separate hand gestures by time delay neural networks based on multi-state spectral image patterns from cyclic hand movements. In: IEEE international conference on systems, man and cybernetics (SMC 2008), pp 1539–1544

Moeslund T, Granum E (2001) A survey of computer vision based human motion capture. Comput Vis Image Underst 81: 231–268

Moyle M, Cockburn A (2002) Gesture navigation: an alternative ‘back’ for the future. In: Human factors in computing systems, ACM Press, New York, pp 822–823

Murthy GRS, Jadon RS (2010) Hand gesture recognition using neural networks. In: 2nd International advance computing conference (IACC), IEEE, pp 134–138

Nickel K, Stiefelhagen R (2003) Pointing gesture recognition based on 3d-tracking of face, hands and head orientation. In: ICMI ’03: proceedings of the 5th international conference on multimodal interfaces. ACM Press, New York, pp 140–146

Nishikawa A, Hosoi T, Koara K, Negoro D, Hikita A, Asano S, Kakutani H, Miyazaki F, Sekimoto M, Yasui M, Miyake Y, Takiguchi S, Monden M (2003) FAce MOUSe: a novel human-machine interface for controlling the position of a laparoscope. IEEE Trans Robotics Autom 19(5): 825–841

Noury N, Barralon P, Virone G, Boissy P, Hamel M, Rumeau P (2003) A smart sensor based on rules and its evaluation in daily routines. In: Engineering in medicine and biology society, 2003. Proceedings of the 25th annual international conference of the IEEE, vol 4, pp 3286–3289

OMRON (2012) http://www.omron.com/

Ong SCW, Ranganath S, Venkatesh YV (2006) Understanding gestures with systematic variations in movement dynamics. Pattern Recogn 39: 1633–1648

Ongkittikul S, Worrall S, Kondoz A (2008) Two hand tracking using colour statistical model with the K-means embedded particle filter for hand gesture recognition. In: 7th Computer information systems and industrial management applications, pp 201–206

Osawa N, Asai K, Sugimoto YY (2000) Immersive graph navigation using direct manipulation and gestures. In: ACM symposium on virtual reality software and technology. ACM Press, pp 147–152

Ottenheimer HJ (2005) The anthropology of language: an introduction to linguistic anthropology. Wadsworth Publishing. ISBN-13: 978-0534594367

Ou J, Fussell SR, Chen X, Setlock LD, Yang J (2003) Gestural communication over video stream: supporting multimodal interaction for remote collaborative physical tasks. In: Proceedings of the 5th international conference on Multimodal interfaces. ACM Press, pp 242–249

Paiva A, Andersson G, Höök K, Mourao D, Costa M, Martinho C (2002) SenToy in FantasyA: designing an affective sympathetic interface to a computer game. Pers Ubiquitous Comput 6(5–6): 378–389

Pang YY, Ismail NA, Gilbert PLS (2010) A real time vision-based hand gesture interaction. In: Fourth Asia international conference on mathematical/analytical modeling and computer simulation, IEEE, pp 237–242

Pantic M, Nijholt A, Pentland A, Huang TS (2008) Human-centred intelligent human–computer interaction (HCI²): how far are we from attaining it? Int J Auton Adapt Commun Syst 1: 168–187

Patwardhan KS, Roy SD (2007) Hand gesture modelling and recognition involving changing shapes and trajectories, using a predictive EigenTracker. Pattern Recogn Lett 28: 329–334

Paulraj MP, Yaacob S, Desa H, Hema CR (2008) Extraction of head and hand gesture features for recognition of sign language. In: International conference on electronic design, pp 1–6

Pausch R, Williams RD (1990) Tailor: creating custom user interfaces based on gesture. In: Proceedings of the 3rd annual ACM SIGGRAPH symposium on user interface software and technology. ACM Press, pp 123–134

Pavlovic VI, Sharma R, Huang TS (1997) Visual interpretation of hand gestures for human–computer interaction: a review. IEEE Trans Pattern Anal Mach Intell 19(7): 677–695

Perez P, Hue C, Vermaak J, Gangnet M (2002) Color-based probabilistic tracking. In: Proceedings of the European conference on computer vision, Copenhagen, pp 661–675

Peterfreund N (1999) Robust tracking of position and velocity with Kalman snakes. IEEE Trans Pattern Anal Mach Intell 21(6): 564–569

Pickering CA (2005) Gesture recognition driver controls. IEE J Comput Control Eng 16(1): 27–40


PointGrab (2012) http://www.pointgrab.com/

Prieto A, Bellas F, Duro RJ, López-Peña F (2006) An adaptive visual gesture based interface for human machine interaction in intelligent workspaces. In: IEEE international conference on virtual environments, human–computer interfaces, and measurement systems, pp 43–48

Radkowski R, Stritzke C (2012) Interactive hand gesture-based assembly for augmented reality applications. In: ACHI 2012: the fifth international conference on advances in computer–human interactions, IARIA, pp 303–308

Ramage D (2007) Hidden Markov models fundamentals, Lecture Notes. http://cs229.stanford.edu/section/cs229-hmm.pdf

Rashid O, Al-Hamadi A, Michaelis B (2009) A framework for the integration of gesture and posture recognition using HMM and SVM. In: IEEE international conference on intelligent computing and intelligent systems (ICIS 2009), pp 572–577

Rautaray SS, Agrawal A (2011) A novel human computer interface based on hand gesture recognition using computer vision techniques. In: International conference on intelligent interactive technologies and multimedia (IITM-2011), pp 292–296

Rautaray SS, Agrawal A (2012) Real time hand gesture recognition system for dynamic applications. Int J UbiComp 3(1): 21–31

Reale MJ, Canavan S, Yin L, Hu K, Hung T (2011) A multi-gesture interaction system using a 3-D Iris disk model for Gaze estimation and an active appearance model for 3-D hand pointing. IEEE Trans Multimed 13(3): 474–486

Rehg J, Kanade T (1994) DigitEyes: vision-based hand tracking for human–computer interaction. In: Workshop on motion of non-rigid and articulated bodies, Austin, Texas, pp 16–24

Rehg J, Kanade T (1995) Model-based tracking of self-occluding articulated objects. In: Proceedings of the international conference on computer vision (ICCV), pp 612–617

Ren Y, Zhang F (2009a) Hand gesture recognition based on MEB-SVM. In: Second international conference on embedded software and systems, IEEE Computer Society, Los Alamitos, pp 344–349

Ren Y, Zhang F (2009b) Hand gesture recognition based on MEB-SVM. In: International conferences on embedded software and systems, pp 344–349

Rodriguez S, Picon A, Villodas A (2010) Robust vision-based hand tracking using single camera for ubiquitous 3D gesture interaction. In: IEEE symposium on 3D user interfaces (3DUI), pp 135–136

Sajjawiso T, Kanongchaiyos P (2011) 3D hand pose modeling from uncalibrate monocular images. In: Eighth international joint conference on computer science and software engineering (JCSSE), pp 177–181

Salinas RM, Carnicer RM, Cuevas FJ, Poyato AC (2008) Depth silhouettes for gesture recognition. Pattern Recogn Lett 29: 319–329

Sangineto E, Cupelli M (2012) Real-time viewpoint-invariant hand localization with cluttered backgrounds. Image Vis Comput 30:26–37

Sawah AE, Joslin C, Georganas ND, Petriu EM (2007) A framework for 3D hand tracking and gesture recognition using elements of genetic programming. In: Fourth Canadian conference on computer and robot vision (CRV’07), pp 495–502

Sawah AE, Georganas ND, Petriu EM (2008) A prototype for 3-D hand tracking and posture estimation. IEEE Trans Instrum Meas 57(8): 1627–1636

Saxe D, Foulds R (1996) Toward robust skin identification in video images. In: IEEE international conference on automatic face and gesture recognition, pp 379–384

Schapire R (2002) The boosting approach to machine learning: an overview. In: MSRI workshop on nonlinear estimation and classification

Schlomer T, Poppinga B, Henze N, Boll S (2008) Gesture recognition with a Wii controller. In: TEI ’08: proceedings of the 2nd international conference on tangible and embedded interaction. ACM, New York, pp 11–14

Schmandt C, Kim J, Lee K, Vallejo G, Ackerman M (2002) Mediated voice communication via mobile IP. In: Proceedings of the 15th annual ACM symposium on user interface software and technology. ACM Press, pp 141–150

Schultz M, Gill J, Zubairi S, Huber R, Gordin F (2003) Bacterial contamination of computer keyboards in a teaching hospital. Infect Control Hosp Epidemiol 24(4): 302–303

Sclaroff S, Betke M, Kollios G, Alon J, Athitsos V, Li R, Magee J, Tian TP (2005) Tracking, analysis, and recognition of human gestures in video. In: 8th International conference on document analysis and recognition, pp 806–810

Segen J, Kumar S (1998a) Gesture VR: vision-based 3D hand interface for spatial interaction. In: Proceedings of the sixth ACM international conference on multimedia. ACM Press, pp 455–464

Segen J, Kumar S (1998b) Video acquired gesture interfaces for the handicapped. In: Proceedings of the sixth ACM international conference on multimedia. ACM Press, pp 45–48

Segen J, Kumar SS (1999) Shadow gestures: 3D hand pose estimation using a single camera. In: Proceedings of the IEEE computer vision and pattern recognition (CVPR), pp 479–485

Senin P (2008) Dynamic time warping algorithm review, technical report. http://csdl.ics.hawaii.edu/techreports/08-04/08-04.pdf

Sharma R, Huang TS, Pavlovic VI, Zhao Y, Lo Z, Chu S, Schulten K, Dalke A, Phillips J, Zeller M, Humphrey W (1996) Speech/gesture interface to a visual computing environment for molecular biologists. In: International conference on pattern recognition (ICPR ’96) volume 7276. IEEE Computer Society, pp 964–968

Shimada N, Shirai Y, Kuno Y, Miura J (1998) Hand gesture estimation and model refinement using monocular camera ambiguity limitation by inequality constraints. In: IEEE international conference on face and gesture recognition, Nara, pp 268–273

Shimizu M, Yoshizuka T, Miyamoto H (2007) A gesture recognition system using stereo vision and arm model fitting. In: International congress series 1301, Elsevier, pp 89–92

Sigal L, Sclaroff S, Athitsos V (2004) Skin color-based video segmentation under time-varying illumination. IEEE Trans Pattern Anal Mach Intell 26(7): 862–877

Smith GM, Schraefel MC (2004) The radial scroll tool: scrolling support for stylus- or touch-based document navigation. In: Proceedings of the 17th annual ACM symposium on User interface software and technology, ACM Press, pp 53–56

SoftKinetic, IISU SDK (2012) http://www.softkinetic.com/Solutions/iisuSDK.aspx

Song L, Takatsuka M (2005) Real-time 3D finger pointing for an augmented desk. In: Australasian conference on user interface, vol 40. Newcastle, pp 99–108

Sriboonruang Y, Kumhom P, Chamnongthai K (2006) Visual hand gesture interface for computer board game control. In: IEEE tenth international symposium on consumer electronics, pp 1–5

Stan S, Philip C (2004) FastDTW: toward accurate dynamic time warping in linear time and space. In: KDD workshop on mining temporal and sequential data

Starner T, Pentland A (1995a) Visual recognition of American sign language using hidden Markov models. Technical Report TR-306, Media Lab, MIT

Starner T, Pentland A (1995b) Real time American sign language recognition from video using hidden Markov models, Technical Report 375, MIT Media Lab

Stotts D, Smith JM, Gyllstrom K (2004a) Facespace: endo- and exo-spatial hypermedia in the transparent video facetop. In: 15th ACM conference on hypertext & hypermedia. ACM Press, pp 48–57

Stotts D, Smith JM, Gyllstrom K (2004b) Facespace: endo- and exo-spatial hypermedia in the transparent video facetop. In: Proceedings of the fifteenth ACM conference on hypertext & hypermedia. ACM Press, pp 48–57

Suk H, Sin BK, Lee SW (2008) Robust modeling and recognition of hand gestures with dynamic Bayesian network. In: 19th international conference on pattern recognition, pp 1–4

Suk H, Sin B, Lee S (2010) Hand gesture recognition based on dynamic Bayesian network framework. Pattern Recogn 43: 3059–3072

Swindells C, Inkpen KM, Dill JC, Tory M (2002) That one there! Pointing to establish device identity. In: Proceedings of the 15th annual ACM symposium on user interface software and technology. ACM Press, pp 151–160

Teng X, Wu B, Yu W, Liu C (2005) A hand gesture recognition system based on local linear embedding. J Vis Lang Comput 16: 442–454

Terrillon J, Shirazi M, Fukamachi H, Akamatsu S (2000) Comparative performance of different skin chrominance models and chrominance spaces for the automatic detection of human faces in color images. In: Proceedings of the international conference on automatic face and gesture recognition (FG), pp 54–61

Terzopoulos D, Szeliski R (1992) Tracking with Kalman Snakes. MIT Press, Cambridge, pp 3–20

Thirumuruganathan S (2010) A detailed introduction to K-nearest neighbor (KNN) algorithm. http://saravananthirumuruganathan.wordpress.com/2010/05/17/a-detailed-introduction-to-k-nearest-neighbor-knn-algorithm/

Tran C, Trivedi MM (2012) 3-D posture and gesture recognition for interactivity in smart spaces. IEEE Trans Ind Inform 8(1): 178–187